Applying artificial intelligence (AI) technology to new retail scenarios has produced many innovative efforts. With the primary focus on digitalizing "Customers, Products, and Scenarios", many new applications have emerged. These include store customer-flow statistics based on pedestrian detection algorithms, and product identification and self-service sales counters based on image classification algorithms. This article explores how AI technology can be used to understand interactions between consumers and products in retail scenarios, to further tap the value of "Customers, Products, and Scenarios" digitalization. The goal is to find out when, and in which scenario, consumers become interested in what kinds of products.

To answer the question of "when and in which scenario consumers become interested in what kind of products", we need to precisely determine when an action is performed around a shelf in a store. That action must then be associated with customer information, including the customer's age, gender, and type (returning or new customer). We also need to accurately determine the Stock Keeping Unit (SKU) of the product that is picked up or taken away.

Unlike self-service sales counters and offline large screens, surveillance cameras are far away from the products, so their images are not clear. In shopping malls and supermarkets, products are also so densely arranged that camera images cannot capture them completely, as shown in Figure 1. In such scenarios, cameras can only determine whether a customer performs a pick-up action. They cannot directly provide the product information needed for "Customers, Products, and Scenarios".

Figure 1 Snapshots of product pick-ups

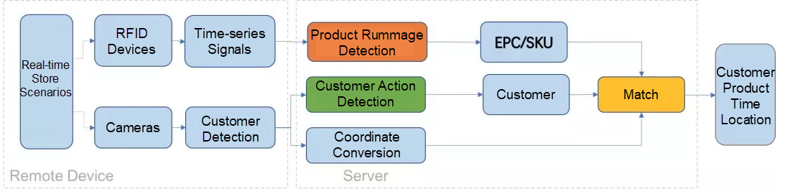

Because camera images alone cannot identify products in such scenarios, radio-frequency identification (RFID) tags are used to detect whether products are picked up by customers. RFID tags are widely used in new retail scenarios, and the low price per tag makes passive RFID systems economical for the retail industry. Appropriate algorithms are then needed to generate data on the time and scenario of interactions between a specific customer and a specific product. Figure 2 shows the overall implementation process.

Figure 2 Technical diagram of customer-product action detection

In the diagram above, cameras and RFID receivers are deployed in the offline store to record real-time video and receive time-series signals from RFID tags. A server deployed in the store runs the customer action detection algorithm, captures pedestrian images from the surveillance video, and transmits the images to the back end. The back-end server determines which RFID tags are likely to have been picked up based on the received time-series signals. A shop attendant associates the Electronic Product Code (EPC) of each RFID tag with the SKU number of the product and records this mapping in the database. The server can then obtain SKU information from the EPCs of the tags.

Meanwhile, the back-end server sends the received images to a MobileNet classifier to identify those with potential pick-up actions. It also converts the coordinates of customers in the images to their physical locations. Finally, the back-end server associates products with pick-up actions based on time and action potentiality (the likelihood that an action occurred) to obtain the optimal matches. These matches describe the time and scenario of interactions between a specific customer and product.

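This article describes three key algorithms used in customer-product action detection:

1) Customer action detection, based first on videos and then on single-frame images

2) RFID-based product rummage detection

3) Customer-product association based on graph matching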

The customer action detection algorithm detects customer actions first in videos and then in single-frame images, to meet new business needs.

Unlike pedestrian detection, interaction between customers and products is a time-series process: actions such as picking up, rummaging, and trying on last for a while. To understand user behavior precisely, it is therefore important to classify actions at the video level, with the model learning from and predicting on the entire clip. Driven by the wide application of deep neural networks, video action classification technology has developed rapidly in recent years.

The best-known models include the Convolutional Neural Network (CNN) model [1] proposed in 2014, the Long-term Recurrent Convolutional Network (LRCN) model [2] proposed in 2015, and the Inflated 3D ConvNet (I3D) model [3] proposed in 2017, which is built on Inception-V1. The LRCN model uses 2D convolution to extract features from single-frame images and a recurrent neural network (RNN) to capture the temporal relationships between frames. It works well for video action classification but takes a long time to train. The I3D model uses 3D convolution to extract features from the entire video, which speeds up training.

However, open-source datasets such as UCF101, HMDB51, and Kinetics cover actions such as sports and musical performance, but contain no data from shopping malls and supermarkets. We therefore built a dataset of action videos from shopping malls and supermarkets and provided it to outsourcing teams for annotation. In these scenarios, interactions between customers and products last for a very short time: only 0.4% of daily video contains such interactions, so positive samples are sparse.

To find as many positive samples as possible and make annotation by outsourcing teams more efficient, we use the Lucas-Kanade optical flow algorithm to pre-process the videos and keep only those with target activity. After this processing, the proportion of positive samples increases to 5%. To improve sample confidence, we also double-check the annotations of positive samples to ensure their accuracy.
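The article does not give the details of its optical-flow filter. As a minimal sketch, assuming grayscale frames as NumPy arrays, the code below estimates single-scale Lucas-Kanade flow on a grid of small windows and keeps a clip only if the mean flow magnitude exceeds a threshold. The function names, window size, grid step, and the 0.5 pixel-per-frame threshold are hypothetical; a production pipeline would more likely use a pyramidal implementation such as OpenCV's.

```python
import numpy as np

def lucas_kanade_flow(prev, curr, win=7, step=8):
    """Estimate (u, v) flow at a grid of window centers with the
    single-scale Lucas-Kanade least-squares solution."""
    prev = prev.astype(np.float64)
    curr = curr.astype(np.float64)
    iy, ix = np.gradient(prev)  # spatial gradients (rows = y, cols = x)
    it = curr - prev            # temporal gradient
    half = win // 2
    h, w = prev.shape
    flows = []
    for y in range(half, h - half, step):
        for x in range(half, w - half, step):
            sl = (slice(y - half, y + half + 1), slice(x - half, x + half + 1))
            a = np.stack([ix[sl].ravel(), iy[sl].ravel()], axis=1)  # N x 2
            b = -it[sl].ravel()  # brightness constancy: Ix*u + Iy*v = -It
            ata = a.T @ a
            # Skip textureless windows where the 2x2 system is singular.
            if np.linalg.det(ata) < 1e-6:
                continue
            flows.append(np.linalg.solve(ata, a.T @ b))
    return np.array(flows).reshape(-1, 2)

def has_motion(frames, min_mean_flow=0.5):
    """Keep a clip if any consecutive frame pair has a mean flow magnitude
    above a hypothetical threshold (pixels per frame)."""
    for prev, curr in zip(frames[:-1], frames[1:]):
        flow = lucas_kanade_flow(prev, curr)
        if flow.size and np.linalg.norm(flow, axis=1).mean() > min_mean_flow:
            return True
    return False
```

For example, a clip whose frames are identical is dropped, while a clip containing a one-pixel shift of a textured pattern is kept.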

Let's take a closer look at our attempts to detect actions in videos.

We use the Pose and Track algorithms to automatically crop the video into real-time short clips, each containing the actions of one of a customer's hands. We then use the I3D model to train a classifier for hand actions, and optimize the I3D model by simplifying (pruning) its structure.

Cropping each hand's actions into a short clip helps accurately determine where these actions occur. Customer-product actions such as picking up and rummaging are normally performed by hand, so the motion of other body parts can be filtered out without hurting detection results. Hand-focused cropping also reduces the model's computing workload and speeds up convergence. Here, the Pose model is based on OpenPose [4] and the Track model is based on Deep SORT [5]. Both models are mature and can be used directly.

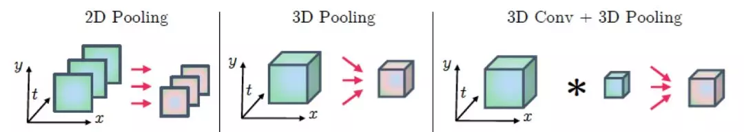
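The cropping step can be sketched as follows, assuming the pose and tracking models already provide, for each frame, the (x, y) pixel coordinates of one wrist of one tracked customer. The function name `crop_hand_clip` and the 112-pixel box size are hypothetical choices for illustration, not values from the article.

```python
import numpy as np

def crop_hand_clip(frames, wrist_xy, box=112):
    """Cut a fixed-size box centered on one tracked wrist in every frame.

    frames:   array of shape (T, H, W, C), video from one camera.
    wrist_xy: array of shape (T, 2), (x, y) pixel coordinates of the same
              wrist of the same tracked person (hypothetical pose/track output).
    Returns an array of shape (T, box, box, C) for the hand-action classifier.
    """
    t, h, w, c = frames.shape
    half = box // 2
    # Zero-pad so boxes near the image border keep a constant size.
    padded = np.pad(frames, ((0, 0), (half, half), (half, half), (0, 0)))
    clip = np.empty((t, box, box, c), dtype=frames.dtype)
    for i, (x, y) in enumerate(np.round(wrist_xy).astype(int)):
        # Clamp the center into the image, then shift by the padding offset.
        x = min(max(x, 0), w - 1) + half
        y = min(max(y, 0), h - 1) + half
        clip[i] = padded[i, y - half:y - half + box, x - half:x - half + box]
    return clip
```

Cropping a fixed-size box keeps every clip the same shape, which a 3D convolutional classifier expects as input.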

This article mainly describes how to use the I3D model to classify the cropped hand-action clips. Image-based learning tasks usually extract features with 2D convolution kernels through sliding and pooling. Video-based learning tasks extend 2D convolution to 3D convolution for feature extraction. As shown in Figure 3, 3D convolution adds a time dimension that 2D convolution lacks.

Figure 3 Diagrams of 2D convolution pooling and 3D convolution pooling

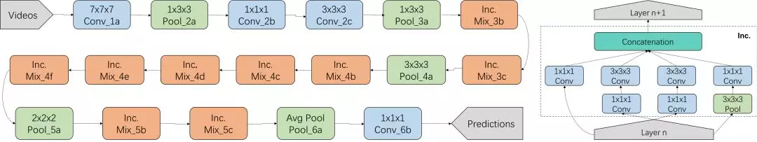
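To make the extra time dimension concrete, here is a naive NumPy sketch of a single-channel 3D convolution with "valid" padding (strictly a cross-correlation, as deep learning frameworks compute it). It is for illustration only; real models use optimized framework layers.

```python
import numpy as np

def conv3d_valid(video, kernel):
    """Naive 'valid' 3D convolution of a single-channel video with one kernel.

    video:  (T, H, W) array; kernel: (kt, kh, kw) array.
    The kernel slides along time as well as height and width, so each
    output value mixes information from kt consecutive frames.
    """
    t, h, w = video.shape
    kt, kh, kw = kernel.shape
    out = np.zeros((t - kt + 1, h - kh + 1, w - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            for k in range(out.shape[2]):
                out[i, j, k] = np.sum(video[i:i + kt, j:j + kh, k:k + kw] * kernel)
    return out
```

With a kernel of shape (2, 1, 1) holding [-1, 1], the layer computes the frame-to-frame difference at each pixel, a response that no 2D kernel applied to a single frame can produce.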

This article uses the I3D model, which extends traditional 2D convolution to 3D convolution. When it was proposed, this model achieved the best results on the Kinetics, HMDB51, and UCF101 datasets. Figure 4 shows the I3D model framework. In this schematic diagram, all convolution and pooling operations are 3D. Each Mix module is an Inception sub-module from the Inception-V1 model and consists of four branches, as shown on the right.

Figure 4 I3D model framework (left) and Inception sub-module structure

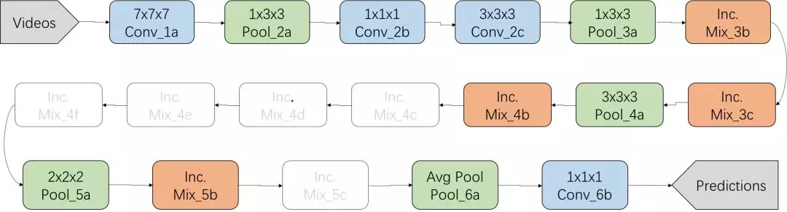

When we applied 3D convolution to customer behavior interpretation in shopping mall and supermarket scenarios, we found that the model has too many parameters and is prone to overfitting. Meanwhile, its deep network layers lead to poor generalization and make dropout less effective. To solve these problems, we simplified the Inception-V1-based model by removing unnecessary Inception modules (Mix_4c, Mix_4d, Mix_4e, Mix_4f, and Mix_5c) and reducing the depth (number of channels) of the convolution kernels in each remaining Inception module. Figure 5 shows the simplified model.

Figure 5 Simplified (pruned) I3D model
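As a rough illustration of why the 3D model is parameter-heavy, and why trimming modules and channels helps, the weight count of a single convolution layer can be computed directly. The channel count of 192 below is a hypothetical example, not a figure from the article.

```python
def conv3d_params(c_in, c_out, k=(3, 3, 3), bias=True):
    """Trainable weights in one convolution layer with a kt x kh x kw kernel."""
    kt, kh, kw = k
    return kt * kh * kw * c_in * c_out + (c_out if bias else 0)

# Hypothetical layer sizes for illustration.
full_3d = conv3d_params(192, 192)                   # 3x3x3 kernel
equivalent_2d = conv3d_params(192, 192, (1, 3, 3))  # same layer without time extent
halved_3d = conv3d_params(96, 96)                   # channel depth halved
print(full_3d, equivalent_2d, halved_3d)            # 995520 331968 248928
```

A 3 × 3 × 3 kernel has about three times the weights of its 3 × 3 counterpart, and halving both input and output channels cuts a layer's weights to roughly a quarter.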

After this simplification, the model's classification accuracy increases from 80.5% to 87.0%. After hyperparameter tuning, the accuracy finally reaches 92%.

The samples are converted to TFRecord format and uploaded to Alibaba Group's data storage center. Models are trained in a distributed environment (30 GPUs) on Machine Learning Platform for AI (PAI), and the trained model is applied to customer behavior interpretation. Actual prediction results of the current algorithm are shown in the video video_action_recognition_results.mp4. Currently, the back-end server can detect customer actions in a single video in real time.

Compared with traditional image-based detection, however, video action classification has the following problems.

The customer action detection algorithm based on video convolution offers high accuracy (92%) and an accurate action location (down to the wrist). However, the 3D convolution (I3D) model and the Pose model have many parameters and are complex, so one server can run only one instance of the detection algorithm, which limits computing throughput. In addition, the 3D convolution algorithm requires videos that contain continuous hand actions over a period of time, which places heavy demands on the tracking algorithm. To apply action detection to multi-store scenarios with multi-channel surveillance signals, we further developed action detection based on single-frame images.

This algorithm classifies and detects potential actions in a single image. Compared with video-based action detection, action detection based on single-frame images is less accurate, because a single image carries little information. However, the lower accuracy is compensated for by integrating the RFID detection results.

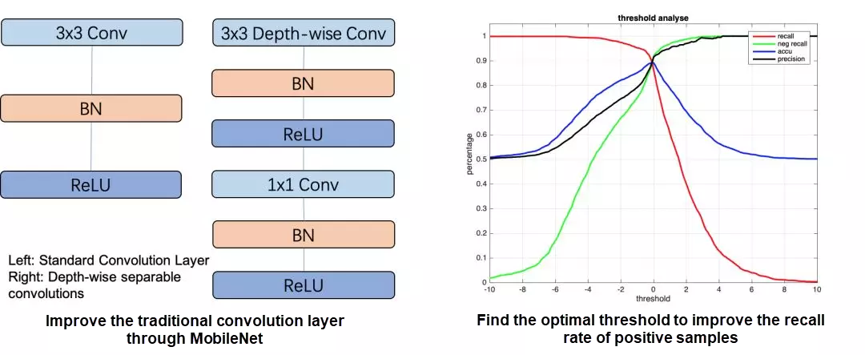

Potential action detection on a single image is a typical binary classification problem. To reduce computing requirements, we use MobileNet [6] as the classification model. MobileNet is an efficient, lightweight network that reduces the computational cost of traditional convolution layers by factorizing them into depthwise convolutions and pointwise convolutions.

Compared with traditional convolutional networks, MobileNet keeps accuracy almost unchanged while reducing the amount of computation and the number of parameters to about 10-20% of the original. This article uses the MobileNet_V1 model and sets the depth multiplier, which scales the number of channels in each convolution layer, to 0.5 to further reduce the model size. We commissioned an outsourcing team to annotate image action datasets, and balanced the samples so that training uses similar numbers of positive and negative samples.
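The savings can be checked with the cost formulas from the MobileNet paper [6]. A standard convolution with a Dk × Dk kernel, M input channels, N output channels, and a DF × DF feature map costs Dk·Dk·M·N·DF·DF multiply-accumulates. The depthwise-plus-pointwise version costs Dk·Dk·M·DF·DF + M·N·DF·DF, a ratio of 1/N + 1/Dk². The layer sizes below are hypothetical, and applying the multiplier to both M and N follows the paper's width multiplier.

```python
def standard_conv_macs(dk, m, n, df):
    """Multiply-accumulates of a standard dk x dk convolution layer."""
    return dk * dk * m * n * df * df

def separable_conv_macs(dk, m, n, df, alpha=1.0):
    """Multiply-accumulates of a depthwise (dk x dk) + pointwise (1 x 1) layer.
    alpha scales the number of input and output channels."""
    m, n = int(alpha * m), int(alpha * n)
    depthwise = dk * dk * m * df * df  # one dk x dk filter per input channel
    pointwise = m * n * df * df        # 1 x 1 convolution mixes channels
    return depthwise + pointwise

# Hypothetical layer: 3x3 kernel, 512 -> 512 channels, 14x14 feature map.
std = standard_conv_macs(3, 512, 512, 14)
sep = separable_conv_macs(3, 512, 512, 14)
sep_half = separable_conv_macs(3, 512, 512, 14, alpha=0.5)
print(f"separable / standard: {sep / std:.3f}")     # 0.113 (= 1/512 + 1/9)
print(f"alpha=0.5 / alpha=1:  {sep_half / sep:.3f}")  # 0.254
```

With a 3 × 3 kernel and 512 channels, the separable layer needs about 11% of the standard cost, and a multiplier of 0.5 cuts it to roughly a quarter of that again.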

Because the business scenario requires capturing as many moments with actions as possible, we optimize parameters based on the model's logits output. This keeps the classification accuracy at about 89.0% while raising the recall of positive samples to 90%, so that as many positive samples as possible are recalled.
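The article does not specify which parameters were tuned on the logits output. One common way to trade precision for recall, assumed here, is to choose the decision threshold on a validation set. The positive samples' scores are sorted, and the largest threshold that still recalls the target fraction is taken. For a two-logit binary classifier the score could be the difference between the positive and negative logits. The function names are hypothetical.

```python
import numpy as np

def threshold_for_recall(logits, labels, target_recall=0.9):
    """Largest threshold on positive-class scores that still recalls at
    least `target_recall` of the positive samples (score >= threshold)."""
    logits = np.asarray(logits, dtype=float)
    labels = np.asarray(labels).astype(bool)
    pos = np.sort(logits[labels])[::-1]  # positive scores, high to low
    # Number of positives that must be recalled; epsilon guards float error.
    k = max(1, int(np.ceil(target_recall * len(pos) - 1e-9)))
    return pos[k - 1]

def evaluate(logits, labels, threshold):
    """Recall of positives and overall accuracy at a given threshold."""
    logits = np.asarray(logits, dtype=float)
    labels = np.asarray(labels).astype(bool)
    pred = logits >= threshold
    recall = (pred & labels).sum() / labels.sum()
    accuracy = (pred == labels).mean()
    return recall, accuracy
```

Lowering the threshold this way raises recall at the cost of more false positives, which the later association with RFID results is meant to compensate for.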

Figure 6 Optimized MobileNet model

The image-based action detection algorithm reaches a classification accuracy of 89% and a recall of 90%. Its accuracy is slightly lower than that of the video-based algorithm, and the detected position is not precise to the wrist. Figure 7 shows sample predictions of whether a customer picks up clothes. Images with a gray stripe at the bottom are positive samples, and images with a black stripe at the bottom are negative samples.

Figure 7 Sample result of image-based action classification

Because the image-based action detection algorithm uses the lightweight MobileNet model, its computing complexity is much lower: screening the tens of thousands of potential action images a store produces in one day takes only about 10 minutes. The algorithm processes only the images sent to the back-end server, which greatly reduces the workload of onsite devices and servers. However, it only predicts the pedestrian location accurately and does not provide the exact position of the action. In addition, images captured several seconds before and after the detected image may also be classified as positive samples for the same action, which reduces the accuracy of time correlation.

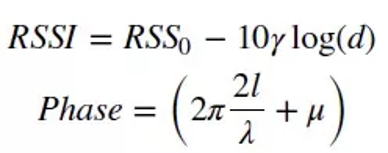

When a customer rummages through clothes, the RFID tags attached to the clothes move slightly. The RFID receiver records changes in feature values such as the received signal strength indicator (RSSI) and phase of the received signals, and transmits them to the back-end server. The algorithm analyzes the feature values reported by each antenna to determine whether a product has been rummaged. Detecting product rummage actions from RFID signals is affected by many factors, including signal noise, the multipath effect, accidental electromagnetic interference, and signal shielding by counters. In addition, there is a non-linear relationship [7] between the magnitude of the RFID reflected signal and the receiver-tag distance.

In this formula, d indicates the distance between the RFID tag and the receiver, and l = d mod λ. λ is determined by the multipath effect and the current environment. μ indicates the offset caused by errors of various static devices. As the formula shows, the receiver installation location and the store environment have a great impact on RFID signals. This makes it challenging to build a product rummage detection algorithm that works for receivers installed at different locations in different stores.

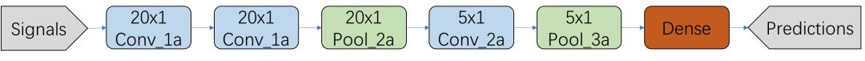

We first established a supervised model to detect product rummage actions. We collected the RSSI and phase values of time-series signals from two RFID antennas deployed in the store and combined them into the following features:

In this table, Ant1 and Ant2 indicate the signals collected by the two antennas from the same RFID tag, Diff indicates a difference operation on the signals, and Avg indicates a mean operation on the signals. Each sample consists of consecutive signals collected for 8 seconds at 50 frames per second, forming a 400 × 10 two-dimensional feature matrix (8 s × 50 frames/s = 400 frames, with 10 features per frame). We then trained the model shown in Figure 8 on these features using a self-built dataset. The final classification accuracy is 91.9%.

Figure 8 RFID CNN model
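The windowing step can be sketched as follows. The 8-second window and 50 frames per second come from the text. The exact ten feature columns are a hypothetical choice, because the original feature table is not available here, and the function name is illustrative.

```python
import numpy as np

SAMPLE_RATE_HZ = 50  # frames per second, as stated in the text
WINDOW_S = 8         # seconds per sample, as stated in the text

def build_features(rssi1, phase1, rssi2, phase2):
    """Stack one tag's two-antenna signals into a (400, 10) feature matrix.

    The ten columns are a hypothetical choice: raw Ant1/Ant2 RSSI and
    phase (4), their Diff (2) and Avg (2), and the frame-to-frame change
    of the two Avg columns (2). Uses the most recent 400 frames.
    """
    n = SAMPLE_RATE_HZ * WINDOW_S  # 400 frames
    cols = [np.asarray(s, dtype=float) for s in (rssi1, phase1, rssi2, phase2)]
    if any(len(c) < n for c in cols):
        raise ValueError(f"need at least {n} frames per signal")
    r1, p1, r2, p2 = (c[-n:] for c in cols)
    diff = [r1 - r2, p1 - p2]
    avg = [(r1 + r2) / 2, (p1 + p2) / 2]
    # Temporal change of the averaged signals; prepend keeps length n.
    delta = [np.diff(a, prepend=a[0]) for a in avg]
    return np.stack([r1, p1, r2, p2, *diff, *avg, *delta], axis=1)
```

Each resulting matrix is one training sample for the supervised model.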

The product rummage detection algorithm using a supervised learning model achieves high prediction accuracy, but it generalizes poorly in practical applications. When the counter or antenna location changes, the RFID signals change dramatically, so a model trained on the original dataset hardly applies to the new scenario. To cope with this problem, we use an unsupervised model to improve the generalization capability of the detection algorithm.

Specifically, note that phase information depends on the relative offset rather than the spatial location, as shown in the phase frequency distribution chart. Frequency information depends on the action speed rather than the spatial location, as shown in the frequency-domain distribution chart.

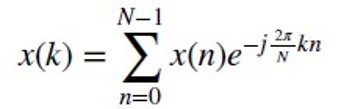

Strictly speaking, amplitude information depends on the spatial location. However, we treat frequency information as independent of the spatial location because we focus only on the frequency distribution (the relative proportions of different frequency bands), not on the absolute amplitude. To obtain the frequency information, we apply the Discrete Fourier Transform (DFT) to the RSSI and phase values of the received signals, as shown below.

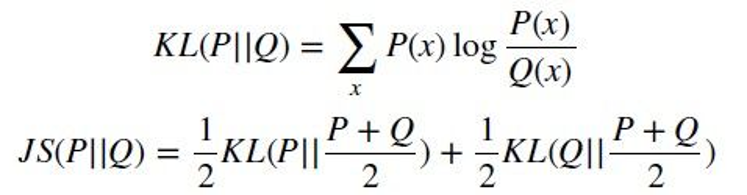
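A minimal sketch of this step, assuming each feature stream is a NumPy array sampled at a fixed rate: the power spectrum from `numpy.fft.rfft` is summed into a fixed number of bands and normalized, so the result describes only the proportions of the bands. The number of bands and the use of power rather than magnitude are assumptions. For phase streams, unwrapping with `numpy.unwrap` before the transform would usually be needed.

```python
import numpy as np

def band_distribution(signal, n_bands=8):
    """Normalized energy per frequency band of one RFID feature stream.

    The mean is removed first so that a constant offset (such as a static
    RSSI level) does not dominate. Returns a length-n_bands probability
    vector that sums to 1.
    """
    x = np.asarray(signal, dtype=float)
    spectrum = np.abs(np.fft.rfft(x - x.mean())) ** 2
    bands = np.array_split(spectrum[1:], n_bands)  # skip the DC bin
    energy = np.array([b.sum() for b in bands])
    total = energy.sum()
    if total == 0:  # perfectly flat signal: spread mass uniformly
        return np.full(n_bands, 1.0 / n_bands)
    return energy / total
```

Because the output is normalized, scaling the signal or adding a constant offset leaves the distribution unchanged.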

We then obtain the distributions of the frequency signals and phase signals, and calculate the Jensen-Shannon (JS) divergence between the current probability distribution and that of the previous moment. The JS divergence extends the Kullback-Leibler (KL) divergence: unlike KL divergence, it is symmetric and always finite, so it can serve as a distance-like score between two probability distributions.
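For reference, the standard definitions are given below, where P and Q are two discrete distributions (for example, band distributions at consecutive moments) and M is their mixture. With the natural logarithm, the JS divergence lies between 0 and ln 2.

$$
D_{\mathrm{KL}}(P \parallel Q) = \sum_i P(i) \ln \frac{P(i)}{Q(i)}, \qquad
M = \frac{1}{2}(P + Q), \qquad
D_{\mathrm{JS}}(P \parallel Q) = \frac{1}{2} D_{\mathrm{KL}}(P \parallel M) + \frac{1}{2} D_{\mathrm{KL}}(Q \parallel M)
$$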

The model detects product rummage actions based on the JS divergence between samples at two consecutive moments. The divergence threshold is adjusted for each scenario to achieve detection accuracy similar to that of the supervised learning model.
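The detection rule can be sketched in a few lines, assuming one band distribution per moment as input. The JS divergence follows the standard definition; the function names and the default threshold of 0.1 are hypothetical, since the article tunes the threshold per scenario.

```python
import numpy as np

def js_divergence(p, q):
    """Jensen-Shannon divergence (natural log) between two discrete distributions."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    p, q = p / p.sum(), q / q.sum()
    m = 0.5 * (p + q)

    def kl(a, b):
        mask = a > 0  # terms with a == 0 contribute 0; b >= a / 2 > 0 here
        return np.sum(a[mask] * np.log(a[mask] / b[mask]))

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

def detect_rummage(distributions, threshold=0.1):
    """Indices t where the distribution at moment t diverges from the one
    at t - 1 by more than `threshold` (a hypothetical per-scenario value)."""
    return [t for t in range(1, len(distributions))
            if js_divergence(distributions[t - 1], distributions[t]) > threshold]
```

In the sequence [0.5, 0.5], [0.5, 0.5], [1.0, 0.0], [1.0, 0.0], only moment 2 exceeds the threshold, with a divergence of about 0.22.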

Both a supervised and an unsupervised learning model were used to detect product rummage actions, and the algorithm precisely locates the SKU of rummaged products. The supervised model reaches a detection accuracy of 91.9%, and the unsupervised model is slightly higher at 94%. The JS divergence measurement improves the generalization capability of the algorithm, and its threshold can be adjusted to fit different scenarios.

Image-based customer behavior detection and RFID-based product rummage detection are two separate processes. RFID-based detection identifies the rummaged product but not the customer who performed the action. Conversely, image-based action detection identifies the customer with a potential rummage action but not the rummaged product.

In actual scenarios, only a few actions occur at the same time in the same location. It is therefore possible to match, by time, the rummaged product found by RFID-based detection with the customer predicted by image-based detection. However, associating customers and products this way still has problems.

The cumulative error between the time a customer with a potential rummage action is detected and the time a rummaged product is detected can be 5 to 15 seconds. This error may occur in any of the following cases:

As a result, multiple customers with potential rummage actions and multiple seemingly rummaged products may appear at neighboring locations and moments.

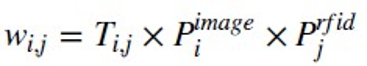

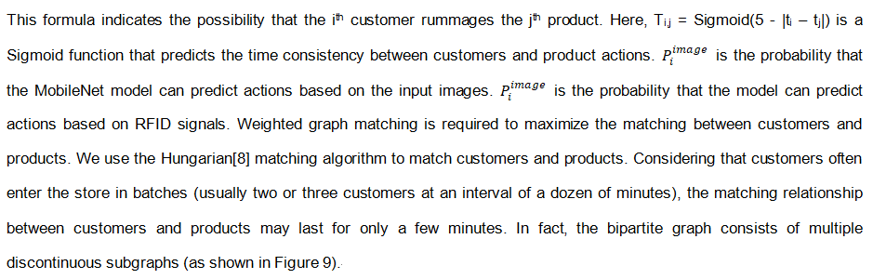

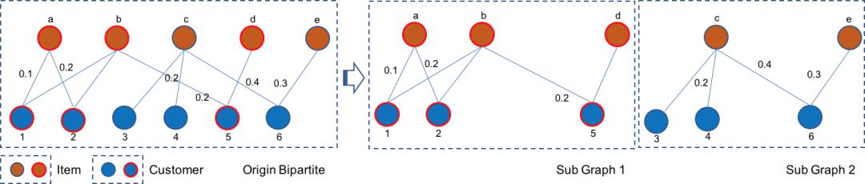

This algorithm associates the actions detected by RFID devices near a shelf with the customers predicted by image-based detection. It takes into account both time consistency and action potentiality, so that the customer, product, action, and moment from the two algorithms match each other. When multiple customers have potential actions in the same area, or multiple products in the same area appear to be rummaged, graph matching is performed to find the best match between these products and customers. In this article, the degree of matching between customers and products (edge weight) is defined as follows:

Figure 9 Bipartite graph and sub-graphs of customer-product graph matching

To reduce computing and storage complexity, the original bipartite graph is divided into several disconnected sub-graphs, and each sub-graph is matched separately. The edge weights of each sub-graph are stored in an adjacency matrix, which greatly improves algorithm efficiency. The time taken to match hundreds or even thousands of customers and products for a whole day drops from several hours to several minutes.
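The split-then-match procedure can be sketched as follows. The matching formula itself is not reproduced here, so `edge_weight` is hypothetical: the image-based action score discounted exponentially by the time gap between the two detections, with no edge beyond 15 seconds (the upper end of the error range mentioned above). Sub-graphs are found as connected components with union-find, and each small sub-graph is matched by exhaustive search for the maximum total weight. Exhaustive search is only practical for a handful of nodes; larger sub-graphs call for a polynomial-time method such as the Hungarian method [8]. All names and constants are illustrative.

```python
import itertools
import math
from collections import defaultdict

def edge_weight(customer_t, action_score, product_t, tau=5.0, max_gap=15.0):
    """Hypothetical matching degree: action score discounted by the time gap
    (seconds). Pairs more than max_gap apart get weight 0, i.e. no edge."""
    gap = abs(customer_t - product_t)
    if gap > max_gap:
        return 0.0
    return action_score * math.exp(-gap / tau)

def split_into_subgraphs(edges):
    """Connected components of the bipartite graph via union-find.
    edges: (customer_id, product_id) pairs with non-zero weight.
    Returns a sorted list of (customer_ids, product_ids) tuples."""
    parent = {}

    def find(x):
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for c, p in edges:
        # Tag ids so that customer 1 and product 1 are different nodes.
        parent[find(("c", c))] = find(("p", p))

    groups = defaultdict(lambda: ([], []))
    for node in list(parent):
        kind, ident = node
        groups[find(node)][0 if kind == "c" else 1].append(ident)
    return sorted((sorted(cs), sorted(ps)) for cs, ps in groups.values())

def best_matching(weights):
    """Maximum-weight one-to-one matching of one small sub-graph by exhaustive
    search; weights[i][j] is the weight between customer i and product j.
    Returns (customer, product) index pairs with positive weight."""
    n_c = len(weights)
    n_p = len(weights[0]) if n_c else 0
    transpose = n_c > n_p
    if transpose:  # always permute over the larger side
        weights = [list(col) for col in zip(*weights)]
        n_c, n_p = n_p, n_c
    best_total, best_perm = -1.0, ()
    for perm in itertools.permutations(range(n_p), n_c):
        total = sum(weights[i][j] for i, j in enumerate(perm))
        if total > best_total:
            best_total, best_perm = total, perm
    pairs = [(i, j) for i, j in enumerate(best_perm) if weights[i][j] > 0]
    if transpose:
        pairs = [(j, i) for i, j in pairs]
    return sorted(pairs)
```

For the weight matrix [[0.8, 0.7], [0.75, 0.0]], greedily giving customer 0 its best product would leave customer 1 unmatched. The optimal matching instead pairs customer 0 with product 1 and customer 1 with product 0, for a total of 1.45.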

The graph-matching-based customer-product association algorithm finds the best matches between customers and products for a whole day, and after the improvements it runs in minutes instead of hours. Because the association algorithm is the last step in the customer-product detection process, its accuracy depends on the upstream models, including the accuracy of customer detection and of the customer-product matching degree. Single-frame image-based customer action detection has an accuracy of 89%, and RFID-based product rummage detection has an accuracy of 94%.

The customer-product detection process integrates the three algorithms described above to make predictions in a store.

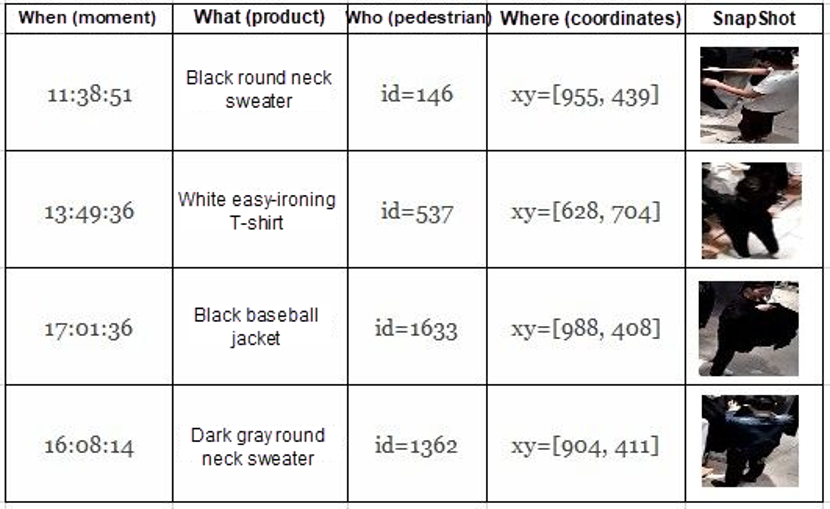

The following table lists some sample results of these algorithms for illustration. It describes four factors of each interaction: When (moment), Where (coordinates in the image), Who (pedestrian ID), and What (product SKU).

The pedestrian ID is the number the customer detection algorithm assigns to each customer entering the store. The coordinates are extracted from the image and transformed into physical locations in the store in later steps. The snapshot captures the moment of a product rummage action.

Although single-frame image-based action detection has relatively low accuracy, it significantly improves the accuracy of customer-product association. A comparison of the customer-product data produced with the two action detection algorithms shows that using single-frame images raises the final matching accuracy from 40.6% to 85.8%.

Because the customer-product association algorithm is the last step in the detection process, its accuracy is affected by the accuracy of the upstream models. Adding two factors, time association degree and action potentiality, to graph matching raises the accuracy of matching between customers and rummaged products to 85.8%. The following conclusions are drawn from the practice discussed in this article.

However, these algorithms are still at an early stage and need further work in the following aspects:

1) The pedestrian action detection algorithm based on single-frame images still has much room for optimization. Increase the number of positive samples in the dataset from about one thousand to tens of thousands to significantly improve performance, and try stronger classification models such as VGG, ResNet, and Inception once server computing capacity improves.

2) Currently, the action detection algorithm based on single-frame images is accurate only to the pedestrian location. Try a new detection model that accurately predicts where the action occurs.

3) Currently, an RFID receiver can only detect signals from dozens to hundreds of products within a distance of 1-3 m, and its capacity and range are further limited by the collection frequency and the antenna polling mechanism. Optimize the hardware used by the RFID-based detection algorithm to reduce costs and improve detection capacity, range, and accuracy.

4) The unsupervised RFID-based detection model still requires threshold adjustment. Optimize this method so that it applies to different stores.

5) Currently, the customer-product association is implemented based on the time and action potentiality. Associate the product location with the customer location to further improve the association's accuracy.

References:

[1] Karpathy, Andrej, et al. Large-scale Video Classification with Convolutional Neural Networks. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2014.

[2] Donahue, Jeffrey, et al. Long-term Recurrent Convolutional Networks for Visual Recognition and Description. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2015.

[3] Carreira, Joao, and Andrew Zisserman. Quo Vadis, Action Recognition? A New Model and the Kinetics Dataset. Computer Vision and Pattern Recognition (CVPR), 2017 IEEE Conference on. IEEE, 2017.

[4] Cao, Zhe, et al. Realtime Multi-person 2d Pose Estimation Using Part Affinity Fields. arXiv preprint arXiv:1611.08050 (2016).

[5] Wojke, Nicolai, Alex Bewley, and Dietrich Paulus. Simple Online and Real-time Tracking with a Deep Association Metric. Image Processing (ICIP), 2017 IEEE International Conference on. IEEE, 2017.

[6] Howard, Andrew G., et al. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv preprint arXiv:1704.04861 (2017).

[7] Liu, Tianci, et al. TagBooth: Deep Shopping Data Acquisition Powered by RFID Tags. Computer Communications (INFOCOM), 2015 IEEE Conference on. IEEE, 2015.

[8] Kuhn, Harold W. The Hungarian Method for the Assignment Problem. Naval Research Logistics Quarterly 2.1-2 (1955): 83-97.
