By Hang Yin

The service mesh is a cloud-native, application-aware infrastructure designed to provide applications with standard, universal, and non-intrusive capabilities for traffic management, observability, and security. Dubbo is an easy-to-use, high-performance microservice development framework that addresses service governance and communication challenges in microservice architectures. Official SDK implementations are available for Java, Golang, and other languages.

By integrating into the service mesh, Dubbo applications can seamlessly gain several cloud-native capabilities, including mTLS encrypted communication, unified cloud-native observability (Prometheus, logs, and mesh topology), and non-intrusive traffic management. Using Alibaba Cloud Service Mesh (ASM) as the example, this article describes how microservice applications built with the Dubbo framework can be integrated into the service mesh, so that the microservice system connects seamlessly to cloud-native infrastructure.

First, prepare an ACK cluster and an ASM instance on Alibaba Cloud as the deployment environment for your applications.

• An ASM instance is created. For specific operations, please refer to Create an ASM Instance or Update an ASM Instance.

• The cluster is added to the ASM instance. For specific operations, please refer to Add a cluster to an ASM instance.

• A namespace named dubbo-demo is created in the ASM instance, and automatic Sidecar injection is enabled for the namespace. For specific operations, please refer to Manage Global Namespaces.

If possible, we strongly recommend updating your application to use Dubbo3 and communicate via the Triple protocol. Dubbo3 is better adapted to cloud-native deployment methods and provides the Triple protocol as a communication specification. The Triple protocol is fully compatible with gRPC, supports Request-Response, Streaming, and other communication models, and can run on both HTTP/1 and HTTP/2. With the Triple protocol, the Layer 7 communication information between Dubbo applications can be identified by the service mesh, providing more comprehensive cloud-native capabilities.

When using Dubbo2 and the Dubbo protocol for communication, the service mesh can only intercept TCP Layer 4 traffic, limiting the service mesh capabilities that can be utilized.

The Dubbo community already provides a standard solution for integrating into the service mesh. In general, we recommend that you follow this solution when implementing your Dubbo applications, so that service-to-service calls work in a cloud-native manner and integrate seamlessly into the service mesh.

Reference:

https://github.com/apache/dubbo-samples/tree/master/3-extensions/registry/dubbo-samples-mesh-k8s

Use the kubeconfig file provided by the ACK cluster to connect to the cluster and run the following command to deploy the application:

kubectl apply -f- <<EOF
apiVersion: v1
kind: Service
metadata:
  name: dubbo-samples-mesh-provider
  namespace: dubbo-demo
spec:
  type: ClusterIP
  sessionAffinity: None
  selector:
    app: dubbo-samples-mesh-provider
  ports:
    - name: grpc-tri
      port: 50052
      targetPort: 50052
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: dubbo-samples-mesh-provider-v1
  namespace: dubbo-demo
spec:
  replicas: 2
  selector:
    matchLabels:
      app: dubbo-samples-mesh-provider
      version: v1
  template:
    metadata:
      labels:
        app: dubbo-samples-mesh-provider
        version: v1
      annotations:
        # Prevent istio rewrite http probe
        sidecar.istio.io/rewriteAppHTTPProbers: "false"
    spec:
      containers:
        - name: server
          image: apache/dubbo-demo:dubbo-samples-mesh-provider-v1_0.0.1
          imagePullPolicy: Always
          ports:
            - name: grpc-tri
              containerPort: 50052
              protocol: TCP
            - name: http-health
              containerPort: 22222
              protocol: TCP
          livenessProbe:
            httpGet:
              path: /live
              port: http-health
            initialDelaySeconds: 10
            periodSeconds: 5
            timeoutSeconds: 1
          readinessProbe:
            httpGet:
              path: /ready
              port: http-health
            initialDelaySeconds: 5
            periodSeconds: 5
            timeoutSeconds: 2
          startupProbe:
            httpGet:
              path: /startup
              port: http-health
            failureThreshold: 30
            initialDelaySeconds: 10
            periodSeconds: 5
            timeoutSeconds: 2
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: dubbo-samples-mesh-provider-v2
  namespace: dubbo-demo
spec:
  replicas: 2
  selector:
    matchLabels:
      app: dubbo-samples-mesh-provider
      version: v2
  template:
    metadata:
      labels:
        app: dubbo-samples-mesh-provider
        version: v2
      annotations:
        # Prevent istio rewrite http probe
        sidecar.istio.io/rewriteAppHTTPProbers: "false"
    spec:
      containers:
        - name: server
          image: apache/dubbo-demo:dubbo-samples-mesh-provider-v2_0.0.1
          imagePullPolicy: Always
          ports:
            - name: grpc-tri
              containerPort: 50052
              protocol: TCP
            - name: http-health
              containerPort: 22222
              protocol: TCP
          livenessProbe:
            httpGet:
              path: /live
              port: http-health
            initialDelaySeconds: 10
            periodSeconds: 5
            timeoutSeconds: 1
          readinessProbe:
            httpGet:
              path: /ready
              port: http-health
            initialDelaySeconds: 5
            periodSeconds: 5
            timeoutSeconds: 2
          startupProbe:
            httpGet:
              path: /startup
              port: http-health
            failureThreshold: 30
            initialDelaySeconds: 10
            periodSeconds: 5
            timeoutSeconds: 2
---
apiVersion: v1
kind: Service
metadata:
  name: dubbo-samples-mesh-consumer
  namespace: dubbo-demo
spec:
  type: ClusterIP
  sessionAffinity: None
  selector:
    app: dubbo-samples-mesh-consumer
  ports:
    - name: grpc-dubbo
      protocol: TCP
      port: 50052
      targetPort: 50052
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: dubbo-samples-mesh-consumer
  namespace: dubbo-demo
spec:
  replicas: 1
  selector:
    matchLabels:
      app: dubbo-samples-mesh-consumer
      version: v1
  template:
    metadata:
      labels:
        app: dubbo-samples-mesh-consumer
        version: v1
      annotations:
        # Prevent istio rewrite http probe
        sidecar.istio.io/rewriteAppHTTPProbers: "false"
    spec:
      containers:
        - name: server
          image: apache/dubbo-demo:dubbo-samples-mesh-consumer_0.0.1
          imagePullPolicy: Always
          ports:
            - name: grpc-tri
              containerPort: 50052
              protocol: TCP
            - name: http-health
              containerPort: 22222
              protocol: TCP
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            # This environment variable does not need to be configured by default. When the domain name suffix used inside k8s is artificially changed, it is only necessary to configure this
            #- name: CLUSTER_DOMAIN
            #  value: cluster.local
          livenessProbe:
            httpGet:
              path: /live
              port: http-health
            initialDelaySeconds: 5
            periodSeconds: 5
          readinessProbe:
            httpGet:
              path: /ready
              port: http-health
            initialDelaySeconds: 5
            periodSeconds: 5
          startupProbe:
            httpGet:
              path: /startup
              port: http-health
            failureThreshold: 30
            initialDelaySeconds: 5
            periodSeconds: 5
            timeoutSeconds: 2
EOF

The deployed application contains a consumer and a provider. The consumer initiates a call to the provider.
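The Service and container ports in the manifests above carry names such as grpc-tri and http-health. Istio-based meshes can select the protocol of a port from its name, following the `<protocol>[-<suffix>]` convention, which is presumably why the sample names its Triple ports with a grpc prefix. The following Python sketch illustrates that convention only. The function name and the protocol list are assumptions made for this example and are not an Istio or ASM API.

```python
# Protocol prefixes used by Istio's explicit protocol selection.
# Assumption: this list is reproduced for illustration and may not be
# exhaustive for every Istio/ASM version.
KNOWN_PROTOCOLS = {
    "http", "http2", "https", "grpc", "grpc-web",
    "tcp", "tls", "mongo", "mysql", "redis", "udp",
}


def protocol_from_port_name(port_name: str) -> str:
    """Return the protocol implied by a port name, or 'unknown'."""
    name = port_name.lower()
    # Check longer prefixes first so "grpc-web-x" maps to "grpc-web", not "grpc".
    for proto in sorted(KNOWN_PROTOCOLS, key=len, reverse=True):
        if name == proto or name.startswith(proto + "-"):
            return proto
    return "unknown"


if __name__ == "__main__":
    for port in ("grpc-tri", "grpc-dubbo", "http-health", "dubbo-qos"):
        print(port, "->", protocol_from_port_name(port))
```

A port whose name yields "unknown" here (for example dubbo-qos) would not be declared explicitly as a Layer 7 protocol by its name.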

For the service code, you can directly view the official Dubbo repository in the preceding reference. According to the official description, the configuration of the Dubbo application contains several key points:

@DubboReference(version = "1.0.0", providedBy = "dubbo-samples-mesh-provider", lazy = true)

For detailed configuration files, please refer to dubbo.properties.
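Without a registry, the consumer reaches the provider through its Kubernetes Service name. The sketch below shows how such an address could be assembled from the providedBy value, the POD_NAMESPACE variable, and the optional CLUSTER_DOMAIN variable that appear in the consumer Deployment. It is a minimal illustration, not Dubbo's actual implementation: the function name, the default values, and the explicit port argument are assumptions.

```python
import os


def mesh_service_address(provided_by, port, env=None):
    """Build a Kubernetes Service address of the form name.namespace.svc.domain:port.

    provided_by: the Service name given in providedBy.
    port: the Service port (passed explicitly here; an assumption of this sketch).
    env: mapping of environment variables; defaults to the process environment.
    """
    env = os.environ if env is None else env
    namespace = env.get("POD_NAMESPACE", "default")              # assumed default
    cluster_domain = env.get("CLUSTER_DOMAIN", "cluster.local")  # assumed default
    return f"{provided_by}.{namespace}.svc.{cluster_domain}:{port}"


if __name__ == "__main__":
    print(mesh_service_address(
        "dubbo-samples-mesh-provider", 50052, {"POD_NAMESPACE": "dubbo-demo"}
    ))
    # prints dubbo-samples-mesh-provider.dubbo-demo.svc.cluster.local:50052
```

The resulting host name has the same shape as the authority recorded in the istio-proxy access log for calls to the provider.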

Next, use kubectl to view the istio-proxy log on the consumer side. You can see that the requests sent to the provider leave access logs in istio-proxy.

kubectl -n dubbo-demo logs deploy/dubbo-samples-mesh-consumer -c istio-proxy|grep outbound

...

{"authority_for":"dubbo-samples-mesh-provider.dubbo-demo.svc.cluster.local:50052","bytes_received":"140","bytes_sent":"161","downstream_local_address":"192.168.4.76:50052","downstream_remote_address":"10.0.239.171:56276","duration":"15","istio_policy_status":"-","method":"POST","path":"/org.apache.dubbo.samples.Greeter/greetStream","protocol":"HTTP/2","request_id":"b613c0f5-1834-45d8-9857-8547d8899a02","requested_server_name":"-","response_code":"200","response_flags":"-","route_name":"default","start_time":"2024-07-15T06:56:01.457Z","trace_id":"-","upstream_cluster":"outbound|50052||dubbo-samples-mesh-provider.dubbo-demo.svc.cluster.local","upstream_host":"10.0.239.196:50052","upstream_local_address":"10.0.239.171:41202","upstream_response_time":"6","upstream_service_time":"6","upstream_transport_failure_reason":"-","user_agent":"-","x_forwarded_for":"-"}

Note that the upstream_cluster field in the access logs is now outbound|50052||dubbo-samples-mesh-provider.dubbo-demo.svc.cluster.local, which confirms that the provider is correctly registered with the service mesh and that the requests are intercepted and routed by the service mesh.
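To check this field across many log lines, the access log can be parsed programmatically. The following Python sketch splits upstream_cluster into its pipe-separated parts (direction, port, subset, host) and extracts the service and method from the request path. The function name and the shortened sample line are assumptions for illustration; the sample keeps only a few of the fields shown in the log above.

```python
import json


def summarize_access_log(line: str) -> dict:
    """Extract routing details from one JSON-formatted istio-proxy access log line."""
    entry = json.loads(line)
    # Istio names Envoy clusters as direction|port|subset|host.
    direction, port, subset, host = entry["upstream_cluster"].split("|")
    # A gRPC-style path has the form /<service>/<method>.
    service, _, method = entry["path"].lstrip("/").partition("/")
    return {
        "direction": direction,
        "port": int(port),
        "subset": subset or None,  # empty when no subset was selected
        "host": host,
        "service": service,
        "method": method,
        "protocol": entry["protocol"],
    }


if __name__ == "__main__":
    # Shortened sample: a subset of the fields from the log line above.
    sample = json.dumps({
        "path": "/org.apache.dubbo.samples.Greeter/greetStream",
        "protocol": "HTTP/2",
        "upstream_cluster": "outbound|50052||dubbo-samples-mesh-provider.dubbo-demo.svc.cluster.local",
    })
    print(summarize_access_log(sample))
```

An empty subset, as in this log line, indicates that no destination rule subset was applied to the request.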

Connect to the ASM instance using kubectl (refer to Use Kubectl on the Control Plane to Access Istio Resources), and run the following commands with kubectl.

kubectl apply -f- <<EOF
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: dubbo-samples-mesh-provider
  namespace: dubbo-demo
spec:
  hosts:
    - dubbo-samples-mesh-provider.dubbo-demo.svc.cluster.local
  http:
    - route:
        - destination:
            host: dubbo-samples-mesh-provider.dubbo-demo.svc.cluster.local
            subset: v1
            port:
              # Specifies the port on the host being addressed. If the service exposes only one port, you don't need to choose the port explicitly
              number: 50052
          weight: 80
        - destination:
            host: dubbo-samples-mesh-provider.dubbo-demo.svc.cluster.local
            subset: v2
            port:
              # Specifies the port on the host being addressed. If the service exposes only one port, you don't need to choose the port explicitly
              number: 50052
          weight: 20
---
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: dubbo-samples-mesh-provider
  namespace: dubbo-demo
spec:
  host: dubbo-samples-mesh-provider.dubbo-demo.svc.cluster.local
  trafficPolicy:
    loadBalancer:
      # Envoy load balancing strategy
      simple: ROUND_ROBIN
  subsets:
    - name: v1
      labels:
        version: v1
    - name: v2
      labels:
        version: v2
EOF

The destination rule above divides the provider into two versions, v1 and v2, based on pod labels, and the virtual service specifies the traffic ratio to v1 and v2 versions to be approximately 80:20.
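The ratio is approximate because each request is assigned to a subset independently according to the weights, so small samples can deviate noticeably from 80:20. The Python sketch below simulates such a weighted choice to show how the observed ratio converges as the number of requests grows. It is a simplified model under stated assumptions: the function names are hypothetical, and the random selection here is not the algorithm the sidecar proxy actually uses.

```python
import random
from collections import Counter

# Weights taken from the virtual service above.
ROUTES = [("v1", 80), ("v2", 20)]


def pick_subset(rng: random.Random, routes=ROUTES) -> str:
    """Choose one subset at random, proportionally to its weight."""
    names = [name for name, _ in routes]
    weights = [weight for _, weight in routes]
    return rng.choices(names, weights=weights, k=1)[0]


def simulate(requests: int, seed: int = 0) -> Counter:
    """Count how many of `requests` simulated calls land on each subset."""
    rng = random.Random(seed)
    return Counter(pick_subset(rng) for _ in range(requests))


if __name__ == "__main__":
    for n in (10, 100, 10_000):
        counts = simulate(n)
        print(n, "requests ->", {k: f"{v / n:.1%}" for k, v in sorted(counts.items())})
```

With only a handful of requests, as in a short excerpt of the consumer log, the observed split can differ from the configured weights.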

At this point, redeploy the consumer (you can delete the existing consumer pod and wait for a new pod to start), and after some time, view the new consumer logs. You can observe that the traffic ratio to the provider has changed.

kubectl -n dubbo-demo logs deploy/dubbo-samples-mesh-consumer

...

==================== dubbo unary invoke 0 end ====================

[15/07/24 07:06:54:054 UTC] main INFO action.GreetingServiceConsumer: consumer Unary reply <-message: "hello,service mesh, response from provider-v2: 10.0.239.196:50052, client: 10.0.239.196, local: dubbo-samples-mesh-provider, remote: null, isProviderSide: true"

==================== dubbo unary invoke 1 end ====================

[15/07/24 07:06:59:059 UTC] main INFO action.GreetingServiceConsumer: consumer Unary reply <-message: "hello,service mesh, response from provider-v1: 10.0.239.189:50052, client: 10.0.239.189, local: dubbo-samples-mesh-provider, remote: null, isProviderSide: true"

==================== dubbo unary invoke 2 end ====================

[15/07/24 07:07:04:004 UTC] main INFO action.GreetingServiceConsumer: consumer Unary reply <-message: "hello,service mesh, response from provider-v1: 10.0.239.189:50052, client: 10.0.239.189, local: dubbo-samples-mesh-provider, remote: null, isProviderSide: true"

==================== dubbo unary invoke 3 end ====================

[15/07/24 07:07:09:009 UTC] main INFO action.GreetingServiceConsumer: consumer Unary reply <-message: "hello,service mesh, response from provider-v1: 10.0.239.175:50052, client: 10.0.239.175, local: dubbo-samples-mesh-provider, remote: null, isProviderSide: true"

==================== dubbo unary invoke 4 end ====================

[15/07/24 07:07:14:014 UTC] main INFO action.GreetingServiceConsumer: consumer Unary reply <-message: "hello,service mesh, response from provider-v1: 10.0.239.175:50052, client: 10.0.239.175, local: dubbo-samples-mesh-provider, remote: null, isProviderSide: true"

==================== dubbo unary invoke 5 end ====================

[15/07/24 07:07:19:019 UTC] main INFO action.GreetingServiceConsumer: consumer Unary reply <-message: "hello,service mesh, response from provider-v1: 10.0.239.189:50052, client: 10.0.239.189, local: dubbo-samples-mesh-provider, remote: null, isProviderSide: true"

...

As shown above, with the approach officially provided by Dubbo, Dubbo services can call each other directly using cloud-native Kubernetes Service domain names.

At this point, the various capabilities of the service mesh can be used without restriction, and Dubbo services behave no differently from regular HTTP services.
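To check the traffic split from the consumer log instead of reading it line by line, the provider version in each reply can be counted. The following Python sketch does this with a regular expression that matches the "response from provider-vN" text in the log lines shown earlier. The function name and the shortened sample lines are assumptions for illustration.

```python
import re
from collections import Counter

# Matches the provider version reported in each consumer reply line.
VERSION_RE = re.compile(r"response from provider-(v\d+)")


def count_provider_versions(log_text: str) -> Counter:
    """Count how many replies in the consumer log came from each provider version."""
    return Counter(VERSION_RE.findall(log_text))


if __name__ == "__main__":
    # Shortened sample lines in the same shape as the consumer log above.
    sample_log = "\n".join([
        'consumer Unary reply <-message: "hello,service mesh, response from provider-v2: 10.0.239.196:50052"',
        'consumer Unary reply <-message: "hello,service mesh, response from provider-v1: 10.0.239.189:50052"',
        'consumer Unary reply <-message: "hello,service mesh, response from provider-v1: 10.0.239.175:50052"',
    ])
    counts = count_provider_versions(sample_log)
    total = sum(counts.values())
    for version, n in sorted(counts.items()):
        print(f"{version}: {n}/{total}")
```

The function takes the log as a single string, so the output of the kubectl logs command shown earlier can be saved to a file and passed in.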

Although the preceding best practice integrates Dubbo seamlessly with cloud-native infrastructure, it requires that applications do not use a registry. For Dubbo applications that already rely on a registry and are in operation, switching to this approach immediately may not be feasible.

In such cases, ASM provides relevant plug-ins in the plug-in center to allow applications to first integrate into the service mesh and then transition to the community's best practice cloud-native solution.

Connect to the cluster using the kubeconfig file of the ACK cluster, and use kubectl to deploy the sample application with the following YAML:

apiVersion: v1
kind: ConfigMap
metadata:
  name: frontend-config
  namespace: dubbo-demo
data:
  application.properties: |-
    # Specify the application name of Dubbo
    dubbo.application.name=shop-frontend
    # Specify the QoS port
    dubbo.application.qos-port=20991
    # Enable token verification for each invocation
    dubbo.provider.token=false
    # Specify the registry address
    # dubbo.registry.address=nacos://localhost:8848?username=nacos&password=nacos
    dubbo.registry.address=nacos://${nacos.address:localhost}:8848?username=nacos&password=nacos
    # Specify the port of Dubbo protocol
    dubbo.protocol.port=20881
    dubbo.protocol.name=tri
    dubbo.protocol.serialization=hessian2
    #spring.freemarker.template-loader-path: /templates
    spring.freemarker.suffix=.ftl
    server.servlet.encoding.force=true
    server.servlet.encoding.charset=utf-8
    server.servlet.encoding.enabled=true
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: shop-order-v1-config
  namespace: dubbo-demo
data:
  application.properties: |-
    # Specify the application name of Dubbo
    dubbo.application.name=shop-order
    # Specify the QoS port
    dubbo.application.qos-port=20992
    # Enable token verification for each invocation
    dubbo.provider.token=false
    # Specify the registry address
    # dubbo.registry.address=nacos://localhost:8848?username=nacos&password=nacos
    dubbo.registry.address=nacos://${nacos.address:localhost}:8848?username=nacos&password=nacos
    # Specify the port of Dubbo protocol
    dubbo.protocol.port=20882
    dubbo.protocol.name=tri
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: shop-order-v2-config
  namespace: dubbo-demo
data:
  application.properties: |-
    # Specify the application name of Dubbo
    dubbo.application.name=shop-order
    # Specify the QoS port
    dubbo.application.qos-port=20993
    # Enable token verification for each invocation
    dubbo.provider.token=false
    # Specify the registry address
    # dubbo.registry.address=nacos://localhost:8848?username=nacos&password=nacos
    dubbo.registry.address=nacos://${nacos.address:localhost}:8848?username=nacos&password=nacos
    # Specify the port of Dubbo protocol
    dubbo.protocol.port=20882
    dubbo.protocol.name=tri
    dubbo.protocol.serialization=hessian2
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: shop-user-config
  namespace: dubbo-demo
data:
  application.properties: |-
    # Specify the application name of Dubbo
    dubbo.application.name=shop-user
    # Specify the QoS port
    dubbo.application.qos-port=20994
    # Enable token verification for each invocation
    dubbo.provider.token=false
    # Specify the registry address
    # dubbo.registry.address=nacos://localhost:8848?username=nacos&password=nacos
    dubbo.registry.address=nacos://${nacos.address:localhost}:8848?username=nacos&password=nacos
    # Specify the port of Dubbo protocol
    dubbo.protocol.port=20884
    dubbo.protocol.name=tri
    dubbo.protocol.serialization=hessian2
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: shop-detail-v1-config
  namespace: dubbo-demo
data:
  application.properties: |-
    # Specify the application name of Dubbo
    dubbo.application.name=shop-detail
    # Specify the QoS port
    dubbo.application.qos-port=20995
    # Enable token verification for each invocation
    dubbo.provider.token=false
    # Specify the registry address
    # dubbo.registry.address=nacos://localhost:8848?username=nacos&password=nacos
    dubbo.registry.address=nacos://${nacos.address:localhost}:8848?username=nacos&password=nacos
    # Specify the port of Dubbo protocol
    dubbo.protocol.port=20885
    dubbo.protocol.name=tri
    dubbo.protocol.serialization=hessian2
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: shop-detail-v2-config
  namespace: dubbo-demo
data:
  application.properties: |-
    # Specify the application name of Dubbo
    dubbo.application.name=shop-detail
    # Specify the QoS port
    dubbo.application.qos-port=20996
    # Enable token verification for each invocation
    dubbo.provider.token=false
    # Specify the registry address
    # dubbo.registry.address=nacos://localhost:8848?username=nacos&password=nacos
    dubbo.registry.address=nacos://${nacos.address:localhost}:8848?username=nacos&password=nacos
    # Specify the port of Dubbo protocol
    dubbo.protocol.port=20885
    dubbo.protocol.name=tri
    dubbo.protocol.serialization=hessian2
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: comment-v1-config
  namespace: dubbo-demo
data:
  application.properties: |-
    dubbo.application.name=shop-comment
    # Specify the QoS port
    dubbo.application.qos-port=20997
    # Enable token verification for each invocation
    dubbo.provider.token=false
    # Specify the registry address
    # dubbo.registry.address=nacos://localhost:8848?username=nacos&password=nacos
    dubbo.registry.address=nacos://${nacos.address:localhost}:8848?username=nacos&password=nacos
    # Specify the port of Dubbo protocol
    dubbo.protocol.port=20887
    dubbo.protocol.name=tri
    dubbo.protocol.serialization=hessian2
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: comment-v2-config
  namespace: dubbo-demo
data:
  application.properties: |-
    # Specify the application name of Dubbo
    dubbo.application.name=shop-comment
    # Specify the QoS port
    dubbo.application.qos-port=20998
    # Enable token verification for each invocation
    dubbo.provider.token=false
    # Specify the registry address
    # dubbo.registry.address=nacos://localhost:8848?username=nacos&password=nacos
    dubbo.registry.address=nacos://${nacos.address:localhost}:8848?username=nacos&password=nacos
    # Specify the port of Dubbo protocol
    dubbo.protocol.port=20888
    dubbo.protocol.name=tri
    dubbo.protocol.serialization=hessian2
---

apiVersion: v1
kind: Namespace
metadata:
  name: dubbo-system
---
# Nacos
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nacos
  namespace: dubbo-system
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nacos
  template:
    metadata:
      labels:
        app: nacos
    spec:
      containers:
        - name: consumer
          image: nacos/nacos-server:v2.1.2
          imagePullPolicy: Always
          resources:
            requests:
              memory: "2Gi"
              cpu: "500m"
          ports:
            - containerPort: 8848
              name: client
            - containerPort: 9848
              name: client-rpc
          env:
            - name: NACOS_SERVER_PORT
              value: "8848"
            - name: NACOS_APPLICATION_PORT
              value: "8848"
            - name: PREFER_HOST_MODE
              value: "hostname"
            - name: MODE
              value: "standalone"
            - name: NACOS_AUTH_ENABLE
              value: "true"
---
apiVersion: v1
kind: Service
metadata:
  name: nacos
  namespace: dubbo-system
spec:
  type: ClusterIP
  sessionAffinity: None
  selector:
    app: nacos
  ports:
    - port: 8848
      name: server
      targetPort: 8848
    - port: 9848
      name: client-rpc
      targetPort: 9848
---
# Dubbo Admin
apiVersion: v1
kind: ConfigMap
metadata:
  name: dubbo-admin
  namespace: dubbo-system
data:
  # Set the properties you want to override, properties not set here will be using the default values
  # check application.properties inside dubbo-admin project for the keys supported
  application.properties: |
    admin.registry.address=nacos://nacos.dubbo-system.svc:8848?username=nacos&password=nacos
    admin.config-center=nacos://nacos.dubbo-system.svc:8848?username=nacos&password=nacos
    admin.metadata-report.address=nacos://nacos.dubbo-system.svc:8848?username=nacos&password=nacos
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: dubbo-admin
  namespace: dubbo-system
  labels:
    app: dubbo-admin
spec:
  replicas: 2
  selector:
    matchLabels:
      app: dubbo-admin
  template:
    metadata:
      labels:
        app: dubbo-admin
    spec:
      containers:
        - image: apache/dubbo-admin:0.6.0
          name: dubbo-admin
          ports:
            - containerPort: 38080
          volumeMounts:
            - mountPath: /config
              name: application-properties
      volumes:
        - name: application-properties
          configMap:
            name: dubbo-admin
---
apiVersion: v1
kind: Service
metadata:
  name: dubbo-admin
  namespace: dubbo-system
spec:
  selector:
    app: dubbo-admin
  ports:
    - protocol: TCP
      port: 38080
      targetPort: 38080
---
# Skywalking
apiVersion: apps/v1
kind: Deployment
metadata:
  name: skywalking-oap-server
  namespace: dubbo-system
spec:
  replicas: 1
  selector:
    matchLabels:
      app: skywalking-oap-server
  template:
    metadata:
      labels:
        app: skywalking-oap-server
    spec:
      containers:
        - name: skywalking-oap-server
          image: apache/skywalking-oap-server:9.3.0
          imagePullPolicy: Always
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: skywalking-oap-dashboard
  namespace: dubbo-system
spec:
  replicas: 1
  selector:
    matchLabels:
      app: skywalking-oap-dashboard
  template:
    metadata:
      labels:
        app: skywalking-oap-dashboard
    spec:
      containers:
        - name: skywalking-oap-dashboard
          image: apache/skywalking-ui:9.3.0
          imagePullPolicy: Always
          env:
            - name: SW_OAP_ADDRESS
              value: http://skywalking-oap-server.dubbo-system.svc:12800
---
apiVersion: v1
kind: Service
metadata:
  name: skywalking-oap-server
  namespace: dubbo-system
spec:
  type: ClusterIP
  sessionAffinity: None
  selector:
    app: skywalking-oap-server
  ports:
    - port: 12800
      name: rest
      targetPort: 12800
    - port: 11800
      name: grpc
      targetPort: 11800
---
apiVersion: v1
kind: Service
metadata:
  name: skywalking-oap-dashboard
  namespace: dubbo-system
spec:
  type: ClusterIP
  sessionAffinity: None
  selector:
    app: skywalking-oap-dashboard
  ports:
    - port: 8080
      name: http
      targetPort: 8080
---

apiVersion: v1
kind: Service
metadata:
  labels:
    app: shop-frontend
  name: shop-frontend
  namespace: dubbo-demo
spec:
  ports:
    - name: http-8080
      port: 8080
      protocol: TCP
      targetPort: 8080
    - name: grpc-tri
      port: 20881
      protocol: TCP
      targetPort: 20881
  selector:
    app: shop-frontend
---
# App FrontEnd
apiVersion: apps/v1
kind: Deployment
metadata:
  name: shop-frontend
  namespace: dubbo-demo
spec:
  replicas: 1
  selector:
    matchLabels:
      app: shop-frontend
  template:
    metadata:
      labels:
        app: shop-frontend
    spec:
      volumes:
        - name: skywalking-agent
          emptyDir: { }
        - name: config-volume
          configMap:
            name: frontend-config
            items:
              - key: application.properties
                path: application.properties
      initContainers:
        - name: agent-container
          image: apache/skywalking-java-agent:8.13.0-java17
          volumeMounts:
            - name: skywalking-agent
              mountPath: /agent
          command: [ "/bin/sh" ]
          args: [ "-c", "cp -R /skywalking/agent /agent/" ]
      containers:
        - name: shop-frontend
          image: apache/dubbo-demo:dubbo-samples-shop-frontend_0.0.1
          imagePullPolicy: Always
          ports:
            - name: http-8080
              containerPort: 8080
              protocol: TCP
            - name: grpc-tri
              containerPort: 20881
              protocol: TCP
            - name: dubbo-qos
              containerPort: 20991
              protocol: TCP
          volumeMounts:
            - name: skywalking-agent
              mountPath: /skywalking
            - name: config-volume
              mountPath: /app/resources/application.properties
              subPath: application.properties
          env:
            - name: JAVA_TOOL_OPTIONS
              value: "-javaagent:/skywalking/agent/skywalking-agent.jar"
            - name: SW_AGENT_NAME
              value: shop::shop-frontend
            - name: SW_AGENT_COLLECTOR_BACKEND_SERVICES
              value: "skywalking-oap-server.dubbo-system.svc:11800"
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: shop-order
  name: shop-order
  namespace: dubbo-demo
spec:
  ports:
    - name: grpc-tri
      port: 20882
      protocol: TCP
      targetPort: 20882
  selector:
    app: shop-order
---
# App Order V1-1
apiVersion: apps/v1
kind: Deployment
metadata:
  name: shop-order-v1
  namespace: dubbo-demo
spec:
  replicas: 2
  selector:
    matchLabels:
      app: shop-order
      orderVersion: v1
  template:
    metadata:
      labels:
        app: shop-order
        orderVersion: v1
    spec:
      volumes:
        - name: skywalking-agent
          emptyDir: { }
        - name: config-volume
          configMap:
            name: shop-order-v1-config
            items:
              - key: application.properties
                path: application.properties
      initContainers:
        - name: agent-container
          image: apache/skywalking-java-agent:8.13.0-java17
          volumeMounts:
            - name: skywalking-agent
              mountPath: /agent
          command: [ "/bin/sh" ]
          args: [ "-c", "cp -R /skywalking/agent /agent/" ]
      containers:
        - name: shop-order
          image: apache/dubbo-demo:dubbo-samples-shop-order_v1_0.0.1
          imagePullPolicy: Always
          ports:
            - name: grpc-tri
              containerPort: 20882
              protocol: TCP
            - name: dubbo-qos
              containerPort: 20992
              protocol: TCP
          volumeMounts:
            - name: skywalking-agent
              mountPath: /skywalking
            - name: config-volume
              mountPath: /app/resources/application.properties
              subPath: application.properties
          env:
            - name: JAVA_TOOL_OPTIONS
              value: "-javaagent:/skywalking/agent/skywalking-agent.jar"
            - name: SW_AGENT_NAME
              value: shop::shop-order
            - name: SW_AGENT_COLLECTOR_BACKEND_SERVICES
              value: "skywalking-oap-server.dubbo-system.svc:11800"
            - name: DUBBO_LABELS
              value: "orderVersion=v1"
---
# App Order V2
apiVersion: apps/v1
kind: Deployment
metadata:
  name: shop-order-v2
  namespace: dubbo-demo
spec:
  replicas: 1
  selector:
    matchLabels:
      app: shop-order
      orderVersion: v2
  template:
    metadata:
      labels:
        app: shop-order
        orderVersion: v2
    spec:
      volumes:
        - name: skywalking-agent
          emptyDir: { }
        - name: config-volume
          configMap:
            name: shop-order-v2-config
            items:
              - key: application.properties
                path: application.properties
      initContainers:
        - name: agent-container
          image: apache/skywalking-java-agent:8.13.0-java17
          volumeMounts:
            - name: skywalking-agent
              mountPath: /agent
          command: [ "/bin/sh" ]
          args: [ "-c", "cp -R /skywalking/agent /agent/" ]
      containers:
        - name: shop-order
          image: apache/dubbo-demo:dubbo-samples-shop-order_v2_0.0.1
          imagePullPolicy: Always
          ports:
            - name: grpc-tri
              containerPort: 20882
              protocol: TCP
            - name: dubbo-qos
              containerPort: 20993
              protocol: TCP
          volumeMounts:
            - name: skywalking-agent
              mountPath: /skywalking
            - name: config-volume
              mountPath: /app/resources/application.properties
              subPath: application.properties
          env:
            - name: JAVA_TOOL_OPTIONS
              value: "-javaagent:/skywalking/agent/skywalking-agent.jar"
            - name: SW_AGENT_NAME
              value: shop::shop-order
            - name: SW_AGENT_COLLECTOR_BACKEND_SERVICES
              value: "skywalking-oap-server.dubbo-system.svc:11800"
            - name: DUBBO_LABELS
              value: "orderVersion=v2;"
---

apiVersion: v1
kind: Service
metadata:
  labels:
    app: shop-user
  name: shop-user
  namespace: dubbo-demo
spec:
  ports:
    - name: grpc-tri
      port: 20884
      protocol: TCP
      targetPort: 20884
  selector:
    app: shop-user
---
# App User
apiVersion: apps/v1
kind: Deployment
metadata:
  name: shop-user
  namespace: dubbo-demo
spec:
  replicas: 1
  selector:
    matchLabels:
      app: shop-user
  template:
    metadata:
      labels:
        app: shop-user
    spec:
      volumes:
        - name: skywalking-agent
          emptyDir: { }
        - name: config-volume
          configMap:
            name: shop-user-config
            items:
              - key: application.properties
                path: application.properties
      initContainers:
        - name: agent-container
          image: apache/skywalking-java-agent:8.13.0-java17
          volumeMounts:
            - name: skywalking-agent
              mountPath: /agent
          command: [ "/bin/sh" ]
          args: [ "-c", "cp -R /skywalking/agent /agent/" ]
      containers:
        - name: shop-user
          image: apache/dubbo-demo:dubbo-samples-shop-user_0.0.1
          imagePullPolicy: Always
          ports:
            - name: grpc-tri
              containerPort: 20884
              protocol: TCP
            - name: dubbo-qos
              containerPort: 20994
              protocol: TCP
          volumeMounts:
            - name: skywalking-agent
              mountPath: /skywalking
            - name: config-volume
              mountPath: /app/resources/application.properties
              subPath: application.properties
          env:
            - name: JAVA_TOOL_OPTIONS
              value: "-javaagent:/skywalking/agent/skywalking-agent.jar"
            - name: SW_AGENT_NAME
              value: shop::shop-user
            - name: SW_AGENT_COLLECTOR_BACKEND_SERVICES
              value: "skywalking-oap-server.dubbo-system.svc:11800"
---

apiVersion: v1

kind: Service

metadata:

labels:

app: shop-detail

name: shop-detail

namespace: dubbo-demo

spec:

ports:

- name: grpc-tri

port: 20885

protocol: TCP

targetPort: 20885

selector:

app: shop-detail

---

# App Detail-1

apiVersion: apps/v1

kind: Deployment

metadata:

name: shop-detail-v1

namespace: dubbo-demo

spec:

replicas: 1

selector:

matchLabels:

app: shop-detail

detailVersion: v1

template:

metadata:

labels:

app: shop-detail

detailVersion: v1

spec:

volumes:

- name: skywalking-agent

emptyDir: { }

- name: config-volume

configMap:

name: shop-detail-v1-config

items:

- key: application.properties

path: application.properties

initContainers:

- name: agent-container

image: apache/skywalking-java-agent:8.13.0-java17

volumeMounts:

- name: skywalking-agent

mountPath: /agent

command: [ "/bin/sh" ]

args: [ "-c", "cp -R /skywalking/agent /agent/" ]

containers:

- name: shop-detail

image: apache/dubbo-demo:dubbo-samples-shop-detail_0.0.1

imagePullPolicy: Always

ports:

- name: grpc-tri

containerPort: 20885

protocol: TCP

- name: dubbo-qos

containerPort: 20995

protocol: TCP

volumeMounts:

- name: skywalking-agent

mountPath: /skywalking

- name: config-volume

mountPath: /app/resources/application.properties

subPath: application.properties

env:

- name: JAVA_TOOL_OPTIONS

value: "-javaagent:/skywalking/agent/skywalking-agent.jar"

- name: SW_AGENT_NAME

value: shop::shop-detail

- name: SW_AGENT_COLLECTOR_BACKEND_SERVICES

value: "skywalking-oap-server.dubbo-system.svc:11800"

- name: DUBBO_LABELS

value: "detailVersion=v1; region=beijing"

---

# App Detail-2

apiVersion: apps/v1

kind: Deployment

metadata:

name: shop-detail-v2

namespace: dubbo-demo

spec:

replicas: 1

selector:

matchLabels:

app: shop-detail

detailVersion: v2

template:

metadata:

labels:

app: shop-detail

detailVersion: v2

spec:

volumes:

- name: skywalking-agent

emptyDir: { }

- name: config-volume

configMap:

name: shop-detail-v2-config

items:

- key: application.properties

path: application.properties

initContainers:

- name: agent-container

image: apache/skywalking-java-agent:8.13.0-java17

volumeMounts:

- name: skywalking-agent

mountPath: /agent

command: [ "/bin/sh" ]

args: [ "-c", "cp -R /skywalking/agent /agent/" ]

containers:

- name: shop-detail

image: apache/dubbo-demo:dubbo-samples-shop-detail_v2_0.0.1

imagePullPolicy: Always

ports:

- name: grpc-tri

containerPort: 20885

protocol: TCP

- name: dubbo-qos

containerPort: 20996

protocol: TCP

volumeMounts:

- name: skywalking-agent

mountPath: /skywalking

- name: config-volume

mountPath: /app/resources/application.properties

subPath: application.properties

env:

- name: JAVA_TOOL_OPTIONS

value: "-javaagent:/skywalking/agent/skywalking-agent.jar"

- name: SW_AGENT_NAME

value: shop::shop-detail

- name: SW_AGENT_COLLECTOR_BACKEND_SERVICES

value: "skywalking-oap-server.dubbo-system.svc:11800"

- name: DUBBO_LABELS

value: "detailVersion=v2; region=hangzhou;"

---

apiVersion: v1

kind: Service

metadata:

labels:

app: shop-comment

name: shop-comment

namespace: dubbo-demo

spec:

ports:

- name: grpc-tri

port: 20887

protocol: TCP

targetPort: 20887

selector:

app: shop-comment

---

#App Comment v1
apiVersion: apps/v1
kind: Deployment
metadata:
  name: shop-comment-v1
  namespace: dubbo-demo
spec:
  replicas: 1
  selector:
    matchLabels:
      app: shop-comment
      commentVersion: v1
  template:
    metadata:
      labels:
        app: shop-comment
        commentVersion: v1
    spec:
      volumes:
        - name: skywalking-agent
          emptyDir: { }
        - name: config-volume
          configMap:
            name: comment-v1-config
            items:
              - key: application.properties
                path: application.properties
      initContainers:
        - name: agent-container
          image: apache/skywalking-java-agent:8.13.0-java17
          volumeMounts:
            - name: skywalking-agent
              mountPath: /agent
          command: [ "/bin/sh" ]
          args: [ "-c", "cp -R /skywalking/agent /agent/" ]
      containers:
        - name: shop-comment
          image: apache/dubbo-demo:dubbo-samples-shop-comment_0.0.1
          imagePullPolicy: Always
          ports:
            - name: grpc-tri
              containerPort: 20887
              protocol: TCP
            - name: dubbo-qos
              containerPort: 20997
              protocol: TCP
          volumeMounts:
            - name: skywalking-agent
              mountPath: /skywalking
            - name: config-volume
              mountPath: /app/resources/application.properties
              subPath: application.properties
          env:
            - name: JAVA_TOOL_OPTIONS
              value: "-javaagent:/skywalking/agent/skywalking-agent.jar"
            - name: SW_AGENT_NAME
              value: shop::shop-comment
            - name: SW_AGENT_COLLECTOR_BACKEND_SERVICES
              value: "skywalking-oap-server.dubbo-system.svc:11800"
            - name: DUBBO_LABELS
              value: "commentVersion=v1; region=beijing"

---

#App Comment v2
apiVersion: apps/v1
kind: Deployment
metadata:
  name: shop-comment-v2
  namespace: dubbo-demo
spec:
  replicas: 1
  selector:
    matchLabels:
      app: shop-comment
      commentVersion: v2
  template:
    metadata:
      labels:
        app: shop-comment
        commentVersion: v2
    spec:
      volumes:
        - name: skywalking-agent
          emptyDir: { }
        - name: config-volume
          configMap:
            name: comment-v2-config
            items:
              - key: application.properties
                path: application.properties
      initContainers:
        - name: agent-container
          image: apache/skywalking-java-agent:8.13.0-java17
          volumeMounts:
            - name: skywalking-agent
              mountPath: /agent
          command: [ "/bin/sh" ]
          args: [ "-c", "cp -R /skywalking/agent /agent/" ]
      containers:
        - name: shop-comment
          image: apache/dubbo-demo:dubbo-samples-shop-comment_v2_0.0.1
          imagePullPolicy: Always
          ports:
            - name: grpc-tri
              containerPort: 20887
              protocol: TCP
            - name: dubbo-qos
              containerPort: 20998
              protocol: TCP
          volumeMounts:
            - name: skywalking-agent
              mountPath: /skywalking
            - name: config-volume
              mountPath: /app/resources/application.properties
              subPath: application.properties
          env:
            - name: JAVA_TOOL_OPTIONS
              value: "-javaagent:/skywalking/agent/skywalking-agent.jar"
            - name: SW_AGENT_NAME
              value: shop::shop-comment
            - name: SW_AGENT_COLLECTOR_BACKEND_SERVICES
              value: "skywalking-oap-server.dubbo-system.svc:11800"
            - name: DUBBO_LABELS
              value: "commentVersion=v2; region=hangzhou;"

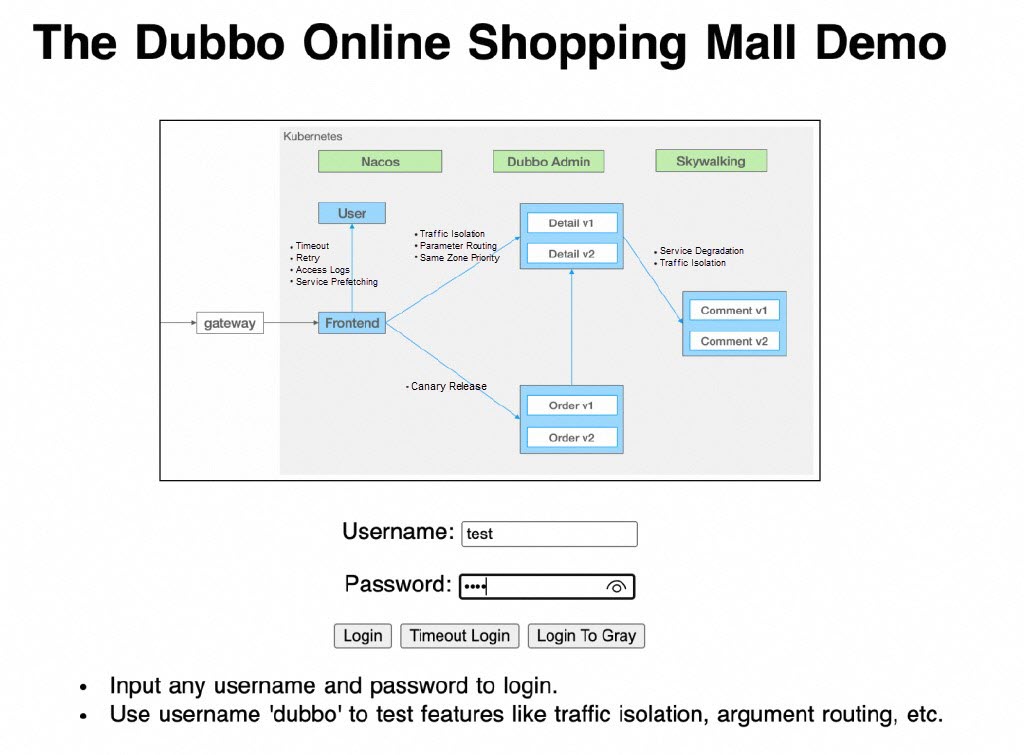

EOFThe sample service uses the sample-shop application from the Dubbo community. For details about the application and its code implementation, please refer to:

https://github.com/apache/dubbo-samples/tree/master/10-task/dubbo-samples-shop

All services in this sample application are connected to a Nacos registry (deployed in the dubbo-system namespace), which demonstrates how applications transition from using a registry to using a service mesh.

Compared with the sample application provided by the community, the YAML above makes the following changes for scenario demonstration:

When Dubbo applications use a registry, the caller learns the provider's address from the registry and calls that address directly, rather than going through the Kubernetes Service domain name. Therefore, simply injecting a Sidecar into such applications does not allow the Sidecar to intercept and manage traffic between services.

ASM provides the "Support Spring Cloud Services" plug-in, whose main principle is to add a host header to requests so that the service mesh can identify the target Kubernetes Service and manage traffic accordingly. The same solution also lets Dubbo applications transition to service mesh capabilities, but it requires that the Dubbo applications use an HTTP-based RPC protocol, such as Triple.
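To make the idea concrete, the sketch below builds the kind of authority value that the mesh can match against a Kubernetes Service, in the `service.namespace.svc.cluster.local:port` form that appears in the access logs later in this article. The helper name `service_authority` and the `cluster.local` default domain are assumptions for illustration only; this is not the plug-in's implementation.

```python
def service_authority(service, namespace, port, cluster_domain="cluster.local"):
    """Build the host:port string for a Kubernetes Service.

    Hypothetical helper for illustration; cluster_domain defaults to the
    common Kubernetes value and may differ in your cluster.
    """
    return f"{service}.{namespace}.svc.{cluster_domain}:{port}"


# The shop-detail Service in the dubbo-demo namespace, Triple port 20885.
authority = service_authority("shop-detail", "dubbo-demo", 20885)
print(authority)
```

A request carrying such a host value can be matched to a Service known to the mesh, whereas a request addressed only to a raw Pod IP cannot.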

Here are the specific steps to enable the plug-in:

For the Support Spring Cloud Services plug-in, the main parameters are pod_cidrs and provider_port_number.

• pod_cidrs: The Pod CIDR of the ACK or ASK cluster. You can log on to the ACK Console and view the Pod CIDR under the Basic Information tab on the cluster information page. If the container network plug-in is Terway, you can view the CIDR corresponding to the Pod vSwitch under the Cluster Resources tab on the cluster information page for configuration. This parameter is required and the default value is just an example.

• provider_port_number: The service port of the provider. When multiple providers are deployed in the cluster with different service ports, you can configure multiple plug-in instances or leave this parameter unconfigured.
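Before saving the plug-in configuration, it can help to sanity-check that the Pod IPs in your cluster actually fall inside the pod_cidrs value you plan to use. The following is a minimal sketch using Python's standard `ipaddress` module; the helper name `in_pod_cidrs`, the sample CIDR, and the sample addresses are assumptions for illustration.

```python
import ipaddress


def in_pod_cidrs(address, pod_cidrs):
    """Return True if an IPv4 'ip' or 'ip:port' string falls in any of the CIDRs.

    Hypothetical helper; assumes IPv4 addresses such as '10.0.239.169:20885'.
    """
    host = address.rsplit(":", 1)[0]  # drop the port if one is present
    ip = ipaddress.ip_address(host)
    return any(ip in ipaddress.ip_network(cidr) for cidr in pod_cidrs)


pod_cidrs = ["10.0.0.0/16"]  # example value only; use your cluster's Pod CIDR
print(in_pod_cidrs("10.0.239.169:20885", pod_cidrs))
print(in_pod_cidrs("192.168.1.5", pod_cidrs))
```

If an address you expect to be a Pod IP is reported as outside the configured ranges, the pod_cidrs value likely does not match the cluster's actual Pod network.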

For example, you can fill in the following parameters:

provider_port_number: '20881' # The provider is set to port 20881.
pod_cidrs:
  - 10.0.0.0/16 # The Pod CIDR in the cluster, or the cluster switch CIDR (in the case of using Terway)

You can obtain the application access address through port-forward.

kubectl port-forward -n dubbo-demo deployment/shop-frontend 8080:8080

Access localhost:8080 in a web browser and log in using any characters as the username and password.

Since this example has not fully adapted to the Triple protocol, the application will generate an error, but you can see the access logs through the istio-proxy of the frontend service:

kubectl -n dubbo-demo logs deploy/shop-frontend -c istio-proxy

...

{"authority_for":"shop-detail.dubbo-demo.svc.cluster.local:20885","bytes_received":"69","bytes_sent":"0","downstream_local_address":"10.0.239.181:20885","downstream_remote_address":"10.0.239.165:35638","duration":"31","istio_policy_status":"-","method":"POST","path":"/org.apache.dubbo.metadata.MetadataService/getMetadataInfo","protocol":"HTTP/2","request_id":"240aaced-a67d-4b10-a252-a738f67b346d","requested_server_name":"-","response_code":"200","response_flags":"URX","route_name":"default","start_time":"2024-07-15T08:21:29.620Z","trace_id":"-","upstream_cluster":"outbound|20885||shop-detail.dubbo-demo.svc.cluster.local","upstream_host":"10.0.239.169:20885","upstream_local_address":"10.0.239.165:52506","upstream_response_time":"31","upstream_service_time":"31","upstream_transport_failure_reason":"-","user_agent":"-","x_forwarded_for":"-"}

These logs match those in the best practice scenario: the service mesh correctly identifies both the request and the Kubernetes Service that is the routing destination.

If you disable the plug-in and attempt to access the application again, you will see logs like this:

kubectl -n dubbo-demo logs deploy/shop-frontend -c istio-proxy

...

{"authority_for":"10.0.239.163:20884","bytes_received":"65","bytes_sent":"0","downstream_local_address":"10.0.239.163:20884","downstream_remote_address":"10.0.239.165:56152","duration":"2","istio_policy_status":"-","method":"POST","path":"/org.apache.dubbo.samples.UserService/login","protocol":"HTTP/2","request_id":"1560b7ef-e9f6-4ddf-883b-e4f56d8753db","requested_server_name":"-","response_code":"200","response_flags":"-","route_name":"allow_any","start_time":"2024-07-15T08:30:02.068Z","trace_id":"-","upstream_cluster":"PassthroughCluster","upstream_host":"10.0.239.163:20884","upstream_local_address":"10.0.239.165:56166","upstream_response_time":"2","upstream_service_time":"2","upstream_transport_failure_reason":"-","user_agent":"-","x_forwarded_for":"-"}

Here the upstream_cluster field is PassthroughCluster. The service mesh cannot match the called service to a Kubernetes Service registered within the mesh, so the application cannot use the cloud-native capabilities of the service mesh.
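When checking many log lines, the upstream_cluster field can be inspected programmatically rather than by eye. The following is a minimal sketch, assuming the JSON access log format shown above and Istio's `outbound|<port>|<subset>|<host>` cluster naming; the function name `parse_upstream` and the abbreviated sample lines are hypothetical.

```python
import json


def parse_upstream(line):
    """Classify one access-log line by its upstream_cluster field.

    Returns ("mesh", host) when the request was routed to a known Service,
    ("passthrough", None) for PassthroughCluster, ("other", None) for any
    other cluster name, and None when the line is not a JSON object.
    """
    try:
        entry = json.loads(line)
    except json.JSONDecodeError:
        return None
    if not isinstance(entry, dict):
        return None
    cluster = entry.get("upstream_cluster", "")
    if cluster == "PassthroughCluster":
        return ("passthrough", None)
    parts = cluster.split("|")
    if len(parts) == 4 and parts[0] == "outbound":
        return ("mesh", parts[3])
    return ("other", None)


# Abbreviated sample lines; real entries carry many more fields.
samples = [
    '{"upstream_cluster":"outbound|20885||shop-detail.dubbo-demo.svc.cluster.local"}',
    '{"upstream_cluster":"PassthroughCluster"}',
]
for sample in samples:
    print(parse_upstream(sample))
```

Lines classified as passthrough indicate calls that bypassed Service identification, which is what you would expect to see with the plug-in disabled.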

Note: The above scenario should only be used as a transition solution. Ultimately, we still recommend transitioning to the best practice solution provided by the Dubbo community for integrating Dubbo applications into the service mesh to avoid any potential future incompatibilities or errors.

This article discusses how Dubbo applications can integrate into the service mesh and gain various cloud-native capabilities, proposing both a best practice scenario and a transition scenario. We recommend the best practice scenario provided by the Dubbo community as the first choice for integrating into the service mesh. When that is not immediately feasible, you can use the transition solution as an intermediate step toward the best practice scenario.
