By Xiheng, Alibaba Cloud Technical Expert

On April 16, we livestreamed the second SIG Cloud-Provider-Alibaba seminar. This livestream demonstrated how Alibaba Cloud designs and builds high-performance pod networks in cloud-native environments. This article recaps the content in the livestream, provides download links, and answers questions asked during the livestream. I hope it will be useful to you.

First, I will introduce Cloud Provider SIG. This is a Kubernetes cloud provider group that is dedicated to making the Kubernetes ecosystem neutral for all cloud providers. It coordinates different cloud providers and tries to use a unified standard to meet developers' requirements. As the first cloud provider in China to join Cloud Provider SIG, Alibaba Cloud also promotes Kubernetes standardization. We coordinate on technical matters with other cloud providers, such as AWS, Google, and Azure, to optimize the connections between clouds and Kubernetes and to unify the modular, standard protocols of different components. We welcome you to join us in our efforts.

With the increasing popularity of cloud-native computing, more and more application loads are deployed on Kubernetes. Kubernetes has become the cornerstone of cloud-native computing and a new interaction interface between users and cloud computing. As one of the basic dependencies of applications, the network is a necessary basic component in cloud-native applications and also the biggest concern of many developers as they transition to cloud-native. Users must consider many network issues. For example, the pod network is not on the same plane as the original machine network, the overlay pod network causes packet encapsulation performance loss, and Kubernetes load balancing and service discovery are not sufficiently scalable. So, how do we build a cluster pod network?

This article will describe how Alibaba Cloud designs and builds high-performance, cloud-native pod networks in cloud-native environments.

The article is divided into three parts:

First, this article introduces the basic concepts of the Kubernetes pod network:

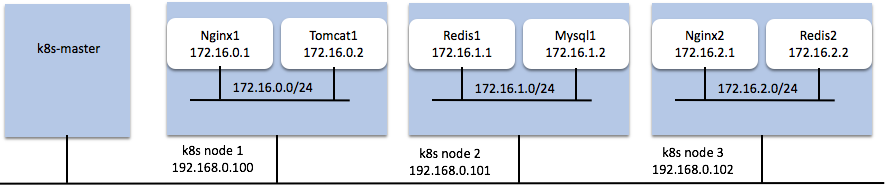

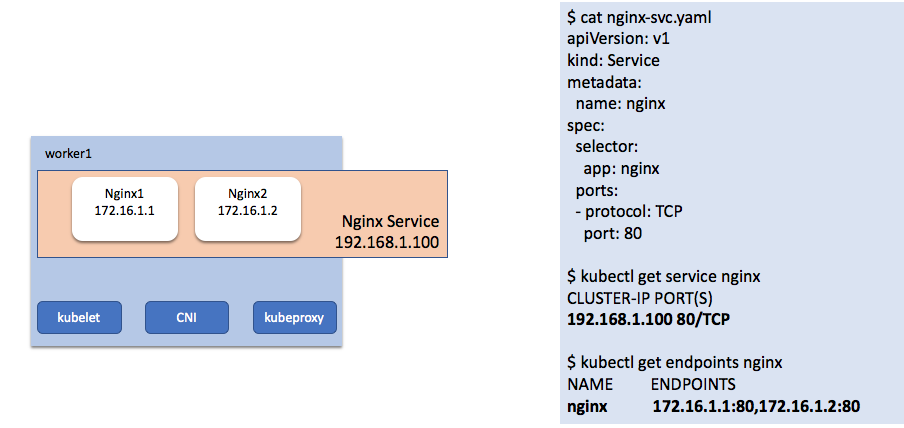

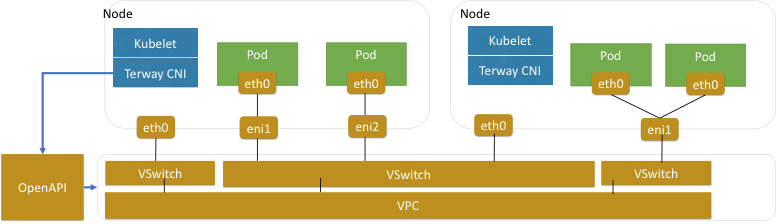

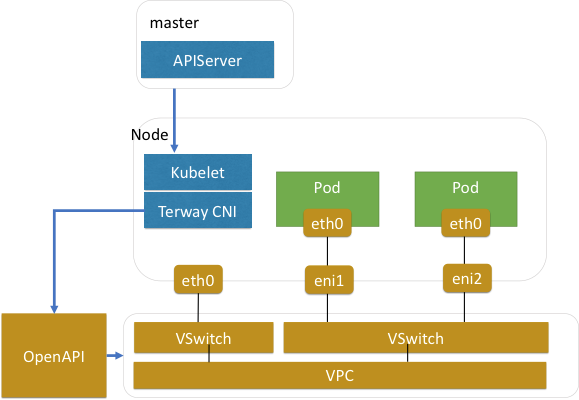

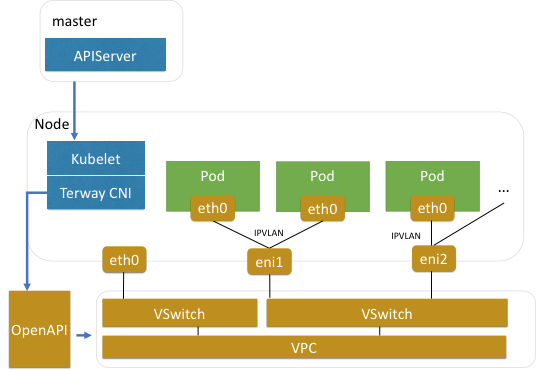

The following figure shows the Kubernetes pod network.

Pod network connectivity (CNI) involves the following factors:

To achieve these network capabilities, address assignment and network connectivity are required. These capabilities are implemented with CNI network plugins.

Container Network Interface (CNI) defines the APIs that network plugins implement so that Kubernetes can configure pod networks. Common CNI plugins include Terway, Flannel, and Calico.
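The Python sketch below makes the plugin contract concrete. It models what a CNI plugin does when the container runtime invokes it: the plugin reads the network configuration as JSON from stdin and returns a result that lists the assigned IP addresses. The real spec also passes `CNI_COMMAND`, `CNI_CONTAINERID`, and `CNI_IFNAME` as environment variables; here they are plain arguments. The `SimpleIPAM` class, the `handle` function, and the address range are hypothetical. This is not Terway, Flannel, or Calico code, and it skips the actual interface setup in the pod's network namespace.

```python
import json


class SimpleIPAM:
    """Hypothetical in-memory IPAM: hands out host numbers from a fixed range."""

    def __init__(self, prefix: str, first: int, last: int, gateway: str):
        self.prefix = prefix                    # e.g. "10.0.0."
        self.free = list(range(first, last + 1))
        self.gateway = gateway
        self.allocated = {}                     # container ID -> host number

    def address(self, host: int) -> str:
        return f"{self.prefix}{host}/24"

    def allocate(self, container_id: str) -> str:
        if container_id not in self.allocated:  # repeated ADD returns the same address
            if not self.free:
                raise RuntimeError("address pool exhausted")
            self.allocated[container_id] = self.free.pop(0)
        return self.address(self.allocated[container_id])

    def release(self, container_id: str) -> None:
        host = self.allocated.pop(container_id, None)
        if host is not None:                    # DEL tolerates unknown containers
            self.free.append(host)


def handle(command: str, container_id: str, ifname: str, stdin_conf: str, ipam: SimpleIPAM):
    """Dispatch one CNI-style call; stdin_conf is the network configuration JSON."""
    conf = json.loads(stdin_conf)
    if command == "ADD":
        address = ipam.allocate(container_id)
        # A real plugin would create `ifname` inside the pod's network namespace here.
        return {
            "cniVersion": conf["cniVersion"],
            "interfaces": [{"name": ifname}],
            "ips": [{"address": address, "gateway": ipam.gateway, "interface": 0}],
        }
    if command == "DEL":
        ipam.release(container_id)
        return None
    raise ValueError(f"unsupported CNI command: {command}")


conf = json.dumps({"cniVersion": "1.0.0", "name": "pod-net", "type": "example-plugin"})
ipam = SimpleIPAM("10.0.0.", 10, 12, "10.0.0.1")
print(handle("ADD", "container-a", "eth0", conf, ipam)["ips"][0]["address"])  # 10.0.0.10/24
```

Keeping address management in a separate object mirrors how CNI lets a main plugin delegate address assignment to an IPAM plugin.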

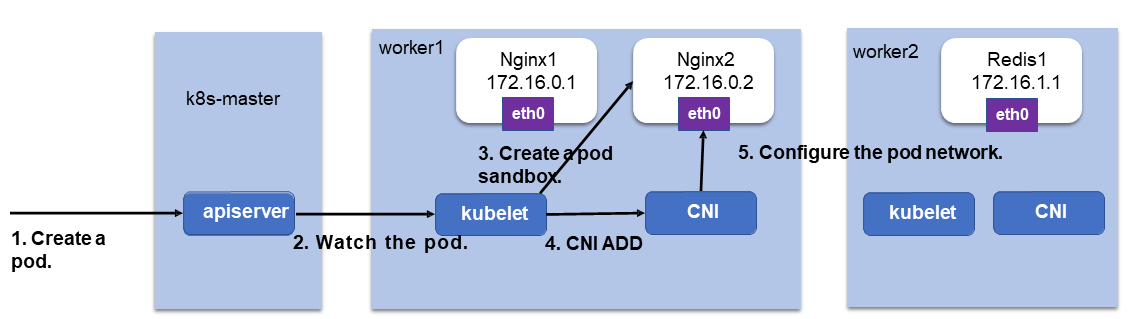

When we create a pod:

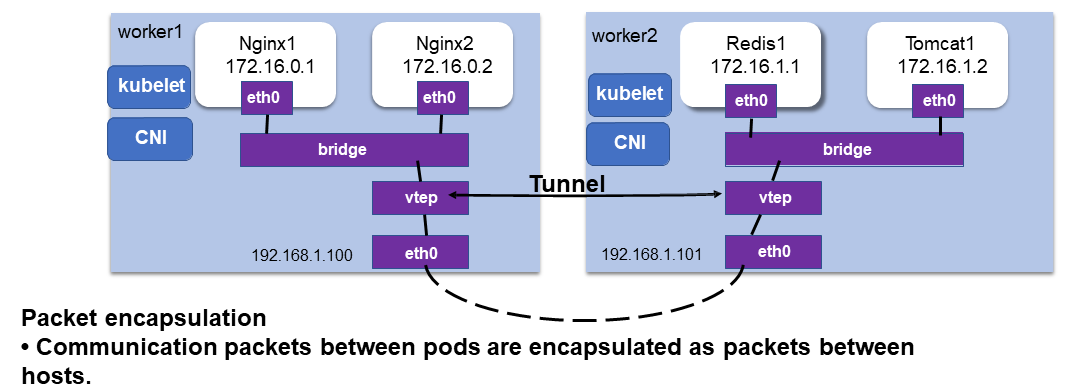

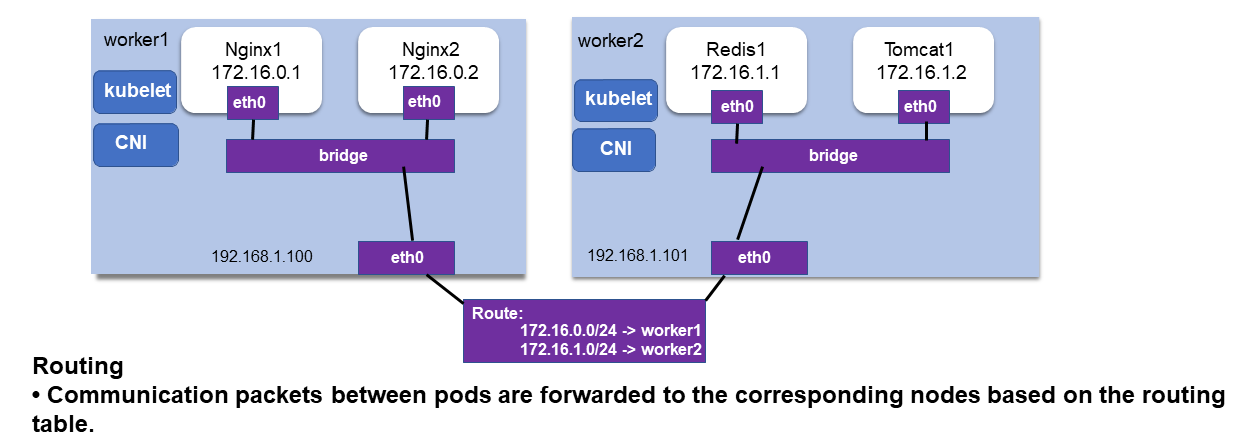

Typically, pods are not in the same plane as the host network, so how can the pods communicate with each other? Generally, the following two solutions are used to connect pods:

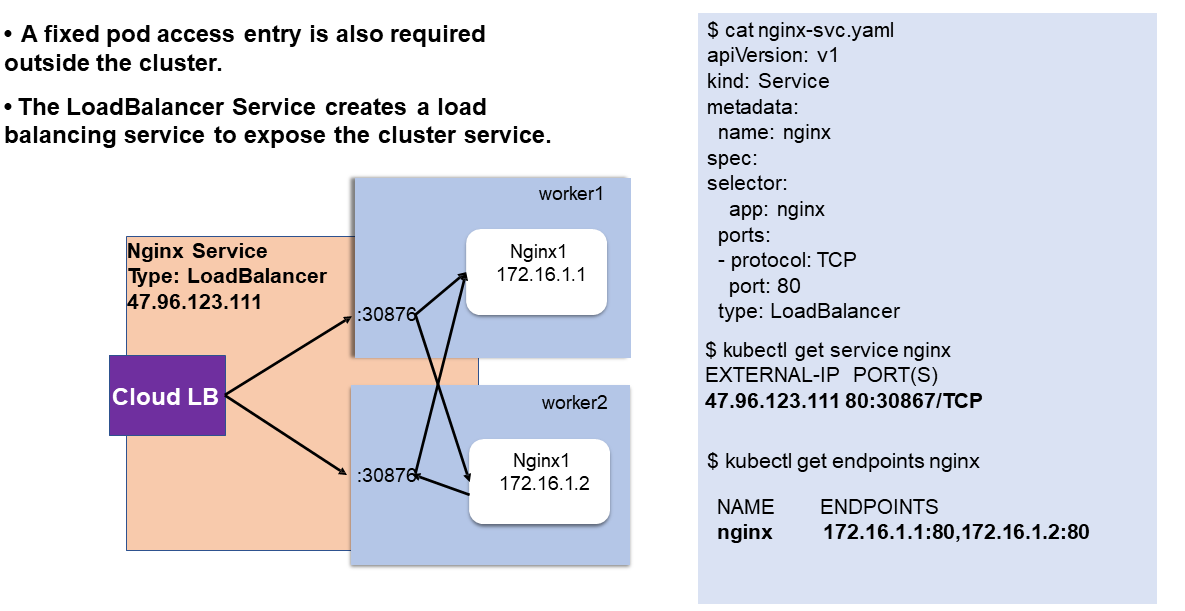
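One widely used way to connect pods across hosts is an overlay tunnel such as VXLAN, which Flannel's vxlan backend uses, for example. The Python sketch below assumes an IPv4 underlay and the header layout in RFC 7348. It builds the 8-byte VXLAN header and computes the per-packet overhead behind part of the encapsulation cost mentioned earlier: 50 extra bytes on the wire, which leaves a 1450-byte pod MTU on a 1500-byte underlay. Function names are illustrative.

```python
import struct

# Per-packet header sizes for VXLAN over an IPv4 underlay (RFC 7348), in bytes.
OUTER_ETHERNET = 14
OUTER_IPV4 = 20
OUTER_UDP = 8
VXLAN = 8
INNER_ETHERNET = 14  # the pod's own Ethernet header travels inside the tunnel


def vxlan_header(vni: int) -> bytes:
    """8-byte VXLAN header: flags byte with the I bit (0x08) set, 24 reserved bits,
    the 24-bit VNI, and 8 more reserved bits."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!B3xI", 0x08, vni << 8)


def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    # Only the VXLAN header is prepended here; the host stack adds outer UDP/IP/Ethernet.
    return vxlan_header(vni) + inner_frame


def wire_overhead() -> int:
    """Extra bytes on the wire for every encapsulated pod frame."""
    return OUTER_ETHERNET + OUTER_IPV4 + OUTER_UDP + VXLAN


def pod_mtu(underlay_mtu: int = 1500) -> int:
    """Largest pod IP packet that still fits in one underlay IP packet."""
    return underlay_mtu - OUTER_IPV4 - OUTER_UDP - VXLAN - INNER_ETHERNET


print(wire_overhead(), pod_mtu())  # 50 1450
```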

We need Kubernetes Service for the following reasons:

A Service uses a labelSelector to select a group of pods. It then load-balances traffic sent to the Service IP address and port across the IP addresses and ports of the selected pods. A LoadBalancer Service also exposes the Service outside the cluster through a cloud load balancer.
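The selection step can be sketched in Python as follows. The sketch assumes equality-based selectors, which is what a Service `selector` supports. As Kubernetes does by default, it excludes pods that are not ready from the endpoints. The `Pod` class and the `endpoints` function are hypothetical illustrations, not Kubernetes client APIs.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict = field(default_factory=dict)
    ready: bool = True


def matches(selector: dict, labels: dict) -> bool:
    """Equality-based selection: every selector key must be present with the same value."""
    return all(labels.get(key) == value for key, value in selector.items())


def endpoints(selector: dict, pods: list, target_port: int) -> list:
    """Backends ("ip:port") that a Service with this selector would balance across."""
    if not selector:
        # A Service without a selector gets no automatically managed endpoints.
        return []
    return [f"{p.ip}:{target_port}" for p in pods if p.ready and matches(selector, p.labels)]


pods = [
    Pod("web-1", "10.0.0.5", {"app": "web"}),
    Pod("web-2", "10.0.0.6", {"app": "web", "tier": "frontend"}),
    Pod("db-1", "10.0.0.7", {"app": "db"}),
    Pod("web-3", "10.0.0.8", {"app": "web"}, ready=False),
]
print(endpoints({"app": "web"}, pods, 8080))  # ['10.0.0.5:8080', '10.0.0.6:8080']
```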

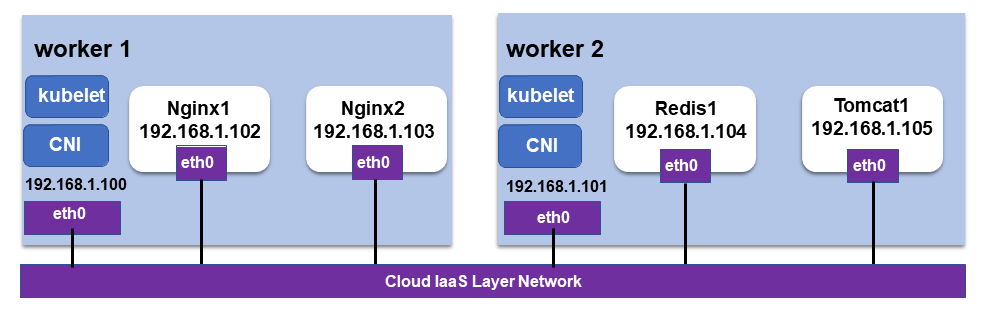

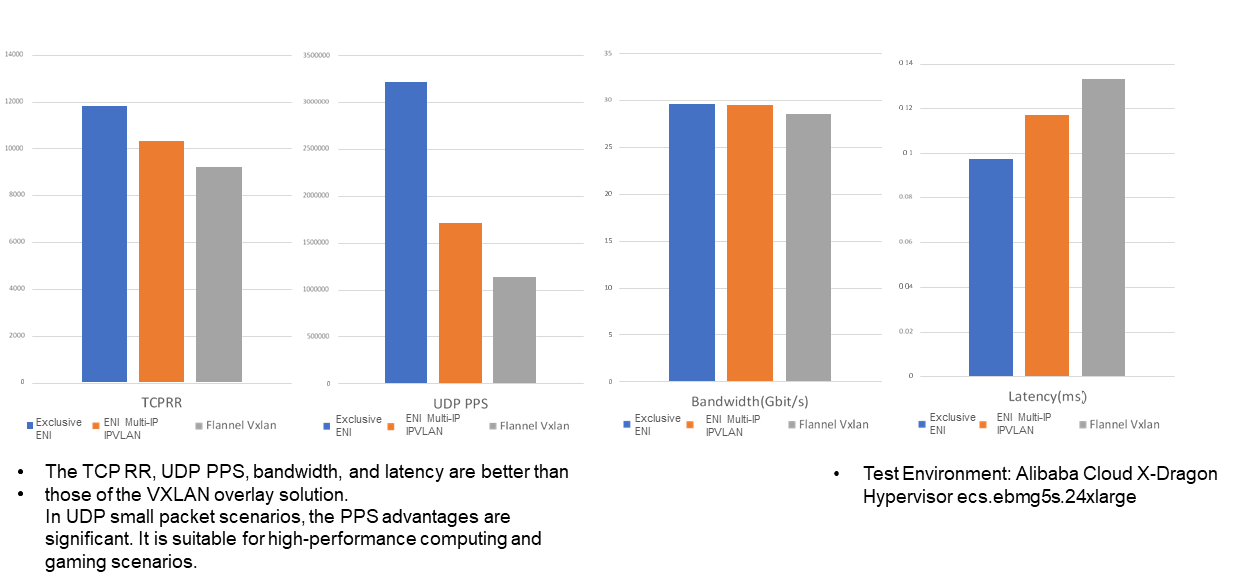

The cloud IaaS layer network is already virtualized. If network virtualization is further performed in pods, the performance loss is significant.

The cloud-native container network uses native cloud resources to configure the pod network.

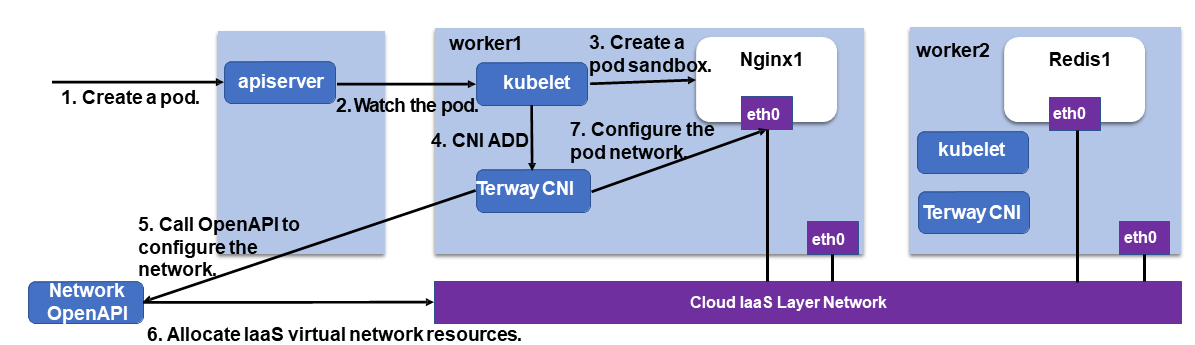

CNI calls the cloud network open APIs to allocate network resources.

Pod networks become first-class citizens in a VPC. Therefore, using cloud-native pod networks has the following advantages:

Pods can serve directly as LoadBalancer backends without requiring port forwarding on nodes.

IaaS layer network resources (using Alibaba Cloud as an example):

ENIs or ENI secondary IP addresses are allocated to pods to implement the cloud-native pod network.

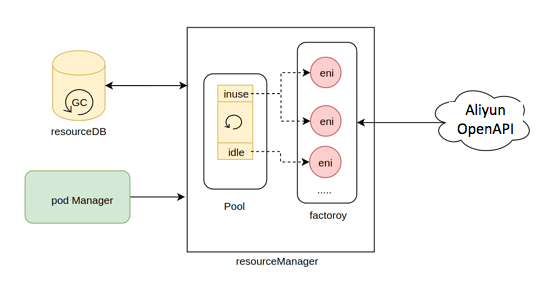

How to close the gap between cloud resource allocation and rapid pod scaling:

Terway uses an embedded resource pool to cache resources and accelerate pod startup.

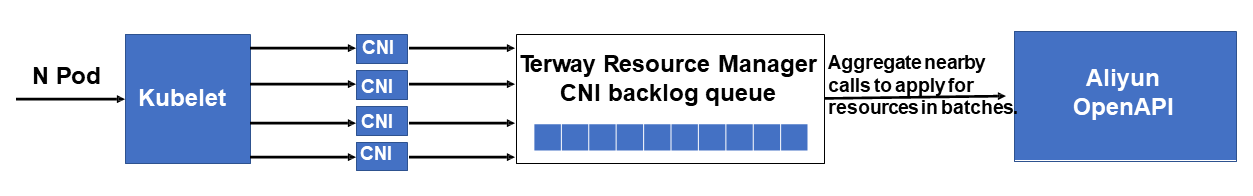

Resource requests from concurrently created pods can be configured, dispatched, and applied for in batches, as shown in the figure.
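A warm pool with low and high watermarks is one way to hide cloud API latency from pod startup. The Python sketch below is a hypothetical illustration, not Terway's implementation. `allocate_batch` stands in for a cloud API call that assigns several secondary IP addresses in one request, and `min_idle`, `max_idle`, and `batch` are made-up parameter names. For simplicity the pool refills inline; an agent would normally refill in the background.

```python
from collections import deque


class IPPool:
    """Hypothetical warm pool of secondary IP addresses (not Terway's code)."""

    def __init__(self, allocate_batch, min_idle: int, max_idle: int, batch: int):
        assert 0 <= min_idle <= max_idle and batch >= 1
        self.allocate_batch = allocate_batch   # stand-in for a batched cloud API call
        self.min_idle, self.max_idle, self.batch = min_idle, max_idle, batch
        self.idle = deque()
        self.api_calls = 0
        self._refill()

    def _call_api(self, count: int) -> None:
        self.idle.extend(self.allocate_batch(count))
        self.api_calls += 1

    def _refill(self) -> None:
        # Top up in batches until the low watermark is reached, never past the high one.
        while len(self.idle) < self.min_idle:
            self._call_api(min(self.batch, self.max_idle - len(self.idle)))

    def get(self) -> str:
        if not self.idle:                      # pool drained: pay for a synchronous call
            self._call_api(self.batch)
        ip = self.idle.popleft()
        self._refill()                         # a real agent would refill asynchronously
        return ip

    def put(self, ip: str) -> None:
        # Keep released addresses for fast reuse, up to the high watermark.
        if len(self.idle) < self.max_idle:
            self.idle.append(ip)
        # Otherwise a real agent would return the address through the cloud API.


counter = iter(range(10, 255))


def fake_api(count):
    return [f"10.0.1.{next(counter)}" for _ in range(count)]


pool = IPPool(fake_api, min_idle=2, max_idle=5, batch=3)
print(pool.get(), pool.api_calls)  # 10.0.1.10 1
```

The first pod is served from the pool without a new API call; the cost of talking to the cloud API is paid ahead of time, in batches.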

We must also consider many other resource management policies. These include how to select vSwitches for pods so that enough IP addresses are available, and how to balance the number of queues and interrupts across the ENIs on each node to minimize contention.
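A simple vSwitch selection policy is to pick, among the vSwitches in the node's zone, the one with the most available IP addresses. The sketch below assumes that an ENI must use a vSwitch in the same zone as its instance. The `VSwitch` type, the `pick_vswitch` function, and the IDs are hypothetical. Queue and interrupt balancing are not modeled.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VSwitch:
    id: str
    zone: str
    available_ips: int


def pick_vswitch(vswitches: list, zone: str, min_free: int = 1) -> Optional[VSwitch]:
    """Among vSwitches in the node's zone, pick the one with the most free IPs.
    Returns None when no vSwitch in that zone has at least `min_free` addresses."""
    candidates = [v for v in vswitches if v.zone == zone and v.available_ips >= min_free]
    return max(candidates, key=lambda v: v.available_ips, default=None)


vswitches = [
    VSwitch("vsw-a", "zone-h", 12),
    VSwitch("vsw-b", "zone-h", 200),
    VSwitch("vsw-c", "zone-i", 500),
]
print(pick_vswitch(vswitches, "zone-h").id)  # vsw-b
```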

For more information, check the Terway documentation and code.

This mode is implemented in CNI:

This method has the following features and advantages:

This mode is implemented in CNI:

This method has the following features and advantages:

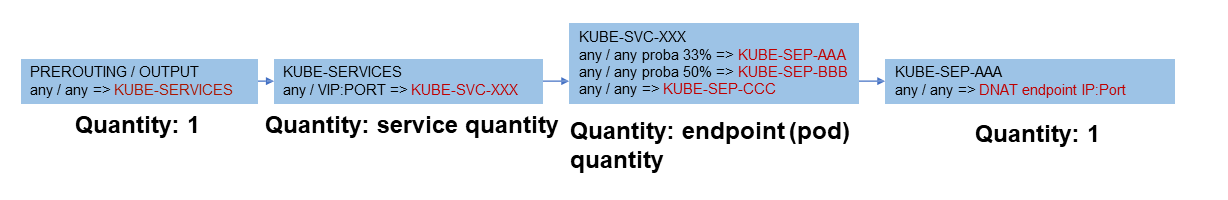

By default, Kubernetes Services are implemented by kube-proxy, which uses iptables to configure Service IP addresses and load balancing, as shown in the following figure.

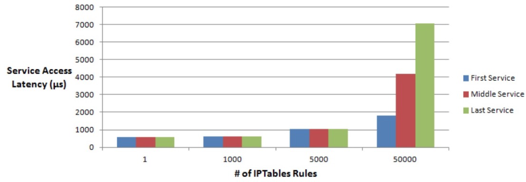
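In iptables mode, the chain that kube-proxy generates picks a backend through a sequence of random-probability rules. With n endpoints, rule i (counting from 0) matches with probability 1/(n - i), and the last rule matches unconditionally, so every endpoint ends up with the same 1/n share. The Python sketch below prints rules in that style and checks the shares with exact fractions. Chain names are placeholders (real kube-proxy uses hashed chain names), and the probability formatting is approximate.

```python
from fractions import Fraction


def svc_chain_rules(svc_chain: str, endpoint_chains: list) -> list:
    """iptables-save style rules for one Service, in the style kube-proxy uses.
    Rule i (0-based) matches with probability 1/(n - i); the last rule always matches."""
    n = len(endpoint_chains)
    rules = []
    for i, sep_chain in enumerate(endpoint_chains):
        if i < n - 1:
            p = 1 / (n - i)
            rules.append(
                f"-A {svc_chain} -m statistic --mode random "
                f"--probability {p:.11f} -j {sep_chain}"
            )
        else:
            rules.append(f"-A {svc_chain} -j {sep_chain}")
    return rules


def effective_shares(n: int) -> list:
    """Exact probability that each endpoint is chosen when rules are tried in order."""
    not_yet_matched = Fraction(1)
    shares = []
    for i in range(n):
        p = Fraction(1, n - i)
        shares.append(not_yet_matched * p)
        not_yet_matched *= 1 - p
    return shares


for rule in svc_chain_rules("KUBE-SVC-EXAMPLE", ["KUBE-SEP-A", "KUBE-SEP-B", "KUBE-SEP-C"]):
    print(rule)
print(effective_shares(3))  # [Fraction(1, 3), Fraction(1, 3), Fraction(1, 3)]
```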

NetworkPolicy Performance and Scalability Issues

Kubernetes NetworkPolicy controls whether pods are allowed to communicate with each other. Currently, mainstream NetworkPolicy components are implemented based on iptables, so they share the same iptables scalability issues.
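The scalability problem arises because iptables evaluates rules in order, so the cost of finding a match grows with the number of rules. A hash map lookup does not have this cost; eBPF programs use that kind of structure through BPF maps. This toy Python comparison assumes that each match key (destination IP and port) is unique. It counts comparisons rather than measuring real packet latency.

```python
def linear_lookup(rules: list, key):
    """iptables-style: try rules in order. Returns (target, comparisons made)."""
    for comparisons, (match, target) in enumerate(rules, start=1):
        if match == key:
            return target, comparisons
    return None, len(rules)


def build_map(rules: list) -> dict:
    # eBPF programs keep this kind of state in BPF hash maps; a dict models that here.
    return dict(rules)


# 5,000 hypothetical Services, each keyed by (cluster IP, port).
rules = [((f"192.168.{i // 256}.{i % 256}", 80), f"svc-{i}") for i in range(5000)]
last_key = rules[-1][0]
print(linear_lookup(rules, last_key))  # ('svc-4999', 5000)
print(build_map(rules)[last_key])      # svc-4999
```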

NetworkPolicy Scalability

eBPF is described as:

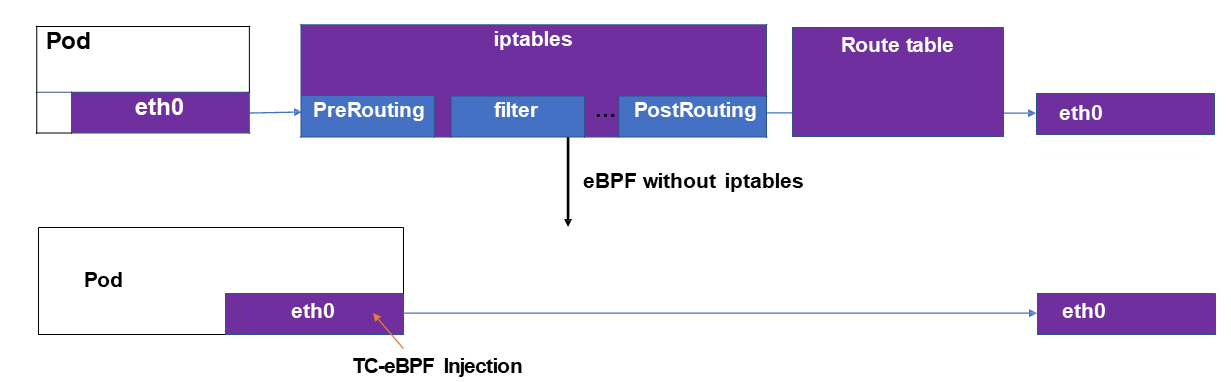

tc-ebpf.

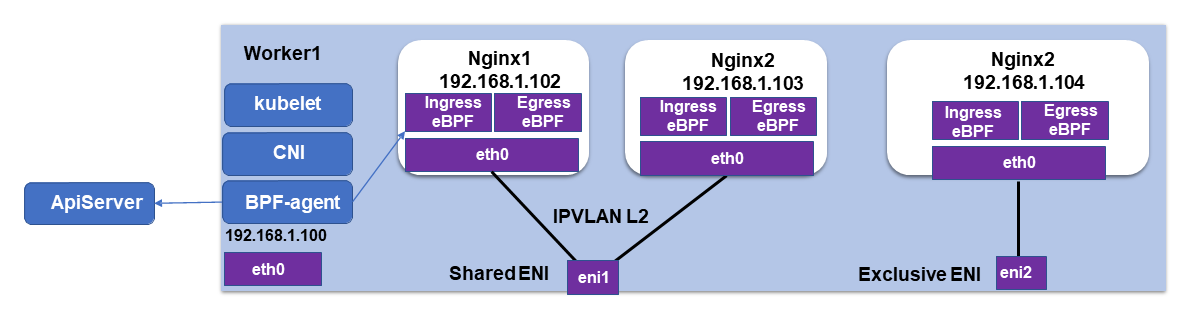

As shown in the preceding figure, you can use the tc tool to attach an eBPF program to a pod's ENI. Service and NetworkPolicy processing is then handled directly on the ENI before requests are sent out through it. This greatly reduces network complexity.
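The Service part of such a program can be modeled as a map lookup followed by a destination rewrite. The following Python sketch is a hypothetical model, not Cilium's or Terway's code. `SERVICE_MAP` plays the role of an eBPF map from Service address to backends. `CONNTRACK` keeps an established flow pinned to the backend chosen for its first packet. Reverse translation of reply packets is not modeled.

```python
import random

# Hypothetical Service map: (cluster IP, port, protocol) -> backend (pod IP, port) list.
SERVICE_MAP = {
    ("192.168.0.10", 80, "TCP"): [("10.0.1.5", 8080), ("10.0.1.6", 8080)],
}
# Flow table: 5-tuple -> backend chosen for that flow's first packet.
CONNTRACK = {}


def translate(src_ip, src_port, dst_ip, dst_port, proto, rng=random):
    """Return the (IP, port) the packet should be sent to after Service translation."""
    flow = (src_ip, src_port, dst_ip, dst_port, proto)
    if flow in CONNTRACK:                   # established flow: keep the same backend
        return CONNTRACK[flow]
    backends = SERVICE_MAP.get((dst_ip, dst_port, proto))
    if not backends:                        # not a Service address: leave it unchanged
        return dst_ip, dst_port
    backend = rng.choice(backends)          # new flow: pick a backend (DNAT target)
    CONNTRACK[flow] = backend
    return backend


print(translate("10.0.1.9", 41000, "192.168.0.10", 80, "TCP"))
```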

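The eBPF program on the pod ENI implements NetworkPolicy and is configured with the ingress and egress rules of the pod ENI. When a request passes through the ENI, the program checks it against the NetworkPolicy rules and determines whether to transmit it.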
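The policy decision can be sketched with simplified NetworkPolicy ingress semantics. If no policy selects the destination pod, traffic is allowed. Once any policy selects it, only traffic that matches one of that policy's rules is allowed. The Python types below are hypothetical. They ignore namespaces, IP blocks, egress rules, and `policyTypes`.

```python
from dataclasses import dataclass, field


@dataclass
class IngressRule:
    from_labels: dict                                  # source pods allowed by this rule
    ports: set = field(default_factory=set)            # empty set means all ports


@dataclass
class Policy:
    pod_selector: dict                                 # {} selects every pod
    ingress: list = field(default_factory=list)        # no rules means deny all ingress


def selects(selector: dict, labels: dict) -> bool:
    return all(labels.get(k) == v for k, v in selector.items())


def allow_ingress(policies: list, dst_labels: dict, src_labels: dict, port: int) -> bool:
    selecting = [p for p in policies if selects(p.pod_selector, dst_labels)]
    if not selecting:                                  # pod not isolated: allow
        return True
    return any(
        selects(rule.from_labels, src_labels) and (not rule.ports or port in rule.ports)
        for policy in selecting
        for rule in policy.ingress
    )


db_policy = Policy({"app": "db"}, [IngressRule({"app": "web"}, {5432})])
print(allow_ingress([db_policy], {"app": "db"}, {"app": "web"}, 5432))    # True
print(allow_ingress([db_policy], {"app": "db"}, {"app": "batch"}, 5432))  # False
```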

Note: We use Cilium as the BPF agent on nodes to configure the BPF rules for pod ENIs. For more information about Terway-related adaptation, please visit this website.

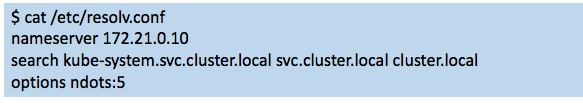

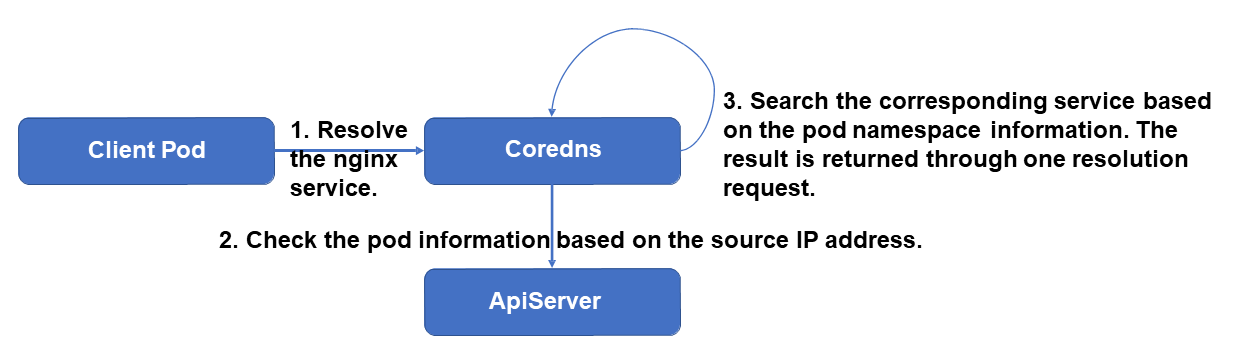

A Kubernetes pod must perform many lookups when it resolves a domain name. As shown in the preceding figure, when the pod requests aliyun.com, it queries the following names in sequence:

aliyun.com.kube-system.svc.cluster.local -> NXDOMAIN

aliyun.com.svc.cluster.local -> NXDOMAIN

aliyun.com.cluster.local -> NXDOMAIN

aliyun.com -> 1.1.1.1

CoreDNS is deployed centrally rather than on every node. When a pod accesses CoreDNS, the resolution path is long and the queries use UDP. As a result, the failure rate is high.
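This query sequence follows from the pod's resolver settings. By default, Kubernetes sets `options ndots:5` in a pod's `/etc/resolv.conf`, so the resolver first tries any name with fewer than five dots with each search domain appended. The Python sketch assumes a pod in the `kube-system` namespace with the usual search list and glibc-style ordering. In practice, each candidate is often queried for both A and AAAA records, which doubles the traffic.

```python
def candidate_names(name: str, search: list, ndots: int = 5) -> list:
    """Order in which a glibc-style stub resolver tries names for a query."""
    if name.endswith("."):                        # fully qualified: no search expansion
        return [name[:-1]]
    expanded = [f"{name}.{domain}" for domain in search]
    if name.count(".") < ndots:                   # "short" name: search domains first
        return expanded + [name]
    return [name] + expanded                      # enough dots: try the name as-is first


# Typical search list for a pod in the kube-system namespace.
SEARCH = ["kube-system.svc.cluster.local", "svc.cluster.local", "cluster.local"]

for candidate in candidate_names("aliyun.com", SEARCH):
    print(candidate)
```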

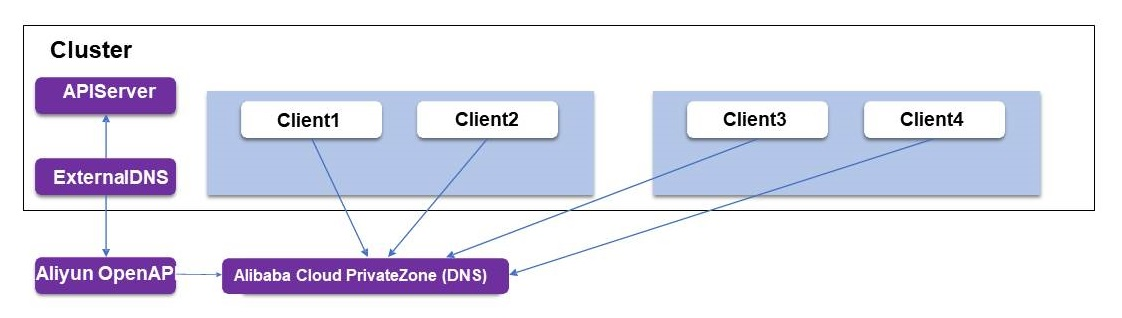

Change a client-side search to a server-side search.

When the pod sends a request to CoreDNS to resolve the domain name:

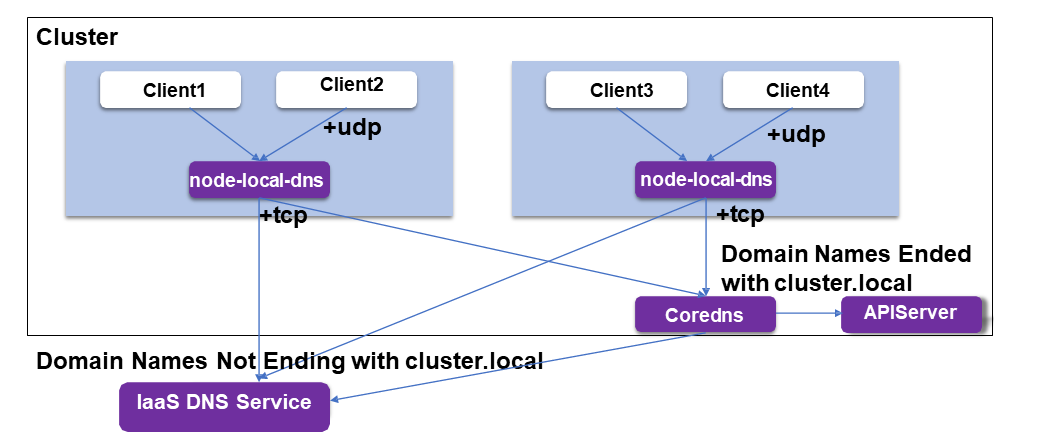
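The server-side search can be sketched as follows, loosely modeled on the idea behind the CoreDNS `autopath` plugin. CoreDNS maps the source IP to the pod's namespace using its list/watch cache, recognizes the first search-expanded query, and walks the rest of the search path itself. The real plugin answers with a CNAME to the name it found; this hypothetical Python model just returns the resolved name and address. `POD_NAMESPACE_BY_IP`, `RECORDS`, and `autopath` are illustrative stand-ins.

```python
CLUSTER_DOMAIN = "cluster.local"

# Hypothetical caches that CoreDNS would keep current through list/watch.
POD_NAMESPACE_BY_IP = {"10.0.1.5": "kube-system"}
RECORDS = {
    "kubernetes.default.svc.cluster.local": "192.168.0.1",
    "aliyun.com": "1.1.1.1",                       # stands in for an upstream answer
}


def search_path(namespace: str) -> list:
    return [f"{namespace}.svc.{CLUSTER_DOMAIN}", f"svc.{CLUSTER_DOMAIN}", CLUSTER_DOMAIN]


def autopath(src_ip: str, qname: str):
    """Answer the pod's first search-expanded query by walking the rest of the
    search path on the server. Returns (name that resolved, address or None)."""
    namespace = POD_NAMESPACE_BY_IP.get(src_ip)
    if namespace is None:
        return qname, RECORDS.get(qname)           # unknown client: plain lookup
    first_suffix = "." + search_path(namespace)[0]
    if not qname.endswith(first_suffix):
        return qname, RECORDS.get(qname)           # not a search-expanded query
    original = qname[: -len(first_suffix)]
    for suffix in search_path(namespace):
        candidate = f"{original}.{suffix}"
        if candidate in RECORDS:
            return candidate, RECORDS[candidate]
    return original, RECORDS.get(original)         # finally try the bare name


print(autopath("10.0.1.5", "aliyun.com.kube-system.svc.cluster.local"))  # ('aliyun.com', '1.1.1.1')
```

One client query now replaces the four round trips shown earlier, at the cost of CoreDNS keeping a pod IP to namespace cache.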

node-local-dns on Each Node

node-local-dns intercepts DNS queries from pods.

node-local-dns distributes external domain name traffic so that external domain name requests are no longer sent to the central CoreDNS.
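The following sketches a node-level cache that splits traffic by domain. The class and its parameters are hypothetical, not the node-local-dns implementation. Names under `cluster.local` are forwarded to the central CoreDNS, other names go directly to an upstream resolver, and answers are cached for a fixed TTL. A real cache would honor each record's TTL.

```python
import time


class NodeLocalDNS:
    """Hypothetical per-node DNS cache (not the node-local-dns implementation)."""

    def __init__(self, coredns, upstream, ttl: float = 30.0, clock=time.monotonic):
        self.coredns = coredns          # callable: cluster name -> address
        self.upstream = upstream        # callable: external name -> address
        self.ttl = ttl
        self.clock = clock
        self.cache = {}                 # name -> (address, expiry time)

    def resolve(self, name: str) -> str:
        now = self.clock()
        hit = self.cache.get(name)
        if hit is not None and hit[1] > now:
            return hit[0]               # served locally, no network round trip
        # Cluster names go to the central CoreDNS; everything else bypasses it.
        backend = self.coredns if name.endswith(".cluster.local") else self.upstream
        address = backend(name)
        self.cache[name] = (address, now + self.ttl)
        return address
```

Injecting `clock` makes expiry testable without sleeping.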

This is how Alibaba Cloud designs and builds high-performance, cloud-native pod networks. With cloud-native development, more types of application loads will run on Kubernetes and more cloud services will be integrated into pod scenarios. We believe that more functions and application scenarios will be incubated in high-performance, cloud-native pod networks in the future.

Those who are interested in this topic are welcome to join us.

Q1: Is the veth pair used to connect the pod network namespace and the host when a pod network namespace is created?

A1:

Q2: How are the security audits performed when a pod's IP address is not fixed?

A2:

In the NetworkPolicy implementation, pods are identified by labels. When pods are rebuilt, the IP addresses of the pods that carry those labels are updated dynamically. When a StatefulSet application is updated on a node, Terway reserves the pod IP address for a period so that it can be reused quickly when the pod restarts. The IP address remains unchanged during the update process.

Q3: Does IPVLAN have high requirements for the kernel?

A3: Yes. On Alibaba Cloud, we can use aliyunlinux2 with kernel 4.19. For earlier kernel versions, Terway also supports the veth pair + policy routing method to share secondary IP addresses on the ENI. However, the performance may be lower.

Q4: Will the pod startup speed be affected if eBPF is started in a pod? How long does it take to deploy eBPF in a pod?

A4: The eBPF program code is not too large. Currently, it increases the overall deployment time by several hundred milliseconds.

Q5: Is IPv6 supported? What are the implementation problems? Are there kernel or kube-proxy code issues?

A5: Currently, IPv6 addresses can be exposed through LoadBalancer. However, LoadBalancer translates the IPv6 addresses to IPv4 addresses. Pods do not support IPv6 addresses yet. kube-proxy supports IPv6 starting from Kubernetes 1.16. We are actively tracking this issue and plan to implement native pod IPv4/IPv6 dual stack together with Alibaba Cloud IaaS this year.

Q6: During each CoreDNS resolution request, the source IP address is used to look up information about the requesting pod. Is the Kubernetes API called for this lookup? Will this increase pressure on the API server?

A6: No. As the preceding structure shows, CoreDNS AutoPath uses the list/watch mechanism to receive pod and Service changes from the API server and then updates its local cache.

Q7: Are Kubernetes Service requests sent to a group of pods in polling mode? Are the probabilities of requests to each pod the same?

A7: Yes. The probabilities are the same. It is similar to the round robin algorithm used in load balancing.

Q8: IPVLAN and eBPF seem to be supported only by later kernel versions. Do they have requirements for the host kernel?

A8: Yes. We can use aliyunlinux2 kernel 4.19 on Alibaba Cloud. In earlier kernel versions, Terway also supports the veth pair + policy routing method to share secondary IP addresses on the ENI. However, the performance may be low.

Q9: How does Cilium manage or assign IP addresses? Do other CNI plugins manage the IP address pool?

A9: Cilium has two ways to assign IP addresses. host-local: each node is assigned an address segment, and addresses are allocated from it sequentially. CRD-backed: an IPAM plugin assigns IP addresses. In Terway, Cilium only handles network policies, Service hijacking, and load balancing. It does not assign or configure IP addresses.

Q10: Does Cilium inject BPF into the veth of the host instead of veth of the pod? Have you made any changes?

A10: Yes. Cilium attaches its programs to the pod's peer veth. Our tests showed that IPVLAN performs better than veth, so Terway uses IPVLAN, which needs no peer veth, for a high-performance network. For more information about our modifications for adaptation, please visit this website. In addition, Terway uses Cilium only for NetworkPolicy, Service hijacking, and load balancing.

Q11: How does a pod access the cluster IP address of a service after the Terway plugin is used?

A11: The eBPF program attached to the pod ENI load-balances requests sent to the Service IP address across the backend pods.

Q12: Can you talk about Alibaba Cloud's plans for service mesh?

A12: Alibaba Cloud provides the Alibaba Cloud Service Mesh (ASM) product. Subsequent developments will focus on ease-of-use, performance, and global cross-region integrated cloud, edge, and terminal connections.

Q13: Will the ARP cache be affected if a pod IP address is reused after the node's network is connected? Will IP address conflicts exist if a node-level fault occurs?

A13: First, the cloud network does not have the ARP problem, because the IaaS layer generally uses layer-3 forwarding. The ARP problem does not exist even if IPVLAN is used locally. If Macvlan is used, the ARP cache is affected; in that case, macnat can generally be used (it can be implemented with either ebtables or eBPF). Whether an IP conflict occurs depends on the IP management policy. In the current Terway solution, IPAM directly calls IaaS IPAM, which does not have this problem. If you build the pod network offline yourself, consider a DHCP strategy or static IP address assignment to avoid this problem.

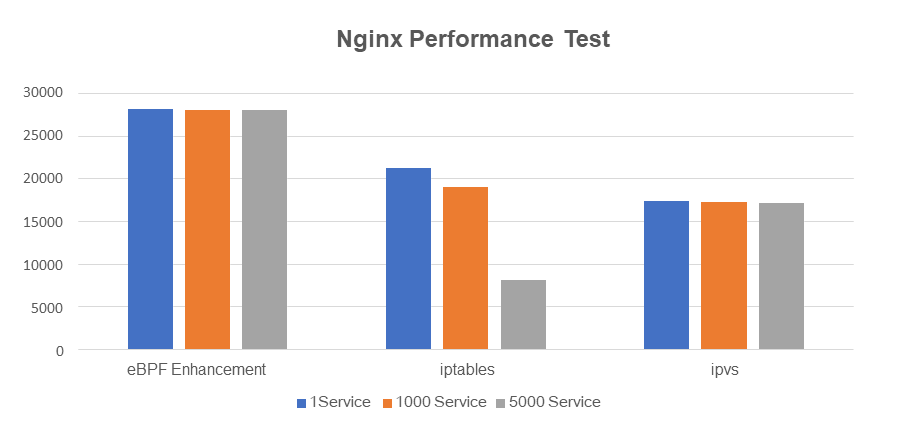

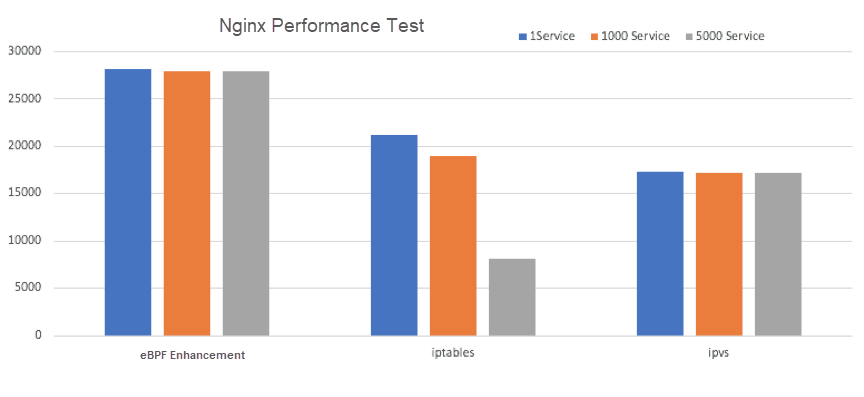

Q14: After the link is simplified using eBPF, performance improves significantly: by 31% compared to iptables and by 62% compared to IPVS. Why is the improvement relative to IPVS larger? Isn't the optimization mainly aimed at the linear matching and rule updates of iptables?

A14: This comparison is based on a single service and measures the impact of link simplification. In iptables mode, DNAT is performed in the NAT table, and only the forward path is involved. IPVS mode also involves the input and output paths. Therefore, iptables may perform better with a single service. However, the linear matching of iptables causes significant performance deterioration when many services are involved. For example, IPVS performs better with 5,000 services.

Q15: If I do not use this network solution and think that large-scale service use will affect performance, are there any other good solutions?

A15: In kube-proxy IPVS mode, the performance loss is minimal in large-scale service scenarios. However, IPVS introduces a very long forwarding path, so latency increases slightly.

Xiheng is an Alibaba Cloud Technical Expert and maintainer of the Alibaba Cloud open-source CNI plugin Terway project. He is responsible for Container Service for Kubernetes (ACK) network design and R&D.
