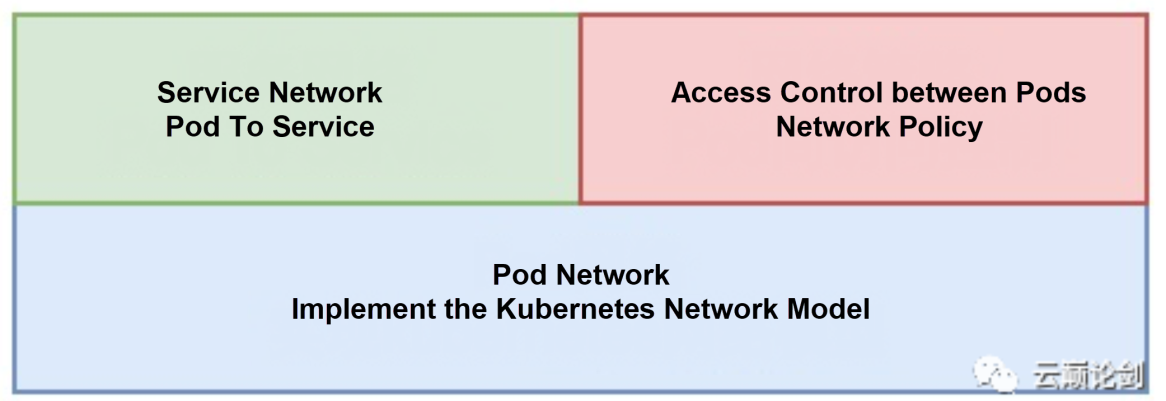

A Kubernetes network can be divided into three parts: the Pod network, the service network, and network policy. Following the Kubernetes network model, the Pod network connects the Pods and Nodes in a cluster to a flat Layer 3 network, and it is the foundation on which the service network and network policy are built. The service network adds load balancing to the Pod network path so that Pods can access Services. Network policy provides access control between Pods through a stateful firewall.

Service network and network policy are mostly implemented on the Linux kernel's Netfilter framework, including conntrack, iptables, NAT, and IPVS. Netfilter was added to the kernel in 2001. It provides a flexible framework and rich functionality, and it is the most widely used packet filtering technology in Linux. However, it has performance and scalability problems. For example, iptables rules are slow to update and match because they are stored in a linear structure, and conntrack performance degrades quickly under high concurrency or when the connection table is full. These problems make it difficult for the service network and network policy to meet the requirements of large-scale Kubernetes clusters and high-performance scenarios.
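
The cost of a linear rule structure can be illustrated with a small sketch. It is not iptables code: the rule layout, addresses, and backend names below are made up for illustration, and the rule count of 5,000 is only an example size. The sketch compares walking a rule list in order with a single keyed lookup.

```python
def match_linear(rules, dst_ip, dst_port):
    """Walk the rule list in order and count how many rules were checked."""
    checked = 0
    for rule_ip, rule_port, backend in rules:
        checked += 1
        if (rule_ip, rule_port) == (dst_ip, dst_port):
            return backend, checked
    return None, checked


def match_hashed(table, dst_ip, dst_port):
    """Look the destination up directly in a hash table keyed by (ip, port)."""
    return table.get((dst_ip, dst_port))


# 5,000 hypothetical service rules: 10.0.0.0:80 ... 10.0.19.135:80
rules = [(f"10.0.{i // 256}.{i % 256}", 80, f"backend-{i}") for i in range(5000)]
table = {(ip, port): backend for ip, port, backend in rules}

# The last rule is the worst case for the linear walk.
backend, checked = match_linear(rules, "10.0.19.135", 80)
print(backend, checked)                         # every rule is visited
print(match_hashed(table, "10.0.19.135", 80))   # one lookup, independent of rule count
```

In the linear version the work grows with the number of rules, while the keyed lookup stays roughly constant. Hash-style maps are the kind of structure an eBPF datapath typically relies on instead of rule chains.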

Some approaches solve these problems with cloud network products, for example, implementing the service network with SLB or network policy with security groups. Cloud network products provide a wide range of network functions and SLA guarantees. However, current cloud network products mainly target IaaS, so their creation speed, quotas, and other restrictions make it difficult to meet the elasticity and speed requirements of cloud-native scenarios.

Pod network implementations fall into two categories according to whether traffic passes through the kernel L3 protocol stack: routing and bridge modes pass through it, while IPVlan and MacVlan bypass it. Solutions that pass through the L3 protocol stack keep complete L3 processing in the datapath, so they are compatible with current service network and network policy implementations, but their performance is low because the L3 logic is heavier. Solutions that bypass the L3 protocol stack perform well because their datapath logic is simple, but it is difficult to implement service network and network policy on them.

To sum up, the performance issues of Kubernetes networks are as follows:

| Component | Issues |
| --- | --- |
| Service network, network policy | ● Netfilter-based solutions: low performance and poor scalability. ● Cloud product solutions: unable to meet the requirements of cloud-native scenarios. |
| Pod network | ● Through the L3 protocol stack: low performance. ● Bypassing the L3 protocol stack: difficult to implement service network and network policy. |

Given the current state of Kubernetes networking, we need to re-implement a lightweight service network and network policy that do not depend on Netfilter. On the one hand, the new implementation can be applied to high-performance Pod networks such as IPVlan and MacVlan and supply the service network and network policy support that they lack. On the other hand, it needs to have better performance and scalability.

eBPF implements a register-based virtual machine in the kernel with its own 64-bit instruction set. Through a system call, we can load an eBPF program into the kernel and have it run when the corresponding event occurs. The kernel also provides a verification mechanism to ensure that a loaded eBPF program is safe to execute, avoiding risks such as kernel corruption, panics, and security vulnerabilities.

The kernel has added eBPF support to many modules and defines many eBPF program types. Each type determines where the program can be attached in the kernel, which kernel helper functions it can call, which object is passed to it at run time, and what read and write permissions it has on kernel objects. Networking is currently one of the subsystems where eBPF is most widely applied and developing fastest. Multiple eBPF program types exist across the stack, from the network interface controller at the bottom of the protocol stack to the socket at the upper layer.

To address the performance problems of Netfilter, the community is implementing a new packet filtering technology named bpfilter: https://lwn.net/Articles/755919/

From a network developer's perspective, eBPF provides a programmable data plane in the kernel, and the kernel ensures the safety of the program. On this basis, we hope to implement a high-performance service network and network policy that can be applied to Pod networks that bypass L3, such as IPVlan and MacVlan. This provides a network solution with good overall performance in Kubernetes.

tc-ebpf sits at L2 of the Linux protocol stack and can hook the inbound and outbound traffic of a network device into a datapath implemented in eBPF. Implementing service network and network policy based on tc-ebpf has many advantages:

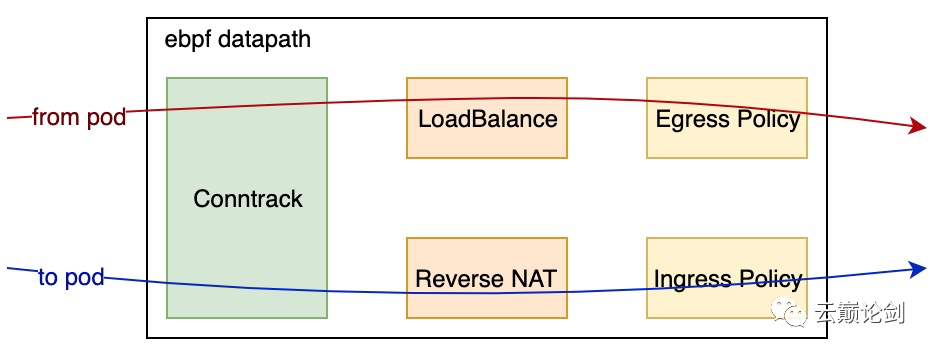

Building service network and network policy with tc-ebpf requires the following modules:

Conntrack is the basic module of service network and network policy. Egress and ingress traffic is processed by conntrack first, which generates the connection state of container traffic.

LoadBalance + ReverseNat is the service network module. LoadBalance selects an available endpoint for egress traffic and records the load-balancing information in the connection tracking entry. ReverseNat looks up the LoadBalance information for ingress traffic and performs NAT to change the source IP address back to the cluster IP.

The Policy module applies user-defined policy rules to new connections based on the connection state from Conntrack.
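
The interaction of the three modules can be sketched as a toy model. This is ordinary Python, not eBPF code, and it is not the implementation described in this article: the class name `Datapath`, the hash-based backend selection, the IP-keyed policy tuples, and all addresses are assumptions made for illustration. New inbound connections are left out to keep the sketch short.

```python
import zlib


class Datapath:
    """Toy model: conntrack first, then load balancing, then policy on new flows."""

    def __init__(self, services, allowed):
        self.services = services     # (cluster_ip, port) -> [(backend_ip, port), ...]
        self.allowed = set(allowed)  # (src_ip, dst_ip, dst_port) permitted by policy
        self.conntrack = {}          # original flow -> chosen destination
        self.reverse = {}            # reply flow -> original destination

    def _select_backend(self, src, sport, dst, dport):
        backends = self.services.get((dst, dport))
        if not backends:
            return (dst, dport)      # not a service address, keep the destination
        # Hash the client endpoint so the choice is stable for one connection.
        index = zlib.crc32(f"{src}:{sport}".encode()) % len(backends)
        return backends[index]

    def egress(self, src, sport, dst, dport):
        """Pod to network. Returns the rewritten packet, or None if dropped."""
        key = (src, sport, dst, dport)
        target = self.conntrack.get(key)             # Conntrack runs first
        if target is None:                           # new connection
            target = self._select_backend(src, sport, dst, dport)  # LoadBalance
            if (src, target[0], target[1]) not in self.allowed:    # Policy
                return None
            self.conntrack[key] = target
            # Remember the original destination so replies can be restored.
            self.reverse[(target[0], target[1], src, sport)] = (dst, dport)
        return (src, sport, target[0], target[1])

    def ingress(self, src, sport, dst, dport):
        """Network to pod. Only replies to tracked connections are modeled."""
        original = self.reverse.get((src, sport, dst, dport))
        if original is None:
            return None
        # ReverseNat: the source becomes the cluster IP the client connected to.
        return (original[0], original[1], dst, dport)
```

A request to a hypothetical cluster IP `172.16.0.10:80` leaves `egress` with a backend address as its destination, and the reply from that backend comes back through `ingress` with `172.16.0.10:80` as its source, so the client only ever sees the cluster IP. Policy is evaluated once per connection; later packets of the same flow hit the conntrack entry and skip the rule check.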

We investigated existing implementations in the community. Cilium is one of the best projects for building Kubernetes networks on eBPF, and it uses eBPF to implement the Pod network, service network, and network policy. However, we found that the network types Cilium currently supports are not well suited to public cloud scenarios.

Terway is an open-source, high-performance container network plug-in from the Alibaba Cloud Container Service team. It uses Alibaba Cloud elastic network interfaces (ENIs) to implement container networks, which places the container network and the virtual machine network on the same network plane. Container traffic between hosts therefore incurs no encapsulation or similar overhead, and because Terway does not rely on distributed routing, cluster size is not limited by the number of route entries.

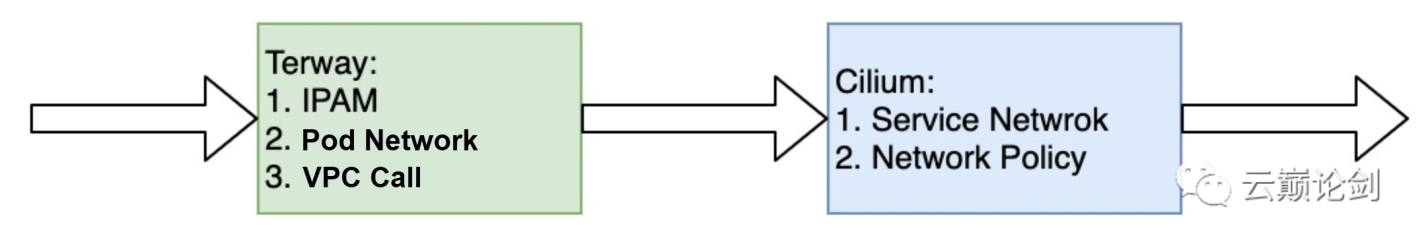

We worked with the Terway team to connect Terway and Cilium through CNI chaining, further optimizing Kubernetes network performance.

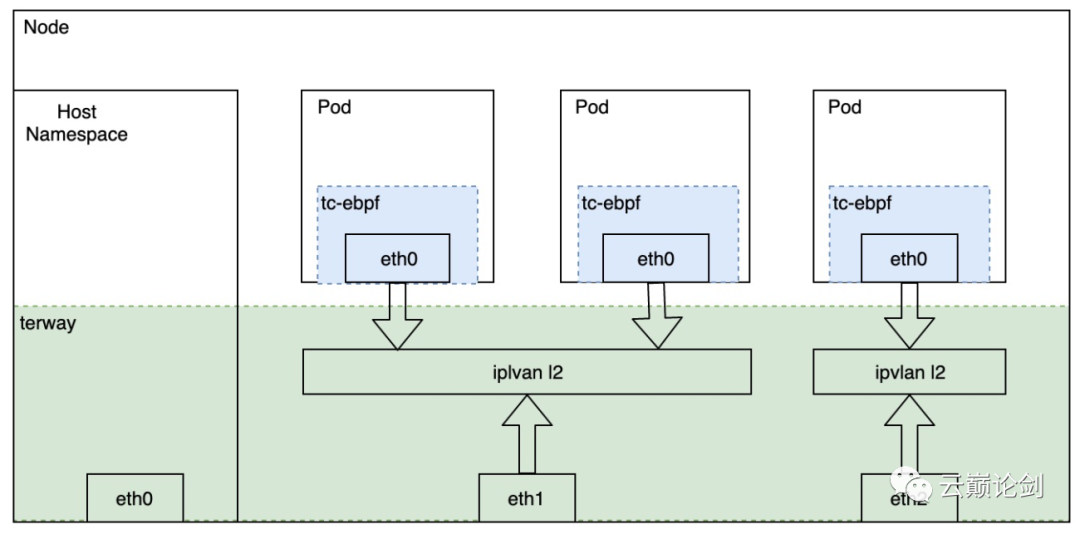

As the first CNI in the chain, Terway creates the Pod network on Alibaba Cloud VPC, connecting Pods to the VPC and providing high-performance container network communication. Cilium, as the second CNI, implements a high-performance service network and network policy.
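
The chaining idea can be sketched as follows. In a chained CNI configuration, plugins are listed in order and each one receives the result of the previous one. Everything concrete in this sketch is an assumption for illustration: the network name, the plugin type names, the address, and the `datapath` field (which is not a CNI result field) are hypothetical, and the two handler functions only stand in for what the real plugins do.

```python
import json

# Hypothetical chained configuration; names are assumptions for illustration,
# not copied from a real cluster.
CONFLIST = json.loads("""
{
  "cniVersion": "0.3.1",
  "name": "terway-chain",
  "plugins": [
    {"type": "terway"},
    {"type": "cilium-cni"}
  ]
}
""")


def terway_add(prev_result):
    """First plugin: creates the Pod interface and assigns an address (made-up values)."""
    return {"interfaces": [{"name": "eth0"}], "ips": [{"address": "10.0.0.2/24"}]}


def cilium_add(prev_result):
    """Second plugin: keeps the existing interface and records the datapath attachment."""
    result = dict(prev_result)
    # "datapath" only marks what this step models; it is not part of the CNI result.
    result["datapath"] = "tc-ebpf on " + prev_result["interfaces"][0]["name"]
    return result


HANDLERS = {"terway": terway_add, "cilium-cni": cilium_add}


def run_add(conflist):
    """Invoke each plugin in list order, passing the previous result along."""
    result = None
    for plugin in conflist["plugins"]:
        result = HANDLERS[plugin["type"]](result)
    return result


result = run_add(CONFLIST)
print(json.dumps(result, indent=2))
```

The point of the design is the division of labor: the second plugin does not create or address the interface, it only builds on what the first plugin produced.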

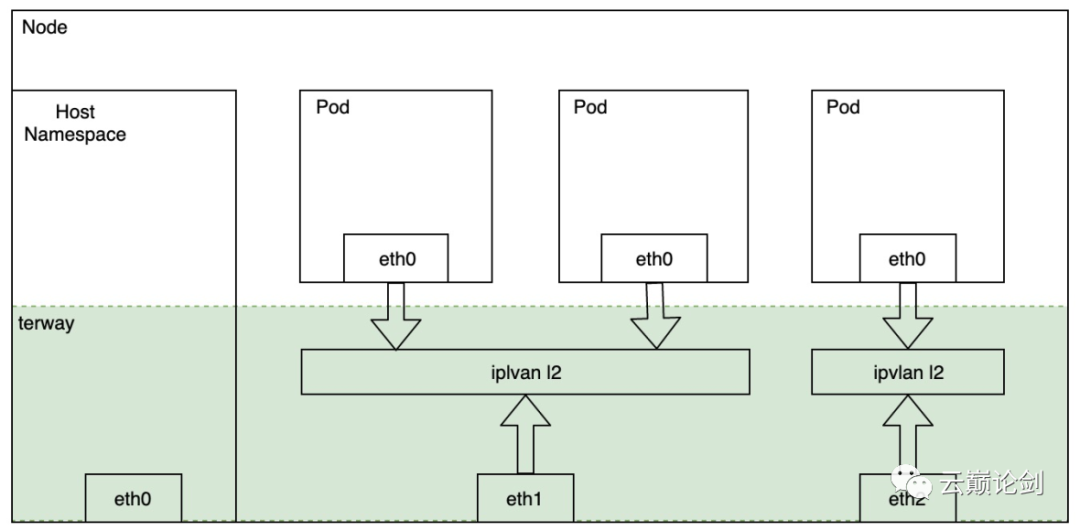

Considering factors such as Pod density and performance on a single node, the Pod network uses VPC ENI multi-IP based on IPVlan L2.

At the same time, we implemented a CNI chaining mode for IPVlan in Cilium. It attaches the tc-eBPF datapath to the Pod network created by Terway to implement service network and network policy.

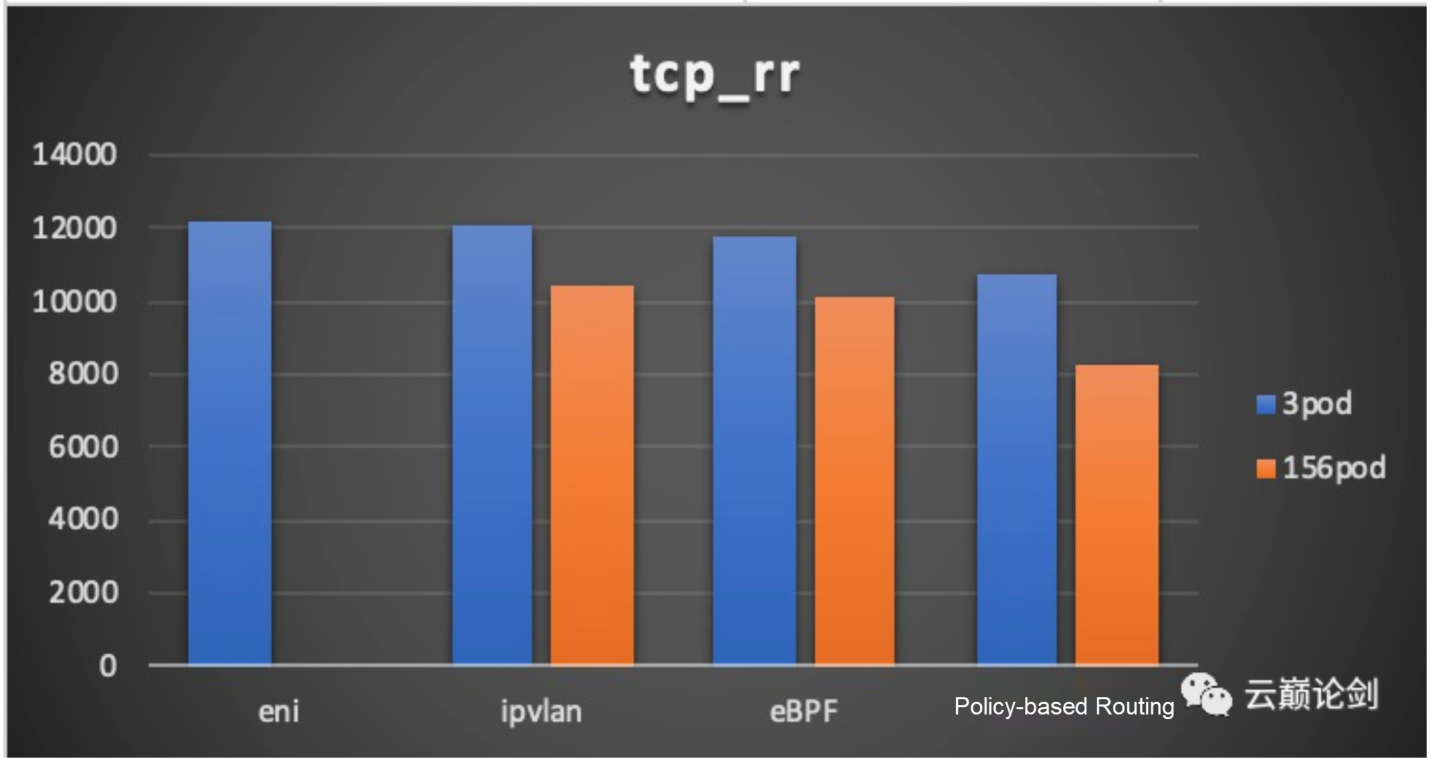

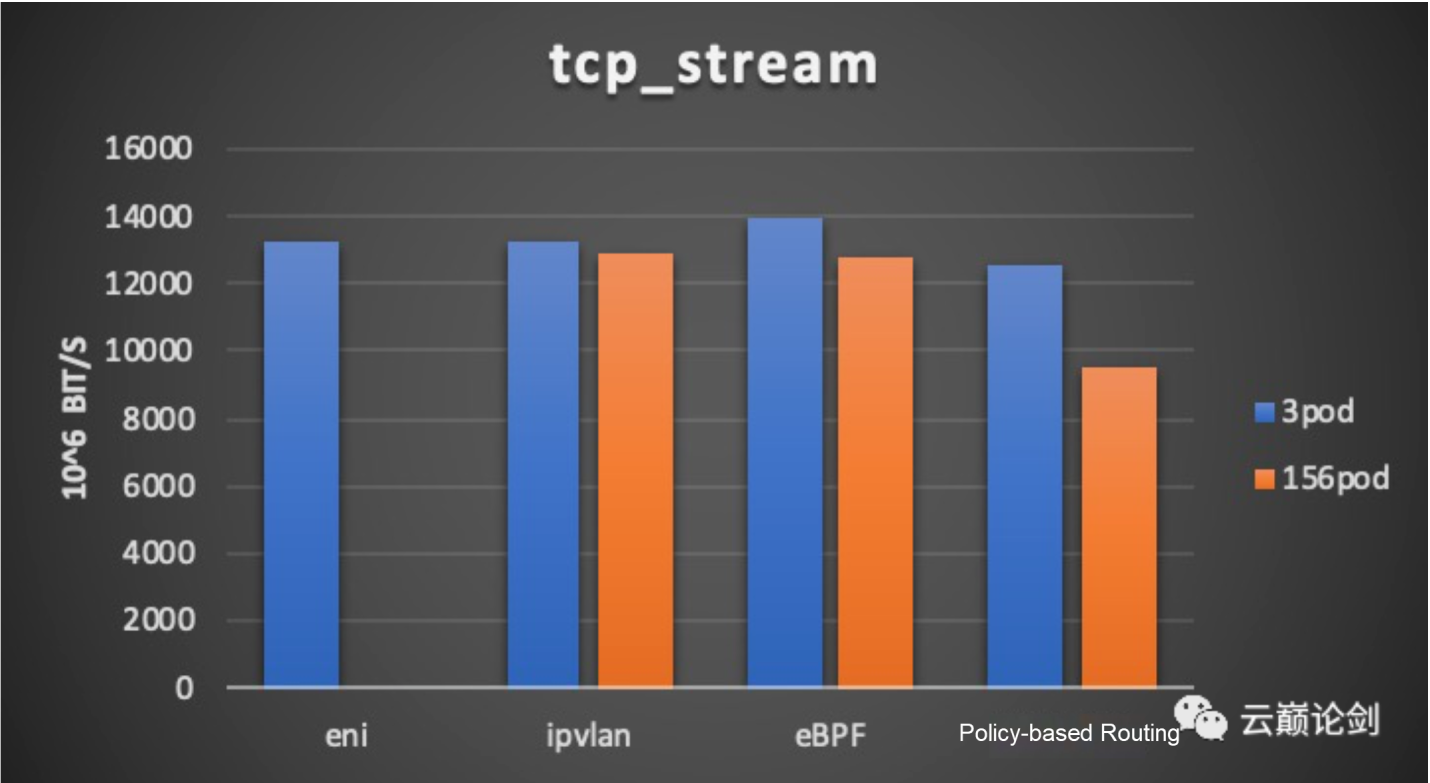

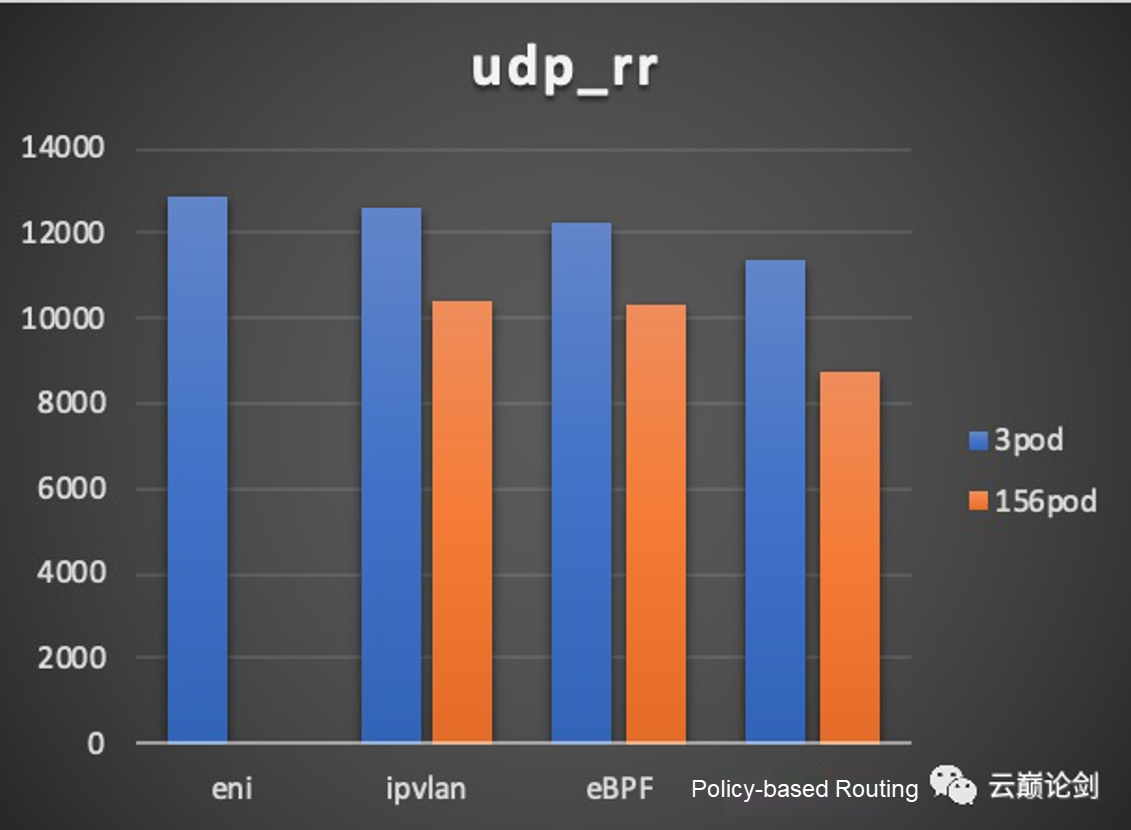

The network performance evaluation mainly compares this scheme with Terway's three current network types:

Performance test conclusions for the Terway with eBPF mode in this scheme:

Pod network performance

Extended performance

Service network performance

Extended performance

Network policy performance

TCP performance test results:

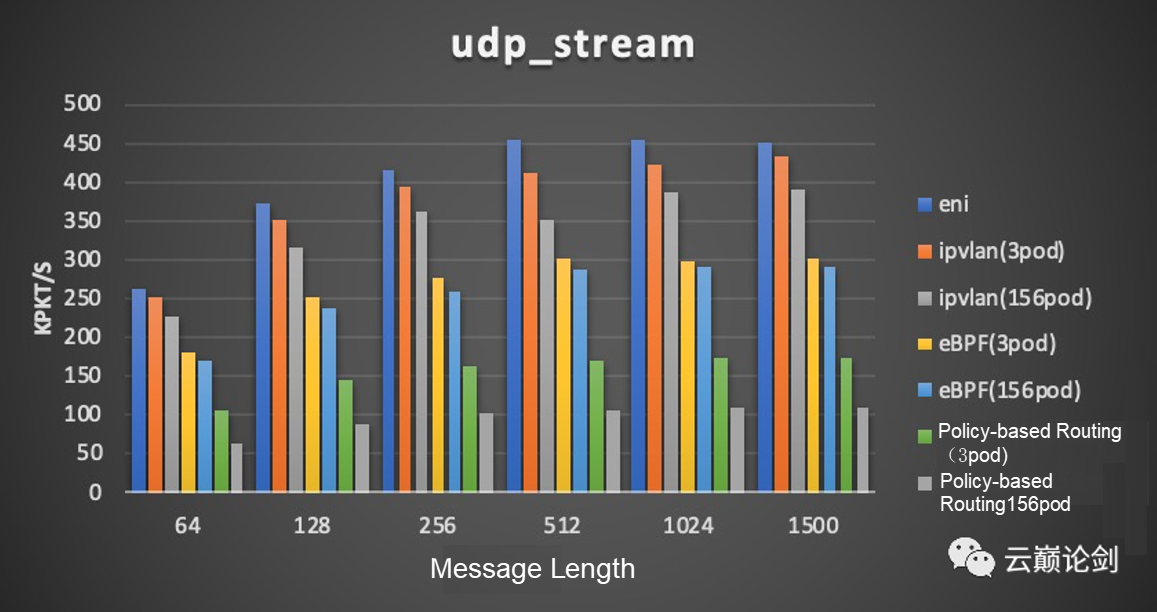

UDP performance test results:

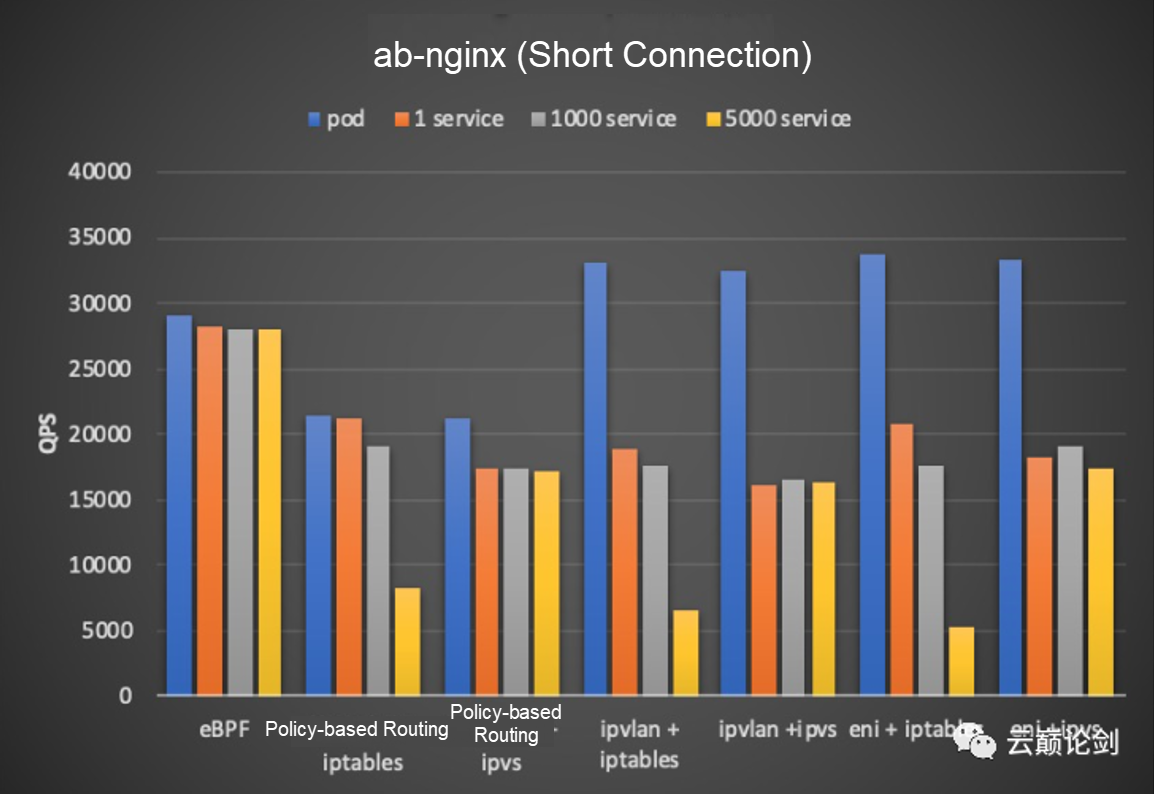

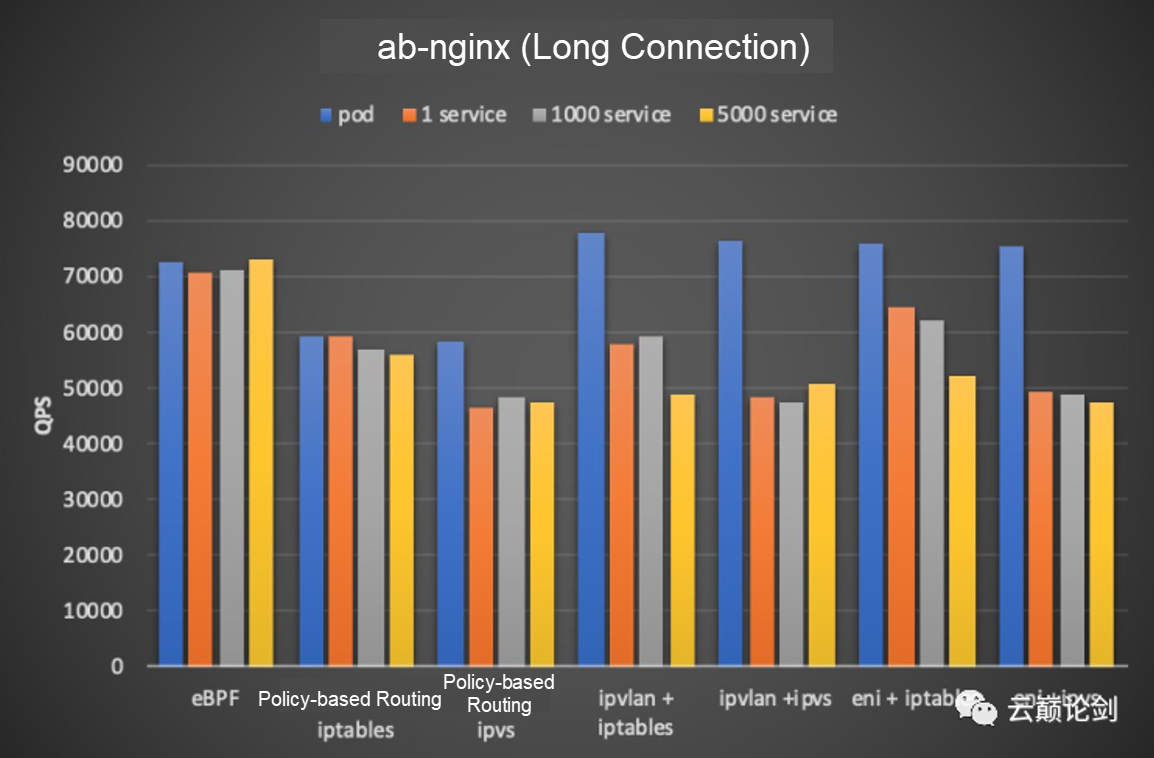

The service network test measures the forwarding and scaling capabilities of the service network under different network modes, using the Pod network as the comparison. The test covers 1, 100, and 5,000 services. The test tools are ab and nginx, with nginx configured to use 8 cores.

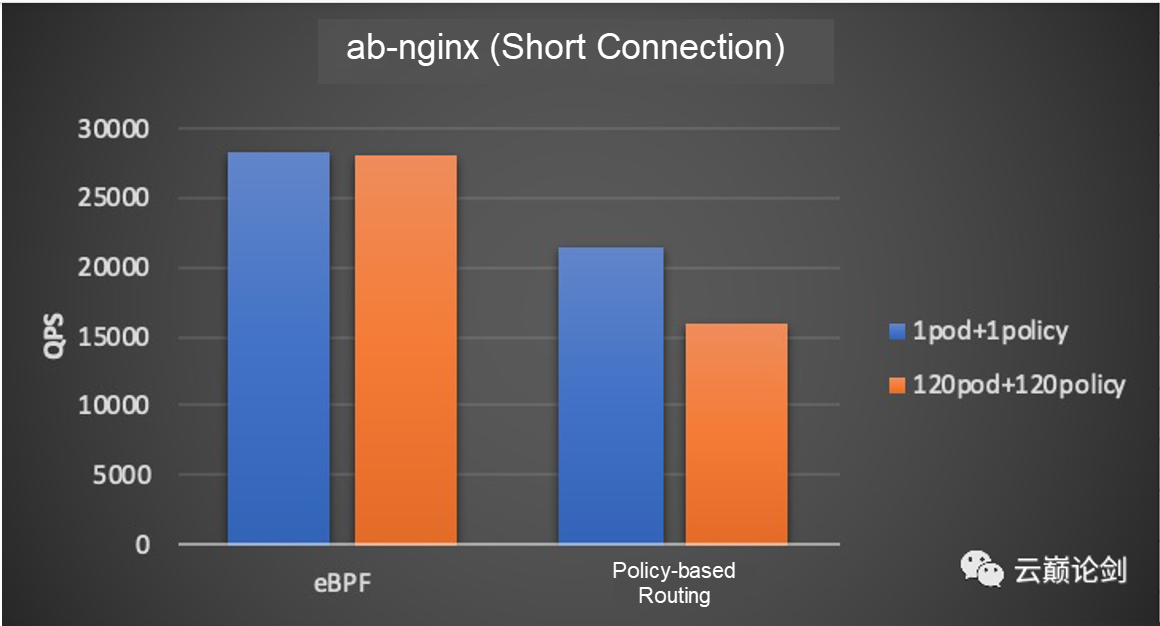

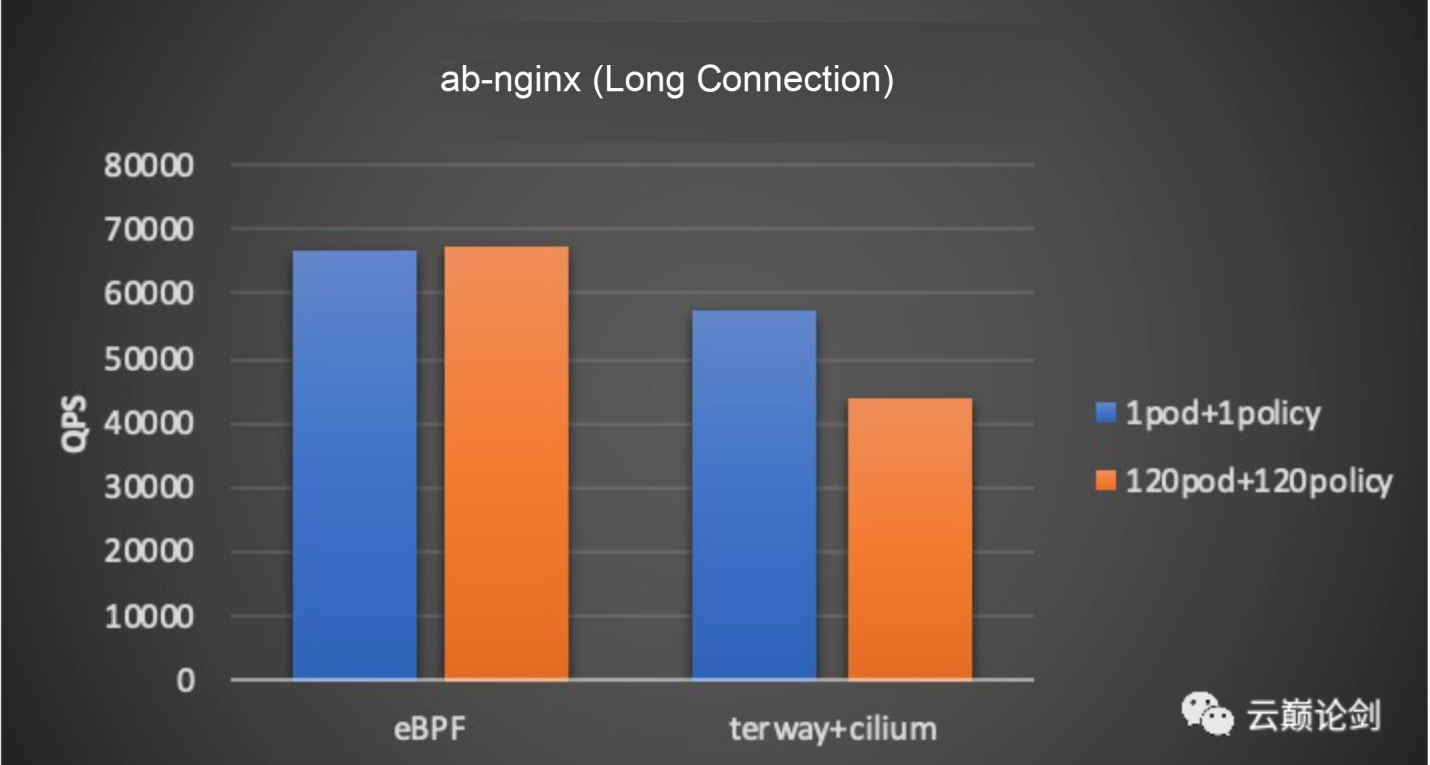

Of Terway's existing modes, only policy-based routing is included in this comparison. The test compares the two modes when the Pod under test is configured with 1 policy and 1 Pod, and with 120 policies and 120 Pods. The test tools are ab and nginx, with nginx configured to use 8 cores.

To address the performance and scalability problems in current Kubernetes networks (Pod network, service network, and network policy), we investigated kernel eBPF technology and the open-source eBPF container network solution Cilium. We then combined them with Terway on Alibaba Cloud and implemented a high-performance container network solution based on the ENI multi-IP network characteristics. The IPVlan L2 CNI chaining solution that we implemented in Cilium has also been open-sourced in the Cilium community.

In the current solution, the service network and network policy for the host namespace are still implemented by kube-proxy. Service network traffic in the host namespace currently accounts for a small proportion of cluster traffic, and its traffic types are complex (including cluster IP, node port, and external IP), so implementing it in eBPF has low priority and brings little benefit.

Because tc-ebpf runs before L3 of the Linux protocol stack, it cannot yet reassemble fragmented packets, so service network and network policy do not work for IP fragments. We designed a scheme that redirects fragments to the L3 protocol stack for reassembly and then re-enters eBPF. However, Kubernetes clusters are mostly deployed in networks of the same kind, where fragmented packets are unlikely, so this scheme is not implemented in the current version. In addition, Cilium has added service network and network policy support for fragmented packets in eBPF, but that implementation still fails when fragments arrive out of order.
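
Why fragments are a problem before reassembly can be shown with the IPv4 header itself. Only the fragment at offset 0 carries the L4 header with the ports, so a datapath that matches on ports cannot classify later fragments on its own. The sketch below is plain Python rather than eBPF code, and the helper names and addresses are made up for illustration; the field layout (flags and fragment offset in bytes 6 and 7 of the header) follows the IPv4 specification.

```python
import struct

MORE_FRAGMENTS = 0x2000   # MF flag in the 16-bit flags/fragment-offset field
OFFSET_MASK = 0x1FFF      # fragment offset, in units of 8 bytes


def build_ipv4_header(flags_frag, proto=6):
    """Build a minimal 20-byte IPv4 header (checksum left at 0 for brevity)."""
    return struct.pack(
        "!BBHHHBBH4s4s",
        0x45, 0, 40, 1, flags_frag, 64, proto, 0,
        bytes([10, 0, 0, 2]), bytes([10, 0, 0, 3]),
    )


def classify(header):
    """Tell whether the packet is whole, a first fragment, or a later fragment."""
    (flags_frag,) = struct.unpack_from("!H", header, 6)
    more_fragments = bool(flags_frag & MORE_FRAGMENTS)
    offset = flags_frag & OFFSET_MASK
    if not more_fragments and offset == 0:
        return "unfragmented"
    if offset == 0:
        return "first-fragment"   # still carries the L4 header
    return "later-fragment"       # no ports available at this point


print(classify(build_ipv4_header(0)))                     # unfragmented
print(classify(build_ipv4_header(MORE_FRAGMENTS)))        # first-fragment
print(classify(build_ipv4_header(MORE_FRAGMENTS | 185)))  # later-fragment
```

A datapath that wants to handle fragments without reassembly has to remember the ports from the first fragment and apply them to later ones, which is where out-of-order arrival becomes difficult.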

As a new technology, eBPF opens up huge possibilities for developing the kernel's network data plane. Our current solution implements service network and network policy at L2. Although its performance is better than that of the Netfilter-based solution, the conntrack module is still a non-negligible overhead in the network path. We are also considering whether conntrack is needed at all in the datapath of a Pod or Node in Kubernetes, and whether a conntrack with no additional overhead can be implemented based on socket information.

On this basis, we are exploring the possibility of implementing service network and network policy at the socket layer. We hope to use eBPF at the socket layer to build a conntrack with minimal overhead, and thereby achieve a service network and network policy solution with better performance and scalability.
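
The intuition behind working at the socket layer can be sketched as follows. This is a toy model under stated assumptions, not the design being explored here: the class `SocketLB`, its methods, and the addresses are hypothetical. It shows the service address being translated once when a connection is established, so that individual packets need no further rewriting.

```python
class SocketLB:
    """Toy model: translate the service address once per connection, not per packet."""

    def __init__(self, services):
        self.services = services   # (cluster_ip, port) -> [(backend_ip, port), ...]
        self.original = {}         # socket id -> service address the client asked for
        self.translations = 0

    def connect(self, sock_id, dst):
        """Called once when the socket connects; returns the real destination."""
        backends = self.services.get(dst)
        if not backends:
            return dst
        self.original[sock_id] = dst
        self.translations += 1
        return backends[sock_id % len(backends)]

    def send(self, connected_dst, payloads):
        """Packets already carry the backend address, so nothing is rewritten here."""
        return [(connected_dst, payload) for payload in payloads]


lb = SocketLB({("172.16.0.10", 80): [("10.0.1.5", 8080), ("10.0.2.7", 8080)]})
dst = lb.connect(sock_id=7, dst=("172.16.0.10", 80))
packets = lb.send(dst, [b"x"] * 1000)
print(dst, len(packets), lb.translations)
```

In this model the socket itself holds the connection state, which is the reason a separate per-packet connection table might be avoidable.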

In addition, we want to implement an IPVlan CNI chaining mode in Cilium that attaches the tc-eBPF datapath to the Pods created by Terway to implement service network and network policy.
