By Teng Ma and Mingxing Zhang

As Moore’s Law slows down, horizontal scaling has become the predominant strategy for enhancing system performance. Nonetheless, the inherent complexities of distributed programming present substantial challenges in creating efficient, correct, and resilient systems. Streamlining this process remains a fundamental objective of distributed programming frameworks.

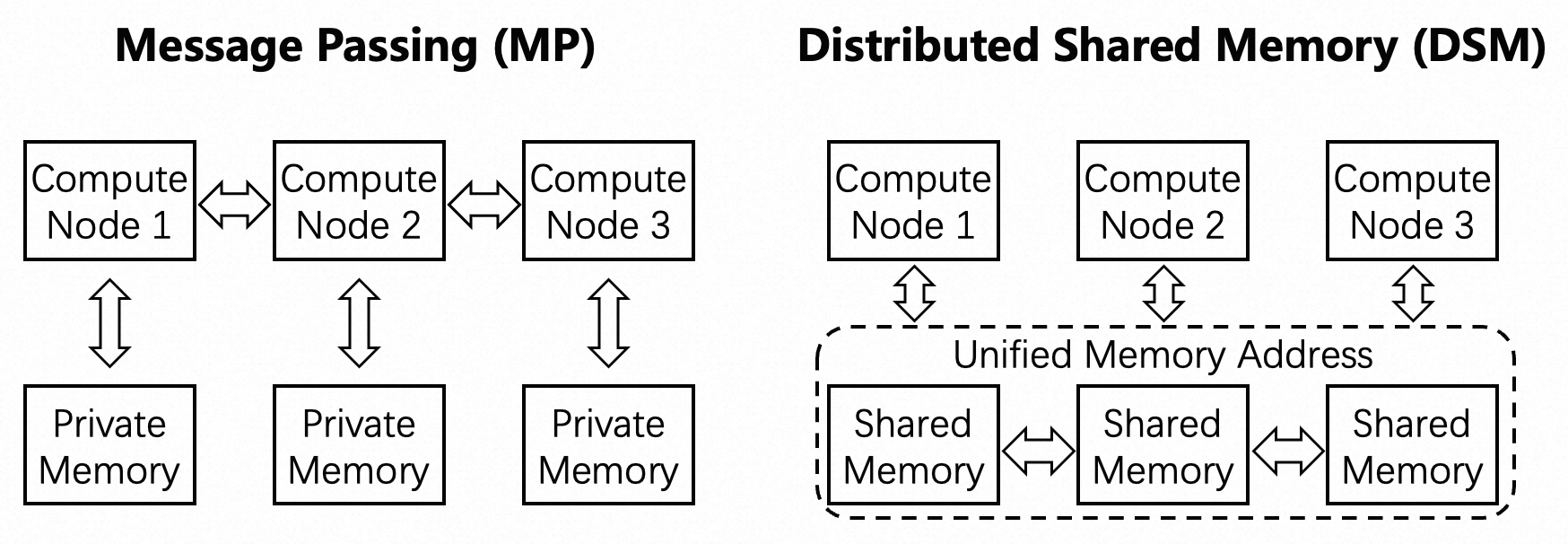

In the realm of distributed programming, two main paradigms prevail: message passing (MP) and distributed shared memory (DSM). DSM is designed to be easier to use because it provides a unified memory space that abstracts away the complexities of explicit data communication. This design makes programming distributed applications almost as straightforward as programming multi-threaded applications on a single machine. In practice, however, the less intuitive MP model is more commonly used than DSM. This preference is often attributed to the assumption that the high cost of remote communication significantly hampers the efficiency of DSM systems.

Nevertheless, rapid advancements in networking and interconnect technologies have profoundly impacted our understanding of this field. Especially with the emergence of Compute Express Link (CXL), we are presented with an opportune moment to revisit our perspectives on traditional distributed programming paradigms, prompting a reconsideration of DSM’s potential in contemporary distributed systems.

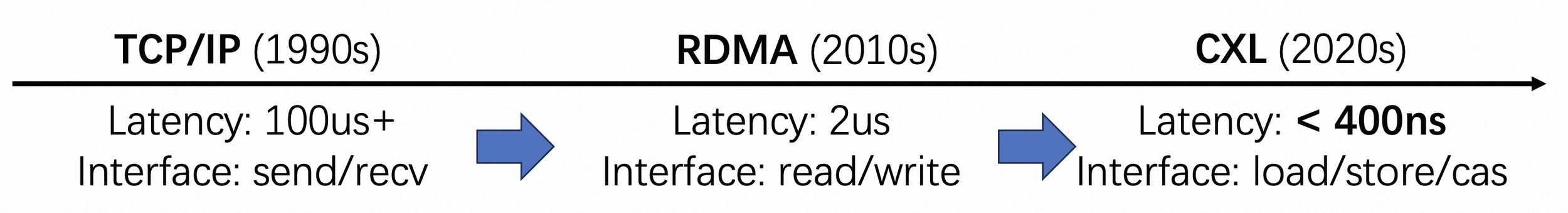

The transition from Ethernet to Remote Direct Memory Access (RDMA), and now to CXL, marks a significant evolution in interconnectivity technologies. The widespread adoption of RDMA has dramatically transformed modern data centers, profoundly impacting the design of many influential and extensively utilized distributed systems. It has notably reduced remote data access latency from over 100 microseconds to the microsecond level, while also offering a one-sided memory read/write interface that greatly reduces overhead on the remote side.

The subsequent introduction of CXL represents the latest advancement, aiming to provide high-speed and, critically, coherent data transfer between different nodes. DirectCXL [1], for instance, connects a host processor with remote memory resources, enabling load/store instructions with a remote CXL memory access latency of approximately 300 to 500 nanoseconds, which is on par with the latency of accessing a remote NUMA node.

CXL 2.0 introduces memory pooling as a significant advancement, enabling the creation of a global memory resource pool that optimizes overall memory utilization. This is made possible through the CXL switch and memory controller, which facilitate dynamic allocation and de-allocation of memory resources. Pond [2], the first full-stack memory pool to fulfill the requirements of cloud providers, aims to deploy a CXL-based memory pool on the Microsoft Azure cloud platform. Most mainstream cloud service providers have now announced plans to support CXL-based memory pools.

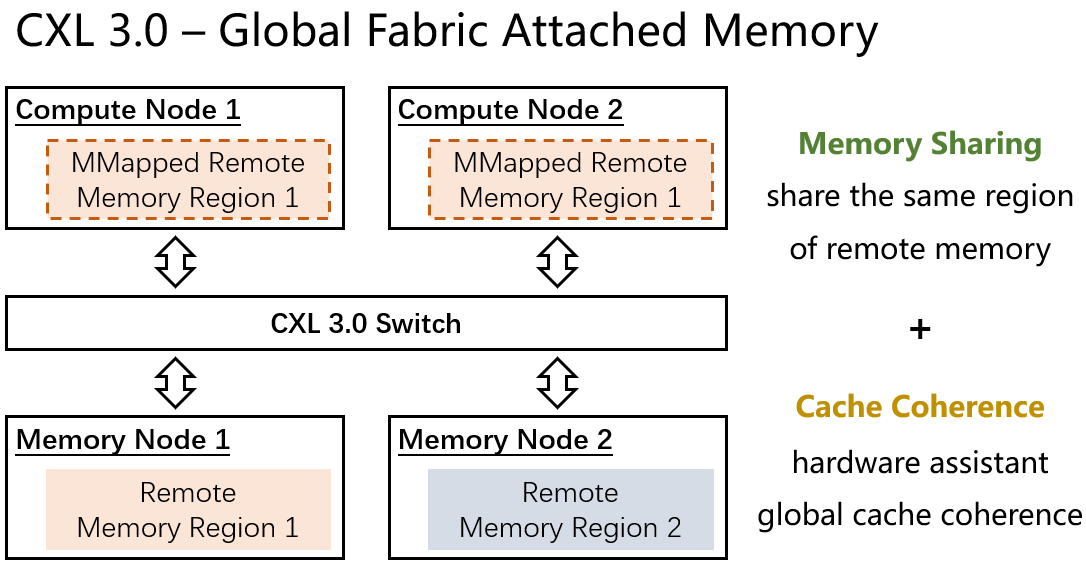

Furthermore, the upcoming CXL 3.0/3.1 versions promise memory sharing [3], allowing the same memory region to be mapped across multiple machines. In this setup, the hardware would automatically manage cache coherence for concurrent accesses from different machines, essentially realizing a hardware-based DSM model. This development presents exciting possibilities for the future of distributed computing.

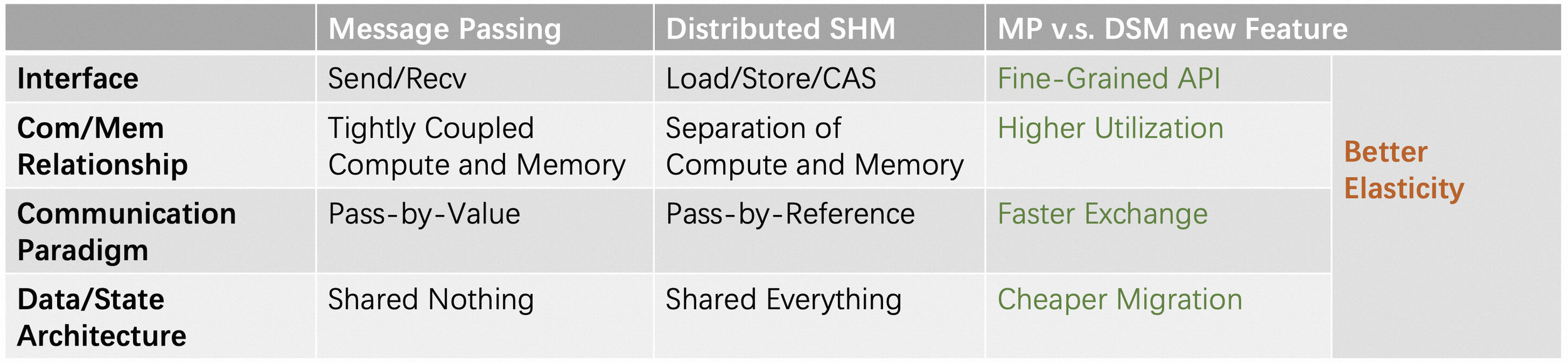

Although CXL’s hardware implementation is still catching up to its ambitious specifications, now is a good time to revisit the distinctions between MP and DSM in the context of the forthcoming CXL era, to understand their differences and identify the scenarios each suits best. At first glance, the principal difference between these paradigms is the interface they expose. MP relies on a traditional Send/Receive interface, while CXL is better aligned with DSM because it provides fine-grained remote load/store instructions. However, we think that the underlying assumptions about the compute/memory relationship are more pivotal.

Firstly, message passing systems typically assume a tightly coupled architecture, where each node can only access its local memory. In contrast, CXL-enabled DSM systems naturally fit into a disaggregated architecture that separates compute and memory resources into distinct pools [4], allowing for more flexible and efficient resource utilization.

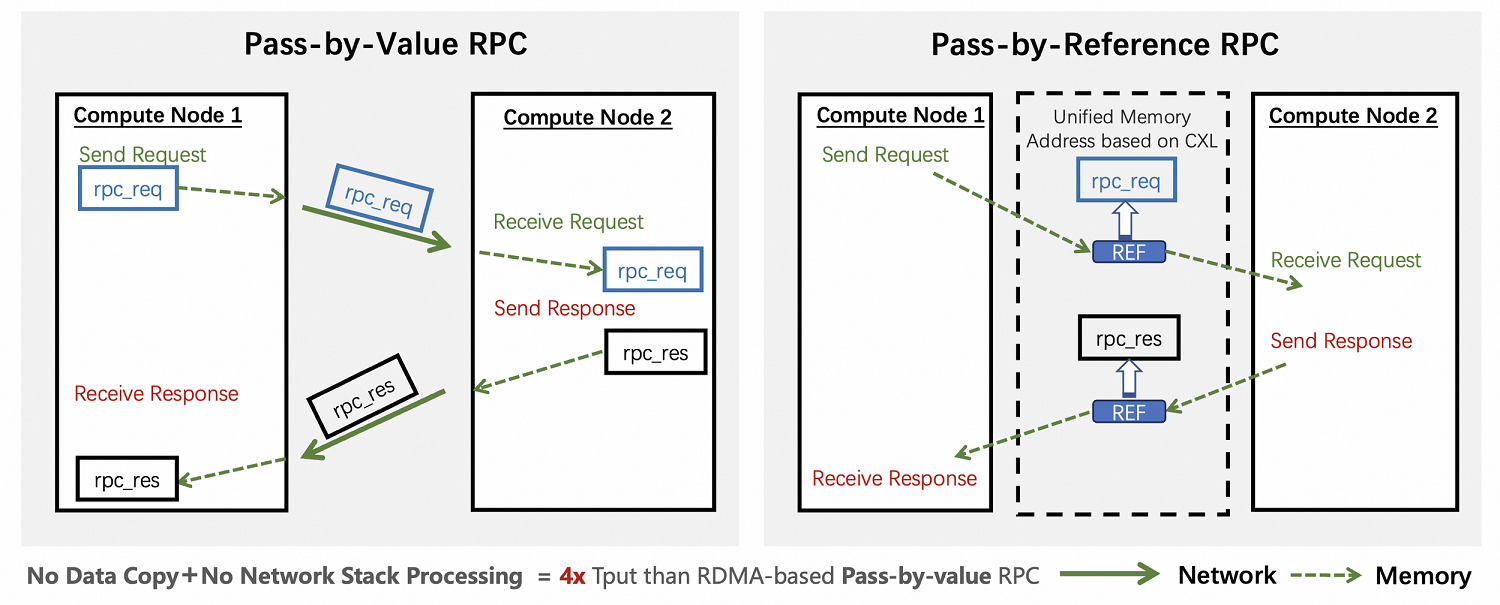

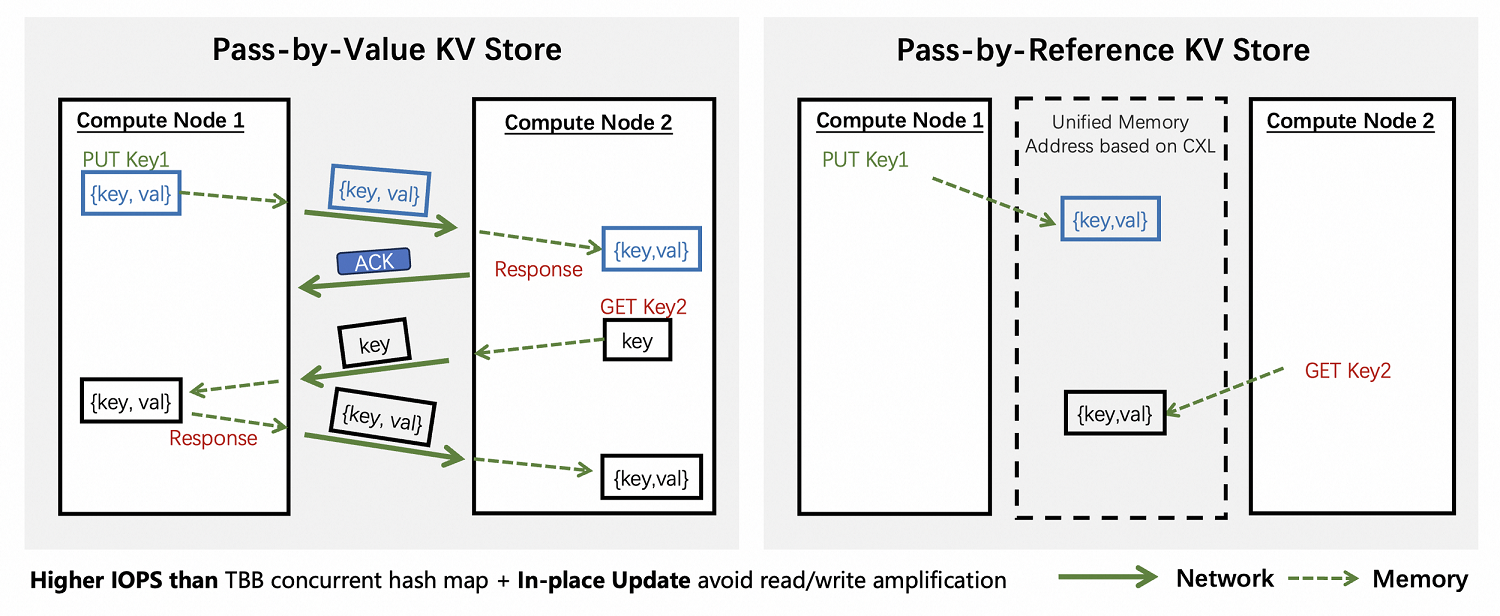

Secondly, in terms of data communication, message passing generally involves copying data payloads from one node to another, a pass-by-value approach. DSM, by contrast, requires only the exchange of object references, a pass-by-reference approach. This allows the receiver to access only the subparts of the data it needs and to update them in place, which benefits a variety of scenarios. For example, we developed a proof-of-concept CXL-based pass-by-reference RPC system. It avoids both data copying and network stack processing, and hence outperforms traditional RDMA-based systems by over 4x in throughput.
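The contrast between the two approaches can be sketched in a few lines. This is only an illustration under loud assumptions: a plain `bytearray` named `SHARED_POOL` stands in for a memory region mapped by both sides, and the helper names (`pass_by_value`, `pass_by_reference`, `deref`) are hypothetical. It is not the RPC system mentioned above.

```python
# Hypothetical stand-in for a memory region mapped by both sender and receiver.
SHARED_POOL = bytearray(64 * 1024)

def pass_by_value(sender_buffer: bytearray) -> bytearray:
    """Message-passing style: the whole payload is copied for the receiver."""
    return bytearray(sender_buffer)  # full copy, like a Send/Receive pair

def pass_by_reference(offset: int, length: int) -> tuple:
    """DSM style: only a small (offset, length) reference is exchanged."""
    return (offset, length)

def deref(ref: tuple) -> memoryview:
    """Receiver side: open a zero-copy window onto the referenced object."""
    offset, length = ref
    return memoryview(SHARED_POOL)[offset:offset + length]

# The sender places a 4 KiB object in the shared pool.
OBJ_OFFSET, OBJ_LEN = 8192, 4096
SHARED_POOL[OBJ_OFFSET:OBJ_OFFSET + OBJ_LEN] = b"\x01" * OBJ_LEN

# Pass-by-value: the receiver mutates its private copy only.
local_copy = pass_by_value(SHARED_POOL[OBJ_OFFSET:OBJ_OFFSET + OBJ_LEN])
local_copy[0] = 0xFF

# Pass-by-reference: the receiver updates one byte of the shared object in place.
ref = pass_by_reference(OBJ_OFFSET, OBJ_LEN)
window = deref(ref)
window[10] = 0x7F
```

In the copy path the sender never sees the receiver's change, while in the reference path a single byte is touched and the update is immediately visible to every holder of the reference.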

Lastly, the global accessibility of DSM’s memory space implies a shared-everything data/state architecture. This is particularly beneficial for quickly migrating workloads. For instance, resolving load imbalances in a shared-nothing architecture necessitates heavy data repartitioning, whereas in a shared-everything setup, only a small amount of metadata representing data partition ownership needs to be exchanged.
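A toy model makes the migration cost difference concrete. The two classes below are hypothetical and exist only for illustration: dictionaries stand in for node-local storage and for a shared memory pool, and the returned number counts how many rows had to be physically moved.

```python
from dataclasses import dataclass, field

@dataclass
class SharedNothingCluster:
    """Each node privately stores the rows of the partitions it owns."""
    node_data: dict = field(default_factory=dict)  # node -> {partition: rows}

    def migrate(self, partition, src, dst):
        # Repartitioning: the rows themselves travel from src to dst.
        rows = self.node_data[src].pop(partition)
        self.node_data.setdefault(dst, {})[partition] = list(rows)
        return len(rows)  # number of rows moved

@dataclass
class SharedEverythingCluster:
    """All rows live in one shared pool; nodes only hold ownership metadata."""
    pool: dict = field(default_factory=dict)   # partition -> rows (shared)
    owner: dict = field(default_factory=dict)  # partition -> owning node

    def migrate(self, partition, dst):
        # Only the ownership entry changes; the rows stay where they are.
        self.owner[partition] = dst
        return 0  # number of rows moved

rows = list(range(100_000))

sn = SharedNothingCluster(node_data={"node-a": {"p0": list(rows)}, "node-b": {}})
sn_moved = sn.migrate("p0", "node-a", "node-b")

se = SharedEverythingCluster(pool={"p0": rows}, owner={"p0": "node-a"})
se_moved = se.migrate("p0", "node-b")
```

Rebalancing the shared-nothing cluster moves every row of the partition, whereas the shared-everything cluster rewrites a single ownership entry.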

In summary, CXL-based DSM excels in situations that need high flexibility. CXL architecture naturally supports this flexibility by offering both a dynamic and efficient way to allocate and access remote memory resources. This is essential for systems that need to scale quickly and efficiently.

Nevertheless, transitioning to the CXL-based DSM paradigm isn’t just about leveraging advancements in hardware. It involves embracing a unified memory space that hosts shared states, which in turn enables quicker data transfers and migrations while uncoupling computing from memory for enhanced scalability. However, managing these shared states also presents more complexity than the conventional shared-nothing architecture, given the potential for concurrent access and the partial failures that may arise.

In essence, the challenges we face stem from the separate failure domains of the shared distributed objects and the clients referencing them. This separation allows clients the freedom to join, leave, or even fail during operations, as they create, release, and exchange references to remote memory. While this flexibility is user-friendly, it poses significant challenges in memory management. We call a DSM that tolerates such failures a Partial Failure Resilient DSM (RDSM), to set it apart from scenarios where all clients fail simultaneously. We believe that effectively handling partial failures is crucial to expanding the use of DSM.

To address these challenges, our work, CXL-SHM [5], proposes an approach that employs reference counting to reduce the manual workload involved in reclaiming remote memory that has been allocated. However, a standard reference counting system is not robust against system failures.

As an illustration, we split the process of maintaining the automatic reference count into two distinct actions. When attaching a reference, the first step is to increment the reference count. Following that, we link the reference by assigning it the address of the referenced space. Detaching a reference is a similar two-step process: decrement the reference count, and then set the reference to NULL. Each procedure looks straightforward because it has only two simple steps, but the sequence of those steps is critical. If a system crash occurs between the two steps, problems arise.
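The two-step procedures and the crash window between the steps can be simulated as follows. This is a minimal single-process sketch, not the CXL-SHM implementation: `SharedObject`, `Reference`, `ClientCrash`, and the `crash_between_steps` flag are hypothetical names introduced only to inject a failure at the critical point.

```python
class ClientCrash(Exception):
    """Simulates a client dying partway through an operation."""

class SharedObject:
    """Hypothetical object in shared memory with an embedded reference count."""
    def __init__(self, payload):
        self.payload = payload
        self.refcount = 0

class Reference:
    """Hypothetical reference slot; None plays the role of NULL."""
    def __init__(self):
        self.target = None

def attach(ref, obj, crash_between_steps=False):
    obj.refcount += 1                  # step 1: increment the count
    if crash_between_steps:
        raise ClientCrash("died after increment, before linking")
    ref.target = obj                   # step 2: link the reference

def detach(ref, crash_between_steps=False):
    obj = ref.target
    obj.refcount -= 1                  # step 1: decrement the count
    if crash_between_steps:
        raise ClientCrash("died after decrement, before unlinking")
    ref.target = None                  # step 2: set the reference to NULL

# Normal operation: count and reference stay consistent.
obj = SharedObject(b"data")
ref = Reference()
attach(ref, obj)
detach(ref)
clean_state = (obj.refcount, ref.target)

# Crash during attach: the count says 1, but no reference points at the object.
leaked = SharedObject(b"leaked")
lost_ref = Reference()
try:
    attach(lost_ref, leaked, crash_between_steps=True)
except ClientCrash:
    pass
leak_state = (leaked.refcount, lost_ref.target)

# Crash during detach: the count says 0, but the reference is still linked.
dangling_obj = SharedObject(b"dangling")
dangling_ref = Reference()
attach(dangling_ref, dangling_obj)
try:
    detach(dangling_ref, crash_between_steps=True)
except ClientCrash:
    pass
dangling_state = (dangling_obj.refcount, dangling_ref.target is dangling_obj)
```

In both crash cases the count and the reference disagree, and nothing in shared memory records which of the two steps actually completed.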

For example, if we increase the reference count but fail to set the reference due to a crash, this could lead to a memory leak. A simple solution is to use a lock to ensure that the modification of the reference count is idempotent, and to log this change for recovery purposes. Unfortunately, this method is effective only in scenarios where all clients fail at the same time. In situations of partial failure, where a client may crash after acquiring a lock, this could lead to further complications. To address this, we have shifted from using lock operations in our original algorithm to a non-blocking update process using a distributed vector clock. This adjustment enables us to maintain a globally consistent timeline without the need for locking mechanisms. The paper provides additional details on this methodology.
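The reason a lock is not enough under partial failure can be shown with a toy lock word that outlives its holder. Everything here is an assumption made for illustration (`SharedLock`, `locked_increment`, the client names), and the sketch covers only the problem, not the non-blocking algorithm from the paper.

```python
class ClientCrash(Exception):
    """Simulates a single client dying while others keep running."""

class SharedLock:
    """Hypothetical lock word stored in shared memory; it survives a client crash."""
    def __init__(self):
        self.owner = None

    def try_acquire(self, client_id):
        if self.owner is None:
            self.owner = client_id
            return True
        return False

    def release(self, client_id):
        if self.owner == client_id:
            self.owner = None

def locked_increment(lock, counter, client_id, crash_in_critical_section=False):
    """Increment a shared count under the lock; returns False if the lock is busy."""
    if not lock.try_acquire(client_id):
        return False
    if crash_in_critical_section:
        # The client dies here, so release() is never called.
        raise ClientCrash(client_id)
    counter["refcount"] += 1
    lock.release(client_id)
    return True

lock = SharedLock()
counter = {"refcount": 0}

# Client A acquires the lock and then crashes inside the critical section.
try:
    locked_increment(lock, counter, "client-a", crash_in_critical_section=True)
except ClientCrash:
    pass

# Client B is still alive, but the lock is held by a dead client.
b_succeeded = locked_increment(lock, counter, "client-b")
orphaned_owner = lock.owner
```

When every client fails together, a recovery pass can safely reset the lock word before anyone restarts. Under partial failure, the surviving client has no such quiescent point and simply cannot make progress.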

Beyond the technical details of implementing a partial failure resilient CXL-based DSM, we found that this paradigm aligns well with the evolving trend of cloud computing, which is essentially a relentless pursuit of extreme elasticity. As cloud infrastructure has progressed from IaaS to Container-as-a-Service and now to Serverless, billing granularity has become increasingly fine. This shift allows both users and providers to reach higher levels of resource utilization. However, traditional applications often fail to take full advantage of these developments due to their inherent lack of elasticity, particularly the localized nature of their memory states. Overcoming this limitation stands out as a crucial opportunity for future CXL research.

[1] Donghyun Gouk, Sangwon Lee, Miryeong Kwon, and Myoungsoo Jung. 2022. Direct Access High-Performance Memory Disaggregation with DirectCXL. In 2022 USENIX Annual Technical Conference (USENIX ATC 22). 287–294.

[2] H. Li, D. S. Berger, L. Hsu, D. Ernst, P. Zardoshti, S. Novakovic, M. Shah, S. Rajadnya, S. Lee, I. Agarwal, et al. 2023. Pond: CXL-Based Memory Pooling Systems for Cloud Platforms. In Proceedings of the 28th ACM International Conference on Architectural Support for Programming Languages and Operating Systems, Volume 2. 574–587.

[3] 2022. Compute Express Link 3.0. https://www.computeexpresslink.org/_files/ugd/0c1418_a8713008916044ae9604405d10a7773b.pdf

[4] 2022. Compute Express Link CXL 3.0 is the Exciting Building Block for Disaggregation. https://www.servethehome.com/compute-expresslink-cxl-3-0-is-the-exciting-building-block-for-disaggregation/

[5] M. Zhang, T. Ma, J. Hua, Z. Liu, K. Chen, N. Ding, F. Du, J. Jiang, T. Ma, and Y. Wu. 2023. Partial Failure Resilient Memory Management System for (CXL-based) Distributed Shared Memory. In Proceedings of the 29th Symposium on Operating Systems Principles. 658–674.

Dr. Teng Ma is a researcher at Alibaba. His research interests include Remote Direct Memory Access (RDMA), CXL (memory pooling and sharing), Kernel scheduling, Non-Volatile Memory (NVM), Out-of-core Graph Processing, and key-value store systems.

Mingxing Zhang is a tenure-track Assistant Professor of Computer Science at Tsinghua University. His research interests lie primarily in designing novel parallel and distributed systems that provide/implement just-right abstraction layers in novel ways.

Picture Credit: Teng Ma and Mingxing Zhang (except the featured image, which was generated by Midjourney).

Editor: Dong Du (Shanghai Jiao Tong University)
