By Chen Qiukai (Qiusuo)

KubeDL, short for "Kubernetes-Deep-Learning", is an open-source, Kubernetes-based AI workload management framework from Alibaba. We hope that Alibaba's experience with large-scale machine learning and with job scheduling and management can benefit the technical community. KubeDL has joined the CNCF (Cloud Native Computing Foundation) as a Sandbox project. We will continue to explore best practices in cloud-native AI scenarios to help algorithm scientists innovate better and more efficiently.

KubeDL brings HostNetwork to distributed training jobs. Computing nodes can communicate with each other over the host network to improve network performance, and jobs can adapt to the network environment of new high-performance data center architectures such as RDMA/SCC. KubeDL also provides solutions to problems introduced by the HostNetwork mode, such as making peers aware of new ports after a failover.

GitHub Repository: https://github.com/kubedl-io/kubedl

Introduction to KubeDL: https://kubedl.io/model/intro/

The Kubernetes native container network model defines a series of "Pod-Pod" communication rules that do not rely on NAT. VxLAN-based overlay networks (such as the classic Flannel) implement this model well and solve many pain points of network management in large-scale container orchestration systems, including:

• Smooth Migration of Pods: The overlay network is a virtual Layer 2 network built on top of the physical network, so a Pod IP is not bound to any node. When a node goes down or another hardware exception occurs, the corresponding service Pod can be restarted on another node with the same IP address. As long as the underlying physical network stays connected, the availability of the service is not affected. In large-scale distributed machine learning training, KubeDL's failover of computing nodes also relies on the premise that "Pods may drift, but the Service is fixed";

• The Scale of Network Nodes: Classic address resolution usually relies on ARP broadcasts to learn the mapping between the IP and MAC addresses of adjacent nodes. However, when the number of nodes is large, broadcasts can cause an ARP storm and network congestion. An overlay network based on tunneling only needs to know the MAC addresses of a few VTEP nodes to forward packets, which reduces the pressure on the network;

• Tenant Network Isolation: The extensibility of Kubernetes network plug-ins, combined with the design of the VxLAN protocol, makes it easy to subdivide the virtual network and isolate the networks of different tenants from each other;

These are all benefits of the virtual container network, but the cost of virtualization is a loss of network performance. The Pod and the host network are connected through a pair of veth virtual devices so that their network namespaces stay isolated from each other. Every data packet sent "Pod-Pod" has to go through encapsulation, routing, Ethernet transmission, routing, and decapsulation before it reaches the Pod at the opposite end. This slows down the network and also puts more processing pressure on the host kernel network stack, which increases the load on the host.
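To make the per-packet cost concrete, here is a small worked calculation of the space VxLAN encapsulation takes out of each packet. It assumes VxLAN over IPv4 with standard header sizes and a 1500-byte physical MTU; the function names are illustrative only, and the calculation covers header overhead alone, not the CPU cost of encapsulating and routing.

```python
# MTU budget consumed by VxLAN encapsulation over IPv4, in bytes.
# Assumption: no outer VLAN tag and no IP options.
OUTER_IPV4_HEADER = 20
OUTER_UDP_HEADER = 8
VXLAN_HEADER = 8
INNER_ETHERNET_HEADER = 14


def vxlan_mtu_overhead() -> int:
    """Bytes of each physical-network packet spent on encapsulation."""
    return (OUTER_IPV4_HEADER + OUTER_UDP_HEADER
            + VXLAN_HEADER + INNER_ETHERNET_HEADER)


def inner_mtu(physical_mtu: int) -> int:
    """Largest Pod IP packet that still fits into one physical packet."""
    return physical_mtu - vxlan_mtu_overhead()


if __name__ == "__main__":
    print("overhead per packet:", vxlan_mtu_overhead(), "bytes")
    print("usable Pod MTU on a 1500-byte link:", inner_mtu(1500), "bytes")
```

With HostNetwork, Pods send packets directly on the physical interface, so this encapsulation budget is not spent.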

With the rise of distributed training modes such as multi-modal model training and large-scale dense parameter model training, and with the explosion in dataset scale and feature parameters, network communication has become the bottleneck of distributed training efficiency. The most direct way to optimize network performance is to communicate over HostNetwork, which eliminates the overhead of container network virtualization. As RDMA (RoCE), Nvidia GPU Direct, and other technologies mature, these new high-performance network technologies are being applied in large-scale commercial production environments to improve the efficiency of model training. By bypassing the kernel network stack and reading data directly with zero-copy, they make full use of network bandwidth. Efficiency Is Money! The native communication primitives of these high-performance networks, such as RDMA_CM, also depend on the host network and cannot communicate directly over the Pod virtual network.

KubeDL extends the communication model of host networks based on standard container network communication to support distributed training. It also solves common problems in distributed training, such as port conflicts and mutual awareness of new ports after failover, to realize high-performance networks.

In the standard container network communication model, different workload roles such as Master/Worker/PS discover each other through headless Services. Pods communicate with each other through constant domain names, and CoreDNS resolves each domain name to a Pod IP. Since Pods can drift while the Service and its domain names stay constant, failover works well even if some Pods fail at runtime: after an abnormal Pod is pulled up again, it reconnects with the other Pods.
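The idea that "the domain name is constant while the Pod IP may change" can be sketched as a client that resolves the name again before every reconnect. This is only an illustration: the hostname `mnist-ps-0.kubedl.svc`, port `2222`, and the function names are assumptions, not KubeDL or TensorFlow APIs. The resolver is injectable so the behavior can be shown without a real cluster.

```python
import socket
from typing import Callable, List


def resolve_ipv4(hostname: str, port: int) -> List[str]:
    """Return the IPv4 addresses the name currently resolves to."""
    infos = socket.getaddrinfo(hostname, port,
                               family=socket.AF_INET,
                               type=socket.SOCK_STREAM)
    return sorted({info[4][0] for info in infos})


def peer_endpoint(hostname: str, port: int,
                  resolver: Callable[[str, int], List[str]] = resolve_ipv4) -> str:
    """Resolve the constant domain name again before each (re)connect."""
    addresses = resolver(hostname, port)
    if not addresses:
        raise LookupError(f"{hostname} has no address yet")
    return f"{addresses[0]}:{port}"


if __name__ == "__main__":
    # Simulated DNS records: the Pod IP changes after a failover,
    # while the name used by the peers stays the same.
    records = {"mnist-ps-0.kubedl.svc": ["10.0.0.5"]}
    fake_dns = lambda host, port: records[host]
    print(peer_endpoint("mnist-ps-0.kubedl.svc", 2222, fake_dns))
    records["mnist-ps-0.kubedl.svc"] = ["10.0.1.9"]  # Pod rebuilt elsewhere
    print(peer_endpoint("mnist-ps-0.kubedl.svc", 2222, fake_dns))
```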

    apiVersion: training.kubedl.io/v1alpha1
    kind: "TFJob"
    metadata:
      name: "mnist"
      namespace: kubedl
    spec:
      cleanPodPolicy: None
      tfReplicaSpecs:
        PS:
          replicas: 2
          restartPolicy: Never
          template:
            spec:
              containers:
                - name: tensorflow
                  image: kubedl/tf-mnist-with-summaries:1.0
                  command:
                    - "python"
                    - "/var/tf_mnist/mnist_with_summaries.py"
                    - "--log_dir=/train/logs"
                    - "--learning_rate=0.01"
                    - "--batch_size=150"
                  volumeMounts:
                    - mountPath: "/train"
                      name: "training"
                  resources:
                    limits:
                      cpu: 2048m
                      memory: 2Gi
                    requests:
                      cpu: 1024m
                      memory: 1Gi
              volumes:
                - name: "training"
                  hostPath:
                    path: /tmp/data
                    type: DirectoryOrCreate
        Worker:
          replicas: 3
          restartPolicy: ExitCode
          template:
            spec:
              containers:
                - name: tensorflow
                  image: kubedl/tf-mnist-with-summaries:1.0
                  command:
                    - "python"
                    - "/var/tf_mnist/mnist_with_summaries.py"
                    - "--log_dir=/train/logs"
                    - "--learning_rate=0.01"
                    - "--batch_size=150"
                  volumeMounts:
                    - mountPath: "/train"
                      name: "training"
                  resources:
                    limits:
                      cpu: 2048m
                      memory: 2Gi
                    requests:
                      cpu: 1024m
                      memory: 1Gi
              volumes:
                - name: "training"
                  hostPath:
                    path: /tmp/data
                    type: DirectoryOrCreate

Take a TensorFlow distributed training job with a classic PS-Worker architecture as an example. The Worker is responsible for calculating the gradients of parameters, and the PS is responsible for aggregating, updating, and broadcasting parameters. Therefore, each PS may establish connections and communicate with all Workers, and vice versa.

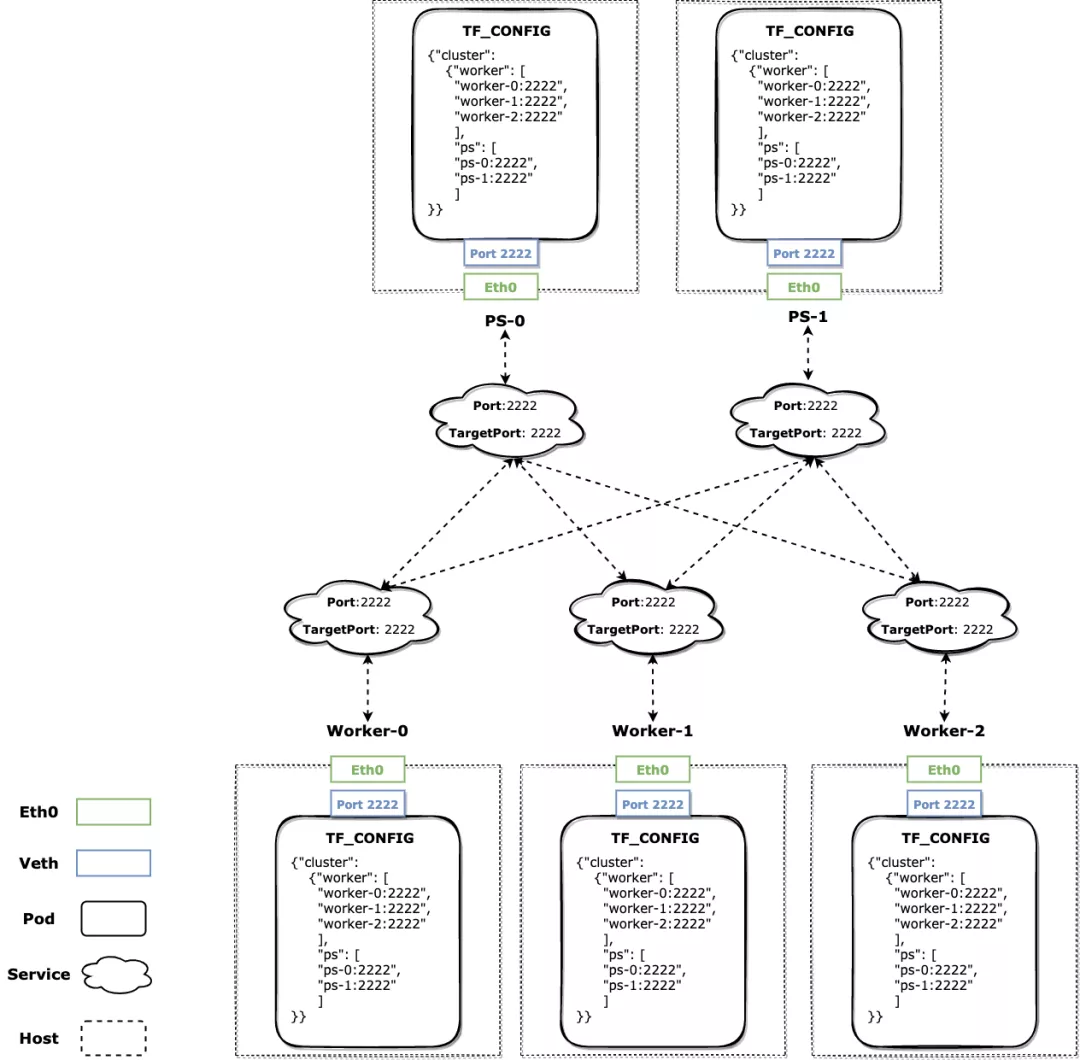

In the TensorFlow framework, such a job topology is described by a TF Cluster Spec structure. Each Role (PS or Worker) instance carries an Index that identifies it. The service address of any other Role instance can be looked up through Role + Index in order to establish a connection and start communicating. In the standard container network mode, if the user submits the TFJob shown above, KubeDL generates a TF Cluster Spec and passes it to the framework as environment variables. At the same time, a Headless Service is prepared for each Role instance, and its Endpoint domain name is the service address in the corresponding TF Cluster Spec. Each Pod has an independent Linux Network Namespace, so the port address spaces of Pods are isolated from each other. Therefore, Pods can use the same container port even when they are scheduled to the same node.
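As a rough illustration of what such a Cluster Spec looks like for the `mnist` job above, the sketch below builds a `TF_CONFIG` value in the JSON layout that TensorFlow reads (`cluster` plus `task`). The domain name pattern `<job>-<role>-<index>.<namespace>.svc` and the port `2222` are assumptions made for this example and may differ from what KubeDL actually generates.

```python
import json
from typing import Dict, List

DEFAULT_PORT = 2222  # assumed constant container port


def build_cluster_spec(job: str, namespace: str,
                       replicas: Dict[str, int],
                       port: int = DEFAULT_PORT) -> Dict[str, List[str]]:
    """One constant service address per Role + Index."""
    return {
        role.lower(): [
            f"{job}-{role.lower()}-{index}.{namespace}.svc:{port}"
            for index in range(count)
        ]
        for role, count in replicas.items()
    }


def build_tf_config(cluster: Dict[str, List[str]], role: str, index: int) -> str:
    """Value of the TF_CONFIG environment variable for one Pod."""
    return json.dumps({
        "cluster": cluster,
        "task": {"type": role.lower(), "index": index},
    })


if __name__ == "__main__":
    cluster = build_cluster_spec("mnist", "kubedl", {"PS": 2, "Worker": 3})
    print(build_tf_config(cluster, "Worker", 1))
```

Every Pod receives the same `cluster` section and differs only in its `task` entry, which is how an instance knows its own Role and Index.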

At this point, instances of different roles can start distributed training and communicate through the native mode of TensorFlow.

The benefits of the standard container network are obvious. Simple, intuitive network settings and failover-friendly fault tolerance allow this solution to meet the needs of most scenarios. But what about scenarios that demand high-performance networking? For these, KubeDL provides a host network solution.

Following the example above, enabling the host network is simple: add one annotation to the TFJob. The rest of the job configuration does not need to change, as shown below:

    apiVersion: training.kubedl.io/v1alpha1
    kind: "TFJob"
    metadata:
      name: "mnist"
      namespace: kubedl
      annotations:
        kubedl.io/network-mode: host
    spec:
      cleanPodPolicy: None
      tfReplicaSpecs:
        PS:
          ...
        Worker:
          ...

When KubeDL finds that the job declares the host network mode, it completes the network connection settings through the following steps:

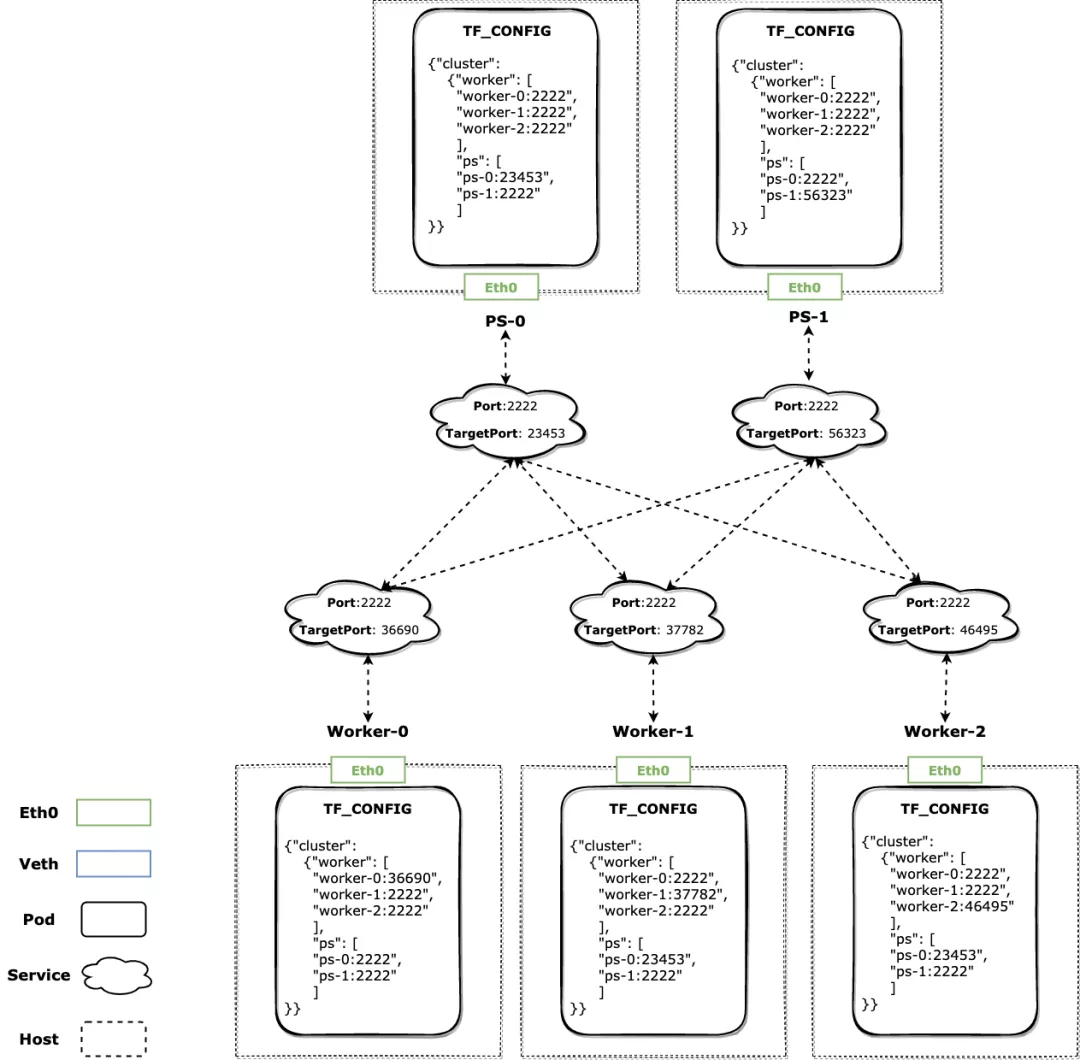

• When creating Pods, KubeDL does not use a fixed port. Instead, it randomly selects a host port within a certain port range, sets the exposed container port to that number, and passes it to the subsequent control flow through the context;

• HostNetwork is enabled for the Pods, and the DNS resolution policy is set to Host first;

• A Headless Service is no longer created. Instead, KubeDL creates a normal traffic-forwarding Service whose exposed port is the original constant value and whose target port is the real port of the Pod;

• In the generated TF Cluster Spec, the local address of the current Role + Index uses the real host port, while the addresses and ports of other Role instances stay constant, so traffic is forwarded correctly through the Service no matter how the other Pods drift;

• When a failover occurs, KubeDL selects a new port for the rebuilt Pod, and the newly started Pod obtains its new local address and port through TF_CONFIG. At the same time, KubeDL ensures that the target port of the corresponding Service is updated, so that other roles connected to it can continue to communicate.
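The port handling in the steps above can be sketched as follows. This is a simplified model, not KubeDL's controller code: the port range `20000-30000`, the constant Service port `2222`, and all names (`ServicePort`, `pick_host_port`, `on_pod_created`, `on_failover`) are hypothetical and chosen only for illustration.

```python
import random
from dataclasses import dataclass

PORT_RANGE = (20000, 30000)  # hypothetical range for random host ports
SERVICE_PORT = 2222          # assumed constant port exposed by the Service


@dataclass(frozen=True)
class ServicePort:
    port: int         # constant port that peers connect to
    target_port: int  # real host port the Pod listens on


def pick_host_port(rng: random.Random) -> int:
    """Randomly select a host port within the configured range."""
    low, high = PORT_RANGE
    return rng.randint(low, high)


def on_pod_created(rng: random.Random) -> ServicePort:
    """Pod creation: random host port, exposed through a constant port."""
    return ServicePort(port=SERVICE_PORT, target_port=pick_host_port(rng))


def on_failover(service: ServicePort, rng: random.Random) -> ServicePort:
    """Failover: re-select the host port, keep the exposed port unchanged."""
    return ServicePort(port=service.port, target_port=pick_host_port(rng))


if __name__ == "__main__":
    rng = random.Random()
    service = on_pod_created(rng)
    print("initial mapping:", service)
    print("after failover: ", on_failover(service, rng))
```

Peers only ever see `port`, so a change of `target_port` after a failover does not require any change on their side.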

With that, a host network built according to the topology of the training job is ready for use. Compared with the standard container network, all Pods now share the host's Network Namespace and therefore its port space. Communication between Pods has changed from resolving a domain name to a Pod IP and connecting to it directly, to forwarding traffic through a Service. The TF Cluster Spec has changed, but the native TensorFlow communication mode has not: the current Pod listens directly on the local port it is given, while the addresses of the other Pods appear constant. The domain name and the exposed port of each Service never change. Only the target port may change on failover, and KubeDL handles this transparently.
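A minimal sketch of how one Pod's view of the Cluster Spec differs in host network mode: only the Pod's own entry carries the real host port, and every other entry keeps its constant Service address. The addresses, the port numbers, and the function name are assumptions for illustration; in particular, keeping the hostname and swapping only the port of the local entry is an assumption about the layout, not confirmed KubeDL behavior.

```python
from typing import Dict, List


def host_mode_cluster_spec(service_addresses: Dict[str, List[str]],
                           local_role: str, local_index: int,
                           local_host_port: int) -> Dict[str, List[str]]:
    """Cluster Spec as seen by one Pod when the host network is enabled."""
    # Copy so that the constant Service addresses are left untouched.
    cluster = {role: list(addrs) for role, addrs in service_addresses.items()}
    host, _constant_port = cluster[local_role][local_index].rsplit(":", 1)
    # Only the local entry uses the randomly selected host port.
    cluster[local_role][local_index] = f"{host}:{local_host_port}"
    return cluster


if __name__ == "__main__":
    services = {
        "ps": ["mnist-ps-0.kubedl.svc:2222"],
        "worker": ["mnist-worker-0.kubedl.svc:2222",
                   "mnist-worker-1.kubedl.svc:2222"],
    }
    # View of worker 1, which was assigned host port 23456.
    print(host_mode_cluster_spec(services, "worker", 1, 23456))
```

After a failover, calling the function again with the newly selected port yields the rebuilt Pod's view, while the views of all other Pods stay exactly the same.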

We use TensorFlow as the host network example because the complexity of its Cluster Spec makes it the most representative. However, KubeDL's other built-in workloads (such as PyTorch and XGBoost) also implement the network topology settings for the host network mode, each according to the behavior of its framework.

KubeDL implements a communication mode based on the native host network by extending the existing standard container network communication mode of distributed training jobs. Besides improving network performance in common training scenarios, it adapts to high-performance network architectures such as RDMA/SCC and improves the operating efficiency of distributed training jobs. This communication mode has been widely used in Alibaba's internal production clusters. For example, the latest AliceMind super-large model released by DAMO Academy at the Apsara Conference was trained in high-performance computing clusters through the KubeDL host network + RDMA. We hope more developers will participate in building the KubeDL community and optimize the scheduling and running efficiency of deep learning workloads together!

Visit the KubeDL page to learn more about the project.
