USENIX Annual Technical Conference (USENIX ATC) is a top-tier conference in the field of computer systems and is on the list of Class A international conferences recommended by the China Computer Federation (CCF). Of the 341 papers submitted to this conference, 64 were accepted, an acceptance rate of 18.8%.

The Alibaba Cloud Serverless Team was the first to propose a decentralized fast image distribution technology in the FaaS scenario. The team's paper was accepted at USENIX ATC'21. The following explains the core contents of this paper, especially the end-to-end latency during function cold start for Custom Container Runtime provided by Alibaba Cloud.

For information related to the paper, please see this link.

Serverless Computing (FaaS) is a new cloud computing paradigm that lets customers focus on the code that implements their business logic. Virtualization of the underlying system, resource management, and auto scaling are handled by the cloud service provider. Serverless Computing supports the container ecosystem, which unlocks a variety of business scenarios. However, because FaaS involves complex images, large numbers of containers, and highly dynamic workloads, many industry-leading products and technologies cannot be applied to a FaaS platform directly. As a result, efficient container distribution remains a challenge on FaaS platforms.

This paper designs and proposes FaaSNet, a highly scalable, lightweight system middleware. It distributes containers using an image acceleration format and targets large-scale container image startup (function cold start) under burst traffic in FaaS. The core component of FaaSNet is the Function Tree (FT), a decentralized, self-balancing binary tree topology in which all nodes are equivalent.

FaaSNet is integrated into Function Compute. The experimental results show that, compared with native Function Compute (FC), FaaSNet starts containers 13.4 times as fast. When a burst of requests destabilizes end-to-end latency, FaaSNet takes 75.2% less time than FC to restore end-to-end latency to a normal level.

FC has supported custom container images since September 2020. AWS Lambda released container image support in December 2020, indicating that FaaS is embracing the container ecosystem. In addition, FC released image acceleration in February 2021. Together, these two FC features unlock more FaaS application scenarios and allow users to migrate their container business logic to FC seamlessly, with GB-level images starting in seconds.

When the Function Compute backend encounters a large number of requests that cause excessive cold starts, bandwidth can still come under great pressure, even with the help of the container registry. Multiple machines pull image data from the same container registry at the same time, which causes bandwidth bottlenecks or throttling in the container image service, so pulling and downloading image data takes longer (even with image acceleration). A simple solution is to increase the bandwidth of the Function Compute backend registry. Unfortunately, this does not address the root cause and introduces additional system overhead.

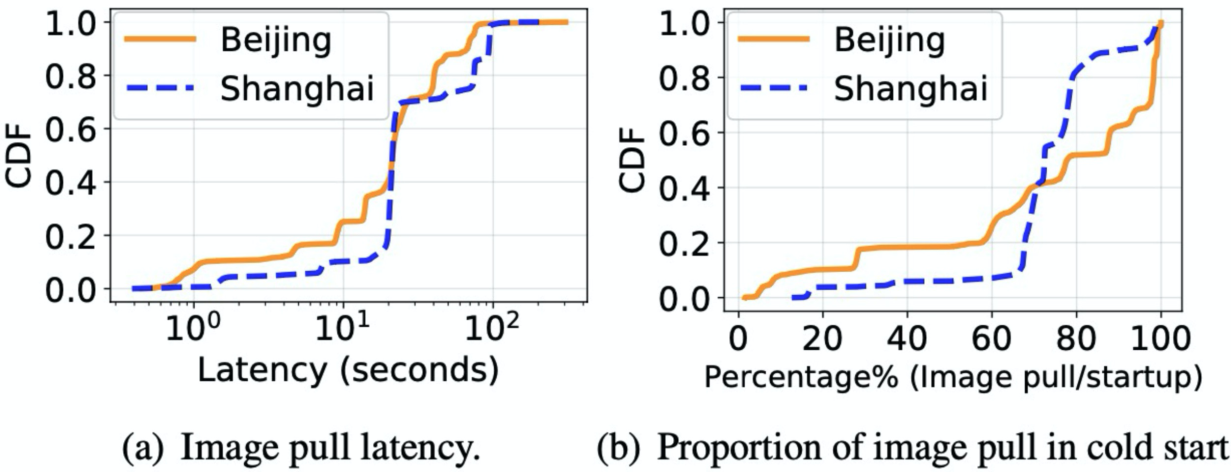

First, we analyzed the online data of two regions (Beijing and Shanghai):

Workload analysis shows that most of the time for cold start of functions is spent acquiring container image data. Therefore, optimizing this delay can improve the cold start performance of functions significantly.

According to the online O&M history, a large user may pull up 4,000 function images at once. Each image is 1.8 GB before decompression and 3-4 GB after. A throttling alarm for the container service is received immediately after such a heavy request arrives, which prolongs the latency of some requests. When the situation gets worse, container startup failures may also be reported. These problems need to be solved urgently.
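To get a feel for the scale of this burst, the figures above can be turned into a rough upper bound. The sketch below is back-of-the-envelope arithmetic only. It assumes decimal units (1 GB = 10^9 bytes), that every image is fetched in full (on-demand reading would transfer less), and a 1 Gbps link per VM, which is the FC ECS specification given later in this article. The variable names are illustrative.

```python
# Rough upper bound on registry traffic for the burst described above.
IMAGES = 4_000          # function images pulled at once (from the article)
COMPRESSED_GB = 1.8     # image size before decompression (from the article)
VM_LINK_GBPS = 1.0      # per-VM intranet bandwidth (FC ECS specification)

# Total data the registry would serve if every image were pulled in full.
total_tb = IMAGES * COMPRESSED_GB / 1_000

# Best-case time for one VM to download one full image at line rate.
per_vm_seconds = COMPRESSED_GB * 8 / VM_LINK_GBPS

print(f"registry egress: {total_tb:.1f} TB")
print(f"per-VM download at line rate: {per_vm_seconds:.1f} s")
```

Even in this idealized case, the registry has to serve terabytes within seconds, which is why throttling kicks in as soon as the burst arrives.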

Several related technologies in academia and the industry can accelerate image distribution, such as Alibaba's DADI, Dragonfly, and Uber's open-source Kraken.

DADI provides an efficient image acceleration format for on-demand reading, and FaaSNet also uses this container acceleration format. For image distribution, DADI adopts a tree topology and organizes the networking between nodes at image-layer granularity. One layer corresponds to one tree topology, so each VM belongs to multiple logical trees. DADI P2P distribution requires root nodes that meet certain performance specifications, such as CPU and bandwidth, to fetch data from the source (back-to-source) and to act as the peer manager of the topology. The tree structure of DADI is static, and a burst of container provisioning generally does not last long. Therefore, by default, the DADI root nodes disband the topology after 20 minutes instead of maintaining it permanently.

Dragonfly is also a P2P-based distribution network for images and files. Its components are the Supernode (master node) and dfget (peer node). Similar to DADI, Dragonfly relies on several high-specification Supernodes to support the entire cluster. The central Supernode manages and maintains a fully connected topology, in which multiple dfget nodes each contribute different pieces of the same file and transmit them point-to-point to the target node. Supernode performance is therefore a potential bottleneck for cluster throughput.

In Kraken, the origin and tracker nodes act as central nodes that manage the entire network, and an agent runs on each peer node. Kraken's tracker node only manages the connections among peers in the cluster, while the data itself is transmitted directly between peers. However, Kraken also distributes container images at layer granularity, so its networking logic likewise grows into a complex fully connected mode.

The three industry-leading technologies above have several things in common. First, all three use image layers as the unit of distribution. Because the networking logic is this fine-grained, each peer node may hold multiple active data connections at the same time.

Second, all three rely on a central node to manage the networking logic and to coordinate the peers in the cluster. Third, the central nodes of DADI and Dragonfly also perform data back-to-source, which requires deploying several large machines to carry very high traffic in production, together with parameter tuning to reach the expected performance.

With these prerequisites in mind, we reconsidered the design against the FC ECS architecture. Each machine in the FC ECS architecture has 2 CPU cores, 4 GB of memory, and 1 Gbps of intranet bandwidth. The lifecycle of these machines is not reliable, because they may be recycled at any time.

This causes three serious problems:

With these unsolved problems in mind, we now elaborate on the FaaSNet design.

None of the three mature P2P solutions described above is function-aware, and their cluster topologies and logic are built around fully connected networks, which impose certain requirements on machine performance. These assumptions are not compatible with the FC ECS system implementation. We therefore proposed Function Tree (FT), a function-level and function-aware logical tree topology.

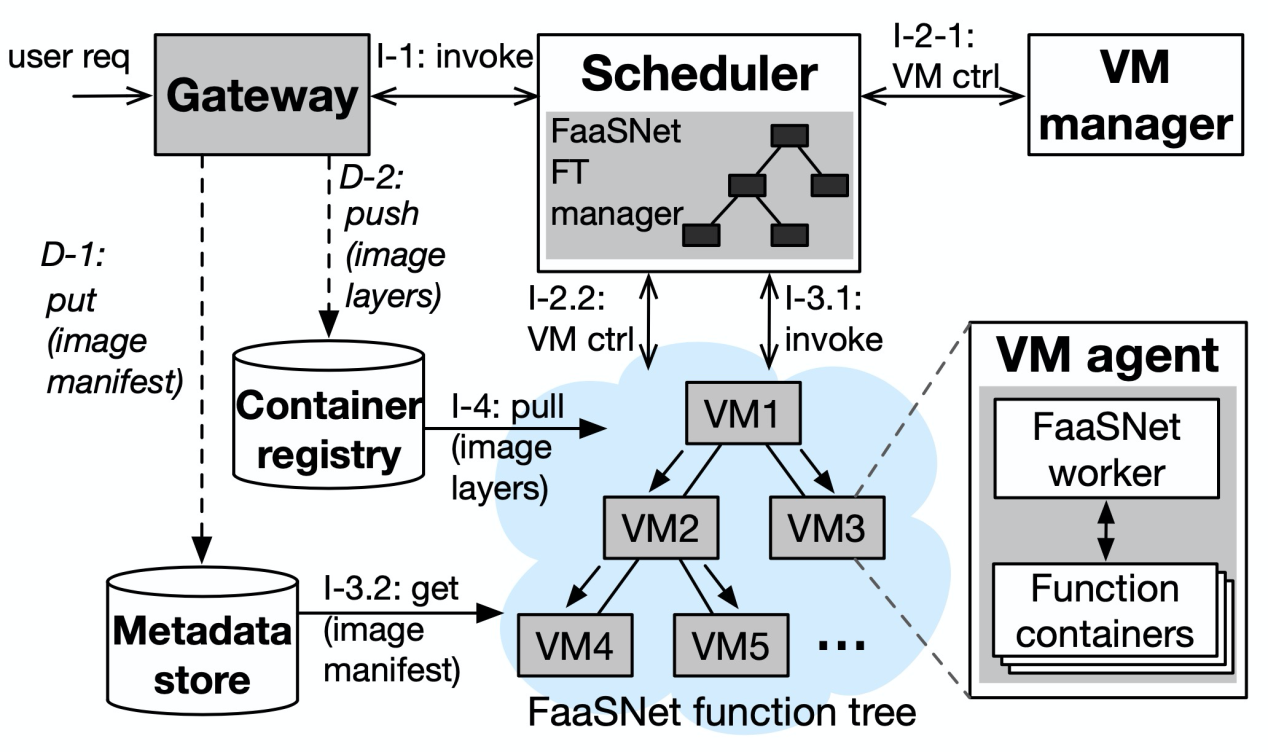

The gray part of the figure is the part of the system that FaaSNet modifies, while the white modules keep the existing FC system architecture. Note: All Function Trees of FaaSNet are managed in the FC scheduler. Each VM has a VM agent that communicates with the scheduler over gRPC to receive upstream and downstream messages. The VM agent is also responsible for fetching image data from upstream and distributing it downstream.

To solve the three problems above, we raised the topology to the function/image level, which effectively reduces the number of network connections on each VM. Additionally, we designed a tree topology based on the AVL tree. Next, we elaborate on the Function Tree design.

1) Decentralized Self-Balancing Binary Tree Topology

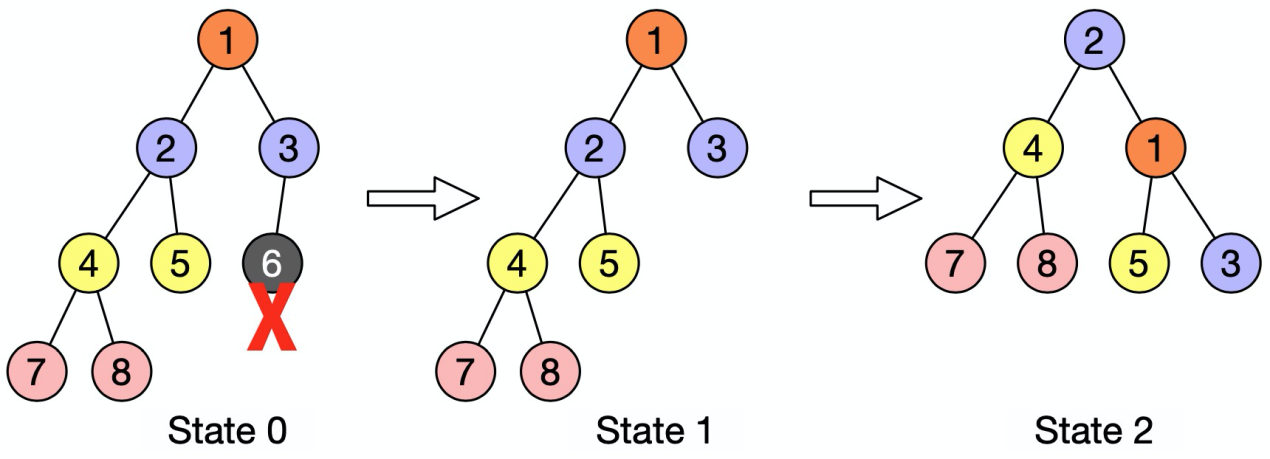

The design of FT is inspired by the AVL tree algorithm. FT currently has no concept of node weight, and all nodes are equivalent, including the root node. When a node is added to or deleted from the tree, the tree stays balanced: the absolute value of the height difference between the left and right subtrees of any node never exceeds one. After a node is added or deleted, FT adjusts the shape of the tree through left or right rotations to restore balance. In the example shown in the figure, recycling Node 6 leaves the left and right subtrees of Node 1 unbalanced, so a right rotation is required to restore balance. State 2 is the final state after the rotation, in which Node 2 becomes the new root node. Note: All nodes represent ECS instances in FC.
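The rebalancing step can be illustrated with a minimal sketch of AVL-style rotations. This is not the FaaSNet implementation. The `Node` class, the helper names, and the five-node tree are assumptions chosen to be consistent with the description above, and the exact tree in the figure may differ. For brevity the sketch only handles removal of a leaf VM and recomputes heights on demand.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    vm_id: int                      # hypothetical identifier of an ECS instance
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def height(n: Optional[Node]) -> int:
    return 0 if n is None else 1 + max(height(n.left), height(n.right))


def balance(n: Node) -> int:
    # Positive: left subtree is taller. Negative: right subtree is taller.
    return height(n.left) - height(n.right)


def rotate_right(n: Node) -> Node:
    pivot = n.left                  # the left child becomes the new subtree root
    n.left, pivot.right = pivot.right, n
    return pivot


def rotate_left(n: Node) -> Node:
    pivot = n.right                 # the right child becomes the new subtree root
    n.right, pivot.left = pivot.left, n
    return pivot


def rebalance(n: Node) -> Node:
    if balance(n) > 1:              # left-heavy
        if balance(n.left) < 0:     # left-right case needs a double rotation
            n.left = rotate_left(n.left)
        return rotate_right(n)
    if balance(n) < -1:             # right-heavy
        if balance(n.right) > 0:    # right-left case needs a double rotation
            n.right = rotate_right(n.right)
        return rotate_left(n)
    return n


def remove_leaf(root: Optional[Node], vm_id: int) -> Optional[Node]:
    """Remove the leaf with this id and rebalance on the way back up."""
    if root is None:
        return None
    if root.vm_id == vm_id and root.left is None and root.right is None:
        return None
    root.left = remove_leaf(root.left, vm_id)
    root.right = remove_leaf(root.right, vm_id)
    return rebalance(root)


# Hypothetical State 1: Node 1 is the root, Node 2 (children 4 and 5) is its
# left child, and Node 6 is its right child.
state1 = Node(1, left=Node(2, Node(4), Node(5)), right=Node(6))
state2 = remove_leaf(state1, 6)     # Node 6 is recycled
print(state2.vm_id)                 # Node 2 is the new root after a right rotation
```

Unlike a classic AVL search tree, the nodes here carry no ordering key, so a rotation only has to preserve the balance property, not a sort order.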

In FT, all nodes are equivalent. Their main responsibilities are pulling data from the upstream node and distributing data to their two downstream child nodes. Note: In FT, we do not designate a special root node. The only difference between the root node and the other nodes is that its upstream is the source site, and the root node is not responsible for any metadata management. The next section describes how metadata is managed.

2) Overlap of Multiple FTs on Multiple Peers

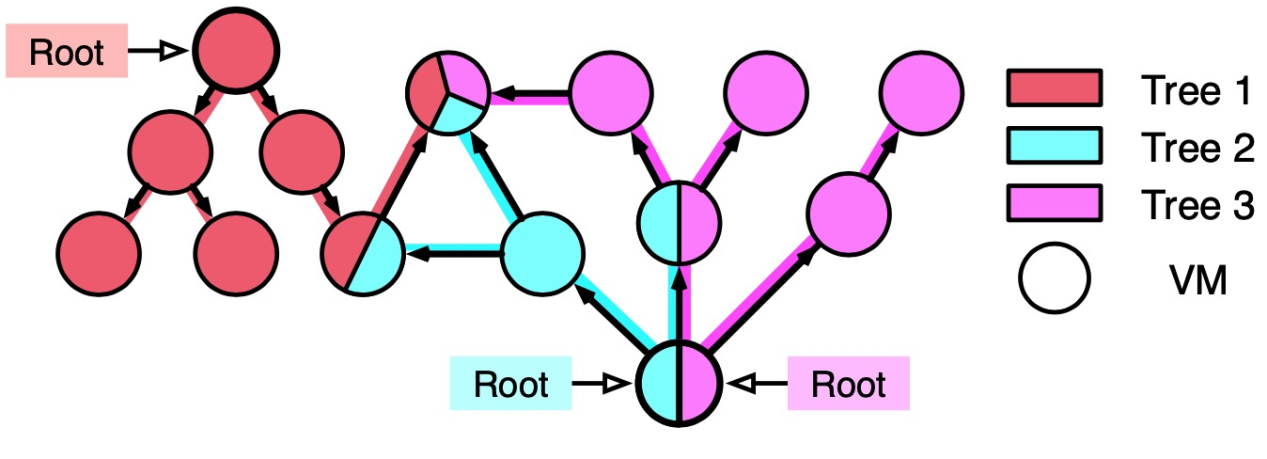

Different functions of the same user can exist on one peer node, so one peer node may belong to multiple FTs. As shown in the preceding figure, there are three FTs in the example, which belong to func 0, func 1, and func 2 respectively. Because each FT is managed independently of the others, each FT can still help every node find the correct upstream node, even when the trees overlap on the same peers.
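The overlap of independent trees can be sketched as one parent map per function. This is only an illustration of the idea. The function names, VM names, tree shapes, and the `upstream` and `trees_of` helpers are all hypothetical and do not come from the paper.

```python
from typing import Dict, List, Optional

REGISTRY = "registry"  # stand-in name for the source site

# One independent tree per function, stored as child VM -> parent VM.
# A parent of None marks the root of that function's tree.
function_trees: Dict[str, Dict[str, Optional[str]]] = {
    "func0": {"vm1": None, "vm2": "vm1", "vm3": "vm1"},
    "func1": {"vm2": None, "vm1": "vm2", "vm4": "vm2"},
    "func2": {"vm3": None, "vm4": "vm3"},
}


def upstream(func: str, vm: str) -> str:
    """Return where this VM pulls the image of this function from."""
    parent = function_trees[func][vm]
    return REGISTRY if parent is None else parent


def trees_of(vm: str) -> List[str]:
    """List the functions whose trees contain this VM."""
    return sorted(f for f, tree in function_trees.items() if vm in tree)


print(trees_of("vm2"))            # vm2 sits in two trees
print(upstream("func0", "vm2"))   # a child in func0's tree
print(upstream("func1", "vm2"))   # the root in func1's tree
```

Because the lookup is keyed by function first, the same VM can be a root in one tree and a child in another without any conflict.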

In addition, to make the system function-aware, we cap the number of functions that a machine can hold, which solves the problem of an uncontrollable amount of data being pulled for multiple functions.
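A placement check for such a cap could look like the following minimal sketch. The limit value and the `can_place` helper are hypothetical, since the article does not state the actual ceiling or how the scheduler enforces it.

```python
from typing import Set

MAX_FUNCTIONS_PER_VM = 20  # hypothetical value; the article does not give the limit


def can_place(vm_functions: Set[str], func: str,
              limit: int = MAX_FUNCTIONS_PER_VM) -> bool:
    """A VM may host a function it already holds, or a new one if under the cap."""
    return func in vm_functions or len(vm_functions) < limit


hosted = {"func0", "func1"}
print(can_place(hosted, "func2", limit=2))  # cap reached, new function rejected
print(can_place(hosted, "func1", limit=2))  # already hosted, still allowed
```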

In this experiment, we select an image for the DAS application that uses Python as the base image. The container image is larger than 700 MB before decompression and has 29 layers. We interpret only the stress testing part here. Please refer to the original paper for the complete test results. As baselines, we compare against Alibaba's DADI, Dragonfly, and Uber's open-source Kraken framework.

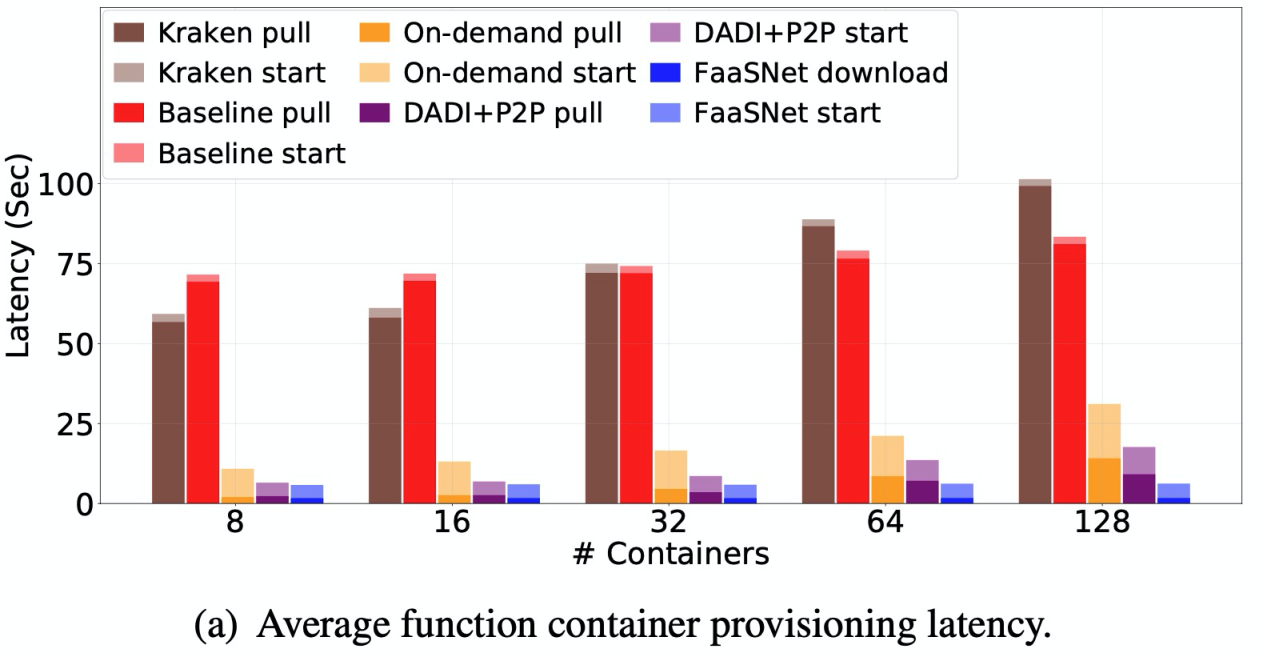

The latency recorded in the stress testing part is the user-perceived average end-to-end cold start latency. Compared with traditional FC, the image acceleration feature significantly reduces end-to-end latency. However, as concurrency increases, more servers pull data from the central container registry at the same time, and the resulting competition for network bandwidth increases end-to-end latency (orange and purple bars). In FaaSNet, by contrast, the decentralized design means that only one root node pulls data from the source station and distributes it downward, so the pressure on the source station does not grow with concurrency. The architecture is therefore highly scalable, and the average latency does not rise as the concurrency pressure increases.
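The scaling argument can be made concrete with a toy model. The sketch below only counts connections to the source and the number of hops in a balanced binary tree. It does not model bandwidth, chunking, or on-demand reading, and the concurrency levels are hypothetical rather than taken from the experiment.

```python
def registry_pulls_centralized(n_vms: int) -> int:
    # Every VM pulls directly from the central registry.
    return n_vms


def registry_pulls_tree(n_vms: int) -> int:
    # Only the root of the function's tree pulls from the source.
    return 1 if n_vms > 0 else 0


def min_tree_height(n_vms: int) -> int:
    # Minimum height (in levels) of a binary tree with n nodes:
    # floor(log2(n)) + 1, which equals n.bit_length() for n >= 1.
    return n_vms.bit_length()


for n in (8, 64, 128):  # hypothetical concurrency levels
    print(n, registry_pulls_centralized(n), registry_pulls_tree(n), min_tree_height(n))
```

The load on the source stays constant while the relay depth grows only logarithmically, and AVL-style balancing keeps the real height within a small constant factor of this minimum.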

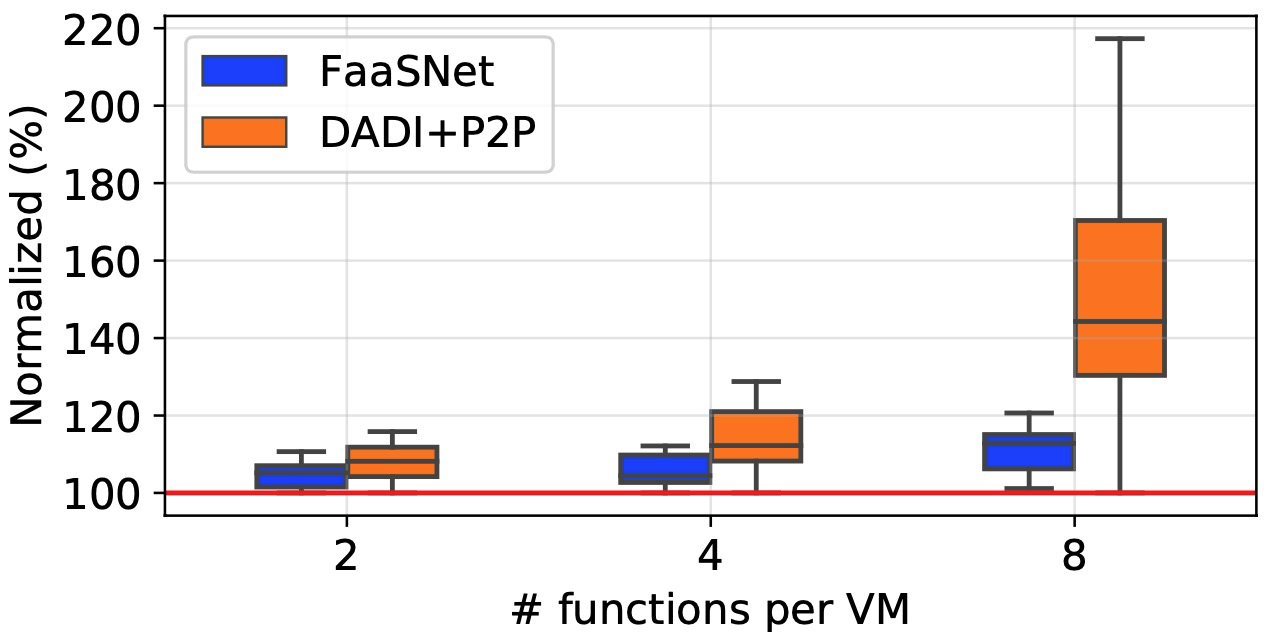

At the end of the stress testing, we explored how well multiple functions with different images perform on the same VM. Here, we compare FaaSNet with FC (DADI + P2P), that is, FC with image acceleration enabled and DADI P2P deployed.

The vertical axis in the preceding figure shows the normalized end-to-end latency. As the number of functions with different images increases, the number of layers increases as well. Because each ECS instance in FC has a small specification, the bandwidth pressure on each VM becomes excessive. DADI P2P shows performance degradation, and its end-to-end latency grows to more than 200%. In contrast, FaaSNet establishes connections at the image level, so the number of connections is much lower than with the layer trees of DADI P2P, and good performance is maintained.
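The difference in connection counts can be estimated with a simple upper bound. The sketch below assumes that every tree is binary, so a node has at most three links per tree (one upstream and two downstream), and that functions with different images share no layers. The binary fan-out for layer-level trees is an assumption made for comparison only and is not a statement about DADI's actual topology. The 29-layer figure is the test image described earlier.

```python
LINKS_PER_TREE_NODE = 3  # 1 upstream + 2 downstream in a binary tree (assumption)


def max_links_layer_level(functions: int, layers_per_image: int) -> int:
    # One tree per image layer, so a VM joins functions * layers trees.
    return functions * layers_per_image * LINKS_PER_TREE_NODE


def max_links_function_level(functions: int) -> int:
    # One tree per function (image), regardless of the number of layers.
    return functions * LINKS_PER_TREE_NODE


for f in (1, 5, 10):  # hypothetical numbers of functions on one VM
    print(f, max_links_layer_level(f, 29), max_links_function_level(f))
```

Under these assumptions, the layer-level bound is larger by a factor equal to the number of layers per image, which is consistent with the pressure observed on small VMs.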

High scalability and fast image distribution can help FaaS service providers unlock custom container image scenarios. FaaSNet uses the lightweight, decentralized, and self-balancing Function Tree to avoid the performance bottlenecks caused by central nodes. It introduces no additional system overhead and fully reuses the existing FC system components and architecture. FaaSNet builds its network in real time and achieves function-awareness based on the dynamic nature of the workloads, without workload analysis or pre-processing.

FaaSNet targets FaaS and many cloud-native scenarios, such as Kubernetes and Alibaba SAE, to deal with burst traffic and resolve the pain points that affect user experience due to excessive cold start. This fundamentally solves the problem of slow container cold start.

With FaaSNet, Alibaba Cloud is the first cloud vendor in China to publish a paper at a top international conference on container startup acceleration technology that copes with burst traffic in Serverless scenarios. We hope this work can provide new opportunities for container-based FaaS platforms, fully opening the door to the container ecosystem and unlocking more application scenarios, such as machine learning and big data analytics.
