By Bruce Wu

This is the second article of the serverless Kubernetes framework series, focusing on Fission. For more information about the background knowledge of serverless products and about Kubeless, check out the first article of this series.

Fission is an open source serverless product developed under the leadership of Platform9, a private cloud service provider. Fission takes advantage of the flexible and powerful orchestration capabilities of Kubernetes to manage and schedule containers. This allows Fission to focus on developing the functions-as-a-service (FaaS) feature. The goal of Fission is to become an open source version of AWS Lambda. According to the Cloud Native Computing Foundation (CNCF), Fission is a serverless platform product.

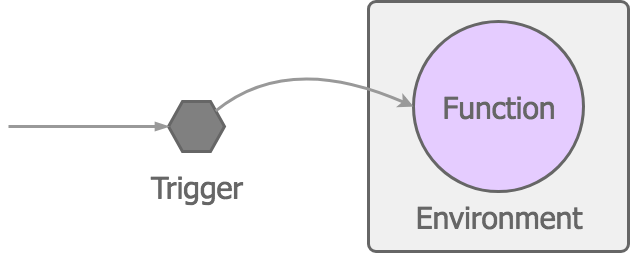

Fission has three core concepts: Function, Environment, and Trigger. Their relationships are presented in the following figure:

1. Function: the snippet that is written by using a specific language for execution.

2. Environment: the special language environment that is used to run the user function.

3. Trigger: used to associate functions and event sources. If you compare event sources to producers, and functions to executors, triggers are a bridge that connects them together.
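As a concrete illustration of the Function concept, a minimal Python source file might look like the sketch below. It assumes the convention of a module-level `main()` entry point in the Python environment; the file name `hello.py` matches the one used in the commands later in this article, and the returned text is arbitrary.

```python
# hello.py: a minimal function body (sketch).
# Assumption: the Python environment calls a module-level main()
# and uses its return value as the response body.


def main():
    return "Hello, world!\n"
```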

Fission has three key components: Controller, Router, and Executor.

1. Controller: provides operation interfaces for adding, deleting, and updating Fission resources, including functions, triggers, environments, and Kubernetes event watches. It is the main component that the Fission command line interface (CLI) interacts with.

2. Router: obtains access to the function, and implements the HTTP trigger. It is responsible for forwarding user requests and events generated by various event sources to the target function.

3. Executor: Fission has two types of executors: PoolManager and NewDeploy. These executors control the lifecycle of Fission functions.

This section describes how Fission works from the following perspectives:

1. Function executor: the basis for understanding how Fission works.

2. Pod specialization: the key to understanding how Fission builds executable functions based on the user source code.

3. Trigger: the gate for understanding various methods that trigger the execution of a Fission function.

4. Auto-scaling: the shortcut for understanding how Fission dynamically adjusts the number of function pods based on the workload.

5. Log processing: the effective measure for understanding how Fission processes various function logs.

The analysis in this article is based on Fission v0.12.0 and Kubernetes v1.13.

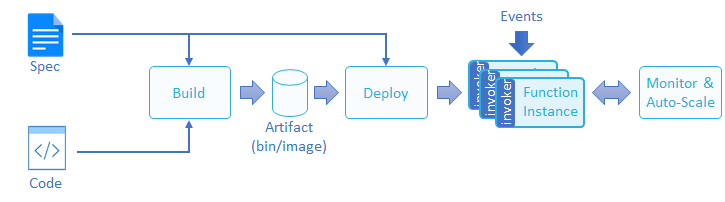

The lifecycle of a function is defined as follows by CNCF. The lifecycle here refers to the general process of function building, deployment, and running.

To understand Fission, you need to first understand how it manages the lifecycle of its functions. The function executor of Fission is a key component that Fission uses to control the lifecycle of its functions. Fission has two types of executors: PoolManager and NewDeploy. They are described separately as follows:

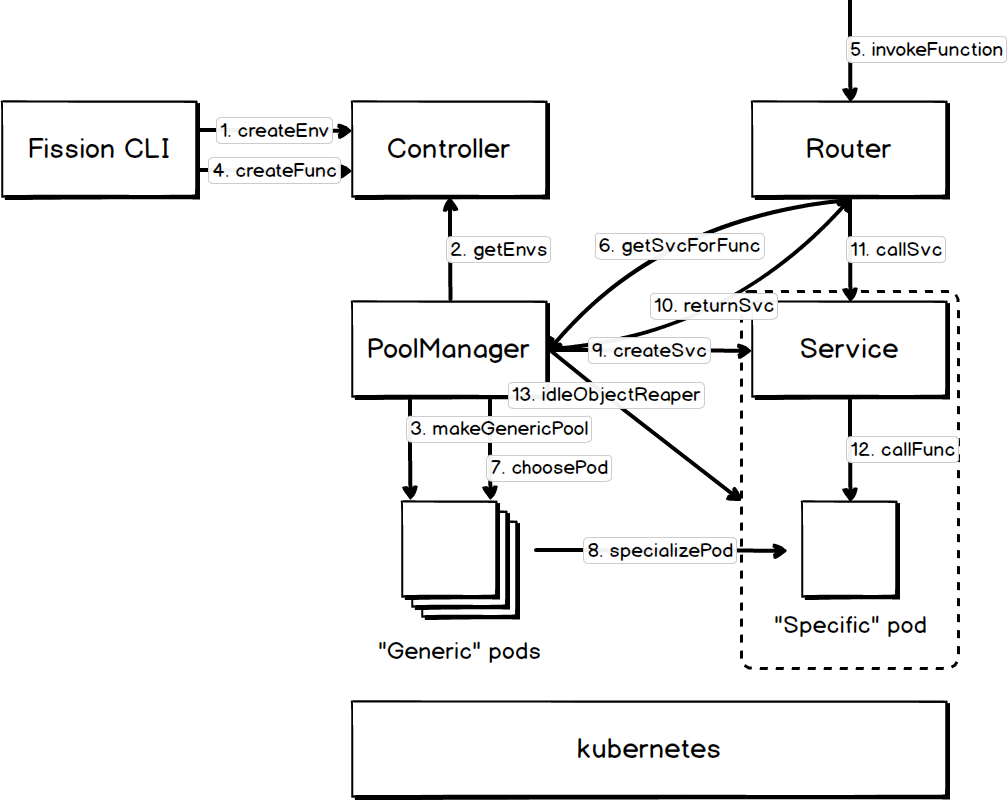

By using the pooling technology, PoolManager maintains a specified number of generic pods for each environment, and specializes the required pods when a function is triggered. This significantly reduces the cold start time of a function. In addition, PoolManager automatically clears functions that have not been accessed for a certain period of time to reduce the idling cost. The following figure shows how PoolManager works.

The lifecycle of a function is described as follows:

1. Fission CLI is used to send a request to the controller, to build the required language environment for running the function. For example, you can use the following command to create a Python runtime environment.

fission environment create --name python --image fission/python-env

2. PoolManager regularly synchronizes the environment resources list. For more information, see eagerPoolCreator.

3. PoolManager traverses the environment resources list, and creates a generic pod pool for each environment by using the deployment object. For more information, see MakeGenericPool.

4. Use Fission CLI to send a request to the controller to create the function. In this stage, the controller merely persists the source code and other information of the function, and does not actually build an executable function. For example, you can use the following command to create a function named hello. The function runs in the previously created Python runtime environment, the source code comes from hello.py, and the executor is poolmgr.

fission function create --name hello --env python --code hello.py --executortype poolmgr

5. Router receives the request to trigger the function, and loads the corresponding information of the target function.

6. Router sends a request to the executor to obtain access to the function. For more information, see GetServiceForFunction.

7. PoolManager randomly chooses a pod from the generic pod pool of the runtime environment specified for the function, to serve as the carrier that runs the function. It then modifies the labels of the pod so that the pod becomes "independent" of the deployment. For more information, see _choosePod. When K8s detects that the actual number of pods managed by the deployment object is smaller than the target number of replicas, it automatically creates a new pod. This is how the number of pods in the generic pod pool stays constant.

8. Specialize the chosen pod. For more information, see specializePod.

9. Create a ClusterIP type service for the specific pod. For more information, see createSvc.

10. Return the service information of the function to the router, which will then cache the serviceUrl to avoid sending requests to the executor too frequently.

11. Router uses the returned serviceUrl to access the function.

12. The request will finally be routed to the pod that runs the function.

13. If the function has not been accessed for a specified period of time, it will be deleted automatically, together with the pod and service of this function. For more information, see idleObjectReaper.
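The interplay between the pool, specialization, and the router cache in the steps above can be sketched as a toy in-memory model. This is an illustration only and not Fission's code: the class names, the pod naming scheme, and the URL format are hypothetical, and the sketch takes the last pod in the pool rather than a random one.

```python
import itertools


class PoolManagerSketch:
    """Toy model of a generic pod pool; not Fission's actual code."""

    def __init__(self, pool_size):
        self._ids = itertools.count(1)
        # Generic pods still owned by the (simulated) deployment.
        self.generic_pods = [self._new_pod() for _ in range(pool_size)]
        # function name -> service URL of its specialized pod.
        self.services = {}

    def _new_pod(self):
        return {"name": f"pod-{next(self._ids)}", "function": None}

    def get_service_for_function(self, function):
        # Reuse the specialized pod if the function already has one.
        if function in self.services:
            return self.services[function]
        # Step 7: take a pod out of the pool. Relabeling is modeled by
        # removing it from the list that the deployment controls.
        pod = self.generic_pods.pop()
        # The deployment controller restores the replica count.
        self.generic_pods.append(self._new_pod())
        # Step 8: specialize the pod. Step 9: expose it as a service.
        pod["function"] = function
        url = f"http://{pod['name']}.svc"  # hypothetical URL format
        self.services[function] = url
        return url

    def reap_idle(self, function):
        # Step 13: drop the pod and service of an idle function.
        self.services.pop(function, None)


class RouterSketch:
    """Toy router that caches service URLs (step 10)."""

    def __init__(self, executor):
        self.executor = executor
        self.cache = {}
        self.executor_calls = 0

    def resolve(self, function):
        if function not in self.cache:
            self.executor_calls += 1
            self.cache[function] = self.executor.get_service_for_function(function)
        return self.cache[function]
```

Calling `resolve("hello")` twice on a `RouterSketch` contacts the executor only once, and the pool size stays constant because the simulated deployment replaces the pod that was taken.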

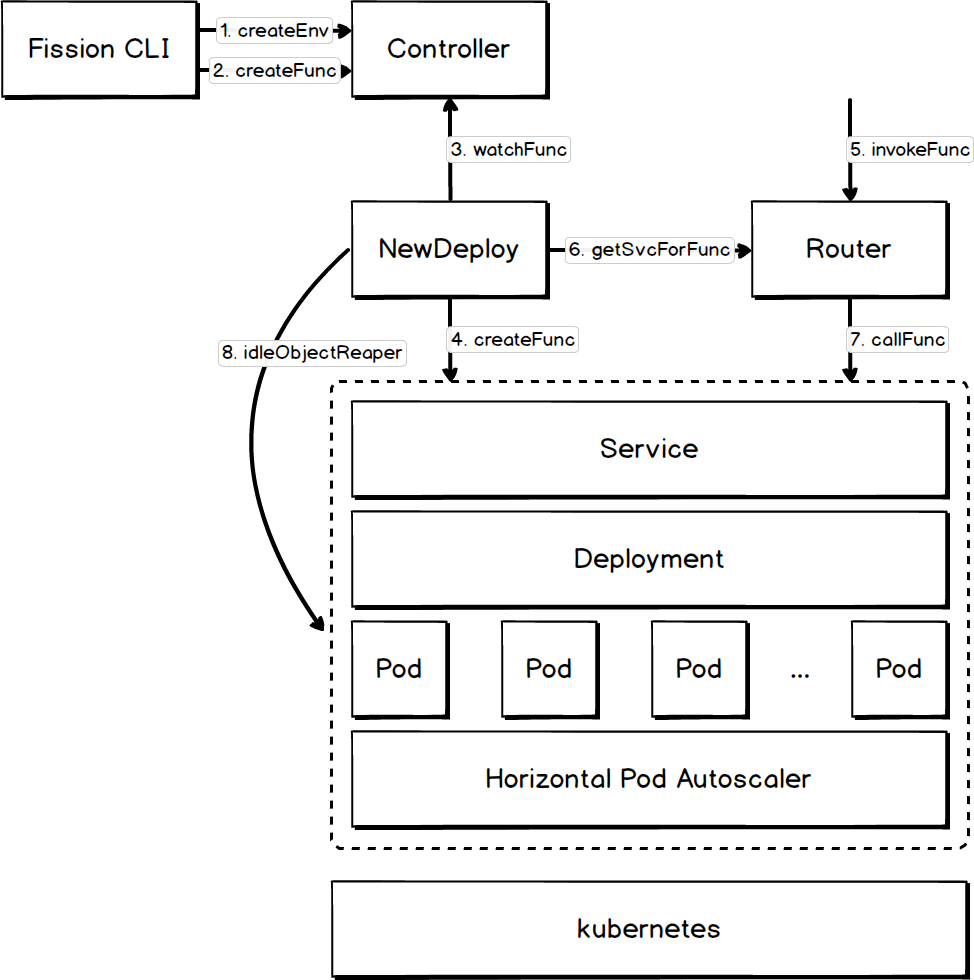

PoolManager properly balances the cold start time and the idling cost of a function, but it cannot enable the function to automatically scale up and down according to measurement metrics. The NewDeploy executor implements the auto-scaling and load balancing of pods that run the function. The following figure shows how NewDeploy works.

The lifecycle of a function is described as follows:

1. Fission CLI is used to send a request to the controller, to build the required language environment for running the function.

2. Use Fission CLI to send a request to the controller to create the function. For example, you can use the following command to create a function named hello. The function runs in the previously created Python runtime environment, the source code comes from hello.py, and the executor is newdeploy. The number of target replicas is controlled between one and three, and the target CPU usage is 50%.

fission fn create --name hello --env python --code hello.py --executortype newdeploy --minscale 1 --maxscale 3 --targetcpu 50

3. NewDeploy registers a funcController to continuously monitor ADD, UPDATE, and DELETE events of the function. For more information, see [initFuncController](https://github.com/fission/fission/blob/v0.12.0/executor/newdeploy/newdeploymgr.go#L153).

4. After detecting an ADD event of the function, NewDeploy determines, based on the value of minscale, whether it should immediately create the corresponding resources for this function.

i. When minscale is greater than 0, NewDeploy immediately creates the service, deployment, and HPA for this function. Pods are managed by deployment, and every pod that runs this function will be specialized.

ii. When minscale is less than or equal to 0, the resources will not be created until the function is actually triggered.

5. Router receives the request to trigger the function, and loads the corresponding information of the target function.

6. Router sends a request to newdeploy to obtain access to the function. If the resources required by the function have been created, directly return the access to the function. Otherwise, return the function access after the corresponding resources are created.

7. Router uses the returned serviceUrl to access the function.

8. If the function has not been accessed for a specified period of time, the number of target replicas of the function will be reduced to minScale. However, the resources such as service, deployment, and HPA are not deleted. For more information, see idleObjectReaper.
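The eager versus lazy creation decision and the idle behavior described above can be sketched as follows. This is a simplified model rather than Fission's implementation: the class and method names are hypothetical, and the sketch assumes that a lazily created function starts with one replica.

```python
class NewDeploySketch:
    """Toy model of the NewDeploy decisions; not Fission's actual code."""

    def __init__(self):
        # function name -> (minscale, maxscale) as declared by the user.
        self.specs = {}
        # function name -> simulated service/deployment/HPA state.
        self.resources = {}

    def _create_resources(self, name):
        minscale, maxscale = self.specs[name]
        # Stands in for creating the service, deployment, and HPA.
        # Assumption: at least one replica is started on creation.
        self.resources[name] = {
            "min": minscale,
            "max": maxscale,
            "replicas": max(minscale, 1),
        }

    def on_function_added(self, name, minscale, maxscale):
        # Step 4: create eagerly only when minscale is greater than 0.
        self.specs[name] = (minscale, maxscale)
        if minscale > 0:
            self._create_resources(name)

    def get_service_for_function(self, name):
        # Step 6: create the resources on first trigger if needed.
        if name not in self.resources:
            self._create_resources(name)
        return f"http://{name}.svc"  # hypothetical URL format

    def reap_idle(self, name):
        # Step 8: scale back to minscale but keep the resources.
        self.resources[name]["replicas"] = self.resources[name]["min"]
```

With `minscale` set to 0, nothing exists until the first request arrives, which trades a slower first invocation for a lower idling cost.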

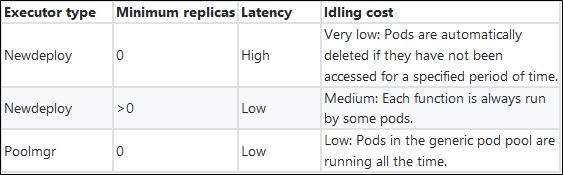

During actual usage, you may ask which executor can reduce the latency and idling cost. Characteristics of these two executors are provided in the following table.

Fission exposes the function executors to users, which increases the product usage cost. In fact, you can combine the poolmgr and newdeploy technologies. You can easily use HPA to auto-scale pods that run the functions by creating deployment objects and managing specific pods.

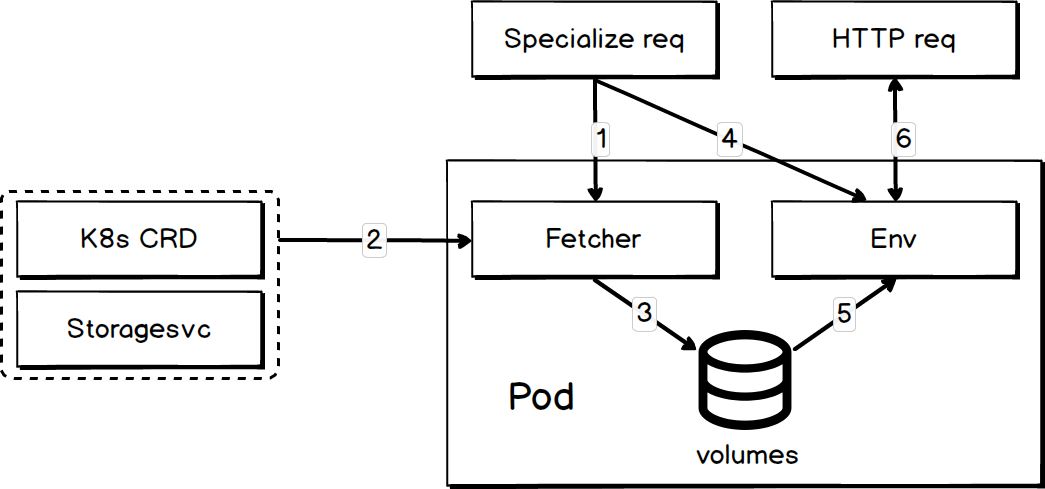

Pod specialization was referred to multiple times when we introduced the function executors. It allows Fission to convert an environment container to a function container. The core of pod specialization is to send a specialization request to a container, which will load the user function upon receiving the request. The following figure shows how it works.

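A function pod consists of the following two types of containers:

1. Fetcher: retrieves the user function and places it in a shared volume.

2. Env: the language environment container that loads the user function from the shared volume and runs it.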

The specific steps are listed as follows:

1. The Fetcher container receives a request to fetch a user function.

2. Fetcher retrieves the user function from K8s CRD or storagesvc.

3. Fetcher places the files of the function in a shared volume. If the files are compressed, Fetcher also decompresses the files.

4. The Env container receives a command to load the user function.

5. Env loads the user function that has been placed in the shared volume by Fetcher.

6. After the specialization is complete, the Env container begins to process user requests.
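The division of labor between the two containers can be imitated in a single process. In the sketch below, a temporary directory plays the role of the shared volume, `fetch` plays the Fetcher, and `specialize` plays the Env container. All names are hypothetical, and the real containers communicate over HTTP rather than through direct function calls.

```python
import importlib.util
import os
import tempfile


def fetch(source_code, shared_dir, filename="user_function.py"):
    """Fetcher role: place the function source in the shared volume."""
    path = os.path.join(shared_dir, filename)
    with open(path, "w", encoding="utf-8") as handle:
        handle.write(source_code)
    return path


def specialize(path, entrypoint="main"):
    """Env role: load the user function from the shared volume."""
    spec = importlib.util.spec_from_file_location("user_function", path)
    module = importlib.util.module_from_spec(spec)
    spec.loader.exec_module(module)
    return getattr(module, entrypoint)


def demo():
    source = "def main():\n    return 'hello from a specialized pod'\n"
    with tempfile.TemporaryDirectory() as shared_volume:
        path = fetch(source, shared_volume)
        handler = specialize(path)
        # After specialization, the container serves user requests.
        return handler()
```

Before `specialize` runs, the environment is generic and can serve any function written in that language; afterwards it is bound to one function, which is why a specialized pod leaves the generic pool.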

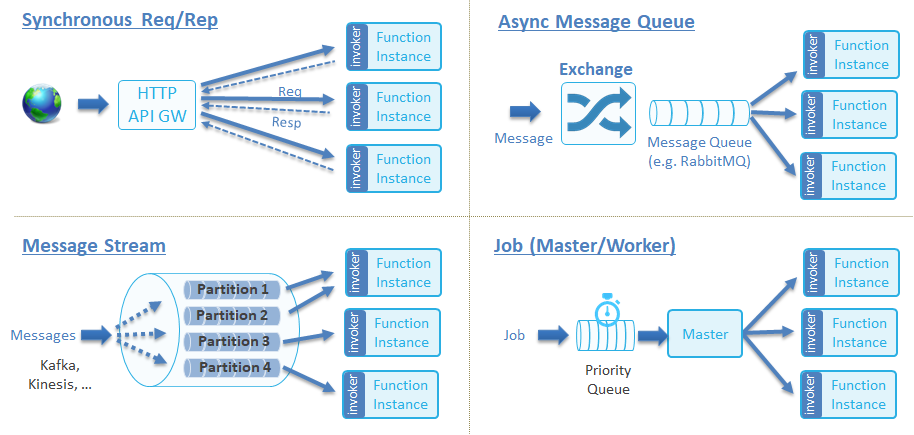

The preceding section describes the building, loading and running logic of a Fission function. This section mainly describes how to trigger the execution of a Fission function based on various events. CNCF divides function triggering methods into the following categories. For more information, see Function Invocation Types.

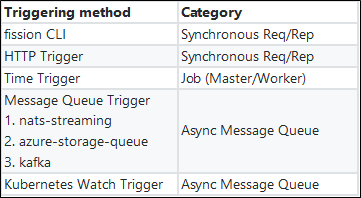

For Fission functions, the simplest triggering method is to use Fission CLI. It also supports various other triggers. The currently supported triggering methods of Fission functions and their triggering types are presented in the following table.

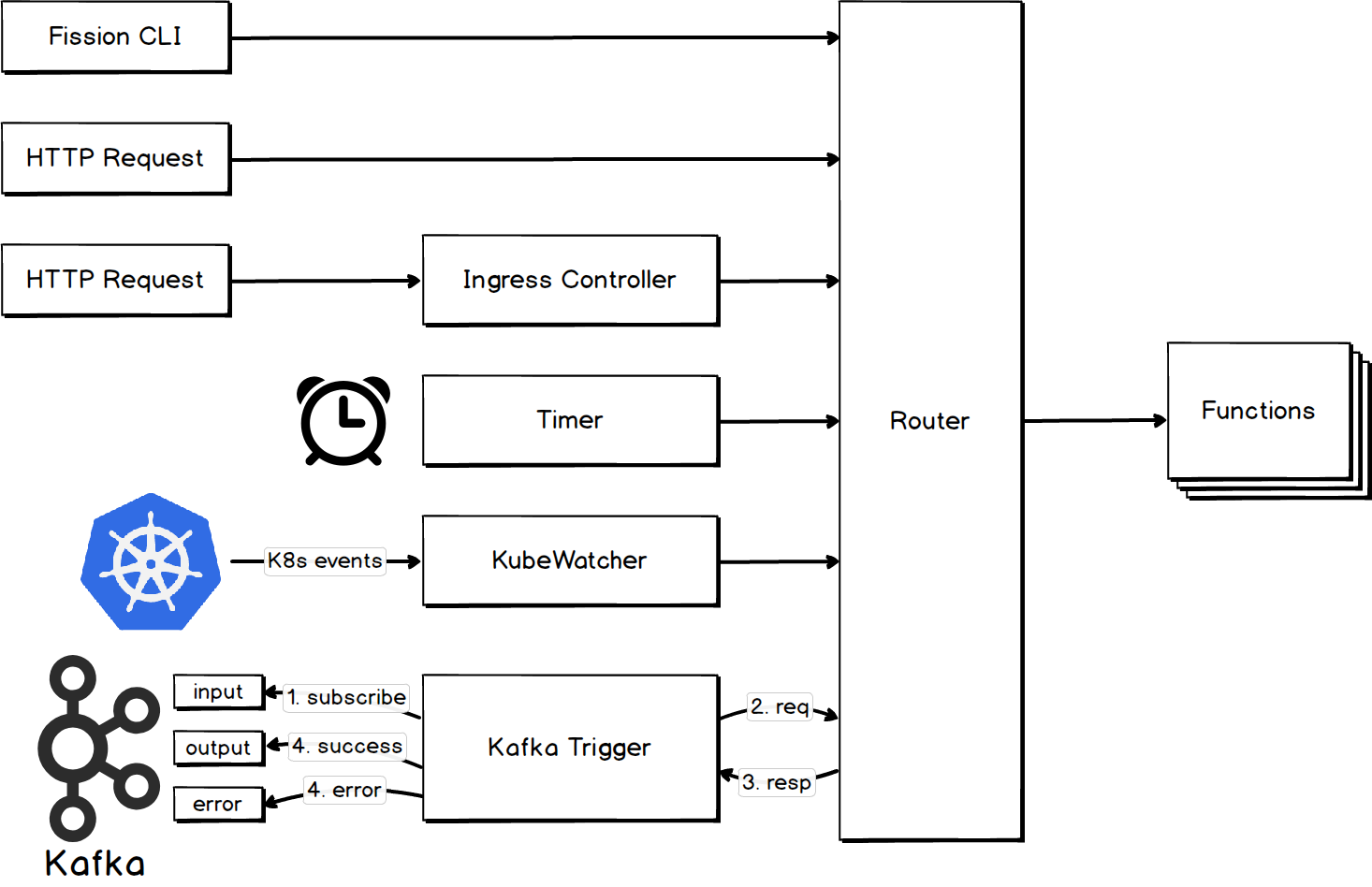

The following figure shows the triggering mechanism of some triggering methods of Fission functions:

All requests sent to Fission functions are forwarded by the router. Fission creates a NodePort or LoadBalancer service for the router so that the router can receive external requests.

In addition to accessing the function by sending requests to the router, you can also use the K8s ingress mechanism to implement an HTTP trigger. You can use the following command to create an HTTP trigger, and specify the access path as /echo.

fission httptrigger create --url /echo --method GET --function hello --createingress --host example.com

After you run this command, the following ingress object will be created. For more information about the logic of creating an ingress object, see CreateIngress.

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      # The name of the ingress object
      name: xxx
      ...
    spec:
      rules:
      - host: example.com
        http:
          paths:
          - backend:
              # Point to the router service
              serviceName: router
              servicePort: 80
            # Access path
            path: /echo

Ingress only describes routing rules. To make sure these rules take effect and requests are forwarded, the cluster must also have a running ingress controller. To learn more about how ingress works, see the "HTTP trigger" section of Mechanism explanation of opensource serverless products (I) - Kubeless.

If you want to trigger the execution of a function on a schedule, you need to create a time trigger for the function. Fission uses the deployment object to deploy the timer component. This component is responsible for managing the time triggers that you have created. Timer synchronizes the time triggers list at a fixed interval, and uses the cron library robfig/cron to trigger, on schedule, the functions that are bound to these time triggers. This library is widely used in the Go programming language (Golang).
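To make the schedule semantics concrete, the sketch below expands the minute field of a standard cron expression into the minutes of the hour that it matches. It handles only a small subset of the syntax (`*`, `*/N`, single numbers, and comma-separated lists), is not how robfig/cron is implemented, and uses a hypothetical function name.

```python
def expand_minute_field(field):
    """Expand a cron minute field into the sorted minutes it matches.

    Supports only '*', '*/N', single numbers, and comma-separated lists.
    """
    minutes = set()
    for part in field.split(","):
        if part == "*":
            minutes.update(range(60))
        elif part.startswith("*/"):
            step = int(part[2:])
            minutes.update(range(0, 60, step))
        else:
            minutes.add(int(part))
    return sorted(minutes)


print(expand_minute_field("*/30"))  # [0, 30]
```

A step expression such as `*/30` therefore fires at minute 0 and minute 30 of every hour, rather than 30 minutes after the trigger was created.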

You can use the following command to create a time trigger named halfhourly for the hello function. This trigger triggers the execution of the hello function every half an hour. The standard cron syntax is used to define the function execution plan.

fission tt create --name halfhourly --function hello --cron "*/30 * * * *"

trigger 'halfhourly' created

To support asynchronous triggering, Fission allows you to create a message queue trigger. The currently available message queues are nats-streaming, azure-storage-queue, and kafka. The following describes the usage and implementation mechanism of a message queue trigger by taking Kafka as an example.

You can use the following command to create a kafka-based message queue trigger hellomsg. This trigger subscribes to messages of the input topic, and it immediately triggers function execution when it receives any message. The function execution result is written to the output topic if a function execution succeeds, or to the error topic if it fails.

fission mqt create --name hellomsg --function hello --mqtype kafka --topic input --resptopic output --errortopic error

Fission uses the deployment object to deploy the mqtrigger-kafka component. This component is responsible for managing the Kafka triggers that you have created. The mqtrigger-kafka component synchronizes the Kafka triggers list at a fixed interval, and creates a goroutine for each trigger to execute the triggering logic. The triggering logic is listed as follows:

1. Consume the messages of the topic that has been specified by the topic field.

2. Trigger the execution of the function by sending a request to the router, and wait for the function execution result.

3. The function execution result is written to the topic specified by the resptopic field if the function execution succeeds, or to the topic specified by the errortopic if it fails.
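The triggering logic above can be sketched as a consume, invoke, and publish loop. The sketch uses in-memory queues instead of a Kafka client and calls the function directly instead of sending a request to the router. The helper names are hypothetical, and the parameter names simply mirror the CLI flags.

```python
from collections import defaultdict, deque


def run_trigger(topics, invoke, topic, resptopic, errortopic):
    """Drain `topic`, invoke the function per message, route the result."""
    while topics[topic]:
        # Step 1: consume a message from the subscribed topic.
        message = topics[topic].popleft()
        try:
            # Step 2: trigger the function and wait for its result.
            result = invoke(message)
        except Exception as exc:
            # Step 3 (failure): write to the error topic.
            topics[errortopic].append(str(exc))
        else:
            # Step 3 (success): write to the response topic.
            topics[resptopic].append(result)


def hello(message):
    """Stand-in for the user function."""
    if not message:
        raise ValueError("empty message")
    return f"hello {message}"


topics = defaultdict(deque)
topics["input"].extend(["fission", ""])
run_trigger(topics, hello, "input", "output", "error")
```

After the run, the `output` queue holds the result of the successful call and the `error` queue holds the failure text of the other one.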

1. Fission offers some commonly used triggers, but does not support the Message/Record Streams triggering method as mentioned in the CNCF specification. This method requires messages to be processed in a sequential order.

2. If you want to integrate other event sources, you can design triggers for them by referring to the design pattern of the Fission triggers.

K8s implements automatic horizontal scaling of pods by using Horizontal Pod Autoscaler (HPA). For Fission, only functions that are created by using the NewDeploy executor can implement auto-scaling by using HPA.

You can use the following command to create a function named hello. Any pod that runs this function will be associated with an HPA, which will control the number of pods that run this function between one and six. HPA also maintains the average CPU usage of these pods at 50% by adding or reducing the pods.

fission fn create --name hello --env python --code hello.py --executortype newdeploy --minmemory 64 --maxmemory 128 --minscale 1 --maxscale 6 --targetcpu 50

The version of the HPA API used by Fission is autoscaling/v1. Running this command creates the following HPA:

    apiVersion: autoscaling/v1
    kind: HorizontalPodAutoscaler
    metadata:
      labels:
        executorInstanceId: xxx
        executorType: newdeploy
        functionName: hello
        ...
      # The name of the HPA
      name: hello-${executorInstanceId}
      # The namespace where the HPA is located
      namespace: fission-function
      ...
    spec:
      # The maximum number of replicas allowed
      maxReplicas: 6
      # The minimum number of replicas allowed
      minReplicas: 1
      # The target that is associated with this HPA
      scaleTargetRef:
        apiVersion: extensions/v1beta1
        kind: Deployment
        name: hello-${executorInstanceId}
      # The CPU usage of the target
      targetCPUUtilizationPercentage: 50

For more information about how HPA works, see the Auto-Scaling section of the first article in this series. That section explains how K8s retrieves and uses the measurement data, and describes the auto-scaling policy currently in use.
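As a rough illustration of how the values in this HPA interact, the sketch below applies the basic scaling rule documented for the Kubernetes HPA, namely desired = ceil(current replicas × current metric / target metric), and clamps the result to the configured bounds. It ignores the tolerance band, pod readiness handling, and stabilization behavior of the real controller, and the function name is hypothetical.

```python
import math


def desired_replicas(current_replicas, current_cpu, target_cpu,
                     min_replicas, max_replicas):
    """Basic HPA rule, clamped to [min_replicas, max_replicas].

    current_cpu and target_cpu are average utilization percentages.
    """
    raw = math.ceil(current_replicas * current_cpu / target_cpu)
    return max(min_replicas, min(max_replicas, raw))


# Two pods averaging 90% CPU against a 50% target: scale out.
print(desired_replicas(2, 90, 50, 1, 6))   # 4
# Four pods averaging 100% CPU would need 8, capped at maxReplicas.
print(desired_replicas(4, 100, 50, 1, 6))  # 6
```

Because the rule depends only on the currently observed metric, it reacts to load after the fact, which is the passive behavior that this article attributes to the HPA policy.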

1. Similar to Kubeless, Fission avoids directly exposing the complex HPA creation details to users. However, this compromises the performance.

2. Currently, the auto-scaling feature provided by Fission is very limited, and it works only for functions that are created by using the NewDeploy executor. In addition, it supports only one measurement metric, the CPU usage (Kubeless supports both CPU usage and QPS). This is mainly because Fission currently uses HPA version hpa-v1. If you want to implement auto-scaling based on new measurement metrics, or to comprehensively use multiple measurement metrics, directly use features provided by hpa-v2.

3. Currently, the HPA auto-scaling policy adjusts the number of target replicas passively based on what happened, and does not support performing proactive scaling based on historical rules.

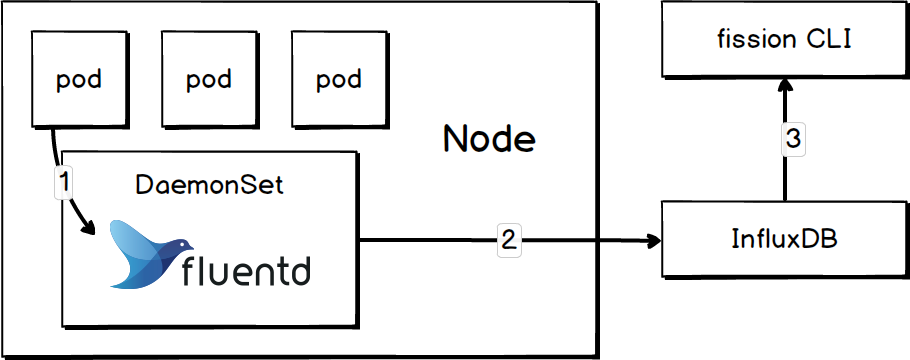

To better understand the running status of functions, you usually need to collect, process, and analyze logs generated by functions. The following figure shows how Fission logs are processed.

The log processing process is listed as follows:

1. Use DaemonSet to deploy a fluentd instance on every worker node of the cluster to collect the container logs of that node. For more information, see logger. Here, the fluentd container mounts the host directories /var/log/ and /var/lib/docker/containers, which contain the container logs, for the convenience of log collection.

2. Fluentd stores the collected logs to influxdb.

3. You can use Fission CLI to view function logs. For example, you can use the command fission function logs --name hello to view logs generated by the hello function.

Currently, Fission supports centralized storage of function logs, but the query analysis feature is very limited. In addition, influxdb is more suitable for storage of the monitoring metrics data, and cannot meet diversified needs of log processing and analysis.

Functions are run in containers. Therefore, function logs can be processed as container logs. For container logs, Alibaba Cloud Log Service team provides mature and complete solutions.

After introducing the basic mechanism of Fission, we will compare it with Kubeless in the following aspects:

1. Triggering methods: Both products support common triggering methods, but Kubeless supports more options and allows convenient integration of new data sources.

2. Auto-scaling: The auto-scaling capabilities of both products are very basic. They support only a few measurement metrics, and their underlying layers are implemented by K8s HPA.

3. The function cold start time: Fission reduces the function cold start time by using the pooling technology. So far, Kubeless has not optimized this part.

4. Advanced features: Fission supports several advanced features, such as phased release and custom workflow, which are not supported by Kubeless.
