By Bruce Wu

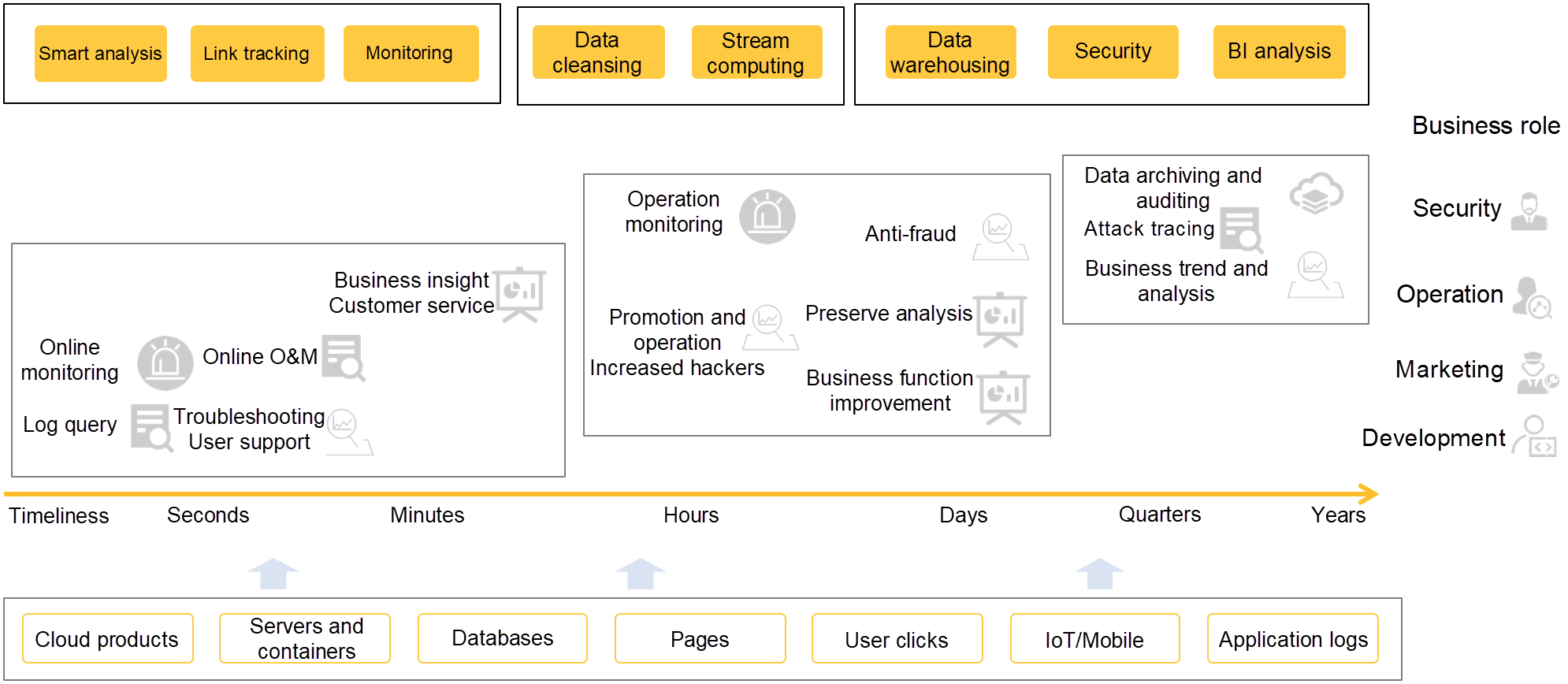

Logs are ubiquitous. As a record of changes in the world, logs are widely used in many fields, such as marketing, research and development, operations, security, BI, and auditing.

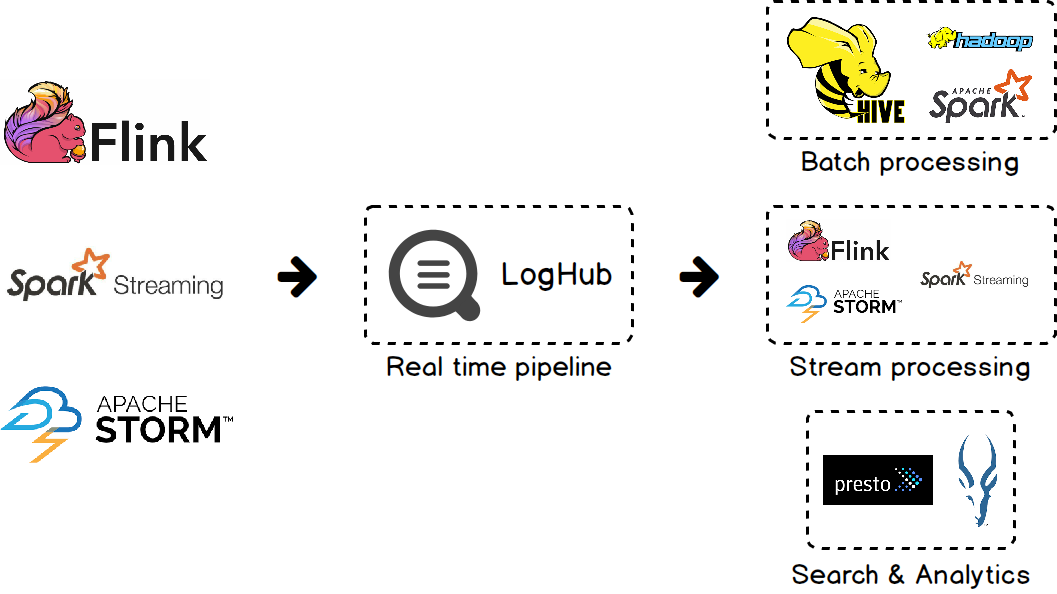

Alibaba Log Service is an all-in-one service platform for log data. Its core component, LogHub, has become part of the infrastructure for big data processing, especially real-time data processing, thanks to features such as high throughput, low latency, and auto-scaling. Jobs running on big data computing engines such as Flink, Spark, and Storm write their processing results or intermediate results to LogHub in real time. Using data from LogHub, downstream systems provide services such as query analysis, monitoring and alerting, machine learning, and iterative computation. The LogHub big data processing architecture is shown in the following figure.

To keep such a system running properly, you need a convenient and efficient way to write data. Calling APIs or SDKs directly is not sufficient to meet write-capability requirements in big data scenarios. Alibaba Cloud LOG Java Producer was developed to address this need.

Alibaba Cloud LOG Java Producer is an easy-to-use and highly configurable Java class library. It has the following features:

Compared with calling APIs or SDKs directly, using Producer to write data to LogHub has the following advantages:

When data volumes are large and resources are limited, reaching the desired throughput requires complex logic such as multi-threading, caching policies, batching, and retries on failure. Producer implements this logic for you, which improves application performance and simplifies development.

As long as enough cache memory is available, Producer caches the data to be sent to LogHub, so a call to the send method returns immediately instead of blocking until the data is sent. This separates computation from I/O. You can later obtain the sending result from the returned future object or from a registered callback.
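The asynchronous pattern described above can be sketched with the JDK alone. This is a minimal illustration, not Producer's actual API: the class name `AsyncSender`, its `send` signature, and the `transport` function (a stand-in for the network call to LogHub) are all hypothetical. `send` hands the record to a background I/O thread and returns a `CompletableFuture` at once; an optional callback runs when the send completes.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.BiConsumer;
import java.util.function.Function;

// Hypothetical sketch of asynchronous sending; not the real Producer API.
final class AsyncSender implements AutoCloseable {
    // A single background "I/O thread" that performs the actual sends.
    private final ExecutorService ioThread = Executors.newSingleThreadExecutor();
    // Stand-in for the network call to LogHub (assumption): record in, result out.
    private final Function<String, String> transport;

    AsyncSender(Function<String, String> transport) {
        this.transport = transport;
    }

    // Returns at once; the send itself runs on the I/O thread.
    // If a callback is given, the returned future completes only after the callback has run.
    CompletableFuture<String> send(String record, BiConsumer<String, Throwable> callback) {
        CompletableFuture<String> result =
                CompletableFuture.supplyAsync(() -> transport.apply(record), ioThread);
        return callback == null ? result : result.whenComplete(callback);
    }

    @Override
    public void close() {
        ioThread.shutdown(); // lets queued sends finish, accepts no new ones
    }
}
```

Because the returned stage comes from `whenComplete`, a caller that waits on it also knows the callback has already run; a caller that never waits still gets its result through the callback.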

Both the amount of memory that Producer uses to cache pending data and the number of threads that perform sending tasks are configurable. This prevents Producer from consuming resources without limit and lets you balance resource consumption against write throughput for your actual workload.

In short, Producer handles the complex underlying details automatically and exposes a simple interface. It does not interfere with the normal operation of upper-layer services, which significantly lowers the barrier to data ingestion.

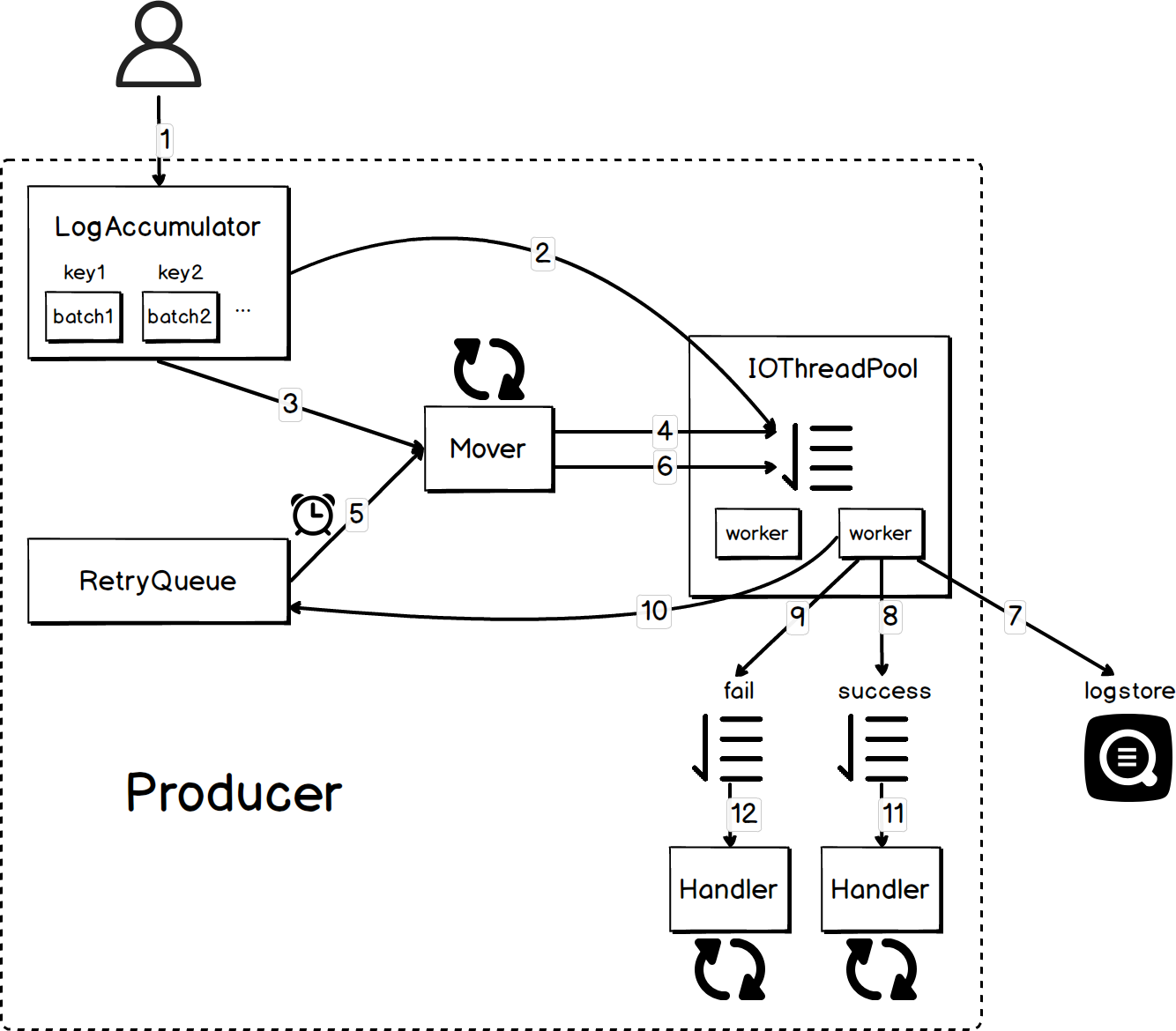

To help you understand the performance characteristics of Producer, this section describes how it works, including its data write logic, the implementation of its core components, and graceful shutdown. The overall architecture of Producer is presented in the following figure.

The data write logic of Producer:

When you call the producer.send() method to send data to a specified logstore, the data is added to a producer batch in LogAccumulator. The send method usually returns immediately. However, if the Producer instance does not have enough room to cache the data, the send method blocks until any one of the following conditions is met:

If a batch fails to be sent and meets any of the following conditions, it is placed in the failure queue:

Core components of Producer include LogAccumulator, RetryQueue, Mover, IOThreadPool, SendProducerBatchTask, and BatchHandler.

A common way to improve throughput is to accumulate data into larger batches and send it batch by batch. The main role of LogAccumulator is to merge data into such batches. Data can be merged into the same batch only if it has the same project, logstore, topic, source, and shardHash properties. LogAccumulator therefore caches data in an internal map keyed by these properties: the key is the quintuple of the five properties, and the value is a ProducerBatch. A ConcurrentMap is used to ensure thread safety under high concurrency.
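A minimal sketch of this grouping idea, assuming simplified types: `GroupKey` plays the role of the quintuple key and `Batch` is a hypothetical stand-in for ProducerBatch that only holds log lines. It does not model batch size thresholds or sealing full batches, which a real accumulator also needs.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Objects;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

// Hypothetical sketch of grouping logs into batches by five properties.
final class BatchAccumulator {
    // Map key: the quintuple (project, logstore, topic, source, shardHash).
    static final class GroupKey {
        final String project, logstore, topic, source, shardHash;

        GroupKey(String project, String logstore, String topic, String source, String shardHash) {
            this.project = project;
            this.logstore = logstore;
            this.topic = topic;
            this.source = source;
            this.shardHash = shardHash;
        }

        @Override
        public boolean equals(Object o) {
            if (this == o) return true;
            if (!(o instanceof GroupKey)) return false;
            GroupKey k = (GroupKey) o;
            return Objects.equals(project, k.project) && Objects.equals(logstore, k.logstore)
                    && Objects.equals(topic, k.topic) && Objects.equals(source, k.source)
                    && Objects.equals(shardHash, k.shardHash);
        }

        @Override
        public int hashCode() {
            return Objects.hash(project, logstore, topic, source, shardHash);
        }
    }

    // Simplified stand-in for ProducerBatch: just the buffered log lines.
    static final class Batch {
        final List<String> logs = new ArrayList<>();
    }

    private final ConcurrentMap<GroupKey, Batch> batches = new ConcurrentHashMap<>();

    // Appends a log to the batch for its key, creating the batch on first use.
    void append(GroupKey key, String log) {
        // compute() is atomic per key, so concurrent appends to one batch do not race.
        batches.compute(key, (k, batch) -> {
            Batch b = (batch == null) ? new Batch() : batch;
            b.logs.add(log);
            return b;
        });
    }

    int batchCount() {
        return batches.size();
    }

    // Inspection helper; intended for use when no appends are in flight.
    int sizeOf(GroupKey key) {
        Batch b = batches.get(key);
        return b == null ? 0 : b.logs.size();
    }
}
```

Two records that agree on all five properties land in the same batch; changing any one of them, for example `source`, starts a new batch.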

Another function of LogAccumulator is to control the total size of cached data, which is implemented with a Semaphore. Semaphore is a high-performance synchronization tool based on AbstractQueuedSynchronizer (AQS). It first tries to acquire shared resources by spinning, which reduces context-switch overhead.
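The Semaphore-based memory control can be sketched by treating each permit as one byte of cache. `MemoryLimiter` and the `maxBlockMs` argument are hypothetical names for this illustration: `reserve` waits at most `maxBlockMs` for free space, and `release` is called once a batch has been fully handled.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.TimeUnit;

// Hypothetical sketch: bounding total cached bytes with a Semaphore (one permit per byte).
final class MemoryLimiter {
    private final Semaphore freeBytes;

    MemoryLimiter(int totalBytes) {
        this.freeBytes = new Semaphore(totalBytes);
    }

    // Reserves room for a record, waiting up to maxBlockMs.
    // Returns false if the space did not become free in time.
    boolean reserve(int bytes, long maxBlockMs) {
        try {
            return freeBytes.tryAcquire(bytes, maxBlockMs, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt status
            return false;
        }
    }

    // Called after a batch has been handled, returning its bytes to the pool.
    void release(int bytes) {
        freeBytes.release(bytes);
    }

    int available() {
        return freeBytes.availablePermits();
    }
}
```

A send that cannot reserve space simply waits on the semaphore, which is how a bounded cache turns into back-pressure on the caller.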

RetryQueue stores batches that have failed to be sent and are waiting to be retried. Each of these batches has a field that indicates when it should be sent. To retrieve expired batches efficiently, Producer stores them in a DelayQueue. DelayQueue is a thread-safe, time-based priority queue in which the earliest-expiring batch is processed first.
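A sketch of a retry queue built on `java.util.concurrent.DelayQueue`, assuming a hypothetical `RetryBatch` type that carries only an ID and its next retry timestamp. `DelayQueue` only hands out elements whose delay has expired, so `drainTo` returns just the batches that are due.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.DelayQueue;
import java.util.concurrent.Delayed;
import java.util.concurrent.TimeUnit;

// Hypothetical sketch of a RetryQueue on top of DelayQueue.
final class RetryQueueSketch {
    // A failed batch tagged with the wall-clock time (ms) at which it may be retried.
    static final class RetryBatch implements Delayed {
        final String id;
        final long nextRetryMs;

        RetryBatch(String id, long nextRetryMs) {
            this.id = id;
            this.nextRetryMs = nextRetryMs;
        }

        @Override
        public long getDelay(TimeUnit unit) {
            return unit.convert(nextRetryMs - System.currentTimeMillis(), TimeUnit.MILLISECONDS);
        }

        @Override
        public int compareTo(Delayed other) {
            // Earliest retry time first, which is the ordering DelayQueue relies on.
            if (other instanceof RetryBatch) {
                return Long.compare(nextRetryMs, ((RetryBatch) other).nextRetryMs);
            }
            return Long.compare(getDelay(TimeUnit.MILLISECONDS), other.getDelay(TimeUnit.MILLISECONDS));
        }
    }

    private final DelayQueue<RetryBatch> queue = new DelayQueue<>();

    void add(RetryBatch batch) {
        queue.put(batch);
    }

    // Removes and returns every batch whose retry time has already passed.
    List<RetryBatch> drainExpired() {
        List<RetryBatch> expired = new ArrayList<>();
        queue.drainTo(expired); // DelayQueue.drainTo transfers only expired elements
        return expired;
    }

    int pending() {
        return queue.size();
    }
}
```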

Mover is an independent thread. It periodically moves expired batches from LogAccumulator and RetryQueue to IOThreadPool. Because Mover runs continuously, it would consume CPU even when there is nothing to send. To avoid wasting CPU, when Mover finds no batches ready to be sent in LogAccumulator or RetryQueue, it waits on RetryQueue for expired batches for a period equal to lingerMs, the maximum cache time that you configure.
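The Mover loop can be sketched as follows. To keep the example self-contained, the accumulator, retry queue, and I/O pool are passed in as plain `Supplier`, `BlockingQueue`, and `Consumer` parameters; this wiring and the name `MoverSketch` are assumptions, not Producer's internals. The key point is the fallback: when nothing is ready, the loop blocks on the retry queue for up to `lingerMs` instead of spinning.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;
import java.util.function.Supplier;

// Hypothetical sketch of the Mover thread; dependencies simplified to plain interfaces.
final class MoverSketch<T> implements Runnable {
    private final Supplier<List<T>> expiredFromAccumulator; // batches whose linger time has passed
    private final BlockingQueue<T> retryQueue;              // would be a DelayQueue in practice
    private final Consumer<T> submitToIoPool;               // hands a batch to the I/O pool
    private final long lingerMs;
    private volatile boolean closed;

    MoverSketch(Supplier<List<T>> expiredFromAccumulator, BlockingQueue<T> retryQueue,
                Consumer<T> submitToIoPool, long lingerMs) {
        this.expiredFromAccumulator = expiredFromAccumulator;
        this.retryQueue = retryQueue;
        this.submitToIoPool = submitToIoPool;
        this.lingerMs = lingerMs;
    }

    // One pass: move every ready batch; if none is ready, wait up to lingerMs on the retry queue.
    int moveOnce() {
        List<T> ready = new ArrayList<>(expiredFromAccumulator.get());
        retryQueue.drainTo(ready);
        if (ready.isEmpty()) {
            try {
                // Blocking here instead of busy-polling keeps an idle Mover from burning CPU.
                T next = retryQueue.poll(lingerMs, TimeUnit.MILLISECONDS);
                if (next != null) {
                    ready.add(next);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        ready.forEach(submitToIoPool);
        return ready.size();
    }

    @Override
    public void run() {
        while (!closed && !Thread.currentThread().isInterrupted()) {
            moveOnce();
        }
    }

    void close() {
        closed = true;
    }
}
```

With a `DelayQueue` as the retry queue, both `drainTo` and the timed `poll` return only batches whose retry time has arrived, so the wait doubles as a timer.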

Worker threads in IOThreadPool send data to the logstore. The size of IOThreadPool is set by the ioThreadCount parameter, which defaults to twice the number of processors.
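A sketch of how an I/O pool with the documented default size could be created. The helper class `IoPools` is hypothetical, and the use of a fixed-size `ThreadPoolExecutor` is an assumption, not a statement about how Producer builds its pool.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Hypothetical helper; Producer's real pool construction may differ.
final class IoPools {
    private IoPools() {}

    // The default described above: twice the number of available processors.
    static int defaultIoThreadCount() {
        return 2 * Runtime.getRuntime().availableProcessors();
    }

    // Fixed-size pool whose worker threads would perform the sends.
    static ExecutorService newIoThreadPool(int ioThreadCount) {
        return Executors.newFixedThreadPool(ioThreadCount);
    }

    static ExecutorService newDefaultIoThreadPool() {
        return newIoThreadPool(defaultIoThreadCount());
    }
}
```

Sending is mostly network-bound, which is one plausible reason for a default larger than the processor count: threads spend much of their time waiting on I/O rather than using CPU.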

SendProducerBatchTask encapsulates the batch sending logic. To avoid blocking I/O threads, SendProducerBatchTask places each batch, whether or not it was sent successfully, in a separate queue where its callback is executed. In addition, a failed batch that meets the retry conditions is not resent immediately in the current I/O thread, because an immediate resend usually fails again. Instead, SendProducerBatchTask places it in RetryQueue with a delay determined by an exponential backoff policy.
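The exponential backoff policy can be sketched as doubling the delay on each attempt up to a cap. The base and maximum delays here (`baseMs`, `maxMs`) are hypothetical parameters; the exact formula Producer uses is not given in this article, and real implementations often add random jitter as well.

```java
// Hypothetical sketch of exponential backoff for scheduling retries.
final class Backoff {
    private final long baseMs;
    private final long maxMs;

    Backoff(long baseMs, long maxMs) {
        this.baseMs = baseMs;
        this.maxMs = maxMs;
    }

    // Delay before retry number `attempt` (0-based): baseMs * 2^attempt, capped at maxMs.
    long delayMs(int attempt) {
        // Clamp the shift to [0, 30] so the shift is valid and cannot overflow a long.
        int shift = Math.max(0, Math.min(attempt, 30));
        return Math.min(maxMs, baseMs << shift);
    }

    // Absolute time at which the batch becomes eligible to leave the retry queue.
    long nextRetryAtMs(int attempt, long nowMs) {
        return nowMs + delayMs(attempt);
    }
}
```

For example, with `new Backoff(100, 50_000)` the delays are 100, 200, 400, 800 ms and so on, until they flatten out at 50 seconds.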

Producer starts a SuccessBatchHandler and a FailureBatchHandler to handle successfully sent batches and failed batches, respectively. After a handler executes a batch's callback or sets its future, it releases the memory occupied by the batch so that new data can use it. Handling the two kinds of batches separately isolates them from each other, which keeps Producer running smoothly.
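A sketch of the two-handler design, assuming a hypothetical `DoneBatch` that carries only its size and a callback. Each handler thread takes batches from its own queue, runs the callback, and then returns the batch's bytes to the shared memory semaphore. A callback that throws is caught so it cannot kill its handler thread.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.Semaphore;

// Hypothetical sketch: one handler thread per outcome (success / failure).
final class BatchHandlers {
    // A finished batch: its cached size and a callback (stand-in for callback/future completion).
    static final class DoneBatch {
        final int sizeInBytes;
        final Runnable callback;

        DoneBatch(int sizeInBytes, Runnable callback) {
            this.sizeInBytes = sizeInBytes;
            this.callback = callback;
        }
    }

    private final Semaphore memory; // the same semaphore that bounds cached bytes
    final BlockingQueue<DoneBatch> successQueue = new LinkedBlockingQueue<>();
    final BlockingQueue<DoneBatch> failureQueue = new LinkedBlockingQueue<>();
    private final Thread successHandler;
    private final Thread failureHandler;

    BatchHandlers(Semaphore memory) {
        this.memory = memory;
        this.successHandler = startHandler("success-batch-handler", successQueue);
        this.failureHandler = startHandler("failure-batch-handler", failureQueue);
    }

    private Thread startHandler(String name, BlockingQueue<DoneBatch> queue) {
        Thread t = new Thread(() -> {
            try {
                while (true) {
                    DoneBatch batch = queue.take();
                    try {
                        batch.callback.run();
                    } catch (RuntimeException e) {
                        // A faulty user callback must not kill the handler thread.
                    } finally {
                        memory.release(batch.sizeInBytes); // free cache space for new data
                    }
                }
            } catch (InterruptedException e) {
                // close() interrupts the thread: exit the loop.
            }
        }, name);
        t.setDaemon(true);
        t.start();
        return t;
    }

    // Stops both handlers; batches still queued are dropped in this simplified sketch.
    void close() {
        successHandler.interrupt();
        failureHandler.interrupt();
    }
}
```

Because each outcome has its own thread and queue, a slow or failing callback on the failure path does not delay the release of memory held by successful batches.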

To implement graceful shutdown, the following requirements must be met:

To meet the preceding requirements, the close logic of Producer is designed as follows:

In other words, graceful shutdown and safe exit are achieved by closing queues and threads one by one in the direction of data flow.
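The principle of closing stages one by one in the direction of data flow can be illustrated with a toy pipeline. The specific steps below are an assumption, not Producer's documented close logic: `PipelineShutdown` is hypothetical, its `buffer` stands in for LogAccumulator, and its two executors stand in for IOThreadPool and the batch handlers.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Hypothetical sketch: shut a pipeline down upstream-to-downstream so nothing is dropped.
final class PipelineShutdown {
    private boolean accepting = true;                      // guarded by this
    private final List<String> buffer = new ArrayList<>(); // stands in for LogAccumulator
    private final ExecutorService ioPool = Executors.newFixedThreadPool(2);
    private final ExecutorService handler = Executors.newSingleThreadExecutor();
    final List<String> delivered = Collections.synchronizedList(new ArrayList<>());

    // Buffers a record; rejected once close() has started.
    synchronized boolean send(String record) {
        if (!accepting) return false;
        buffer.add(record);
        return true;
    }

    // Returns true if every stage drained within the timeout.
    boolean close(long timeoutMs) {
        List<String> remaining;
        synchronized (this) {
            accepting = false;                 // 1. stop intake
            remaining = new ArrayList<>(buffer); // 2. take everything still buffered
            buffer.clear();
        }
        for (String r : remaining) {
            // 3. the I/O stage "sends" each record, then passes it to the handler stage
            ioPool.execute(() -> handler.execute(() -> delivered.add(r)));
        }
        try {
            ioPool.shutdown();                 // 4. drain the I/O stage first...
            boolean ioDone = ioPool.awaitTermination(timeoutMs, TimeUnit.MILLISECONDS);
            handler.shutdown();                // 5. ...then the stage it feeds
            return ioDone && handler.awaitTermination(timeoutMs, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }
}
```

Shutting down the handler executor only after the I/O pool has terminated is what keeps in-flight I/O tasks from having their hand-off rejected; reversing the order could lose records at the last stage.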

Alibaba Cloud LOG Java Producer is a comprehensive upgrade of the earlier version of Producer. It fixes many of the earlier version's problems, including high CPU usage during network exceptions and slight data loss when Producer is closed. Its fault tolerance is also enhanced: Producer maintains proper resource usage, high throughput, and strict isolation even if you make operational mistakes.
