By Priyankaa Arunachalam, Alibaba Cloud Community Blog author.

In this article, part two of a two-part series, you will explore how to use Alibaba Cloud DataWorks together with MaxCompute for your data processing and analytics workflow. In the previous article, part one, you learned how to create tables and analyze data in DataWorks. Now, in this second part, you will continue to take a deeper dive into the Data Integration and other features of DataWorks.

If you haven't already, we recommend that you read the first article in this series, Diving into Big Data with DataWorks , before you continue any further in this article.

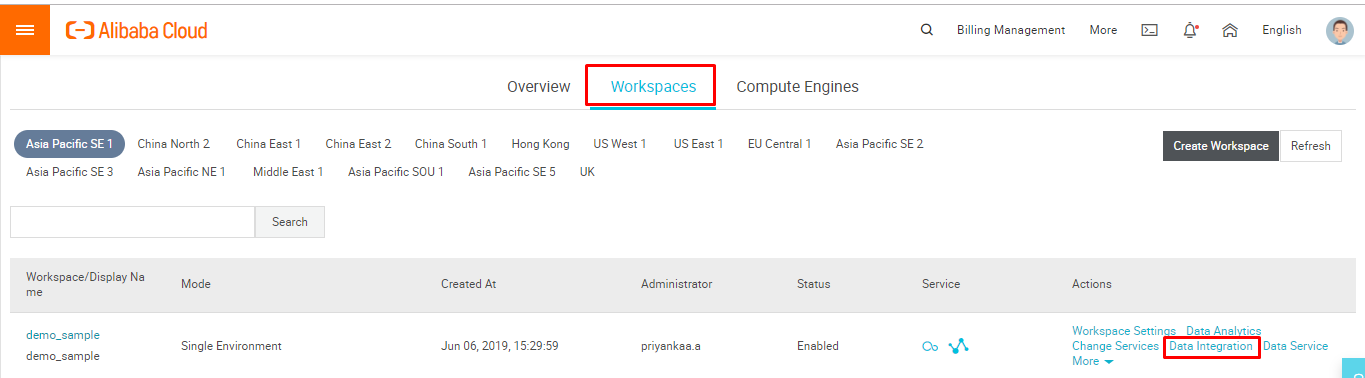

Alibaba Cloud Data Integration is a data synchronization platform that is designed to implement fast and stable data migration and synchronization between multiple heterogeneous data sources. To utilize the Data Integration feature of DataWorks, navigate to Workspaces and click Data Integration next to the workspace you created in the previous tutorial.

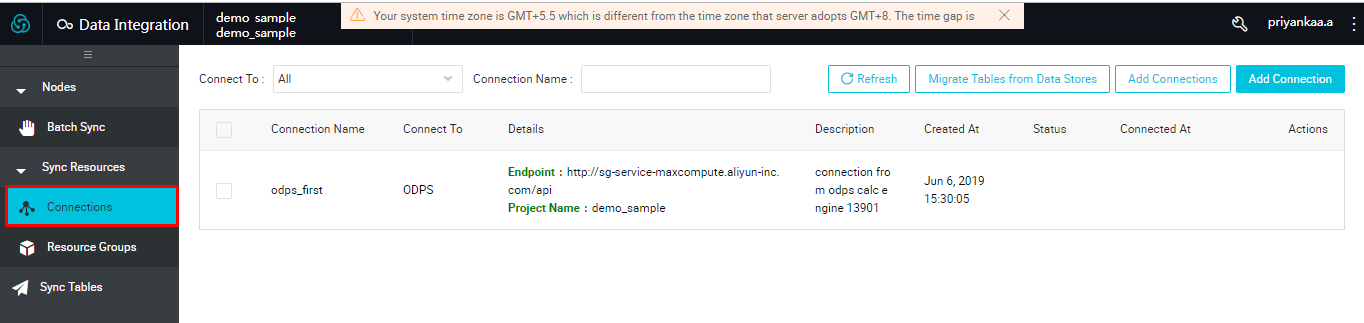

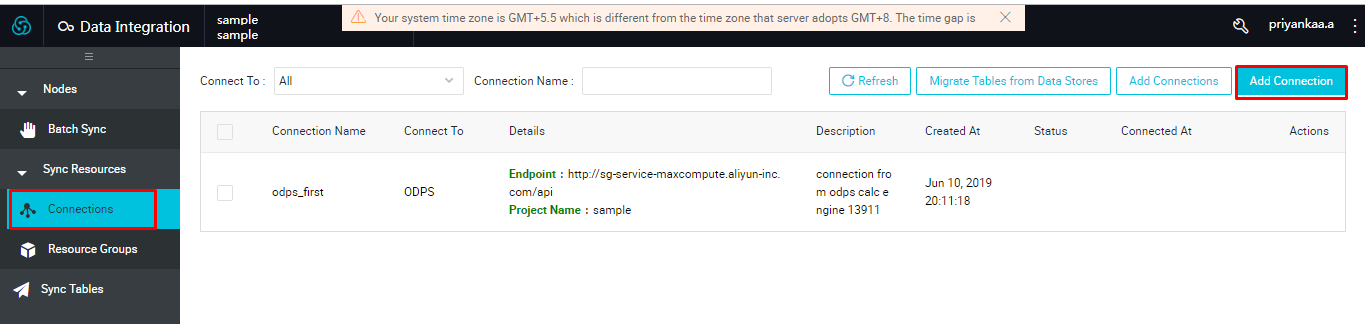

This will lead to the Data Integration page. Click Connections in the left panel to define the connections which is to be used as source and target. Here you can see the MaxCompute connection, which will be set automatically once the MaxCompute is activated.

To configure more connections like MySQL, Oracle, OSS and other, click Add Connections.

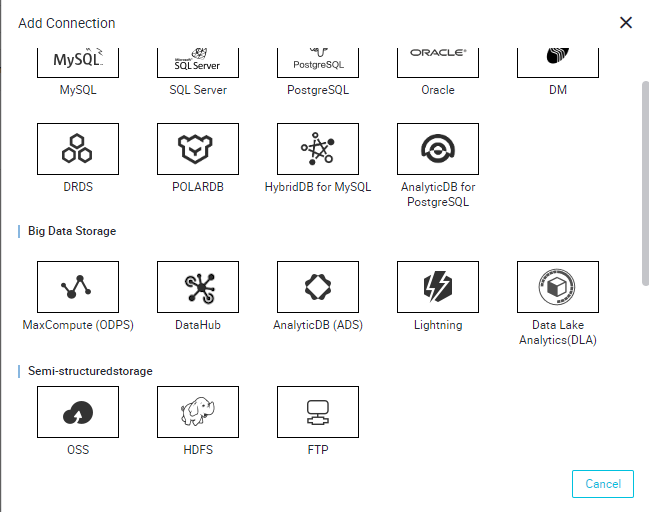

This will show you a list of options with various relational and non-relational databases between which you can transfer data, as shown below:

Data integration feature of DataWorks supports various data sources like

To build a better understanding of all of this, let's take a dive into bringing data back and forth between MaxCompute tables. When to this, there's a lot of tools, including DataHub, HDFS and DataLake Analytics, which you can use to migrate data in an easy manner. These will be covered in future blogs. For now, let's take a look at the parameters for MaxCompute configurations if we are to use MaxCompute:

The way in which you configure these parameters differs based on the data source you use. Generally, there process is you'll need to check out for the configurations, enter them, test the connection and click the Complete button when you're done. Now, suppose if you are using the Oracle Database. In which case, you'll need to click on Oracle from the list of Data Sources and enter the corresponding details of your Oracle Database to test the connection. Once the connection is established successfully, it can be used either as a source or as a target in the Data Integration process.

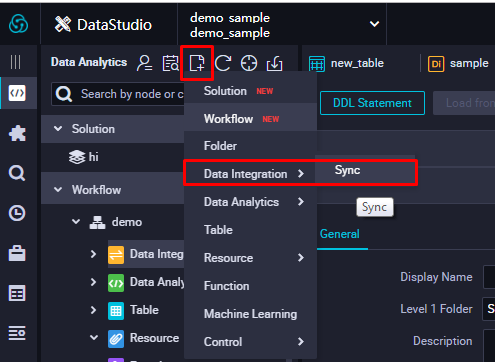

Next, to start with the whole Data Integration process, navigate to the DataStudio page and choose Data Integration > Sync from the drop-down menu.

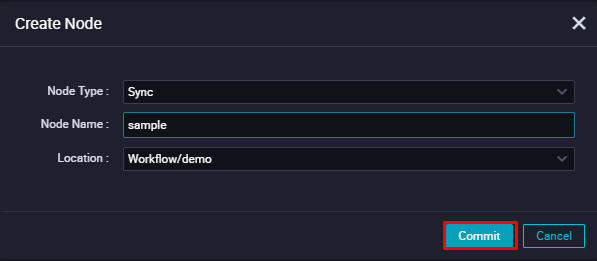

In the Create Node wizard, provide a name and location for the node and click Commit.

There are two ways of creating Data Integration jobs

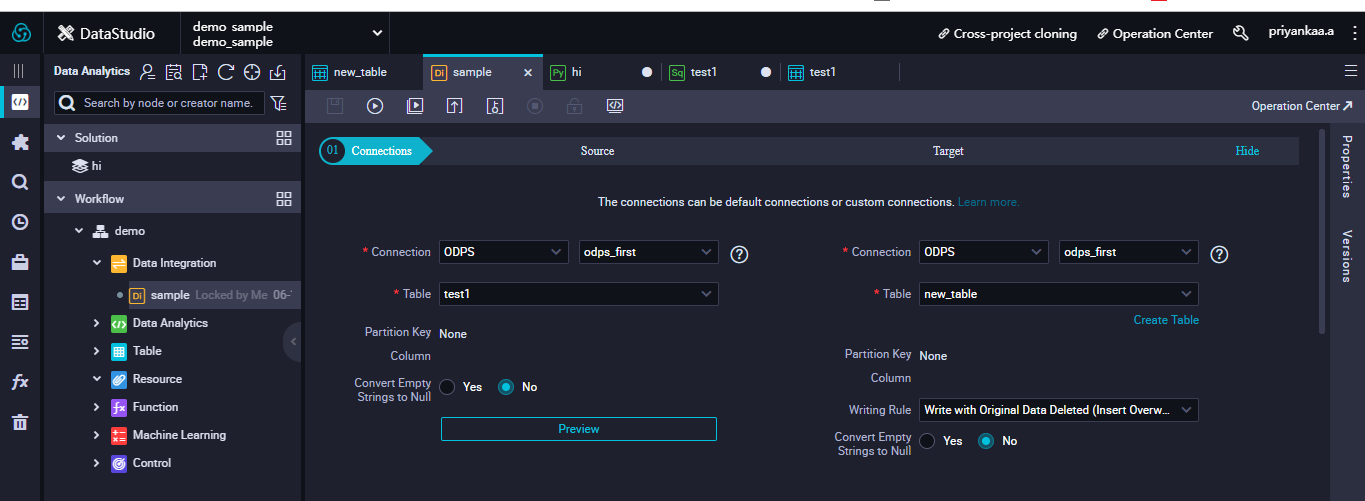

By doing the following steps, the system will create a Data Synchronisation task and will redirect to the page where you can choose the source and target and work with the mappings. In this demo, we are migrating data from one table to another within MaxCompute. As a result, you'll want to select ODPS (this refers to MaxCompute, by the way) as the source and target. The MaxCompute data source allows you to read and write data on MaxCompute, and supports reader and writer plug-ins.

By default, a data source named odps_first is generated for each project. The MaxCompute project name is the same as that of the current project computing engine. You can manually configure the source and target in this page.

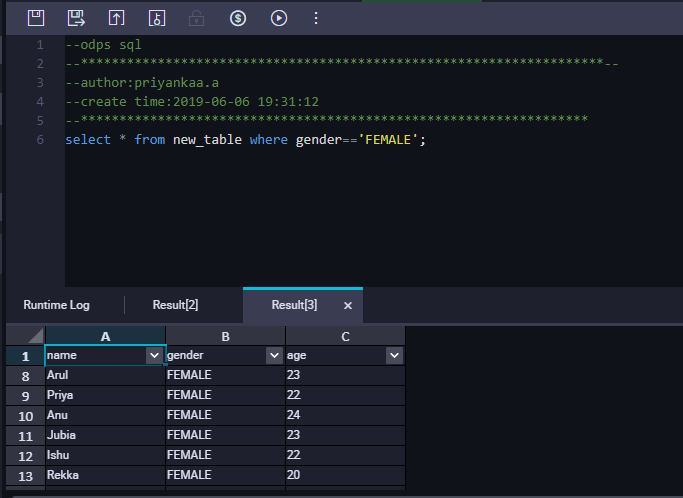

The source here is the table which we already created and uploaded a sample flat file into it. For the target, I have just created another table named new_table and completed with the schema creation. Now the source table test1 has the sample employee details within it, which can be previewed by clicking on Preview button. The target table new_table doesn't have any data as of now. Provide the read and write permissions for the tables wherever required.

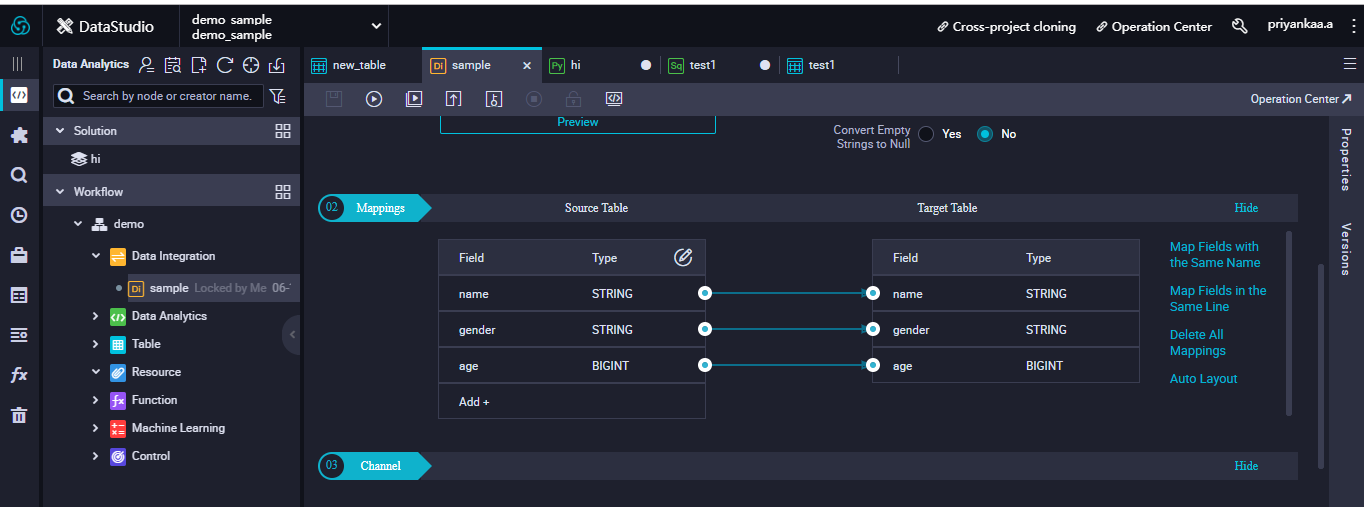

When you complete the source and target configurations, you need to specify a mapping relationship between the read and write end columns, and select Map Fields with the same name or Map Fields in same-line mapping.

So, under Mappings, map the columns from the source table to the columns in the target table. This is one important step, as improper mapping can lead to failed import or record mismatch.

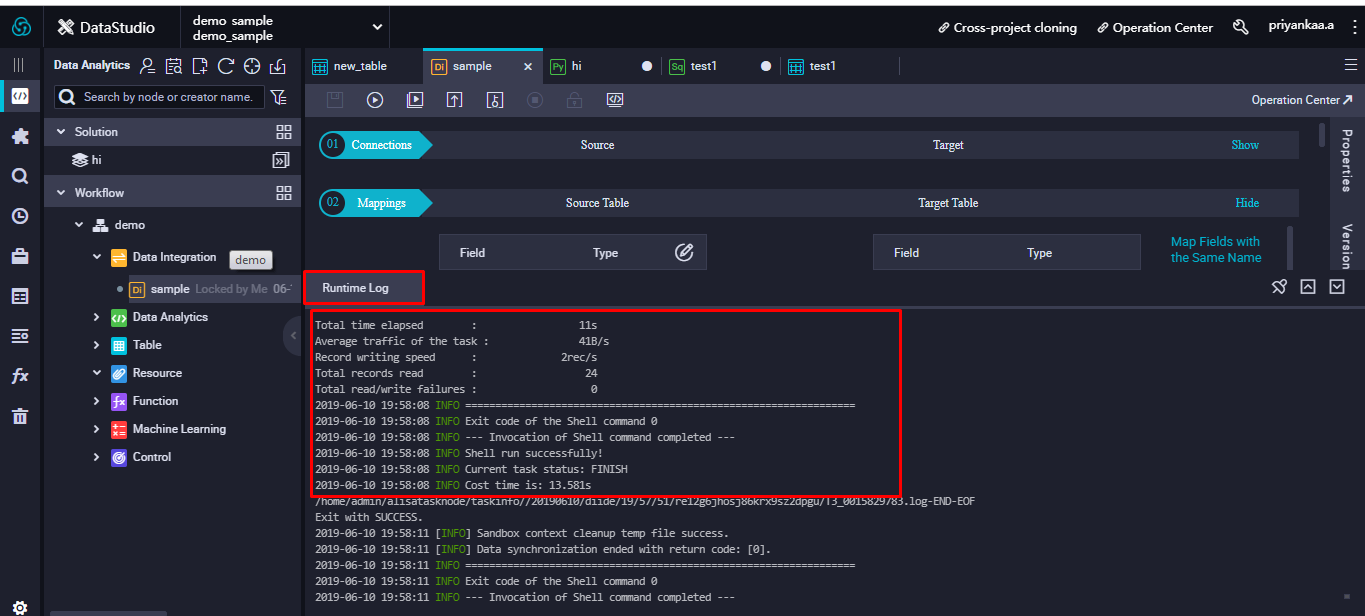

Once done with the Mappings, click on save and then run the job. On successful execution, you will have a message prompted saying shell run successfully!

Let's query the new table to check whether the data has been migrated successfully.

And it's done!

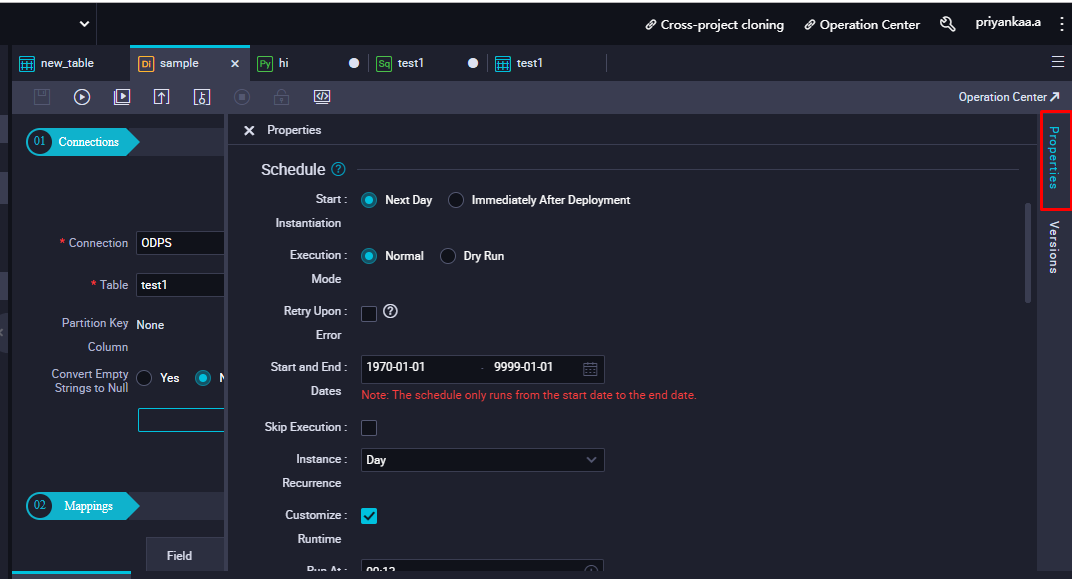

Navigate to Properties on right to alter configurations and make use of scheduling. Use the scheduling parameters to filter synchronization task data. Here you can set the synchronization task run cycle, run time, task dependency, and more in the scheduling properties

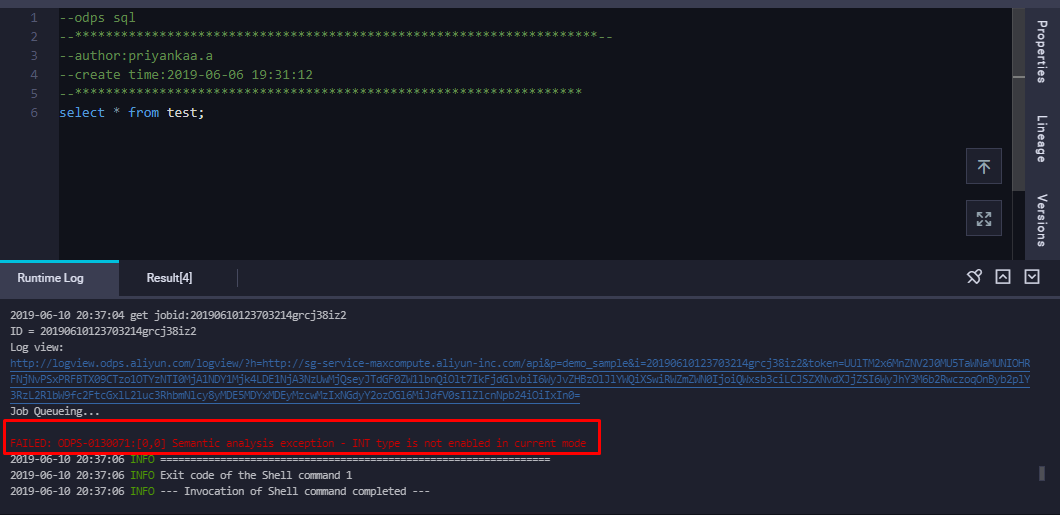

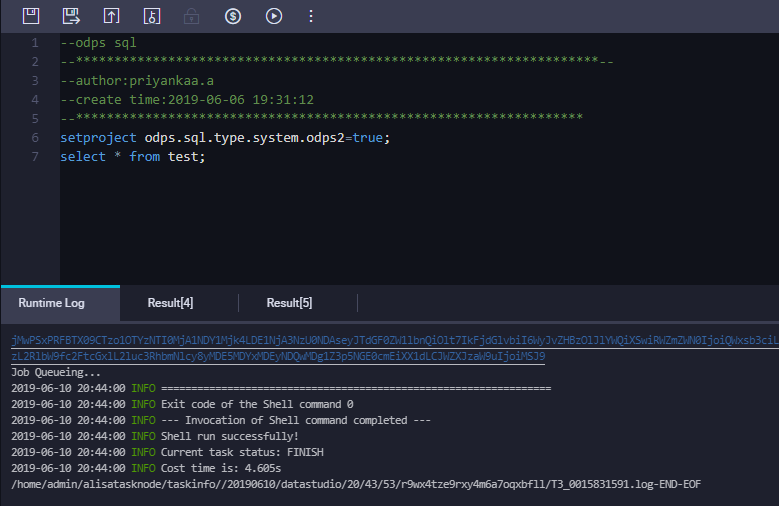

There is a set of basic datatypes which is supported by MaxCompute 2.0. To use a new data type other than the ones from the list, you must configure the property set odps.sql.type.system.odps2=true; or setproject odps.sql.type.system.odps2=true statement as needed, and look for the impact on the existing tasks. If you do not set this statement, an error Semantic analysis exception - xxxx type is not enabled in current mode will occur. A sample of this is shown in the image below:

To resolve this issue or use a new data type, let's run the command setproject odps.sql.type.system.odps2=true along with the needed query as follows:

On running this query, there will be no expense estimate for setproject odps.sql.type.system.odps2=true command and an estimate will be shown for other set of queries which you run. On successful execution of the query, the corresponding output will be displayed and the error will be resolved.

We can also make use of Bulk Sync feature in the Data Integration part of DataWorks. Data sources in Bulk Mode is currently only available for a Data Source Type with a Public Network IP. This is similar to creation of Data Synchronisation task but the thing is that you have to choose Bulk mode in the Configuration and check for the Bulk Testing Connectivity in the Data Source Page, for the specified data source. Only if the Connected Status of the data source is Success, you can work with the Data source in Bulk Sync.

You have to adjust DMU and concurrent configuration of synchronization jobs for optimized maximum synchronization speed. The factors that affect data synchronization speed are Source-side data sources which include Database performance, Concurrency and Network which includes throughput and network speed. Typically, a database with better performance can tolerate a higher concurrency. Therefore, the data synchronization job can be configured for high-concurrency data extraction.

Sync Task configuration for Data Integration

Python on MaxCompute (abbreviated as PyODPS) can be used as a node on data development platforms like DataWorks. DataWorks provide the PyODPS running environment, execution and scheduling.

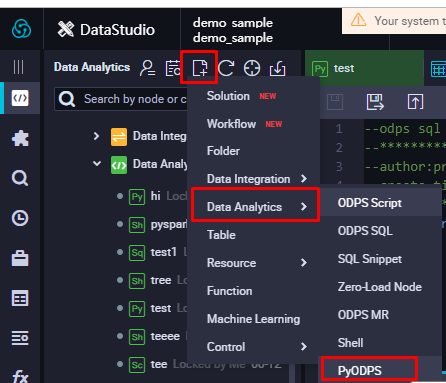

To make use of Python on MaxCompute, create a PyODPS node by clicking on Data Analytics > PyODPS.

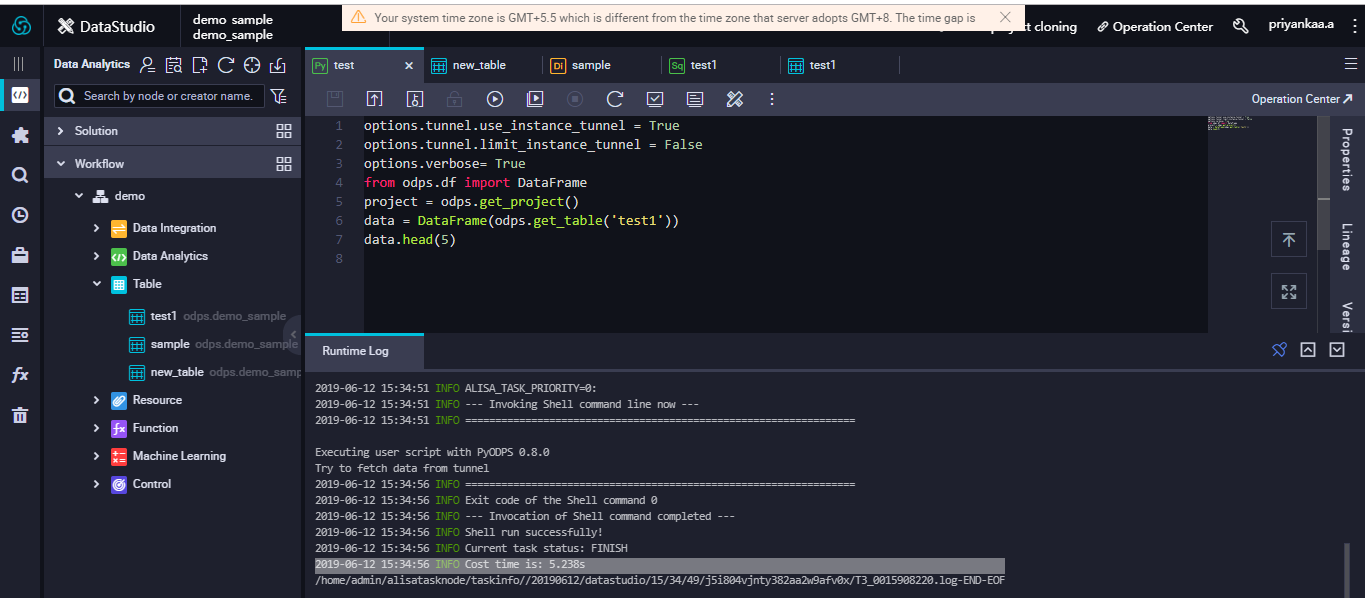

Give a name and location for the PyODPS node and click on Commit. This will create a PyODPS node. There is no need to manually define an ODPS object as the PyODPS node in DataWorks includes a global variable odps or o, which is the ODPS object. Create a Dataframe object which is used to reference the data source. The data source may be a MaxCompute table, MaxCompute partition, or a pandas Dataframe, so on and so forth.

The operations in DataFrame are not run automatically. They are run only when you explicitly call the execute action or specify actions that internally call this action. This is same situation as for spark DataFrames, where transformations do not take place until an action is performed. In spark, this type of execution is called Lazy evaluation, which made the Spark model simple and unique. The same concept is carried by PyODPS here for effective operations.

PyODPS provides a set of configuration options, which can be obtained through odps.options.

For example,

options.tunnel.use_instance_tunnel = True, which is not enabled by default on DataWorks.options.verbose=True. By default, this parameter is set to True in DataWorks which allows the system to print the log view and other details during operation.In this blog, you've got to see a bit more about how to take advantage of all of the features included in DataWorks to help kickstart your data processing and analytics workflow. As you can see from the discussion above, Alibaba DataWorks works well in a variety of deployment scenarios. In fact, it has loads of features and works well with several data development strategies, allows for easy migration of workloads between various Data Sources and provides a means for easier Data Analysis, ML capabilities and security.

2,593 posts | 793 followers

FollowAlibaba Clouder - September 3, 2019

Alibaba Clouder - September 2, 2019

Alibaba Clouder - July 20, 2020

Alibaba Clouder - September 27, 2019

JDP - October 28, 2021

Alibaba Clouder - September 2, 2019

2,593 posts | 793 followers

Follow MaxCompute

MaxCompute

Conduct large-scale data warehousing with MaxCompute

Learn More DataWorks

DataWorks

A secure environment for offline data development, with powerful Open APIs, to create an ecosystem for redevelopment.

Learn More E-MapReduce Service

E-MapReduce Service

A Big Data service that uses Apache Hadoop and Spark to process and analyze data

Learn MoreMore Posts by Alibaba Clouder