By Yunhao and Shiyuan

According to the Data Age 2025 report released by the Internet Data Center (IDC), the global annual data size will increase from 33ZB in 2018 to 175ZB in 2025, with an average of about 491EB data generated every day. As the volume of data continues growing, the costs of data storage become an important part of the IT budget. For example, if you store 1PB data for a year, the costs difference between high-performance storage media and low-cost storage media is many times greater. Since critical business requires high-performance access, the enterprises cannot store all the data on low-speed devices. They need to use different types of storage media according to the frequency of data access to achieve the lowest costs and highest efficiency. Therefore, the first choice for enterprises to store massive amounts of data is to use tiered storage technology, which stores data at different layers and migrates data between the layers automatically. This article introduces data warehouse products as the infrastructure for data storage and management in enterprises and discusses the key issues and core technologies to reduce enterprise storage costs through tiered storage technology.

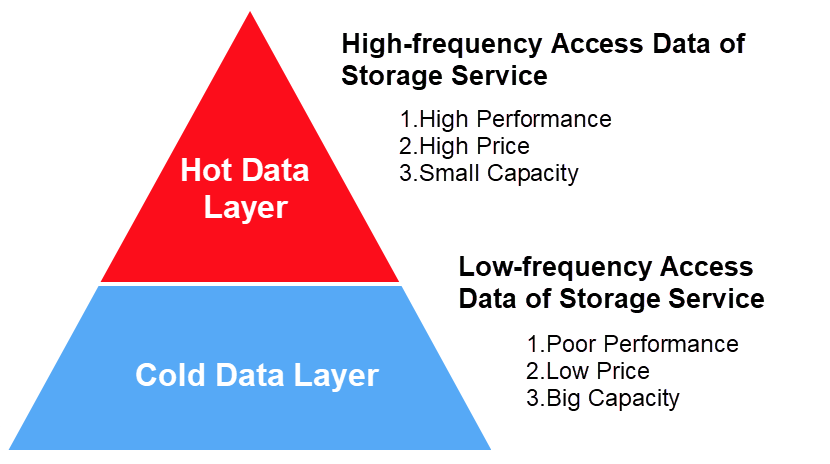

Tiered storage divides data into hot data with high-frequency access and cold data with low-frequency access. The data are stored in the hot data layer and cold data layer to achieve a balance between high performance and low costs. The hot data layer adopts high-performance storage media with high unit costs. The storage capacity is normally small, and only key businesses data are stored, such as ERP, CRM data, and latest order data, to control the budget. The cold data layer stores data of non-critical businesses, such as audit logs and operation logs, or historical data, such as order data from one month ago. This part of the data has a large volume, low access frequency, and low-performance requirements. Therefore, storage media with low costs and large capacity can be used to reduce costs. As time passes by, the access frequency of some hot data decreases. This is normally referred to as data cooling. In this case, the storage system can migrate these data to the cold data layer automatically to lower the costs.

The core challenges that data warehouse products face when implementing tiered storage are listed below:

This chapter introduces how to implement tiered storage in data warehouse products and solve core challenges with the Alibaba Cloud AnalyticDB MySQL as the prototype.

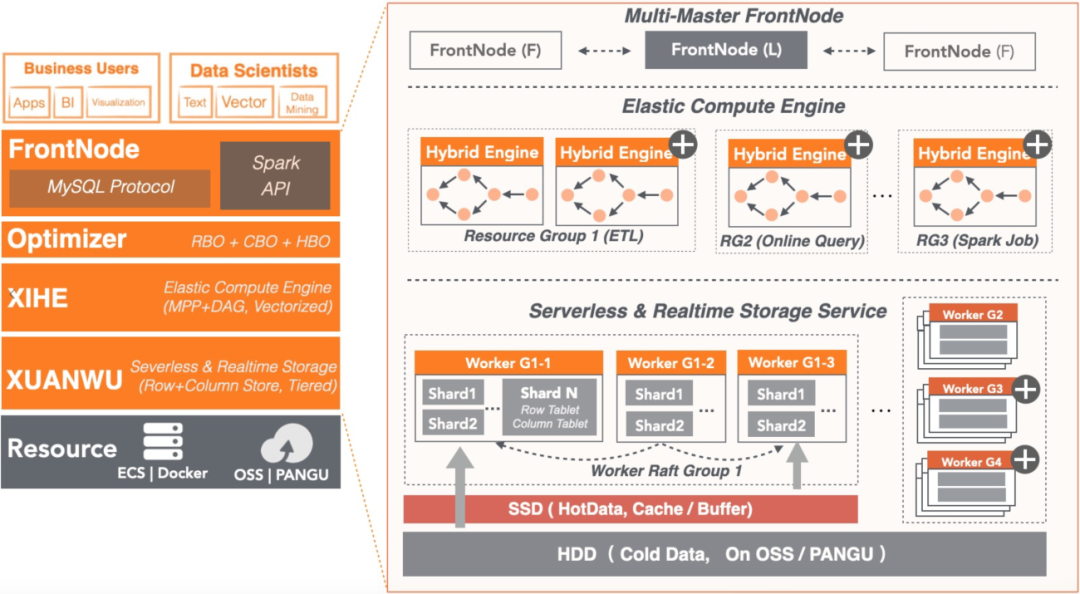

The overall structure of ADB is divided into three layers:

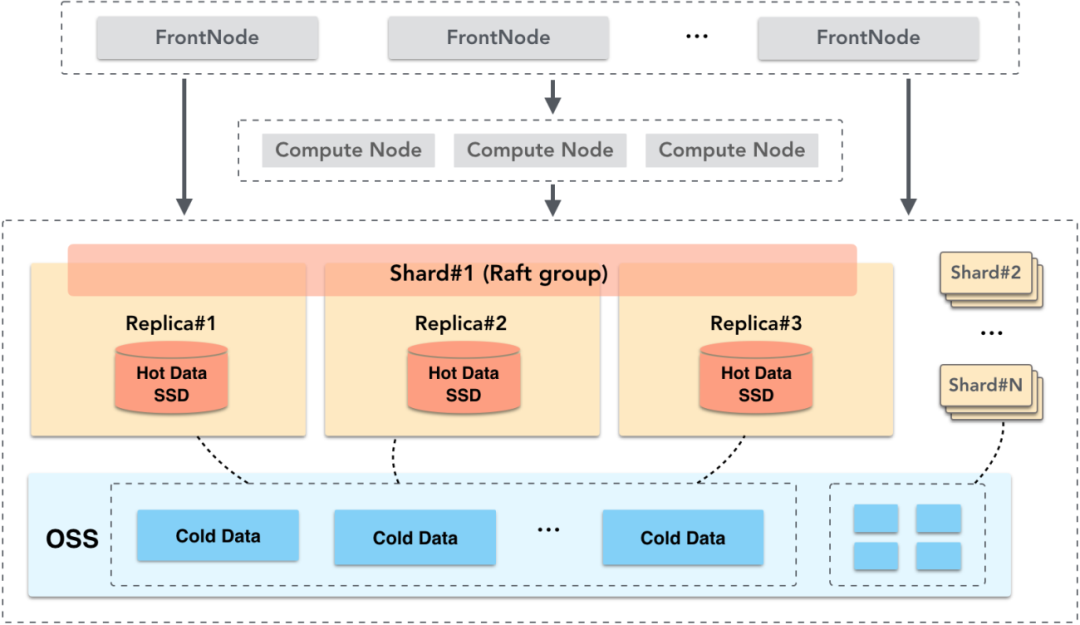

High-performance storage media must be adopted to meet the needs of quick queries in hot business data. SSD is relatively more costly than HDD, but SSD enjoys high IOPS and high bandwidth. Therefore, ADB builds the hot data layer on SSD and uses the multiple data copies mechanism. When a storage node is faulty, you can switch service nodes to ensure high reliability and availability. Cold data in business generally refers to historical business data or log data. Due to the large volume and low access frequency, high capacity and low costs are the major factors in choosing the storage media. ADB builds the cold data layer on Alibaba Cloud Object Storage Service (OSS). OSS is a massive, low-cost, and long-lasting cloud storage service provided by Alibaba Cloud. The durability of data design is no less than 99.9999999999%, and the service availability is no less than 99.995%. These features provided by OSS meet the low costs and high reliability requirements of the cold data layer. In addition, rather than maintaining HDD disks by yourself, OSS has infinite capacity expansion to meet the requirements of massive data storage. Also, OSS supports remote access, so the copies of storage nodes can achieve data sharing to reduce costs.

In business scope, the definitions of hot and cold data are clear. For example, some CRM and ERP data in enterprises that require high-frequency access are hot data. Audit logs or order data from several days ago can be defined as cold data due to their low-frequency access. The core issue is how to define the hot and cold data in business and make sure the storage locations are accurate in tiered storage. For example, at promotion events, a large number of users are interacting with businesses online. At this time, if the tiered storage migrates key data to the cold data layer incorrectly, such as customer information and product information, it will cause damage to related query performance. Moreover, it may also lead to business problems, such as customers not being able to log in or click anything. Eventually, the enterprise will suffer a lot. ADB solves this problem by specifying the storage policy at the time of users creating the table, which correlates the hot and cold data in business and tiered storage precisely. There is an example below:

All data are stored in the SSD and will not be cooled down. This method is suitable for scenarios that require high access performance, such as CRM and ERP data.

Create table t1(

id int,

dt datetime

) distribute by hash(id)

storage_policy = 'HOT';All data are stored in OSS. It is suitable for such situations where storage costs need to be reduced, and the data are in large volume and of low-frequency access, such as audit log data.

Create table t2(

id int,

dt datetime

) distribute by hash(id)

storage_policy = 'COLD';This applies to scenarios where hot data and cold data appear in a time window. For example, game log data and advertisement clickstream data from the last seven days that require high-frequency access are stored as hot data. Data from the last seven days can be cooled to cold data, resulting in low-cost storage.

Note: The mixed table must be used in conjunction with the partitions. In addition to storage_policy, the property of hot_partition_count must be specified. hot_partition_count means that the N partitions of the largest values in descending order are hot partitions and the rest are cold partitions. In the following example, the table is partitioned by day. hot_partition_count = 7 indicates that seven partitions with the largest values are hot data over the last seven days.

Create table t3(

id int,

dt datetime

) distribute by hash(id)

partition by value(date_format(dt, '%Y%m%d'))

lifecycle 365

storage_policy = 'MIXED' hot_partition_count = 7;The table access features may change as the business needs change. You can modify the table storage policy to meet new storage requirements at any time.

(1) Alter a hot table to a cold table:

Alter table t1 storage_policy = 'COLD';(2) Alter the number of hot partitions to hot data over the last 14 days:

Alter table t3 storage_policy = 'MIXED' hot_partition_count = 14;Over time, the access frequency of hot data decreases, and these data become cold data. For example, some log data are rarely accessed after a few days. Therefore, we need to migrate them from the hot data layer to the cold data layer to reduce the costs. The core problem here is to distinguish which part of the data has a lower temperature and needs to be migrated. The following section shows a hot and cold mixed table to illustrate how ADB solves this problem. The following is a log sheet. The data from the last three days are hot data, which meet the requirements of the high-performance online query. However, the data from three days before that are cold data, which are low-cost storage and meet the requirements of low-frequency access.

Create table Event_log (

event_id bigint,

dt datetime,

event varchar

) distribute by hash(event_id)

partition by value(date_format(dt, '%Y%m%d')) lifecycle 365

storage_policy = 'MIXED' hot_partition_count = 3;In this example, the table is partitioned by day.

partition by value(date_format(dt, '%Y%m%d')) lifecycle 365You can switch to the mixed mode. The data of the last three days are hot data.

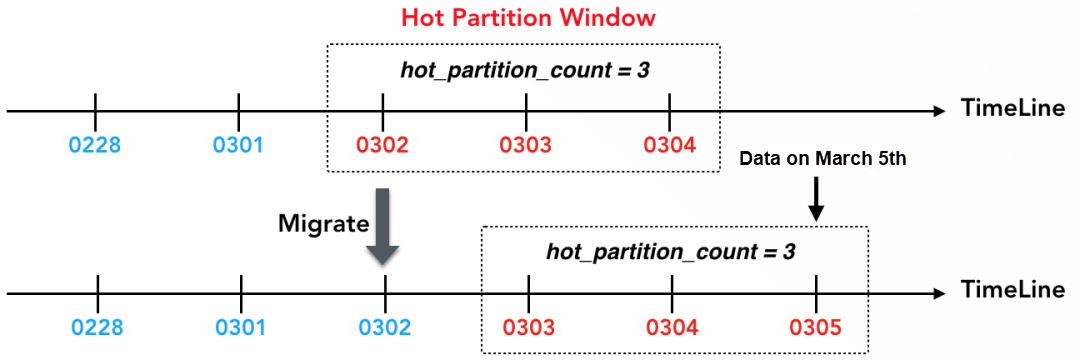

storage_policy = 'MIXED' hot_partition_count = 3In ADB, hot data and cold are partitioned as the smallest granularity. A partition is either in a hot or cold layer. Then, you can determine whether a partition is a hot partition based on the hot partition window. (The hot_partition_count attribute of the table defines the size of the hot partition window.) In this example, assuming the current date is March 4, the data of the three days March 2, 3, and 4 are in the hot partition window and are hot partitioned. After the data on March 5 are written, the data on March 3, March 4, and March 5 form a new hot partition window. The data on March 2 are cooled down as cold data, the backend system migrates the hot and cold data to the cold layer automatically. The hot partitioning window enables users to define hot and cold boundaries based on the business scenario. Then, it migrates the data automatically once they are converted to cold data.

Cold data are stored in OSS, which is a remote storage system and accessed by the network, with high latency. For example, operations (such as determining whether a file exists) or getting metadata (such as the length of the file) are performed with access delays of milliseconds per interaction. Meanwhile, the bandwidth of OSS is limited. An account only provides GB-level bandwidth with 100,000 QPS. When it exceeds the limit, OSS will limit the data traffic. A large number of files are stored in data warehouses. If the access to OSS is not optimized, query exceptions may occur. For example, the query may involve millions of files, and getting the metadata will reach the limit of OSS QPS. This will eventually lead to query timeout and other problems. Therefore, it is necessary to optimize OSS access to ensure service availability and improve query performance. Metadata access optimization and data access optimization are introduced below:

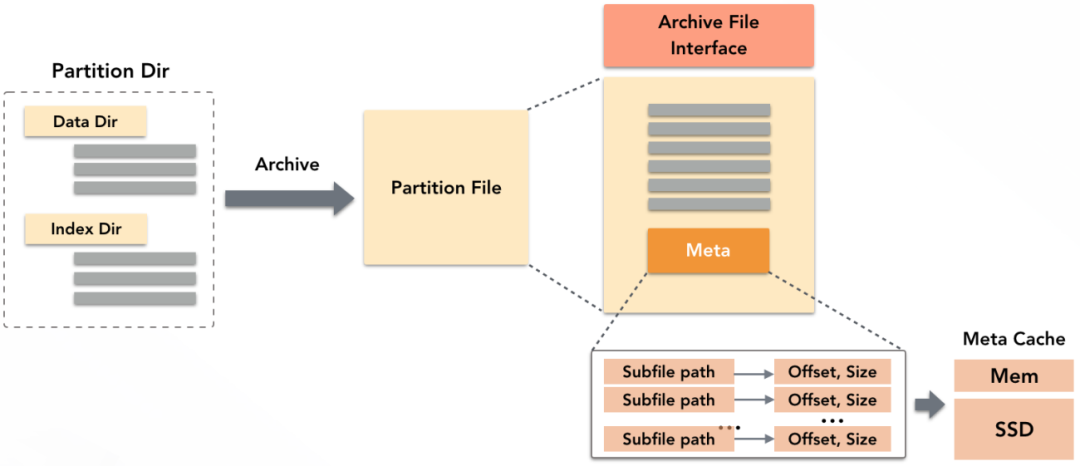

ADB stores quantities of data files and index files at the bottom layer like a data warehouse. ADB optimizes metadata access by archiving files. This means packing all files in a partition into an archive file and providing a POSIX class file access interface where the contents of files can be read.

The metadata of the archive file stores metadata, such as the offset and length of each sub-file. When reading, the metadata of the archive file is loaded first, and the metadata of all subfiles can be obtained within one interaction, which reduces the interaction times by a hundred-fold. ADB has created a small cache space in the memory of the storage node and on the SSD to cache the metadata of the archive file and accelerate the migration further. Once loaded, it does not need to access OSS to obtain the metadata. In addition, after archiving, all sub-files can be read with one input stream, avoiding the expenditure for opening an input stream for each sub-file.

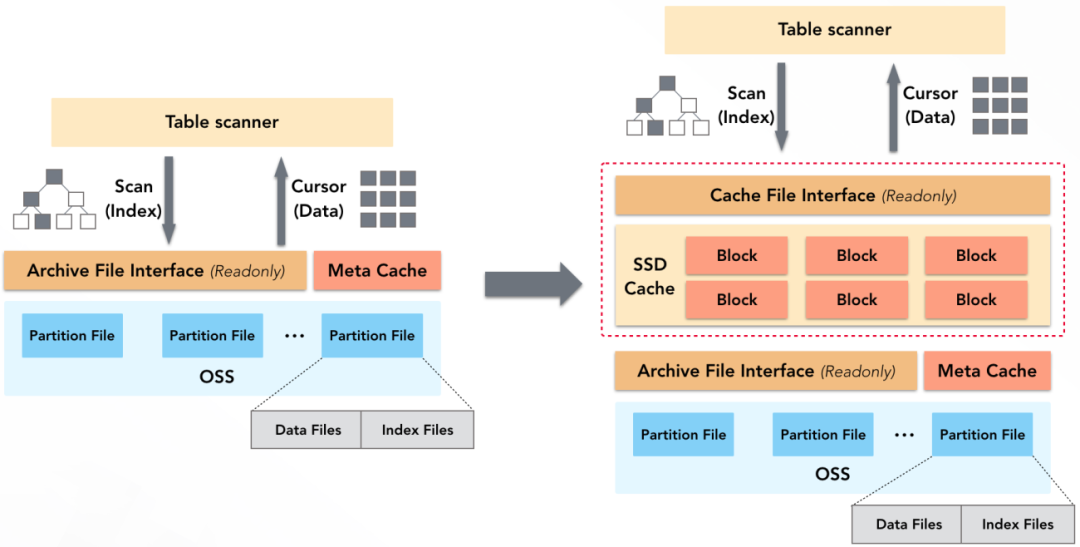

The files in OSS must be read to scan indexes or read data blocks in the query. However, the access performance and access bandwidth of OSS are limited. The ADB storage nodes use a space in the SSD automatically for the data Cache to accelerate the file reading. The upper layer of the Cache provides a file access interface of POSIX class, thus Table Scanner can access the content in the Cache the same way it would access a common file.

All accesses (such as index and data) to OSS in a query can be accelerated by the SSD Cache. The SSD accesses OSS only when the data are not in the Cache. ADB also made the following optimizations to Cache:

As data size grows in enterprises, storage costs become an important part of the enterprise budget. As the infrastructure of data storage and data management for enterprises, data warehouses have achieved a balance between low storage costs and high performance through tiered storage technology. This article uses AnalyticDB MySQL, a cloud-native data warehouse, as a prototype to address the key challenges in the tiered storage technology. It also introduces ways to solve the key problems, such as hot and cold data definitions, hot and cold data migration, and cold data access optimization through hot and cold policy definition, hot partition window, file archiving, and SSD Cache.

Deploy Application Stack Parse Server with MongoDB on Alibaba Cloud

Compilation Optimization: LLVM Code Generation Technology Details and Its Application in Databases

Alibaba Cloud Community - October 15, 2024

Apache Flink Community China - July 28, 2020

ApsaraDB - February 7, 2025

ApsaraDB - November 18, 2025

Alibaba Cloud MaxCompute - July 15, 2021

Apache Flink Community - February 24, 2025

Data Lake Storage Solution

Data Lake Storage Solution

Build a Data Lake with Alibaba Cloud Object Storage Service (OSS) with 99.9999999999% (12 9s) availability, 99.995% SLA, and high scalability

Learn More Hybrid Cloud Distributed Storage

Hybrid Cloud Distributed Storage

Provides scalable, distributed, and high-performance block storage and object storage services in a software-defined manner.

Learn More OSS(Object Storage Service)

OSS(Object Storage Service)

An encrypted and secure cloud storage service which stores, processes and accesses massive amounts of data from anywhere in the world

Learn More Storage Capacity Unit

Storage Capacity Unit

Plan and optimize your storage budget with flexible storage services

Learn MoreMore Posts by ApsaraDB