Alibaba Cloud Content Delivery Network (CDN) serves more than 300,000 customers through its 1,500+ nodes deployed across over 70 countries and regions on six continents with a bandwidth capacity greater than 120 Tbit/s, making Alibaba Cloud the only CDN provider in China that has been rated as "Global" by Gartner. As the business and node bandwidth grow, quality of service (QoS) optimization has become a topic that is worth discussing. In the Apsara User Group—CDN and Edge Computing Session at the Computing Conference 2018 in Hangzhou, Liu Tingwei, Senior Technical Expert at Alibaba Cloud, shared with the audience the technical practices of CDN QoS optimization.

Alibaba Cloud CDN originated from the CDN that Taobao built for itself in 2006. It originally provided Taobao with acceleration for small files such as images and web pages, and gradually came to serve all of Alibaba Group's internal business. In March 2014, Alibaba Cloud CDN was officially put into commercial use. Since commercialization, the team has paid close attention to product standardization: Alibaba Cloud CDN provides a full-featured console and a complete set of application programming interfaces (APIs), so users can start using it immediately after purchase, just like other cloud resources.

In the next few years, Alibaba Cloud CDN successively released the high-speed CDN 6.0, P2P CDN (PCDN), Secure CDN (SCDN), Dynamic Route for CDN (DCDN), and other related products. In March 2018, Alibaba Cloud was rated as "Global" by Gartner in its latest Market Guide for CDN Services. That summer, Alibaba Cloud CDN carried 70% of the live traffic for the 2018 FIFA World Cup.

However, as the business and node bandwidth grow, QoS problems have become more and more prominent. Liu said, "When discussing QoS optimization, we need to consider the entire ecosystem, combine technical and industry backgrounds, and search for every direction in which optimization is possible. This figure best describes how we felt at the beginning: We could see our goals ahead, but we could not find a path to them. Then, our team calmed down and made up our minds to solve the QoS problems completely."

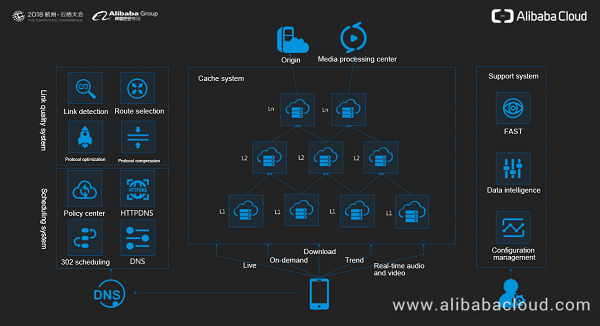

The following figure is a simplified logical architecture diagram of the CDN, which shows that the CDN is a complete ecosystem. Following a user's access path, we can see which subsystems are involved in each phase. First, the user's access request is sent to the scheduling subsystem, in which the carrier's local Domain Name System (LDNS) and the CDN's scheduling system jointly assign the nearest edge node to the user. Then, when the access request arrives at the edge node, the user obtains the corresponding live, on-demand, download, dynamic, or real-time audio and video content from the edge node. This part is implemented by the cache system. Next, the link quality system takes charge of data transmission between cache nodes, between cache nodes and the user, and between cache nodes and the origin. Finally, there is a business support system for configuration, data, and monitoring.

During his talk, Liu focused on scheduling, link quality, and Five-hundred-meter Aperture Spherical Telescope (FAST)-based monitoring. In just half an hour, he walked through the QoS optimization practices across the entire CDN ecosystem, and the audience said that they benefited a lot from his speech.

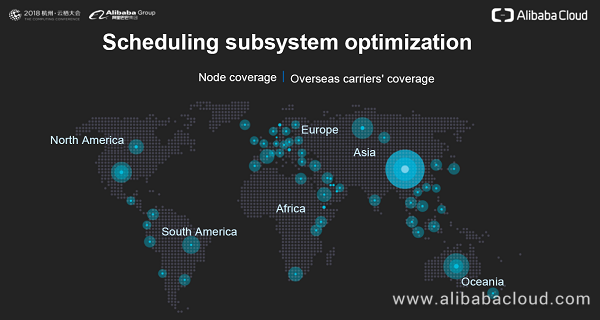

The problem to be solved in scheduling is how to dispatch users to healthy nodes that are nearest to them. The most critical factor is the node coverage capability, followed by scheduling and flow control.
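
The goal stated above can be illustrated with a minimal sketch. It assumes that each candidate node carries a measured latency, a health flag, and a load figure; the `Node` fields, the `max_load` threshold, and the node names are hypothetical and are not taken from Alibaba Cloud's scheduler.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    rtt_ms: float   # measured latency from the user's region (assumed input)
    healthy: bool   # result of the latest health check (assumed input)
    load: float     # current utilisation between 0.0 and 1.0 (assumed input)


def pick_node(nodes, max_load=0.9):
    """Return the lowest-latency node that is healthy and below max_load."""
    candidates = [n for n in nodes if n.healthy and n.load < max_load]
    if not candidates:
        return None  # nothing usable; the caller needs a fallback policy
    return min(candidates, key=lambda n: n.rtt_ms)


nodes = [
    Node("hangzhou-1", rtt_ms=5.0, healthy=False, load=0.2),
    Node("shanghai-1", rtt_ms=9.0, healthy=True, load=0.95),
    Node("nanjing-1", rtt_ms=12.0, healthy=True, load=0.4),
]
print(pick_node(nodes).name)  # nanjing-1
```

The closest node is skipped because it is unhealthy and the second closest because it is overloaded, which is the interplay between coverage, scheduling, and flow control that the rest of this section describes.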

Liu said that good node coverage does not depend solely on node construction. Site selection and measurement are critical to making nodes cover users better. Alibaba Cloud has been building overseas nodes on a large scale since 2017. So far, it has built over 300 overseas nodes to meet the acceleration requirements of users in China who are expanding overseas and of users outside China.

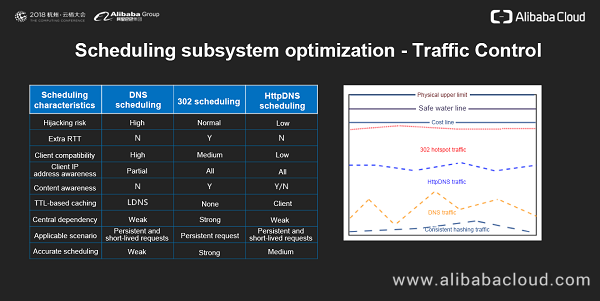

If a public LDNS or users' LDNS is configured incorrectly, users cannot be dispatched properly to the nearest node. Accordingly, the QoS deteriorates. To solve this problem on apps, Alibaba Cloud provides HttpDNS scheduling. For web pages where HttpDNS cannot be implemented, Alibaba Cloud solves this problem through 302 scheduling or correction. If a user is not dispatched to the nearest node because of LDNS problems, the edge node receiving the user request can perform 302 correction for large files to dispatch the user to the nearest node.
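
A rough sketch of the 302 correction decision follows. A redirect costs one extra round trip before any content is served, so the sketch redirects only misrouted requests for large files. The 1 MiB threshold, the host names, and both function names are assumptions for illustration; the 20 ms default is the per-redirect RTT quoted in the talk.

```python
LARGE_FILE_BYTES = 1024 * 1024  # hypothetical cut-off for a "large file"


def relative_overhead(base_ms, redirect_rtt_ms=20.0):
    """Extra latency of one 302 round trip as a fraction of the base response time."""
    return redirect_rtt_ms / base_ms


def correct_with_302(serving_node, nearest_node, content_length, path):
    """Return (status, headers) for a request that arrived at serving_node."""
    misrouted = serving_node != nearest_node
    if misrouted and content_length >= LARGE_FILE_BYTES:
        # Large transfer: one extra round trip is cheap next to the download.
        return 302, {"Location": f"http://{nearest_node}{path}"}
    # Small object, or already on the right node: serve locally.
    return 200, {}


print(correct_with_302("edge-b.example", "edge-a.example", 50 * 1024 * 1024, "/v.mp4"))
print(correct_with_302("edge-b.example", "edge-a.example", 1024, "/logo.png"))
print(f"{relative_overhead(10.0):.0%}")  # 200%
```

For a 10 ms image response, a 20 ms redirect triples the total time, whereas for a download that takes seconds the same 20 ms is negligible.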

"Another issue is LDNS profiling. We know that different LDNSs vary in processing polling, forwarding, and the time to live (TTL). The frontend and backend IP addresses of an LDNS are also in different proportions. We cannot request all LDNSs to resolve IP addresses in accordance with our requirements. However, we can adjust our own resolution policy based on the LDNS characteristics. Some people think that we do not need to care about LDNS problems because we can use 302 correction. Can we just rely on 302 correction?" Liu said, "The answer is no. The round trip time (RTT) of each 302 scheduling is about 20 ms, which cannot be underestimated. When we optimize the QoS of small files such as images, we can improve the QoS only by 1–2 ms. For example, the response time for processing an image of 1 KB is around 10–15 ms. If another 20 ms is added, the performance deteriorates by half. As a result, the user conversion rate of our customers may drop by 10%, which is unbearable."

One of the greatest differences between Alibaba Cloud CDN and traditional CDNs lies in automated and intelligent flow control. With more than 1,000 nodes, hundreds of thousands of machines, and over 100 Tbit/s of traffic, how do we dispatch traffic evenly to the nodes that are optimal for users? This cannot be done manually. Moreover, only two O&M engineers are responsible for scheduling across the entire Alibaba Cloud CDN. This means that Alibaba Cloud CDN must be highly automated and intelligent to dispatch traffic accurately. There are two optimization schemes: One is offline planning, through which we predict and dispatch traffic based on the trend of historical traffic; the other is real-time scheduling, through which we plan traffic based on the characteristics of burst traffic.

How do we achieve offline and real-time flow control? We compare each node to a bottle and scheduling traffic to sand. Then, DNS traffic can be compared to big stones, whereas HttpDNS traffic and 302 traffic are more like sand. In this context, the scheduling granularity can be specific to each case. Offline planning focuses on large chunks of DNS traffic. Real-time flow control deals with HttpDNS traffic and 302 traffic. The combination of offline and real-time flow control ensures that the water level is balanced on each node and that each user is dispatched to the optimal node. Alibaba Cloud's flow control system not only well supports the normal CDN business, but also withstands the burst traffic in events such as the 11.11 Global Shopping Festival, CCTV Spring Festival Gala, and FIFA World Cup.
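
The bottle, stones, and sand analogy can be sketched as a two-pass greedy fill. This is only an illustration of the idea, not Alibaba Cloud's flow control algorithm: the function name, the units (Gbit/s), the `step` granularity, and the sample numbers are all assumptions, and the sketch does not reject placements that exceed a node's capacity.

```python
def plan_traffic(capacity, dns_blocks, fine_traffic, step=1.0):
    """Return the water level (load / capacity) of each node after both passes.

    capacity:     {node: capacity in Gbit/s}
    dns_blocks:   coarse DNS traffic chunks in Gbit/s (the "stones")
    fine_traffic: total HttpDNS and 302 traffic in Gbit/s (the "sand")
    """
    load = {node: 0.0 for node in capacity}

    def level(node):
        return load[node] / capacity[node]

    # Offline planning: place the stones first, largest chunk to the emptiest bottle.
    for block in sorted(dns_blocks, reverse=True):
        load[min(load, key=level)] += block

    # Real-time control: pour sand in small grains into the lowest water level.
    remaining = fine_traffic
    while remaining > 1e-9:
        grain = min(step, remaining)
        load[min(load, key=level)] += grain
        remaining -= grain

    return {node: level(node) for node in load}


capacity = {"a": 100.0, "b": 100.0, "c": 50.0}
levels = plan_traffic(capacity, dns_blocks=[60.0, 40.0, 30.0], fine_traffic=45.0)
print({node: round(value, 2) for node, value in levels.items()})
```

The stones alone leave the nodes at uneven levels because they cannot be split; the sand is what evens the levels out, which is why fine-grained HttpDNS and 302 traffic suits real-time control.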

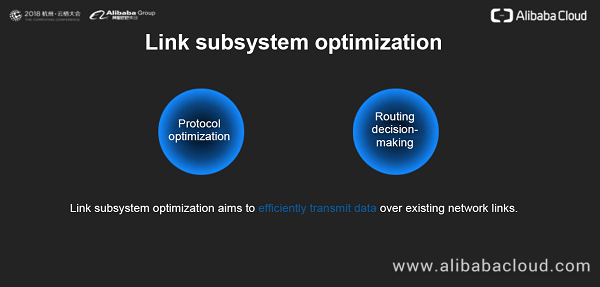

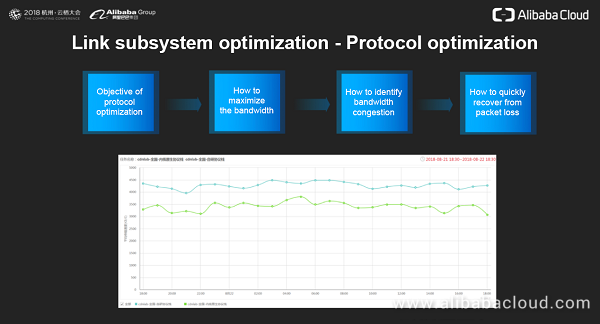

Next, Liu turned to link quality optimization. The problem to be solved by the link subsystem is how to efficiently transmit data over existing network links.

What is the objective of protocol stack optimization? The optimization direction varies in different scenarios, because we need to consider the balance of costs, performance, and fairness. For the user-oriented link, Alibaba Cloud does its utmost to ensure users' QoS even at the expense of some costs. Alibaba Cloud has improved the performance of the protocol stack by 30% by adjusting the congestion algorithm and packet loss detection algorithm.

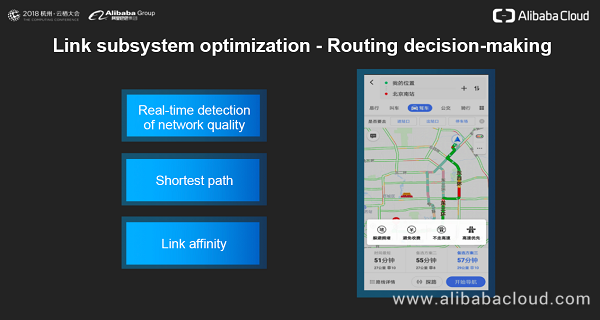

Liu used a vivid metaphor, "If the CDN node network is compared to highways, the routing decision-making is implemented by AutoNavi. For each dynamic request from a user, the link subsystem decides on the optimal path from the nearest access point to the origin."

When we open AutoNavi to plan a route from the Alibaba Center to the Beijing South Railway Station, we can find that:

First, AutoNavi marks the congestion level on each road of each route, which is equivalent to real-time detection of network quality.

Second, AutoNavi has planned three routes for us, which is equivalent to route selection. The CDN also selects three shortest paths between the origin and the user.

Third, AutoNavi gives options such as high-speed priority and congestion avoidance, as well as the restricted areas under the distinctive license plate policy in Beijing. This feature is equivalent to link affinity. For example, if the origin is located in China Mobile Tietong, the CDN is then connected to China Mobile Tietong.
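
The route selection step can be sketched as a search for the three cheapest paths over a graph whose edge weights come from real-time probing. The sketch below enumerates every simple path exhaustively, which is only practical for a handful of nodes; a production system would more likely use a k-shortest-paths algorithm such as Yen's. The graph, node names, and RTT values are made up, and link affinity is not modelled.

```python
def best_paths(graph, src, dst, k=3):
    """Return up to k lowest-cost simple paths as (total_rtt_ms, [nodes]) tuples.

    graph: {node: {neighbor: rtt_ms}}, with weights taken from link probing.
    """
    found = []

    def walk(node, path, cost):
        if node == dst:
            found.append((cost, path))
            return
        for nxt, rtt in graph.get(node, {}).items():
            if nxt not in path:  # never revisit a node, so paths stay simple
                walk(nxt, path + [nxt], cost + rtt)

    walk(src, [src], 0.0)
    return sorted(found)[:k]


graph = {
    "edge": {"relay-a": 10.0, "relay-b": 15.0, "origin": 60.0},
    "relay-a": {"relay-b": 5.0, "origin": 30.0},
    "relay-b": {"origin": 20.0},
}
for cost, path in best_paths(graph, "edge", "origin"):
    print(cost, " -> ".join(path))
```

In this made-up topology the direct link to the origin costs 60 ms and does not make the top three, which mirrors how a navigation app may prefer a longer but less congested road.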

The FAST system aims to detect and diagnose QoS problems in a timely manner, and automatically process the problems based on historical experience. It mainly provides two features: The monitoring feature detects problems in a timely manner, while the alarm processing feature automatically processes the detected problems based on historical experience.

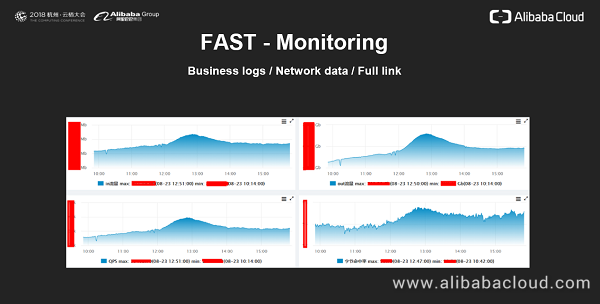

The core of monitoring is data sources. When talking about data sources, we may first think of business logs, which record data such as the business bandwidth, queries per second (QPS), hit rate, and request processing time of a domain name. We can use such data to perform basic business monitoring. However, in a scenario where the QoS of a carrier declines in a certain area but the business remains normal and the user only perceives that the download speed becomes slower, this QoS problem cannot be identified based on business logs. Instead, data at the network level, such as the packet loss rate, RTT, connection establishment time, and average request size, needs to be measured.
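
The scenario above, in which business metrics look normal while the network degrades, can be sketched as a comparison of network-level samples against a baseline. The metric names, thresholds, and sample values are hypothetical.

```python
def detect_qos_drop(baseline, current, rtt_ratio=1.5, loss_delta=0.02):
    """Return the network metrics that degraded relative to the baseline."""
    reasons = []
    if current["rtt_ms"] > baseline["rtt_ms"] * rtt_ratio:
        reasons.append("rtt")
    if current["loss_rate"] - baseline["loss_rate"] > loss_delta:
        reasons.append("packet_loss")
    return reasons


# Bandwidth, QPS, and hit rate may be unchanged while these values drift.
baseline = {"rtt_ms": 30.0, "loss_rate": 0.005}
current = {"rtt_ms": 70.0, "loss_rate": 0.04}
print(detect_qos_drop(baseline, current))  # ['rtt', 'packet_loss']
```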

All of this network data is collected at the kernel level. We can then use it to identify basic QoS problems in the download business. If a customer encounters stalling during a live broadcast, how can the problem be identified through monitoring? In this scenario, full-link monitoring is required. Alibaba Cloud implements a full-link and global data monitoring system for the live broadcast business.

With data in hand, we all know that we can associate data with alarms. Is this enough?

As the business develops, alarms are configured for various data sources. As a result, the system is flooded with more and more alarms, which cannot be effectively processed. Therefore, the primary problem to be solved is how to ensure the accuracy and convergence of alarms.

How do we conduct alarm convergence?
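
One common approach, shown here as an assumption rather than as the method used by FAST, is to merge alarms that share a region and carrier within the same time window into a single incident. The field names and the five-minute window are hypothetical.

```python
from collections import defaultdict


def converge(alarms, window_s=300):
    """Merge alarms with the same region, carrier, and time window into incidents.

    alarms: list of dicts with integer 'ts' (seconds), 'region', 'carrier', 'metric'.
    """
    buckets = defaultdict(list)
    for alarm in alarms:
        key = (alarm["region"], alarm["carrier"], alarm["ts"] // window_s)
        buckets[key].append(alarm)

    incidents = []
    for (region, carrier, slot), group in sorted(buckets.items()):
        incidents.append({
            "region": region,
            "carrier": carrier,
            "start": slot * window_s,
            "count": len(group),
            "metrics": sorted({a["metric"] for a in group}),
        })
    return incidents


alarms = [
    {"ts": 10, "region": "east", "carrier": "telecom", "metric": "rtt"},
    {"ts": 40, "region": "east", "carrier": "telecom", "metric": "loss"},
    {"ts": 200, "region": "east", "carrier": "telecom", "metric": "rtt"},
    {"ts": 50, "region": "north", "carrier": "mobile", "metric": "rtt"},
]
for incident in converge(alarms):
    print(incident)
```

Four raw alarms collapse into two incidents, each listing the metrics involved, so an operator sees one item per affected region and carrier.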

After alarm convergence is conducted, we may still find that manual processing is not timely enough. In this case, the automated processing capability is required. Automated processing actually relies on the transformation of manual processing experience into system capabilities. That is, we can train the system with the common troubleshooting methods, data, and means to routinize the troubleshooting procedure.

For example, live broadcast is very sensitive to stalling, and it also raises high requirements for the response time. We can formulate a procedure based on the troubleshooting means and processing of stalling problems. Once a problem is detected, an alarm is directly generated to inform the system of the occurrence, cause, and repair strategy of this problem.

In addition, we need to be aware of the following issues:

First, the traps of automated processing. We cannot be so naive as to believe that everything can be automated, because simple automated processing often sets huge traps in the system. Take the isolation of faulty nodes as an example. If 1,000 out of 1,500 nodes are detected as faulty, what should we do? Can we take the faulty nodes offline for repair and simply rely on automated processing? If we do so, the remaining 500 nodes will be overwhelmed by traffic. We must set a fuse (a circuit breaker) to keep automated processing controllable.

Second, the completeness of automated processing. Automated processing cannot be a simple repetition of manual experience. The system must be able to learn on its own. With machine learning, the system can use the abnormal data related to alarms to develop its own capability to handle unknown problems.
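
The fuse described in the first point can be sketched as a cap on how many nodes automation may isolate at once. The 10% limit and the function name are assumptions; the 1,000 of 1,500 figure is the example from the talk.

```python
def nodes_to_isolate(faulty, total_nodes, max_fraction=0.1):
    """Return (nodes automation may isolate, whether the fuse tripped)."""
    if len(faulty) > total_nodes * max_fraction:
        # Too many nodes flagged at once: the detector itself may be wrong,
        # and isolating them all would overload the rest. Escalate to a human.
        return [], True
    return list(faulty), False


mass_failure = [f"node-{i}" for i in range(1000)]
print(nodes_to_isolate(mass_failure, total_nodes=1500))           # ([], True)
print(nodes_to_isolate(["node-7", "node-42"], total_nodes=1500))  # (['node-7', 'node-42'], False)
```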

In addition to pragmatic technologies, Liu also shared some specific customer examples to deepen the audience's understanding.

The first example is AirAsia. Alibaba Cloud provides AirAsia with a global DCDN solution, which provides route optimization and tunneling services for dynamic data. The solution has helped AirAsia increase the response speed by 150%.

The second example is Tokopedia, the biggest online sales platform for customer-to-customer (C2C) business in Indonesia. Alibaba Cloud provides Tokopedia with a full-link Hypertext Transfer Protocol Secure (HTTPS) SCDN solution, which guarantees secure payments and achieves acceleration by more than 100%. Taking advantage of the Auto Scaling feature of Alibaba Cloud CDN, this solution has helped Tokopedia easily handle burst traffic dozens of times higher than usual during multiple sales promotions.

The third example is Toutiao, the fastest growing mobile Internet product in China. Besides Toutiao, the customer also offers TikTok (known as Douyin in China), Vigo Video (known as Huoshan in China), and other popular products based on its leading technical capabilities in the country. Alibaba Cloud CDN works together with the Toutiao technical team to establish an end-to-end (E2E) short video quality monitoring system. After overall optimization, the sum of the video stalling rate, interruption rate, and failure rate has been lowered to less than 1%.

The last example is Huya, a leading interactive live broadcast platform in China. Alibaba Cloud CDN and Huya have jointly implemented E2E full-link monitoring for live broadcast. The solution can monitor and locate live broadcast problems and causes in real time, to ensure users' smooth experience of live broadcast on Huya.

At the end of the speech, Liu said, "Although we have done a lot of work to optimize the QoS, there is still a long way to go. We welcome all experts to join us and work together to build the best CDN in the world!"
