By Youyi

Alibaba has many years of production experience with the hybrid deployment (co-location) of workloads under SLO-aware scheduling, and its practice is among the most mature in the industry. SLO-aware workload scheduling places different types of workloads on the same node and runs them at different resource demand levels so that each one meets its service level objective (SLO), which improves resource utilization. This article focuses on the relevant technical details and usage methods so that users can benefit from SLO-aware scheduling.

As a general computing resource hosting framework, Kubernetes hosts multiple types of workloads, including online services, big data, real-time computing, and AI. From the perspective of the resource quality that each business requires, these workloads can be divided into Latency Sensitive (LS) and Best Effort (BE).

For the LS type, a reliable service usually applies for a large resource request and limit to keep its resources stable, so that it can cope with sudden business traffic and with the traffic growth caused by disaster recovery in a data center. This is the main reason why the resource allocation rate of a Kubernetes cluster easily exceeds 80% while the utilization rate stays below 20%. It is also the reason why Kubernetes introduces the BestEffort QoS type.

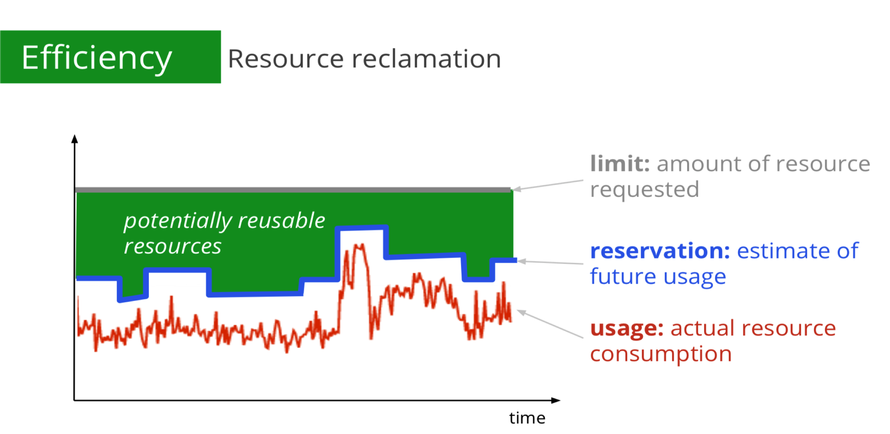

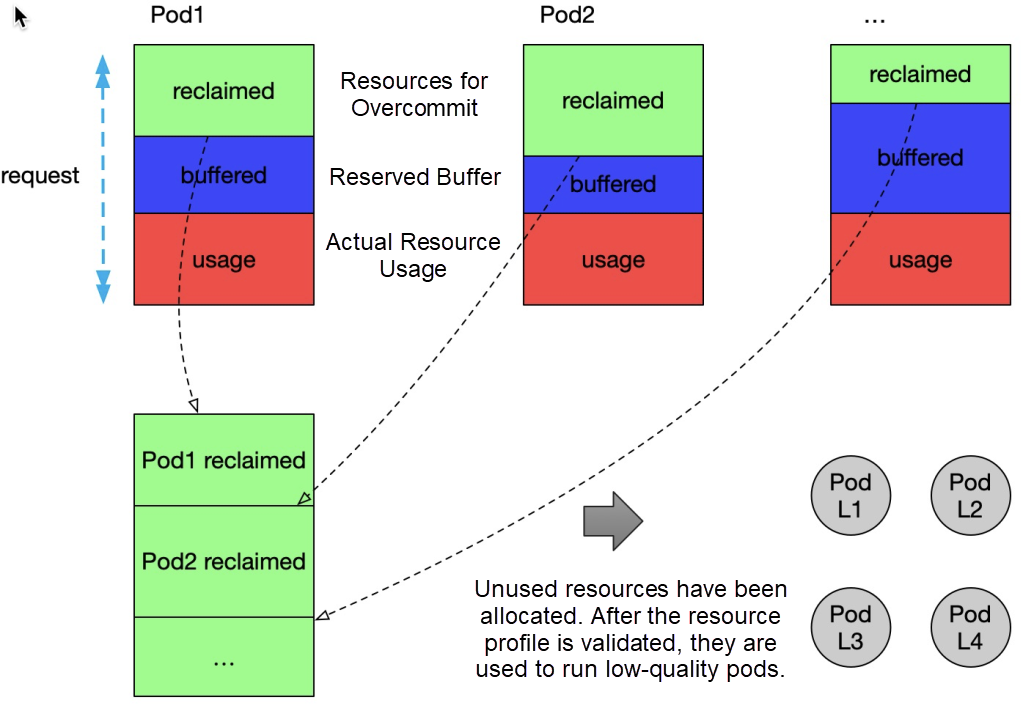

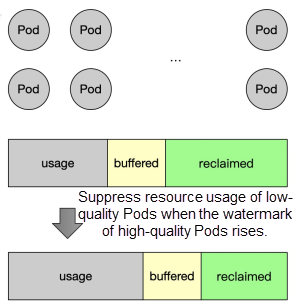

To make full use of these allocated but unused resources, we define three quantities in the figure: the red line is usage; the gap between the red line and the blue line, which is the reserved part of the resources, is buffered; and the area covered in green is reclaimed.

The reclaimed part represents the amount of resources available for dynamic overcommitment: ∑ reclaimed (Guaranteed/Burstable), that is, the sum of the reclaimed resources of all Guaranteed and Burstable Pods. Because workloads differ in the resource quality they require, allocating these idle resources to BE-type businesses improves the overall resource utilization of the cluster.
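As a rough illustration of the sum above, the following sketch adds up the reclaimable CPU of Guaranteed and Burstable Pods. The per-Pod formula (request minus usage minus a buffered reserve, floored at zero), the `buffer_percent` parameter, and all names are assumptions made for illustration; the actual calculation in the suite may differ.

```python
from dataclasses import dataclass


@dataclass
class PodCpu:
    qos_class: str      # Kubernetes QoS class: "Guaranteed", "Burstable", or "BestEffort"
    request_milli: int  # CPU request in milli-cores
    usage_milli: int    # measured CPU usage in milli-cores


def reclaimed_milli(pods, buffer_percent=10):
    """Sum reclaimable CPU over Guaranteed/Burstable pods.

    buffer_percent is a hypothetical safety margin: the share of each
    request kept in reserve (the "buffered" part) on top of usage.
    """
    total = 0
    for pod in pods:
        if pod.qos_class not in ("Guaranteed", "Burstable"):
            continue  # BestEffort pods hold no request to reclaim from
        buffered = pod.request_milli * buffer_percent // 100
        total += max(0, pod.request_milli - pod.usage_milli - buffered)
    return total


pods = [
    PodCpu("Guaranteed", 8000, 1500),  # mostly idle: large reclaimable share
    PodCpu("Burstable", 4000, 3900),   # busy: nothing left after the buffer
    PodCpu("BestEffort", 0, 700),      # ignored by the sum
]
print(reclaimed_milli(pods))
```

Flooring each term at zero keeps a busy Pod from cancelling out the idle capacity of its neighbors.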

The Alibaba Cloud Container Service for Kubernetes (ACK) differentiated SLO extension suite quantifies these overcommitted resources: it dynamically calculates the current amount of reclaimed resources and updates the Kubernetes node metadata in real time in the form of standard extended resources.

    # Node
    status:
      allocatable:
        # milli-core
        alibabacloud.com/reclaimed-cpu: 50000
        # bytes
        alibabacloud.com/reclaimed-memory: 50000
      capacity:
        alibabacloud.com/reclaimed-cpu: 50000
        alibabacloud.com/reclaimed-memory: 100000

When a low-priority BE task uses reclaimed resources, it only needs to add the qos label and the reclaimed-resource amounts to the Pod. qos = LS corresponds to a high priority, and qos = BE corresponds to a low priority. reclaimed-cpu and reclaimed-memory state the specific resource demand of the BE Pod.

# Pod

metadata:

label:

alibabacloud.com/qos: BE # {BE, LS}

spec:

containers:

- resources:

limits:

alibabacloud.com/reclaimed-cpu: 1000

alibabacloud.com/reclaimed-memory: 2048

requests:

alibabacloud.com/reclaimed-cpu: 1000

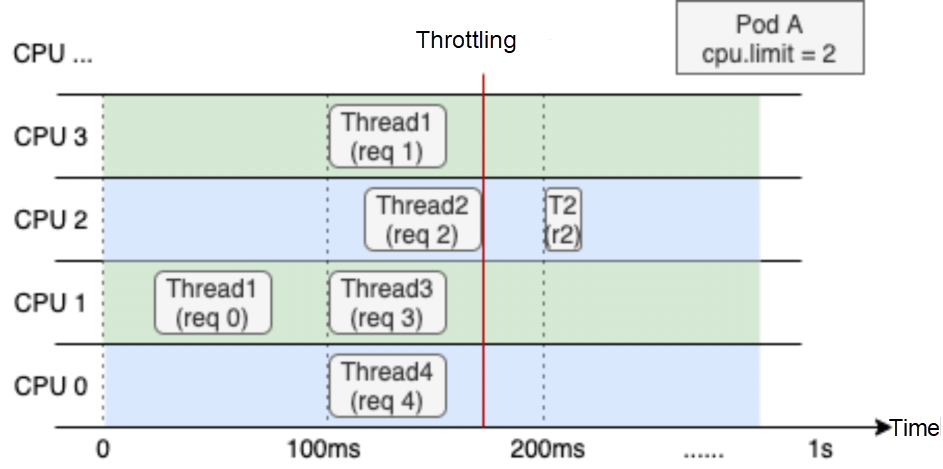

alibabacloud.com/reclaimed-memory: 2048Kubernetes allows you to specify CPU limits, which can be reused based on time-sharing. If you specify a CPU limit for a container, the OS limits the amount of CPU resources that can be used by the container within a specific time. For example, you set the CPU limit of a container to 2. The OS kernel limits the CPU time slices that the container can use to 200 milliseconds within each 100-millisecond period.
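The arithmetic behind this example can be written out in a few lines. This is a minimal sketch that assumes the default CFS period of 100 milliseconds; the function name is illustrative.

```python
CFS_PERIOD_US = 100_000  # assumed default CFS period: 100 ms, in microseconds


def cfs_quota_us(cpu_limit, period_us=CFS_PERIOD_US):
    """CPU time in microseconds that a container may consume per period."""
    return int(cpu_limit * period_us)


# A limit of 2 CPUs yields 200 ms of CPU time per 100 ms period,
# which can be spent by several threads running in parallel.
print(cfs_quota_us(2))
# A limit of half a CPU yields 50 ms per 100 ms period.
print(cfs_quota_us(0.5))
```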

The following figure shows the thread allocation of a web application container that runs on a node with four vCPUs. The CPU limit of the container is set to 2. The overall CPU utilization within the last second is low. However, Thread 2 cannot be resumed until the third 100-millisecond period starts because CPU throttling is enforced somewhere in the second 100-millisecond period. This increases the response time (RT) and causes long-tail latency problems in containers.

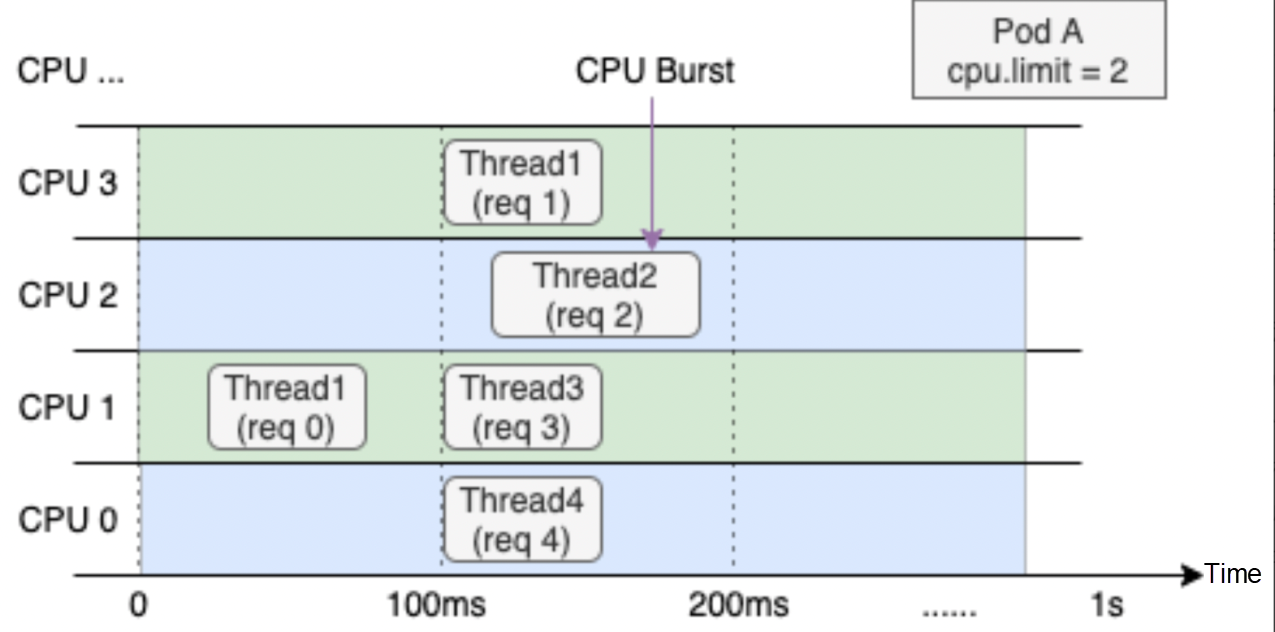

The CPU Burst mechanism can effectively solve the long-tail RT problem of latency-sensitive applications. It allows containers to accumulate CPU time slices while they are idle and to spend them during bursts, which improves container performance. Alibaba Cloud Container Service fully supports the CPU Burst mechanism. For kernel versions that do not support CPU Burst, ACK detects CPU throttling events and dynamically adjusts the CPU limit to achieve a similar effect.
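The effect of accumulating idle time slices can be seen in a small simulation. The sketch below is a simplified model, not the kernel implementation: it assumes that unused quota is carried over from one period to the next up to a cap (`burst_ms`), and that work which does not fit into a period is deferred to the next one. All names and the sample demand values are illustrative.

```python
def throttled_periods(demand_ms, quota_ms, burst_ms=0):
    """Count periods in which demand exceeds the CPU time available.

    demand_ms: CPU time the container wants in each period.
    quota_ms:  CPU time granted per period (limit x period length).
    burst_ms:  cap on unused quota carried over from idle periods;
               0 models plain bandwidth control without burst.
    """
    banked = 0     # unused quota saved from earlier periods
    backlog = 0    # work deferred from throttled periods
    throttled = 0
    for demand in demand_ms:
        wanted = demand + backlog
        available = quota_ms + banked
        used = min(wanted, available)
        backlog = wanted - used
        if backlog > 0:
            throttled += 1  # the container ran out of CPU time this period
        banked = min(burst_ms, available - used)
    return throttled


# Mostly idle traffic with two short bursts above the 200 ms quota.
demand = [20, 20, 350, 20, 20, 300, 20, 20, 20, 20]
print(throttled_periods(demand, quota_ms=200))
print(throttled_periods(demand, quota_ms=200, burst_ms=200))
```

With these sample values the container is throttled in two periods without burst and in none with a 200 ms burst allowance, even though its average utilization is the same in both runs.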

As host hardware becomes more powerful, the container deployment density of a single node increases. The resulting problems, such as inter-process CPU contention and cross-NUMA memory access, also become more serious and affect application performance. On multi-core nodes, processes are often migrated between cores while they run. Because the performance of some applications is sensitive to CPU context switching, the kubelet provides a static policy that allows Guaranteed Pods to use CPU cores exclusively. However, this policy has several shortcomings.

ACK implements topology-aware scheduling and flexible core binding policies based on the Scheduling framework. This provides better performance for CPU-sensitive workloads. ACK topology-aware scheduling can be adapted to all QoS types and can be enabled on-demand in the Pod dimension. At the same time, you can select the optimal combination of node and CPU topology in the whole cluster.

As described in the resource model, the total amount of reclaimed resources on a node changes dynamically with the actual resource usage of the high-priority containers. To ensure the running quality of LS containers, the number of CPUs actually available to BE containers on the node depends on the load of the LS containers.

As the figure shows, when the resource usage of LS containers increases, the number of CPUs available to BE containers decreases accordingly, because total usage is capped by the load watermark (the red line). At the system level, this is reflected in the CPU binding range of the container cgroup and the allocation limit of CPU time slices.

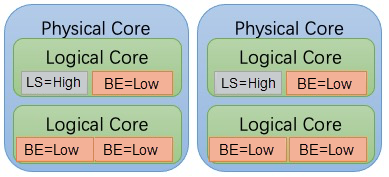
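The relationship between LS load and the CPU left for BE containers can be sketched as a simple subtraction. The `threshold_percent` watermark and the formula are assumptions for illustration; the source does not give the exact calculation.

```python
def be_cpu_allowance_milli(node_capacity_milli, ls_usage_milli,
                           threshold_percent=65, floor_milli=0):
    """CPU that BE containers may use under the load watermark red line.

    threshold_percent is a hypothetical watermark: total node CPU usage
    (LS + BE) should stay at or below this share of node capacity.
    """
    red_line = node_capacity_milli * threshold_percent // 100
    return max(floor_milli, red_line - ls_usage_milli)


# On a 32-core node, the BE allowance shrinks as LS usage grows.
for ls_usage in (8000, 16000, 24000):
    print(ls_usage, be_cpu_allowance_milli(32000, ls_usage))
```

A result like this would then be translated into a cpuset range or a CPU quota for the BE cgroup.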

Alibaba Cloud Linux 2 supports the Group Identity feature starting from kernel version kernel-4.19.91-24.al7. You can distinguish the priorities of process tasks in containers by setting different identities for the containers. The kernel has the following features when scheduling tasks with different priorities:

Low-priority tasks do not affect the performance of high-priority tasks. It is mainly reflected in:

The group identity feature allows you to set an identity for each container to prioritize tasks in the container. The group identity feature relies on a dual red-black tree architecture. A low-priority red-black tree is added based on the red-black tree of the Completely Fair Scheduler (CFS) scheduling queue to store low-priority tasks.

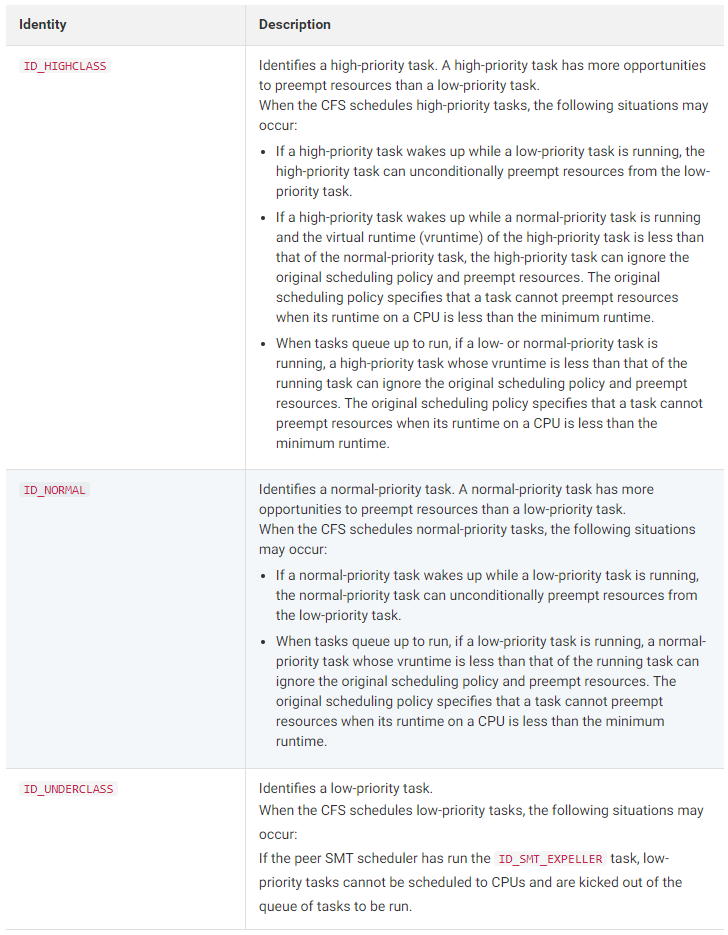
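The dual-tree idea can be modeled with two ordered queues. In the toy sketch below, heaps stand in for the red-black trees, and the low-priority queue is consulted only when the high-priority queue is empty. That strict rule is a simplifying assumption, and the class and task names are illustrative.

```python
import heapq


class DualQueueScheduler:
    """Toy model: two run queues ordered by virtual runtime.

    Heaps stand in for the kernel's red-black trees; both structures
    give cheap access to the task with the smallest vruntime.
    """

    def __init__(self):
        self.high = []  # tasks from high-priority (LS) containers
        self.low = []   # tasks from low-priority (BE) containers

    def enqueue(self, name, vruntime, low_priority=False):
        queue = self.low if low_priority else self.high
        heapq.heappush(queue, (vruntime, name))

    def pick_next(self):
        """Return the next task name, or None if both queues are empty."""
        for queue in (self.high, self.low):
            if queue:
                return heapq.heappop(queue)[1]
        return None


sched = DualQueueScheduler()
sched.enqueue("spark-executor", vruntime=5, low_priority=True)
sched.enqueue("nginx-worker-1", vruntime=40)
sched.enqueue("nginx-worker-2", vruntime=30)
order = [sched.pick_next() for _ in range(4)]
print(order)
```

Although the BE task has the smallest virtual runtime, it runs only after both LS tasks have been picked.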

When the kernel schedules the tasks that have identities, the kernel processes the tasks based on their priorities. The following table describes the identities in descending order of priority:

Please refer to the documentation on the official website for more information about the kernel Group Identity capabilities.

In a bare metal environment, the Last Level Cache (LLC) and Memory Bandwidth Allocation (MBA) of a container can be dynamically adjusted. Limiting these fine-grained resources for BE container processes avoids performance interference with LS containers.

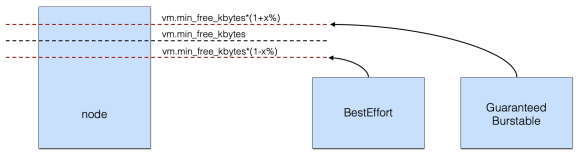

Global memory reclaim has a great impact on system performance in the Linux kernel. When latency-sensitive (LS) tasks and resource-consuming best effort (BE) tasks are deployed together, a BE task may request a large amount of memory in an instant, which causes the free memory of the system to drop to the global minimum watermark (global wmark_min). As a result, all tasks in the system enter direct memory reclaim, which causes performance jitter for latency-sensitive tasks. Neither global kswapd backend reclaim nor memcg backend reclaim can resolve this problem.

To address this, Alibaba Cloud Linux 2 provides the memcg global minimum watermark rating feature. Relative to the global wmark_min, the minimum watermark that applies to BE containers is moved up so that they enter direct memory reclaim earlier, and the minimum watermark that applies to LS containers is moved down so that they avoid direct memory reclaim as much as possible. When a BE task requests a large amount of memory in an instant, the raised watermark throttles the task for a short period, which prevents LS tasks from entering direct memory reclaim. After a certain amount of memory has been reclaimed by global kswapd backend reclaim, the BE task is no longer throttled.
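The following sketch shows how a per-container adjustment could shift the effective minimum watermark in each direction. The `adj_percent` parameter and its scaling (negative values as a share of the range below the global wmark_min, positive values as a share of the range between the global minimum and low watermarks) are assumptions for illustration; consult the official documentation for the actual interface.

```python
def effective_wmark_min(global_min, global_low, adj_percent):
    """Per-container minimum watermark after a percentage adjustment.

    adj_percent < 0 lowers the watermark by that share of [0, global_min],
    as one would for LS containers. adj_percent > 0 raises it by that share
    of [global_min, global_low], as one would for BE containers.
    The parameter name and scaling are illustrative assumptions.
    """
    if adj_percent < 0:
        return global_min + global_min * adj_percent // 100
    return global_min + (global_low - global_min) * adj_percent // 100


def enters_direct_reclaim(free_pages, wmark_min):
    """A task allocates synchronously reclaimed memory below its watermark."""
    return free_pages < wmark_min


ls_wmark = effective_wmark_min(1000, 1250, -25)  # moved down for LS
be_wmark = effective_wmark_min(1000, 1250, 50)   # moved up for BE
free_pages = 900
print(ls_wmark, enters_direct_reclaim(free_pages, ls_wmark))
print(be_wmark, enters_direct_reclaim(free_pages, be_wmark))
```

With 900 free pages in this example, the BE container is already throttled by direct reclaim while the LS container still allocates without it.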

Please see the official website documentation for more information about the global memory minimum watermark rating capability.

After the global minimum watermark rating is applied, the memory resources of LS containers are no longer affected by global memory reclaim. However, direct memory reclaim is still triggered inside a container when the memory of that container is tight. Direct memory reclaim is synchronous and occurs in the memory allocation context, so it affects the performance of the processes running in that container.

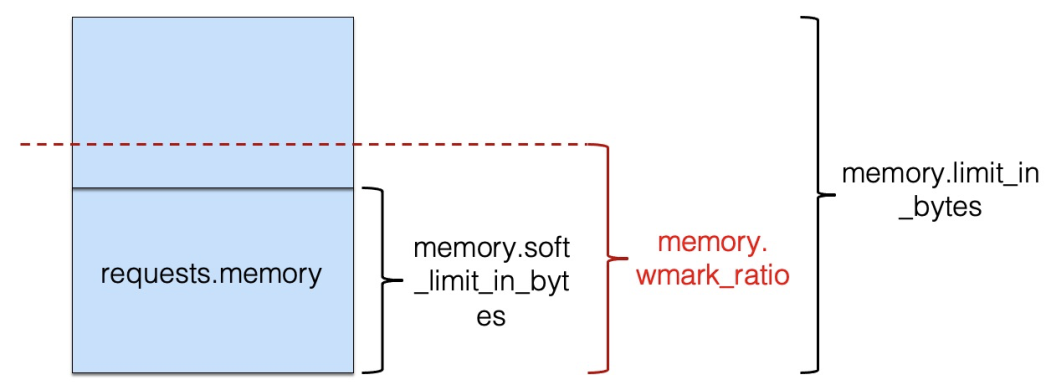

Alibaba Cloud Linux 2 provides the backend asynchronous reclaim feature for container granularity to resolve this problem. This feature differs from the global kswapd kernel thread because this feature uses the workqueue mechanism instead of creating a corresponding memcg kswapd kernel thread. Additionally, memcg control interfaces (memory.wmark_ratio) are added in each of the cgroup v1 and cgroup v2 interfaces.

When the memory usage of a container exceeds the threshold set by memory.wmark_ratio, the kernel automatically starts asynchronous memory reclaim ahead of direct memory reclaim, which improves the running quality of services.

Please see the documentation on the official website for more information about the backend asynchronous reclaim of container memory.
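The trigger condition can be sketched as a comparison against a threshold derived from the container memory limit. The sketch assumes that memory.wmark_ratio is a percentage of the memory limit and that a value of 0 disables the feature; both points, and the function names, are assumptions to verify against the official documentation.

```python
def async_reclaim_threshold(limit_bytes, wmark_ratio):
    """Usage level above which asynchronous reclaim starts.

    Assumes wmark_ratio is a percentage of the memory limit and that
    0 means the feature is disabled (returns None in that case).
    """
    if wmark_ratio == 0:
        return None
    return limit_bytes * wmark_ratio // 100


def should_start_async_reclaim(usage_bytes, limit_bytes, wmark_ratio):
    """True when usage has crossed the asynchronous reclaim threshold."""
    threshold = async_reclaim_threshold(limit_bytes, wmark_ratio)
    return threshold is not None and usage_bytes > threshold


GIB = 1024 ** 3
# A container with a 4 GiB limit and a ratio of 80.
print(should_start_async_reclaim(2 * GIB, 4 * GIB, 80))
print(should_start_async_reclaim(7 * GIB // 2, 4 * GIB, 80))
```

Starting reclaim before the limit is reached leaves headroom, so allocations are less likely to stall in synchronous direct reclaim.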

The preceding sections describe a variety of CPU suppression capabilities for low-priority offline containers, which protect the resource usage of LS businesses. However, a BE task that is suppressed runs more slowly, and evicting it and rescheduling it to an idle node can make it finish sooner. In addition, if a BE task holds resources such as kernel global locks while it is suppressed, continuous CPU starvation may cause priority inversion and affect the performance of LS applications.

Therefore, the differentiated SLO suite provides eviction capabilities based on CPU resource satisfaction. When the resource satisfaction of BE containers continues to fall below a certain watermark, containers that use reclaimed resources will be evicted in sequence according to the priority from low to high.
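One way to picture this eviction rule is the sketch below. It defines satisfaction as the CPU currently available to BE containers divided by the CPU they requested, and evicts the lowest-priority Pods first until the remaining Pods reach the threshold. The definition, the 60% threshold, and all names are assumptions for illustration, and the sketch leaves out the requirement that satisfaction stay low for a sustained period.

```python
from dataclasses import dataclass


@dataclass
class BePod:
    name: str
    priority: int       # lower value is evicted first
    request_milli: int  # reclaimed-cpu request in milli-cores


def pods_to_evict(pods, be_cpu_available_milli, min_satisfaction_percent=60):
    """Pick BE pods to evict, lowest priority first, until the CPU
    satisfaction (available / requested) of the rest meets the threshold."""
    remaining = sum(p.request_milli for p in pods)
    evicted = []
    for pod in sorted(pods, key=lambda p: p.priority):
        # Integer comparison of available/remaining >= percent/100.
        if be_cpu_available_milli * 100 >= min_satisfaction_percent * remaining:
            break
        evicted.append(pod.name)
        remaining -= pod.request_milli
    return evicted


be_pods = [
    BePod("report-job", priority=3, request_milli=2000),
    BePod("etl-job", priority=1, request_milli=4000),
    BePod("index-job", priority=2, request_milli=4000),
]
print(pods_to_evict(be_pods, be_cpu_available_milli=3000))
print(pods_to_evict(be_pods, be_cpu_available_milli=8000))
```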

In hybrid scenarios, the memory requirements of LS containers are guaranteed first. Although multiple methods can be used to urge the kernel to reclaim the page cache in advance, overcommitted memory still carries a risk of out-of-memory (OOM) events when RSS memory fills up. The ACK differentiated SLO suite therefore provides an eviction capability based on a memory watermark. When the memory usage of the whole machine exceeds the watermark, containers are killed and evicted in sequence according to priority, which avoids triggering a machine-wide OOM that would affect the normal operation of high-priority containers.
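A minimal sketch of watermark-based eviction follows. It assumes that only BE containers are candidates, that lower-priority containers are killed first, and that eviction stops as soon as node usage falls back to the watermark; the 70% threshold, the tuple layout, and the names are illustrative.

```python
def select_memory_evictions(be_containers, node_used, node_total,
                            threshold_percent=70):
    """Choose BE containers to kill until node memory usage is back
    at or below the watermark.

    be_containers: list of (name, priority, usage) tuples, where a lower
    priority value is killed first and usage shares the unit of node_used.
    """
    evicted = []
    for name, _priority, usage in sorted(be_containers, key=lambda c: c[1]):
        if node_used * 100 <= threshold_percent * node_total:
            break  # usage is within the watermark again
        evicted.append(name)
        node_used -= usage
    return evicted


# Values in MiB: the node is at 82% usage against a 70% watermark.
candidates = [("batch", 3, 5000), ("etl", 1, 8000), ("train", 2, 6000)]
print(select_memory_evictions(candidates, node_used=82000, node_total=100000))
```

Stopping at the watermark keeps as many BE containers running as possible while leaving room for LS containers.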

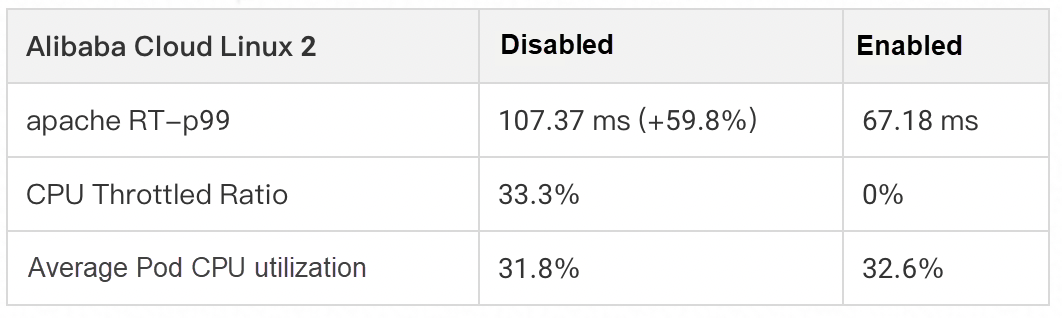

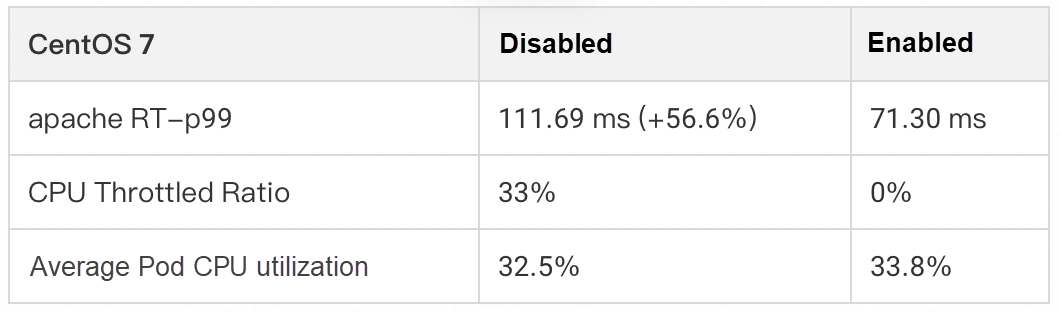

We use Apache HTTP Server as a latency-sensitive online application and simulate request traffic to evaluate how much the CPU Burst capability improves response time (RT). The following tables show metrics before and after CPU Burst is enabled for Alibaba Cloud Linux 2 and CentOS 7.

After comparing the preceding data, we know:

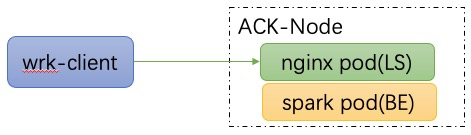

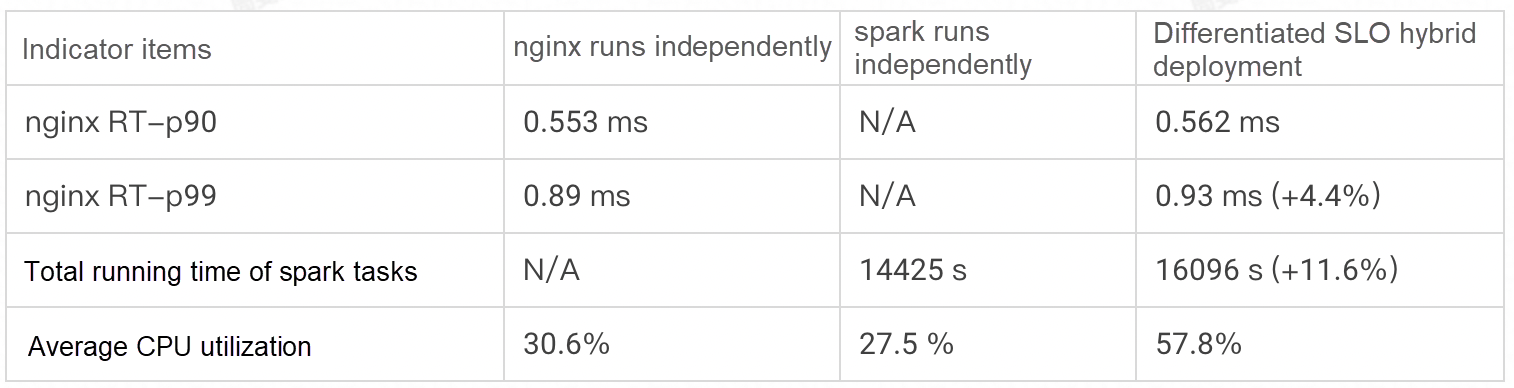

Taking the Web service + big data scenario as an example, we selected Nginx as the Web service (LS) and deployed it together with a Spark benchmark application (BE) on the same node of an ACK cluster. This section describes the effect of the ACK differentiated SLO suite in this hybrid scenario.

Comparing the baseline in the non-hybrid scenario and the data in the differentiated SLO hybrid scenario, we can see that the ACK differentiated SLO suite can improve cluster utilization (30%) while ensuring the service quality of online applications (performance interference < 5%).

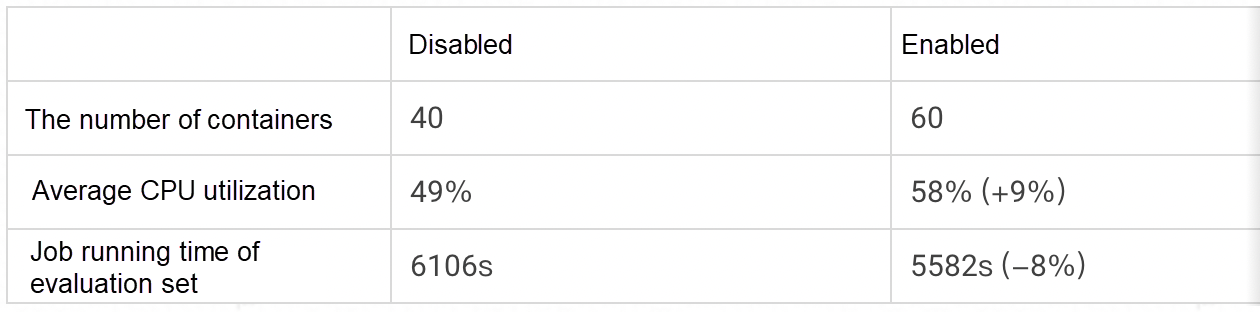

Compared with latency-sensitive online services, big data applications are less sensitive to resource quality. The differentiated SLO hybrid deployment can increase the container deployment density of big data clusters, improve cluster resource utilization, and shorten the average job running time. Let's take the Spark TPC-DS benchmark as an example to show the effect of the ACK differentiated SLO suite on cluster resource utilization.

The following data shows the comparison of various data metrics before and after the "SLO-based resource scheduling optimization" feature is enabled:

The Alibaba Cloud Container Service (ACK) features that support differentiated SLO will be released on the official website. The features can be used independently to ensure the service quality of applications, and they can also be used together in hybrid scenarios. Practice shows that SLO-based resource scheduling optimization can effectively improve application performance and, especially in hybrid scenarios, raise the utilization of cluster resources considerably. For latency-sensitive online services, the performance interference introduced by hybrid deployment can be kept within 5%.

Click here to view the detailed introduction of the differentiated SLO features of Alibaba Cloud Container Service.
