By Qiaopu and Shenxin

In a cloud-native environment, cluster providers often deploy different types of workloads in the same cluster so that workloads whose peaks fall at different times can share resources. This time-sharing reuse of resources helps avoid waste. However, mixed deployment of different workloads often results in resource contention and mutual interference. One of the most common scenarios is the hybrid deployment of online and offline workloads. When offline workloads occupy a large portion of computing resources, the response time of online workloads suffers. Similarly, if online workloads occupy a significant amount of computing resources for a long time, the task completion time of offline workloads suffers. This phenomenon is known as the Noisy Neighbor problem.

Depending on the extent of hybrid deployment and the type of resources involved, there are various approaches to solving this problem. Quota management can limit the resource usage of workloads from the perspective of the entire cluster. Koordinator provides a multi-level elastic quota management feature [1]. At the individual server level, CPU, memory, disk I/O, and network resources may be shared by different workloads. Koordinator already offers some resource isolation and guarantee capabilities for CPU and memory, and work is underway to provide similar capabilities for disk I/O and network resources.

This article primarily focuses on how Koordinator helps facilitate the sharing of CPU resources between different types of workloads (online to online, and online to offline) when they are deployed on the same node.

Essentially, the Noisy Neighbor issue with CPU resources arises when different workloads share CPU resources without coordination.

To address these issues, Kubernetes provides a topology manager and a CPU manager on the node side. However, these components only take effect after the Pod has been scheduled to a node. As a result, a Pod may be scheduled to a node that has enough CPU resources but whose CPU topology does not meet the workload's requirements.

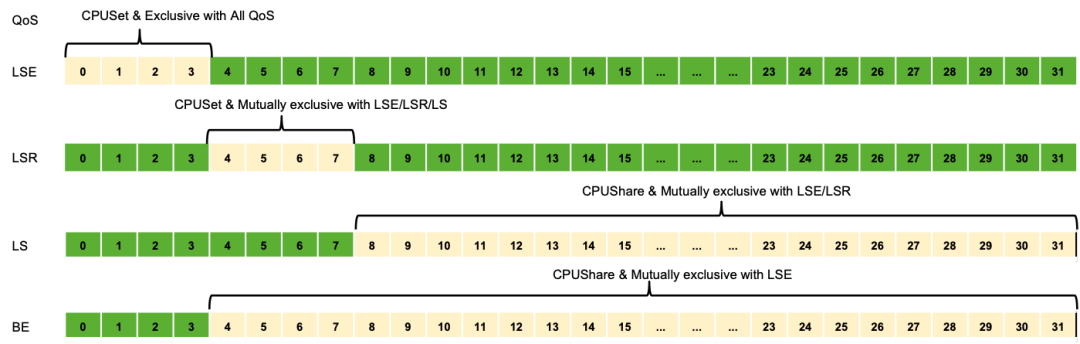

To address the aforementioned issues and shortcomings, Koordinator has designed application-oriented QoS semantics and CPU orchestration protocols, as shown in the following figure.

Latency Sensitive (LS) is applied to typical microservice workloads. Koordinator isolates them from other latency-sensitive workloads to ensure optimal performance. Latency Sensitive Reserved (LSR) is similar to Kubernetes Guaranteed, but it adds the semantics of reserving binding cores for LS-based applications. Latency Sensitive Exclusive (LSE) is commonly used for CPU-sensitive applications like middleware. Koordinator not only fulfills the semantics similar to LSR but also ensures that the allocated CPU is not shared with any other loads.

Additionally, to improve resource utilization, Best Effort (BE) loads can share CPU resources with LSR and LS. To prevent interference from BE on delay-sensitive applications, Koordinator provides strategies such as interference detection and BE suppression. This article does not focus on these strategies, but you can explore them in subsequent articles.

For LSE applications on hyper-threaded machines, Koordinator can only guarantee that the application has exclusive use of its assigned logical cores. When other workloads run on the same physical core, application performance may still be affected. Koordinator therefore lets you configure CPU orchestration policies through pod annotations to improve performance.

CPU orchestration policies are classified into CPU bind policies and CPU exclusive policies. The CPU bind policy determines how the allocated logical cores are distributed among physical cores, and can be FullPCPUs or SpreadByPCPUs. FullPCPUs allocates complete physical cores to an application, which effectively mitigates the Noisy Neighbor problem. SpreadByPCPUs is primarily used for latency-sensitive applications with distinct peaks and valleys, and lets an application fully utilize the CPU at specific times. The CPU exclusive policy determines the exclusivity level of the assigned logical cores: the scheduler tries to avoid physical cores or NUMA nodes that are already used by pods with the same exclusive policy.
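To make the difference between the two bind policies concrete, here is a minimal Python sketch of a toy allocator. It is not Koordinator's scheduler code: the `Topology` type, the function names, and the assumption of two hyper-threads per physical core are all hypothetical and chosen only for illustration.

```python
from typing import Dict, List

# Toy topology (assumption): physical core id -> free logical CPU ids on that core.
Topology = Dict[int, List[int]]


def allocate_full_pcpus(free: Topology, want: int, threads_per_core: int = 2) -> List[int]:
    """Allocate whole physical cores only (illustrative FullPCPUs-style policy)."""
    if want % threads_per_core != 0:
        raise ValueError("request must be a multiple of threads per core")
    picked: List[int] = []
    for core in sorted(free):
        if len(picked) == want:
            break
        # Only a core whose sibling threads are all free can be taken whole.
        if len(free[core]) == threads_per_core:
            picked.extend(free[core])
    if len(picked) < want:
        raise ValueError("not enough fully free physical cores")
    return picked


def allocate_spread_by_pcpus(free: Topology, want: int) -> List[int]:
    """Take one logical CPU per physical core per round (illustrative SpreadByPCPUs-style policy)."""
    remaining = {core: list(cpus) for core, cpus in free.items()}
    picked: List[int] = []
    while len(picked) < want:
        progressed = False
        for core in sorted(remaining):
            if remaining[core] and len(picked) < want:
                picked.append(remaining[core].pop(0))
                progressed = True
        if not progressed:
            raise ValueError("not enough free logical CPUs")
    return picked


if __name__ == "__main__":
    # Four physical cores, each with two free hyper-threads (ids are made up).
    free = {0: [0, 8], 1: [1, 9], 2: [2, 10], 3: [3, 11]}
    print(allocate_full_pcpus(free, 4))       # CPUs from two whole cores
    print(allocate_spread_by_pcpus(free, 4))  # one CPU from each of four cores
```

In this toy model, a 4-CPU request under the FullPCPUs-style policy occupies two physical cores completely, while the SpreadByPCPUs-style policy touches four physical cores and leaves one sibling thread free on each.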

Koordinator allows you to configure a NUMA allocation policy to determine how to select a suitable NUMA node during scheduling. MostAllocated allocates from the NUMA node with the least available resources, which reduces fragmentation and leaves more allocation space for subsequent workloads. However, this approach may hurt the performance of parallel code that relies on barriers. DistributeEvenly distributes CPUs evenly across NUMA nodes, which can improve the performance of such parallel code. LeastAllocated allocates from the NUMA node with the most available resources.
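The three NUMA allocation policies can be sketched in a few lines of Python. This is only one reading of the descriptions above, not the scheduler's actual implementation. The function names and the simplified input (a map from NUMA node id to its number of free CPUs) are assumptions made for this example.

```python
from typing import Dict


def pick_numa_node(free_cpus: Dict[int, int], want: int, strategy: str) -> int:
    """Pick a single NUMA node for `want` CPUs under a MostAllocated or LeastAllocated policy."""
    candidates = {node: free for node, free in free_cpus.items() if free >= want}
    if not candidates:
        raise ValueError("no single NUMA node can satisfy the request")
    if strategy == "MostAllocated":
        # Fewest free CPUs first; ties go to the lowest node id.
        return min(candidates, key=lambda node: (candidates[node], node))
    if strategy == "LeastAllocated":
        # Most free CPUs first; ties go to the lowest node id.
        return max(candidates, key=lambda node: (candidates[node], -node))
    raise ValueError(f"unsupported strategy: {strategy}")


def distribute_evenly(free_cpus: Dict[int, int], want: int) -> Dict[int, int]:
    """Split `want` CPUs across NUMA nodes round-robin (DistributeEvenly-style policy)."""
    if sum(free_cpus.values()) < want:
        raise ValueError("not enough free CPUs on the machine")
    share = {node: 0 for node in free_cpus}
    left = want
    while left > 0:
        for node in sorted(free_cpus):
            if left > 0 and share[node] < free_cpus[node]:
                share[node] += 1
                left -= 1
    return share


if __name__ == "__main__":
    free = {0: 6, 1: 12}  # hypothetical machine: NUMA node 0 is busier than node 1
    print(pick_numa_node(free, 4, "MostAllocated"))
    print(pick_numa_node(free, 4, "LeastAllocated"))
    print(distribute_evenly(free, 4))
```

With these made-up numbers, the MostAllocated-style choice packs the request onto the busier node 0, the LeastAllocated-style choice uses the emptier node 1, and the DistributeEvenly-style choice takes two CPUs from each node.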

Furthermore, Koordinator's CPU allocation logic runs in the central scheduler. This gives it a global view of the cluster and avoids the dilemma of the node-local solution in Kubernetes, where a node may have enough CPU resources but a CPU topology that does not match the workload.
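The "enough CPUs but wrong topology" dilemma can be illustrated with a small Python sketch. It assumes a hypothetical scheduler filter that knows each node's free logical CPUs per physical core. The node names, data layout, and function names are invented for this example and do not reflect Koordinator's internal data structures.

```python
from typing import Dict, List

# Assumption: physical core id -> free logical CPU ids on that core.
Topology = Dict[int, List[int]]


def fits_by_count(free: Topology, want: int) -> bool:
    """What a count-only scheduler sees: is the number of free CPUs large enough?"""
    return sum(len(cpus) for cpus in free.values()) >= want


def fits_full_cores(free: Topology, want: int, threads_per_core: int = 2) -> bool:
    """Topology-aware check: can the request be served from completely free physical cores?"""
    whole = sum(1 for cpus in free.values() if len(cpus) == threads_per_core)
    return whole * threads_per_core >= want


def feasible_nodes(nodes: Dict[str, Topology], want: int) -> List[str]:
    """Filter nodes in the scheduler, before the pod is bound to any of them."""
    return sorted(name for name, free in nodes.items() if fits_full_cores(free, want))


if __name__ == "__main__":
    nodes = {
        # Four free CPUs, but each sits on a different physical core.
        "node-a": {0: [0], 1: [1], 2: [2], 3: [3]},
        # Four free CPUs that form two complete physical cores.
        "node-b": {0: [0, 4], 1: [1, 5], 2: [], 3: []},
    }
    print([name for name, free in nodes.items() if fits_by_count(free, 4)])
    print(feasible_nodes(nodes, 4))
```

Both hypothetical nodes pass the count-only check, but only `node-b` can serve a request for two whole physical cores. Doing this check at scheduling time is what lets a central scheduler reject `node-a` up front instead of discovering the mismatch on the node.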

Koordinator's refined CPU orchestration capability can significantly enhance the performance of CPU-sensitive workloads in multi-application hybrid deployment scenarios. To provide a clearer understanding and intuitive experience of Koordinator's refined CPU orchestration capability, this article deploys online applications in different ways and observes service latency during stress testing to evaluate the impact of CPU orchestration.

In this scenario, multiple online applications are deployed on the same machine and subjected to a 10-minute stress test to simulate CPU core switching scenarios that may occur in production practices. For hybrid deployment of online and offline applications, Koordinator offers policies such as interference detection and BE suppression.

| No. | Deployment Mode | Description | Scenario |
| --- | --- | --- | --- |
| A | Ten online applications are deployed on the node. Each application is allocated 4 CPUs and uses Kubernetes Guaranteed QoS. | Koordinator does not provide refined CPU orchestration for the applications. | Because of CPU core switching, applications share logical cores, which degrades application performance. This mode is not recommended. |
| B | Ten online applications are deployed on the node. Each application is allocated 4 CPUs and uses LSE QoS. The CPU bind policy is FullPCPUs. | Koordinator binds CPU cores for LSE pods, and online applications do not share physical cores. | Particularly sensitive online scenarios that cannot accept CPU sharing at the physical core level. |
| C | Ten online applications are deployed on the node. Each application is allocated 4 CPUs and uses LSR QoS. The CPU bind policy is SpreadByPCPUs, and the CPU exclusive policy is PCPULevel. | Koordinator binds CPU cores for LSR pods and allows the logical cores of online applications to be spread over more physical cores. | Often used to share physical cores with offline pods to implement time-sharing reuse at the physical core level. This article does not focus on hybrid deployment of online and offline applications, so only the overuse among online applications is tested. |

This experiment uses the following metrics to evaluate the performance of NGINX applications in different deployment modes:

• Response Time (RT): a latency metric of online applications. The shorter the RT, the better the online service performs. RT is collected from the report that wrk prints after the stress test ends, and reflects the time NGINX takes to respond to wrk requests. For example, RT-p50 is the maximum response time among the fastest 50% of wrk requests (the median), and RT-p90 is the maximum response time among the fastest 90% of wrk requests.

• Requests per Second (RPS): the number of requests that an online application serves per second. The higher the RPS, the better the online service performs.
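Both metrics can be pulled out of a wrk report with a short script, which helps when the test is repeated several times. The following Python sketch is one hedged way to do it. The function name `parse_wrk_report`, the result keys, and the set of time units handled (`us`, `ms`, `s`, `m`) are assumptions of this example. The sample text is taken from the wrk output shown later in this article.

```python
import re
from typing import Dict

# Conversion factors to milliseconds for the time units this sketch handles (assumption).
_TO_MS = {"us": 0.001, "ms": 1.0, "s": 1000.0, "m": 60000.0}


def parse_wrk_report(report: str) -> Dict[str, float]:
    """Extract latency percentiles (in ms) and Requests/sec from a `wrk --latency` report."""
    metrics: Dict[str, float] = {}
    # Percentile lines look like "     99%   12.40ms".
    pattern = r"^\s*(\d+)%\s+([\d.]+)(us|ms|s|m)\s*$"
    for pct, value, unit in re.findall(pattern, report, flags=re.MULTILINE):
        metrics[f"rt_p{pct}_ms"] = float(value) * _TO_MS[unit]
    # The throughput line looks like "Requests/sec: 114648.19".
    match = re.search(r"^Requests/sec:\s+([\d.]+)", report, flags=re.MULTILINE)
    if match:
        metrics["rps"] = float(match.group(1))
    return metrics


SAMPLE_REPORT = """\
  Latency Distribution
     50%    2.60ms
     75%    3.94ms
     90%    5.55ms
     99%   12.40ms
  68800242 requests in 10.00m, 54.46GB read
Requests/sec: 114648.19
Transfer/sec:     92.93MB
"""

if __name__ == "__main__":
    for name, value in sorted(parse_wrk_report(SAMPLE_REPORT).items()):
        print(f"{name}: {value}")
```

Saving each run's report to a file and feeding it through such a parser makes it easier to compare RT-p99 and RPS across deployment modes A, B, and C.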

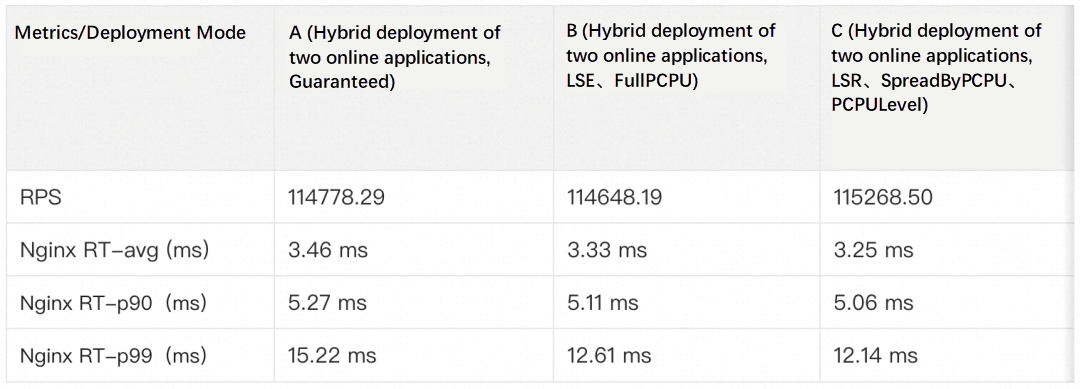

The experimental results are as follows:

• Comparing B with A shows that after cores are bound with LSE QoS, the P99 service response time drops significantly, which greatly alleviates long-tail latency.

• Comparing C with B shows that after cores are bound with LSR QoS and the logical cores are allowed to occupy more physical core resources, online services can handle more requests with a shorter response time.

To sum up, when online services are deployed on the same machine, refined CPU orchestration with Koordinator effectively suppresses the Noisy Neighbor problem and reduces the performance degradation caused by CPU core switching.

First, prepare a Kubernetes cluster and install Koordinator [2]. This article uses two nodes of a Kubernetes cluster. One node serves as the test machine and runs the NGINX online server. The other serves as the stress testing machine and runs the wrk client, which sends stress testing requests to the NGINX web service.

1. Use ColocationProfile [3] to inject refined CPU orchestration protocols into applications

Refined CPU Orchestration Protocol of group B:

```yaml
apiVersion: config.koordinator.sh/v1alpha1
kind: ClusterColocationProfile
metadata:
  name: colocation-profile-example
spec:
  selector:
    matchLabels:
      app: nginx
  # Use LSE QoS
  qosClass: LSE
  annotations:
    # Use FullPCPUs
    scheduling.koordinator.sh/resource-spec: '{"preferredCPUBindPolicy":"FullPCPUs"}'
  priorityClassName: koord-prod
```

Refined CPU orchestration protocol of group C:

```yaml
apiVersion: config.koordinator.sh/v1alpha1
kind: ClusterColocationProfile
metadata:
  name: colocation-profile-example
spec:
  selector:
    matchLabels:
      app: nginx
  # Use LSR QoS
  qosClass: LSR
  annotations:
    # Use SpreadByPCPUs and dominate the physical core.
    scheduling.koordinator.sh/resource-spec: '{"preferredCPUBindPolicy":"SpreadByPCPUs", "preferredCPUExclusivePolicy":"PCPULevel"}'
  priorityClassName: koord-prod
```

2. Online services. This article uses the NGINX online server. The Pod YAML is as follows:

```yaml
---
# Example of the NGINX configuration.
apiVersion: v1
data:
  config: |-
    user nginx;
    worker_processes 4; # The number of NGINX workers, which affects the concurrency of the NGINX server.

    events {
        worker_connections 1024; # Default value: 1024.
    }

    http {
        server {
            listen 8000;

            gzip off;
            gzip_min_length 32;
            gzip_http_version 1.0;
            gzip_comp_level 3;
            gzip_types *;
        }
    }

    #daemon off;
kind: ConfigMap
metadata:
  name: nginx-conf-0
---
# NGINX instance, as an online application.
apiVersion: v1
kind: Pod
metadata:
  labels:
    app: nginx
  name: nginx-0
  namespace: default
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - matchExpressions:
              - key: kubernetes.io/hostname
                operator: In
                values:
                  - "${node_name}"
  schedulerName: koord-scheduler
  priorityClassName: koord-prod
  containers:
    - image: 'koordinatorsh/nginx:v1.18-koord-exmaple'
      imagePullPolicy: IfNotPresent
      name: nginx
      ports:
        - containerPort: 8000
          hostPort: 8000 # The port that is used to perform stress tests.
          protocol: TCP
      resources:
        limits:
          cpu: '4'
          memory: 8Gi
        requests:
          cpu: '4'
          memory: 8Gi
      volumeMounts:
        - mountPath: /apps/nginx/conf
          name: config
  hostNetwork: true
  restartPolicy: Never
  volumes:
    - configMap:
        items:
          - key: config
            path: nginx.conf
        name: nginx-conf-0
      name: config
```

3. Run the following command to deploy the NGINX application.

```shell
kubectl apply -f nginx-0.yaml
```

4. Run the following command to view the pod status of the NGINX application.

```shell
kubectl get pod -l app=nginx -o wide
```

The following output indicates that the NGINX application is running on the test machine.

```
NAME      READY   STATUS    RESTARTS   AGE     IP           NODE                    NOMINATED NODE   READINESS GATES
nginx-0   1/1     Running   0          2m46s   10.0.0.246   cn-beijing.10.0.0.246   <none>           <none>
```

5. On the stress testing machine, run the following command to deploy the stress testing tool wrk.

```shell
wget -O wrk-4.2.0.tar.gz https://github.com/wg/wrk/archive/refs/tags/4.2.0.tar.gz && tar -xvf wrk-4.2.0.tar.gz
cd wrk-4.2.0 && make && chmod +x ./wrk
```

1. Use the stress testing tool wrk to initiate a stress testing request to the NGINX application.

```shell
# Replace node_ip with the IP address of the tested machine. Port 8000 of the NGINX service is exposed on the tested machine.
taskset -c 32-45 ./wrk -t120 -c400 -d600s --latency http://${node_ip}:8000/
```

2. After wrk finishes, collect the stress test result. Repeat the test several times to obtain relatively stable results. The output format of wrk is as follows:

```
Running 10m test @ http://192.168.0.186:8000/
  120 threads and 400 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency     3.29ms    2.49ms 352.52ms   91.07%
    Req/Sec     0.96k   321.04     3.28k    62.00%
  Latency Distribution
     50%    2.60ms
     75%    3.94ms
     90%    5.55ms
     99%   12.40ms
  68800242 requests in 10.00m, 54.46GB read
Requests/sec: 114648.19
Transfer/sec:     92.93MB
```

In a Kubernetes cluster, different workloads may compete for resources such as CPU and memory, which can impact the performance and stability of the service. To address the issue of resource contention, you can utilize Koordinator to configure a more refined CPU orchestration policy for applications. This allows different applications to effectively share CPU resources and mitigate the Noisy Neighbor problem. Experimental results demonstrate that Koordinator's advanced CPU orchestration capability successfully suppresses CPU resource contention and enhances application performance.

You are welcome to join us through GitHub/Slack.

[1] Multi-level Elastic Quota Management

https://koordinator.sh/docs/user-manuals/multi-hierarchy-elastic-quota-management/

[2] Install Koordinator

https://koordinator.sh/docs/installation/

[3] ColocationProfile

https://koordinator.sh/docs/user-manuals/colocation-profile/
