By Youyi and Lvfeng

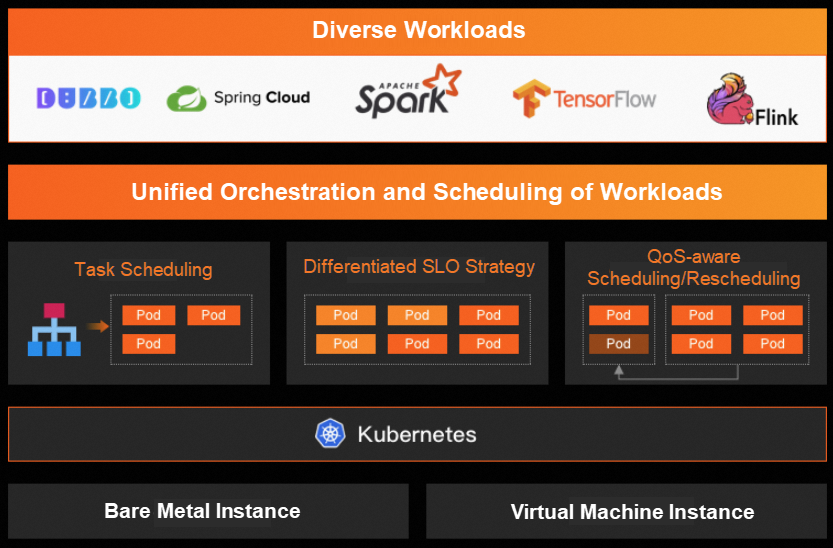

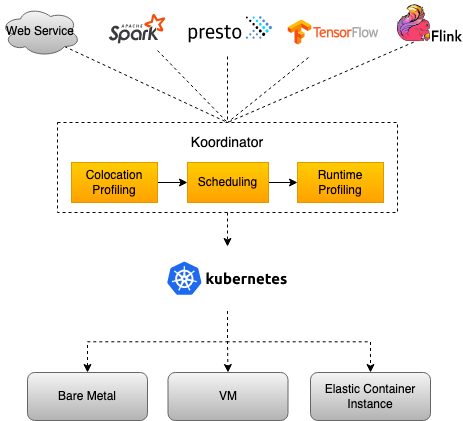

Koordinator is an open source project incubated from Alibaba's years of experience in container scheduling. It can improve container performance and reduce cluster resource costs. It offers capabilities such as co-location, resource profiling, and scheduling optimization. With these, it improves the running efficiency and reliability of latency-sensitive workloads and batch jobs, and it makes better use of cluster resources.

Since its first release in April 2022, Koordinator has published 10 versions. Koordinator v1.2 adds three capabilities. It supports node resource reservation. It is compatible with the rescheduling policies of the Kubernetes community. On the node side, it enables isolation of L3 cache and memory bandwidth in AMD environments.

In this version, 12 new developers joined the Koordinator community: @ReGrh, @chengweiv5, @kingeasternsun, @shelwinnn, @yuexian1234, @Syulin7, @tzzcfrank, @Dengerwei, @complone, @AlbeeSo, @xigang, and @leason00. Thank you for your contributions and participation.

Many kinds of applications coexist in co-location scenarios. Besides cloud-native containers, many applications have not yet been containerized and run as processes on the host alongside Kubernetes containers. To reduce resource contention between Kubernetes applications and these other applications on a node, Koordinator reserves a portion of node resources. The reserved portion is excluded both from scheduling by the scheduler and from resource allocation on the node side, so the two kinds of applications use separate resources. In v1.2, Koordinator supports reserving CPU and memory and lets you specify the reserved CPU IDs directly. The details are as follows.

You can configure on a node either the amount of resources to reserve or the specific CPU IDs to reserve, for example:

```yaml
apiVersion: v1
kind: Node
metadata:
  name: fake-node
  annotations: # five cores (for example, 0, 1, 2, 3, and 4) will be selected and reserved.
    node.koordinator.sh/reservation: '{"resources":{"cpu":"5"}}'
---
apiVersion: v1
kind: Node
metadata:
  name: fake-node
  annotations: # cores 0, 1, 2, and 3 will be reserved.
    node.koordinator.sh/reservation: '{"reservedCPUs":"0-3"}'
```

The node-side component Koordlet reports node resource topology information. When it does, it writes the specific reserved CPU IDs to the annotations of the NodeResourceTopology object.
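The following Python sketch parses a `node.koordinator.sh/reservation` value and turns it into a list of reserved CPU IDs, covering both annotation forms. It is not Koordinator's implementation, and the function names are hypothetical. It makes two assumptions. First, `reservedCPUs` takes precedence when both fields are present. Second, a plain `cpu` count selects the lowest-numbered CPUs, as in the `0, 1, 2, 3, 4` example above.

```python
import json


def parse_cpuset(spec: str) -> list[int]:
    """Expand a cpuset-style list such as "0-3,8" into sorted CPU IDs."""
    cpus: set[int] = set()
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue
        if "-" in part:
            lo, hi = (int(x) for x in part.split("-", 1))
            if lo > hi:
                raise ValueError(f"invalid CPU range: {part!r}")
            cpus.update(range(lo, hi + 1))
        else:
            cpus.add(int(part))
    return sorted(cpus)


def reserved_cpus(annotation: str, node_cpus: list[int]) -> list[int]:
    """Return the CPU IDs reserved by a node.koordinator.sh/reservation value.

    Hypothetical helper; simplifying assumptions of this sketch:
    - an explicit "reservedCPUs" list takes precedence over "resources.cpu";
    - "resources.cpu" is a whole-core count and the lowest-numbered CPUs are
      picked; the real implementation may take CPU topology into account.
    """
    spec = json.loads(annotation)
    if "reservedCPUs" in spec:
        return parse_cpuset(spec["reservedCPUs"])
    cpu_qty = spec.get("resources", {}).get("cpu")
    if cpu_qty is None:
        return []
    return sorted(node_cpus)[: int(cpu_qty)]


node_cpus = list(range(16))
print(reserved_cpus('{"resources":{"cpu":"5"}}', node_cpus))  # [0, 1, 2, 3, 4]
print(reserved_cpus('{"reservedCPUs":"0-3"}', node_cpus))     # [0, 1, 2, 3]
```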

When allocating resources, the scheduler verifies resources in several situations, including quota management, node capacity verification, and CPU topology verification. Each of these must account for reserved node resources. For example, the scheduler needs to deduct the node's reserved resources when calculating the node CPU capacity:

```
cpus(alloc) = cpus(total) - cpus(allocated) - cpus(kubeletReserved) - cpus(nodeAnnoReserved)
```

The calculation of overcommitted resources for co-located batch workloads must also deduct these reserved resources. System processes on the node also consume resources, so Koord-Manager uses the larger of the node reservation and the actual system usage. The details are as follows:

```
reserveRatio = (100-thresholdPercent) / 100.0
node.reserved = node.alloc * reserveRatio
system.used = max(node.used - pod.used, node.anno.reserved)
Node(BE).Alloc = Node.Alloc - Node.Reserved - System.Used - Pod(LS).Used
```

For rescheduling, each plug-in policy needs to be aware of the resources reserved on nodes, for example when calculating node capacity and utilization. If a container occupies a node's reserved resources, it must be evicted through rescheduling. This keeps node capacity management correct and avoids resource contention. These rescheduling features will be supported in subsequent versions, and you are welcome to contribute.
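The scheduler-side CPU capacity formula and the Koord-Manager batch formula above can be written out as plain functions. This is a minimal Python sketch. The function names are hypothetical, and the example values (a 32-core node with `thresholdPercent` of 65) are made up. The sketch only restates the arithmetic and does not reproduce Koordinator's code. The final clamp at zero is an addition of this sketch.

```python
def scheduler_cpu_alloc(total: float, allocated: float,
                        kubelet_reserved: float, node_anno_reserved: float) -> float:
    """cpus(alloc) = cpus(total) - cpus(allocated) - cpus(kubeletReserved) - cpus(nodeAnnoReserved)"""
    return total - allocated - kubelet_reserved - node_anno_reserved


def batch_allocatable(node_alloc: float, threshold_percent: float, node_used: float,
                      pod_used: float, anno_reserved: float, ls_pod_used: float) -> float:
    """Node(BE).Alloc = Node.Alloc - Node.Reserved - System.Used - Pod(LS).Used"""
    reserve_ratio = (100 - threshold_percent) / 100.0
    node_reserved = node_alloc * reserve_ratio
    # Usage by non-pod processes, but never less than the annotation reservation.
    system_used = max(node_used - pod_used, anno_reserved)
    be_alloc = node_alloc - node_reserved - system_used - ls_pod_used
    return max(be_alloc, 0.0)  # clamp added by this sketch; the formula itself does not clamp


print(scheduler_cpu_alloc(32, 20, 1, 5))                    # 6
print(round(batch_allocatable(32, 65, 20, 17, 5, 10), 2))   # 5.8
```

In the batch example, non-pod processes use 3 cores, but the node annotation reserves 5, so `System.Used` is 5. The batch allocatable is then 32 - 11.2 - 5 - 10 = 5.8 cores.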

For Latency-Sensitive (LS) pods, Koordlet dynamically calculates the shared CPU pool based on CPU allocation. It excludes the CPU cores reserved by the node, which isolates LS pods from non-containerized processes. The CPU Suppress policy is a node-side QoS policy, and it also takes the reserved resources into account when calculating node utilization:

```
suppress(BE) := node.Total * SLOPercent - pod(LS).Used - max(system.Used, node.anno.reserved)
```

For more information about the node resource reservation feature, see https://github.com/koordinator-sh/koordinator/blob/main/docs/proposals/scheduling/20221227-node-resource-reservation.md
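The CPU Suppress formula can be sketched the same way. This illustration assumes `SLOPercent` is expressed as a percentage such as 65, so the sketch divides by 100. It also clamps the result at zero. Both choices are assumptions of this illustration, and the function name is hypothetical.

```python
def be_suppress_cpus(node_total: float, slo_percent: float, ls_pod_used: float,
                     system_used: float, anno_reserved: float) -> float:
    """suppress(BE) := node.Total * SLOPercent - pod(LS).Used - max(system.Used, node.anno.reserved)"""
    # Assumption: SLOPercent is a percentage (e.g. 65), so it is divided by 100 here.
    budget = node_total * slo_percent / 100.0 - ls_pod_used - max(system_used, anno_reserved)
    return max(budget, 0.0)  # clamp added by this sketch


print(round(be_suppress_cpus(32, 65, 12, 2, 4), 2))  # 4.8
```

Here the annotation reservation of 4 cores exceeds the measured system usage of 2 cores, so the reservation is deducted instead.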

Compatible with the Rescheduling Policy of the Kubernetes Community

As the Koordinator Descheduler framework has matured, Koordinator v1.2 introduces an interface adaptation mechanism that integrates seamlessly with existing Kubernetes Descheduler plug-ins. You only need to deploy Koordinator Descheduler to use all upstream features.

Koordinator Descheduler imports the upstream code without any intrusive modifications. This keeps it fully compatible with all upstream plug-ins, parameter configurations, and running policies. Koordinator also lets you specify an enhanced evictor for upstream plug-ins. The plug-ins can then reuse the safety policies that Koordinator provides, such as resource reservation, workload availability guarantees, and global flow control.

List of compatible plug-ins:

• HighNodeUtilization

• LowNodeUtilization

• PodLifeTime

• RemoveFailedPods

• RemoveDuplicates

• RemovePodsHavingTooManyRestarts

• RemovePodsViolatingInterPodAntiAffinity

• RemovePodsViolatingNodeAffinity

• RemovePodsViolatingNodeTaints

• RemovePodsViolatingTopologySpreadConstraint

• DefaultEvictor

When using the above plug-ins, you can refer to the following configuration. Let's take RemovePodsHavingTooManyRestarts as an example:

```yaml
apiVersion: descheduler/v1alpha2
kind: DeschedulerConfiguration
clientConnection:
  kubeconfig: "/Users/joseph/asi/koord-2/admin.kubeconfig"
leaderElection:
  leaderElect: false
  resourceName: test-descheduler
  resourceNamespace: kube-system
deschedulingInterval: 10s
dryRun: true
profiles:
- name: koord-descheduler
  plugins:
    evict:
      enabled:
      - name: MigrationController
    deschedule:
      enabled:
      - name: RemovePodsHavingTooManyRestarts
  pluginConfig:
  - name: RemovePodsHavingTooManyRestarts
    args:
      apiVersion: descheduler/v1alpha2
      kind: RemovePodsHavingTooManyRestartsArgs
      podRestartThreshold: 10
```

Earlier versions of Koordinator introduced the Reservation mechanism to make resource delivery more certain. It reserves resources in advance and allocates them to pods with specified characteristics. In the rescheduling scenario, for example, pods that are about to be evicted must have resources available elsewhere, or insufficient resources can cause stability problems. As another example, some PaaS platforms need to confirm that resources meet the requirements of application scheduling and orchestration before they decide whether to scale up or make advance preparations.

Koordinator Reservation is defined as a CRD. In koord-scheduler, each Reservation object is scheduled as a fake pod. This lets it reuse the existing scheduling and scoring plug-ins to find a suitable node, and it eventually occupies the corresponding resources in the scheduler's internal state.

When you create a Reservation, you specify which pods will later use the reserved resources. These can be specific pods, specific workload objects, or pods with certain labels. When koord-scheduler schedules these pods, it finds the Reservation objects they can use and uses the Reservation resources first. The Reservation Status records the pods that use the Reservation, and the pod annotations record which Reservation was used. After the Reservation has been used, its internal state is cleared automatically so that it does not cause other pods to fail scheduling.

Koordinator v1.2 makes several significant optimizations to Reservation:

• Pods are no longer limited to the resources held by a Reservation. A pod can now use the resources reserved by the Reservation together with the remaining free resources on the node.

• The Kubernetes Scheduler Framework has been extended in a non-intrusive way to support fine-grained resource reservation, including CPU cores and GPU devices.

• The default reuse policy for Reservation has been changed to AllocateOnce: once a pod uses a Reservation, the Reservation is discarded. AllocateOnce covers most scenarios and is more user-friendly.
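The allocation behavior described above can be modeled in a few lines. It covers owner matching, combining a Reservation with the node's free resources, recording the user in the Reservation Status and the pod annotations, and the AllocateOnce default.

The following Python sketch is a simplified, single-node model with hypothetical class and field names. It makes these simplifications:

• Owner matching is reduced to a label match.
• Only CPU is tracked.
• The annotation key `example.io/reservation` is a placeholder, not the key Koordinator actually uses.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Reservation:
    name: str
    owner_labels: dict[str, str]  # simplified owner matching: pod labels must include these
    cpu: float                    # reserved CPU not yet consumed
    allocate_once: bool = True    # v1.2 default reuse policy
    available: bool = True
    used_by: list[str] = field(default_factory=list)  # mirrors pods recorded in Reservation Status


@dataclass
class Pod:
    name: str
    labels: dict[str, str]
    cpu: float
    annotations: dict[str, str] = field(default_factory=dict)


def allocate(pod: Pod, reservations: list[Reservation],
             node_free_cpu: float) -> Optional[tuple[str, float, float]]:
    """Place a pod on one node, preferring a matching Reservation.

    Returns (reservation name, CPU taken from the Reservation, CPU taken from
    the node's free pool), or None if no matching Reservation fits.
    """
    for r in reservations:
        if not r.available:
            continue
        if any(pod.labels.get(k) != v for k, v in r.owner_labels.items()):
            continue
        from_reservation = min(r.cpu, pod.cpu)
        from_node = pod.cpu - from_reservation  # the remainder may come from free node resources
        if from_node > node_free_cpu:
            continue
        r.cpu -= from_reservation
        r.used_by.append(pod.name)
        pod.annotations["example.io/reservation"] = r.name  # hypothetical annotation key
        if r.allocate_once:
            r.available = False  # AllocateOnce: discard after the first pod uses it
        return r.name, from_reservation, from_node
    return None


res = Reservation("web-reserve", {"app": "web"}, cpu=4.0)
print(allocate(Pod("web-1", {"app": "web"}, cpu=6.0), [res], node_free_cpu=8.0))  # ('web-reserve', 4.0, 2.0)
print(allocate(Pod("web-2", {"app": "web"}, cpu=1.0), [res], node_free_cpu=8.0))  # None
```

The second pod matches the same owner labels, but AllocateOnce has already discarded the Reservation. That pod would fall back to ordinary scheduling.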

The 1.2.0 release adds support for resource isolation in AMD environments.

The Linux kernel provides a unified resctrl interface for isolating L3 cache and memory bandwidth, and it supports both Intel and AMD environments. The main difference is the memory bandwidth format: Intel's isolation interface uses a percentage, while AMD's uses an absolute value. The details are as follows:

```
# Intel Format
# resctrl schema
L3:0=3ff;1=3ff
MB:0=100;1=100

# AMD Format
# resctrl schema
L3:0=ffff;1=ffff;2=ffff;3=ffff;4=ffff;5=ffff;6=ffff;7=ffff;8=ffff;9=ffff;10=ffff;11=ffff;12=ffff;13=ffff;14=ffff;15=ffff
MB:0=2048;1=2048;2=2048;3=2048;4=2048;5=2048;6=2048;7=2048;8=2048;9=2048;10=2048;11=2048;12=2048;13=2048;14=2048;15=2048
```

The interface format has two parts:

• L3 lists the cache ways available to each socket or CCD. It is a hexadecimal bitmask in which each bit represents one way.

• MB is the memory bandwidth range that each socket or CCD can use. Intel uses a percentage from 0 to 100. AMD uses an absolute value in GB/s, where 2048 means no limit.

Koordinator exposes a unified percentage-based interface. It automatically detects whether the node is an AMD environment and chooses the format to write to the resctrl interface.
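The following Python sketch illustrates how a unified percentage configuration could be translated into the vendor-specific resctrl values shown above. It makes these assumptions:

• The function names are hypothetical.
• Way selection assumes a contiguous full bitmask.
• The AMD conversion assumes a linear scale in which 100% maps to 2048 (no limit).

Koordlet's actual conversion logic may differ.

```python
def cat_mask(cbm_hex: str, start_percent: int, end_percent: int) -> str:
    """Select [start, end) percent of the L3 ways in a full cache bitmask."""
    ways = int(cbm_hex, 16).bit_length()  # assumes a contiguous mask from bit 0, e.g. 3ff or ffff
    start = ways * start_percent // 100
    end = max(ways * end_percent // 100, start + 1)  # keep at least one way (assumption)
    mask = ((1 << end) - 1) ^ ((1 << start) - 1)
    return format(mask, "x")


def mb_value(mba_percent: int, vendor: str) -> int:
    """Translate a unified MBA percentage into the vendor-specific MB value."""
    if vendor == "intel":
        return mba_percent                 # Intel: percentage, 0-100
    if vendor == "amd":
        return 2048 * mba_percent // 100   # assumption: linear scale where 2048 means no limit
    raise ValueError(f"unknown vendor: {vendor}")


def schemata(cbm_hex: str, domains: list[int], cat_start: int, cat_end: int,
             mba_percent: int, vendor: str) -> str:
    """Render L3 and MB lines in the resctrl format shown above."""
    mask = cat_mask(cbm_hex, cat_start, cat_end)
    mb = mb_value(mba_percent, vendor)
    l3 = ";".join(f"{d}={mask}" for d in domains)
    mb_line = ";".join(f"{d}={mb}" for d in domains)
    return f"L3:{l3}\nMB:{mb_line}"


print(schemata("3ff", [0, 1], 0, 30, 100, "intel"))
# L3:0=7;1=7
# MB:0=100;1=100
print(schemata("ffff", [0, 1], 0, 30, 100, "amd"))
# L3:0=f;1=f
# MB:0=2048;1=2048
```

Take the `beClass` settings from the `slo-controller-config` example in this article (`catRangeEndPercent` of 30). Under the assumptions above, BE pods would get 3 of 10 ways in the Intel example and 4 of 16 ways in the AMD example.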

For example, the following ConfigMap enables resctrl QoS for both LS and BE pods. LS pods can use the full L3 cache range, while BE pods are limited to the first 30% of it:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: slo-controller-config
  namespace: koordinator-system
data:
  resource-qos-config: |-
    {
      "clusterStrategy": {
        "lsClass": {
          "resctrlQOS": {
            "enable": true,
            "catRangeStartPercent": 0,
            "catRangeEndPercent": 100,
            "MBAPercent": 100
          }
        },
        "beClass": {
          "resctrlQOS": {
            "enable": true,
            "catRangeStartPercent": 0,
            "catRangeEndPercent": 30,
            "MBAPercent": 100
          }
        }
      }
    }
```

For the other new features in this version, see the v1.2 release[1] page.

In upcoming versions, Koordinator plans to focus on the following features:

• Hardware topology-aware scheduling, which considers the topological relationships among multiple resource dimensions such as CPU, memory, and GPU, and optimizes scheduling within the cluster.

• Better observability and traceability of the descheduler.

• Stronger GPU resource scheduling capabilities.

You are welcome to join the Koordinator community.

[1] v1.2 release: https://github.com/koordinator-sh/koordinator/releases/tag/v1.2.0
