By Xinyang

Yanqing Peng, Feidao, Wangsheng, Leyu, Maijun, and Yuexie contributed a great deal while this paper was being written. I especially appreciate Feidao's guidance and help, and I am grateful to Xili for support and to Deshi for help and support.

"There are gaps between cow bones, while a knife is extremely thin. Therefore, it is easy to cut the bones with the knife." - Abstracted from Pao Ding Jie Niu.

This Chinese saying means that you can do a job well as long as you understand the rules for doing it.

With the spread of the mobile Internet, the Internet of Things (IoT), and 5G, we are stepping into the age of the digital economy. The massive data this produces is here to stay and will play an increasingly important role. Time series data is an important part of that data. Beyond data mining, analysis, and prediction, how to compress it effectively for storage is also an important topic. We are also in the age of artificial intelligence (AI), where deep learning is widely used. How can we apply it in more places? The essence of deep learning is to make decisions. When we use it to solve a specific problem, the key is to find a point of entry and build an appropriate model. On that basis, we can organize the data, optimize the loss, and finally solve the problem. We explored data compression with deep reinforcement learning and obtained some results. We published a paper titled "Two-level Data Compression Using Machine Learning in Time Series Database" in the ICDE 2020 Research Track and gave an oral presentation. This article introduces that work, and we hope you will find it helpful in other scenarios, or at least for compressing other kinds of data.

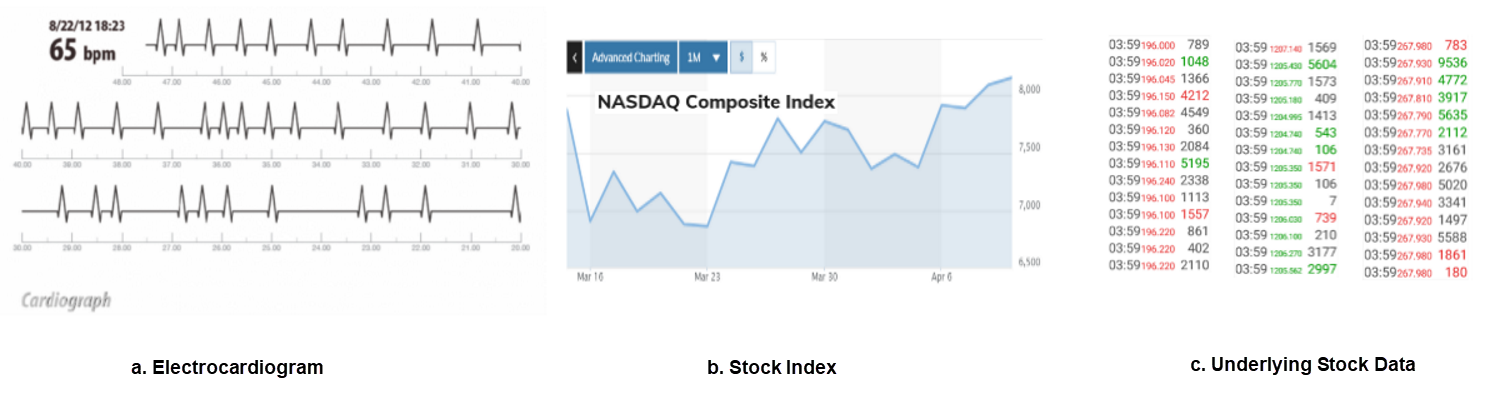

As its name implies, time series data is data recorded as a sequence of values over time. It is a common form of data. The following figure shows three examples of time series data: a) an electrocardiogram, b) a stock index, and c) the trading data of a specific stock.

From the user's point of view, a time series database provides query, analysis, and prediction over massive data. At the underlying layer, however, the database must perform a huge number of operations, including reads and writes, compression and decompression, and aggregation. These operations are performed on the basic unit of time series data. Generally, each unit is described in a unified manner by two 8-byte values, which can also be simplified.
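To make the "two 8-byte values" concrete, here is a minimal sketch of one data point. The layout is an assumption for illustration: an 8-byte signed integer timestamp followed by an 8-byte IEEE 754 double value. The names `POINT`, `pack_point`, and `unpack_point` are hypothetical and do not come from the paper.

```python
import struct

# Assumed layout (not confirmed by the article): little-endian
# 8-byte signed timestamp + 8-byte double value, 16 bytes per point.
POINT = struct.Struct("<qd")

def pack_point(timestamp, value):
    """Serialize one (timestamp, value) pair into 16 raw bytes."""
    return POINT.pack(timestamp, value)

def unpack_point(raw):
    """Recover the (timestamp, value) pair from 16 raw bytes."""
    return POINT.unpack(raw)

raw = pack_point(1_600_000_000, 36.6)
print(len(raw))           # 16
print(unpack_point(raw))  # (1600000000, 36.6)
```

At this size, one point per second from a single source already adds up to 57,600 bytes per hour before compression.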

As you can imagine, electronic devices generate massive amounts of time series data of different types every day, which requires huge storage space. Compressing the data for storage and processing is therefore a natural step. The question is how to compress it more efficiently.

Depending on whether samples have a ground truth, machine learning is classified into supervised learning, unsupervised learning, and reinforcement learning. As the name implies, reinforcement learning learns continuously and does not require a ground truth. In the real world, a ground truth is often unavailable anyway. For example, human cognition is mostly a process of continuous, iterative learning. In this sense, reinforcement learning is a process and method well suited to real-world problems. Hence the common saying: if deep learning gradually becomes a basic tool for solving specific problems, like C, Python, and Java, then reinforcement learning will be a basic tool of deep learning.

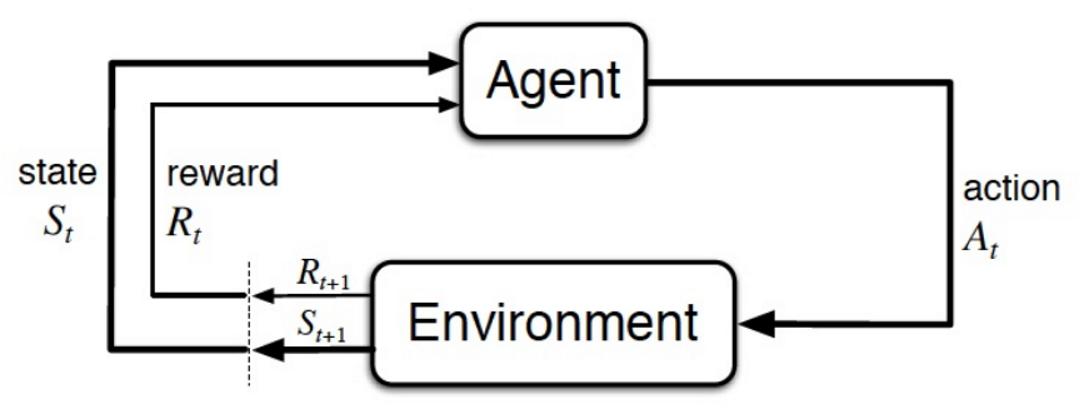

The following figure shows the classic schematic diagram of reinforcement learning, with the basic elements of state, action, and environment. The basic process is: The environment provides a state. The agent makes a decision on an action based on the state. The action works in the environment to generate a new state and reward. The reward is used to guide the agent to make a better decision on an action. The cycle works repeatedly.
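The loop described above can be sketched in a few lines. Everything here is a toy assumption for illustration: `ToyEnvironment` rewards the action that matches the parity of its state, and `ToyAgent` keeps a small value table with optimistic initial values. Neither class comes from the paper.

```python
import random

class ToyEnvironment:
    """Hypothetical environment: the state is a counter, and the
    rewarded action equals the parity of the current state."""
    def __init__(self):
        self.state = 0

    def step(self, action):
        reward = 1.0 if action == self.state % 2 else 0.0
        self.state += 1               # the action produces a new state
        return self.state, reward

class ToyAgent:
    """Hypothetical agent: an epsilon-greedy value table."""
    def __init__(self, epsilon=0.1, seed=0):
        # Optimistic start (1.0) so every action gets tried at least once.
        self.value = {(p, a): 1.0 for p in (0, 1) for a in (0, 1)}
        self.epsilon = epsilon
        self.rng = random.Random(seed)

    def act(self, state):
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(2)          # explore
        parity = state % 2
        return max((0, 1), key=lambda a: self.value[(parity, a)])

    def learn(self, state, action, reward, lr=0.5):
        key = (state % 2, action)
        self.value[key] += lr * (reward - self.value[key])

def run(steps=1000):
    env, agent = ToyEnvironment(), ToyAgent()
    state, total = env.state, 0.0
    for _ in range(steps):
        action = agent.act(state)                 # decide based on the state
        next_state, reward = env.step(action)     # act on the environment
        agent.learn(state, action, reward)        # the reward guides the agent
        state, total = next_state, total + reward
    return agent, total

agent, total = run()
print(total)
```

The reward is the only feedback the agent receives; there is no labeled "correct action" anywhere in the loop.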

In comparison, ordinary supervised learning, which can be considered a special case of reinforcement learning, is much simpler. Supervised learning has a definite target, the ground truth, so the corresponding reward is also definite.

Reinforcement learning can be classified into the following types:

Undoubtedly, in the real world, it is necessary to compress massive time series data. Therefore, the academic and industrial worlds have done a lot of research, including:

There are also many related compression algorithms. In general,

Time series data comes from different fields, such as the IoT, finance, the Internet, business management, and monitoring. It therefore takes different forms, has different characteristics, and carries different requirements for data accuracy. If a single, unified compression algorithm must handle all of it without differentiation, that algorithm should be lossless and should describe data in units of 8 bytes.

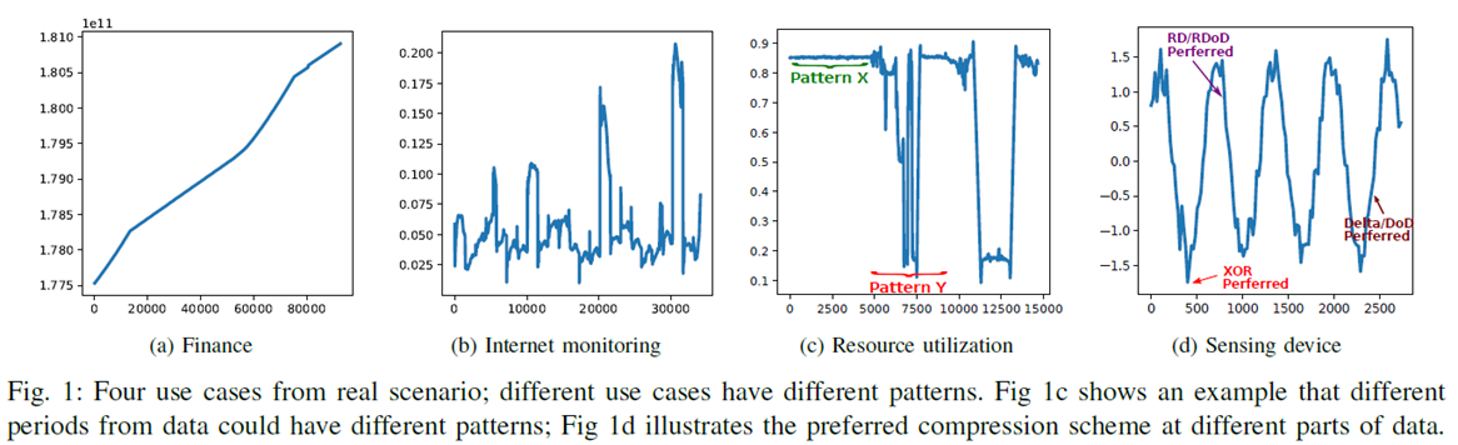

The following figure shows some examples of time series data used in Alibaba Cloud business. Whether viewed at the macro or micro level, the data patterns vary widely, both in curve shape and in data accuracy. Compression algorithms must therefore support as many compression patterns as possible, so that an effective and economical pattern can be selected for each case.

A large-scale commercial compression algorithm for time series data must have three important characteristics:

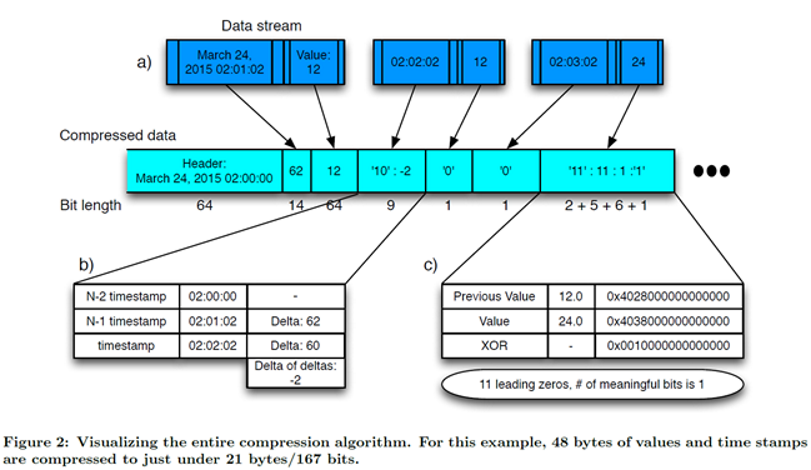

In essence, data compression can be divided into two stages. The first stage is transformation, in which data is transformed from one space into another space with a more regular arrangement. The second stage is differential coding, in which various methods can be used to encode the deltas that remain after the transformation.
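The two stages can be illustrated with a deliberately simple sketch. It is not one of the paper's primitives: the transform here is a plain delta against the previous value, and the coding step is an assumed zig-zag mapping followed by storing each delta in the fewest whole bytes. The function names are hypothetical.

```python
def delta_transform(values):
    """Stage 1 (transform): replace each value with its difference
    from the previous value, so slowly changing data becomes small."""
    out, prev = [], 0
    for v in values:
        out.append(v - prev)
        prev = v
    return out

def zigzag(n):
    """Map signed integers to unsigned ones so small magnitudes stay small."""
    return 2 * n if n >= 0 else -2 * n - 1

def encoded_size(delta):
    """Stage 2 (coding): whole bytes needed for one zig-zagged delta."""
    return max(1, (zigzag(delta).bit_length() + 7) // 8)

values = [1000, 1002, 1001, 1005, 1005]
deltas = delta_transform(values)
total = sum(encoded_size(d) for d in deltas)
print(deltas)                   # [1000, 2, -1, 4, 0]
print(total, len(values) * 8)   # 6 40
```

Even this naive pairing stores the five sample values in 6 bytes of payload instead of 40, ignoring the control information needed to describe each length.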

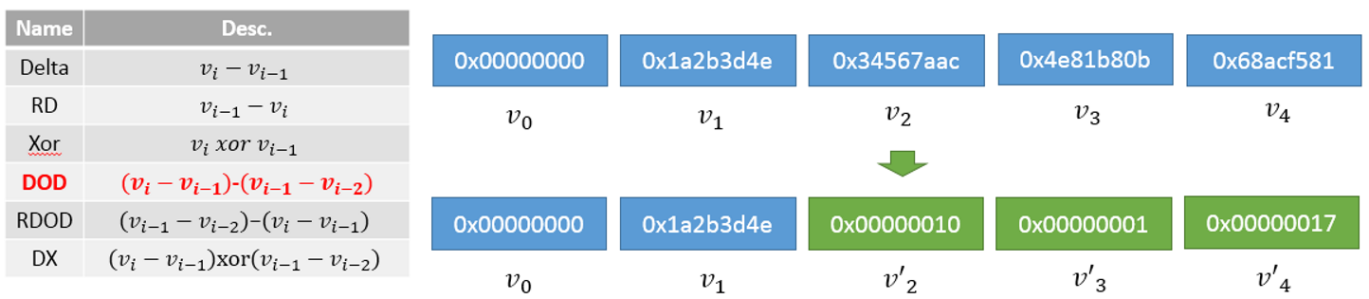

Based on the characteristics of time series data, we can define the following six basic transform primitives. All of them are extensible.

Then, we can define the following three basic differential coding primitives. All of them are also extensible.

Next, should we simply enumerate and combine the preceding two kinds of tools for compression? This is feasible, but the result is poor, because the cost of pattern selection and the related parameters is too high. The 2 bytes of control information (primitive choice + primitive parameter) amount to 25% of the 8 bytes of data they describe.

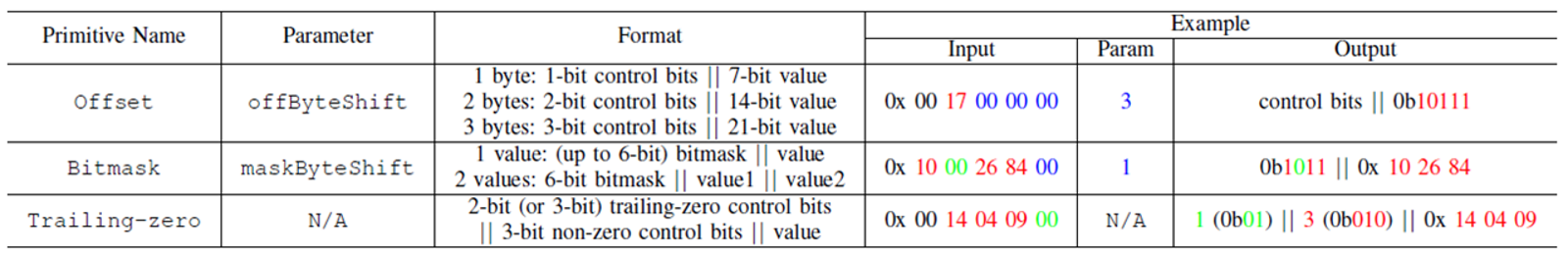

Therefore, a better solution is to express the data characteristics at abstracted layers, as shown in the following figure. Create a control parameter set to better express all situations. Then, select proper parameters at the global (timeline) level to determine a search space, which contains only a small number of compression patterns, such as four patterns. During the compression of each point in the timeline, traverse all the compression patterns and select the best one for compression. In this solution, the proportion of control information is about 3%.
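The two overhead figures can be checked with simple arithmetic. The 25% follows directly from the numbers in the text. For the roughly 3% figure, the sketch below assumes that choosing among four patterns costs 2 bits per 8-byte point and that the timeline-level parameters are amortized to nearly nothing; the article does not spell out this breakdown.

```python
import math

POINT_BITS = 8 * 8  # one 8-byte value

def control_overhead(control_bits, payload_bits=POINT_BITS):
    """Control information as a fraction of the data it describes."""
    return control_bits / payload_bits

# Per-point primitive choice + parameter: 2 bytes of control per point.
naive = control_overhead(2 * 8)

# Assumed: a per-point selector over a 4-pattern search space.
n_patterns = 4
selector_bits = math.ceil(math.log2(n_patterns))
layered = control_overhead(selector_bits)

print(naive)    # 0.25
print(layered)  # 0.03125
```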

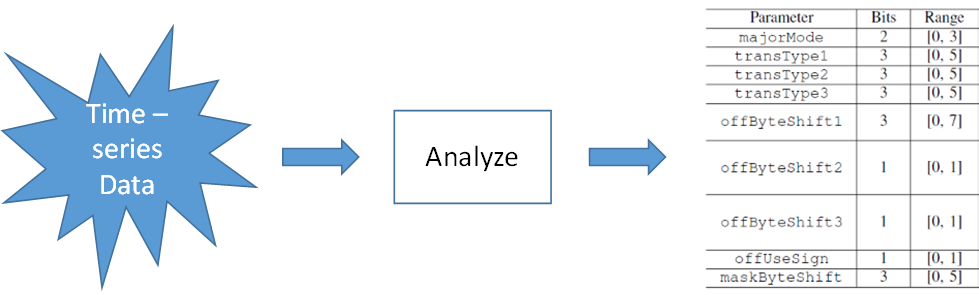

The overall process of adaptive multiple mode middle-out (AMMMO) is divided into two stages. At the first stage, determine the general characteristics of the current timeline and determine the values of nine control parameters. Then at the second stage, traverse a small number of compression patterns and select the best pattern for compression.
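The second stage can be sketched as a per-point search over a small pattern set. This is a simplified illustration, not the AMMMO implementation: the three patterns (`raw`, `xor`, `delta`) and their byte-cost functions are hypothetical, and the search space is passed in as an argument where the first stage would normally supply it.

```python
def zigzag(n):
    """Map signed integers to unsigned ones."""
    return 2 * n if n >= 0 else -2 * n - 1

def nbytes(n):
    """Whole bytes needed for a non-negative integer (at least 1)."""
    return max(1, (n.bit_length() + 7) // 8)

# Hypothetical patterns: each returns the byte cost of encoding
# `cur` given the previous point `prev`.
ALL_PATTERNS = {
    "raw":   lambda prev, cur: 8,
    "xor":   lambda prev, cur: nbytes(prev ^ cur),
    "delta": lambda prev, cur: nbytes(zigzag(cur - prev)),
}

def compress_costs(points, search_space):
    """Stage 2: for every point, try each pattern in the search space
    and keep the cheapest one. Returns (chosen patterns, total bytes)."""
    prev, choices, total = 0, [], 0
    for cur in points:
        best = min(search_space, key=lambda p: ALL_PATTERNS[p](prev, cur))
        choices.append(best)
        total += ALL_PATTERNS[best](prev, cur)
        prev = cur
    return choices, total

# Stage 1 would normally pick this search space for the whole timeline.
print(compress_costs([255, 256], ["raw", "xor", "delta"]))
# (['xor', 'delta'], 2)
```

Going from 255 to 256 flips nine bits, so XOR needs 2 bytes while the delta of 1 fits in one byte. That is why the best pattern can change from point to point even within one timeline.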

It is easy to choose a pattern at the second stage. The challenge lies in the first stage: obtaining a proper compression space by determining the parameter values (nine values in this example) means selecting from about 300,000 combinations in theory.

We can design an algorithm that creates a scoreboard for all compression patterns. Then, the algorithm traverses all points in a timeline and performs analysis and recording. Eventually, the algorithm can select the best pattern through statistics, analysis, and comparison. Here, this process involves some obvious problems:

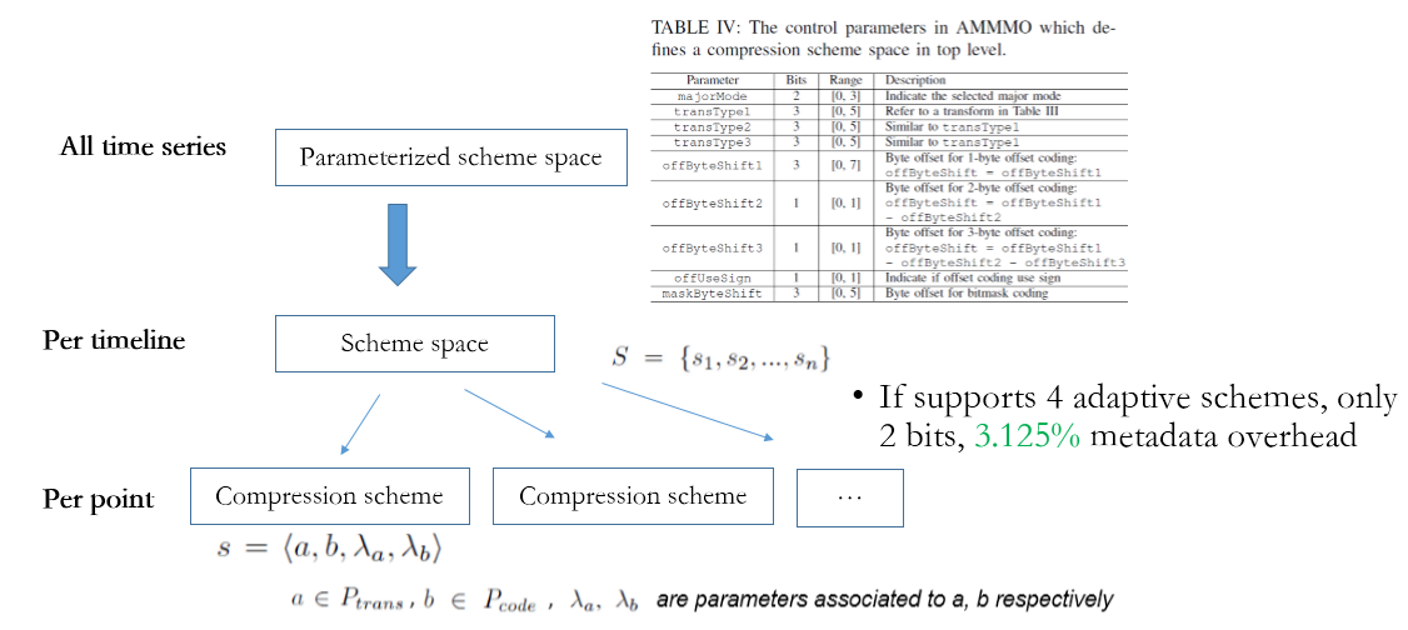

We can simplify the preceding pattern and space selection algorithm into the structure shown in the following diagram. In this way, we can treat the problem as a classification problem with multiple targets. Each parameter is a target, and the value range of each parameter defines the available classes. Deep learning has proved highly effective in image classification and semantic understanding. Similarly, we can implement pattern and space selection with deep learning as a multi-label classification process.

Then, what kind of network should we use? Since the main relations to identify are delta/xor, shift, and bitmask, a convolutional neural network (CNN) is not a good fit, while a fully connected multilayer perceptron (MLP) is. Feeding in every point of a timeline is impractical: one hour alone holds 3,600 points of 8 bytes each, which is a huge input. Because segments within the same timeline are similar, we take 32 points as the basic processing unit.
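As a rough illustration of the 32-point processing unit, the sketch below cuts a timeline into blocks and flattens each block into 32 x 8 = 256 byte-valued features for an MLP input layer. The byte order, the scaling to [0, 1], and the dropping of an incomplete final block are assumptions for the example, not details taken from the paper.

```python
import struct

BLOCK_POINTS = 32   # basic processing unit from the text
POINT_BYTES = 8     # one 8-byte value per point

def to_blocks(values):
    """Split a timeline into full 32-point blocks and flatten each
    block into 256 features, one per byte, scaled to [0, 1]."""
    blocks = []
    for start in range(0, len(values) - BLOCK_POINTS + 1, BLOCK_POINTS):
        chunk = values[start:start + BLOCK_POINTS]
        raw = struct.pack(f"<{BLOCK_POINTS}d", *chunk)  # 256 raw bytes
        blocks.append([b / 255.0 for b in raw])
    return blocks

one_hour = [20.0 + 0.01 * i for i in range(3600)]  # one point per second
blocks = to_blocks(one_hour)
print(len(blocks), len(blocks[0]))  # 112 256
```

Working at the byte level keeps relations such as shifts and masks visible to the network, where a single floating-point feature per point would hide them.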

Next, how can we create training samples and how can we determine labels for samples?

We introduced reinforcement learning instead of supervised learning for training for the following reasons.

Then, which type of reinforcement learning should we select: DQN, Policy Gradient, or Actor-Critic? As we analyzed earlier, DQN does not apply when rewards and actions are discontinuous. Here, parameters such as majorMode 0 and 1 lead to completely different results, so DQN is ruled out. In addition, compression problems are not easy to evaluate and the network is not complex, so Actor-Critic is not required. We therefore chose Policy Gradient.

A common Policy Gradient loss uses a slowly rising baseline as the metric for judging whether the current action is reasonable. That approach does not suit this problem and performs poorly, because a block has too many theoretical states (256^256). Therefore, we designed our own loss.

After we obtain the parameters of each block, we need to consider the correlation between the blocks. We can use statistical aggregation to obtain the final parameter settings for the entire timeline.

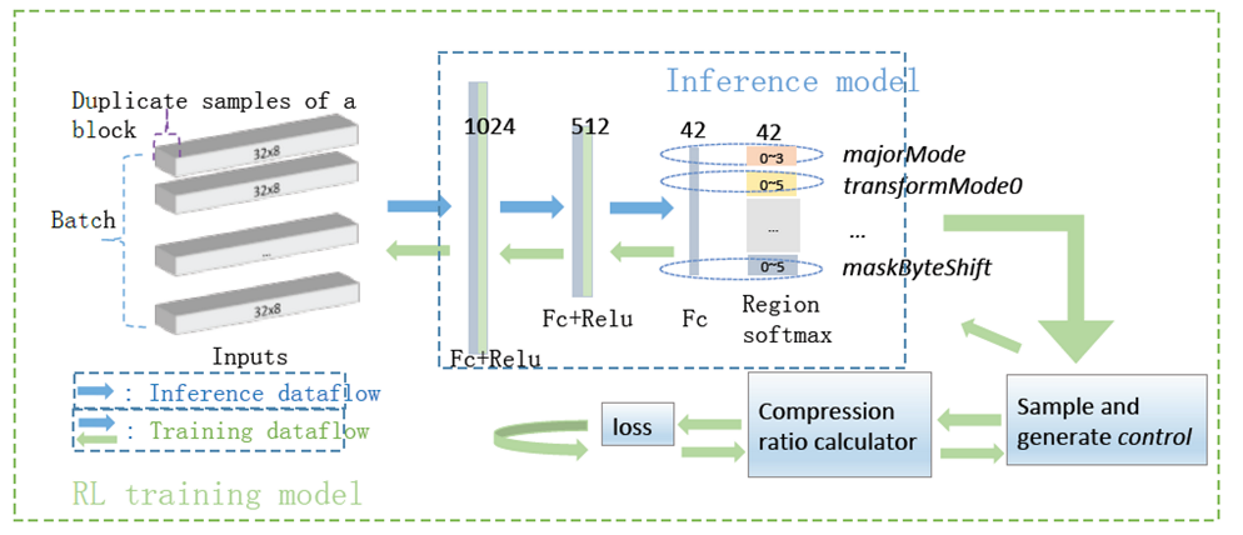

The following figure shows the entire network framework.

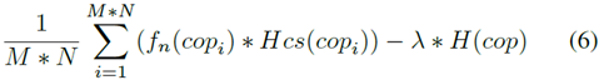

On the training side, M blocks are randomly selected, each block is duplicated N times, and the duplicates are fed into a fully connected network with three hidden layers. Region softmax is used to obtain the probabilities of the choices for each parameter. Then, the value of each parameter is sampled based on those probabilities. The resulting parameter values are passed to the underlying compression algorithm, which compresses the block and returns a compression value. The N duplicates of each block are compared to calculate the loss, and then backpropagation is performed. The overall design of the loss is:

fn(copi) describes the compression effect: the feedback is positive if it is higher than the average over the N duplicates. Hcs(copi) is the cross-entropy: the higher the probability assigned to a high-scoring choice, the more certain and the better the result, and vice versa. H(cop) is the cross-entropy used as a regularization term, which keeps the network from hardening prematurely and settling into a local optimum.
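The ingredients named above can be sketched as follows. This is a generic policy-gradient loss with a mean-of-N baseline and an entropy bonus, written under stated assumptions; it is not the paper's exact formula. `region_softmax`, `sample_choices`, `sketch_loss`, and `entropy_weight` are hypothetical names, and the compression scores are supplied by the caller rather than by a real compressor.

```python
import math
import random

def region_softmax(logits, region_sizes):
    """Apply softmax separately inside each parameter's slice of the
    output layer, giving one probability distribution per parameter."""
    probs, start = [], 0
    for size in region_sizes:
        region = logits[start:start + size]
        peak = max(region)                       # for numerical stability
        exps = [math.exp(x - peak) for x in region]
        norm = sum(exps)
        probs.append([e / norm for e in exps])
        start += size
    return probs

def sample_choices(probs, rng):
    """Sample one value per parameter from its distribution."""
    return [rng.choices(range(len(p)), weights=p)[0] for p in probs]

def sketch_loss(probs, samples, scores, entropy_weight=0.01):
    """samples: N sampled choice lists for the same block;
    scores: the N compression scores those choices achieved."""
    mean_score = sum(scores) / len(scores)       # baseline over N duplicates
    loss = 0.0
    for choices, score in zip(samples, scores):
        # Negative log-probability of the sampled choices.
        neg_log_prob = -sum(math.log(p[c]) for p, c in zip(probs, choices))
        # Better-than-average samples are pushed up, worse ones down.
        loss += (score - mean_score) * neg_log_prob
    entropy = -sum(q * math.log(q) for p in probs for q in p if q > 0)
    # The entropy bonus discourages collapsing to one choice too early.
    return loss / len(samples) - entropy_weight * entropy

rng = random.Random(0)
probs = region_softmax([0.0, 0.0, 1.0, 1.0, 1.0], [2, 3])
samples = [sample_choices(probs, rng) for _ in range(4)]
print(probs[0])  # [0.5, 0.5]
print(sketch_loss(probs, samples, scores=[2.0, 2.5, 1.5, 2.0]))
```

Comparing each sample with the mean of its own N duplicates avoids needing a global baseline, which matters when the number of possible block states is as large as described earlier.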

On the inference side, all or partial blocks of a timeline can be input into the network to obtain parameter values, which can be used for statistical aggregation to obtain the parameter values of the timeline.
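The text does not say which statistic is used for the aggregation. As one plausible stand-in, the sketch below takes a per-parameter majority vote across blocks. The function name `aggregate` and the tuple layout are assumptions for illustration.

```python
from collections import Counter

def aggregate(block_params):
    """block_params: one tuple of parameter values per block.
    Returns, for each parameter position, the most frequent value."""
    n_params = len(block_params[0])
    return tuple(
        Counter(bp[i] for bp in block_params).most_common(1)[0][0]
        for i in range(n_params)
    )

# Three blocks, two parameters each (hypothetical values).
print(aggregate([(0, 3), (1, 3), (1, 2)]))  # (1, 3)
```

Voting per parameter ignores correlations between parameters. A different aggregate may be needed if certain parameter combinations only make sense together.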

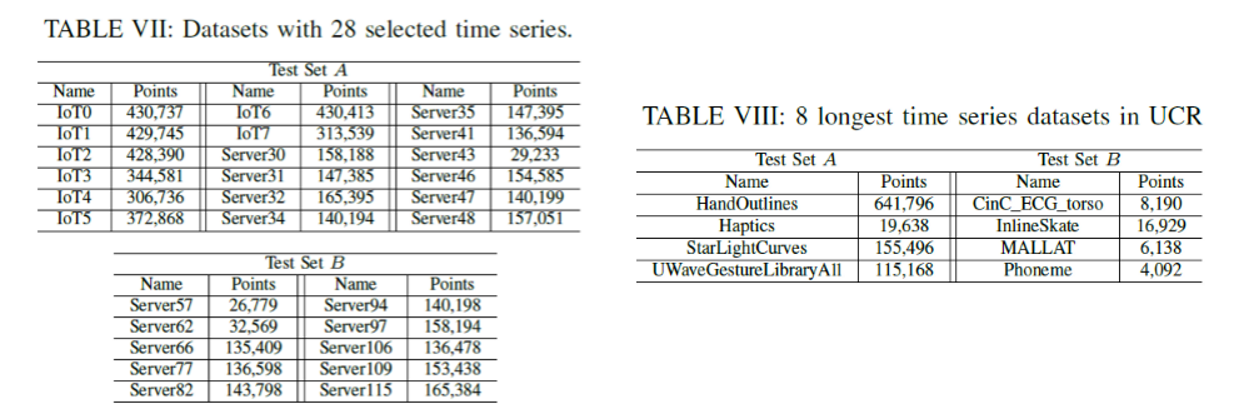

We obtained the test data by randomly selecting a total of 28 timelines from two large business scenarios, Alibaba Cloud IoT and servers. We also selected the UCR data set, the most commonly used one in time series analysis and mining. The basic information is:

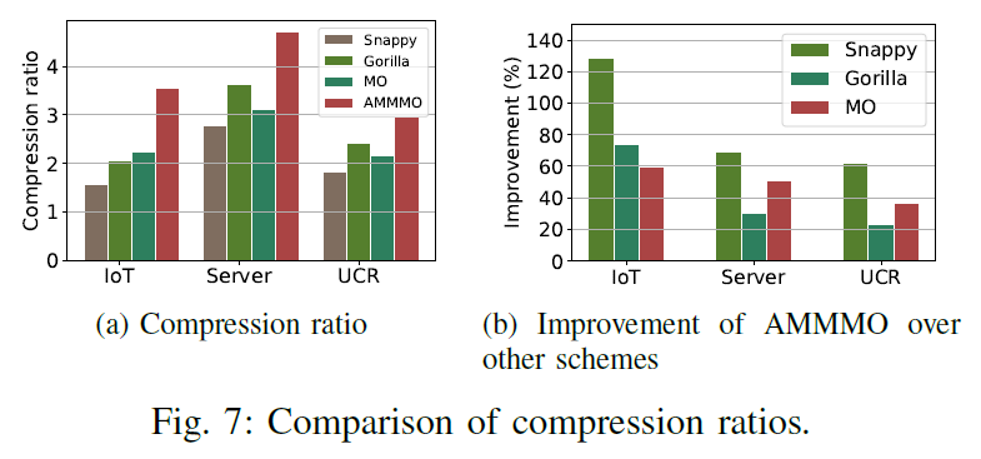

We selected Gorilla, MO, and Snappy as the comparison algorithms. Since AMMMO is a two-stage compression framework and various algorithms can be used for parameter selection at the first stage, we compared four options: Lazy (simply setting some universal parameters), rnd1000Avg (the average effect over 1,000 random samples), Analyze (hand-written analysis code), and ML (the deep reinforcement learning algorithm).

In terms of overall compression ratio, AMMMO's two-stage adaptive multi-mode compression significantly outperformed Gorilla and MO, improving the average compression ratio by about 50%.

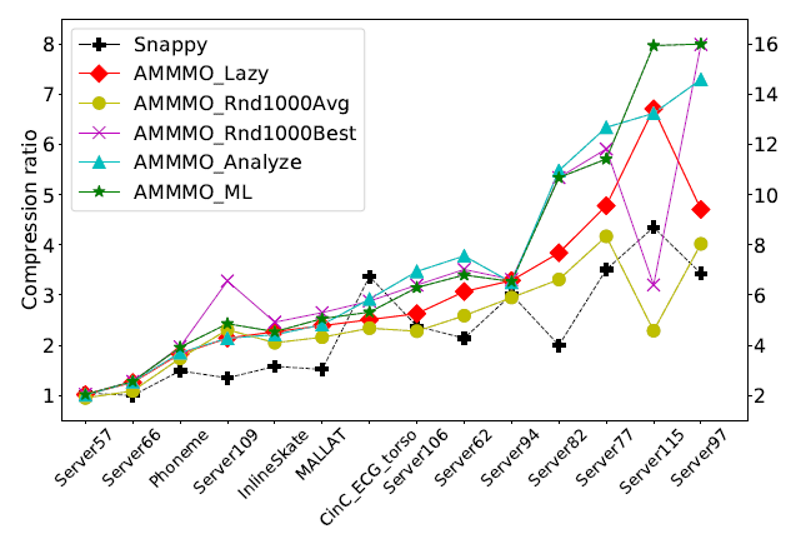

Then, how does ML perform? The following figure compares the compression effects of the parameter selection methods on test set B. In general, ML is slightly better than Analyze and much better than rnd1000Avg.

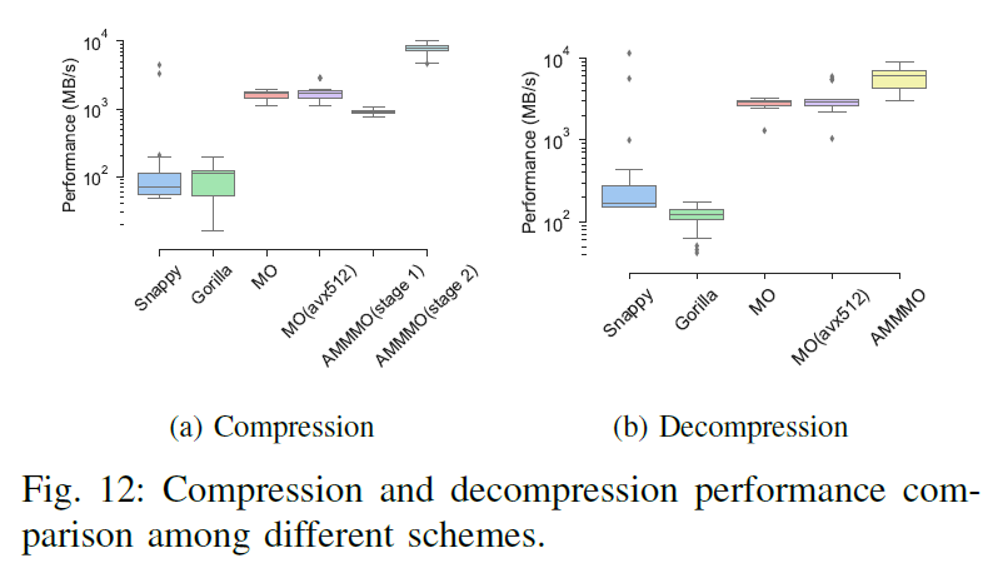

Following the design concept of MO, AMMMO removes bit-packing. This allows AMMMO to run at high speed on CPUs and also makes it well suited to parallel computing platforms such as GPUs. In addition, AMMMO is divided into two stages. The first stage is slower, but most of the time the global compression parameters can be reused. For example, the parameters obtained from a specific device's data over the last two days can be reused. The following figure shows the overall performance comparison. The experimental environment is an Intel 8163 CPU with an Nvidia P100 GPU, and the AMMMO GPU code runs on the P100.

As shown in the preceding figure, AMMMO achieved the Gbit/s-level processing performance on both the compression and decompression ends, and the values of performance metrics are good.

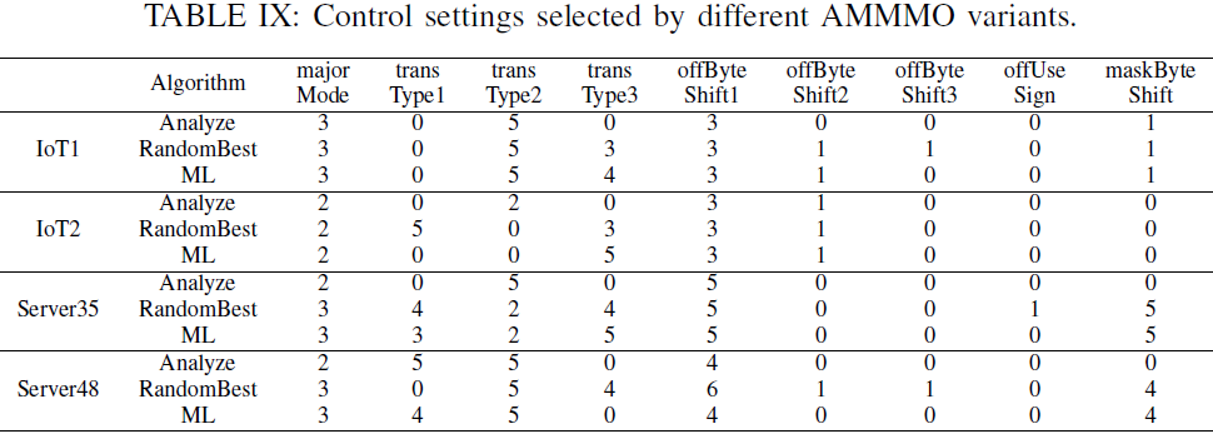

The network trained by deep reinforcement learning achieved a good final effect. So, did it learn meaningful content? The following table compares the performance of the three algorithms on several test sets. We can see that the parameter selection of ML is similar to those of Analyze and RandomBest, especially in the selection of byte offset and majorMode.

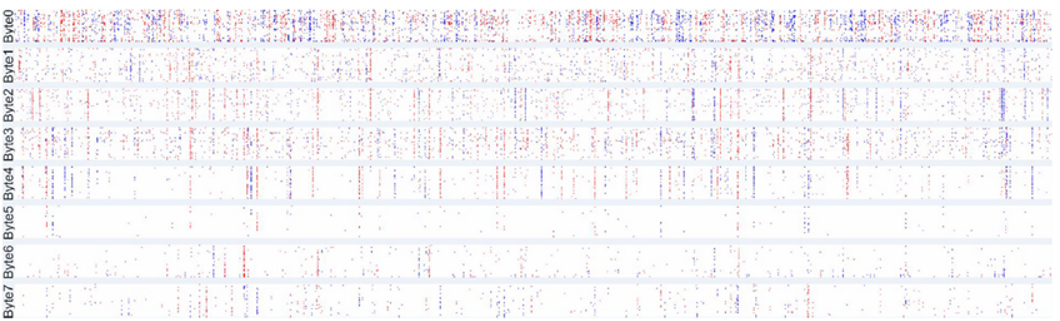

So, what do the parameters of the trained fully connected network represent? We visualized the first-layer parameters as a heatmap, as shown in the following figure. Positive parameter values are displayed in red and negative values in blue; the larger the magnitude, the brighter the color.

We can see that the values of the 32 points line up in regular vertical stripes within the same byte position. Mixing occurs across bytes, which suggests that delta or xor computation takes place at the corresponding positions. The parameters for Byte0, which changes most often, are also active.

The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
