Recently, the paper on an intelligent parameter tuning ResTune system developed by the Intelligent Database and Database Autonomy Service (DAS) Team was accepted by SIGMOD 2021. SIGMOD is the first of the global top three database conferences and the only one providing a double-blind review, making its authority beyond doubt.

The acceptance of the ResTune paper illustrates our technical accumulation and depth in intelligent database management and control. It is also a milestone in the autonomous database and intelligent O&M of Alibaba Cloud. The intelligent parameter tuning feature has been implemented in DAS. It is the first intelligent parameter tuning feature of database configuration parameter officially launched in the industry, which indicates the technical leadership of Alibaba Cloud in the autonomous database.

Parameter tuning services are widely applied in the business scenarios of Alibaba, such as the optimization of performance and configuration parameter of the database system, the hyper-parameter selection of machine learning model and deep neural network, the adaptive adjustment of parameters in the recommendation system and cloud scheduling system, and simulation optimization and parameter optimization of industrial control and supply chains. How to support the needs of customers in the production environment is a research hotspot of AI for the system in academia.

In 2021, the ResTune intelligent parameter tuning is developed by Alibaba Cloud DAMO Academy, Database and Storage Laboratory, and Intelligent Database Team (ResTune: Resource Oriented Tuning Boosted by Meta-Learning for Cloud Databases). It is mainly used to optimize the performance parameters of OLTP database systems, including RDS MySQL, RDS PostgreSQL, PolarDB MySQL, and PolarDB-O. This work was published in SIGMOD 2021 (Research Track), a top-level conference in the database field, and the technology is implemented in DAS products of Alibaba Cloud.

Database systems (such as MySQL) provide over 200 configuration parameters. Different parameter combinations and changing business load characteristics jointly determine the performance and resource usage of the database systems. DBAs manually select a suitable set of parameters based on business and staff experience for business within Alibaba. Business is becoming more diversified as databases accelerate migrating to the cloud. Only relying on manual parameter adjustment of DBAs encounters the bottleneck in the horizontal expansion. At the same time, due to the differences in the experience of DBAs, it is difficult to find the optimal parameters for a variety of business loads. Automated parameter tuning is essential for cloud vendors to achieve the principle of customer first. It adaptively provides personalized optimization parameters in different instance environments for various business loads constantly changing over time.

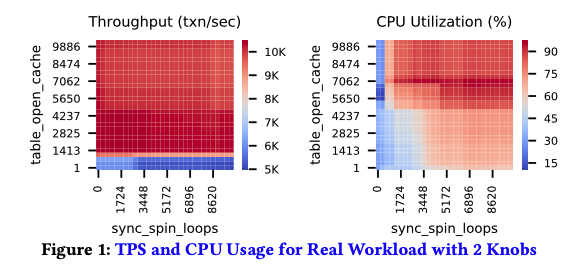

The database system needs to consider both performance (such as transactions per second (TPS) and latency) and resource usage (including CPU, memory, and I/O). Admittedly, performance optimization is important. However, the TPS of the real load is often limited by the request rate of users, so it is difficult to achieve peak performance. Figure 1 shows the TPS and CPU utilization with different values of the two parameters. The CPU utilization corresponding to the red area with the highest TPS varies significantly from 15% to 75%. In the case of the same TPS, there is a lot of room for optimization of resource usage. The total cost of ownership (TCO) is an important metric of ApsaraDB from a perspective of cost and a major advantage of ApsaraDB.

Optimizing resource usage is of great significance to reduce ApsaraDB TCO and improve cost advantage. Most instances on the cloud encounter the issue of over-provision. In addition, excessive resource usage may cause ApsaraDB exceptions and performance degradation caused by resource contention. Optimizing the resource usage of databases can effectively reduce or avoid failures caused by such issues and improve stability.

We analyzed that the goal of parameter tuning is to consider optimizing resource usage and performance at the same time. As mentioned above, performance (such as TPS) is often limited by the request rate of the client and cannot reach peak performance as a result. Therefore, we need to find out the database configuration parameters with the lowest resource usage, which meet the requirements of SLA.

In addition, the parameter tuning needs to be as fast as possible. Otherwise, it violates the reduction of resource usage. Usually, the parameter tuning system requires hundreds of iteration steps to find a good configuration. Each iteration takes about three to five minutes to replay the workload, which usually requires day-based periods for parameter tuning training. However, if you need to satisfy the demand for online troubleshooting, you are often required to find out problems within one hour and recover them. As a cloud vendor, we use knowledge migration and learning based on the historical data of existing service load parameter tuning, which can effectively accelerate the parameter tuning process and find out good database parameter configurations as quickly as possible.

Database parameter tuning is a relatively popular research area recently, and a lot of work has been published in the past few years. The work can be divided into three categories according to technical ideas: heuristic methods based on search, methods based on Bayesian optimization, and methods based on the reinforcement learning model.

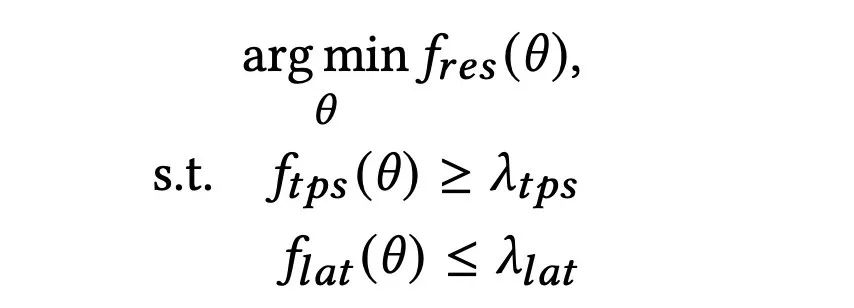

We define the problem as an optimization problem with specific constraints: where the constraint condition constants can be set as the TPS and latency values under the default configuration parameters.

ResTune converts the optimization of resource usage and SLA satisfaction into a constrained optimization (Constrained Bayesian Optimization). Instead of the traditional Bayesian optimization algorithm, the constrained EI function (Constrained EI (CEI)) is used here, and we add the constrained information to the commonly used EI utility function (Acqudition Function). Please see Chapter Five of the paper for more details.

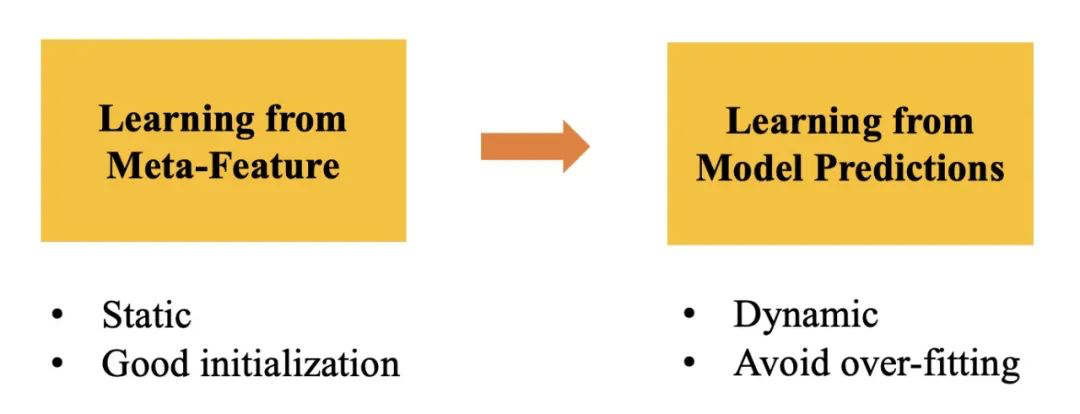

On the other hand, ResTune also designs a Gaussian weight model, combining static weight and dynamic weight, to make better use of the existing data. The surrogate function of the target workload is weighted and averaged using the Gaussian process model of ensemble history. The core issue here is how to define weights.

At a cold start (when no data is observed), static weight learning assigns weights based on the meta-feature distance of the task workload. The calculation of the meta-feature requires the analysis of the workload to obtain the workload feature vector.

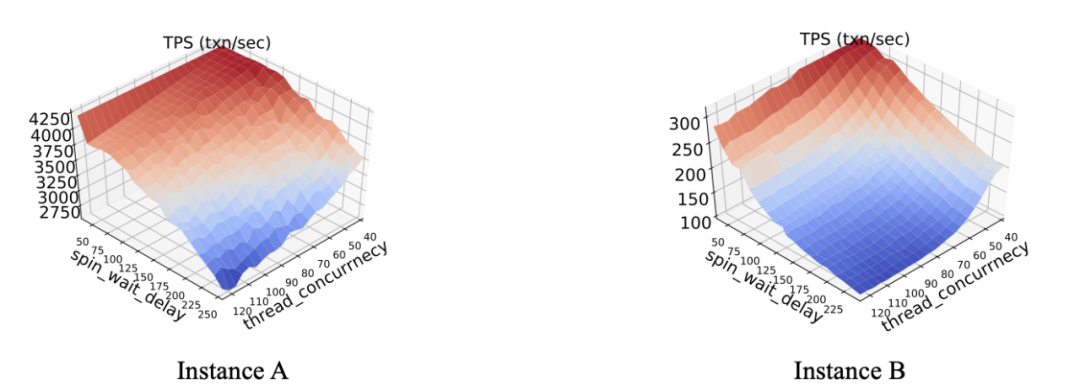

When a certain amount of data (such as ten pieces of data) is accumulated, ResTune uses a dynamic weight learning strategy to compare the similarity between the predictions of the history learner and the real observations of the target task through the partial order relation. As shown in the following figure, although the absolute values of TPS are different, the surface trend is the same, so the partial order relation is similar. With a dynamic allocation strategy, the weights are dynamically updated as the number of observations on the target workload increases. ResTune finally gets a meta-learner through these two strategies, which can be used as an experienced proxy model. Please refer to Chapter Six of the paper for more details.

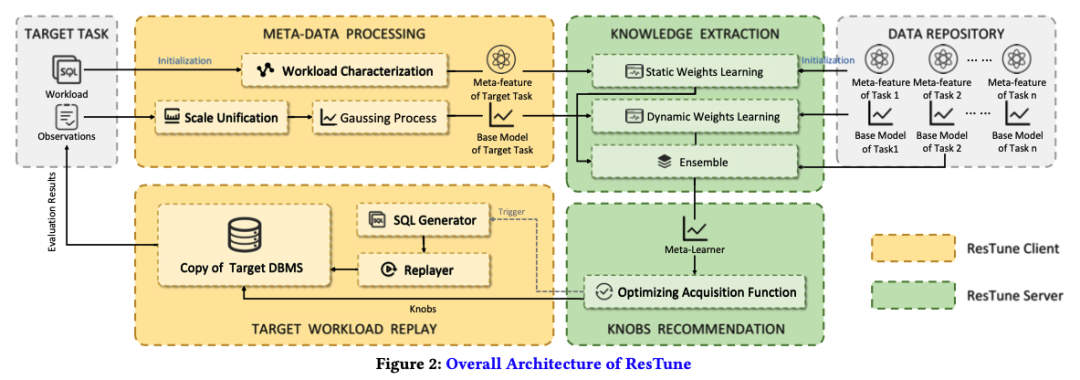

ResTune abstracts the parameter tuning problem into an optimization problem with constraints, minimizing resource usage while meeting the SLA constraints. The following figure shows the system architecture design of ResTune. The ResTune system includes two main parts: ResTune Client and ResTune Server.

One Iteration Step in a Parameter Tuning Task: When a parameter tuning task starts, the system copies the target database and then collects the target workload over a period to the user environment for future replay.

In each round of iteration, the target task obtains the meta-feature and base model through the Meta-Data Processing module as the input of the Knowledge Extraction module. The Knowledge Extraction module is responsible for calculating the static and dynamic weights when the current task is integrated with the historical task in the base model and weighting and summing the base models to obtain the meta model. A set of parameter configurations are recommended according to the Meta Learner in the Knobs Recommendation module. The Target Workload Replay module verifies the recommended parameters and writes the results to the historical observation data of the target task.

The training process above repeats several iteration steps and terminates when the maximum training step is reached or the lifting effect converges. After the target task is trained, ResTune collects the meta-feature and observation data of the current task to the data repository as historical data.

The specific features of each module are listed below:

We compared the performance and speed of ResTune and other state-of-the-art (SOTA) systems in multiple scenarios.

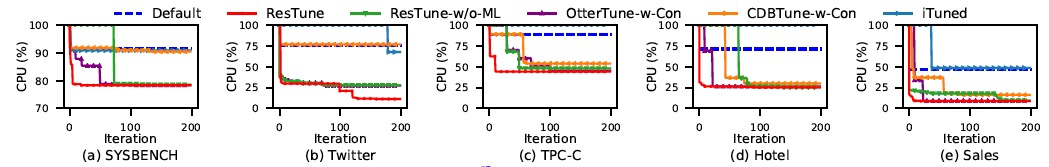

First, we select CPU utilization as the optimization target in a single-task scenario to verify the effectiveness of ResTune in solving optimization problems with SLA constraints. Here, we test Sysbench, Twitter, TPC-C, and two real workloads—Hotel Booking and Sales. The ResTune method can get the best results and the best efficiency on all loads.

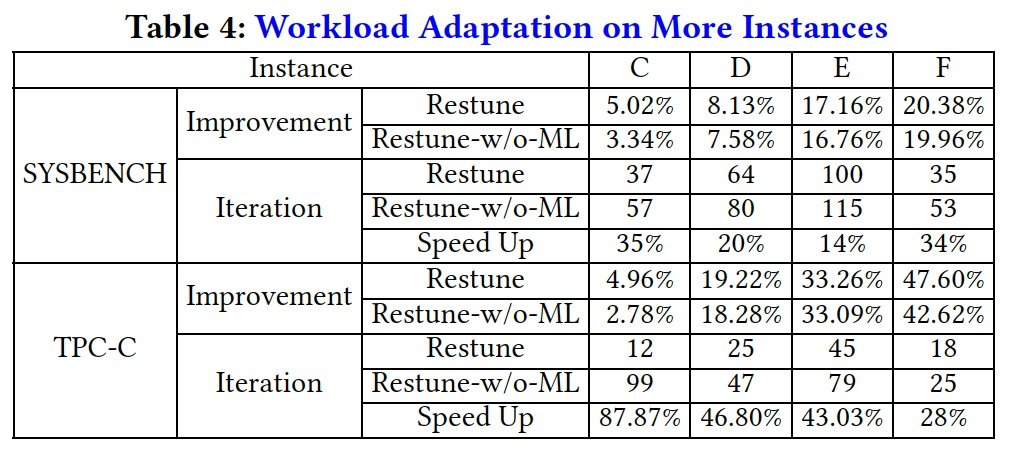

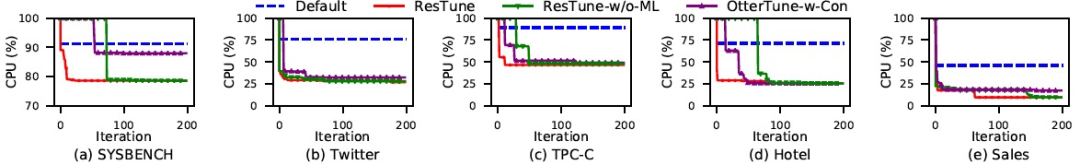

Since there are a large number of users and various instances on the ApsaraDB, it is very important whether our proposed method can migrate between different workloads and hardware. Similarly, taking CPU utilization as the optimization goal, we test the migration effect between different machine hardware. We can see that the proposed meta-learning algorithm has brought significant improvements in training speed and training effect. This enables the entire ResTune parameter tuning process to be completed within about 30-50 steps, while non-migration scenarios usually require hundreds of iteration steps.

Similarly, in the migration experiment between different workloads, our meta-learning method also caused a significant rise in training speed.

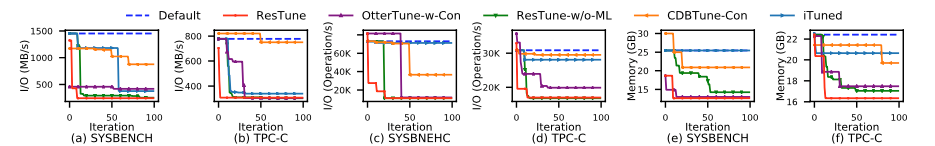

In addition to CPU resources, we test the optimization effects of parameter tuning on memory resources and IO resources. According to the following figure, ResTune reduces IOPS by 84%-90% for the parameter tuning task of I/O resource optimization. ResTune reduces memory utilization from 22.5G to 16.34G for the parameter tuning task of memory resource optimization. We also estimate the cost reduction of TCO in the paper.

Intelligent parameter tuning technology has been implemented on DAS products. We launch the technology in different stages and with detailed features. It mainly includes the template feature and intelligent parameter tuning feature based on stress testing. Alibaba Cloud is the first vendor to launch the parameter tuning feature and is a leading manufacturer in this field.

The template parameter feature is a parameter tuning scenario we launched in the first phase. Prior to this, the RDS MySQL database on the cloud only had one set of unified parameter templates, which was difficult to satisfy the different user service loads on the cloud. Therefore, we select different types of benchmarks for the offline training of parameter tuning of the RDS instance type, which users use most frequently.

We divide the user load into six typical scenarios, including transaction, social networking, and stress testing. We select the optimal configuration for each typical scenario through offline training and provide users with choices based on their business characteristics. Thus, we extend the previous unified set of RDS parameter templates to a variety of typical OLTP business scenarios.

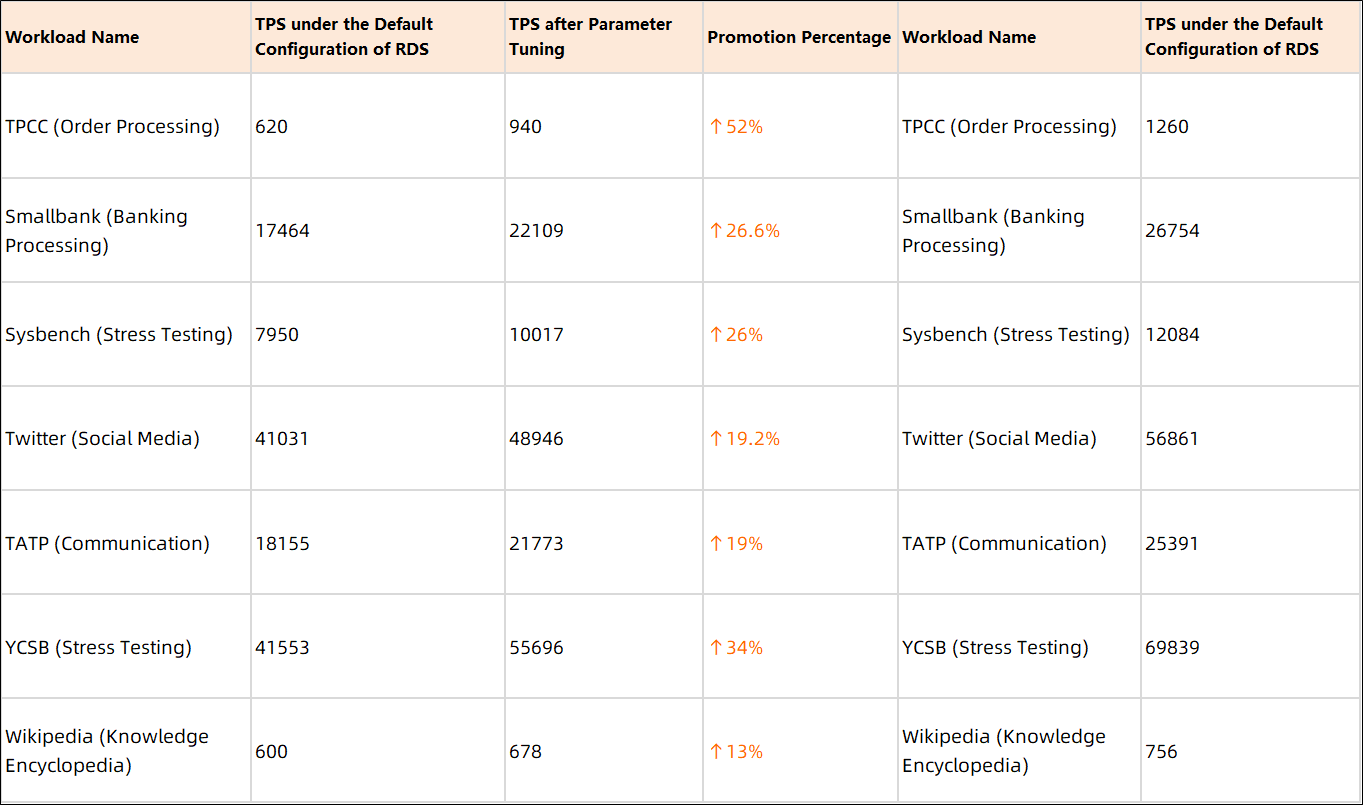

The following table lists the results of the offline parameter tuning training. There are 13%-50% improvements on different workloads. Here, we take TPS performance as the optimization target.

The preceding feature verifies the requirements of cloud users for the intelligent parameter tuning feature based on the template parameter. It is difficult for most users (except for particularly professional users) to grasp their business characteristics accurately. Therefore, users cannot choose the set of parameter templates that best suits their workload features.

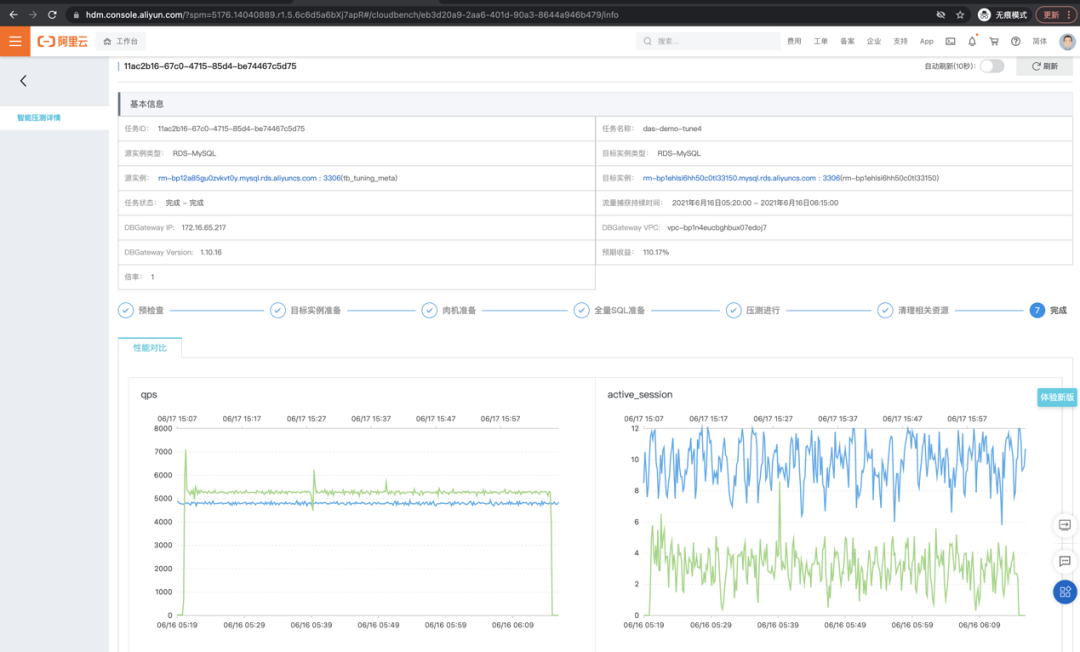

We launched the intelligent parameter tuning feature based on stress testing in DAS to solve the pain points of users. The feature is mainly responsible for collecting and replaying the real workload of users to ensure security in the user VPC environment for the business load of users. In addition, the feature helps train the optimal performance parameter configuration in a customized way. This feature is called Cloudtune intelligent parameter tuning.

In the architecture design of ResTune described above, we need to prepare a user database RDS target instance in the user VPC environment, start a pressure testing machine to replay the real workload in the user environment, and collect performance data on the target instance for parameter tuning training. DAS Master users connect the online VPC environment and backend network environment. The backend DAS app is responsible for the main control logic of parameter tuning. It mainly operates the replay stress testing in the user VPC environment to obtain the corresponding metrics (such as resource usage, TPS, and latency) and then performs iteration training by calling Cloudtune Microservice. Cloudtune Service gives the next sampling configuration and the best configuration parameters found through the algorithm model so far.

Currently, the launched parameter tuning feature is replayed by pulling the library, which is an offline operation for users and is relatively complicated. We are helping users simplify this process to realize the online dynamic parameter tuning.

The online dynamic parameter tuning technology encounters bigger challenges and stricter requirements. First, the parameter tuning effect is required to be steadily improved, the performance of the system cannot be drastically dropped in operation, and online real-time services cannot be affected. Secondly, online dynamic parameter tuning needs to automatically select key related parameters for different workloads to ensure online stability and quick convergence during the tuning. Finally, the current work assumes the user load changes infrequently. Once the user load changes, the parameter needs to be re-tuned. If you want to improve user experience, it is necessary to combine workload detection to support adaptive parameter tuning services.

The Intelligent Database Team takes database O&M and kernel intelligence as the main directions, deeply integrates the expert experience of artificial intelligence, machine learning, and database, and enables the database to have the ability of autonomy. Therefore, it realizes the self-perception, self-optimization, self-repair, and self-security of the database and ensures that services are stable, secure, and efficient. The team provides the first intelligent database control platform in the industry, named Database Autonomy Service (DAS).

Related work also includes abnormal SQL detection from massive data, one-click intelligent stress testing, database generation based on compressed sensing, sampling algorithm and SQL workload external optimization, exception detection and root cause diagnosis of graph multi-mode data, index recommendation, hot and cold data separation, intelligent scheduling based on instance knowledge graph, NLP2SQL human-computer interaction interface, computing platform integration, and one-sided RDMA memory pooling system research and development. For example, according to the works published by the DAS Team on VLDB2020, Leaper [2] effectively performs data prefetching and cache elimination and solves the performance jitter of the LSM-Tree storage engine architecture. Leaper is an important attempt to integrate the machine learning model into the OLTP database kernel in academia.

Recently, DAS designed a brand-new hot and cold data layering algorithm based on survival analysis in statistics and medical data analysis, which was integrated into Polar X Engine. According to tests, the performance improved by 10%, and the storage cost decreased by 25%. Also, in the slow SQL diagnosis work published in VLDB2020, DAS solves nearly 90% of the CPU-intensive exceptions in a large number of SQL requests.

[1] Zhang, Xinyi, Hong Wu, Zhuo Chang, Shuowei Jin, Jian Tan, Feifei Li, Tieying Zhang, and Bin Cui. "ResTune: Resource Oriented Tuning Boosted by Meta-Learning for Cloud Databases." In Proceedings of the 2021 International Conference on Management of Data, pp. 2102-2114. 2021.

[2] Yang, Lei, Hong Wu, Tieying Zhang, Xuntao Cheng, Feifei Li, Lei Zou, Yujie Wang, Rongyao Chen, Jianying Wang, and Gui Huang. "Leaper: a learned prefetcher for cache invalidation in LSM-tree based storage engines." Proceedings of the VLDB Endowment 13, no. 12 (2020): 1976-1989.

[3] Y.Zhu, J.Liu, MengyingGuo, YungangBao, WenlongMa, ZhuoyueLiu, Kunpeng Song, and Yingchun Yang. 2017. BestConfig: tapping the performance potential of systems via automatic configuration tuning. Proceedings of the 2017 Symposium on Cloud Computing (2017).

[4] SongyunDuan, VamsidharThummala, and ShivnathBabu.2009.TuningDatabase Configuration Parameters with ITuned. Proc. VLDB Endow. 2, 1 (Aug. 2009), 1246–1257.

[5] Dana Van Aken, Andrew Pavlo, Geoffrey J. Gordon, and Bohan Zhang. 2017. Automatic Database Management System Tuning Through Large-scale Machine Learning. In Acm International Conference on Management of Data. 1009–1024.

[6] Ji Zhang, Yu Liu, Ke Zhou, Guoliang Li, Zhili Xiao, Bin Cheng, Jiashu Xing, Yangtao Wang, Tianheng Cheng, Li Liu, Minwei Ran, and Zekang Li. 2019. An End-to-End Automatic Cloud Database Tuning System Using Deep Reinforce- ment Learning. In Proceedings of the 2019 International Conference on Management of Data (Amsterdam, Netherlands) (SIGMOD '19). Association for Computing Ma- chinery, New York, NY, USA, 415–432.

[7] Guoliang Li, Xuanhe Zhou, Shifu Li, and Bo Gao. 2019. QTune. Proceedings of the Vldb Endowment (2019)

[8] Tan, J., Zhang, T., Li, F., Chen, J., Zheng, Q., Zhang, P., ... & Zhang, R. (2019). ibtune: Individualized buffer tuning for large-scale cloud databases. Proceedings of the VLDB Endowment, 12(10), 1221-1234.

Senior Technical Experts from Alibaba Discuss Data Warehouse Tuning – Part 1

ApsaraDB - October 21, 2020

Alibaba Clouder - December 21, 2020

ApsaraDB - September 14, 2023

ApsaraDB - October 27, 2020

Rupal_Click2Cloud - September 15, 2023

Alibaba Clouder - April 17, 2020

DAS(Database Autonomy Service)

DAS(Database Autonomy Service)

Self-driving Database Platform: Self-repair, Self-optimization, and Self-security

Learn More Database Migration Solution

Database Migration Solution

Migrating to fully managed cloud databases brings a host of benefits including scalability, reliability, and cost efficiency.

Learn More Managed Service for Grafana

Managed Service for Grafana

Managed Service for Grafana displays a large amount of data in real time to provide an overview of business and O&M monitoring.

Learn More Bastionhost

Bastionhost

A unified, efficient, and secure platform that provides cloud-based O&M, access control, and operation audit.

Learn MoreMore Posts by ApsaraDB