By Yu Kai

Co-Author: Xieshi, Alibaba Cloud Container Service

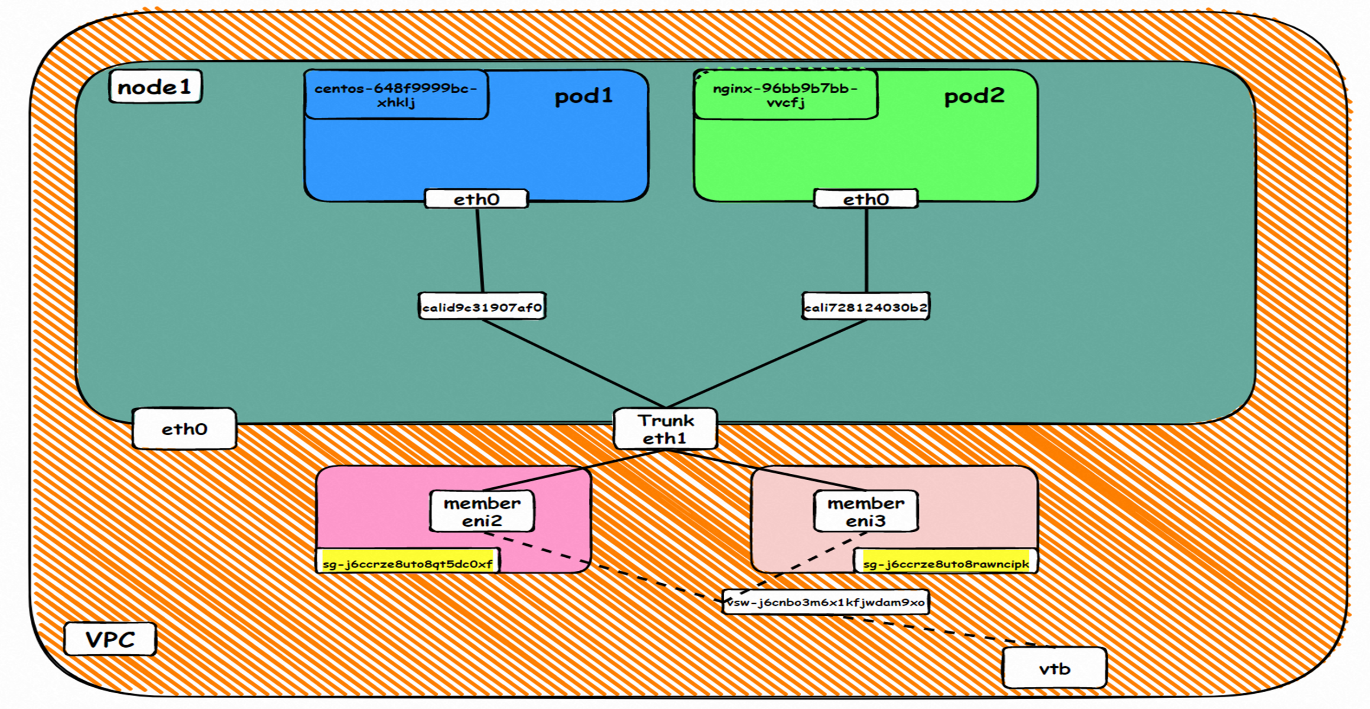

This article is the fifth part of the series. It describes the data plane forwarding links of the Kubernetes Terway Elastic Network Interface (ENI) Trunking mode. Understanding these forwarding links serves two purposes. First, it explains why customers see different access results in different scenarios and helps them further optimize their business architecture. Second, it lets customer O&M staff and Alibaba Cloud engineers choose points along the link at which to manually deploy probes and observe traffic, so that problems can be classified and located more precisely.

Trunk ENIs are virtual network interfaces that can be bound to Elastic Compute Service (ECS) instances deployed in virtual private clouds (VPCs). Compared with a regular Elastic Network Interface (ENI), a Trunk ENI significantly improves instance resource density. After you enable the Terway Trunk ENI feature, the pods you specify use Trunk ENI resources. Custom configurations are optional for pods. By default, the Trunk ENI feature is disabled for a pod, and the pod uses an IP address allocated from a shared ENI. Terway uses the Trunk ENI to allocate an IP address to a pod only after you declare that the pod uses custom configurations, and shared ENIs and Trunk ENIs can be used at the same time. The two modes share the maximum number of pods on the node, so the total deployment density is the same as before the feature was enabled.

Industries such as finance, telecommunications, and government have strict data security requirements. Core data is generally kept in a self-built data center, and the clients that may access it are controlled by a strict whitelist, usually by restricting the source IP addresses. When the business architecture is migrated to the cloud, the self-built data center is usually connected to cloud resources through leased lines, VPNs, and similar means. Since the pod IP address in traditional container networks is not fixed, and Network Policies only take effect inside the cluster, whitelisting becomes a great challenge. When the ENI is in Trunk mode, you can configure independent security groups and vSwitches for pods, which provides a more refined network configuration and a highly competitive container network solution.

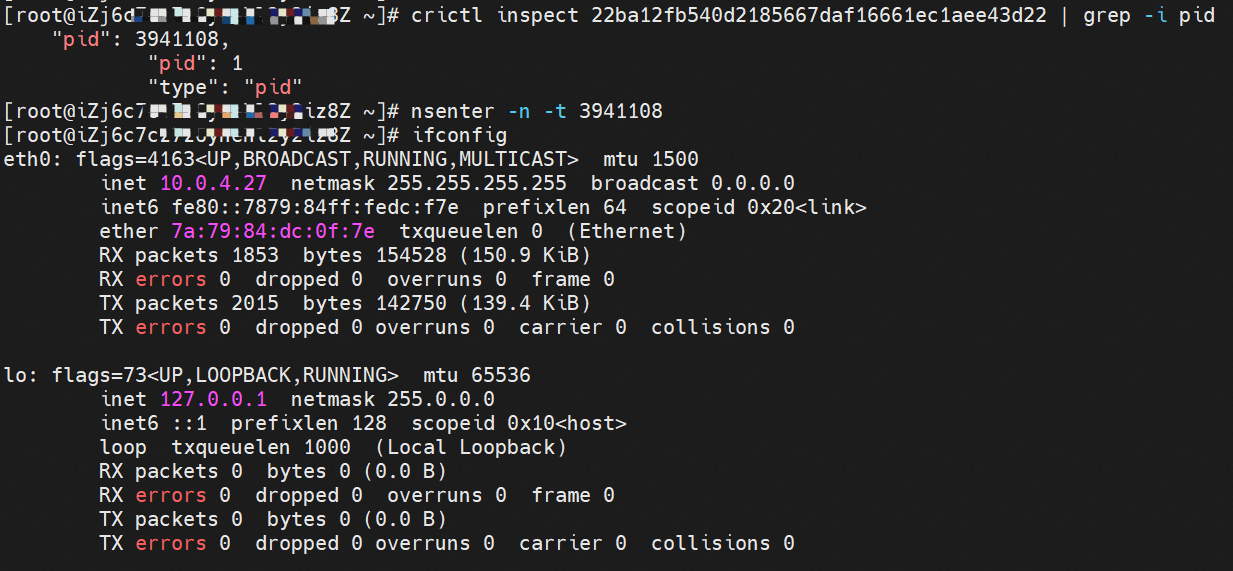

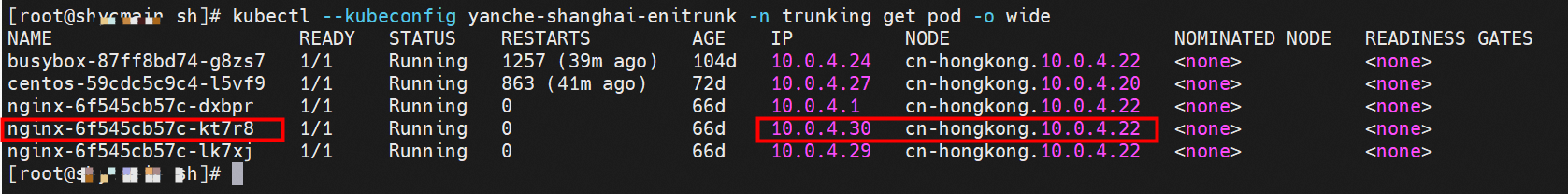

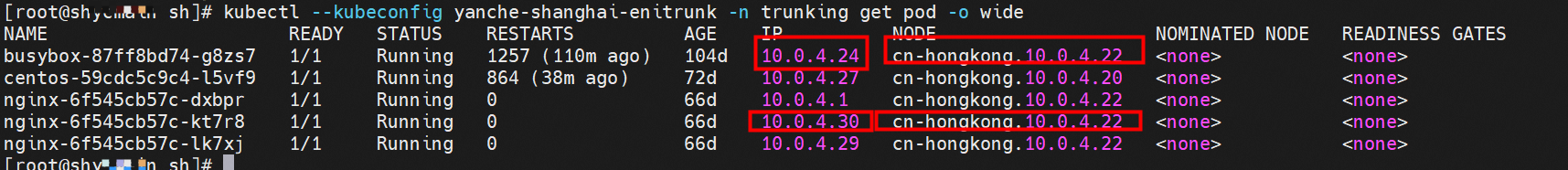

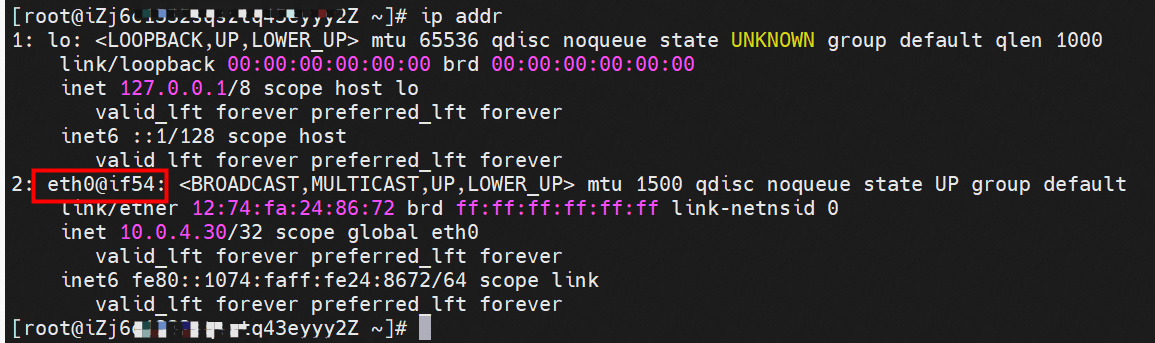

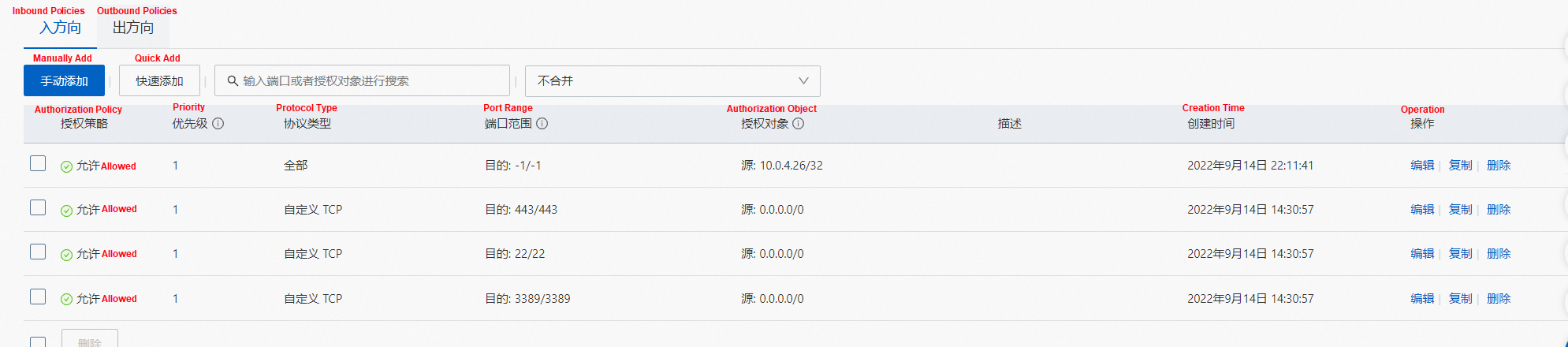

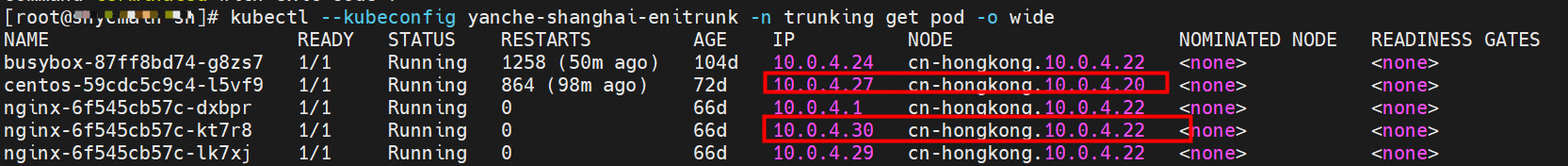

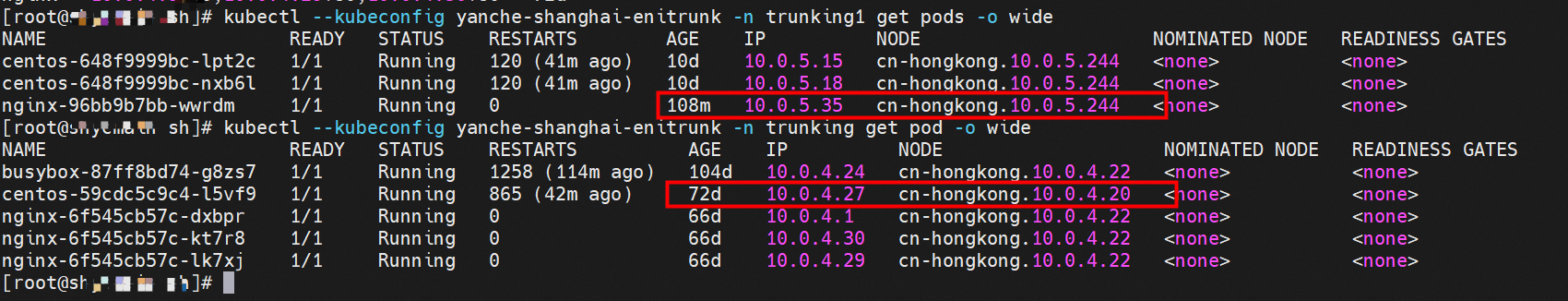

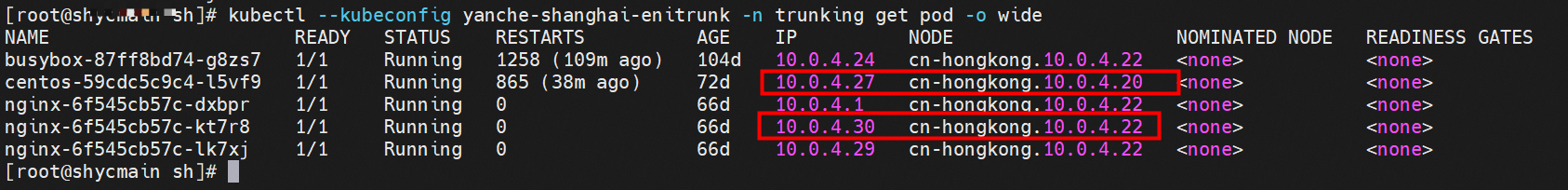

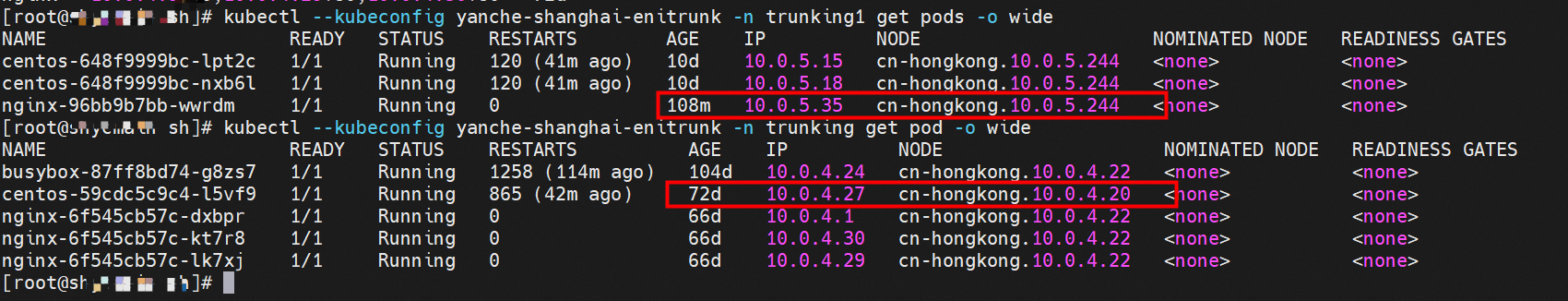

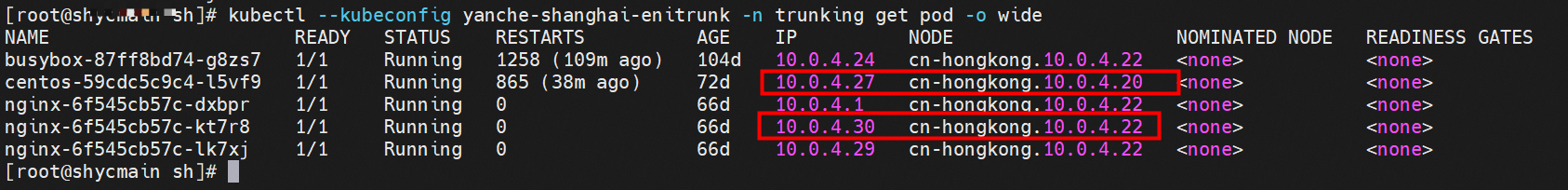

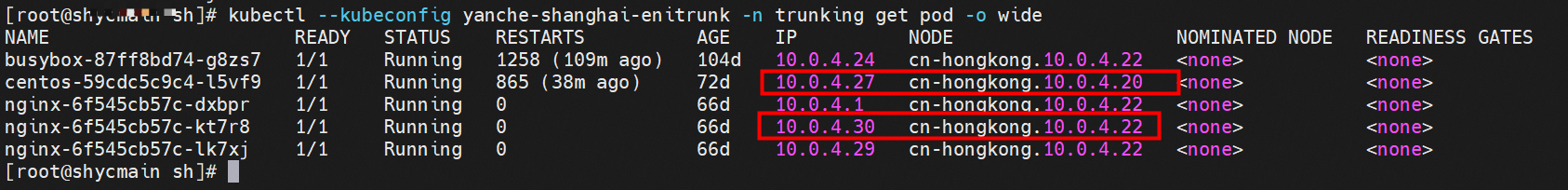

In the trunking namespace, you can see the relevant pod and node information. How the IP addresses of the application pods are networked is described in detail later.

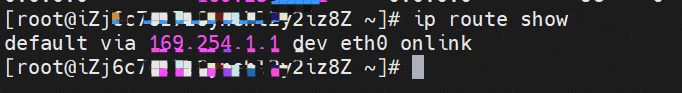

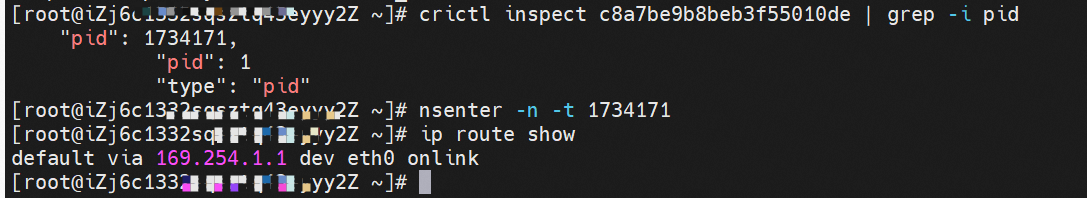

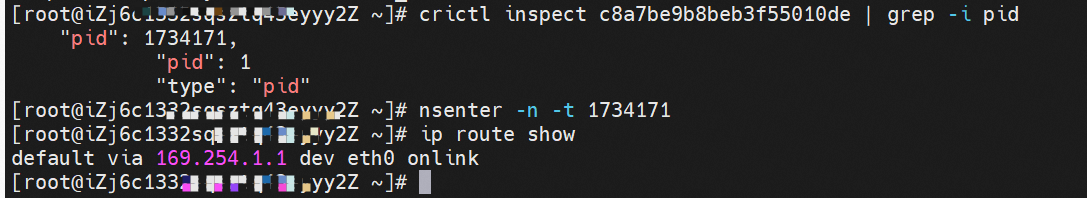

The pod has a single default route, which points to eth0. This means that the pod reaches all destination address ranges through eth0.
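One way to check this on a live pod is to inspect the output of `ip route` inside the pod's network namespace. The sketch below only parses text of that shape; the sample routing table, including the gateway address 169.254.1.1, is a hypothetical illustration and not taken from the cluster shown in the screenshots.

```python
from typing import List


def default_route_devices(ip_route_output: str) -> List[str]:
    """Return the devices used by 'default' routes in `ip route`-style text."""
    devices = []
    for line in ip_route_output.splitlines():
        fields = line.split()
        if not fields or fields[0] != "default" or "dev" not in fields:
            continue
        idx = fields.index("dev")
        if idx + 1 < len(fields):
            devices.append(fields[idx + 1])
    return devices


# Hypothetical sample output (assumption, not copied from the article's cluster).
SAMPLE = """\
default via 169.254.1.1 dev eth0
169.254.1.1 dev eth0 scope link
"""

print(default_route_devices(SAMPLE))  # ['eth0']
```

If the returned list contains only `eth0`, all traffic without a more specific route leaves the pod through eth0, which matches the behavior described above.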

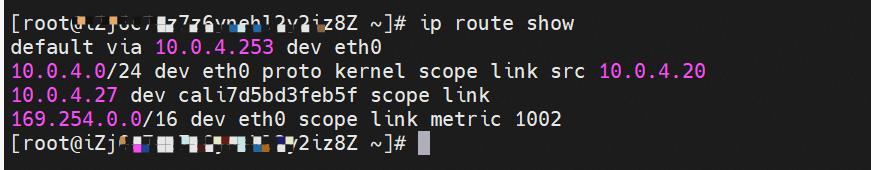

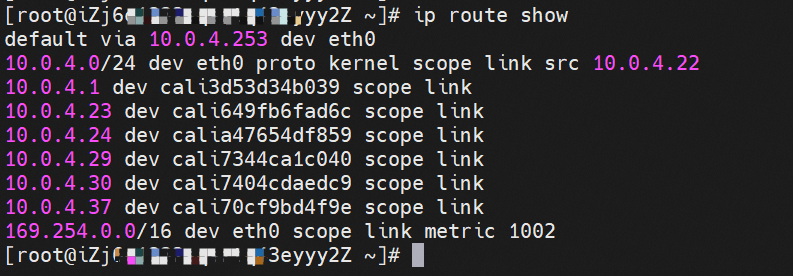

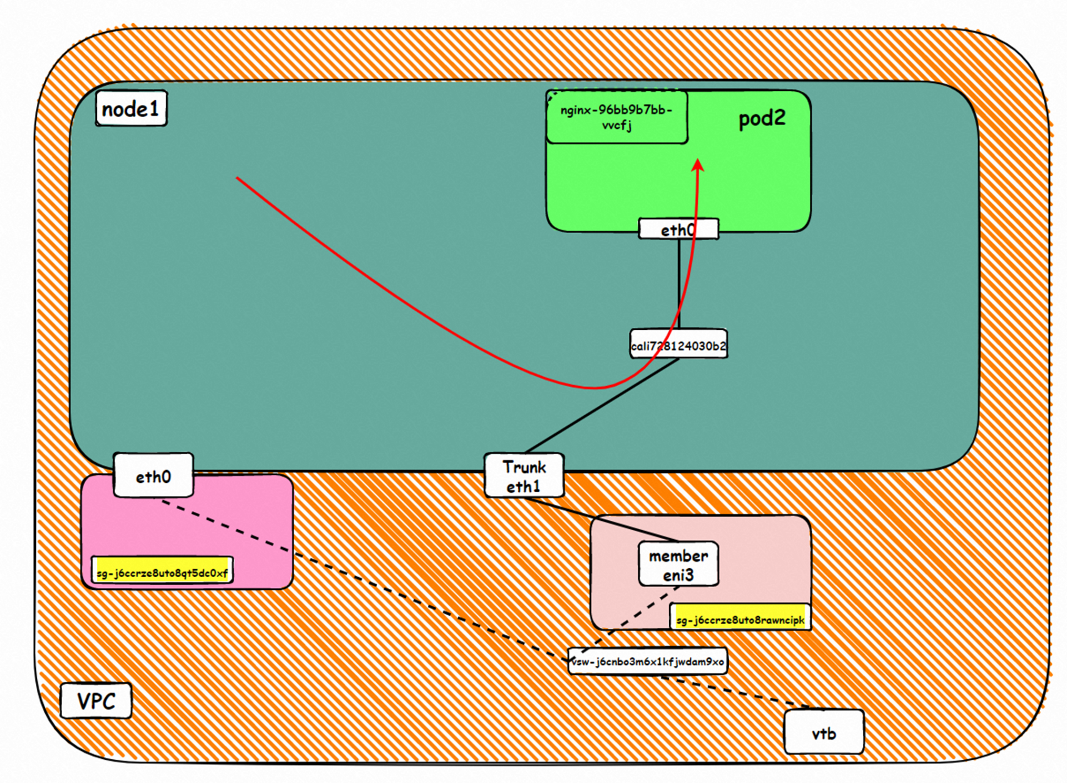

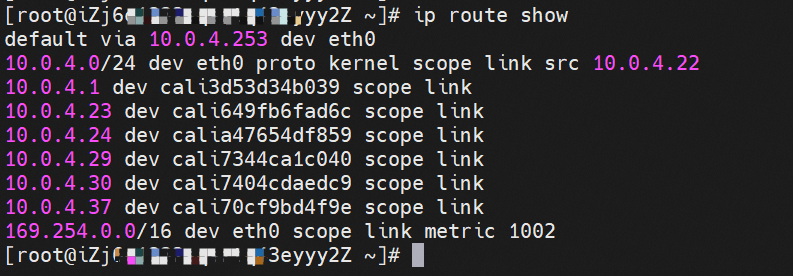

How do pods communicate with the ECS OS? At the OS level, we can see a calixxxx network interface that is attached to eth1. The communication between nodes and pods is similar to what is described in Panoramic Analysis of Alibaba Cloud Container Network Data Link (3): Terway ENIIP, so no more details are provided here. The Linux routing table of the OS shows that all traffic destined for a pod IP address is forwarded to the calico virtual network interface that corresponds to that pod. With this, the ECS OS and the pod's network namespace have a complete inbound and outbound link configuration.
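The host-side routing decision can be pictured as a longest-prefix match. The following is a minimal sketch, assuming a hypothetical host routing table in which a /32 route for one pod IP points to its calixxxx interface; the table entries are illustrative, not a dump of a real node.

```python
import ipaddress


def lookup(routes, dst):
    """Longest-prefix match: return the device of the most specific matching route."""
    addr = ipaddress.ip_address(dst)
    best = None
    for prefix, dev in routes:
        net = ipaddress.ip_network(prefix)
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, dev)
    return best[1] if best else None


# Hypothetical host routes (assumption): a default route, the node subnet,
# and a host route for one pod IP that points at the pod's calixxxx interface.
HOST_ROUTES = [
    ("0.0.0.0/0", "eth0"),
    ("10.0.4.0/24", "eth0"),
    ("10.0.4.30/32", "calixxxx"),
]

print(lookup(HOST_ROUTES, "10.0.4.30"))  # calixxxx
print(lookup(HOST_ROUTES, "10.0.4.99"))  # eth0
```

Because the /32 route is more specific than the subnet route, traffic for the pod IP is handed to the pod's own virtual interface rather than to eth0.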

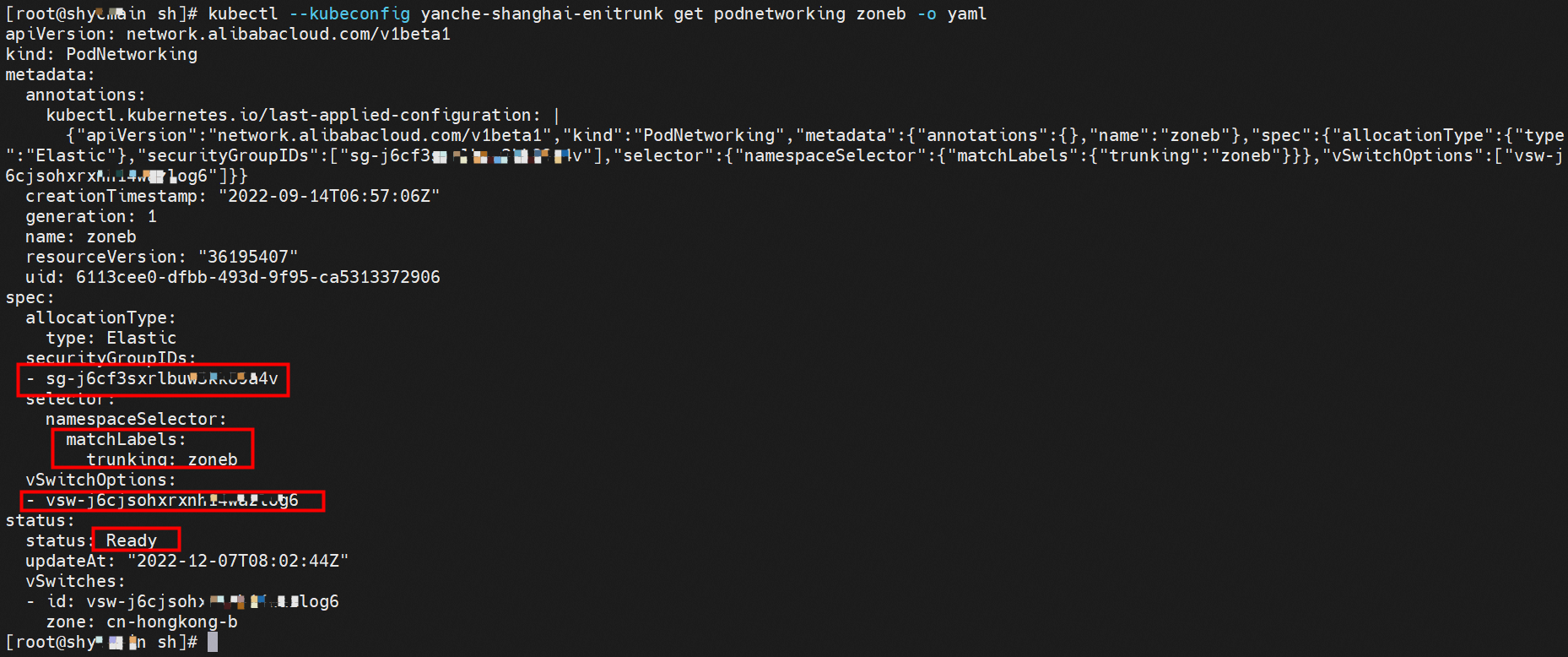

Let's focus on ENI Trunking itself. How does ENI Trunking configure the switches and security groups of pods? Terway lets you use a custom resource definition (CRD) named PodNetworking to describe network configurations. You can create multiple PodNetworkings to design different network planes. After a PodNetworking is created, Terway automatically synchronizes the network configurations. The PodNetworking takes effect on pods only after its status changes to Ready. As shown in the following figure, the type is Elastic. As long as the label of the namespace matches trunking:zoneb, the pod can use the specified security groups and switches.

When a pod is created, it is matched against PodNetworkings through labels. If the pod does not match any PodNetworking, it is assigned an IP address from a shared ENI by default. If the pod matches a PodNetworking, an ENI is assigned to the pod based on the configurations specified in that PodNetworking. For more information about pod labels, see the Kubernetes documentation on labels.
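The matching step can be sketched as plain label-selector evaluation. This is a simplified illustration, not Terway's actual implementation: the dictionary layout, the `pick_network` helper, the PodNetworking name, and the rule that either a pod selector or a namespace selector is sufficient are all assumptions made for the example. The label values are illustrative.

```python
SHARED_ENI = "shared-eni"  # hypothetical marker for the default allocation path


def labels_match(selector, labels):
    """True if every key/value pair in the selector is present in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def pick_network(pod_labels, namespace_labels, pod_networkings):
    """Return the name of the first Ready PodNetworking that selects the pod."""
    for pn in pod_networkings:
        if pn.get("status") != "Ready":
            continue  # a PodNetworking only takes effect once it is Ready
        pod_sel = pn.get("podSelector")
        ns_sel = pn.get("namespaceSelector")
        if pod_sel is not None and labels_match(pod_sel, pod_labels):
            return pn["name"]
        if ns_sel is not None and labels_match(ns_sel, namespace_labels):
            return pn["name"]
    return SHARED_ENI  # no match: fall back to an IP from a shared ENI


# Hypothetical PodNetworking that selects namespaces by label.
POD_NETWORKINGS = [
    {
        "name": "example-podnetworking",
        "status": "Ready",
        "namespaceSelector": {"trunking": "zoneb"},
    }
]

print(pick_network({"app": "nginx"}, {"trunking": "zoneb"}, POD_NETWORKINGS))
print(pick_network({"app": "nginx"}, {}, POD_NETWORKINGS))
```

The first call selects `example-podnetworking`, so the pod would receive its own ENI; the second falls back to the shared ENI path.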

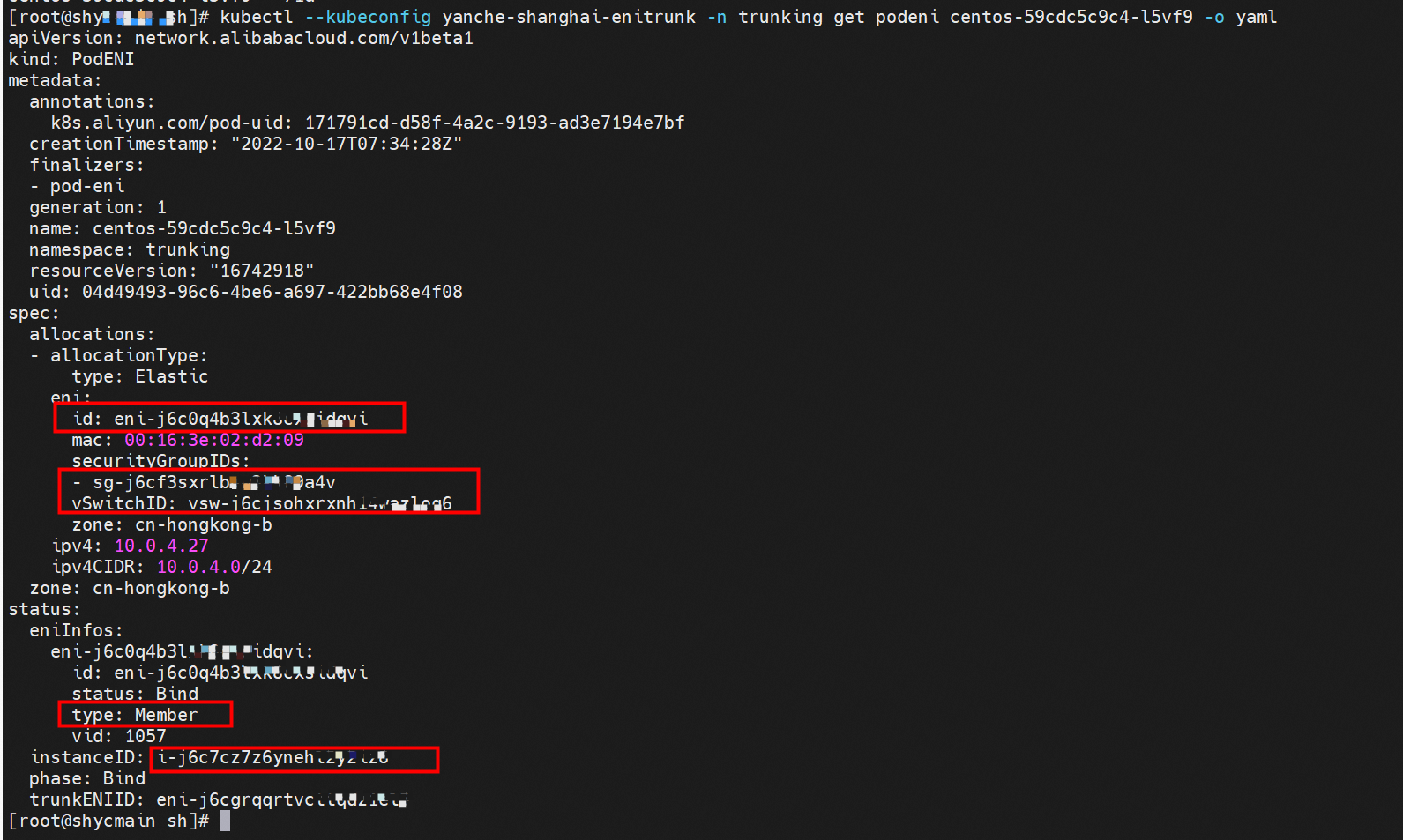

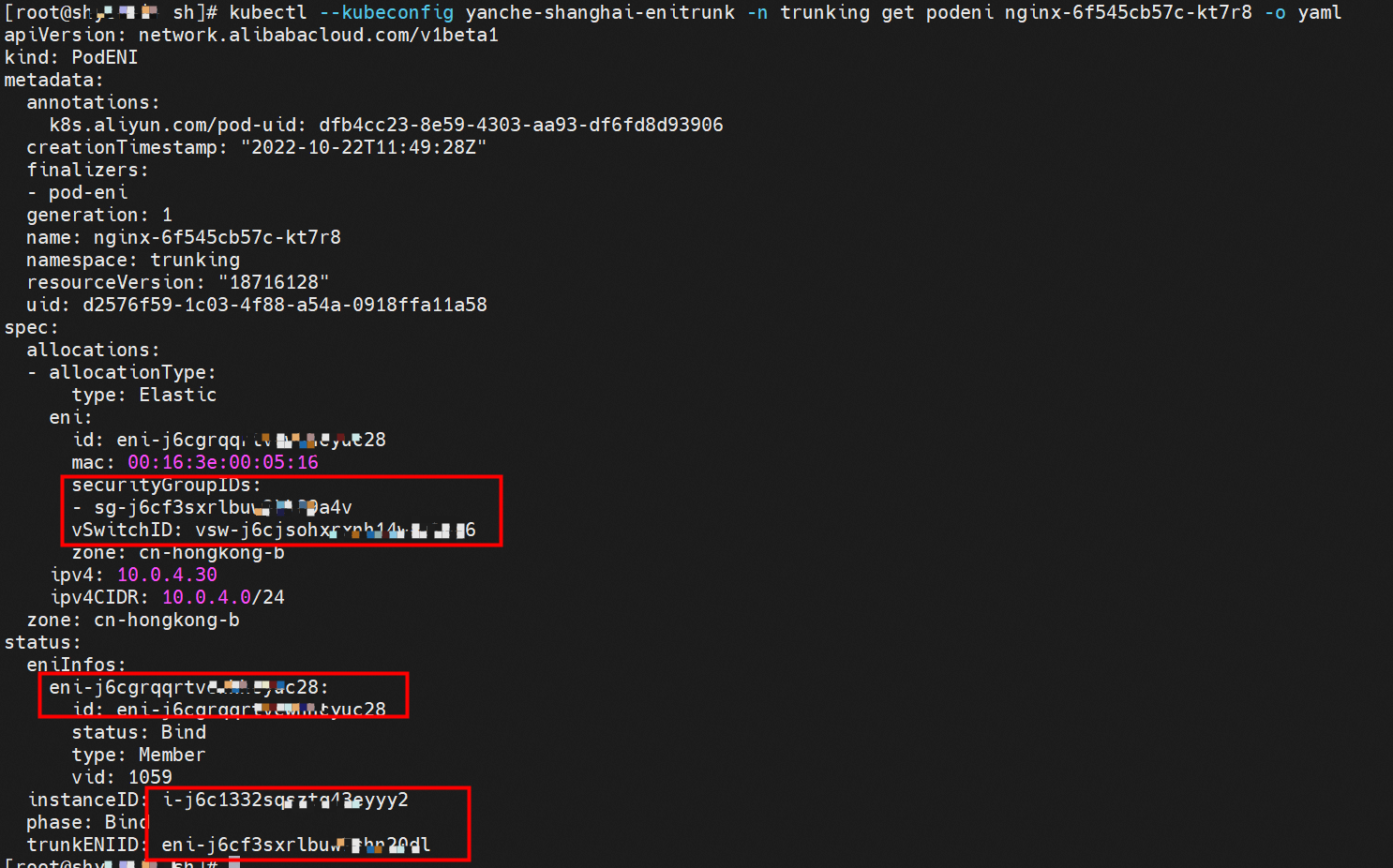

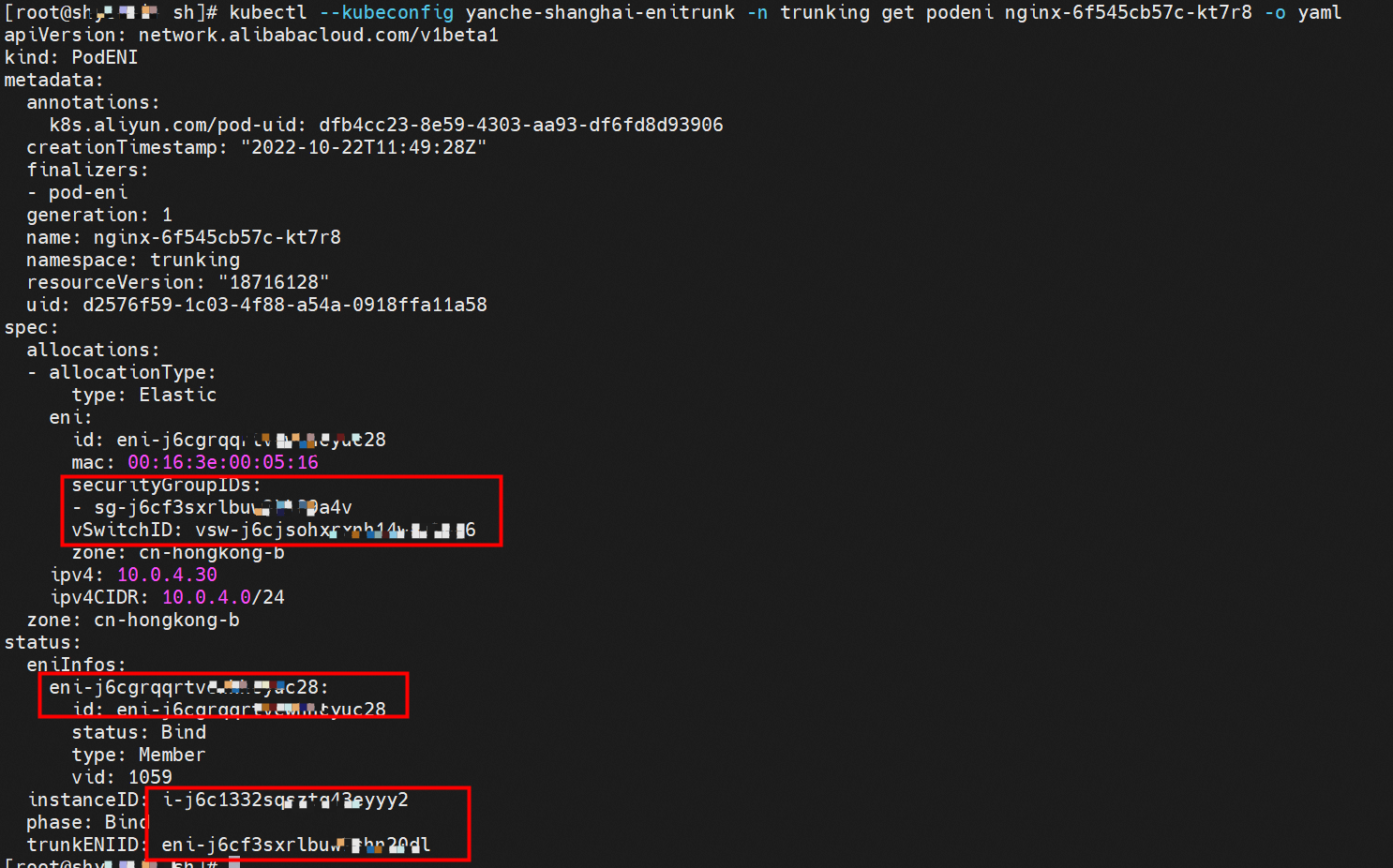

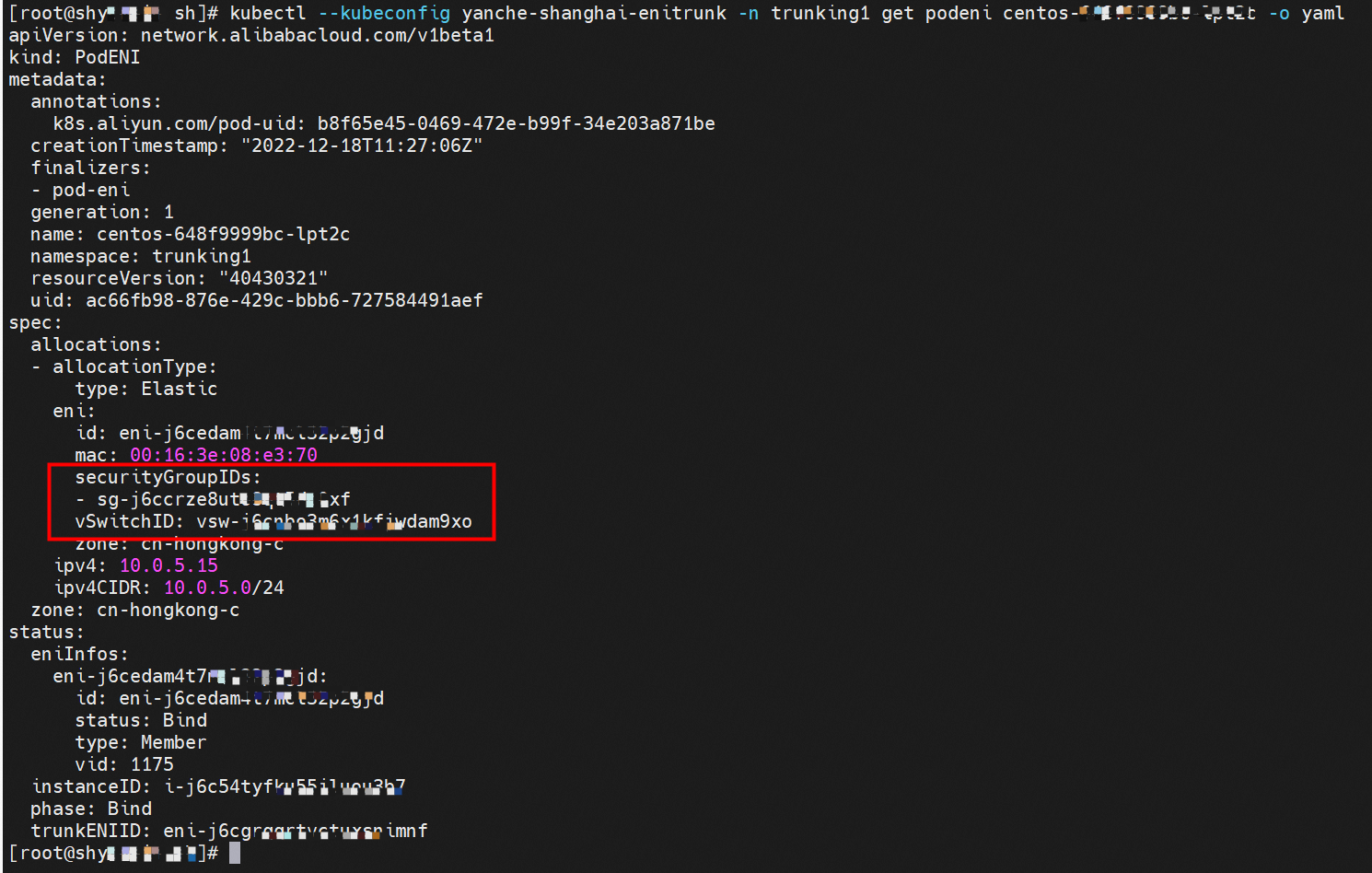

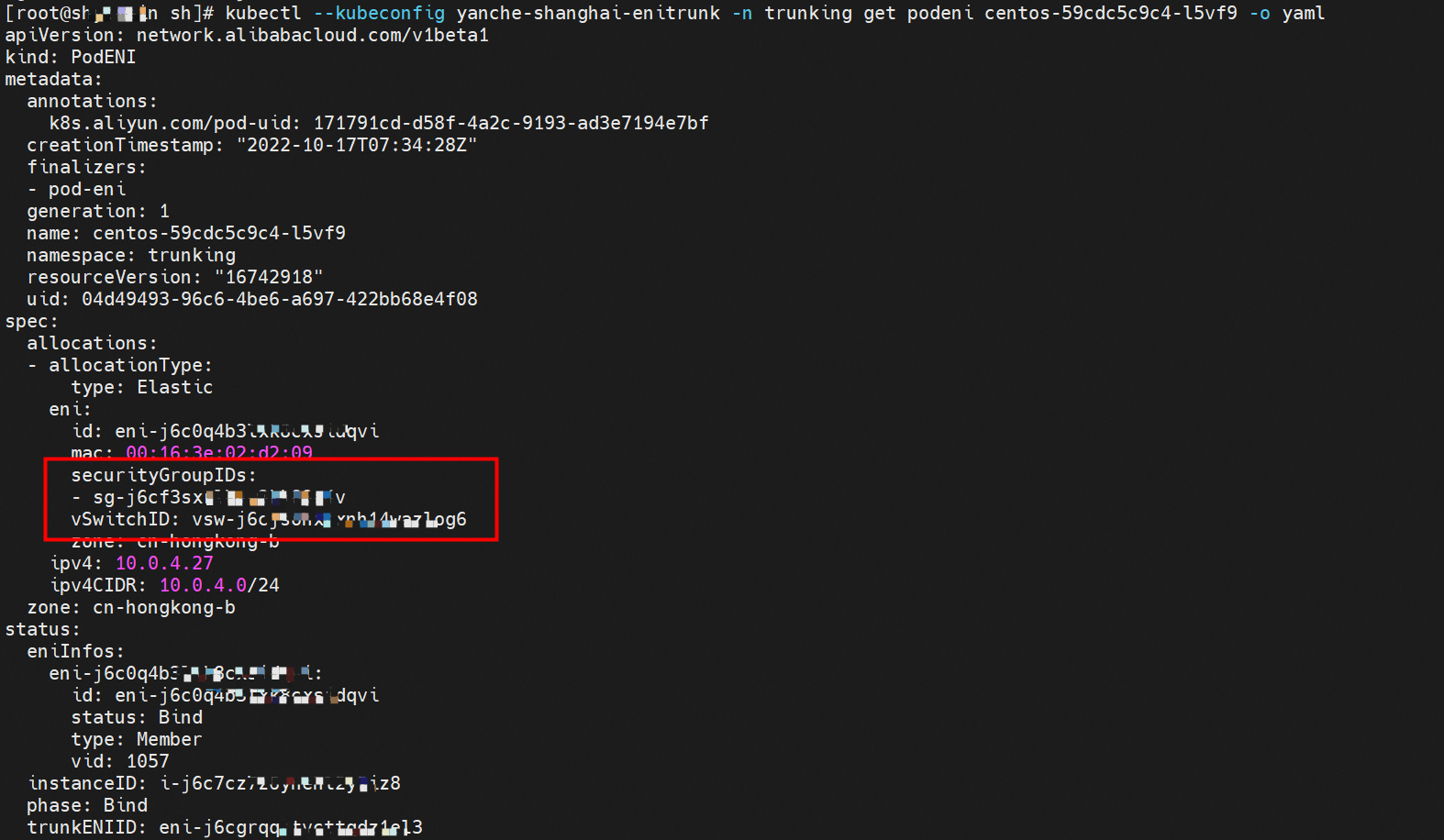

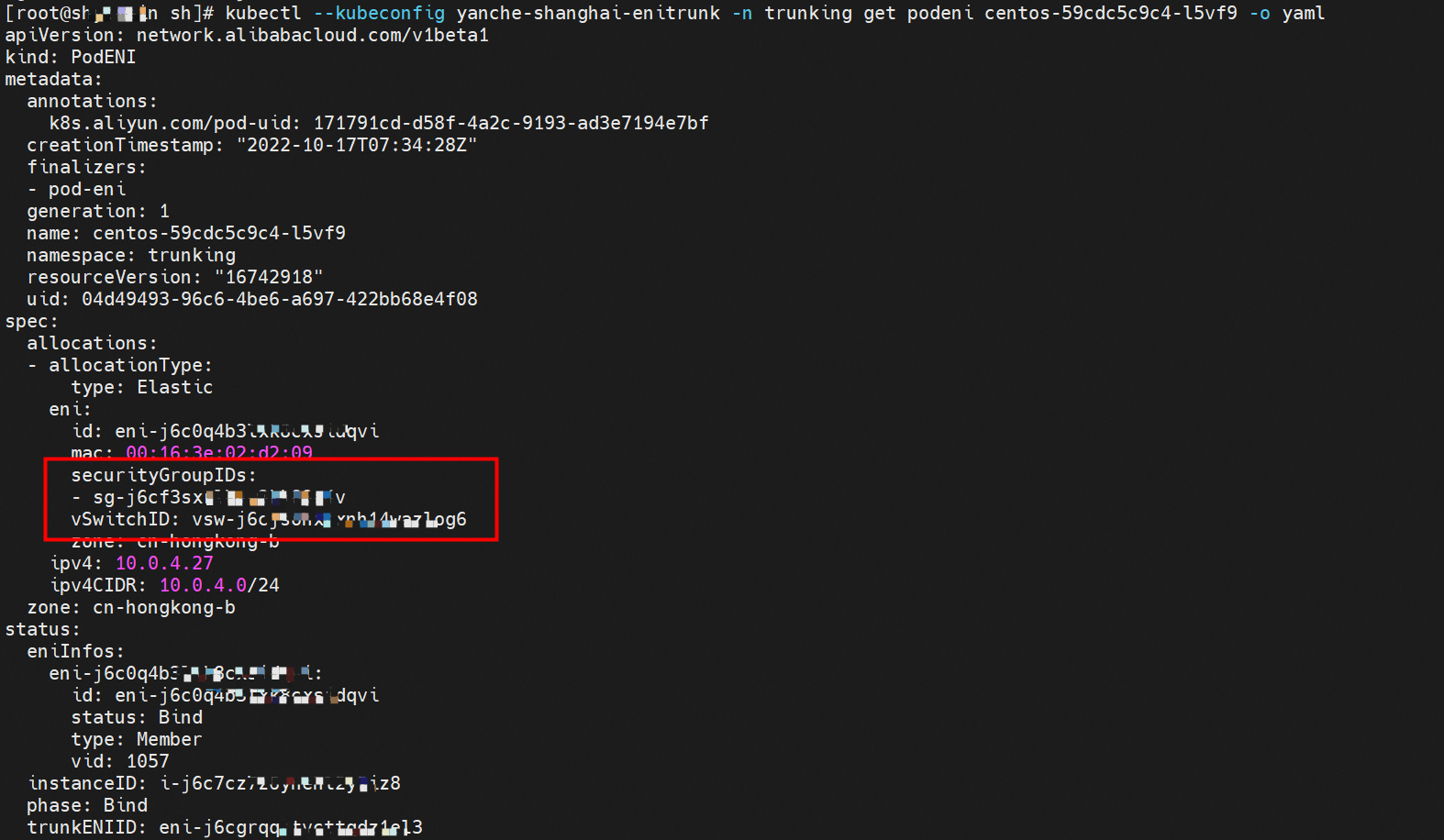

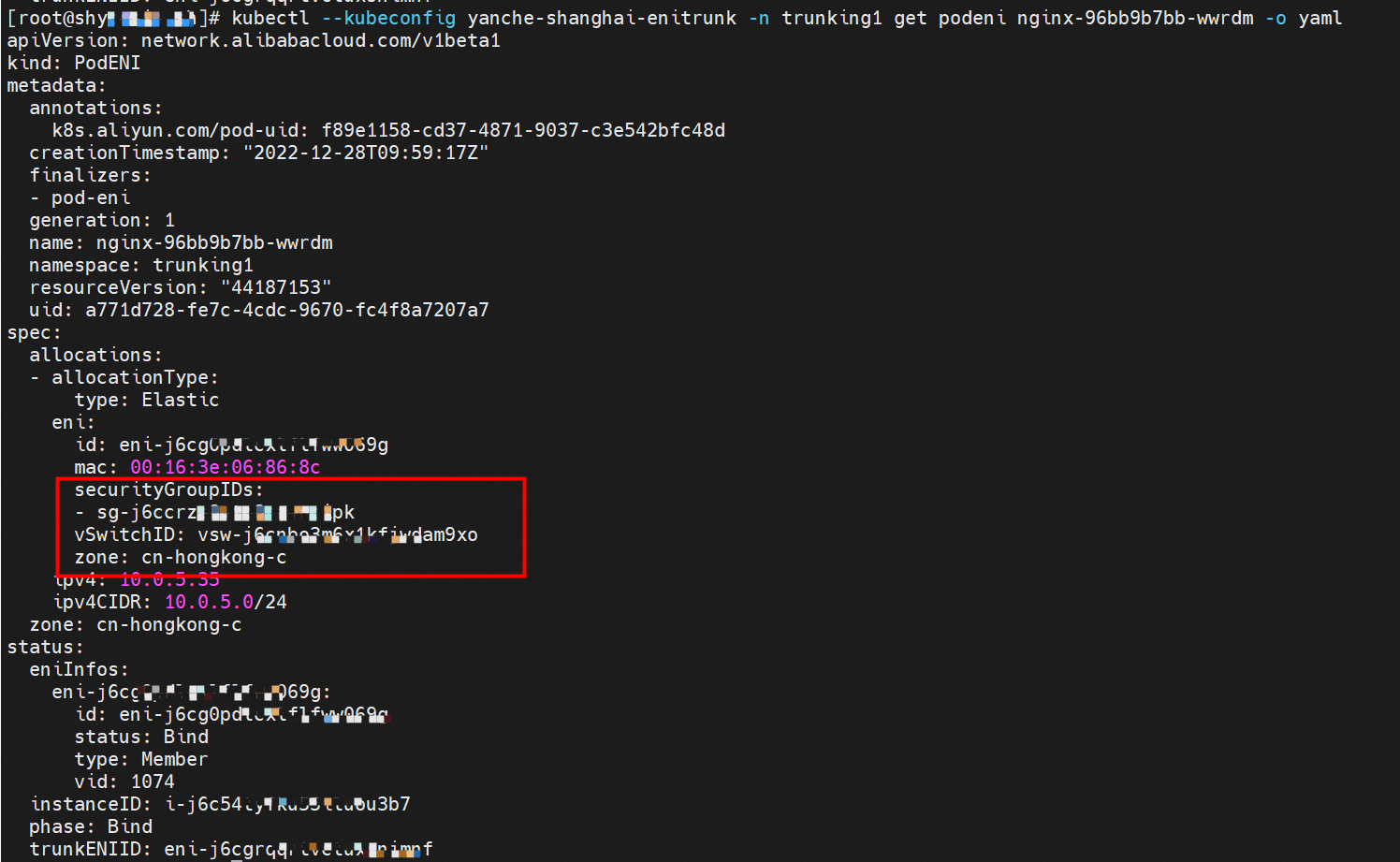

Terway automatically creates custom resources named PodENI for pods that match PodNetworkings to track the resource usage of those pods. PodENIs are managed by Terway and cannot be modified. The centos-59cdc5c9c4-l5vf9 pod in the trunking namespace shown below matches the PodNetworking settings and is assigned the corresponding member ENI, Trunking ENI, security group, switch, and bound ECS instance. This way, the switch and the security group can be configured and managed in the Pod dimension.

In the ECS console, we can clearly see the relationship between the member ENI and the Trunking ENI with the information on the corresponding security groups and switches.

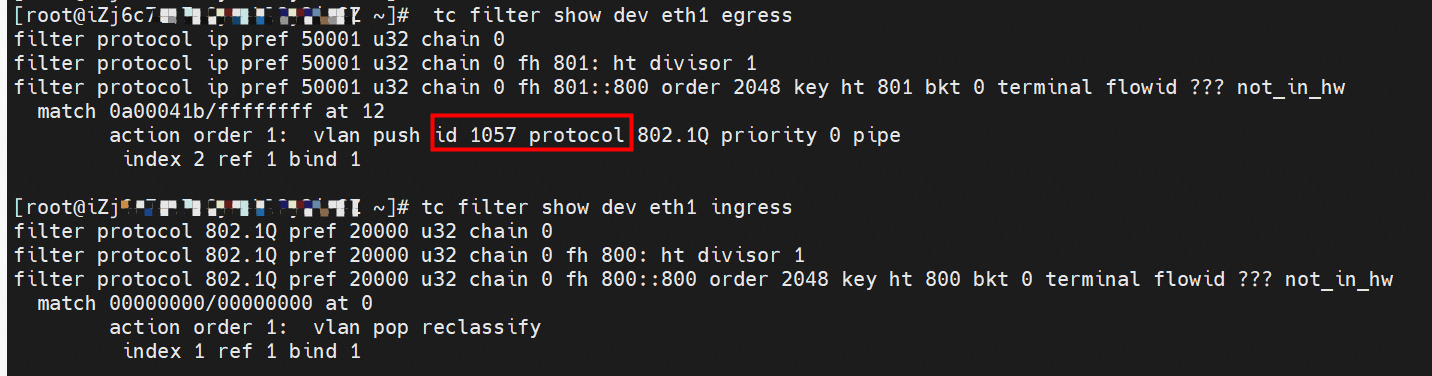

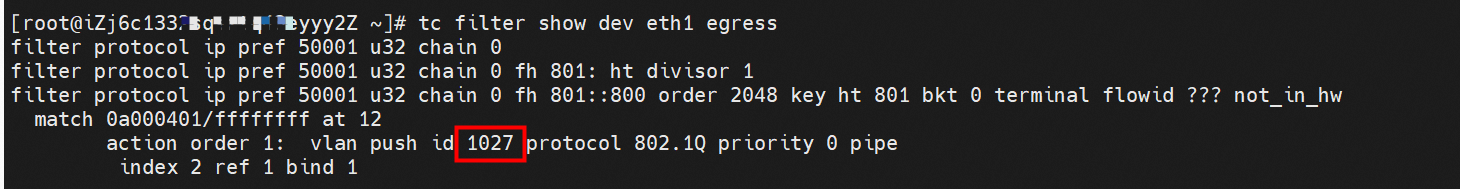

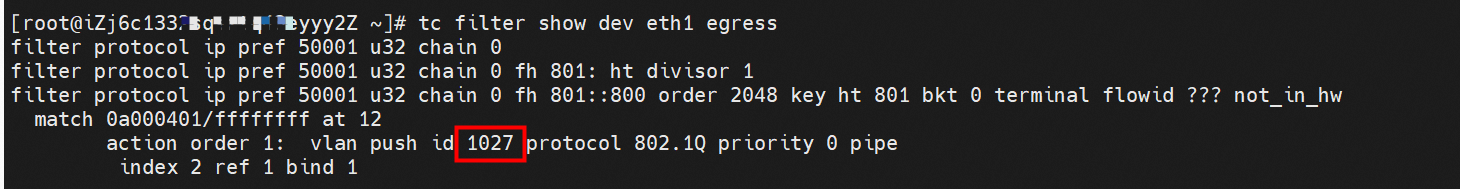

The configurations above show how switches and security groups are configured for each pod. These configurations take effect only if the traffic of each pod, after leaving the ECS instance through the Trunking ENI, automatically goes to the corresponding Member ENI. All of this is implemented on the host through the relevant policies. How does the Trunking ENI forward the traffic of a pod to the correct Member ENI? This is done through VLANs. The VLAN ID can be seen at the tc level, so the VLAN tag is added or removed at the egress or ingress stage.
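To make the tagging step concrete, the sketch below adds and removes an IEEE 802.1Q tag on a raw Ethernet frame. It illustrates the frame format only, under the assumption that the tag is pushed on the way out and popped on the way in; it is not the tc rule set that Terway installs, and the MAC addresses, payload, and VLAN ID 100 are made up.

```python
import struct
from typing import Optional, Tuple

TPID_8021Q = 0x8100  # EtherType value that marks an 802.1Q-tagged frame


def push_vlan(frame: bytes, vlan_id: int, priority: int = 0) -> bytes:
    """Insert a 4-byte 802.1Q tag after the destination and source MAC addresses."""
    if not 1 <= vlan_id <= 4094:
        raise ValueError("VLAN ID must be in the range 1..4094")
    tci = (priority << 13) | vlan_id  # 3-bit priority, 1-bit DEI (0), 12-bit VLAN ID
    return frame[:12] + struct.pack("!HH", TPID_8021Q, tci) + frame[12:]


def pop_vlan(frame: bytes) -> Tuple[Optional[int], bytes]:
    """Remove the 802.1Q tag if present and return (vlan_id, untagged_frame)."""
    if len(frame) < 16:
        return None, frame
    tpid, tci = struct.unpack("!HH", frame[12:16])
    if tpid != TPID_8021Q:
        return None, frame
    return tci & 0x0FFF, frame[:12] + frame[16:]


# Hypothetical frame: dst MAC, src MAC, EtherType IPv4, then a dummy payload.
frame = bytes.fromhex("00163e000001" "00163e000002" "0800") + b"payload"
tagged = push_vlan(frame, 100)
print(len(tagged) - len(frame))   # 4
print(pop_vlan(tagged)[0])        # 100
```

The 12-bit VLAN ID is the only piece of information the receiving side needs in order to tell the traffic of one pod from another on the same Trunking ENI.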

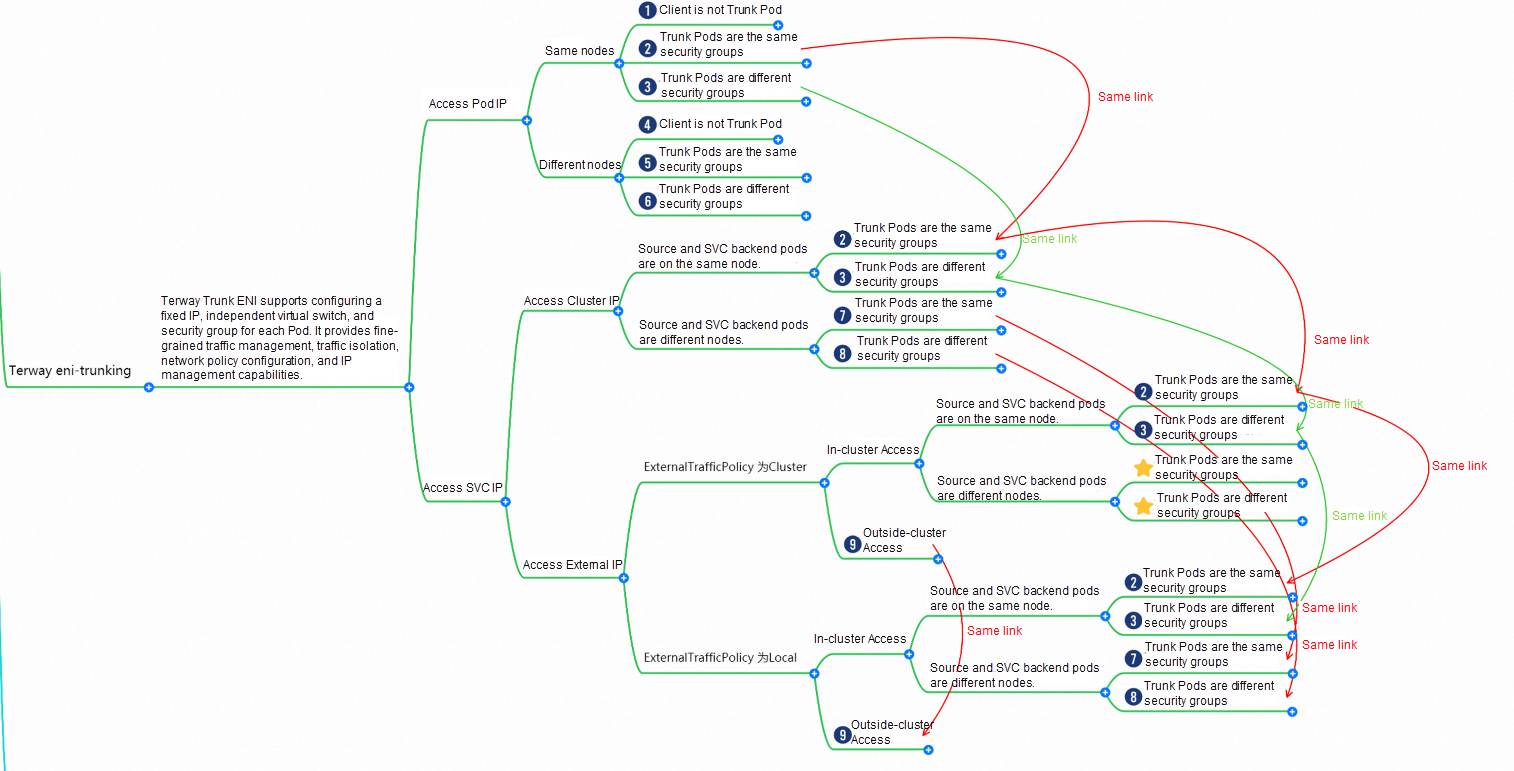

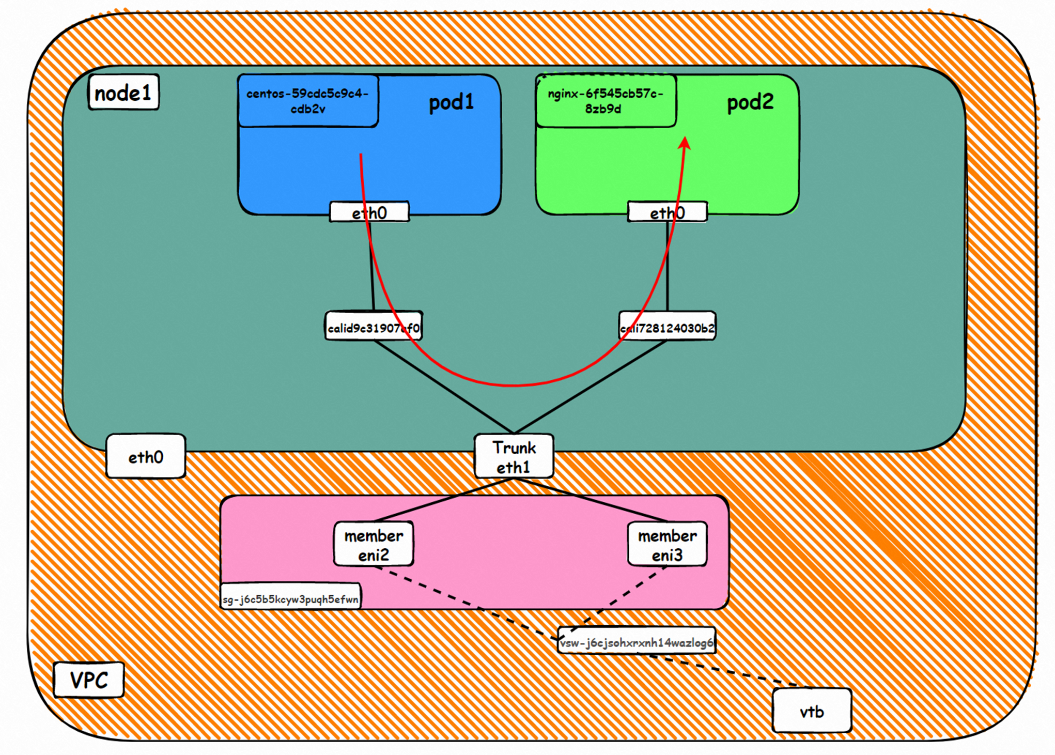

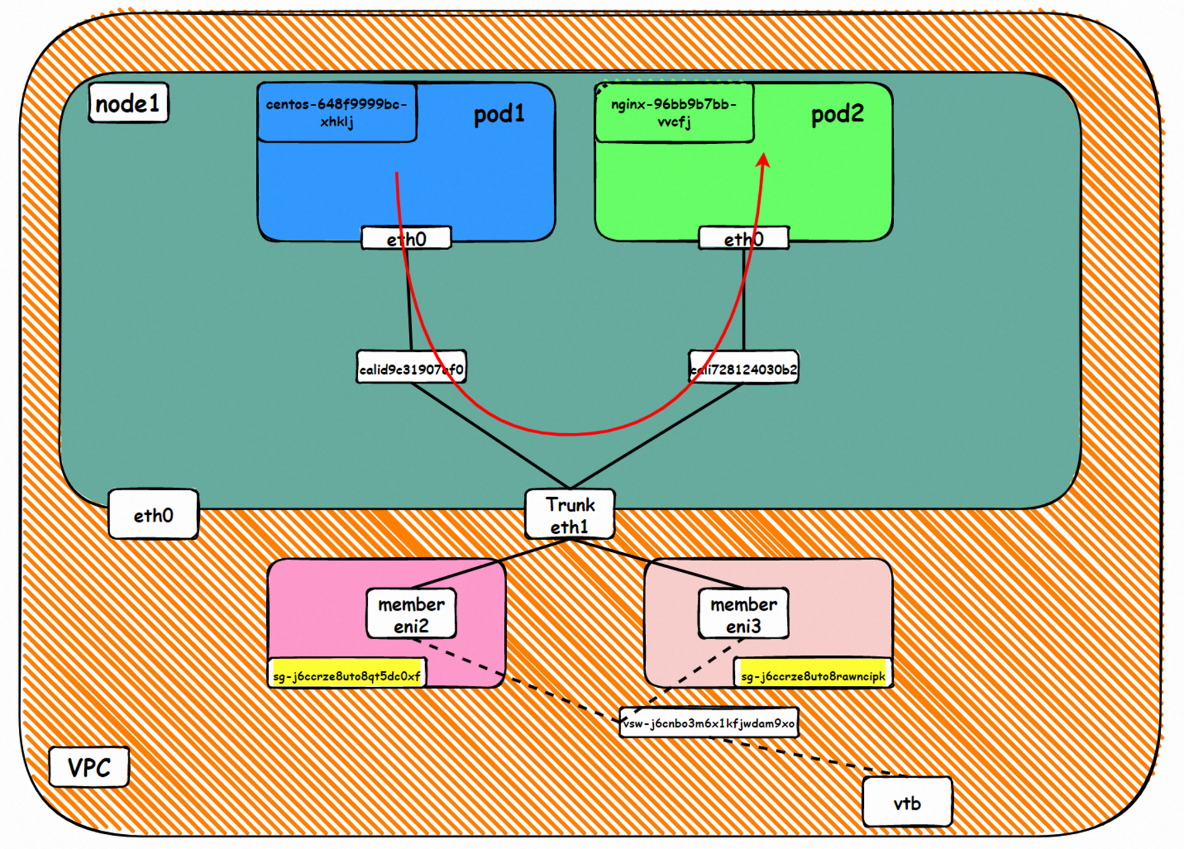

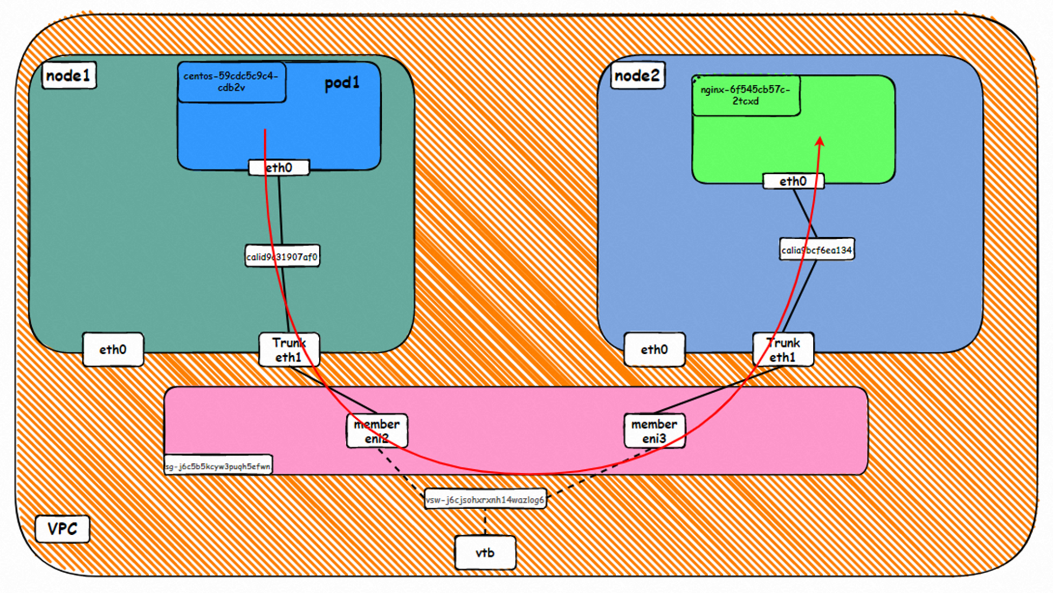

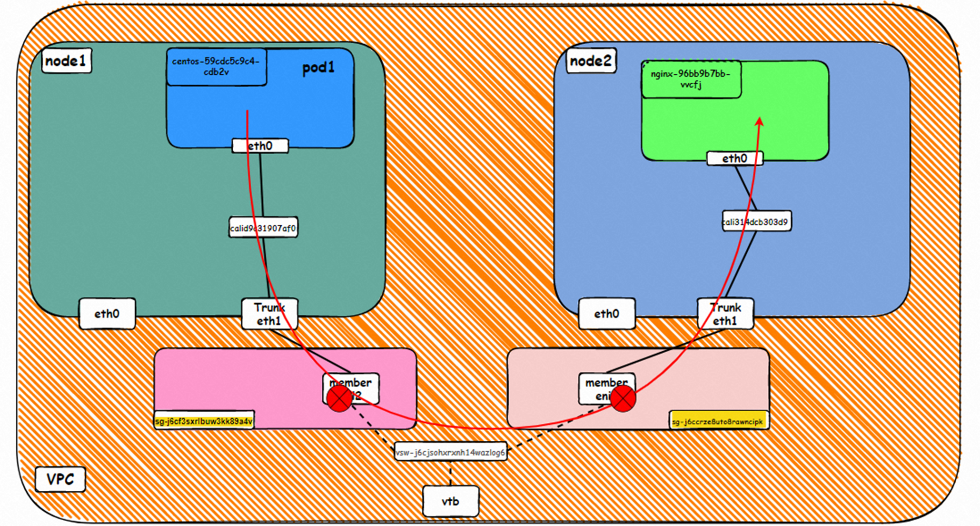

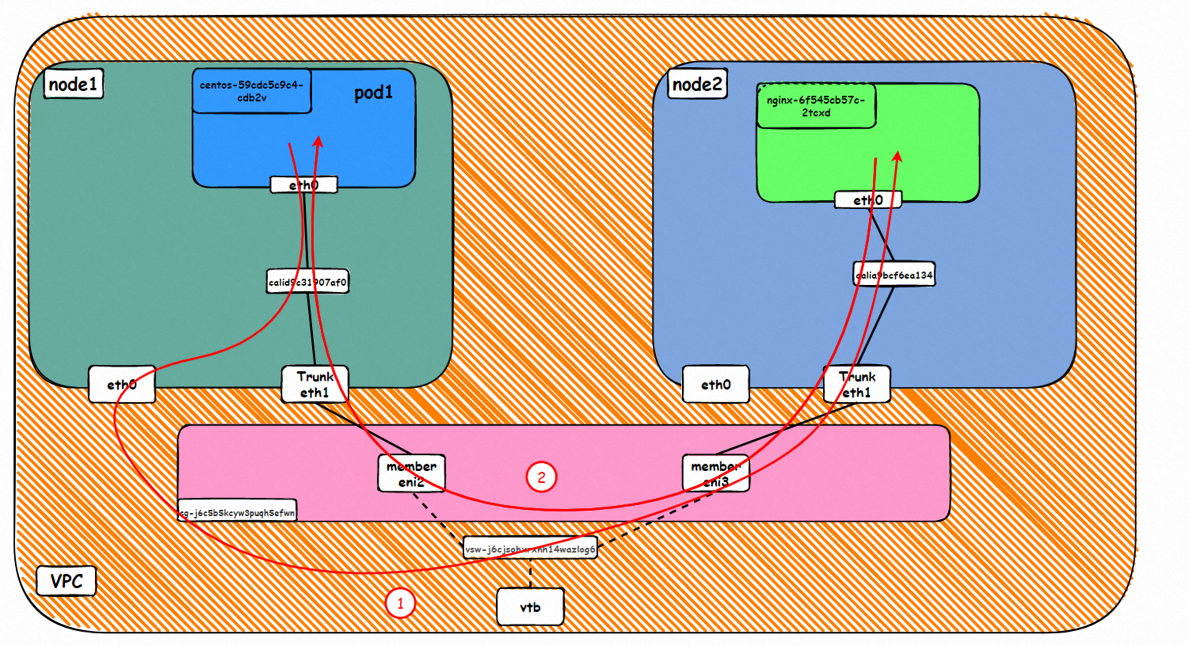

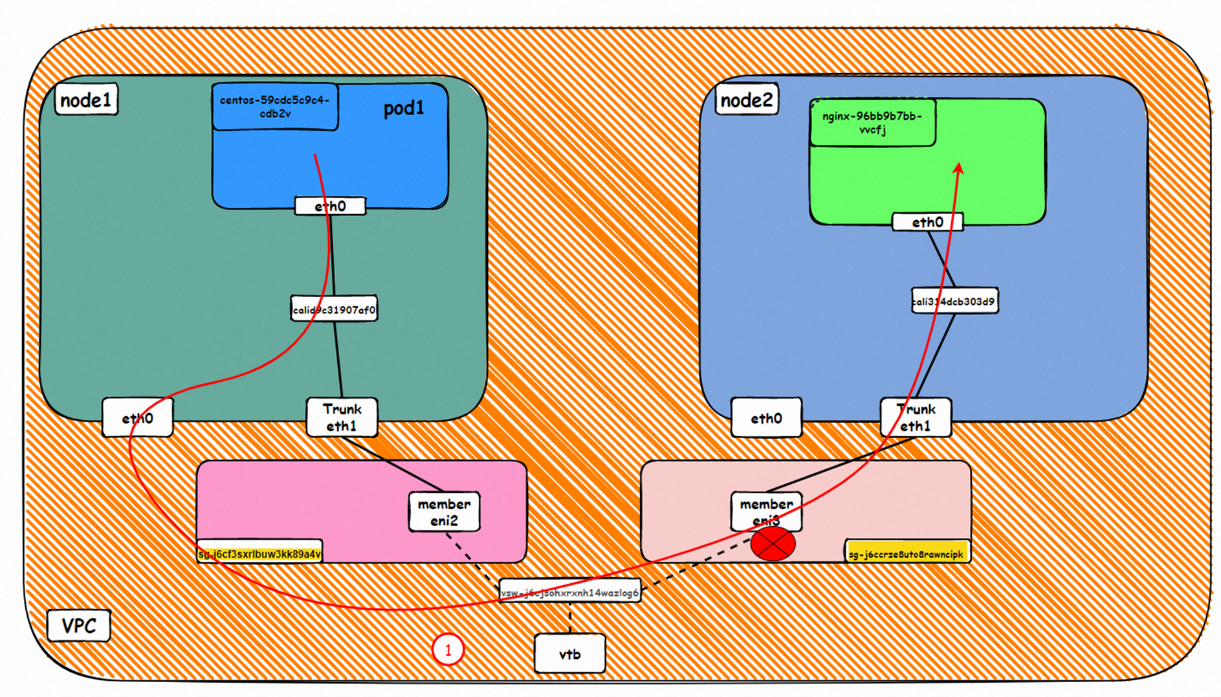

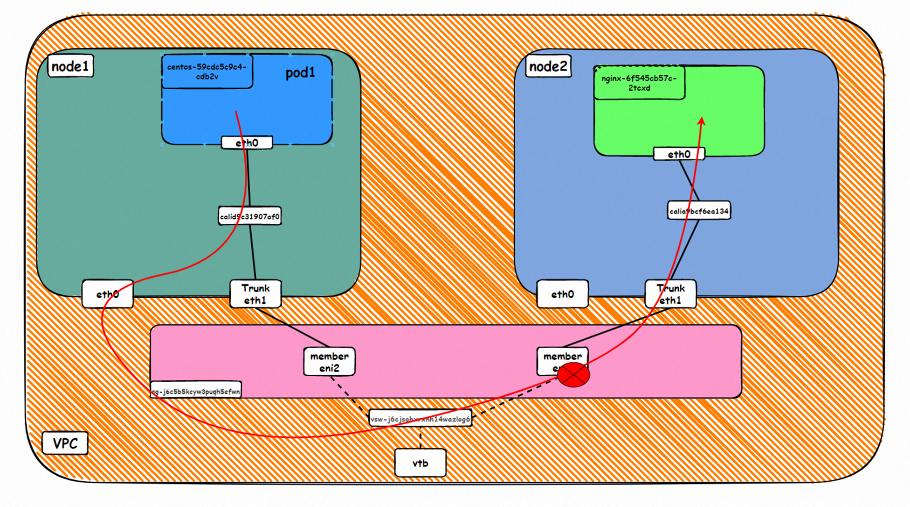

Therefore, the overall Terway ENI-Trunking model can be summarized as:

Since the security group and switch can be set in the Pod dimension, access over different links inevitably becomes more complicated at the macro level. We can roughly divide the network links in Terway ENI-Trunking mode into two large SOP scenarios (Pod IP and SVC), which can be further divided into ten smaller SOP scenarios.

The data links of these scenarios can be summarized into the following ten typical scenarios:

The pod nginx-6f545cb57c-kt7r8 (IP 10.0.4.30) runs on the cn-hongkong.10.0.4.22 node.

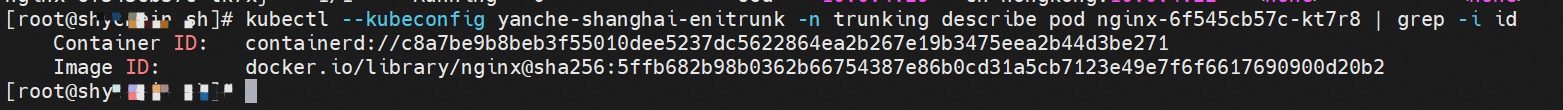

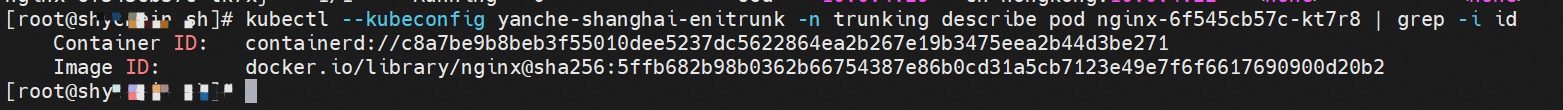

The IP address of nginx-6f545cb57c-kt7r8 is 10.0.4.30. The PID of the container on the host is 1734171, and the container network namespace has a default route pointing to container eth0.

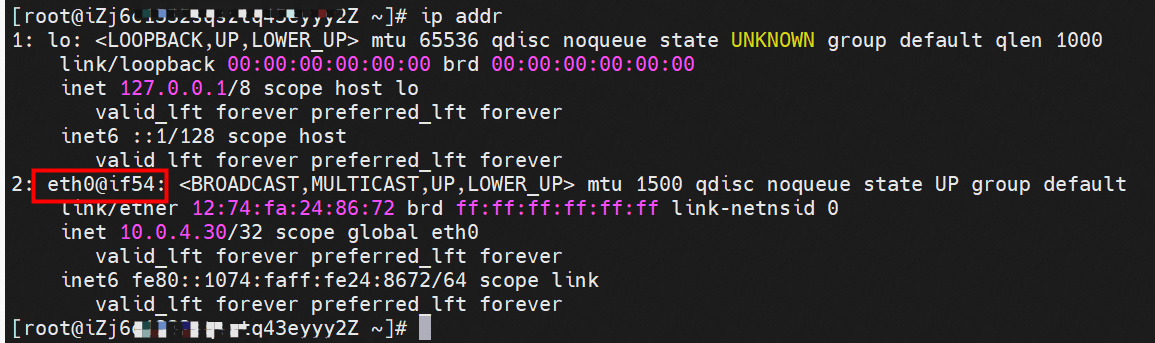

The container eth0 is a tunnel established in ECS through an ipvlan tunnel and the ECS's secondary ENI eth1. The secondary ENI eth1 also has a virtual calixxx network interface card.

In the ECS OS, there is a route that points to the IP address of the pod with calixxx as the next hop. As you can see from the preceding section, the calixxx network interface card forms a pair with veth1 in each pod. Therefore, traffic from the pod to the SVC CIDR is routed through veth1 instead of the default eth0 route. Therefore, the calixxx network interface card here works to:

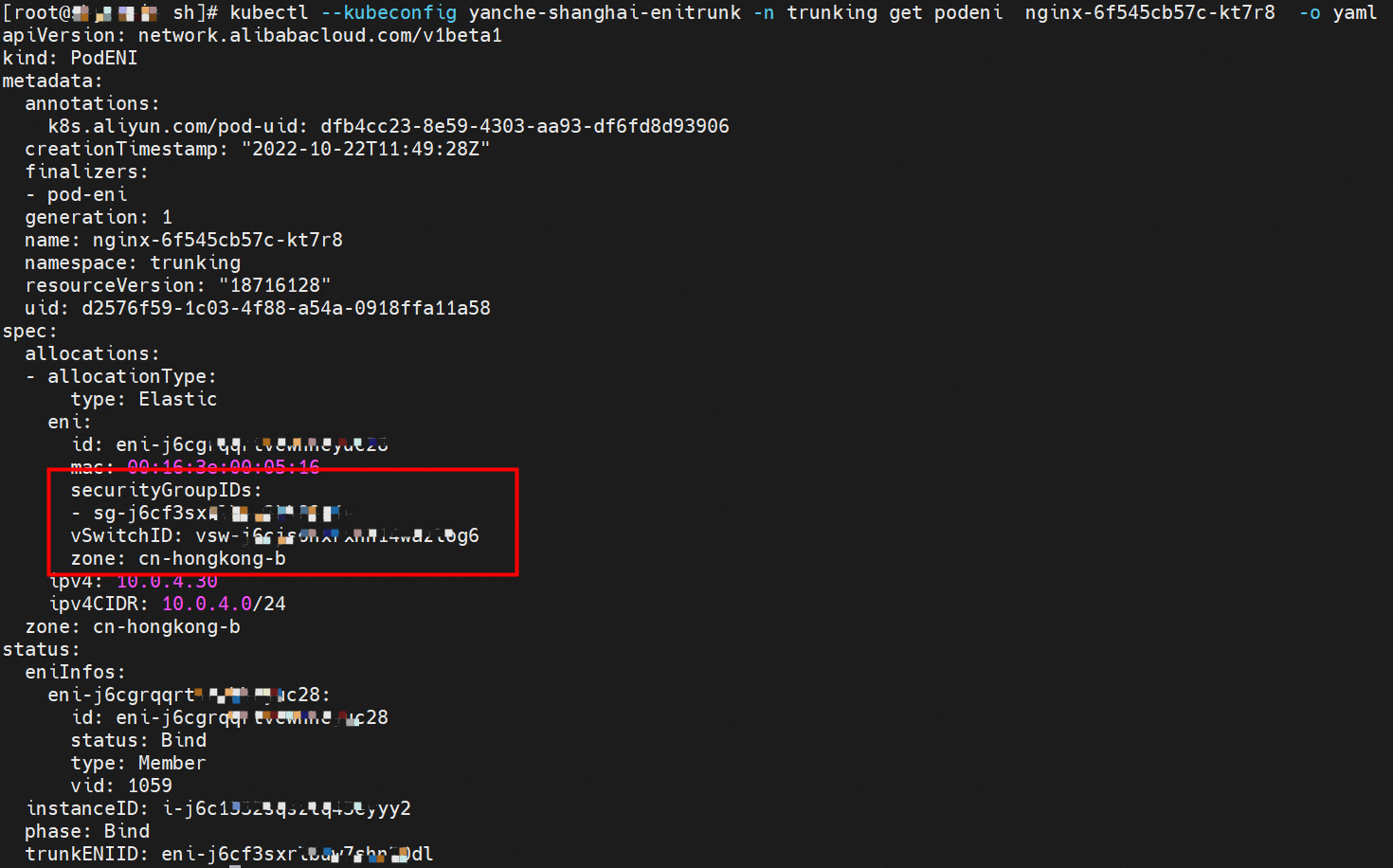

The nginx-6f545cb57c-kt7r8 pod in the trunking namespace matches the corresponding PodNetworking settings and is assigned the corresponding member ENI, Trunking ENI, security group, switch, and bound ECS instance. This realizes the configuration and management of the switch and security group in the Pod dimension.

VLAN ID 1027 can be seen at the tc level, so the VLAN tag is added to or removed from data traffic at the egress or ingress stage.
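Conceptually, the VLAN ID acts as a key that ties a pod's host-side interface to its Member ENI. The following sketch models that association as a small lookup table. The class, its method names, and the interface and ENI names are hypothetical; only the VLAN ID 1027 comes from the output above.

```python
from typing import Dict, Optional, Tuple


class TrunkTable:
    """Toy model of the per-node mapping between VLAN IDs, pods, and member ENIs."""

    def __init__(self) -> None:
        self._by_vlan: Dict[int, Tuple[str, str]] = {}
        self._by_iface: Dict[str, int] = {}

    def register(self, vlan_id: int, host_iface: str, member_eni: str) -> None:
        """Record that a pod's host-side interface uses this VLAN ID and member ENI."""
        self._by_vlan[vlan_id] = (host_iface, member_eni)
        self._by_iface[host_iface] = vlan_id

    def egress_vlan(self, host_iface: str) -> Optional[int]:
        """VLAN ID to tag on traffic that leaves through the Trunking ENI."""
        return self._by_iface.get(host_iface)

    def ingress_iface(self, vlan_id: int) -> Optional[str]:
        """Host-side interface that receives traffic arriving with this VLAN ID."""
        entry = self._by_vlan.get(vlan_id)
        return entry[0] if entry else None

    def member_eni(self, vlan_id: int) -> Optional[str]:
        """Member ENI associated with this VLAN ID on the VPC side."""
        entry = self._by_vlan.get(vlan_id)
        return entry[1] if entry else None


table = TrunkTable()
# Interface and ENI names are placeholders (assumption).
table.register(1027, "calixxx", "member-eni-of-nginx")

print(table.egress_vlan("calixxx"))   # 1027
print(table.member_eni(1027))         # member-eni-of-nginx
```

A frame tagged with an unknown VLAN ID has no entry in the table, which in this toy model simply returns `None`.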

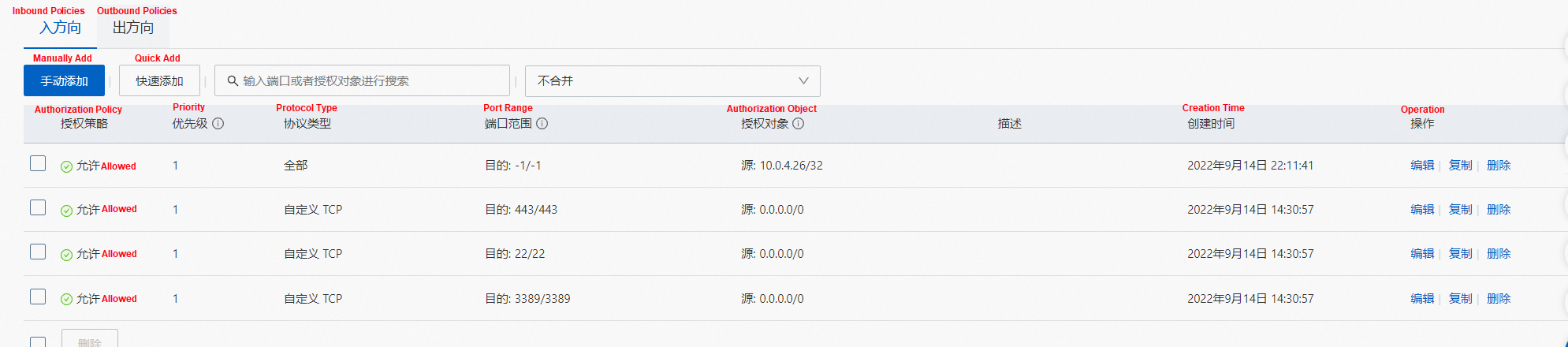

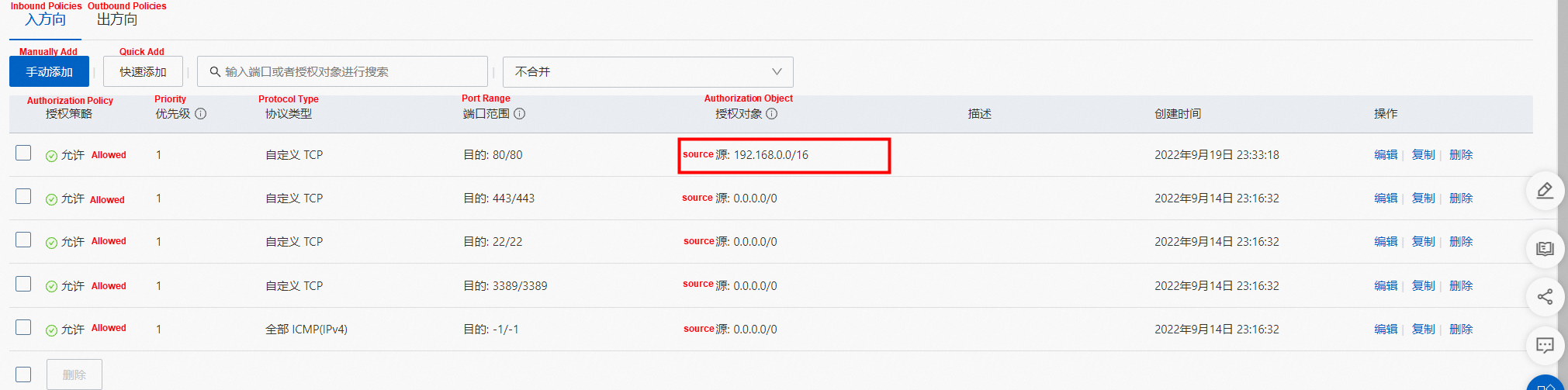

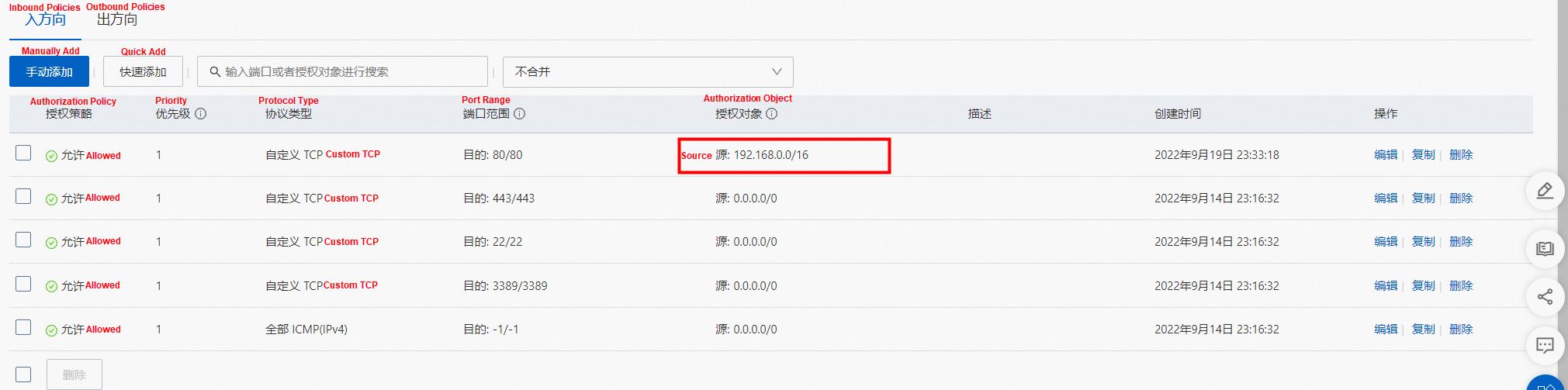

From the security group to which the network interface card of the ENI belongs, we can see that only the specified IP address is allowed to access port 80 of the nginx pod.

The data plane forwarding logic at the OS level is similar to what is described in Panoramic Analysis of Alibaba Cloud Container Network Data Link (3): Terway ENIIP. No more details are provided here.

Data Link Forwarding Diagram

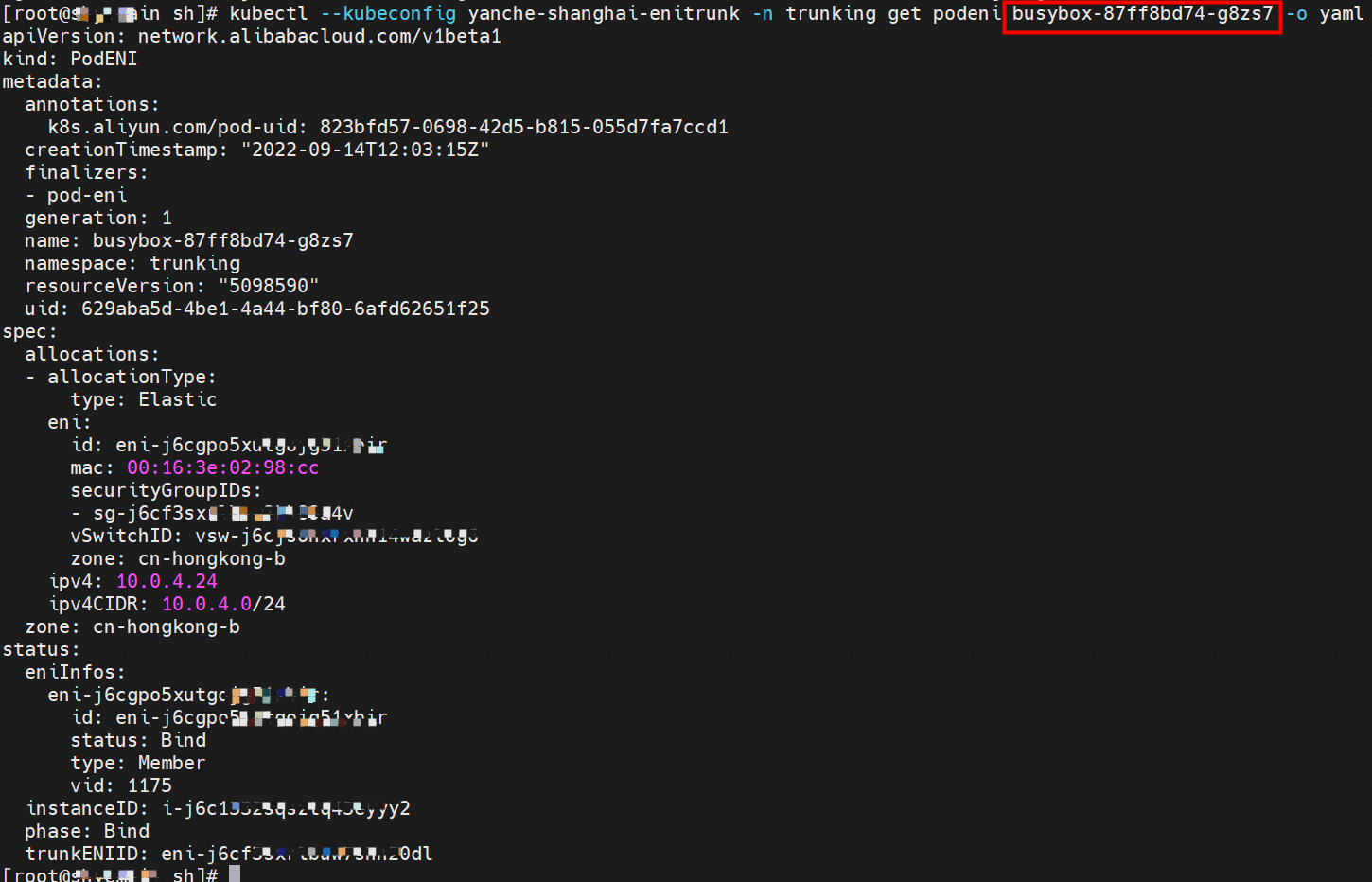

The pods nginx-6f545cb57c-kt7r8 (IP 10.0.4.30) and busybox-87ff8bd74-g8zs7 (IP 10.0.4.24) run on the cn-hongkong.10.0.4.22 node.

The IP address of nginx-6f545cb57c-kt7r8 is 10.0.4.30. The PID of the container on the host is 1734171, and the container network namespace has a default route pointing to container eth0.

The container eth0 is a tunnel established in ECS through an ipvlan tunnel and the ECS's secondary ENI eth1. The secondary ENI eth1 also has a virtual calixxx network interface card.

In the ECS OS, there is a route that points to the IP address of the pod with calixxx as the next hop. As you can see from the preceding section, the calixxx network interface card forms a pair with veth1 in each pod. Therefore, traffic from the pod to the SVC CIDR is routed through veth1 instead of the default eth0 route. Therefore, the calixxx network interface card here works to:

The busybox-87ff8bd74-g8zs7 and nginx-6f545cb57c-kt7r8 pods in the trunking namespace match the corresponding PodNetworking settings and are assigned the corresponding member ENI, Trunking ENI, security group, switch, and bound ECS instance. This realizes the configuration and management of the switch and security group in the Pod dimension.

VLAN ID 1027 can be seen at the tc level, so the VLAN tag is added to or removed from data traffic at the egress or ingress stage.

From the security group to which the network interface card of the ENI belongs, we can see that only the specified IP address is allowed to access port 80 of the nginx pod.

The data plane forwarding logic at the OS level is similar to what is described in Panoramic Analysis of Alibaba Cloud Container Network Data Link (3): Terway ENIIP.

Data Link Forwarding Diagram

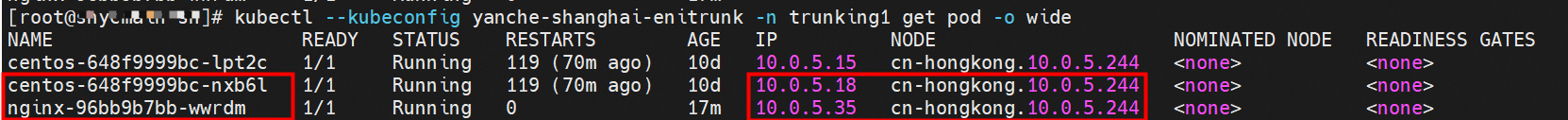

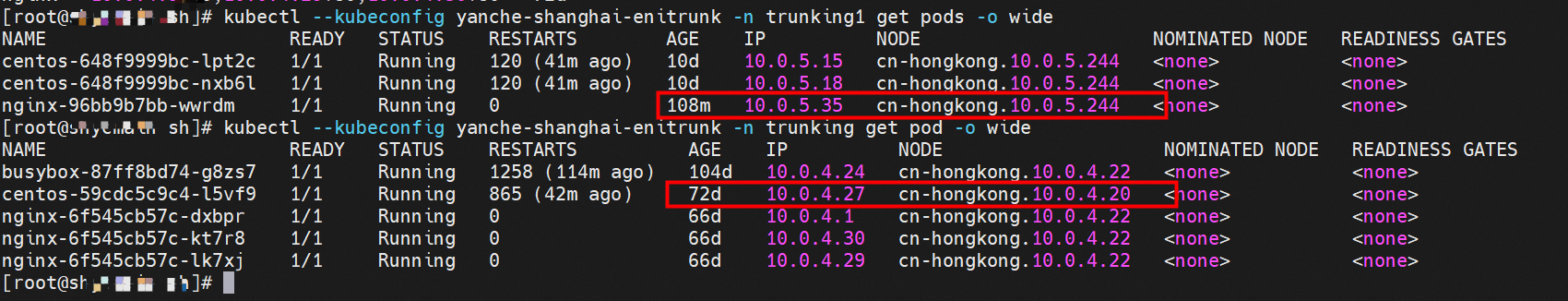

The pods nginx-96bb9b7bb-wwrdm (IP 10.0.5.35) and centos-648f9999bc-nxb6l (IP 10.0.5.18) run on the cn-hongkong.10.0.4.244 node.

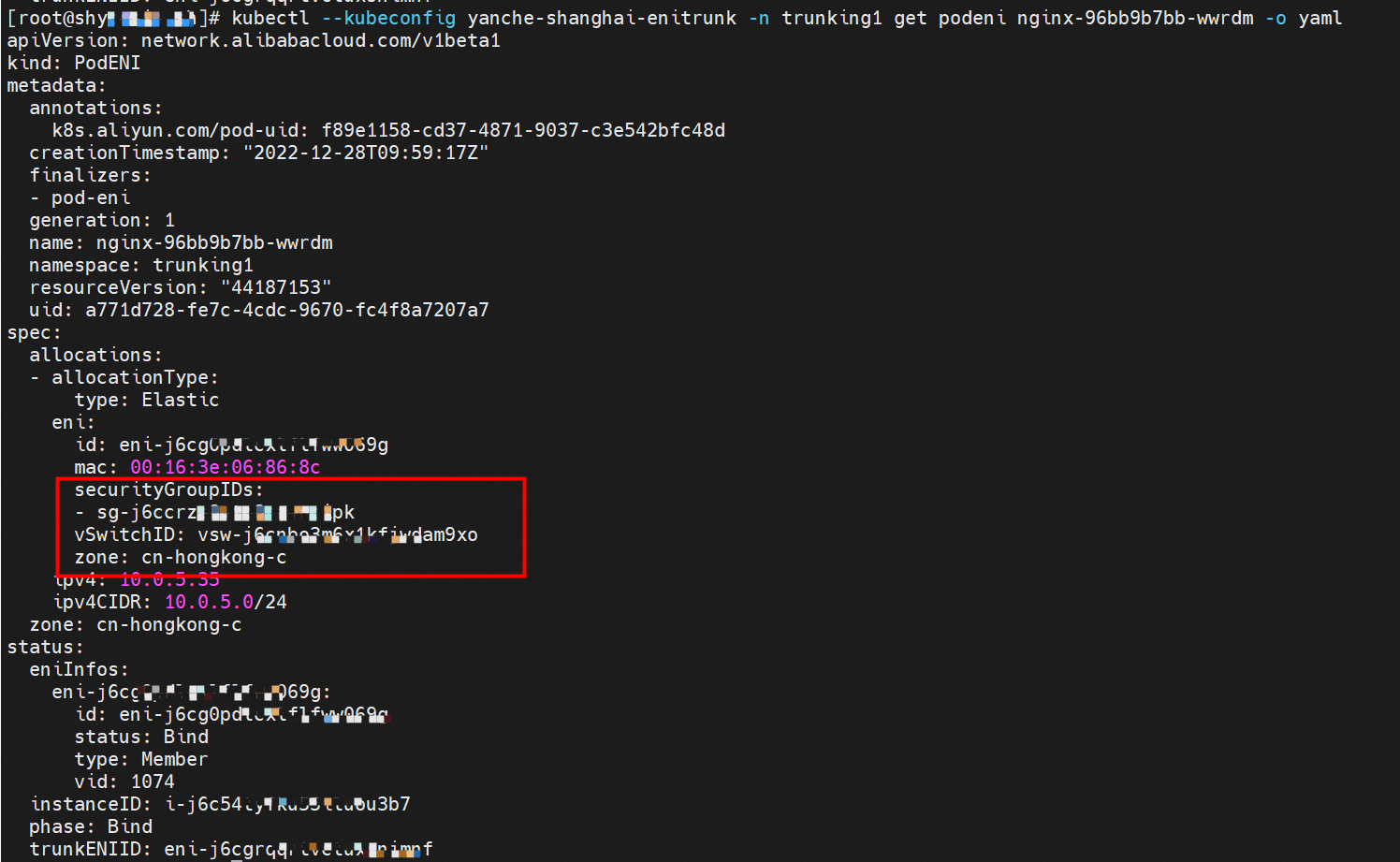

The container network namespace and routing of the related pods are not described here. Please see the preceding two sections for more information. You can use the PodENI resource to view the ENI, security group (sg), and switch (vsw) of the centos-648f9999bc-nxb6l pod.

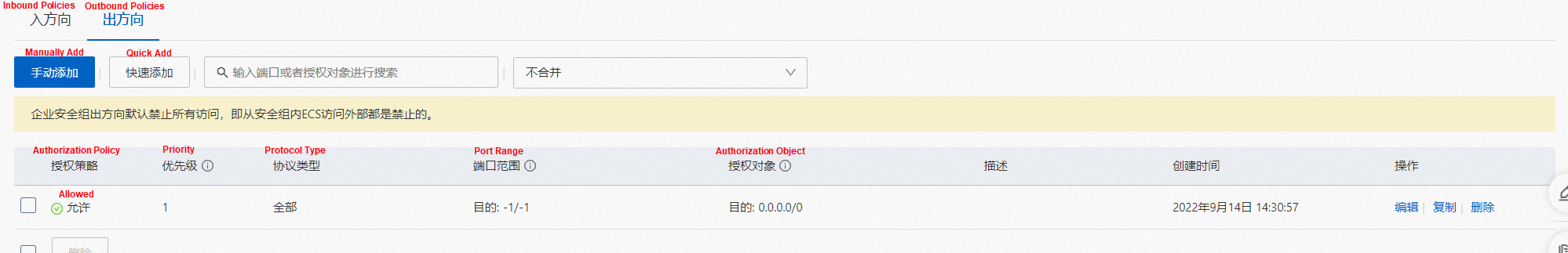

The security group sg-j6ccrxxxx shows that the CentOS pod can access all external addresses.

Similarly, the security group sg-j6ccrze8utxxxxx of the server pod nginx-96bb9b7bb-wwrdm only allows access from 192.168.0.0/16.
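The effect of such a rule on this scenario can be checked with a simple CIDR membership test. The sketch below considers only the single source-range rule mentioned above and ignores ports, protocols, priorities, and any other rules the security group may contain, so it is an approximation rather than a model of how security groups are evaluated.

```python
import ipaddress
from typing import Iterable


def source_allowed(source_ip: str, allowed_cidrs: Iterable[str]) -> bool:
    """True if the source IP falls inside at least one allowed CIDR block."""
    addr = ipaddress.ip_address(source_ip)
    return any(addr in ipaddress.ip_network(cidr) for cidr in allowed_cidrs)


SERVER_ALLOWED = ["192.168.0.0/16"]  # inbound source range of the nginx pod's security group

# The CentOS client pod on the same node has IP 10.0.5.18.
print(source_allowed("10.0.5.18", SERVER_ALLOWED))  # False
```

Under this single rule, traffic whose source address is the client pod IP 10.0.5.18 falls outside 192.168.0.0/16, which is why the access result depends on the security group configuration even though both pods are on the same node.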

Data Link Forwarding Diagram

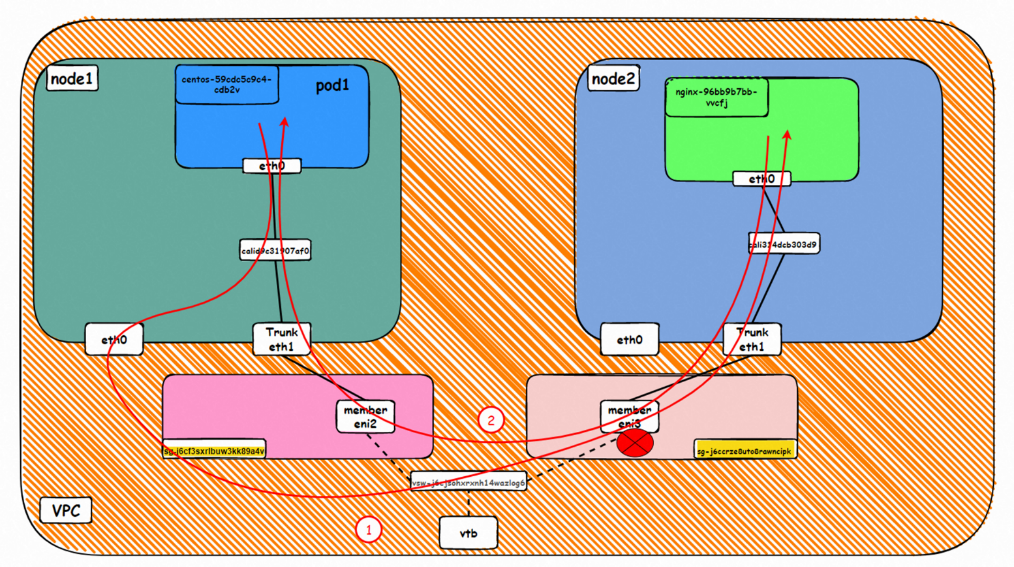

The client pod centos-59cdc5c9c4-l5vf9 (IP 10.0.4.27) runs on the cn-hongkong.10.0.4.20 node.

The server pod nginx-6f545cb57c-kt7r8 (IP 10.0.4.30) runs on the cn-hongkong.10.0.4.22 node.

The container network namespace and routing of the related pods are not described here. Please see the preceding two sections for more information.

You can use the PodENI resource to view the ENI, security group (sg), and switch (vsw) of the centos-59cdc5c9c4-l5vf9 pod.

The security group sg-j6cf3sxrlbuwxxxxx shows that the CentOS and nginx pods belong to the same security group, sg-j6cf3sxrlbuxxxxx.

Access depends on the security group configuration

Data Link Forwarding Diagram

The client pod centos-59cdc5c9c4-l5vf9 (IP 10.0.4.27) runs on the cn-hongkong.10.0.4.20 node.

The server pod nginx-96bb9b7bb-wwrdm (IP 10.0.5.35) runs on the cn-hongkong.10.0.4.244 node.

The container network namespace and routing of the related pods are not described here. Please see the preceding two sections for more information.

You can use the PodENI resource to view the ENI, security group (sg), and switch (vsw) of the centos-59cdc5c9c4-l5vf9 pod.

The security group sg-j6cf3sxrlbuwxxxxx shows that the CentOS pod can access all external addresses.

Similarly, the security group sg-j6ccrze8utxxxxx of the server pod nginx-96bb9b7bb-wwrdm only allows access from 192.168.0.0/16.

Access depends on the security group configuration

Data Link Forwarding Diagram

The client pod centos-59cdc5c9c4-l5vf9 (IP 10.0.4.27) runs on the cn-hongkong.10.0.4.20 node.

The server pod nginx-6f545cb57c-kt7r8 (IP 10.0.4.30) runs on the cn-hongkong.10.0.4.22 node.

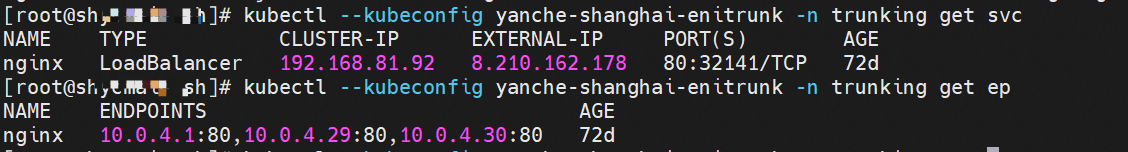

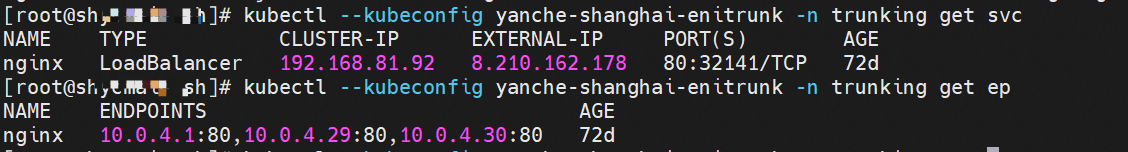

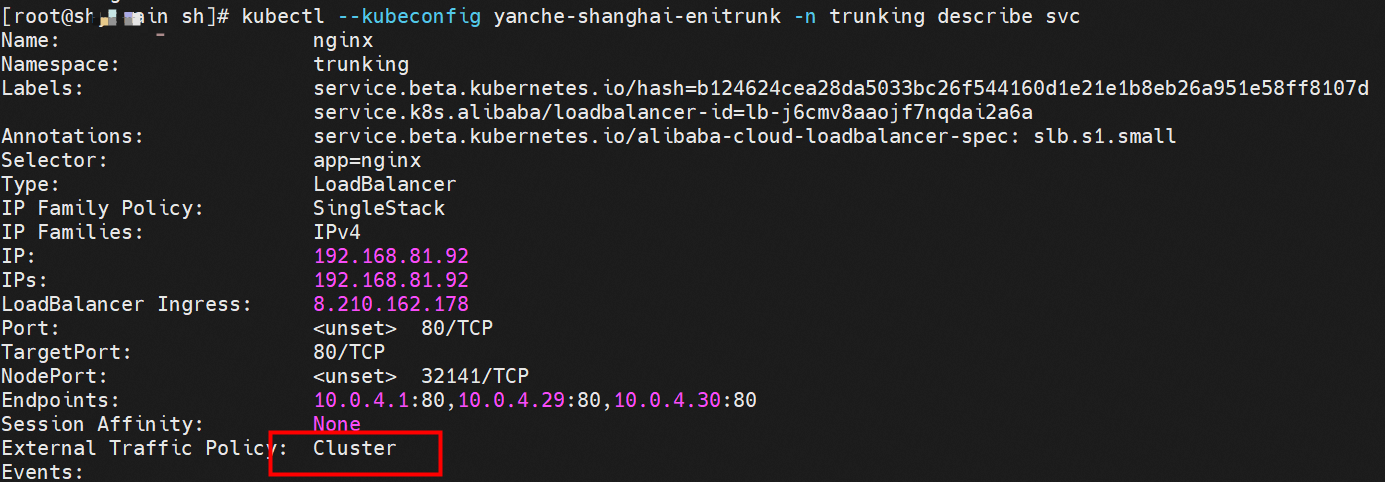

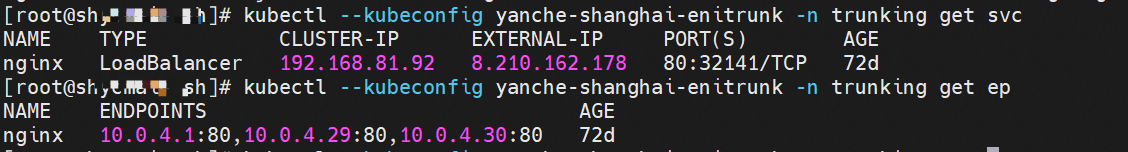

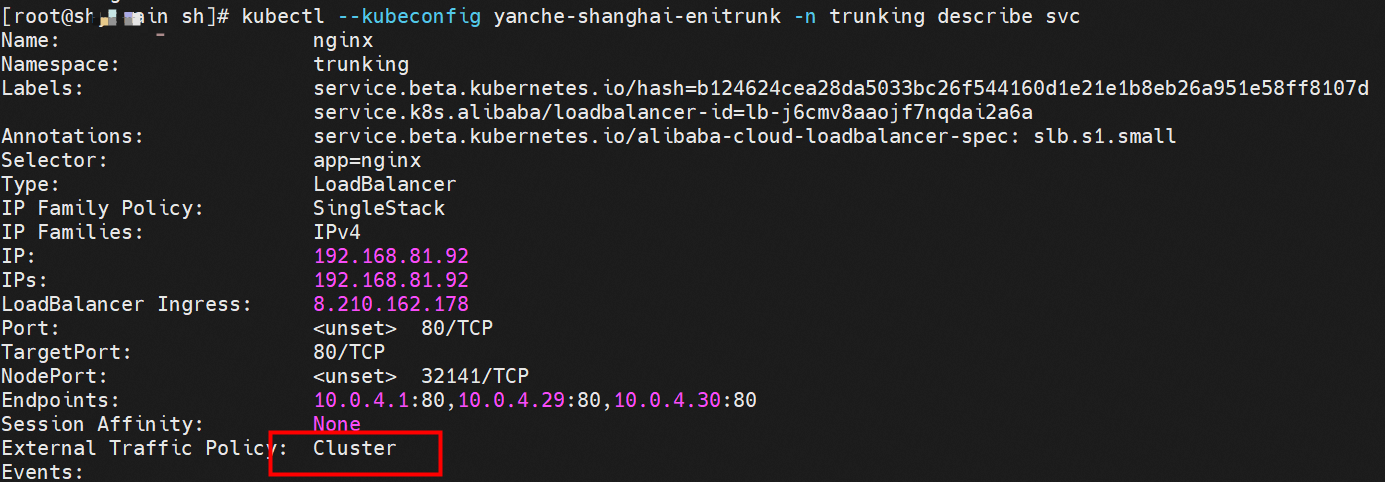

The ClusterIP address of the nginx SVC is 192.168.81.92, and its external IP address is 8.210.162.178.

Compared with ENIIP, ENI-Trunking only adds the corresponding Trunk and Member ENIs on the VPC side. The data link inside the OS is the same.
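For readers who have not seen the ENIIP part, the OS-level handling of a ClusterIP can be pictured as destination address translation on the client node. The sketch below assumes the usual kube-proxy-style behavior of rewriting the ClusterIP to a backend pod address; the service port 80 and the single-backend endpoint list are assumptions for illustration.

```python
import random
from typing import Dict, List, Tuple

Endpoint = Tuple[str, int]

# Hypothetical service table: ClusterIP and port -> backend pod endpoints.
# The ClusterIP and pod IP come from this scenario; port 80 is an assumption.
SERVICES: Dict[Endpoint, List[Endpoint]] = {
    ("192.168.81.92", 80): [("10.0.4.30", 80)],
}


def dnat(dst_ip: str, dst_port: int) -> Endpoint:
    """Rewrite a ClusterIP destination to one backend; pass other traffic through."""
    backends = SERVICES.get((dst_ip, dst_port))
    if not backends:
        return dst_ip, dst_port  # not a known service address: leave unchanged
    return random.choice(backends)


print(dnat("192.168.81.92", 80))  # ('10.0.4.30', 80)
```

After translation, the packet is an ordinary pod-to-pod packet, so from that point on it follows the same Trunk and Member ENI path as direct Pod IP access.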

Access depends on the security group configuration

Data Link Forwarding Diagram

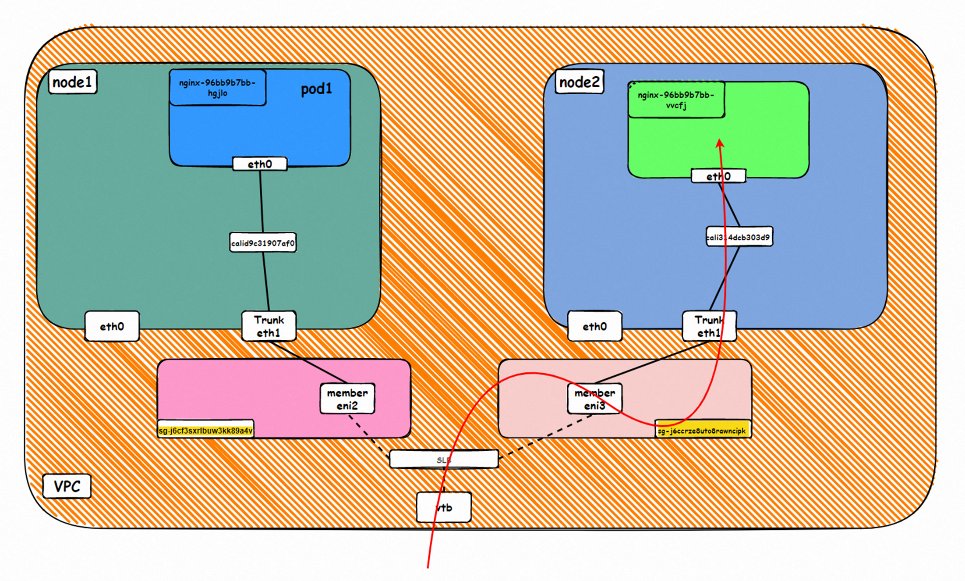

Go: ECS1 Pod1 eth0 → cali1xxx → ECS eth0 → Pod1 member ENI → vpc route rule (if any) → Pod2 member ENI → Trunk ENI (ECS2) cali2xxx → ECS2 Pod1 eth0

Back: ECS2 Pod1 eth0 → Trunk ENI (ECS2) cali2xxx → Pod2 member ENI → vpc route rule (if any) → Pod1 member ENI → Trunk ENI (ECS1) → cali1xxx → ECS1 Pod1 eth0

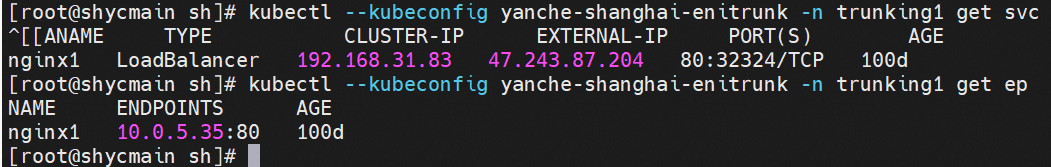

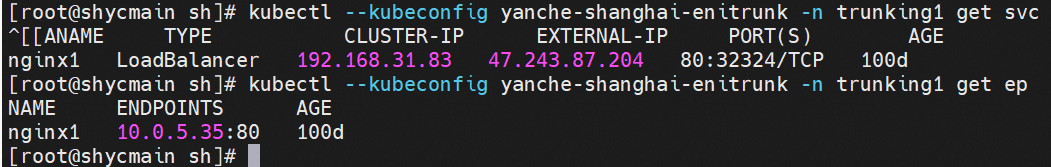

The client pod centos-59cdc5c9c4-l5vf9 (IP 10.0.4.27) runs on the cn-hongkong.10.0.4.20 node.

The server pod nginx-96bb9b7bb-wwrdm (IP 10.0.5.35) runs on the cn-hongkong.10.0.4.244 node.

The ClusterIP address of the nginx SVC is 192.168.31.83, and its external IP address is 47.243.87.204.

Compared with ENIIP, ENI-Trunking only adds the corresponding Trunk and Member ENIs on the VPC side. The data link inside the OS is the same.

Access depends on the security group configuration

Data Link Forwarding Diagram

Go: ECS1 Pod1 eth0 → cali1xxx → ECS eth0 → Pod1 member ENI → vpc route rule (if any) → Pod2 member ENI → Trunk ENI (ECS2) cali2xxx → ECS2 Pod1 eth0

Back: ECS2 Pod1 eth0 → Trunk ENI (ECS2) cali2xxx → Pod2 member ENI → vpc route rule (if any) → Pod1 member ENI → Trunk ENI (ECS1) → cali1xxx → ECS1 Pod1 eth0

The client pod centos-59cdc5c9c4-l5vf9 (IP 10.0.4.27) runs on the cn-hongkong.10.0.4.20 node.

The server pod nginx-96bb9b7bb-wwrdm (IP 10.0.5.35) runs on the cn-hongkong.10.0.4.244 node.

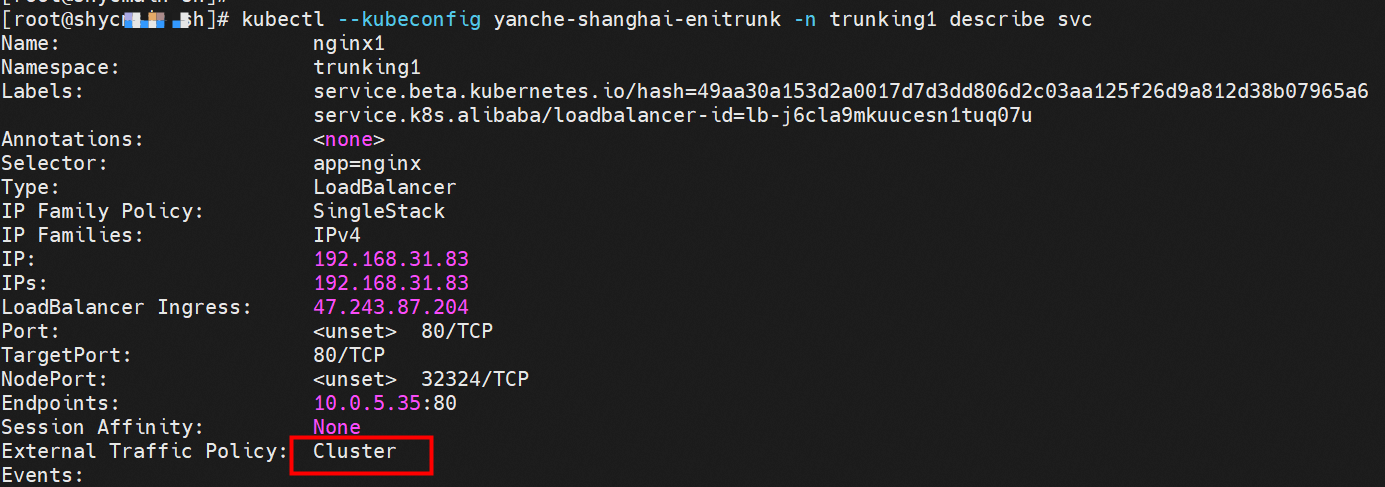

The ClusterIP address of the nginx SVC is 192.168.31.83. The external IP address is 47.243.87.204. The ExternalTrafficPolicy is Cluster.

Compared with ENIIP, ENI-Trunking only adds the corresponding Trunk and Member ENIs on the VPC side. The data link inside the OS is the same.

Access depends on the security group configuration

Data Link Forwarding Diagram

The client pod centos-59cdc5c9c4-l5vf9 (IP 10.0.4.27) runs on the cn-hongkong.10.0.4.20 node.

The server pod nginx-6f545cb57c-kt7r8 (IP 10.0.4.30) runs on the cn-hongkong.10.0.4.22 node.

The ClusterIP address of the nginx SVC is 192.168.81.92. The external IP address is 8.210.162.178. The ExternalTrafficPolicy is Cluster.

Compared with ENIIP, ENI-Trunking only adds the corresponding Trunk and Member ENIs on the VPC side. The data link inside the OS is the same.

Access depends on the security group configuration

Data Link Forwarding Diagram

The client pod centos-59cdc5c9c4-l5vf9 (IP 10.0.4.27) runs on the cn-hongkong.10.0.4.20 node.

The server pod nginx-6f545cb57c-kt7r8 (IP 10.0.4.30) runs on the cn-hongkong.10.0.4.22 node.

The ClusterIP address of the nginx SVC is 192.168.81.92. The external IP address is 8.210.162.178. The ExternalTrafficPolicy is Cluster.

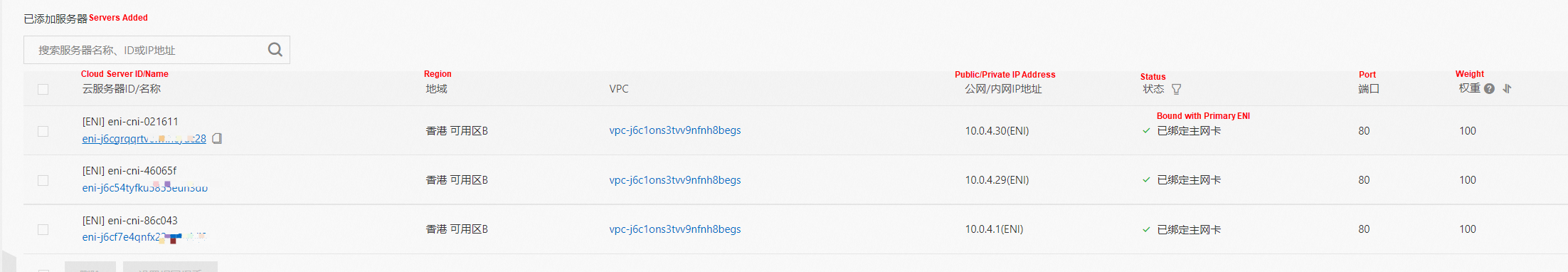

In the SLB console, we can see that the backend servers in the vServer group of lb-j6cmv8aaojf7nqdai2a6a are the ENIs eni-j6cgrqqrtvcwhhcyuc28, eni-j6c54tyfku5855euh3db, and eni-j6cf7e4qnfx22mmvblj0 of the two backend nginx pods, all of which are member ENIs.

Access depends on the security group configuration

Data Link Forwarding Diagram

This article focuses on the data link forwarding paths of ACK in Terway ENI-Trunking mode in different SOP scenarios. Pod-dimension switch and security group configuration is introduced to meet customer requirements for more refined management of business networks. There are ten SOP scenarios in Terway ENI-Trunking mode. The technical implementation principles and cloud product configurations of these scenarios are sorted out and summarized step by step, which provides preliminary guidance for troubleshooting link jitter, choosing optimal configurations, and understanding link principles under the Terway ENI-Trunking architecture.

In Terway ENI-Trunking mode, a veth pair connects the network namespaces of the host and the pod. The pod address comes from the auxiliary IP address of the ENI, and policy-based routing needs to be configured on the node to ensure that traffic for that IP address passes through the Elastic Network Interface to which it belongs. This way, multiple pods can share an ENI, which significantly improves the deployment density of pods. At the same time, tc egress/ingress rules add or remove the VLAN tag as traffic leaves or enters the ECS instance, so that traffic actually goes through the Member ENI, which enables fine-grained management. Microservices are becoming more popular, and the sidecar mode is adopted to turn each pod into a network node, which enables different network behaviors and observability for different traffic in the pod.

Stay tuned for the next part!

