By Yu Kai

Co-Author: Xieshi (Alibaba Cloud Container Service)

This article is the second part of the series. It introduces the data plane forwarding links in Kubernetes Terway ENI mode. Understanding these links serves two purposes. First, it explains why access behaves and performs the way it does in different scenarios, which helps customers further optimize their business architecture. Second, when container network jitter occurs, customer O&M engineers and Alibaba Cloud developers know at which points along the link to deploy observation and capture packets manually, so they can narrow down the direction and cause of the problem.

Previous article: Analysis of Alibaba Cloud Container Network Data Link (1): Flannel

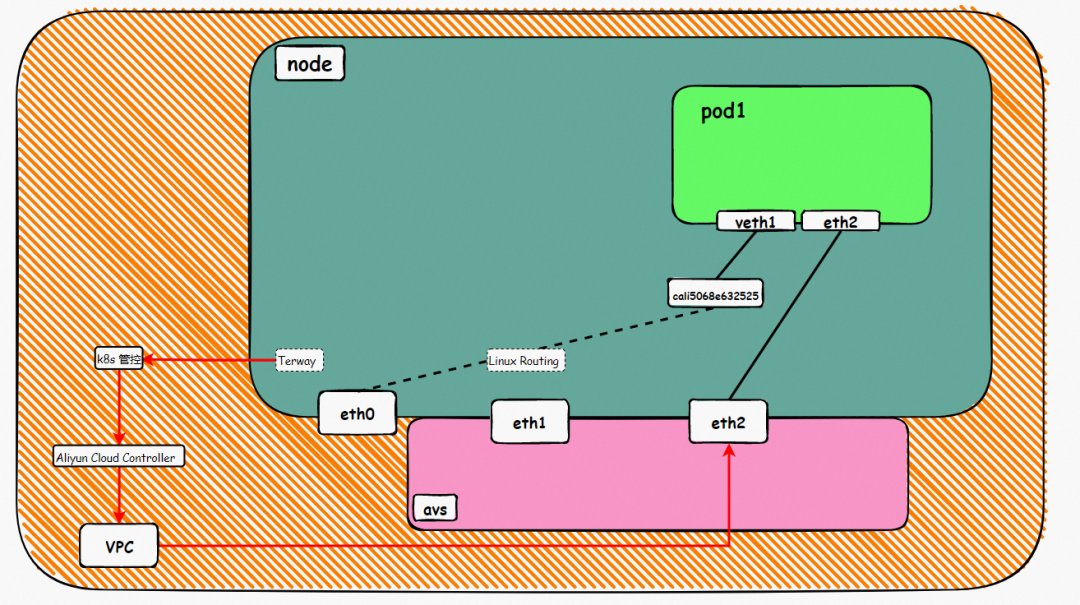

In Terway ENI mode, an ENI is on the same network as the VPC. An ENI [1] is created in the Alibaba Cloud VPC network and bound to an ECS node, and the pod then uses this ENI to communicate with other networks. Note that the number of ENIs is limited, and the quota [2] depends on the instance type.

[1] Elastic Network Interface

https://www.alibabacloud.com/help/en/elastic-compute-service/latest/elastic-network-interfaces-overview

[2] Instance types have different quotas

https://www.alibabacloud.com/help/en/elastic-compute-service/latest/instance-family

The CIDR block used by the Pod is the same as the CIDR block of the node.

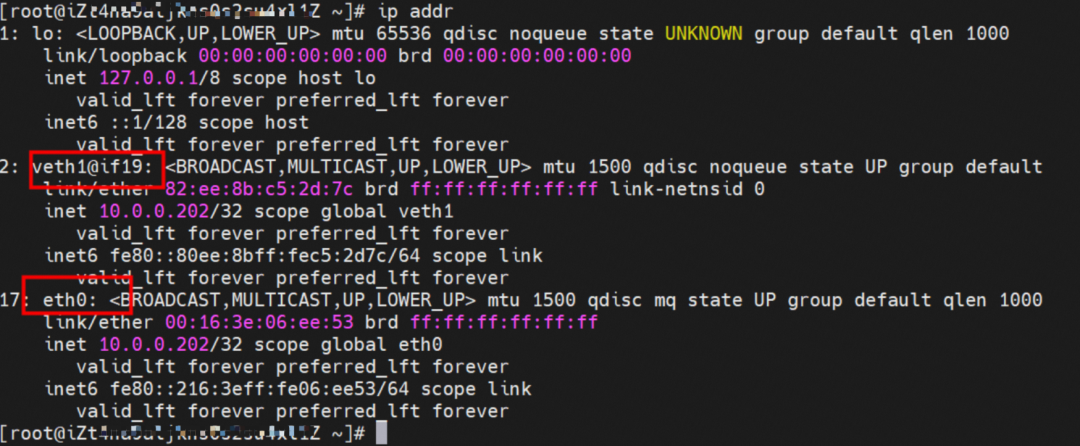

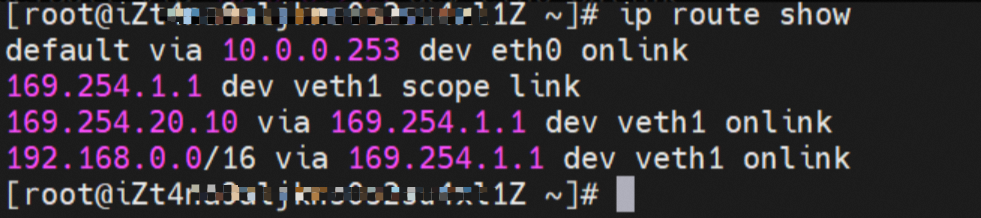

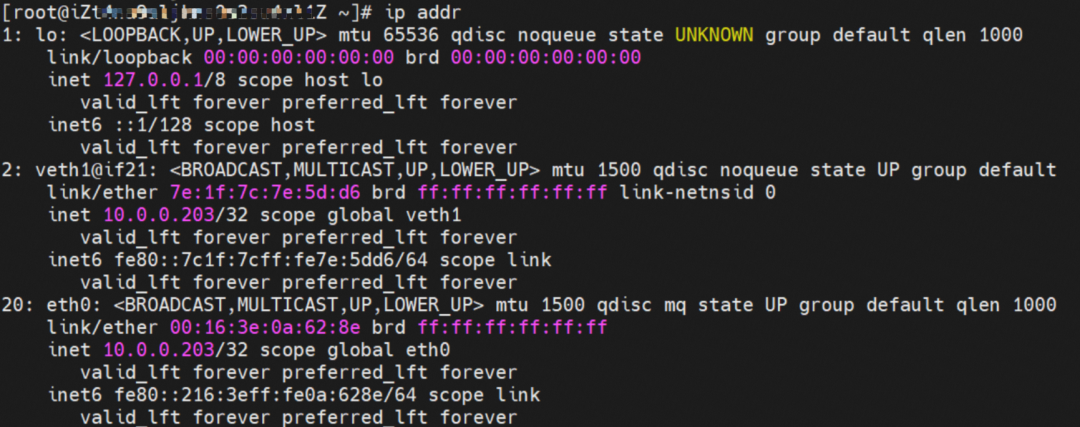

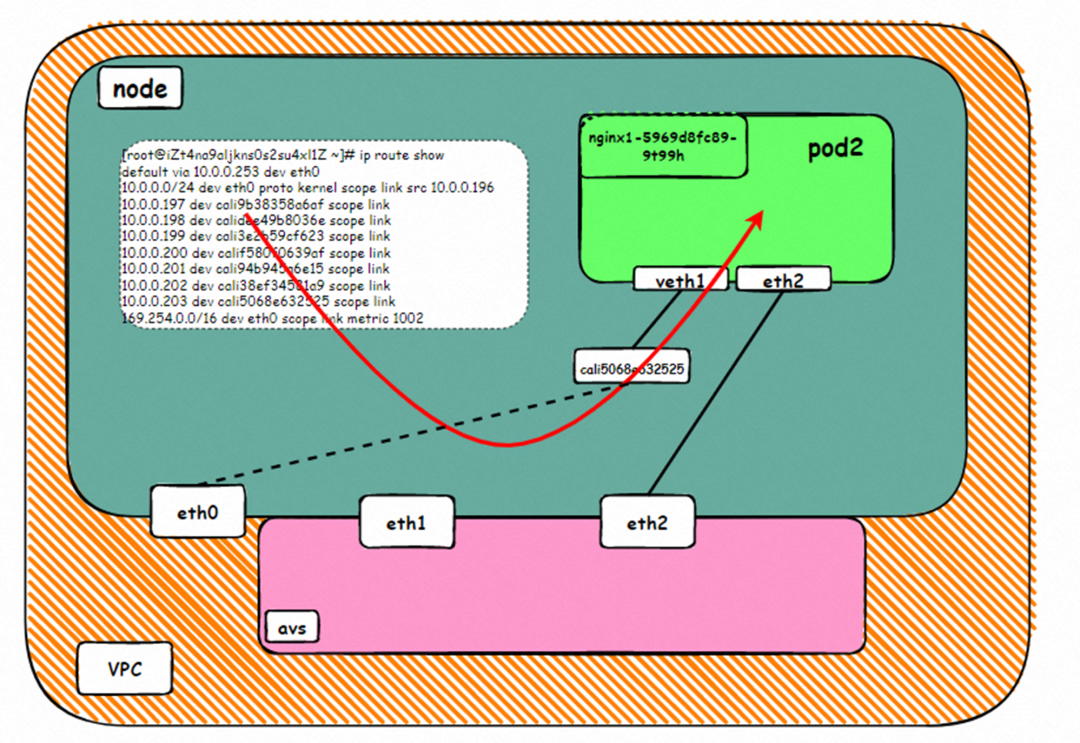

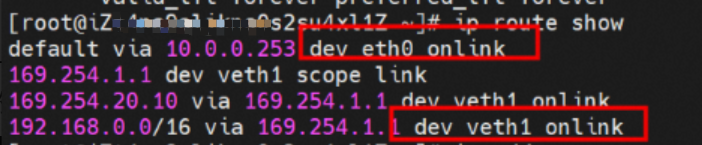

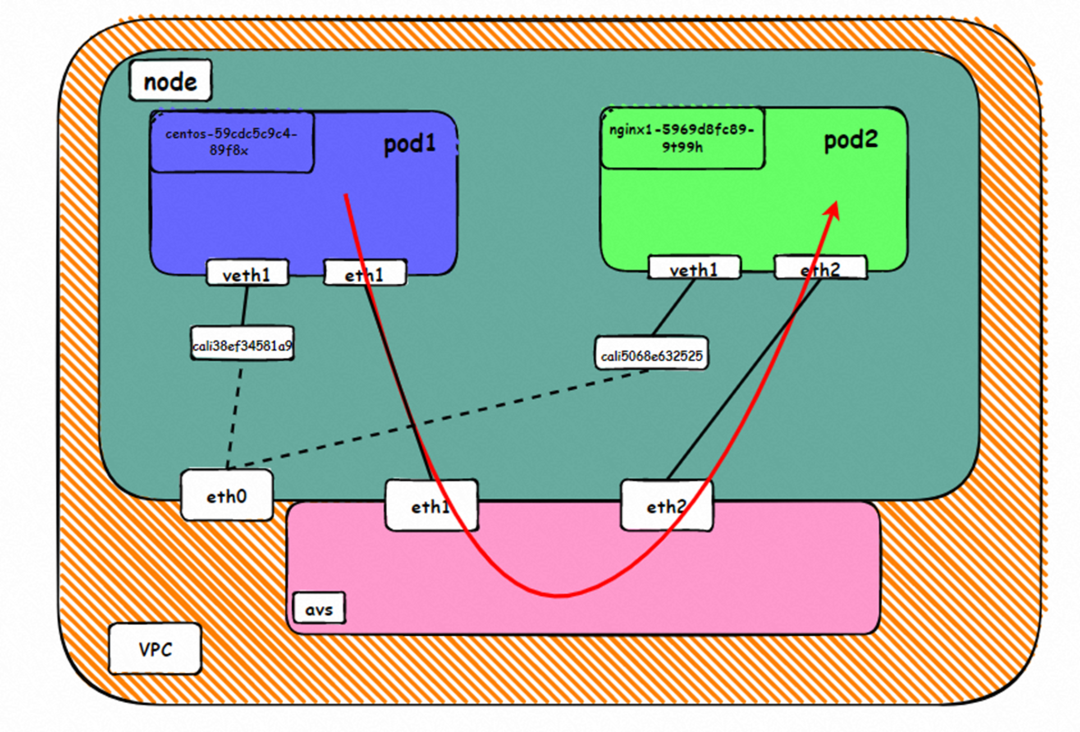

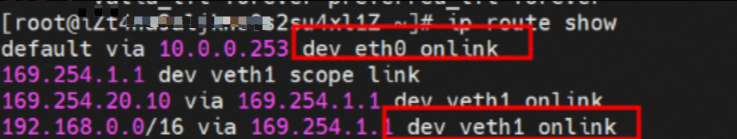

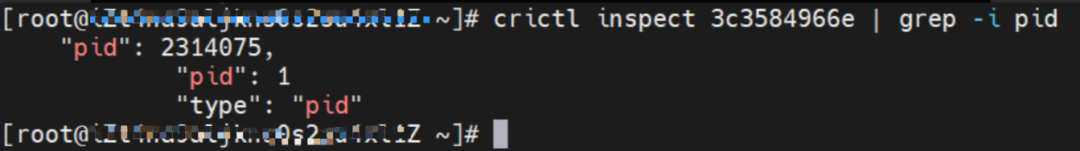

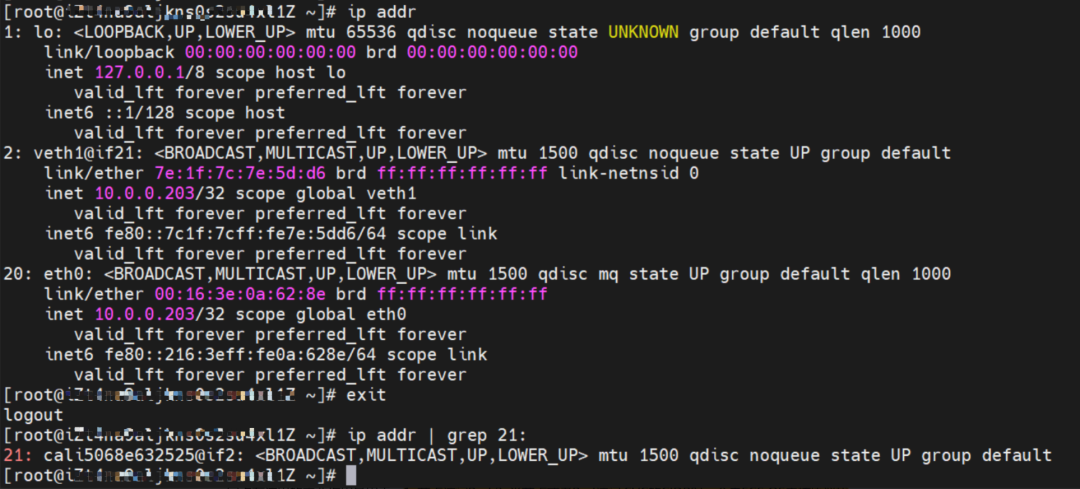

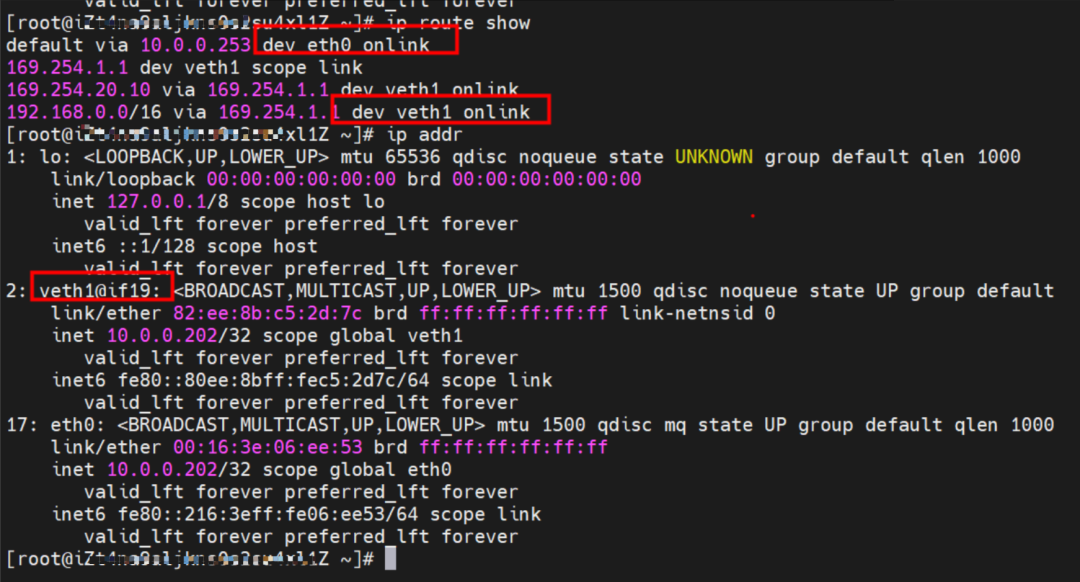

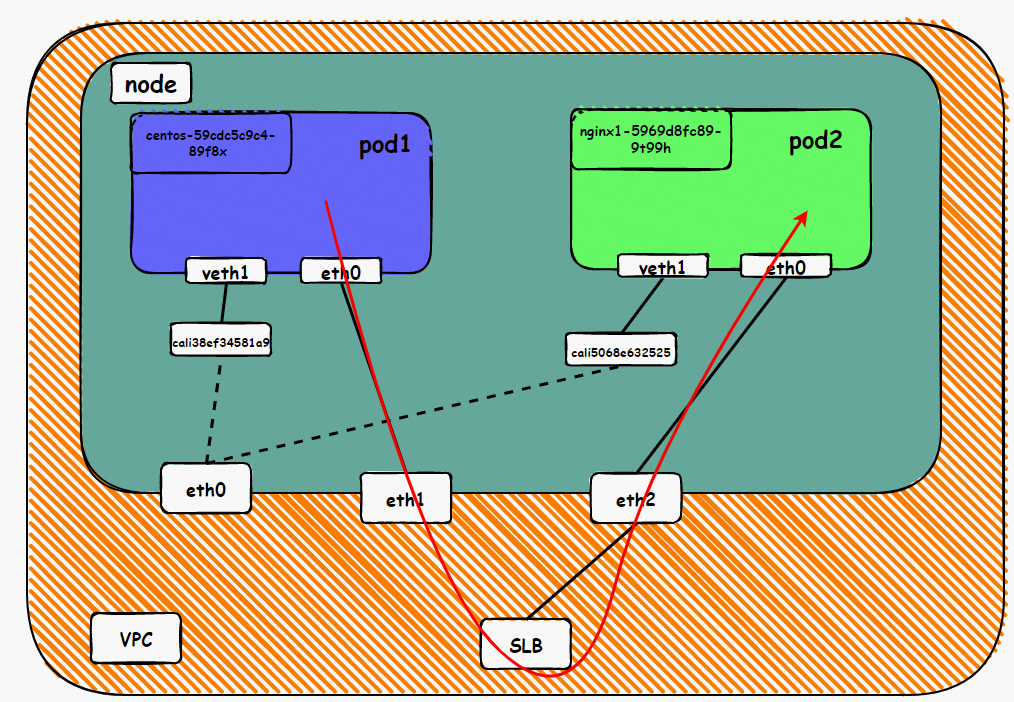

The Pod has two network interfaces: eth0 and veth1. The IP address of eth0 is the Pod IP. The MAC address of eth0 matches the MAC address of the ENI shown in the console, which indicates that eth0 is the secondary ENI and is mounted into the network namespace of the Pod.

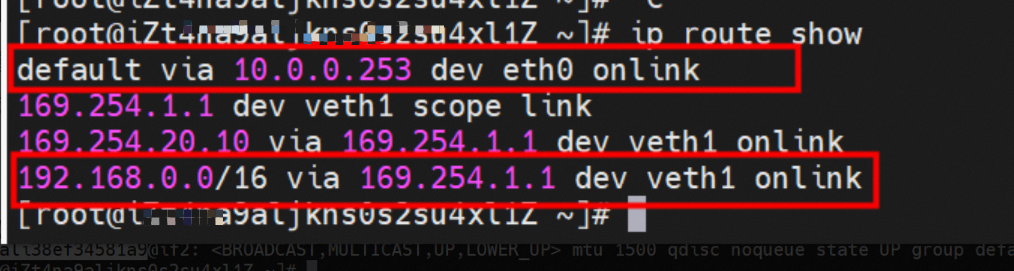

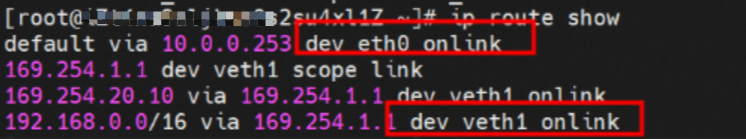

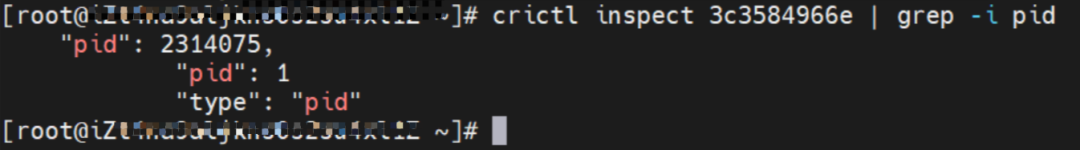

The Pod has a default route pointing to eth0 and a route for the destination CIDR block 192.168.0.0/16 whose next hop is the veth1 interface. 192.168.0.0/16 is the Service CIDR block of the cluster. This means that when a Pod accesses a ClusterIP in the Service CIDR block, the traffic goes through veth1 to the OS of the host ECS instance, which makes the next forwarding decision. All other traffic goes directly to the VPC through eth0.
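The route selection described above can be sketched as a longest-prefix match. This is a minimal illustration, not Terway code: the `POD_ROUTES` table and the `select_interface` helper are hypothetical names, and the table only models the two routes mentioned in the text.

```python
import ipaddress

# Illustrative model of the Pod routing table: (destination prefix, outgoing interface).
# 192.168.0.0/16 is the Service CIDR block from the text; 0.0.0.0/0 is the default route.
POD_ROUTES = [
    (ipaddress.ip_network("0.0.0.0/0"), "eth0"),
    (ipaddress.ip_network("192.168.0.0/16"), "veth1"),
]


def select_interface(destination: str) -> str:
    """Return the outgoing interface for a destination using longest-prefix match."""
    addr = ipaddress.ip_address(destination)
    matches = [(net, dev) for net, dev in POD_ROUTES if addr in net]
    # The most specific matching route (largest prefix length) wins.
    _, dev = max(matches, key=lambda item: item[0].prefixlen)
    return dev


print(select_interface("192.168.41.244"))  # a ClusterIP: leaves through veth1
print(select_interface("10.0.0.203"))      # a Pod IP: follows the default route
```

A destination inside the Service CIDR block matches both routes, and the more specific /16 route sends it to veth1. Every other destination only matches the default route and leaves through eth0.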

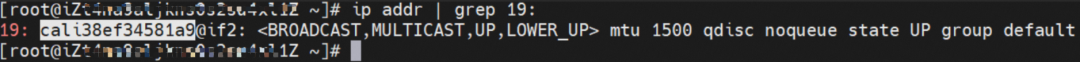

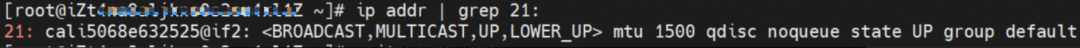

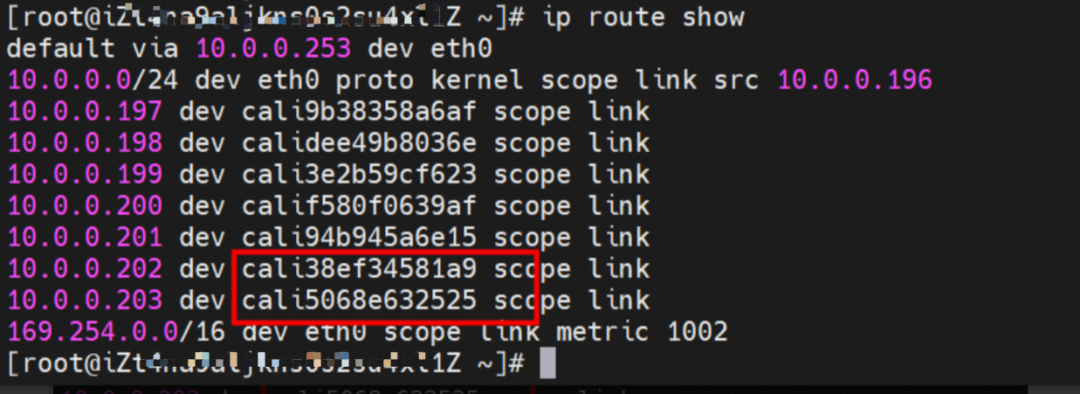

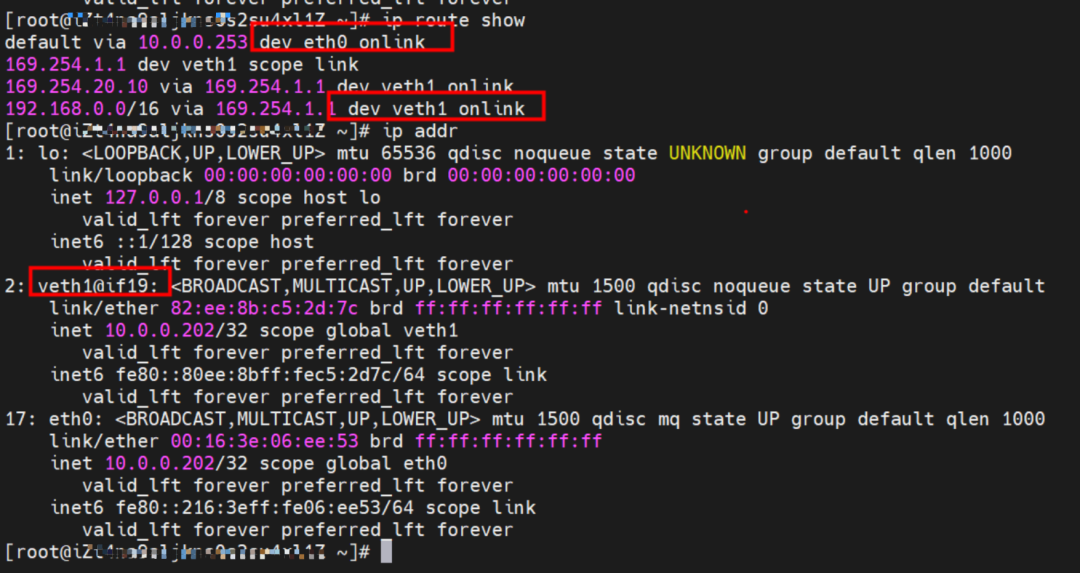

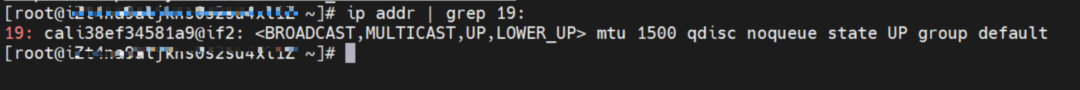

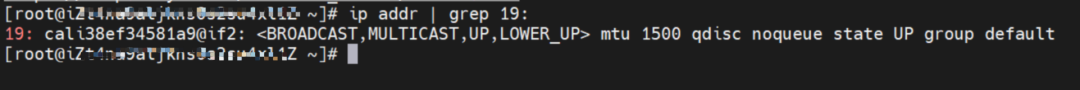

As shown in the figure, running ip addr in the network namespace of the container shows veth1@if19. The '19' is the interface index of the other end of the veth pair, which lives in the ECS OS. In the ECS OS, ip addr | grep 19: finds the virtual interface cali38ef34581a9, which is the host-side end of the veth pair.
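The lookup by interface index can also be scripted. The sketch below parses text in the style of `ip addr` output. The `HOST_SAMPLE` string is a hand-written illustration (its MAC addresses and the `@if3` suffix are made up, not taken from the screenshots), and `peer_index` and `find_host_interface` are hypothetical helper names.

```python
import re
from typing import Optional

# Hand-written sample in the style of `ip addr` output on the ECS OS.
# Only the index 19 and the name cali38ef34581a9 come from the article.
HOST_SAMPLE = """\
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP
    link/ether 00:16:3e:00:00:01 brd ff:ff:ff:ff:ff:ff
19: cali38ef34581a9@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP
    link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff
"""


def peer_index(pod_ifname: str) -> int:
    """Extract the peer interface index from a name such as 'veth1@if19'."""
    match = re.fullmatch(r"[^@]+@if(\d+)", pod_ifname)
    if match is None:
        raise ValueError(f"no peer index in {pod_ifname!r}")
    return int(match.group(1))


def find_host_interface(ip_addr_output: str, index: int) -> Optional[str]:
    """Return the name of the interface with the given index, or None if absent."""
    # Interface header lines start at column 0 with '<index>: <name>'.
    for match in re.finditer(r"^(\d+):\s+([^:@\s]+)", ip_addr_output, re.MULTILINE):
        if int(match.group(1)) == index:
            return match.group(2)
    return None


index = peer_index("veth1@if19")
print(index, find_host_interface(HOST_SAMPLE, index))
```

Matching on the parsed index is stricter than a plain `grep 19:`, which would also match any other line that happens to contain that text.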

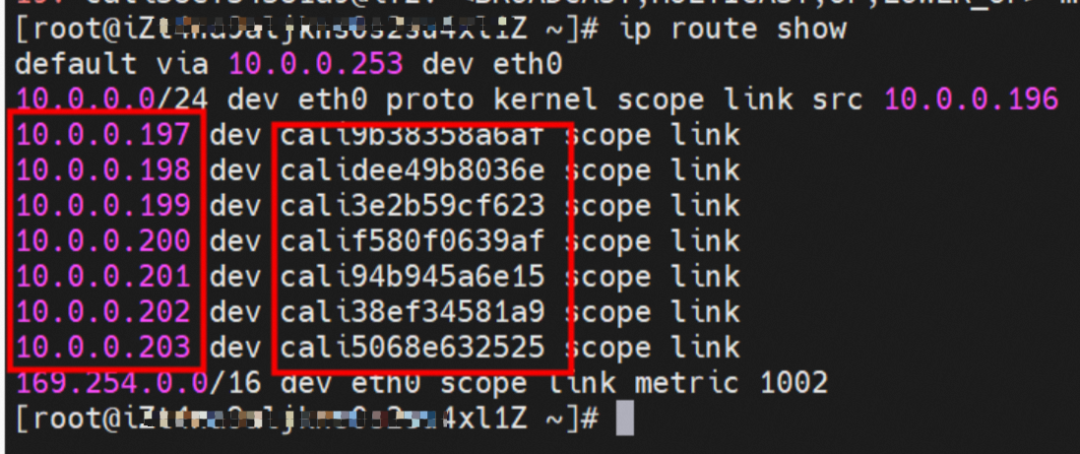

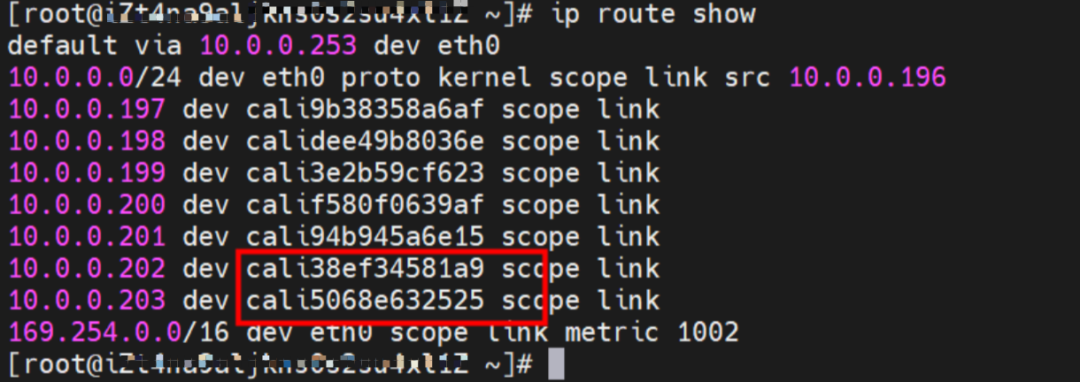

At this point, a data link between the container and the ECS OS is in place for container access to the ClusterIP of a Service. How does the ECS OS determine which container to send traffic to? The Linux routing table in the OS shows that all traffic destined for a pod IP is forwarded to the cali virtual interface corresponding to that pod. With this, the ECS OS and the network namespace of the pod have a complete ingress and egress link configuration.

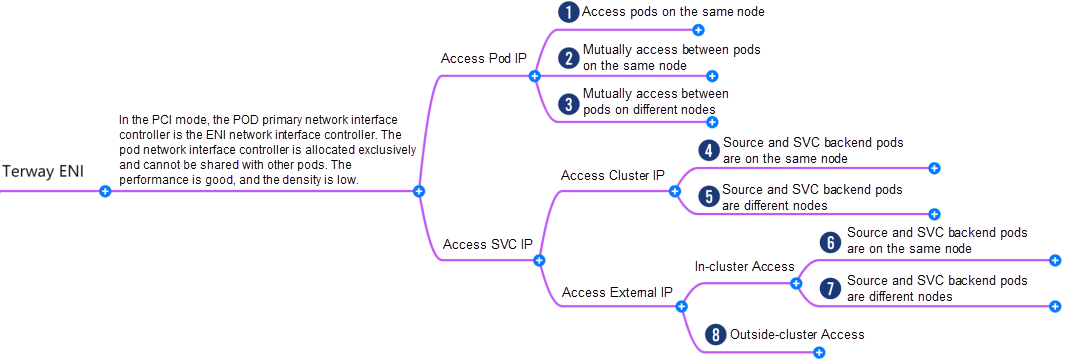

Based on the characteristics of container networks, the network links in Terway ENI mode fall into two major SOP scenarios, access by pod IP and access by SVC, which can be subdivided into eight smaller SOP scenarios.

Under the Terway ENI architecture, the data link access scenarios can therefore be summarized into the following eight categories.

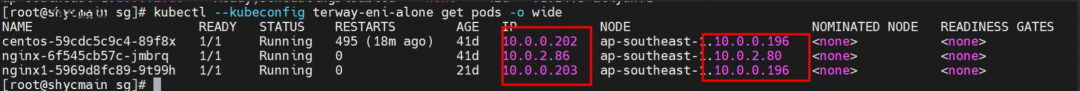

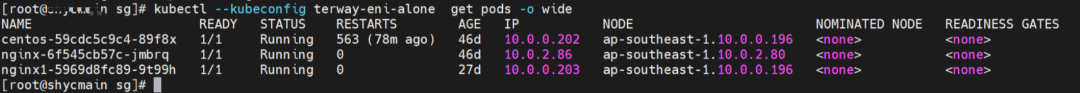

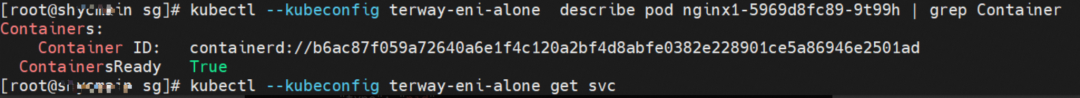

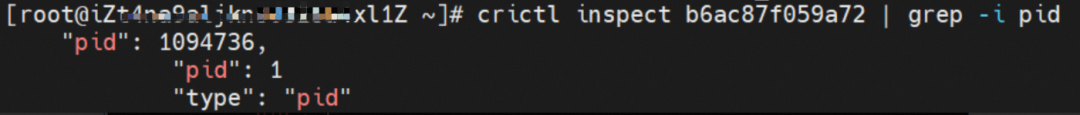

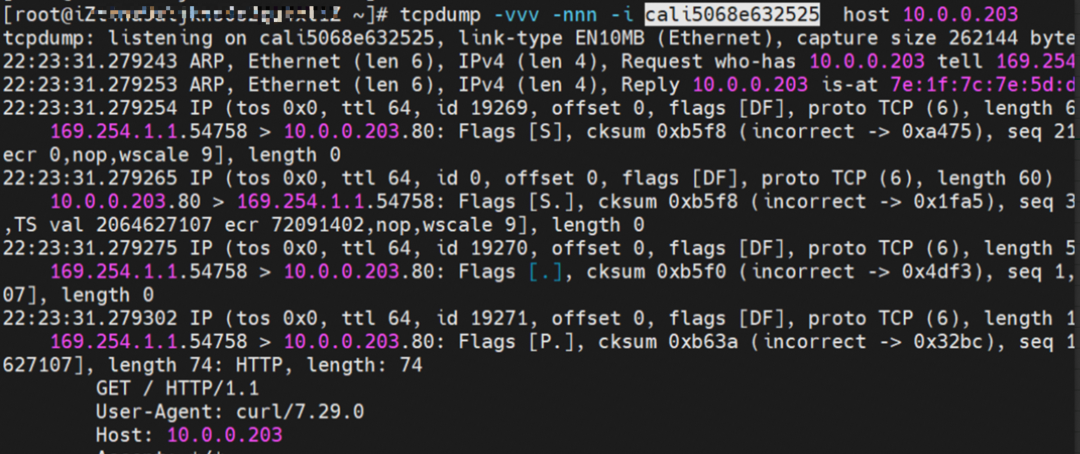

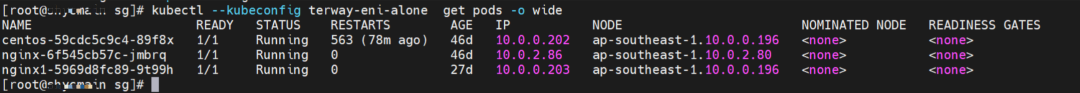

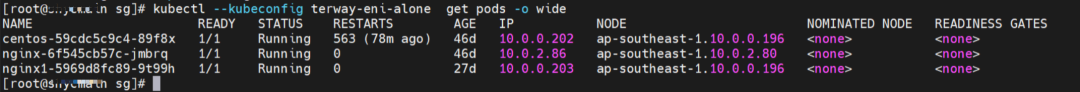

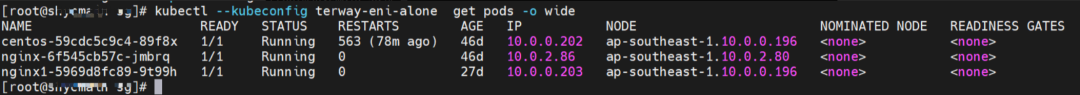

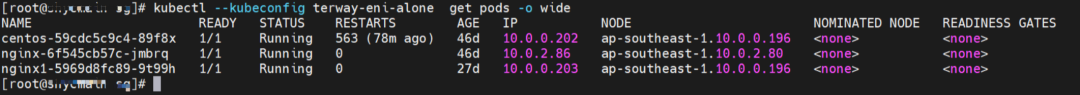

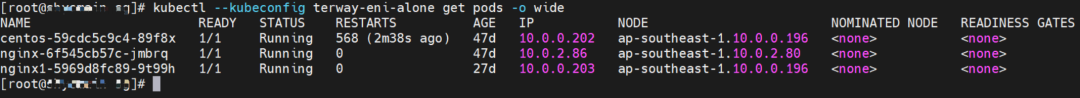

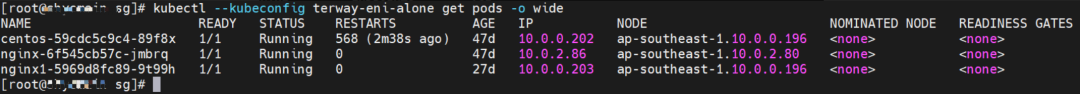

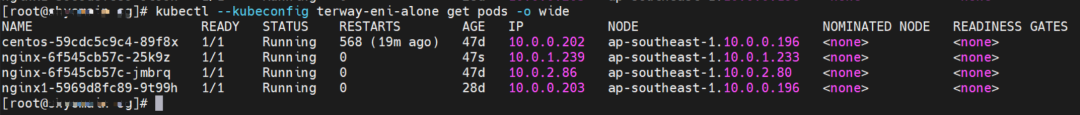

The pod nginx1-5969d8fc89-9t99h (IP address 10.0.0.203) runs on the node ap-southeast-1.10.0.0.196.

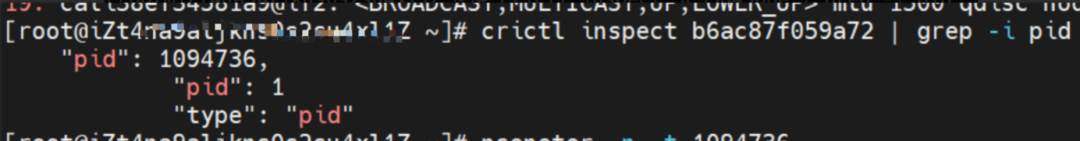

The IP address of nginx1-5969d8fc89-9t99h is 10.0.0.203, and the PID of the container on the host is 1094736. The container network namespace has a default route pointing to the container's eth0 and two routes whose destination is the Service CIDR block and whose next hop is veth1.
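Once the container PID is known, the routes inside the container network namespace can be inspected from the host. The original screenshots are not reproduced here, so the following is only a sketch of one common way to do it with the standard `nsenter` tool. The `netns_command` helper is a hypothetical name, and the code only builds the command line rather than running it.

```python
import shlex
from typing import List


def netns_command(pid: int, *args: str) -> List[str]:
    """Build an nsenter call that runs a command in the network namespace of a process."""
    # -t selects the target process, -n enters its network namespace.
    return ["nsenter", "-t", str(pid), "-n", *args]


# PID 1094736 is the nginx1-5969d8fc89-9t99h container PID from the text.
cmd = netns_command(1094736, "ip", "route")
print(shlex.join(cmd))
```

Running the printed command as root on the ECS node should list the default route through eth0 and the Service routes through veth1.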

The peer of the container's veth1 in the ECS OS is cali5068e632525.

In the ECS OS, there is a route to each Pod IP whose next hop is the corresponding calixxx interface. As shown in the preceding section, each calixxx interface forms a veth pair with veth1 in a pod, and access from the pod to the Service CIDR block goes to veth1 instead of following the default route through eth0. The calixxx interface therefore carries the traffic between the ECS OS and the pod, including Service access that leaves the pod through veth1.

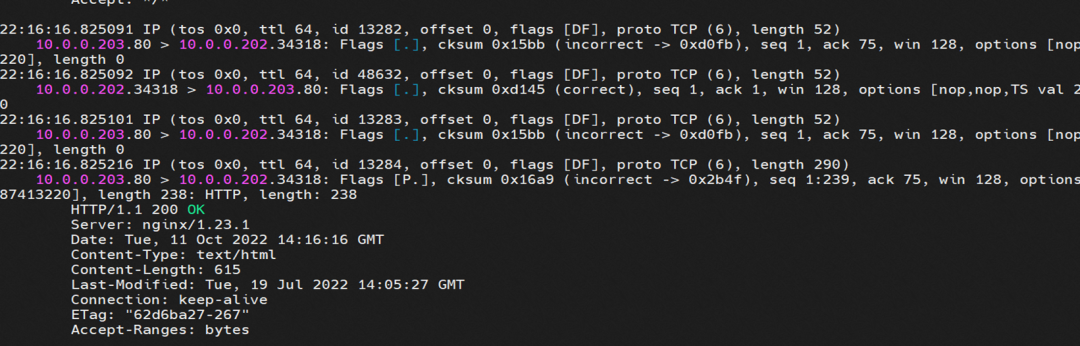

Packets can be captured on veth1 in the network namespace of nginx1-5969d8fc89-9t99h.

Packets can be captured on cali5068e632525, the host-side peer of veth1 for nginx1-5969d8fc89-9t99h.
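The two observation points above can be turned into capture commands. This is a sketch under assumptions: it uses the standard `tcpdump` and `nsenter` tools, the `capture_command` helper is a hypothetical name, and the `host` filter on the pod IP is only one reasonable choice of filter.

```python
from typing import List, Optional


def capture_command(interface: str, peer_ip: str, pid: Optional[int] = None) -> List[str]:
    """Build a tcpdump command, optionally wrapped to run in a container's network namespace."""
    tcpdump = ["tcpdump", "-i", interface, "-nn", "host", peer_ip]
    if pid is not None:
        # Enter the network namespace of the container process first.
        return ["nsenter", "-t", str(pid), "-n", *tcpdump]
    return tcpdump


# Values for nginx1-5969d8fc89-9t99h from the text: PID 1094736, IP 10.0.0.203.
pod_side = capture_command("veth1", "10.0.0.203", pid=1094736)
host_side = capture_command("cali5068e632525", "10.0.0.203")
print(" ".join(pod_side))
print(" ".join(host_side))
```

Capturing on both ends of the veth pair at the same time shows whether a packet is lost inside the pod, in the ECS OS, or further along the link.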

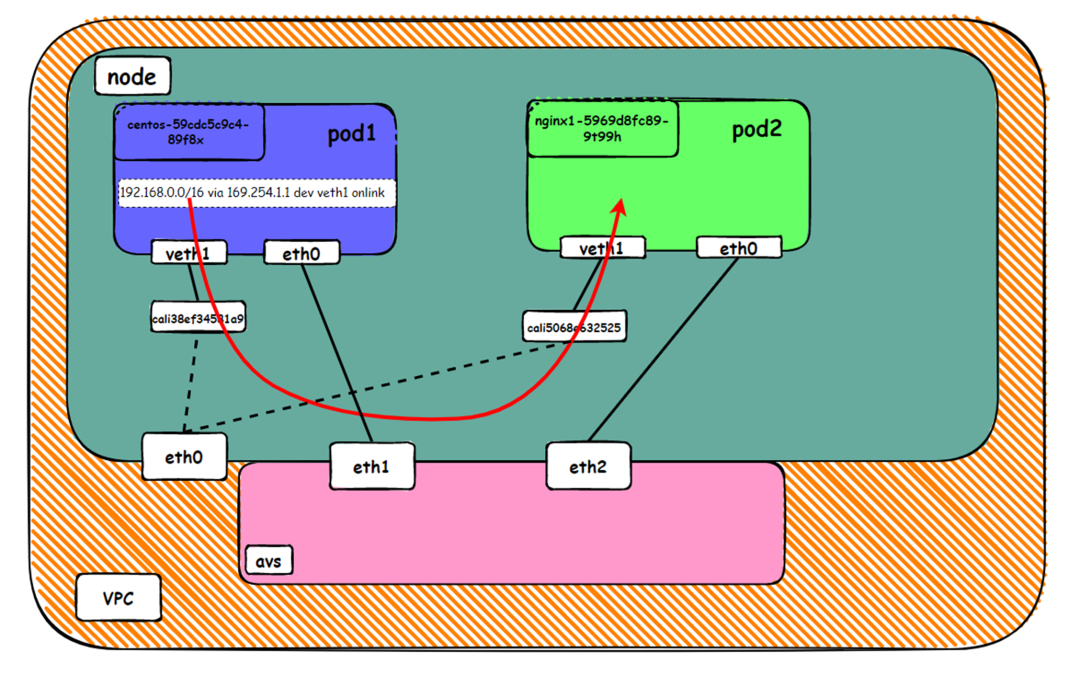

Data Link Forwarding Diagram

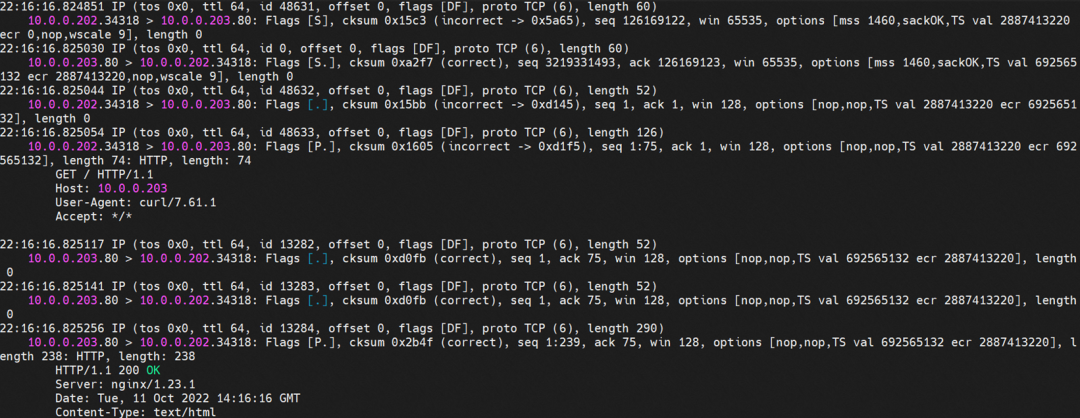

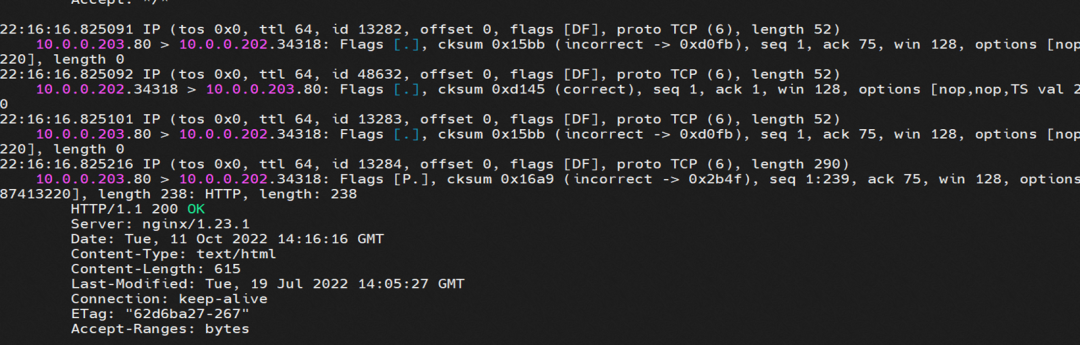

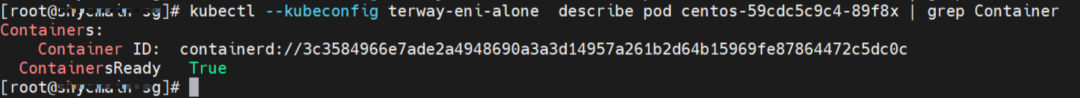

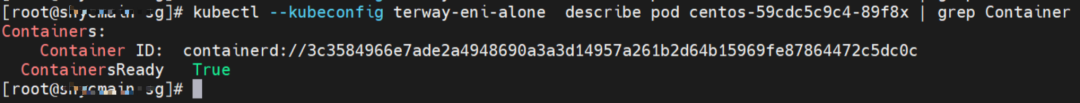

Two pods run on the node ap-southeast-1.10.0.0.196: centos-59cdc5c9c4-89f8x (IP address 10.0.0.202) and nginx1-5969d8fc89-9t99h (IP address 10.0.0.203).

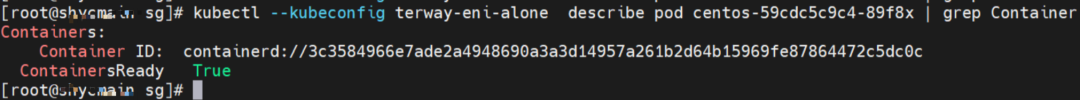

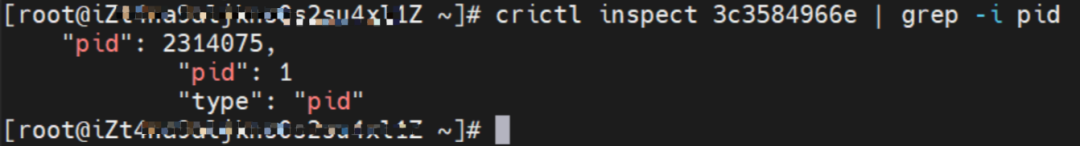

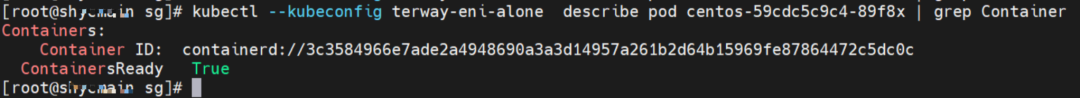

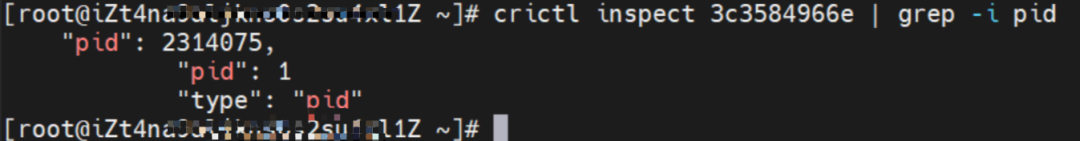

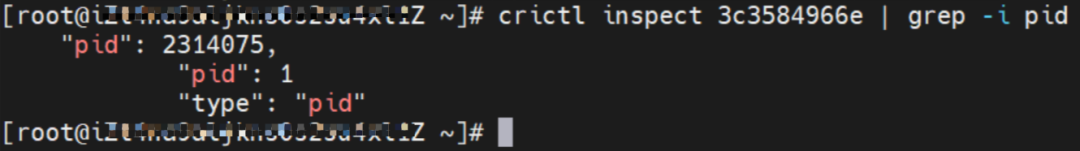

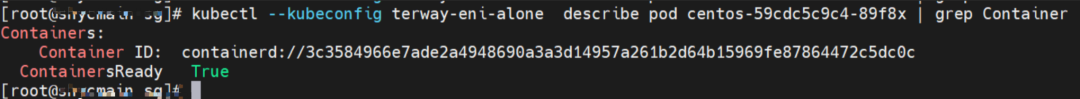

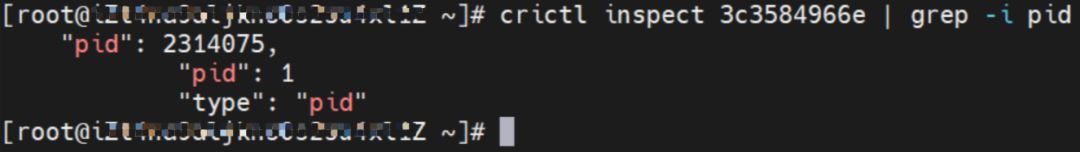

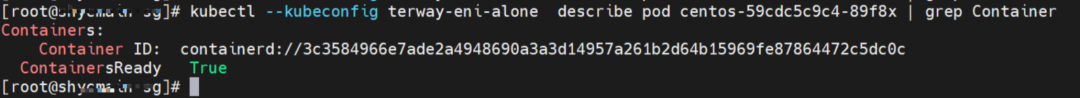

The IP address of centos-59cdc5c9c4-89f8x is 10.0.0.202, and the PID of the container on the host is 2314075. The container network namespace has a default route pointing to the container's eth0 and two routes whose destination is the Service CIDR block and whose next hop is veth1.

In the same way, we find that the IP address of nginx1-5969d8fc89-9t99h is 10.0.0.203 and the PID of the container on the host is 1094736.

Packets can be captured on eth0 in the network namespace of centos-59cdc5c9c4-89f8x.

Packets can be captured on eth0 in the network namespace of nginx1-5969d8fc89-9t99h.

Data Link Forwarding Diagram

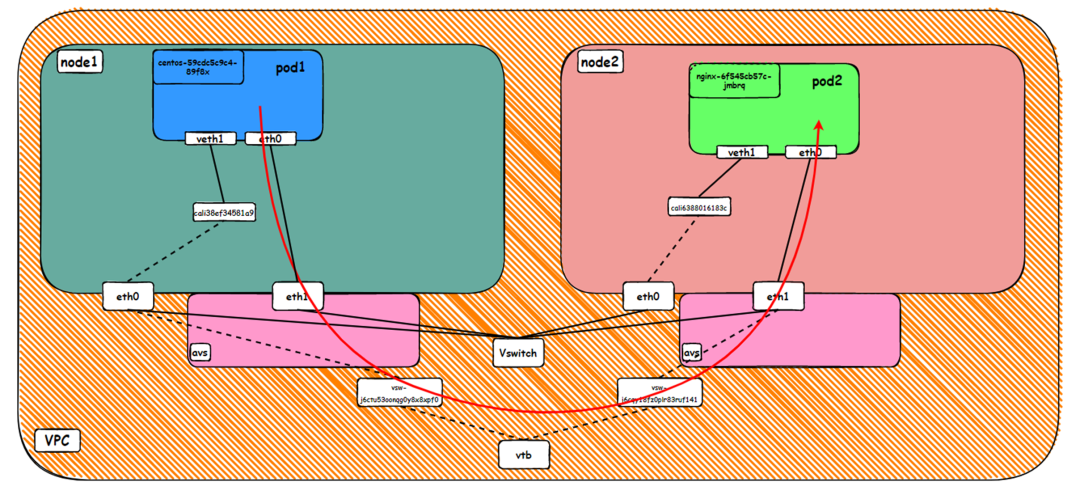

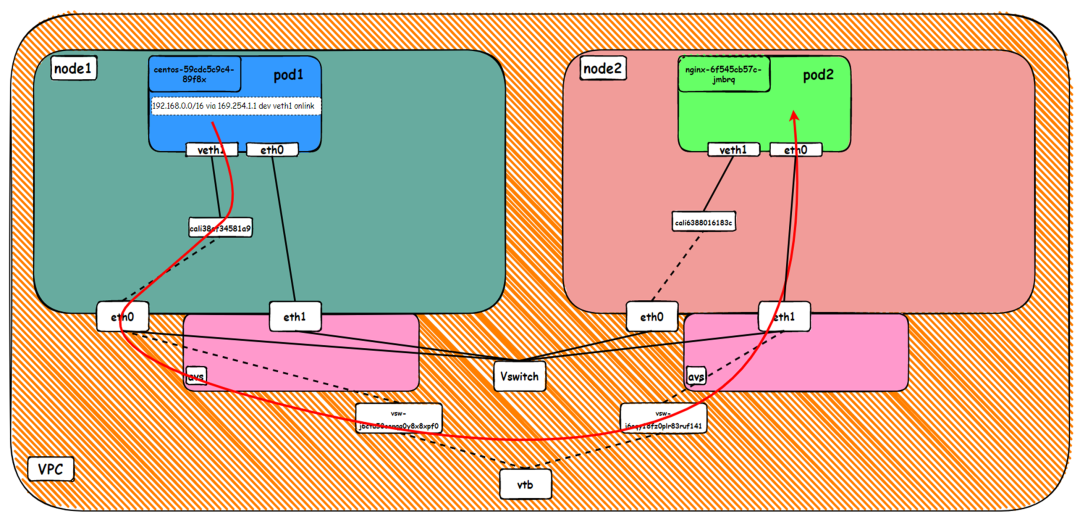

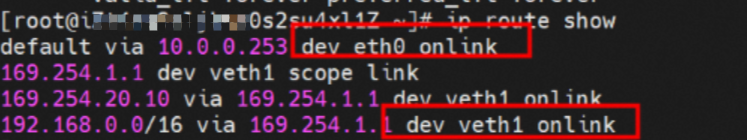

The pod centos-59cdc5c9c4-89f8x (IP address 10.0.0.202) runs on the node ap-southeast-1.10.0.0.196.

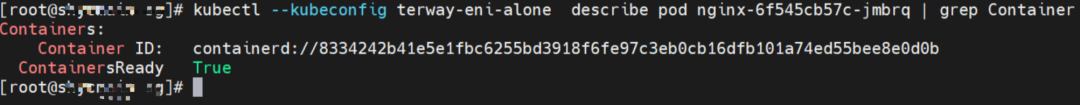

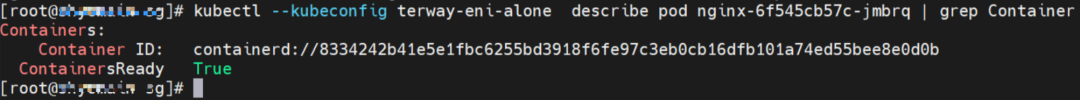

The pod nginx-6f545cb57c-jmbrq (IP address 10.0.2.86) runs on the node ap-southeast-1.10.0.2.80.

The IP address of centos-59cdc5c9c4-89f8x is 10.0.0.202, and the PID of the container on the host is 2314075. The container network namespace has a default route pointing to the container's eth0 and two routes whose destination is the Service CIDR block and whose next hop is veth1.

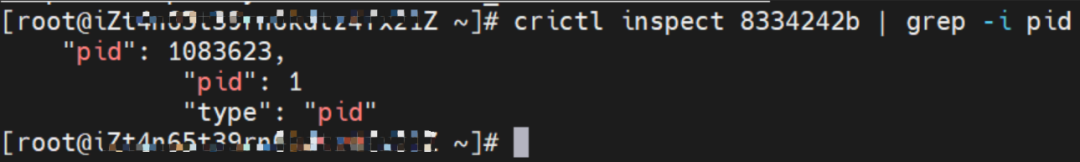

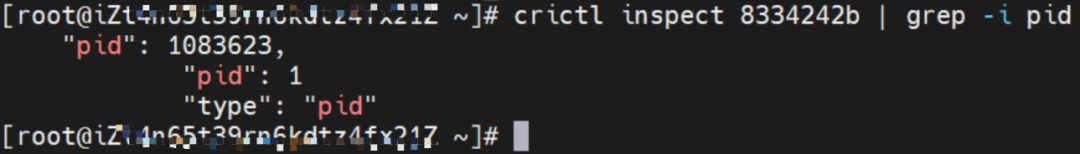

In the same way, we find that the IP address of nginx-6f545cb57c-jmbrq is 10.0.2.86 and the PID of the container on the host is 1083623.

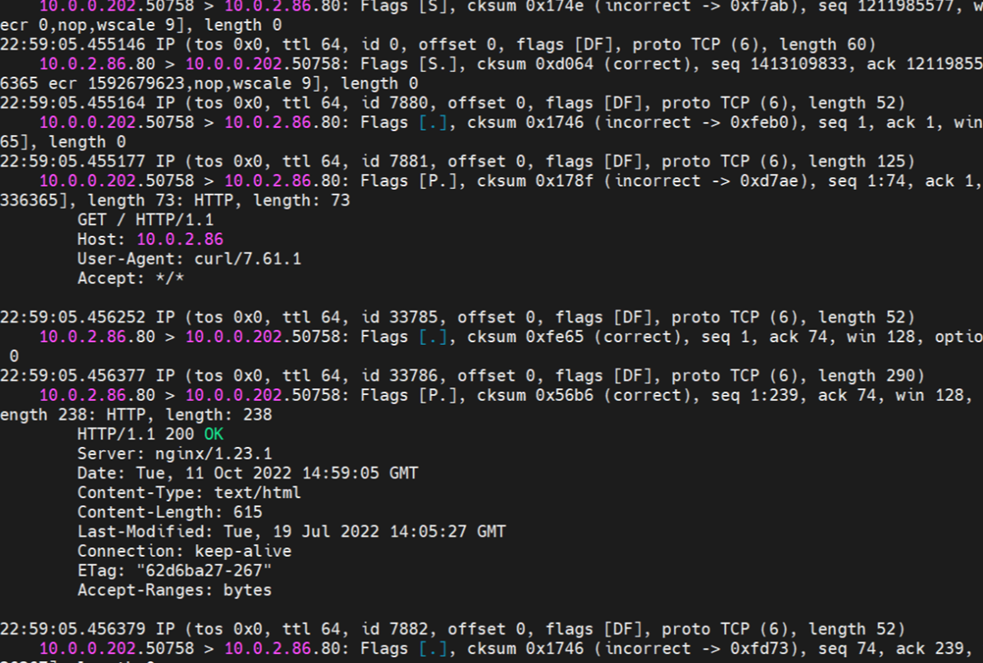

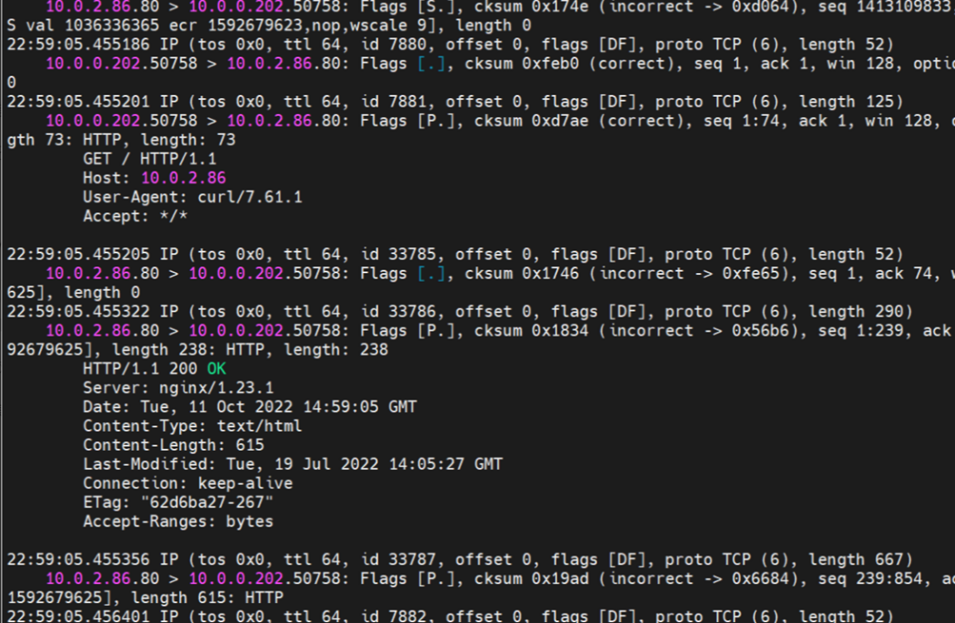

Packets can be captured on eth0 in the network namespace of centos-59cdc5c9c4-89f8x.

Packets can be captured on eth0 in the network namespace of nginx-6f545cb57c-jmbrq.

Data Link Forwarding Diagram

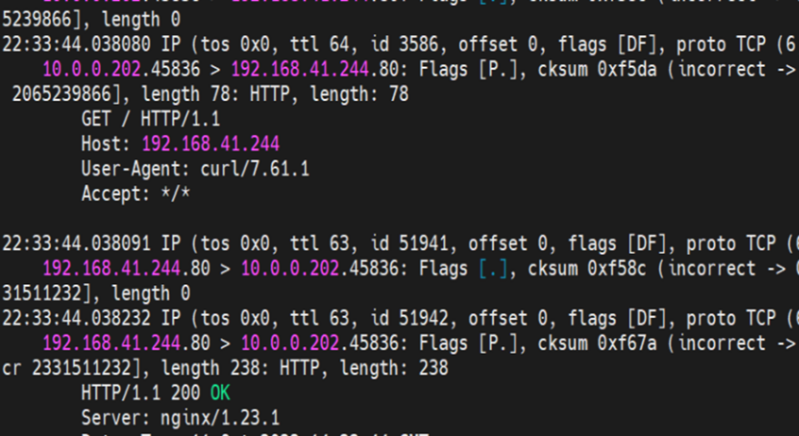

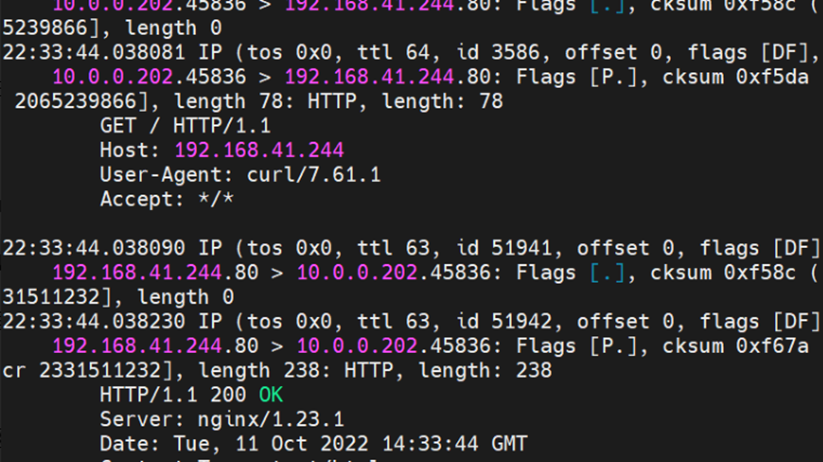

Two pods run on the node ap-southeast-1.10.0.0.196: centos-59cdc5c9c4-89f8x (IP address 10.0.0.202) and nginx1-5969d8fc89-9t99h (IP address 10.0.0.203).

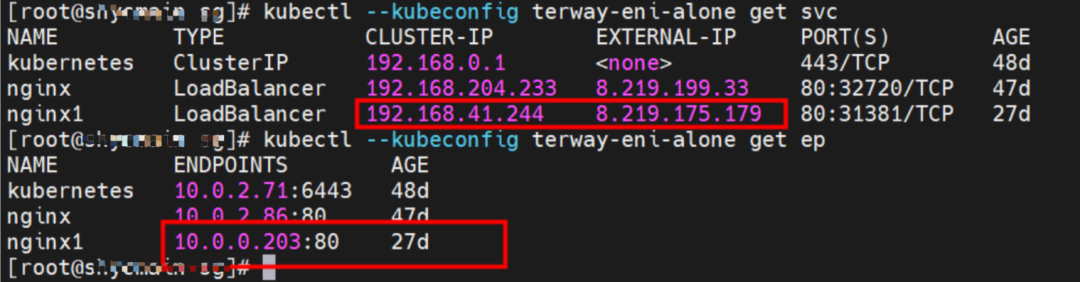

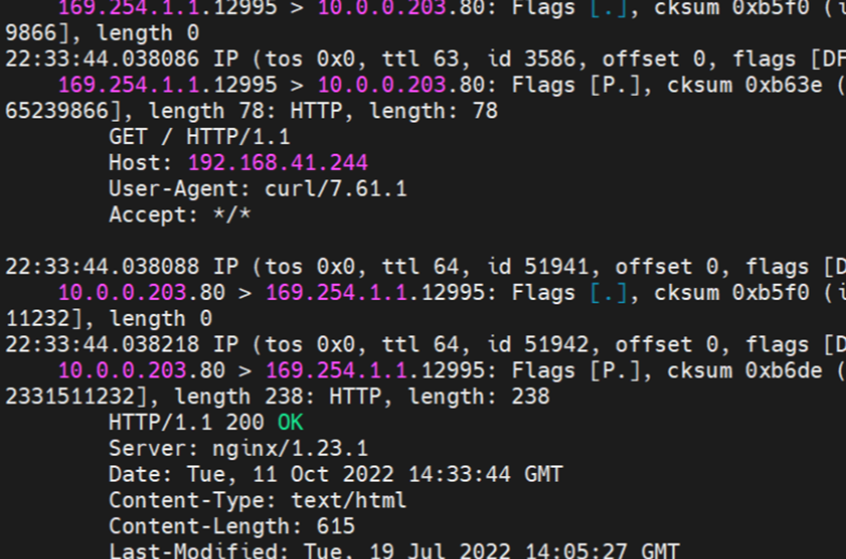

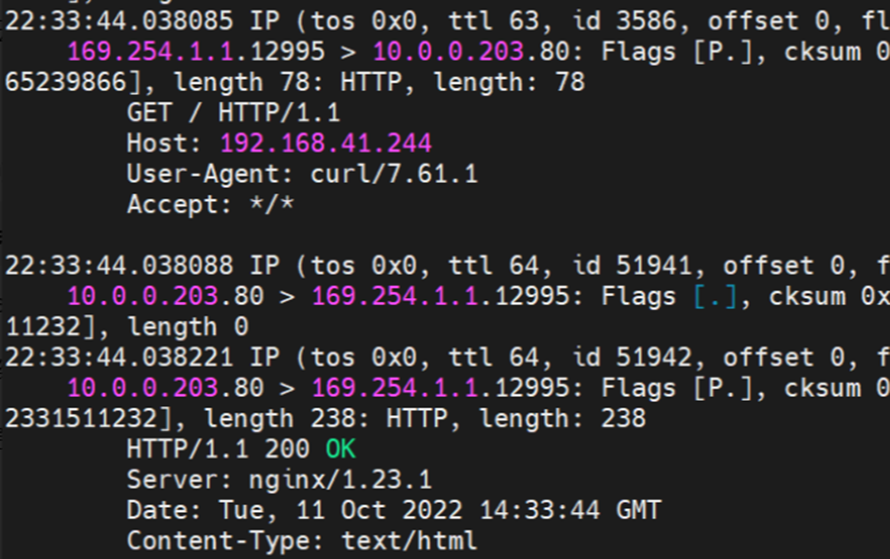

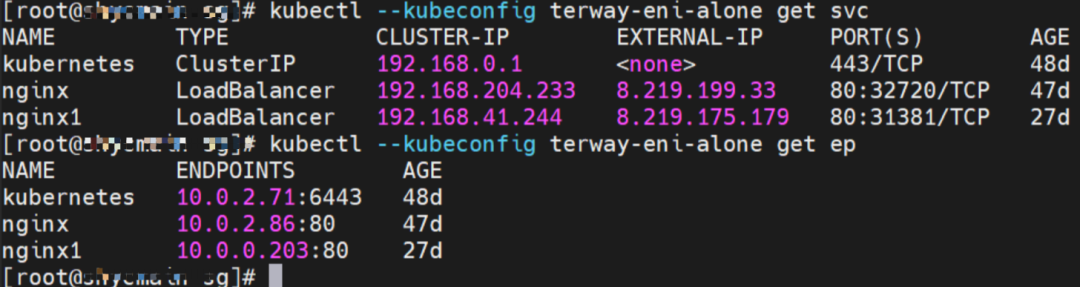

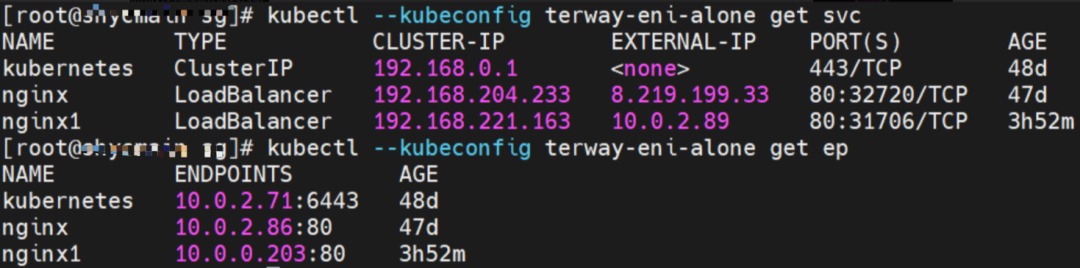

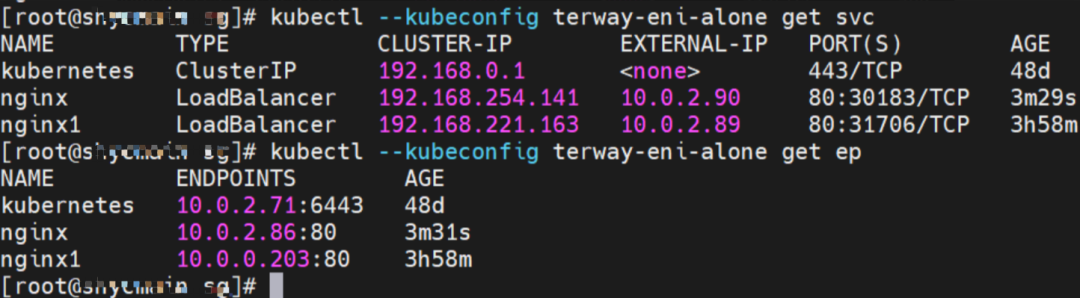

For the nginx1 Service, the ClusterIP is 192.168.41.244 and the external IP is 8.219.175.179.

The IP address of centos-59cdc5c9c4-89f8x is 10.0.0.202, and the PID of the container on the host is 2314075. The container network namespace has a default route pointing to the container's eth0 and two routes whose destination is the Service CIDR block and whose next hop is veth1.

The peer of the container's veth1 in the ECS OS is cali38ef34581a9.

In the same way, we find that the IP address of nginx1-5969d8fc89-9t99h is 10.0.0.203, the PID of the container on the host is 1094736, and the peer of the container's veth1 in the ECS OS is cali5068e632525.

In the ECS OS, there is a route to each Pod IP whose next hop is the corresponding calixxx interface. As shown in the preceding section, each calixxx interface forms a veth pair with veth1 in a pod, and access from the pod to the Service CIDR block goes to veth1 instead of following the default route through eth0. The calixxx interface therefore carries the traffic between the ECS OS and the pod, including Service access that leaves the pod through veth1.

Packets can be captured on veth1 in the network namespace of centos-59cdc5c9c4-89f8x.

Packets can be captured on cali38ef34581a9, the host-side peer of veth1 for centos-59cdc5c9c4-89f8x.

Packets can be captured on veth1 in the network namespace of nginx1-5969d8fc89-9t99h.

Packets can be captured on cali5068e632525, the host-side peer of veth1 for nginx1-5969d8fc89-9t99h.

Data Link Forwarding Diagram

The pod centos-59cdc5c9c4-89f8x (IP address 10.0.0.202) runs on the node ap-southeast-1.10.0.0.196.

The pod nginx-6f545cb57c-jmbrq (IP address 10.0.2.86) runs on the node ap-southeast-1.10.0.2.80.

The clusterIP address of the service is 192.168.204.233, and the external IP is 8.219.199.33.

The IP address of centos-59cdc5c9c4-89f8x is 10.0.0.202, and the PID of the container on the host is 2314075. The container network namespace has a default route pointing to the container's eth0 and two routes whose destination is the Service CIDR block and whose next hop is veth1.

The peer of the container's veth1 in the ECS OS is cali38ef34581a9.

In the same way, we find that the IP address of nginx-6f545cb57c-jmbrq is 10.0.2.86 and the PID of the container on the host is 1083623. The pod ENI is mounted directly into the network namespace of the pod.

Data Link Forwarding Diagram

Two pods run on the node ap-southeast-1.10.0.0.196: centos-59cdc5c9c4-89f8x (IP address 10.0.0.202) and nginx1-5969d8fc89-9t99h (IP address 10.0.0.203).

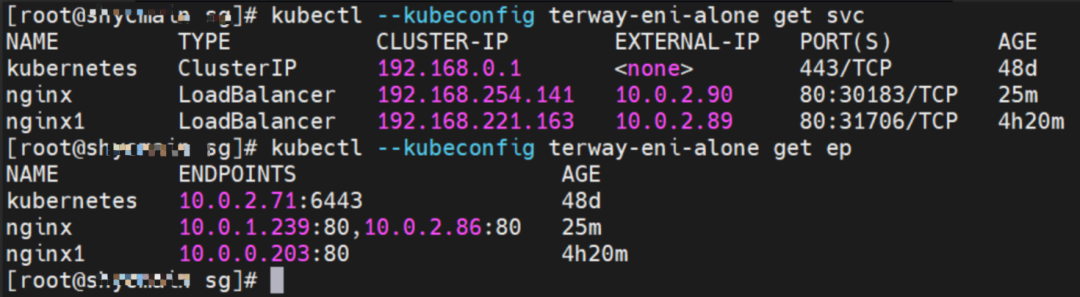

For the nginx1 Service, the ClusterIP is 192.168.221.163 and the external IP address is 10.0.2.89.

The IP address of centos-59cdc5c9c4-89f8x is 10.0.0.202, and the PID of the container on the host is 2314075. The container network namespace has a default route pointing to the container's eth0 and two routes whose destination is the Service ClusterIP CIDR block and whose next hop is veth1.

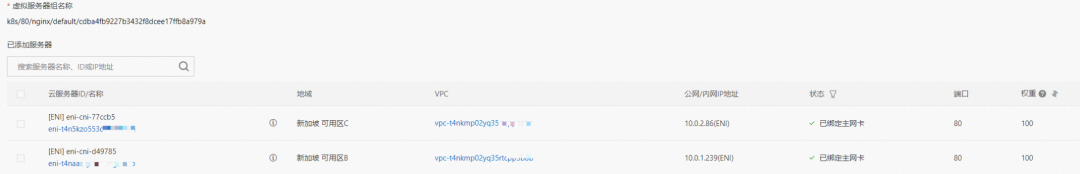

In the SLB console, you can see that the only backend in the virtual server group is eni-t4n6qvabpwi24w0dcy55, the ENI of nginx1-5969d8fc89-9t99h.

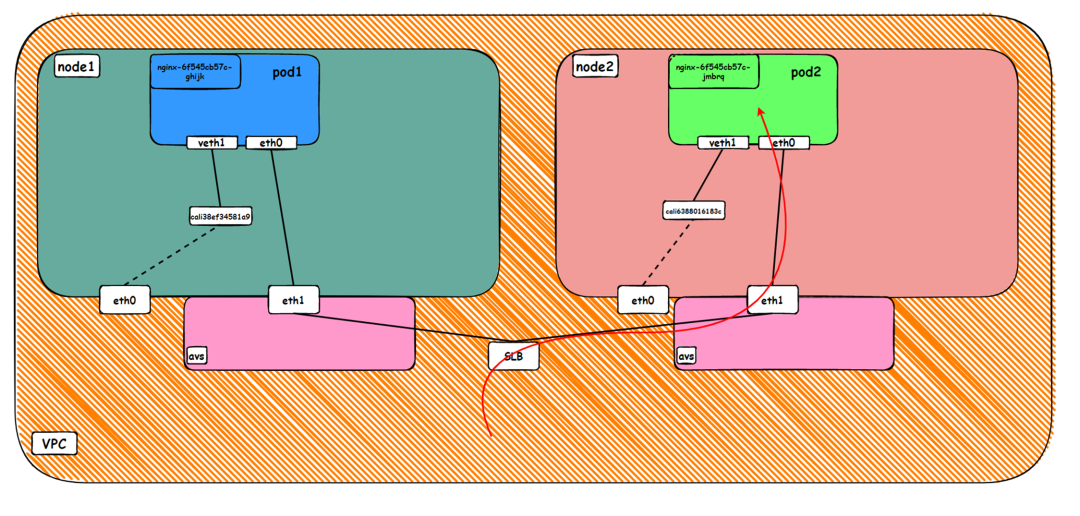

In summary, when a pod accesses the external IP address of a Service, the traffic follows the default route through eth0, leaves the ECS instance directly into AVS, reaches the SLB instance, and is then forwarded by the SLB instance to the backend ENI.

Data Link Forwarding Diagram

The pod centos-59cdc5c9c4-89f8x (IP address 10.0.0.202) runs on the node ap-southeast-1.10.0.0.196.

The pod nginx-6f545cb57c-jmbrq (IP address 10.0.2.86) runs on the node ap-southeast-1.10.0.2.80.

The clusterIP of the Service is 192.168.254.141, and the external IP is 10.0.2.90.

The IP address of centos-59cdc5c9c4-89f8x is 10.0.0.202, and the PID of the container on the host is 2314075. The container network namespace has a default route pointing to the container's eth0 and two routes whose destination is the Service ClusterIP CIDR block and whose next hop is veth1.

In the SLB console, you can see that the only backend in the virtual server group of lb-t4nih6p8w8b1dc7p587j9 is eni-t4n5kzo553dfak2sp68j, the ENI of nginx-6f545cb57c-jmbrq.

In summary, when a pod accesses the external IP address of a Service, the traffic follows the default route through eth0, leaves the ECS instance into AVS, reaches the SLB instance, and is then forwarded by the SLB instance to the backend ENI.

Data Link Forwarding Diagram

The pod nginx-6f545cb57c-jmbrq (IP address 10.0.2.86) runs on the node ap-southeast-1.10.0.2.80.

The pod nginx-6f545cb57c-25k9z (IP address 10.0.1.239) runs on the node ap-southeast-1.10.0.1.233.

The clusterIP address of the Service is 192.168.254.141 and the external IP address is 10.0.2.90.

In the SLB console, you can see that the backends in the virtual server group of lb-t4nih6p8w8b1dc7p587j9 are eni-t4n5kzo553dfak2sp68j and eni-t4naaozjxiehvmg2lwfo, the ENIs of the two backend nginx pods.

Seen from outside the cluster, the backend virtual server group of the SLB instance consists of the two ENIs that belong to the backend Pods of the Service, and their internal IP addresses are the Pod addresses. Traffic enters the protocol stack of the Pod directly, without passing through the OS of the ECS instance where the backend Pod runs.

Data Link Forwarding Diagram

This article focuses on the ACK data link forwarding paths for different SOP scenarios in Terway ENI mode. Driven by customers' demand for extreme performance, Terway ENI mode can be divided into eight SOP scenarios. For each of them, the article sorts out and summarizes the forwarding links, the technical implementation principles, and the cloud product configurations. This provides preliminary guidance for handling link jitter, choosing an optimal configuration, and understanding link principles under the Terway ENI architecture.

In Terway ENI mode, an ENI is mounted to the network namespace of a pod in PCI mode, which means that the ENI belongs exclusively to the pod it is allocated to. The number of pods that an ECS instance can host therefore depends on the number of ENIs the instance can mount, and this limit is related to the ECS instance type. For example, ecs.ebmg7.32xlarge (128C, 512 GB) supports a maximum of only 32 ENIs. This often wastes resources and reduces deployment density.

To solve this resource efficiency problem, ACK provides the Terway ENIIP mode, in which an ENI is shared by multiple pods. This increases the pod quota on a single ECS instance and improves deployment density, and it is currently the most widely used architecture for online clusters. The next part of this series analyzes the Terway ENIIP mode: Analysis of Alibaba Cloud Container Network Data Link (3): Terway ENIIP.

Analysis of Alibaba Cloud Container Network Data Link (1): Flannel

Analysis of Alibaba Cloud Container Network Data Link (3): Terway ENIIP
