By Garvin Li

News classification is a common scenario in text mining. At present, many media outlets and content producers classify news text by manual tagging, which consumes a lot of human resources. This article classifies news text with text mining algorithms, entirely by machine and without any manual tagging.

In this article, automatic news classification is implemented by applying the PLDA algorithm and then clustering the resulting topic weights. The workflow includes word segmentation, word type conversion, stop-word filtering, topic mining, and clustering. The experiment is run on the Alibaba Cloud Machine Learning Platform.

Note: The data in this article is fictitious and is only used for experimental purposes.

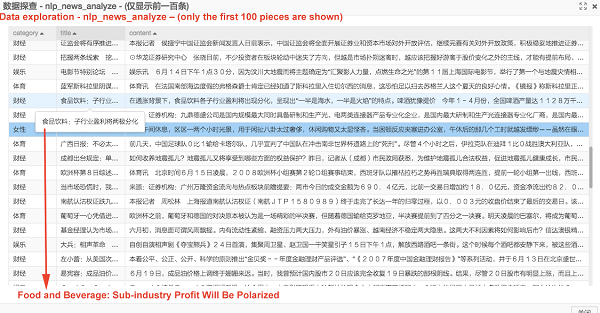

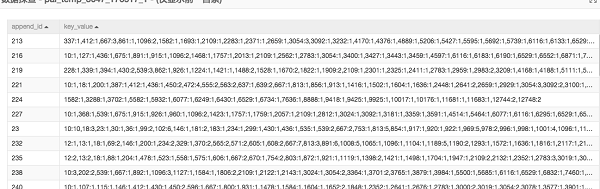

The data screenshot is shown below.

The detailed fields are as follows:

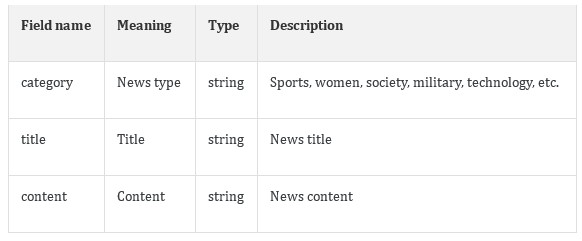

The experiment flow chart is as follows.

The experiment is roughly divided into the following 5 steps:

Each row in the data source of this experiment is a single news unit. An ID column must be added as a unique identifier for each news unit, so that the algorithms in the following steps can refer to each unit unambiguously.
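As a minimal sketch of this step outside the platform, the snippet below attaches a sequential ID to each news row. The column name `append_id` is taken from this article; the helper function, the `category` field, and the sample rows are hypothetical and only illustrate the idea.

```python
def append_id(rows, id_field="append_id"):
    """Return copies of the rows with a sequential unique ID added."""
    return [{id_field: i, **row} for i, row in enumerate(rows)]


# Hypothetical sample rows; the real data has its own fields.
news = [
    {"category": "sports", "content": "The team won the final match."},
    {"category": "finance", "content": "Stock prices rose sharply today."},
]

news_with_id = append_id(news)
for row in news_with_id:
    print(row["append_id"], row["content"])
```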

These two steps are the most common practices in the field of text mining.

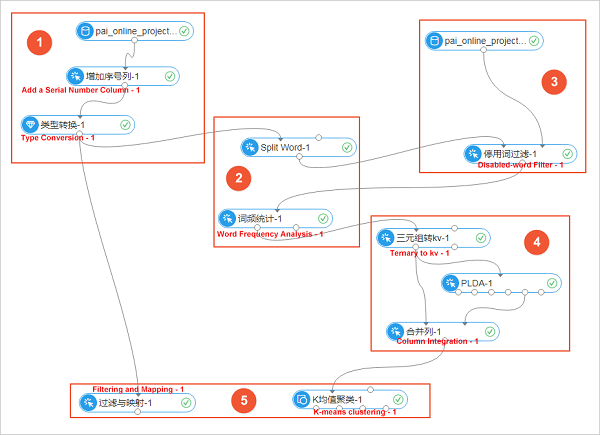

The word splitting component first segments the content field (news content) into words. After the filtered words are removed (generally punctuation and auxiliary words), the word frequency is analyzed. The results are shown in the following figure.

The stop-word filter component removes the words listed in the input stop-word lexicon, generally punctuation and auxiliary words that have little influence on the meaning of the article.
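The same three operations (splitting, filtering, and frequency counting) can be sketched in a few lines of plain Python. This is not the platform component: the regular-expression tokenizer assumes English text, whereas text in other languages may need a dedicated segmenter, and the small lexicon here is a hypothetical stand-in for a real filter list.

```python
import re
from collections import Counter

# Hypothetical filter lexicon; a real one would be much larger.
STOP_WORDS = {"the", "a", "of", "and", "in", "to", "is"}


def split_words(text):
    """Lowercase the text and split it into alphanumeric tokens (drops punctuation)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def filter_stop_words(tokens, stop_words=STOP_WORDS):
    """Remove tokens that appear in the filter lexicon."""
    return [t for t in tokens if t not in stop_words]


content = "The price of the stock rose, and the market cheered the stock."
tokens = filter_stop_words(split_words(content))
freq = Counter(tokens)  # word -> number of occurrences
print(freq.most_common(3))
```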

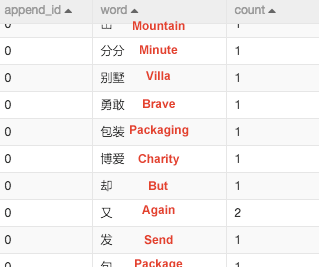

Before the PLDA text mining component can be used, the text must be converted to triple format (words mapped to numbers), as shown in the following figure.

append_id is the unique identifier for each news unit.

In the key_value field, the number before the colon is the numeric identifier assigned to a word, and the number after the colon is the frequency with which that word appears.
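To make the format concrete, here is a small sketch that builds such `key_value` strings from tokenized articles. The field names `append_id` and `key_value` come from this article; the comma used to separate pairs, the order in which word IDs are assigned, and the sample tokens are assumptions made for illustration.

```python
from collections import Counter


def build_vocabulary(docs):
    """Assign each distinct word an integer ID in order of first appearance."""
    vocab = {}
    for tokens in docs.values():
        for token in tokens:
            vocab.setdefault(token, len(vocab))
    return vocab


def to_key_value(tokens, vocab):
    """Encode one article as 'word_id:count' pairs (comma separator is an assumption)."""
    counts = Counter(vocab[t] for t in tokens)
    return ",".join(f"{word_id}:{n}" for word_id, n in sorted(counts.items()))


# Hypothetical tokenized articles keyed by append_id.
docs = {0: ["stock", "price", "stock"], 1: ["match", "team", "match", "price"]}
vocab = build_vocabulary(docs)
rows = [
    {"append_id": doc_id, "key_value": to_key_value(tokens, vocab)}
    for doc_id, tokens in docs.items()
]
for row in rows:
    print(row)
```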

Use the PLDA algorithm for the data.

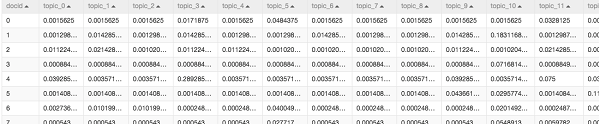

PLDA is a topic model: it identifies the words that represent the topic of each article. This experiment sets the number of topics to 50. The PLDA component has 6 output ports, and the 5th output port gives the probability of each topic for each article, as shown in the following figure.

The steps above represent each article as a vector over the topic dimensions.
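For readers who want to see where per-article topic probabilities come from, the sketch below runs plain LDA with collapsed Gibbs sampling on a toy corpus. It is not the PLDA component used in this experiment: the function name, the hyperparameters `alpha` and `beta`, the iteration count, and the toy data (2 topics instead of 50) are all assumptions chosen to keep the example short.

```python
import random


def lda_doc_topics(docs, num_topics, vocab_size, iters=200,
                   alpha=0.1, beta=0.01, seed=0):
    """Plain LDA via collapsed Gibbs sampling; returns per-document topic probabilities."""
    rng = random.Random(seed)
    doc_topic = [[0] * num_topics for _ in docs]          # words per (doc, topic)
    topic_word = [[0] * vocab_size for _ in range(num_topics)]  # words per (topic, word)
    topic_total = [0] * num_topics                         # words per topic
    assignments = []

    def update(d, w, z, delta):
        doc_topic[d][z] += delta
        topic_word[z][w] += delta
        topic_total[z] += delta

    # Start from a random topic assignment for every word occurrence.
    for d, words in enumerate(docs):
        zs = [rng.randrange(num_topics) for _ in words]
        for w, z in zip(words, zs):
            update(d, w, z, 1)
        assignments.append(zs)

    for _ in range(iters):
        for d, words in enumerate(docs):
            for i, w in enumerate(words):
                update(d, w, assignments[d][i], -1)  # remove current assignment
                weights = [
                    (doc_topic[d][k] + alpha)
                    * (topic_word[k][w] + beta)
                    / (topic_total[k] + vocab_size * beta)
                    for k in range(num_topics)
                ]
                z = rng.choices(range(num_topics), weights=weights)[0]
                assignments[d][i] = z
                update(d, w, z, 1)

    # Smoothed topic proportions: one probability vector per document.
    return [
        [(doc_topic[d][k] + alpha) / (len(words) + num_topics * alpha)
         for k in range(num_topics)]
        for d, words in enumerate(docs)
    ]


# Toy corpus of word IDs: two articles use words 0-1, two use words 2-3.
docs = [[0, 1, 0, 1, 0], [1, 0, 0, 1], [2, 3, 3, 2], [3, 2, 2, 3, 3]]
doc_topic_probs = lda_doc_topics(docs, num_topics=2, vocab_size=4)
for probs in doc_topic_probs:
    print([round(p, 3) for p in probs])
```

Each printed row is a probability vector that sums to 1, which is the kind of per-article topic vector the next step clusters.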

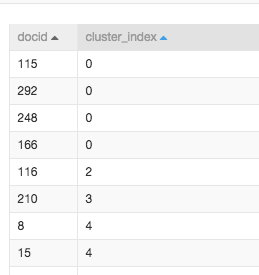

Articles can then be classified by clustering these vectors according to the distances between them. The classification results of the K-means clustering component are shown in the figure below.
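The clustering idea can be illustrated with a bare-bones K-means over topic vectors. This is a sketch under simple assumptions (Euclidean distance, random initial centers, a fixed number of iterations), not the platform's K-means component, and the four topic vectors are made up.

```python
import math
import random


def kmeans(vectors, k, iters=50, seed=0):
    """Cluster vectors with basic K-means and return one label per vector."""
    rng = random.Random(seed)
    centers = [list(v) for v in rng.sample(vectors, k)]
    labels = [0] * len(vectors)
    for _ in range(iters):
        # Assignment step: each vector joins its nearest center.
        labels = [
            min(range(k), key=lambda c: math.dist(v, centers[c]))
            for v in vectors
        ]
        # Update step: move each center to the mean of its members.
        for c in range(k):
            members = [v for v, label in zip(vectors, labels) if label == c]
            if members:
                centers[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels


# Hypothetical topic-probability vectors for four articles (3 topics each).
topic_vectors = [
    [0.90, 0.05, 0.05],
    [0.85, 0.10, 0.05],
    [0.05, 0.90, 0.05],
    [0.10, 0.85, 0.05],
]
labels = kmeans(topic_vectors, k=2)
print(labels)
```

Articles with similar topic weights end up with the same label; the label numbers themselves are arbitrary.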

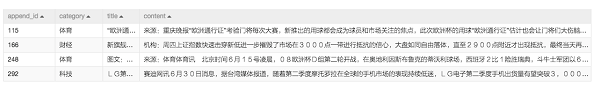

The 4 articles 115, 292, 248, and 166 are queried through the filtering and mapping component. The results are shown in the following figure.

The experimental result is not perfect. In the figure above, most of the articles are classified correctly, except that one financial news unit, one technology news unit, and two sports news units are grouped together.

The main reasons are as follows:

To learn more about Alibaba Cloud Machine Learning Platform for Artificial Intelligence (PAI), visit www.alibabacloud.com/product/machine-learning
