By Yangshao

As cloud-native technologies gain popularity, more business applications are moving to cloud-native architectures. Kubernetes, the container management platform, offers immutable infrastructure, elastic scaling, and high extensibility, which help businesses complete their digital transformation quickly. As cloud-native technologies have evolved, ingress traffic management has become universal and standardized. The Ingress resource defined by Kubernetes manages external access to services inside a cluster. Standardizing the ingress gateway decouples ingress traffic management from the gateway implementation. This has fostered a wide range of Ingress controllers and frees developers from vendor lock-in: you can switch between Ingress controllers as your business scenarios require.

Alibaba Cloud Container Service for Kubernetes (ACK) lets you manage containerized applications on the cloud conveniently and efficiently. Built on MSE cloud-native gateways, MSE Ingress offers more powerful traffic management. It is compatible with more than 50 NGINX Ingress annotations and is suitable for more than 90% of NGINX Ingress usage scenarios. MSE Ingress supports canary releases of multiple service versions and provides flexible service governance and comprehensive security protection. It can also meet the traffic governance requirements of scenarios that involve large numbers of cloud-native distributed applications. This article describes how to use ACK and MSE Ingress to manage ingress traffic in clusters more easily and with richer capabilities.

Business development requires continuous iteration of application systems. Frequent application changes and releases are unavoidable, but we can improve stability and availability during upgrades. A common approach is canary release, which routes a small portion of traffic to the new version of a service, so only a few machines are needed to deploy the new version at first. After you verify that the new version behaves as expected, you can gradually shift traffic from the old version to the new one. During this period, you can scale out the new version and scale in the old version according to the current traffic split between them, which maximizes the utilization of underlying resources.
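As a rough illustration of the gradual migration described above, the following Python sketch steps a canary weight through a hypothetical rollout schedule and sizes each version's replicas in proportion to its share of traffic. The schedule, the replica budget, and the `split_replicas` function are assumptions made for illustration; they are not MSE Ingress or ACK APIs.

```python
import math
from typing import Tuple


def split_replicas(total_replicas: int, canary_weight: int) -> Tuple[int, int]:
    """Split a replica budget between stable and canary by traffic weight.

    canary_weight is the percentage (0-100) of traffic sent to the new
    version. The canary count is rounded up, so any nonzero weight gets
    at least one canary replica.
    """
    if total_replicas < 1:
        raise ValueError("total_replicas must be at least 1")
    if not 0 <= canary_weight <= 100:
        raise ValueError("canary_weight must be between 0 and 100")
    canary = math.ceil(total_replicas * canary_weight / 100)
    return total_replicas - canary, canary


# Hypothetical rollout schedule: percentage of traffic sent to the new version.
ROLLOUT_STEPS = [5, 20, 50, 100]

if __name__ == "__main__":
    for weight in ROLLOUT_STEPS:
        stable, canary = split_replicas(10, weight)
        print(f"weight={weight:3d}% stable={stable:2d} canary={canary:2d}")
```

In a real cluster the weight would be changed in the Ingress configuration and the replica counts in the Deployments of each version; the sketch only shows the proportional arithmetic that links the two.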

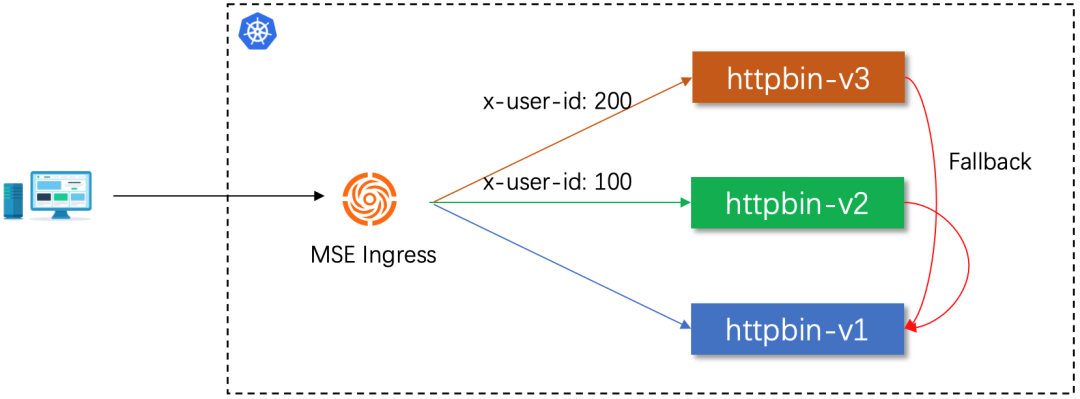

MSE Ingress allows multiple canary versions of a service to coexist. This lets you develop several features of the service at the same time and verify each canary independently. MSE Ingress supports multiple ways to identify canary traffic: you can define canary matching policies at the route level based on HTTP headers, cookies, and weights.
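The following Python sketch illustrates how a gateway might choose between the canary and stable versions for one route. It assumes the precedence that NGINX Ingress documents for its canary annotations (header first, then cookie, then weight) and the NGINX Ingress convention that the values "always" and "never" force or block the canary; whether MSE Ingress evaluates rules in exactly this way is an assumption. The `CanaryRule` type, its fields, and `choose_version` are hypothetical names chosen for illustration.

```python
import random
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass
class CanaryRule:
    # Hypothetical rule model; field names are illustrative only.
    header: Optional[str] = None        # header that can select the canary
    header_value: Optional[str] = None  # exact header value that selects it
    cookie: Optional[str] = None        # cookie that can select the canary
    weight: int = 0                     # percentage (0-100) of remaining traffic


def choose_version(rule: CanaryRule, headers: Mapping[str, str],
                   cookies: Mapping[str, str], rng: random.Random) -> str:
    """Return "canary" or "stable" using header > cookie > weight precedence.

    Header names are assumed to be normalized (for example, lowercased)
    before this function is called. Values that do not match a rule are
    ignored, and evaluation falls through to the next rule.
    """
    if rule.header is not None and rule.header in headers:
        value = headers[rule.header]
        if rule.header_value is not None:
            if value == rule.header_value:
                return "canary"
        elif value == "always":
            return "canary"
        elif value == "never":
            return "stable"
    if rule.cookie is not None and rule.cookie in cookies:
        value = cookies[rule.cookie]
        if value == "always":
            return "canary"
        if value == "never":
            return "stable"
    # No explicit match: send `weight` percent of requests to the canary.
    return "canary" if rng.randrange(100) < rule.weight else "stable"
```

Passing in a seeded `random.Random` keeps the weighted branch reproducible in tests; a real gateway would use its own source of randomness.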

By default, MSE Ingress provides a fallback for the canary version of a service: if the canary version does not exist or is unavailable, traffic is automatically routed to the official version of the service. This keeps business applications highly available. You can also use the default-backend annotation provided by MSE Ingress to specify which service receives traffic for disaster recovery.
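Below is a minimal sketch of the fallback behavior described above, assuming a simple in-memory view of how many healthy endpoints each backend has. The `Backend` type and `resolve_backend` function are hypothetical, and modeling the default-backend annotation as a last-resort disaster-recovery target when the selected version cannot serve traffic is an assumption made for illustration, not a description of MSE Ingress internals.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Backend:
    # Hypothetical model of a backend service and its healthy endpoint count.
    name: str
    healthy_endpoints: int

    @property
    def available(self) -> bool:
        return self.healthy_endpoints > 0


def resolve_backend(selected: str, canary: Optional[Backend], stable: Backend,
                    default_backend: Optional[Backend] = None) -> Optional[str]:
    """Pick the backend that actually serves a request.

    `selected` is "canary" or "stable", for example the outcome of canary
    matching. A missing or unavailable canary falls back to the stable
    version; if the stable version is also unavailable, an optional
    default backend (assumed to model a disaster-recovery service) is
    tried last. Returns None when nothing can serve the request.
    """
    if selected == "canary" and canary is not None and canary.available:
        return canary.name
    if stable.available:
        return stable.name
    if default_backend is not None and default_backend.available:
        return default_backend.name
    return None
```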

In a distributed microservices architecture, the dependencies between services are complex. A single business function relies on capabilities from multiple microservices, and one business request must pass through several microservices before it is fully processed.

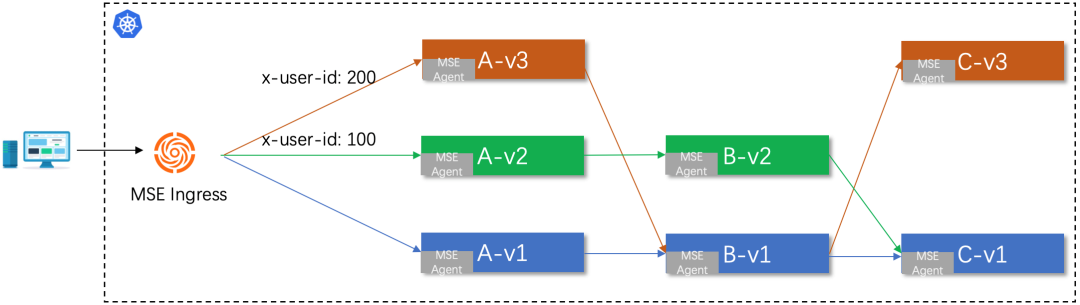

In this scenario, releasing a new feature may require releasing multiple services at the same time, so verifying the feature means running canaries of several services simultaneously. Environment isolation must extend from the gateway through all backend services so that the canary versions of different services can be verified in phases. This full-link canary scenario is unique to the microservices model.

Currently, end-to-end canary solutions rely on either physical or logical environment isolation. Physical isolation builds true traffic isolation by adding machines, which incurs both labor and machine costs, so the industry more commonly uses flexible logical environment management. Although the official and canary versions of a service are deployed in the same environment, the canary route-matching policy precisely steers canary traffic to the corresponding canary version of each service first. Only when the target service has no canary version does the traffic fall back to the official version. Overall, the canary verification traffic for a new feature flows only through the canary versions of the services that belong to the release; for services unaffected by the new feature, canary traffic passes through their official versions as usual. This precise traffic control greatly eases concurrent development and verification of multiple versions in the microservices model and reduces the machine cost of building test environments.
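The following Python sketch models the logical isolation described above: a canary tag attached to the request at the gateway travels along the whole call chain, and at each hop the request goes to the canary version carrying that tag if one exists, otherwise to the official version. The service names, the `gray` tag, and the `DEPLOYED_TAGS` table are hypothetical; in a real deployment the tag would usually be propagated in a request header and the per-hop routing would be performed by MSE governance rather than by application code.

```python
from typing import Dict, List, Optional, Set

# Hypothetical deployment: the tagged canary versions that each service runs
# alongside its official ("base") version.
DEPLOYED_TAGS: Dict[str, Set[str]] = {
    "service-a": {"gray"},
    "service-b": set(),
    "service-c": {"gray"},
}


def route_chain(chain: List[str], tag: Optional[str]) -> List[str]:
    """Return the version chosen at each hop for a request carrying `tag`.

    The tag is propagated unchanged along the whole chain. A hop uses the
    canary version with that tag when it is deployed and falls back to
    the official "base" version otherwise.
    """
    hops = []
    for service in chain:
        if tag is not None and tag in DEPLOYED_TAGS.get(service, set()):
            hops.append(f"{service}:{tag}")
        else:
            hops.append(f"{service}:base")
    return hops
```

With this table, a `gray` request through service-a, service-b, and service-c visits the canaries of A and C but the official version of B, which is exactly the "only services that changed" behavior the paragraph describes.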

ACK users can combine MSE governance with MSE Ingress to implement full-link canary release easily and quickly, without changing a single line of code. This fine-grained traffic control makes microservice governance easier.

For a concrete example, see the document Configure full-link canary release based on MSE Ingress.

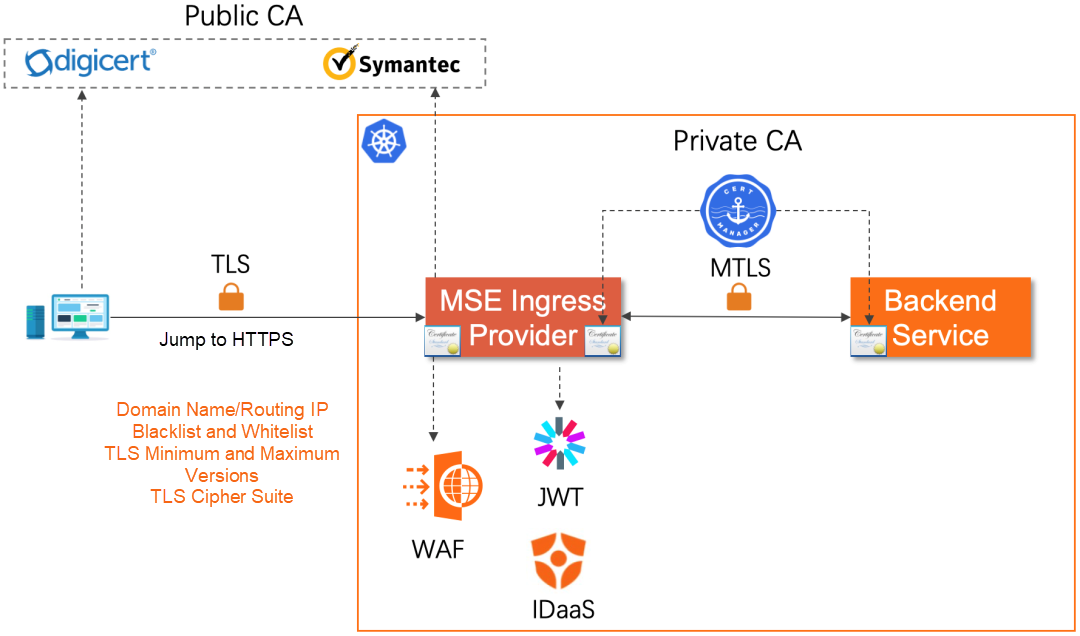

Security is always the top concern for business applications and accompanies the entire lifecycle of business development. Moreover, the external Internet environment is growing more complex, internal business architectures are growing larger, and deployments span public, private, and hybrid clouds. As a result, security risks are becoming more severe.

As an ingress gateway, MSE Ingress has invested in security from the very beginning and provides protection across many dimensions, mainly in the following aspects:

Observability is not a new term. It comes from control theory, where it describes the degree to which the internal state of a system can be inferred from its external outputs. Over decades of IT industry development, the practices of monitoring, alerting, and troubleshooting IT systems have gradually matured, and the industry has abstracted them into a set of observability engineering practices. The term's recent popularity is largely due to the rise of technologies such as cloud-native, microservices, and DevOps, which pose greater challenges to observability.

Because the gateway carries business traffic, its observability is closely tied to the stability of the overall business. At the same time, the gateway serves many user scenarios and functions in a complex network environment, which makes building gateway observability difficult. The main challenges are:

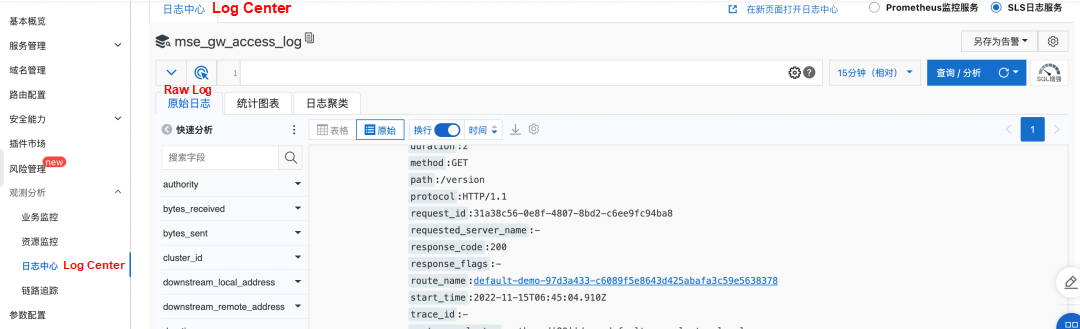

To address these pain points, MSE Ingress helps users build a comprehensive observability system across three major categories: logs, Tracing Analysis, and metric monitoring.

For logs, MSE Ingress integrates seamlessly with Alibaba Cloud Simple Log Service (SLS), so users can view all access requests to the cluster ingress in real time.

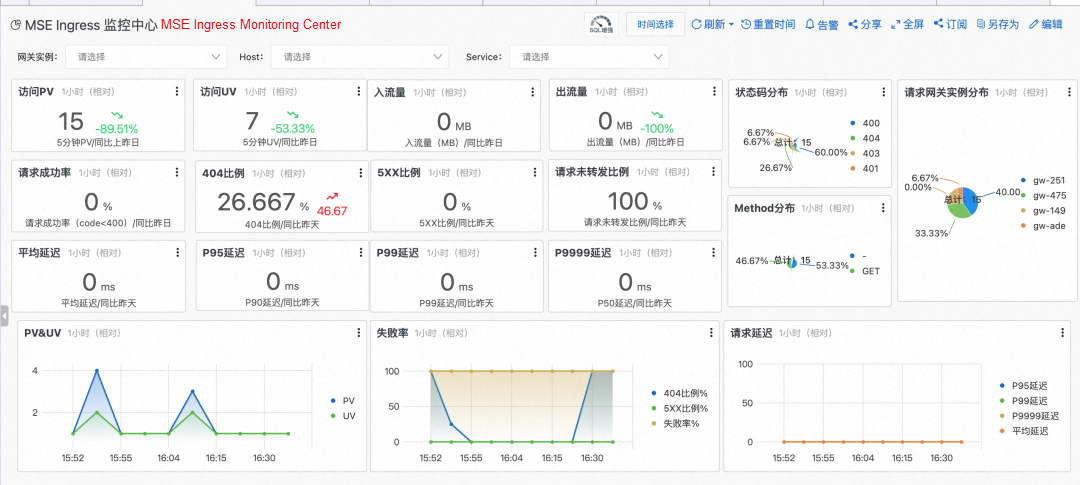

For metrics, MSE Ingress provides a dashboard with a wide range of metrics and integrates with Prometheus and SLS. You can run ETL (extract, transform, load) jobs on gateway access logs to obtain more precise data, and use Prometheus to monitor the gateway in real time.
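To give a sense of what an ETL step over gateway access logs can produce, the following Python sketch aggregates access-log records into a request count, a server-error rate, and a P99 latency. The record fields (`status`, `latency_ms`), the choice to count only 5xx responses as errors, and the nearest-rank percentile method are assumptions for illustration; the actual SLS log schema and dashboard metrics may differ.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class AccessLog:
    # Hypothetical subset of a gateway access-log record.
    status: int
    latency_ms: float


def summarize(records: List[AccessLog]) -> Dict[str, float]:
    """Aggregate access-log records into basic gateway metrics."""
    if not records:
        return {"requests": 0, "error_rate": 0.0, "p99_ms": 0.0}
    # Count server-side errors (5xx) only.
    errors = sum(1 for r in records if r.status >= 500)
    latencies = sorted(r.latency_ms for r in records)
    # Nearest-rank percentile: the smallest latency covering 99% of requests.
    rank = math.ceil(0.99 * len(latencies))
    return {
        "requests": len(records),
        "error_rate": errors / len(records),
        "p99_ms": latencies[rank - 1],
    }
```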

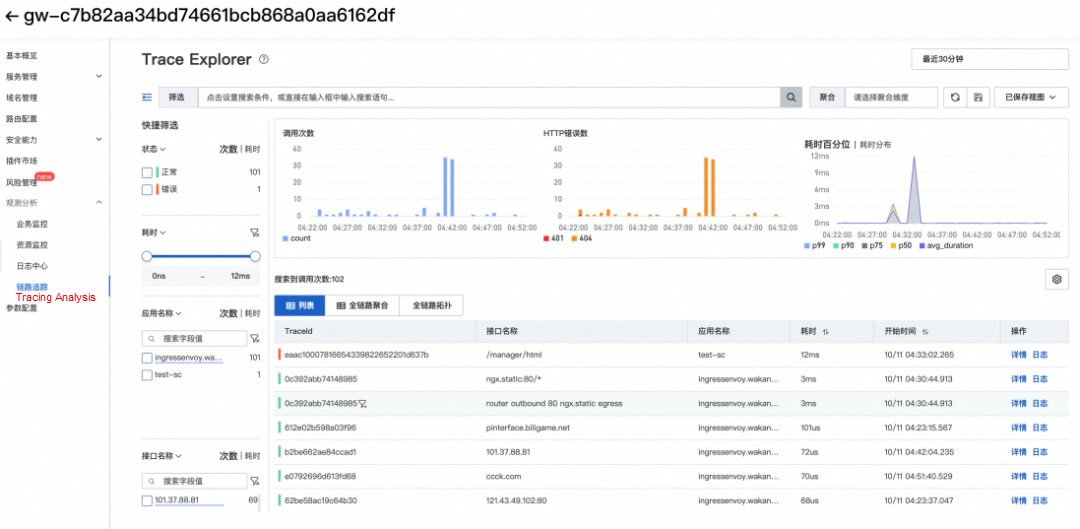

For Tracing Analysis, MSE Ingress helps users visualize call chains in microservice scenarios by integrating with the out-of-the-box ARMS distributed Tracing Analysis service. It can also deliver trace data to a user-built SkyWalking deployment to avoid lock-in to cloud products.

MSE Ingress is deeply integrated with ACK and ASK, so users can easily enable and use it.

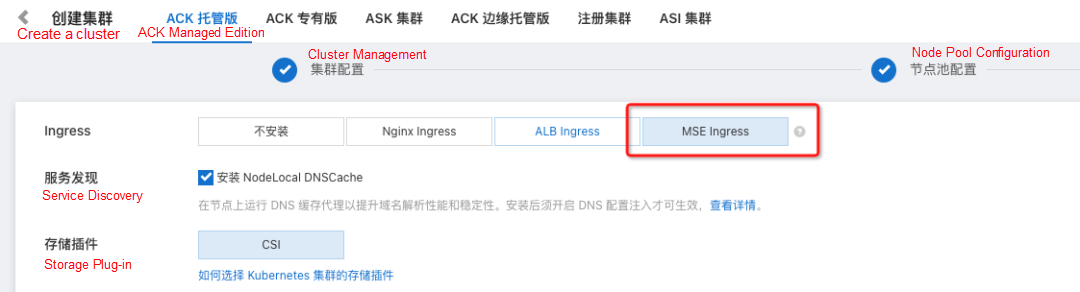

When you create an ACK or ASK cluster, you can select MSE Ingress in the Ingress section to install it.

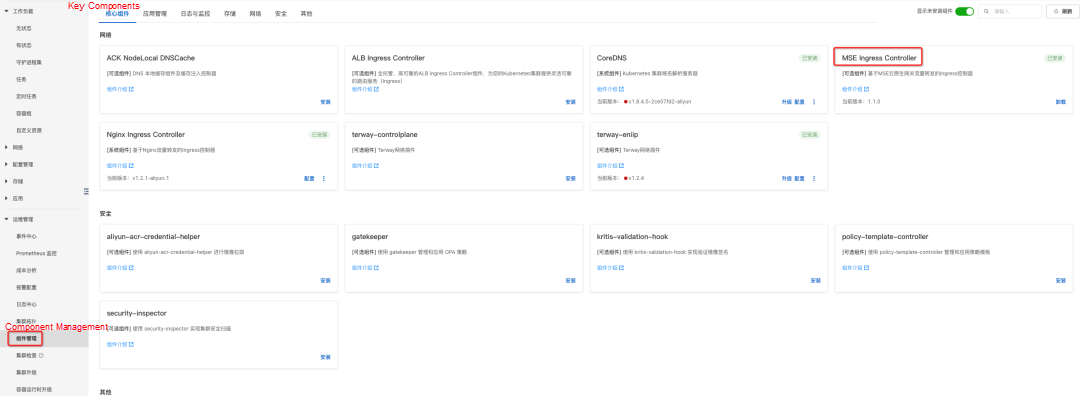

For an existing cluster, go to Cluster → Operations Management → Component Management, find MSE Ingress Controller in the network group, and install it.

After the MSE Ingress Controller is installed, you can refer to the following documentation to get started with MSE Ingress.
