By Fan Yang (Yangshao)

In a Kubernetes cluster, the network is typically isolated from the outside: clients outside the cluster cannot directly access services inside it. This is a problem of connecting different network domains. A common approach to cross-domain access is to introduce an ingress for the target cluster. All external requests to the cluster go through this ingress, which then forwards them to the target nodes.

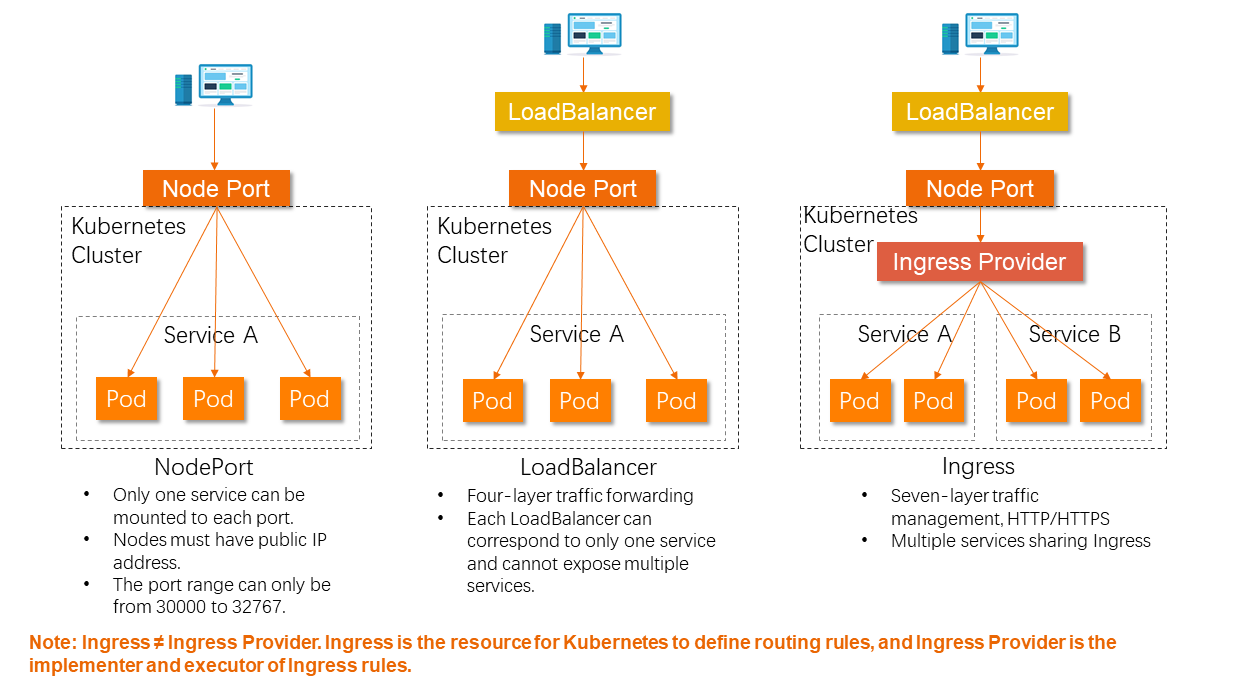

Similarly, the Kubernetes community solves the problem of exposing internal services to the outside by adding an ingress. Kubernetes has always defined standards for recurring classes of problems, and external traffic management is no exception. Kubernetes standardizes how traffic enters the cluster and provides three solutions: NodePort, LoadBalancer, and Ingress. The three solutions are compared in the following figure:

The comparison shows that Ingress is the best fit for business workloads. On top of the basic entry point, Ingress supports more complex routing (for example, by host and path), which is why most users choose it.

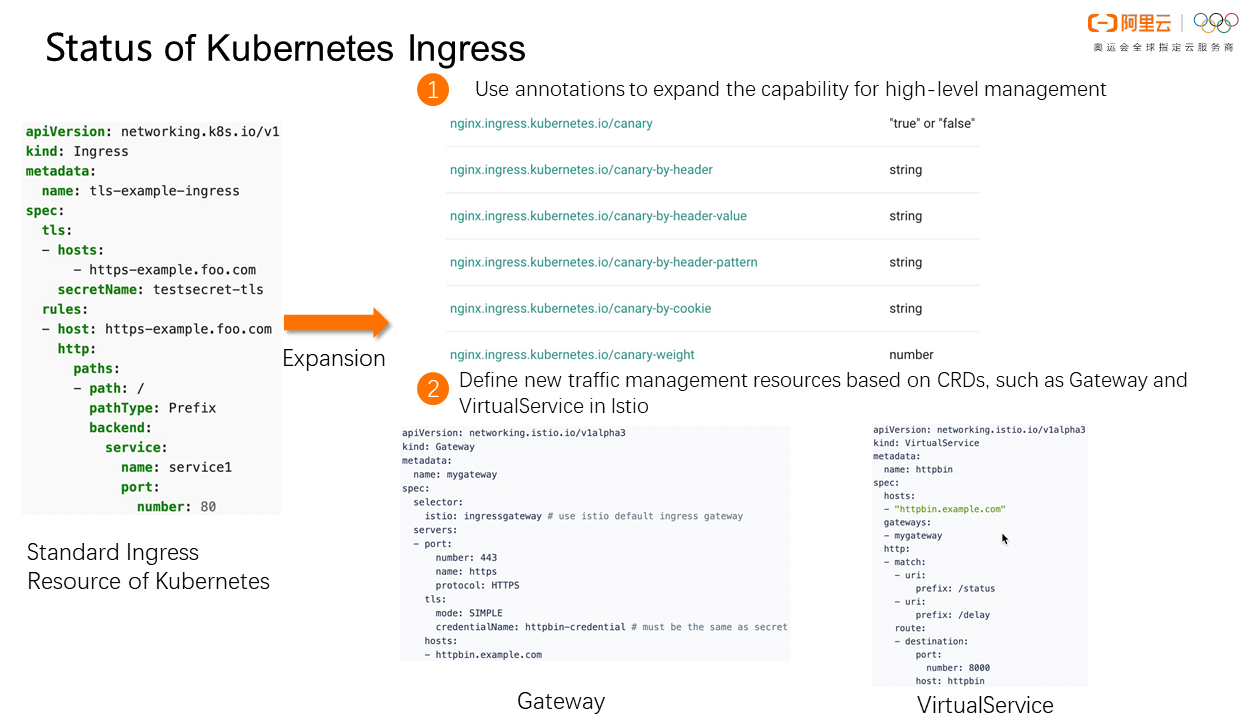
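For reference, the first two solutions are configured on a Service object itself. The following is a minimal sketch, assuming a backend whose pods are labeled `app: go-httpbin-v1` and listen on port 8090 (the same labels used in the hands-on section later); the Service names and the node port 30080 are hypothetical.

```yaml
# NodePort: exposes the Service on the same fixed port of every node.
apiVersion: v1
kind: Service
metadata:
  name: httpbin-nodeport        # hypothetical name
spec:
  type: NodePort
  selector:
    app: go-httpbin-v1
  ports:
    - port: 80
      targetPort: 8090
      nodePort: 30080           # must fall in the node port range (30000-32767 by default)
---
# LoadBalancer: asks the cloud provider to provision an external load balancer.
apiVersion: v1
kind: Service
metadata:
  name: httpbin-lb              # hypothetical name
spec:
  type: LoadBalancer
  selector:
    app: go-httpbin-v1
  ports:
    - port: 80
      targetPort: 8090
```

Each of these Services exposes a single backend at Layer 4, whereas one Ingress can route many hosts and paths to many Services through a shared entry point.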

Kubernetes provides a standardized, unified abstraction for managing ingress traffic, but it only covers basic HTTP and HTTPS forwarding and cannot handle the large-scale, complex traffic management that cloud-native distributed applications require. For example, standard Ingress does not support common traffic policies such as traffic splitting, cross-origin requests (CORS), rewriting, and redirection. There are two mainstream solutions to this problem. One is to define key-value pairs in Ingress annotations to extend its capabilities. The other is to use Kubernetes CRDs to define new ingress traffic rules. The following figure shows the two solutions.
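As an illustration of the annotation approach, the sketch below uses annotations documented by the community ingress-nginx controller to enable CORS on an otherwise standard Ingress. The host, the origin, the Service name, and the `nginx` IngressClass are assumptions; other Ingress Providers may use different annotation keys or not support them at all.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: cors-example                        # hypothetical name
  annotations:
    # Extension keys read by ingress-nginx; the core Ingress spec has no field for CORS.
    nginx.ingress.kubernetes.io/enable-cors: "true"
    nginx.ingress.kubernetes.io/cors-allow-origin: "https://www.example.com"
spec:
  ingressClassName: nginx
  rules:
    - host: api.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: api-service           # hypothetical Service
                port:
                  number: 80
```

A CRD-based provider would express the same policy as fields of its own custom resource instead of as annotation strings.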

This section will explain the best practices of Kubernetes Ingress from the following five aspects.

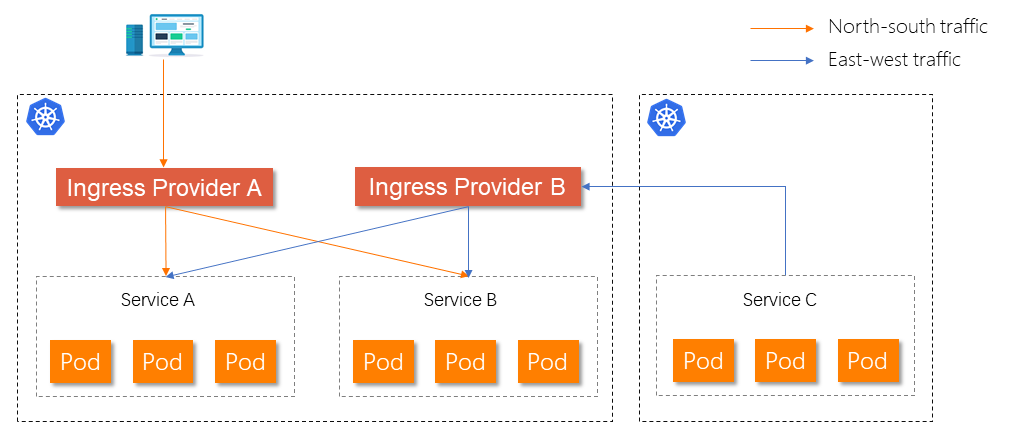

In real business scenarios, backend services in a cluster need to serve external users or other internal clusters. Traffic from outside to inside is generally called north-south traffic, and traffic between internal services is called east-west traffic. To save machine costs and reduce O&M pressure, some users share a single Ingress Provider for both north-south and east-west traffic. This creates new problems: external and internal traffic can no longer be managed at a fine-grained level, and the impact of a fault grows. The best practice is to deploy independent Ingress Providers for the external and internal network scenarios, size the replicas and hardware resources of each according to its actual request volume, and keep resource utilization high while reducing the blast radius as much as possible.

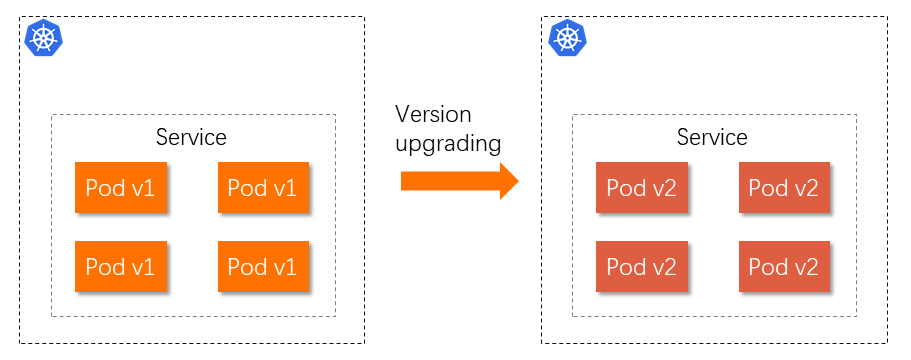
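One way to express this separation declaratively is to give each Ingress Provider its own IngressClass and let every Ingress choose one through `ingressClassName`. The sketch below assumes two ingress-nginx deployments, with the internal one started with a matching `--controller-class` value; the class names, the controller strings, the host, and the Service are hypothetical.

```yaml
# Public entry point: serves north-south traffic from the Internet.
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: nginx-public
spec:
  controller: k8s.io/ingress-nginx
---
# Internal entry point: a separate controller deployment for internal callers.
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: nginx-internal
spec:
  controller: k8s.io/internal-ingress-nginx   # must match the internal controller's --controller-class
---
# An internal-only API binds to the internal class, so the public provider never serves it.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: internal-api
spec:
  ingressClassName: nginx-internal
  rules:
    - host: api.internal.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: internal-api
                port:
                  number: 80
```

Because each class is served by its own controller pods, their replicas and resources can be scaled independently, and a fault in one provider does not take down the other.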

As a business iterates and evolves, its application services are upgraded frequently. The most primitive (and simplest) approach is to stop the old version of an online service and then deploy and start the new version. However, switching all users to the new version at once causes two serious problems. First, between stopping the old version and starting the new one, the service is unavailable, and the request success rate drops to zero. Second, if the new version contains serious bugs, rolling back to the old version causes another short period of unavailability. Both hurt user experience and introduce instability into the overall business system.

How can we support fast business iteration while keeping business applications highly available to external users during upgrades?

The following core issues need to be addressed:

To address the first two issues, the common industry approach is grayscale release (also known as canary release). A canary release routes a small amount of traffic to the new version of a service, so only a few machines are needed to deploy it. After you confirm that the new version works as expected, you gradually shift traffic from the old version to the new one. During this process, you can scale up the new version and scale down the old version to make the most of the underlying resources.

In the Status of Kubernetes Ingress section, we mentioned two popular ways to extend Ingress. Of these, adding key-value pairs to annotations solves the third issue. We can define the policy configuration required for grayscale release in annotations (such as the Header and Cookie that identify grayscale traffic), together with how their values are matched (such as exact or regex matching). The Ingress Provider then recognizes the new annotations and translates them into its own routing rules. The key requirement is that the Ingress Provider you choose supports a wide variety of routing methods.

When verifying with a small amount of traffic that the new version meets expectations, we can select online traffic with certain characteristics to serve as that small traffic. The Header and Cookie of a request can serve as such characteristics, so we can split online traffic for the same API based on a Header or Cookie. If real traffic does not differ in any Header, we can manually generate traffic carrying a specific Header to verify the new version in the online environment. We can also verify the new version in batches according to client importance. For example, requests from regular users are routed to the new version first; after verification is complete, VIP users are gradually shifted to it. User and client information is generally stored in Cookies.

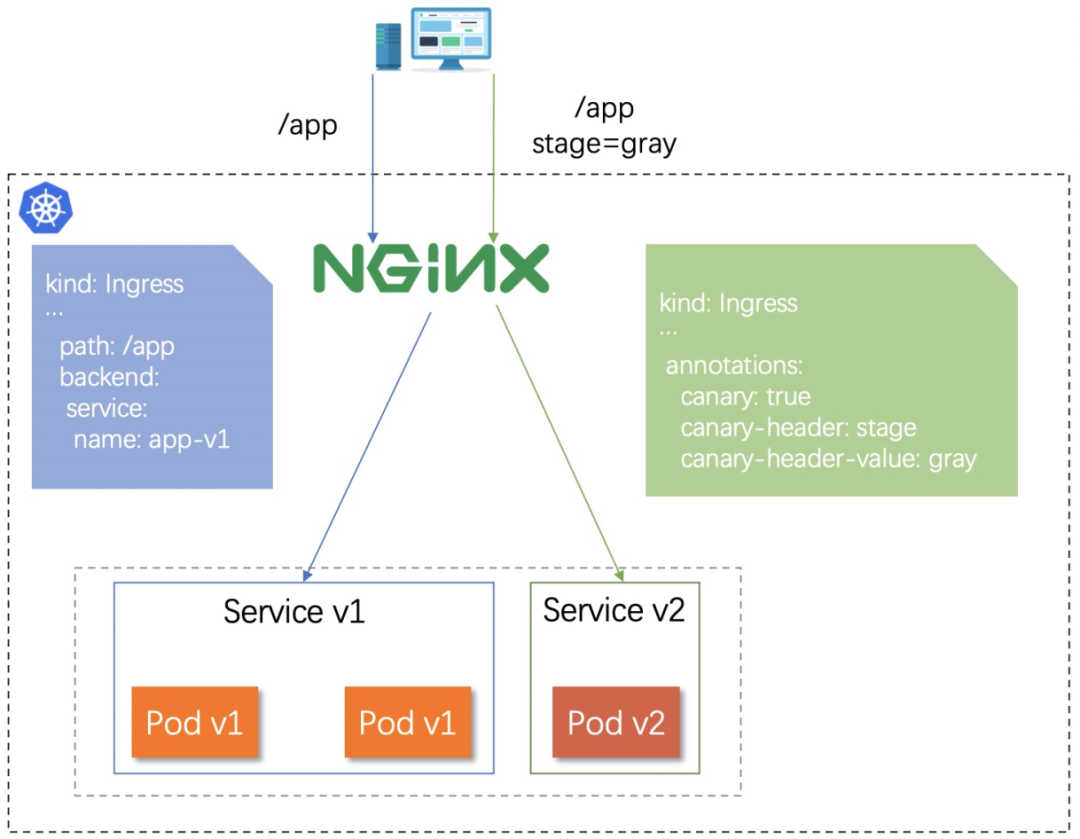

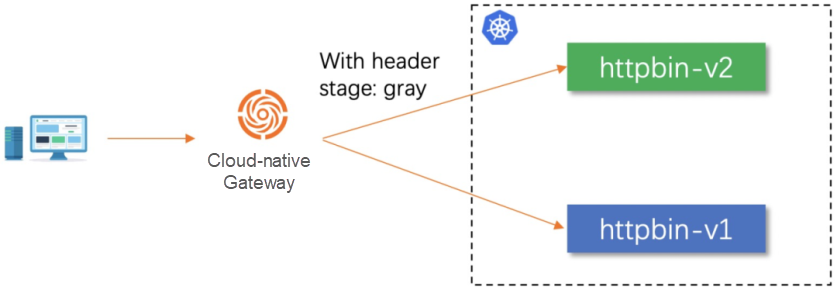

Let's take NGINX Ingress as an example. It uses annotations to support traffic splitting on Ingress. The following diagram shows how to use Header-based grayscale release:

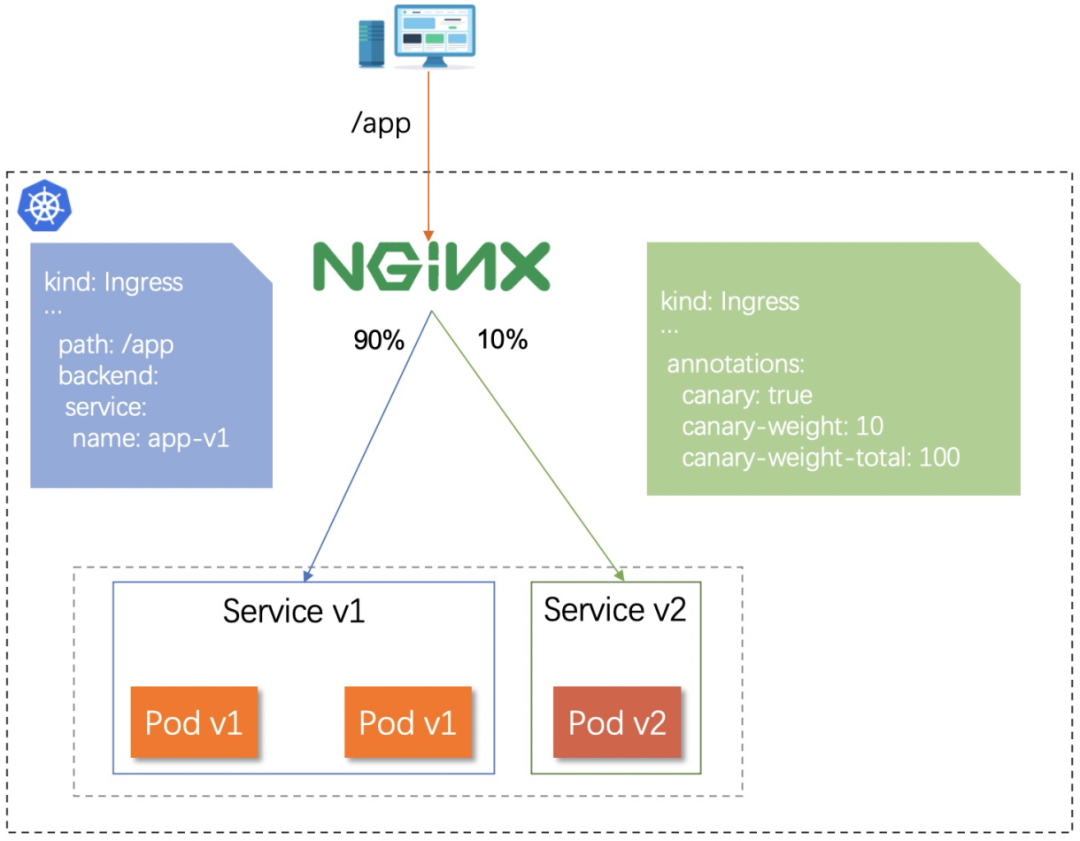
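Cookie-based splitting, used above to batch users by importance, works the same way in NGINX Ingress. The sketch below assumes a cookie named `user_type` (hypothetical), an IngressClass named `nginx`, and an existing non-canary Ingress serving the same host and path (like the stable `httpbin` Ingress in the hands-on section later). In ingress-nginx, a request goes to the canary when the cookie value is `always`, never goes to it when the value is `never`, and any other value is ignored in favor of the remaining canary rules.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: httpbin-canary-cookie
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"
    # user_type=always -> v2; user_type=never -> stable version.
    nginx.ingress.kubernetes.io/canary-by-cookie: "user_type"
spec:
  ingressClassName: nginx
  rules:
    - host: test.com
      http:
        paths:
          - path: /version
            pathType: Exact
            backend:
              service:
                name: go-httpbin-v2
                port:
                  number: 80
```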

Header-based grayscale release can serve the new version to specific requests or users, but the volume of requests that will reach the new version is hard to estimate, so the machines allocated to the new version may not be fully utilized. Weight-based grayscale release, by contrast, controls the traffic ratio precisely and makes allocating machine resources easy. After small-traffic verification in the early stage, the upgrade is completed gradually by raising the traffic weight. This method is simple to operate and easy to manage. However, online traffic is directed to the new version indiscriminately, which may affect the experience of important users. The following diagram shows weight-based grayscale release:
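In NGINX Ingress, a weight-based rule replaces the Header or Cookie match with a percentage. The sketch below assumes an IngressClass named `nginx` and an existing non-canary Ingress that already serves `test.com/version` with the stable `go-httpbin-v1` Service; it sends roughly 20% of requests to v2.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: httpbin-canary-weight
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"
    # Percentage (0-100) of requests routed to this canary backend.
    nginx.ingress.kubernetes.io/canary-weight: "20"
spec:
  ingressClassName: nginx
  rules:
    - host: test.com
      http:
        paths:
          - path: /version
            pathType: Exact
            backend:
              service:
                name: go-httpbin-v2
                port:
                  number: 80
```

Raising the weight step by step to 100 completes the upgrade. Because ingress-nginx evaluates Header and Cookie rules before the weight, the approaches can be combined: matched requests always reach v2, and the rest are split by weight.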

As cloud-native applications keep growing in scale, developers have begun to split monolithic architectures at a fine granularity, turning the service modules of a monolith into independently deployed and running microservices. Each microservice's lifecycle is owned entirely by the corresponding business team. This split addresses the poor agility and low flexibility of monolithic architectures. However, no architecture is a silver bullet, and solving old problems inevitably creates new ones. A monolithic application can be exposed through a Layer-4 SLB, while distributed applications rely on Ingress for Layer-7 traffic distribution. In this case, designing good routing rules becomes particularly important.

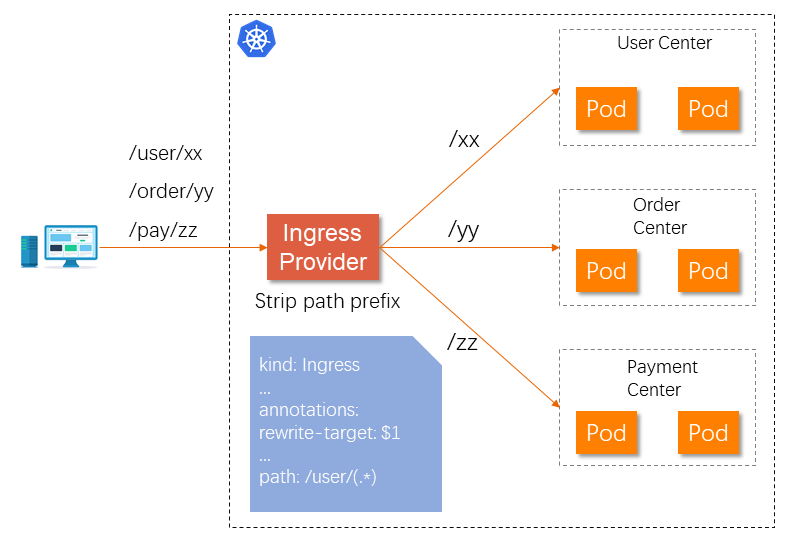

Services are generally split by business or feature domain, and we can follow the same principle when exposing them through Ingress. When designing external APIs for microservices, we can add a representative business prefix to the original path. After a request matches a route, and before it is forwarded to the backend service, the Ingress Provider strips the business prefix by rewriting the path. The workflow diagram is shown below:

This API design principle makes it easy to manage the set of services exposed to the outside and to perform finer-grained authentication based on business prefixes. It also makes it convenient to build unified observability for the services in each business domain.
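The prefix-stripping rewrite can be sketched with the regex rewrite support of the community ingress-nginx controller. Assume a hypothetical order service exposed under the business prefix `/order`: a request for `/order/api/list` matches the path below, and `rewrite-target: /$2` forwards it to the backend as `/api/list`. The host, Service name, and `nginx` IngressClass are also assumptions.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: order-api
  annotations:
    nginx.ingress.kubernetes.io/use-regex: "true"
    # $2 is the second capture group in the path: everything after the business prefix.
    nginx.ingress.kubernetes.io/rewrite-target: /$2
spec:
  ingressClassName: nginx
  rules:
    - host: api.example.com
      http:
        paths:
          - path: /order(/|$)(.*)
            pathType: ImplementationSpecific
            backend:
              service:
                name: order-service
                port:
                  number: 80
```

The backend keeps serving its original paths, while every public route stays grouped under its business prefix.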

Security risk is always the top concern of business applications and accompanies the entire lifecycle of business development. Moreover, the external Internet environment is becoming more complex, internal business architectures are growing larger, and deployments span public cloud, private cloud, and hybrid cloud, so security risks keep intensifying. Zero trust emerged as a new design model in the security field. It assumes that no user or service inside or outside the application network is trusted: all users and services must be authenticated before initiating or processing requests, and every authorization follows the principle of least privilege. In short, trust no one and verify everything.

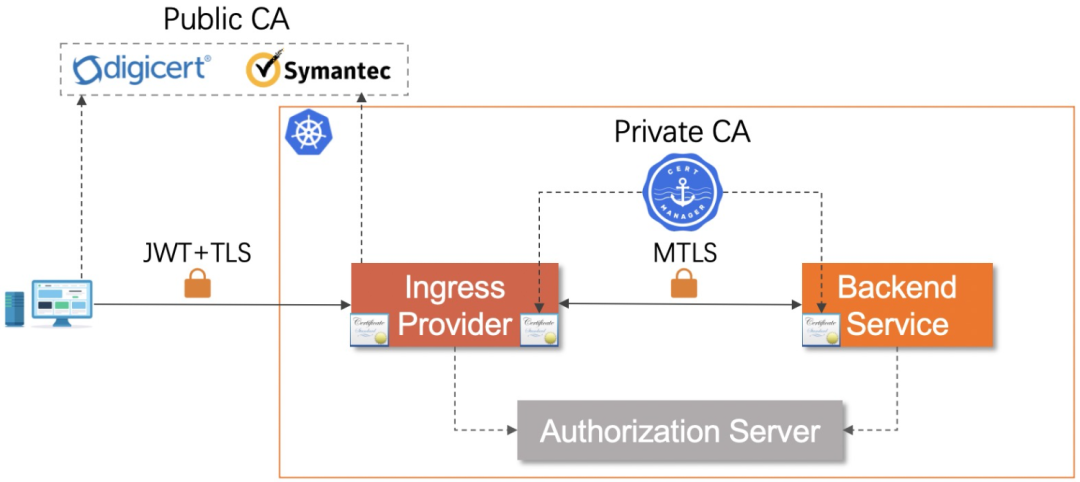

The following figure shows the architecture for implementing the zero-trust concept end to end, along the path external users → Ingress Provider → backend services:
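At the Ingress Provider hop, one concrete step toward "verify everything" is to terminate TLS and require client certificates (mutual TLS). The sketch below uses ingress-nginx annotations; the host, the Service, and the Secret names `gateway-tls` (server certificate and key) and `default/client-ca` (a Secret whose `ca.crt` holds the CA that signs client certificates) are hypothetical. Authenticating the hop from the gateway to backend services needs additional configuration not shown here.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: secure-api
  annotations:
    # Require a client certificate signed by the CA stored in default/client-ca.
    nginx.ingress.kubernetes.io/auth-tls-verify-client: "on"
    nginx.ingress.kubernetes.io/auth-tls-secret: "default/client-ca"
    nginx.ingress.kubernetes.io/auth-tls-verify-depth: "1"
spec:
  ingressClassName: nginx
  tls:
    - hosts:
        - secure.example.com
      secretName: gateway-tls        # kubernetes.io/tls Secret with the server certificate
  rules:
    - host: secure.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: secure-api
                port:
                  number: 80
```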

All external traffic passes through the Ingress Provider first, so the Ingress Provider is the main performance bottleneck and must sustain high concurrency with high performance. Setting aside the performance differences between Ingress Providers, we can unlock additional performance by tuning kernel parameters. Based on Alibaba's many years of experience with the cluster access layer, the following kernel parameters can be adjusted appropriately:

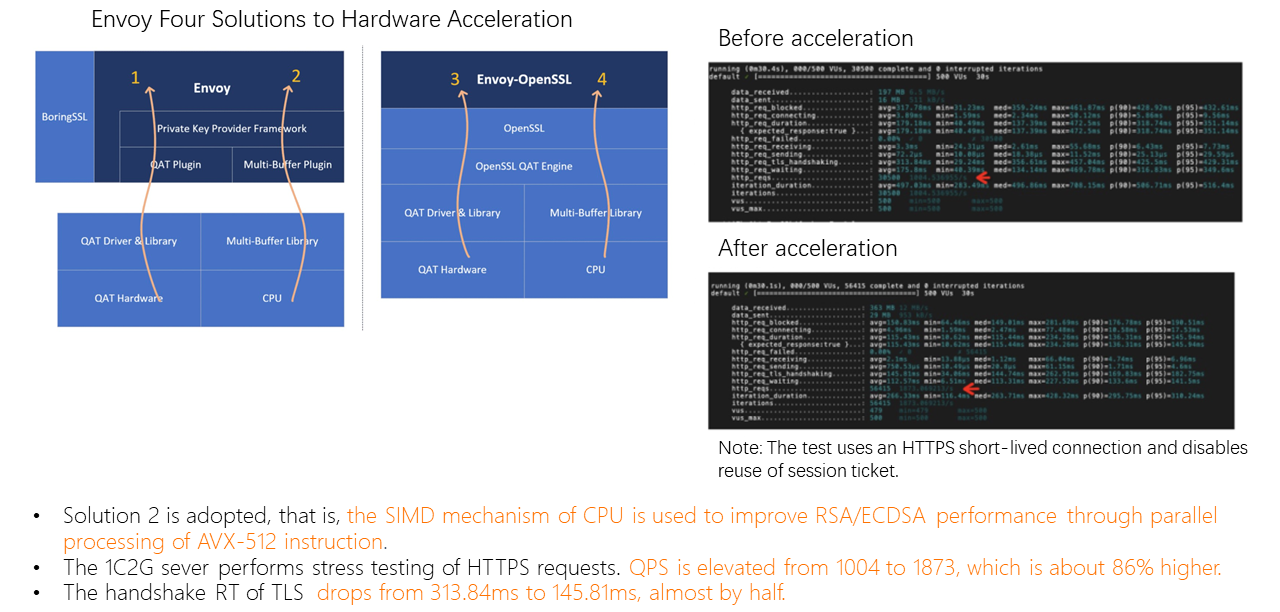

Another optimization angle is hardware: fully exploiting the computing power of the underlying hardware to improve application-layer performance. HTTPS has become the main protocol for public network requests. Once all requests use HTTPS, performance inevitably drops significantly compared with HTTP because TLS handshakes are required. With the substantial improvement in CPU performance, the CPU's SIMD instructions can be used to accelerate TLS. This optimization depends on both the machine hardware and support in the internal implementation of the Ingress Provider.

The Microservices Engine (MSE) cloud-native gateway, which is built on the Istio-Envoy architecture and combined with the seventh-generation ECS instances of Alibaba Cloud, has taken the lead in implementing TLS hardware acceleration. It improves HTTPS performance without increasing user resource costs.

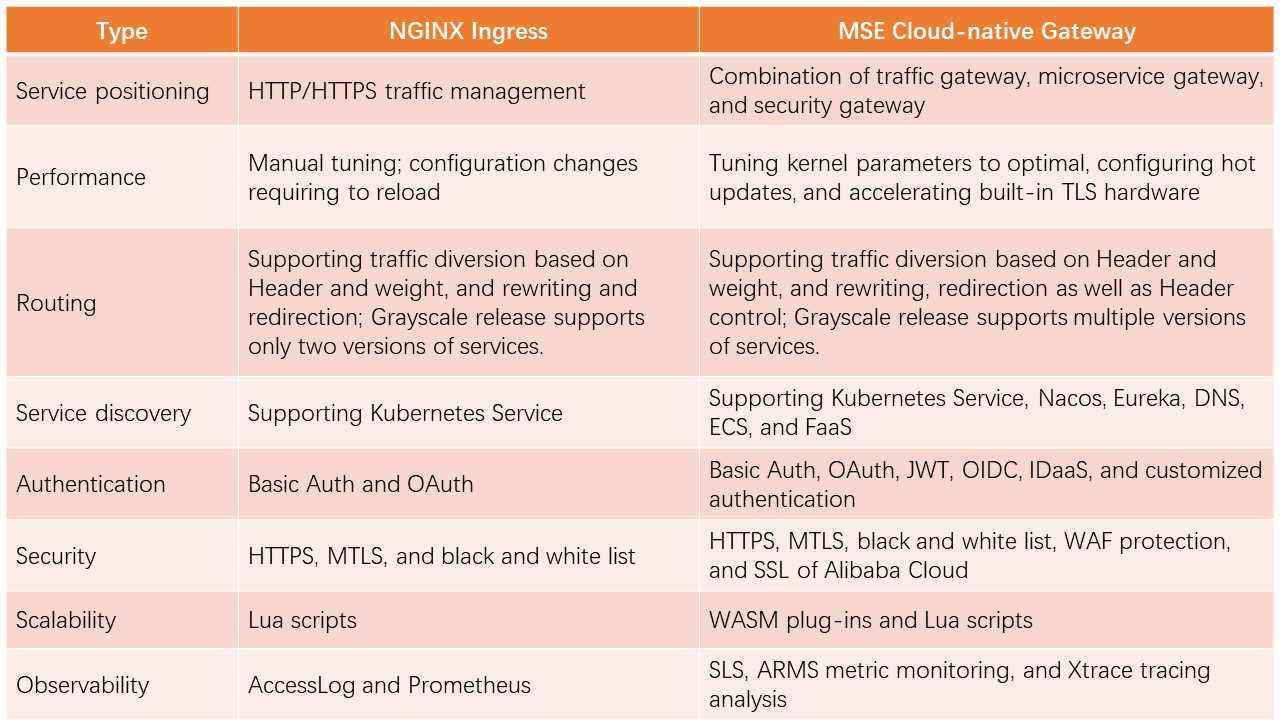

As cloud-native technology keeps evolving and cloud-native applications move further toward microservices, NGINX Ingress is starting to struggle with complex routing rule configuration, support for multiple application-layer protocols (including Dubbo and QUIC), service access security, and traffic observability. In addition, NGINX Ingress applies configuration updates by reloading, which drops connections when there are many long-lived connections. As a result, frequent configuration changes may cause business traffic loss.

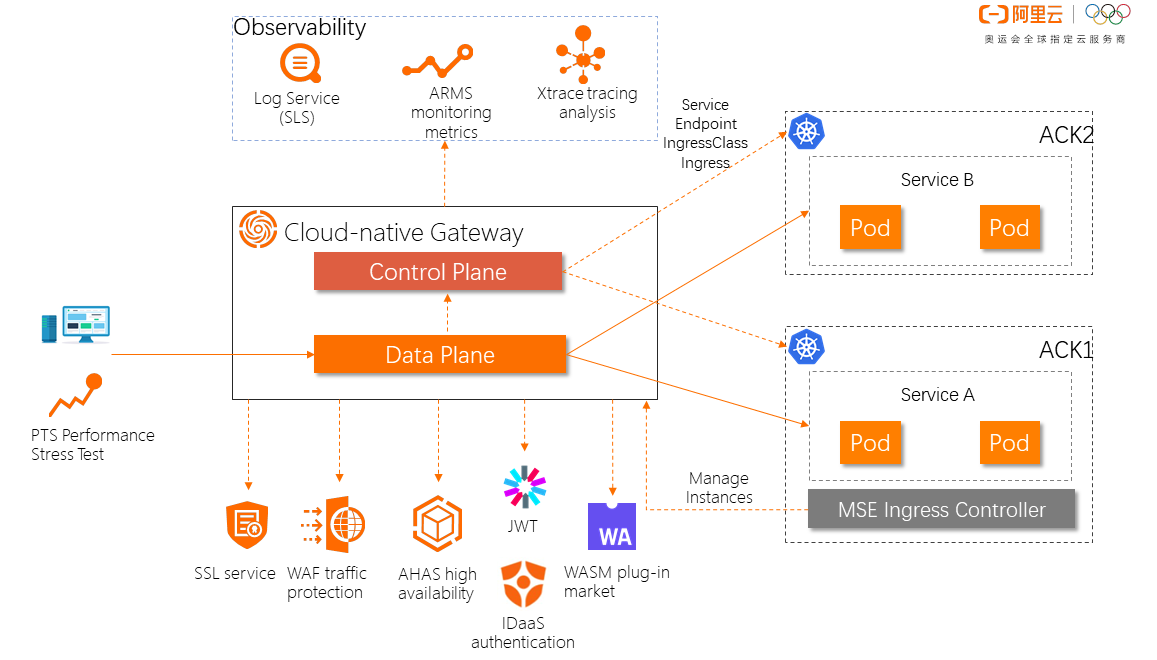

MSE cloud-native gateway was created to meet users' urgent demand for large-scale traffic management. It is a next-generation gateway launched by Alibaba Cloud that is compatible with the standard Ingress specification, and it offers low cost, strong security, high integration, and high availability. MSE cloud-native gateway combines a traditional WAF gateway, traffic gateway, and microservice gateway to provide refined traffic management while reducing resource costs by 50%. It supports multiple service discovery methods, such as Alibaba Cloud Container Service for Kubernetes (ACK), Nacos, Eureka, fixed addresses, and FaaS, as well as multiple authentication and login methods for quickly building security defenses. It provides a comprehensive, multi-perspective monitoring system covering metrics monitoring, log analysis, and tracing analysis. It also parses standard Ingress resources in both single-cluster and multi-cluster Kubernetes environments, helping users manage traffic declaratively and uniformly in cloud-native application scenarios. In addition, we have introduced a WASM plug-in marketplace to meet users' customized requirements.

The following table is a summary of the comparisons between NGINX Ingress and MSE cloud-native gateway:

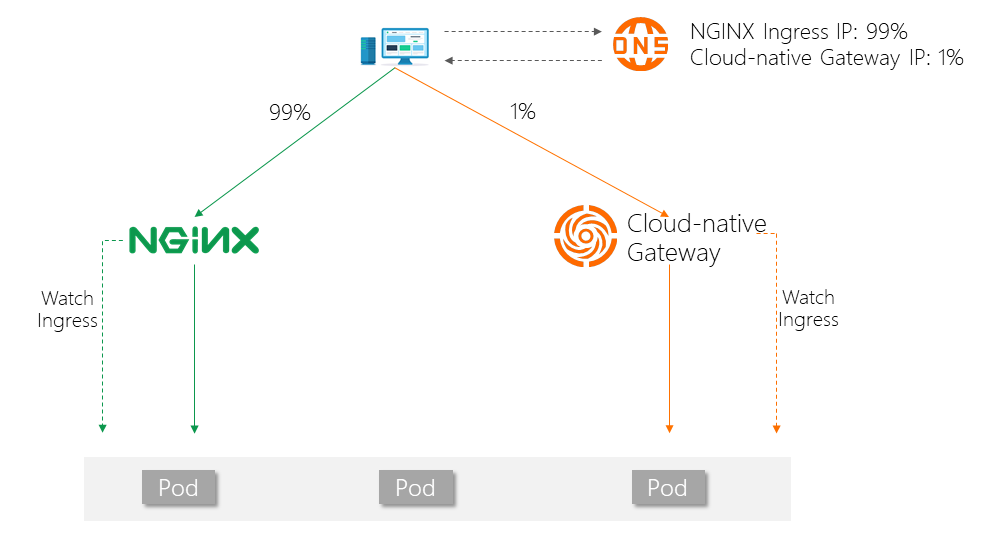

MSE cloud-native gateway is hosted by Alibaba Cloud: it is O&M-free, reduces costs, is feature-rich, and is deeply integrated with surrounding Alibaba Cloud products. The following figure shows how to migrate seamlessly from NGINX Ingress to MSE cloud-native gateway. You can follow the same method for other Ingress Providers.

Next, we will walk through hands-on operations with an Ingress Provider (MSE cloud-native gateway) on ACK, showing how to use MSE Ingress Controller to manage cluster ingress traffic.

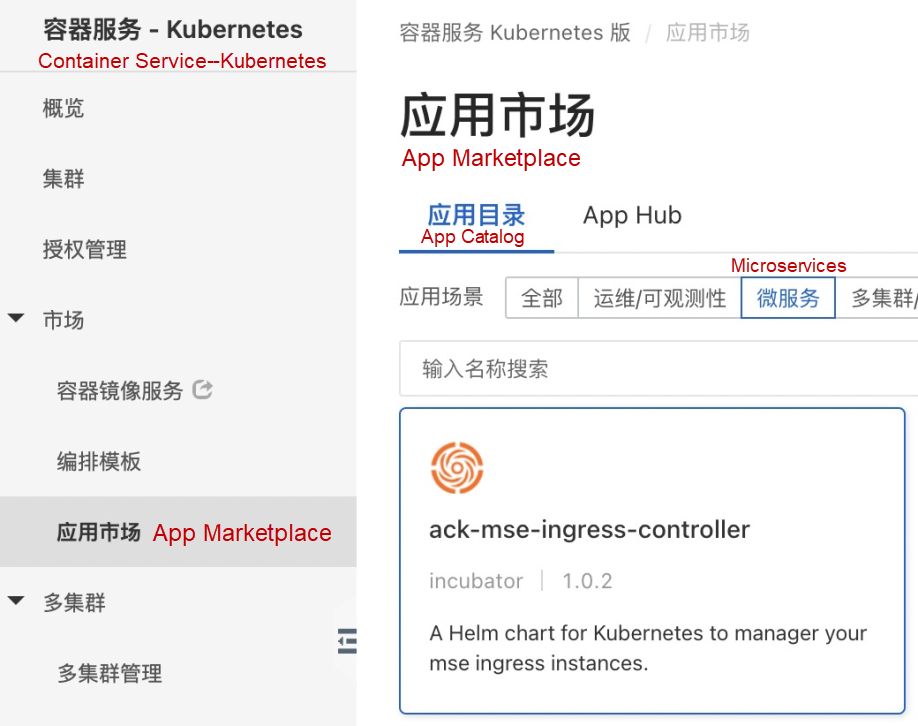

Find ack-mse-ingress-controller in the App Marketplace of Alibaba Cloud Container Service and install it by following the operation document below the component:

MseIngressConfig is a CRD provided by MSE Ingress Controller, which uses it to manage the lifecycle of MSE cloud-native gateway instances. Each MseIngressConfig maps to one MSE cloud-native gateway instance, so if you need multiple gateway instances, you must create multiple MseIngressConfig resources. We create a gateway with minimal configuration:

```yaml
apiVersion: mse.alibabacloud.com/v1alpha1
kind: MseIngressConfig
metadata:
  name: test
spec:
  name: mse-ingress
  common:
    network:
      vSwitches:
        - "vsw-bp1d5hjttmsazp0ueor5b"
```

Configure a standard Kubernetes IngressClass to associate it with the MseIngressConfig. Once the association is complete, the cloud-native gateway starts listening for Ingress resources in the cluster that reference this IngressClass.

```yaml
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  annotations:
    ingressclass.kubernetes.io/is-default-class: 'true'
  name: mse
spec:
  controller: mse.alibabacloud.com/ingress
  parameters:
    apiGroup: mse.alibabacloud.com
    kind: MseIngressConfig
    name: test
```

We can check the current state by viewing the status of the MseIngressConfig. Its status changes in sequence through Pending > Running > Listening. Each status is explained below:

Assume the cluster has a backend service named httpbin, and we want to perform Header-based grayscale verification during a version upgrade, as shown in the figure:

First, deploy httpbin v1 and v2 by applying the following resources to the ACK cluster:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: go-httpbin-v1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: go-httpbin-v1
  template:
    metadata:
      labels:
        app: go-httpbin-v1
        version: v1
    spec:
      containers:
        - image: specialyang/go-httpbin:v3
          args:
            - "--port=8090"
            - "--version=v1"
          imagePullPolicy: Always
          name: go-httpbin
          ports:
            - containerPort: 8090
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: go-httpbin-v2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: go-httpbin-v2
  template:
    metadata:
      labels:
        app: go-httpbin-v2
        version: v2
    spec:
      containers:
        - image: specialyang/go-httpbin:v3
          args:
            - "--port=8090"
            - "--version=v2"
          imagePullPolicy: Always
          name: go-httpbin
          ports:
            - containerPort: 8090
---
apiVersion: v1
kind: Service
metadata:
  name: go-httpbin-v1
spec:
  ports:
    - port: 80
      targetPort: 8090
      protocol: TCP
  selector:
    app: go-httpbin-v1
---
apiVersion: v1
kind: Service
metadata:
  name: go-httpbin-v2
spec:
  ports:
    - port: 80
      targetPort: 8090
      protocol: TCP
  selector:
    app: go-httpbin-v2
```

Apply the Ingress resource for the stable version v1:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: httpbin
spec:
  ingressClassName: mse
  rules:
    - host: test.com
      http:
        paths:
          - path: /version
            pathType: Exact
            backend:
              service:
                name: go-httpbin-v1
                port:
                  number: 80
```

Apply the Ingress resource for the grayscale version v2:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-by-header: "stage"
    nginx.ingress.kubernetes.io/canary-by-header-value: "gray"
  name: httpbin-canary-header
spec:
  ingressClassName: mse
  rules:
    - host: test.com
      http:
        paths:
          - path: /version
            pathType: Exact
            backend:
              service:
                name: go-httpbin-v2
                port:
                  number: 80
```

Test verification:

```shell
# Test the stable version
curl -H "host: test.com" <your ingress ip>/version
# Test result:
# version: v1

# Test the grayscale version
curl -H "host: test.com" -H "stage: gray" <your ingress ip>/version
# Test result:
# version: v2
```

The example above uses Ingress annotations to extend standard Ingress with advanced traffic management, enabling grayscale release.

MSE cloud-native gateway aims to provide users with a more reliable, lower-cost, more efficient, enterprise-grade gateway product that conforms to the Kubernetes Ingress standard.

MSE cloud-native gateway offers two billing methods: pay-as-you-go and subscription. It is available in ten regions: Hangzhou, Shanghai, Beijing, Shenzhen, Zhangjiakou, Hong Kong, Singapore, US (Virginia), US (Silicon Valley), and Germany (Frankfurt), and will gradually become available in other regions.
