By Danjiang and Shimian

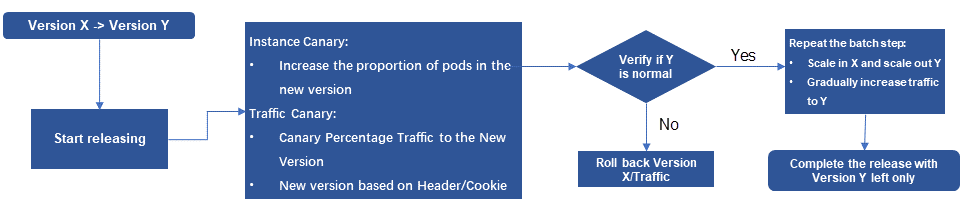

During application deployment, it is common practice to verify the normal functioning of a new version by using a small amount of specific traffic, ensuring overall stability. This process is known as canary release. Canary release gradually increases the release scope, thus ensuring the stability of the new version. If any issues arise, they can be detected promptly, and the scope of impact can be controlled to maintain overall stability.

A canary release generally has the following features:

• Gradual increase in release scope, avoiding simultaneous full release.

• Phased release process that allows the stability of the new version to be verified at each stage before the scope is widened.

• Support for suspension, rollback, resumption, and automated status flow to provide flexible control over the release process and ensure stability.
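The features above can be pictured as a small state machine. The following is a minimal sketch, not how any particular release tool is implemented; the `Rollout` type, its methods, and the step percentages are hypothetical names chosen for illustration.

```go
package main

import "fmt"

// Rollout is a toy model of a phased release. Steps holds the share of
// traffic (in percent) sent to the new version at each phase.
// All names here are illustrative assumptions.
type Rollout struct {
	Steps  []int
	step   int // index of the current step; -1 means not started or rolled back
	paused bool
}

// NewRollout creates a rollout that has not started yet.
func NewRollout(steps []int) *Rollout { return &Rollout{Steps: steps, step: -1} }

// Weight returns the current canary traffic share in percent.
func (r *Rollout) Weight() int {
	if r.step < 0 {
		return 0
	}
	return r.Steps[r.step]
}

// Advance moves to the next step unless the rollout is paused or finished.
func (r *Rollout) Advance() bool {
	if r.paused || r.step+1 >= len(r.Steps) {
		return false
	}
	r.step++
	return true
}

// Pause blocks further steps, Resume unblocks them, and Rollback returns
// all traffic to the stable version.
func (r *Rollout) Pause()    { r.paused = true }
func (r *Rollout) Resume()   { r.paused = false }
func (r *Rollout) Rollback() { r.step, r.paused = -1, false }

func main() {
	r := NewRollout([]int{5, 50, 100})
	r.Advance()
	fmt.Println(r.Weight()) // 5
	r.Pause()
	fmt.Println(r.Advance()) // false: a paused rollout does not move
	r.Resume()
	r.Advance()
	fmt.Println(r.Weight()) // 50
	r.Rollback()
	fmt.Println(r.Weight()) // 0
}
```

The point of the sketch is that the release scope only grows one step at a time and can be frozen or reset at any step.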

According to relevant data, 70% of online issues are caused by changes. Canary release, observability, and rollback are often referred to as the three key elements of safe production because they help control risks and mitigate the impact of changes. By implementing canary release, organizations can release new versions more robustly and prevent losses caused by issues during the release process.

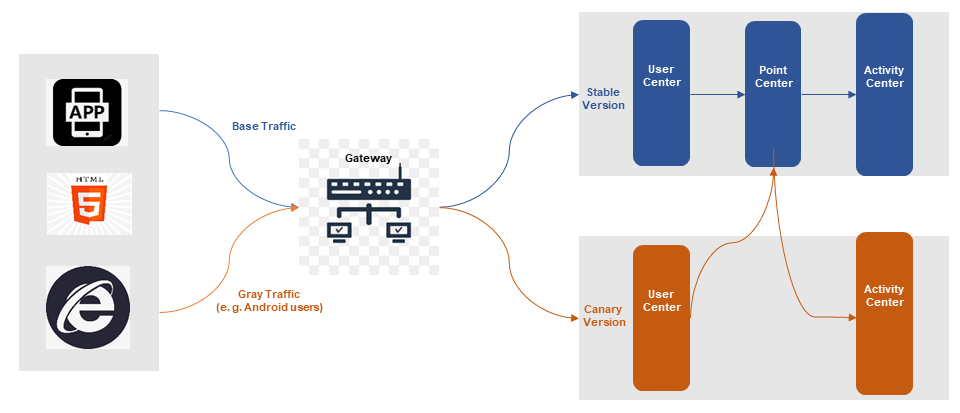

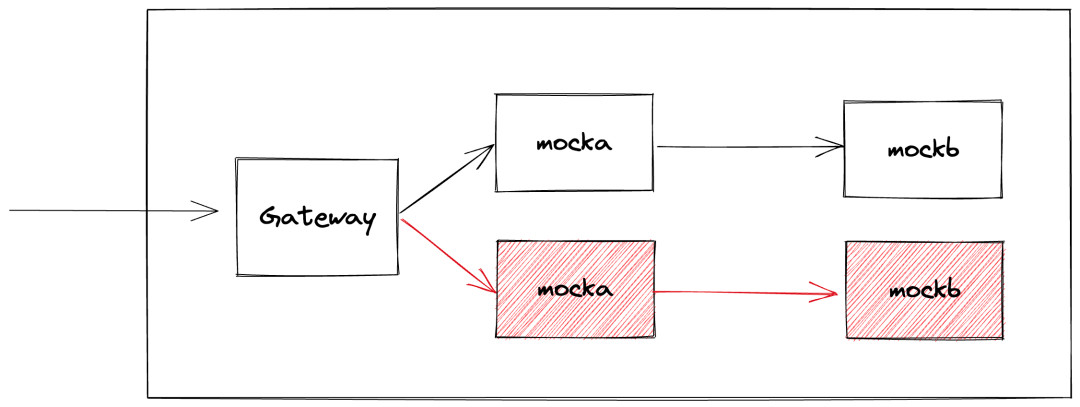

End-to-end canary release is a best practice for canary release in microservice scenarios. Usually, each microservice has a canary release environment or group that receives canary traffic. Traffic that enters the canary environment of an upstream service should also enter the canary environment of each downstream service, so that a request always stays within canary environments and forms a traffic lane. Even if some microservices in the traffic lane have no canary release environment, requests that pass through their base versions can still return to the canary environment of the next downstream service.
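The traffic lane behavior described above, including the fallback to the base version, can be sketched as follows. This is a simplified model under stated assumptions: the `Registry` type, the service names `a`, `b`, `c`, and the environment names `base` and `canary` are hypothetical and do not correspond to any real API.

```go
package main

import "fmt"

// Registry maps a service name to the environments it is deployed in.
// The type and its contents are illustrative assumptions.
type Registry map[string][]string

// pickEnv returns the environment a request is sent to for the given
// service: the request's lane if the service has it, otherwise "base".
func pickEnv(reg Registry, service, lane string) string {
	for _, env := range reg[service] {
		if env == lane {
			return lane
		}
	}
	return "base"
}

// route walks a call chain and records the environment chosen at each hop.
// The lane tag travels with the request unchanged, so a hop that falls back
// to base does not prevent later hops from returning to the canary lane.
func route(reg Registry, chain []string, lane string) []string {
	path := make([]string, 0, len(chain))
	for _, svc := range chain {
		path = append(path, svc+"@"+pickEnv(reg, svc, lane))
	}
	return path
}

func main() {
	reg := Registry{
		"a": {"base", "canary"},
		"b": {"base"}, // b has no canary environment
		"c": {"base", "canary"},
	}
	fmt.Println(route(reg, []string{"a", "b", "c"}, "canary")) // [a@canary b@base c@canary]
	fmt.Println(route(reg, []string{"a", "b", "c"}, "base"))   // [a@base b@base c@base]
}
```

The fallback only works if the lane tag survives the hop through `b`, which is why tag pass-through matters for every service in the chain.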

End-to-end canary release escorts microservice release

The end-to-end canary release enables independent release and traffic control for individual services based on their specific needs. It also allows simultaneous release and modification of multiple services to ensure the stability of the entire system. Additionally, the use of automated deployment methods facilitates faster and more reliable releases, ultimately improving release efficiency and stability.

The implementation of the end-to-end canary release by using Istio is a common concern. This article discusses in detail several key technologies.

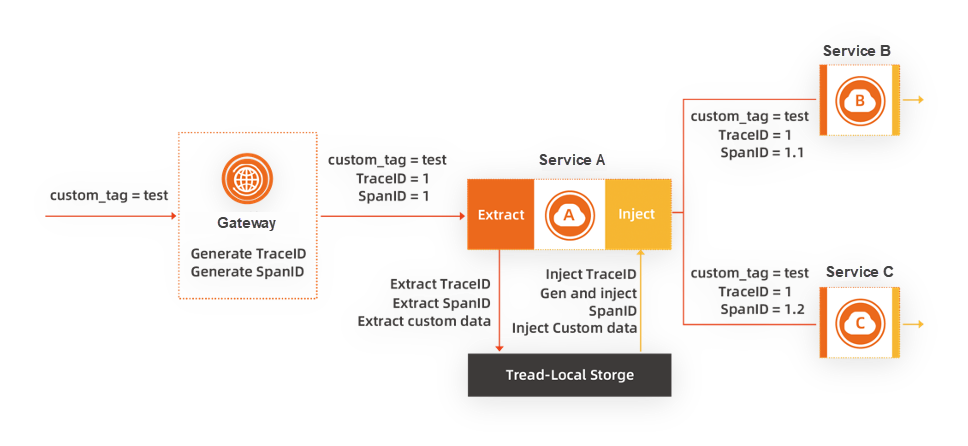

During the implementation of end-to-end canary release for microservices, an important consideration is the pass-through of headers in traffic. The challenge arises when some microservices retain specific headers for pass-through and discard others. Although Kruise Rollout effectively reduces the complexity of gateway resource configuration during deployment, it does not address the header pass-through issue. One crucial aspect is ensuring that the canary identifier can be continuously transmitted within the trace. Distributed tracing technology records detailed request call traces in large-scale distributed systems. The core concept involves recording the nodes and request duration through a globally unique trace ID and the span ID of each request trace. Leveraging the distributed tracing concept allows for the transmission of custom information, such as canary identifiers. Commonly used distributed tracing analysis products in the industry support the transmission of custom data through the chain. The figure below illustrates the data processing process.

We typically utilize the Tracing Baggage mechanism to convey the corresponding identifiers throughout the full chain. Most Tracing frameworks, including OpenTelemetry, Skywalking, and Jaeger, support the Baggage concept and capability. We simply extract the value of the specified key, such as 'x-mse-tag', from the Baggage at the designated location according to the Tracing protocol in the Envoy outbound Filter and insert it into the HTTP header for Envoy to route.

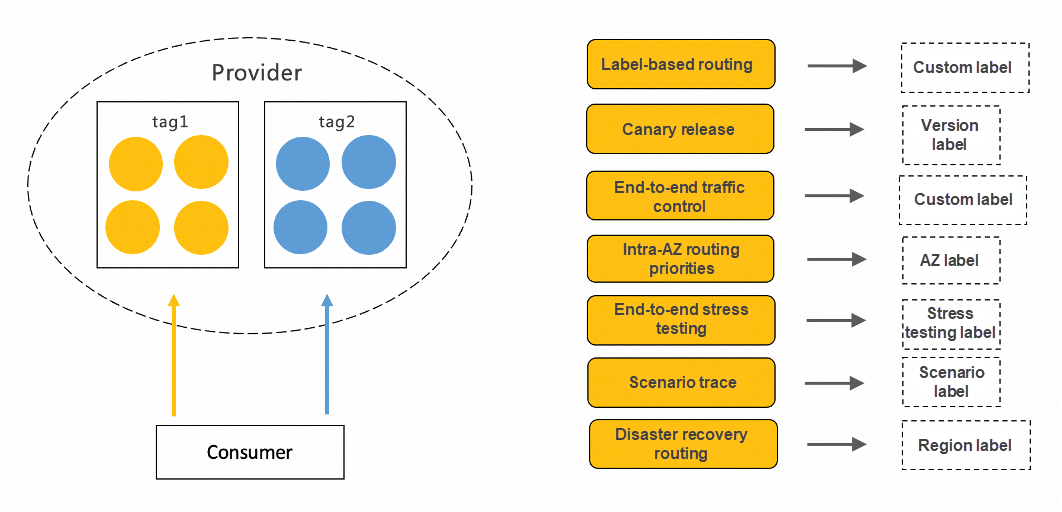

By labeling all nodes under a service with various label names and values, service consumers subscribing to the service node information can access specific groups of the service as needed, essentially a subset of all nodes. Service consumers can employ any label information present on the service provider's node. Depending on the label's meaning, consumers can apply tag routing to broader business scenarios.

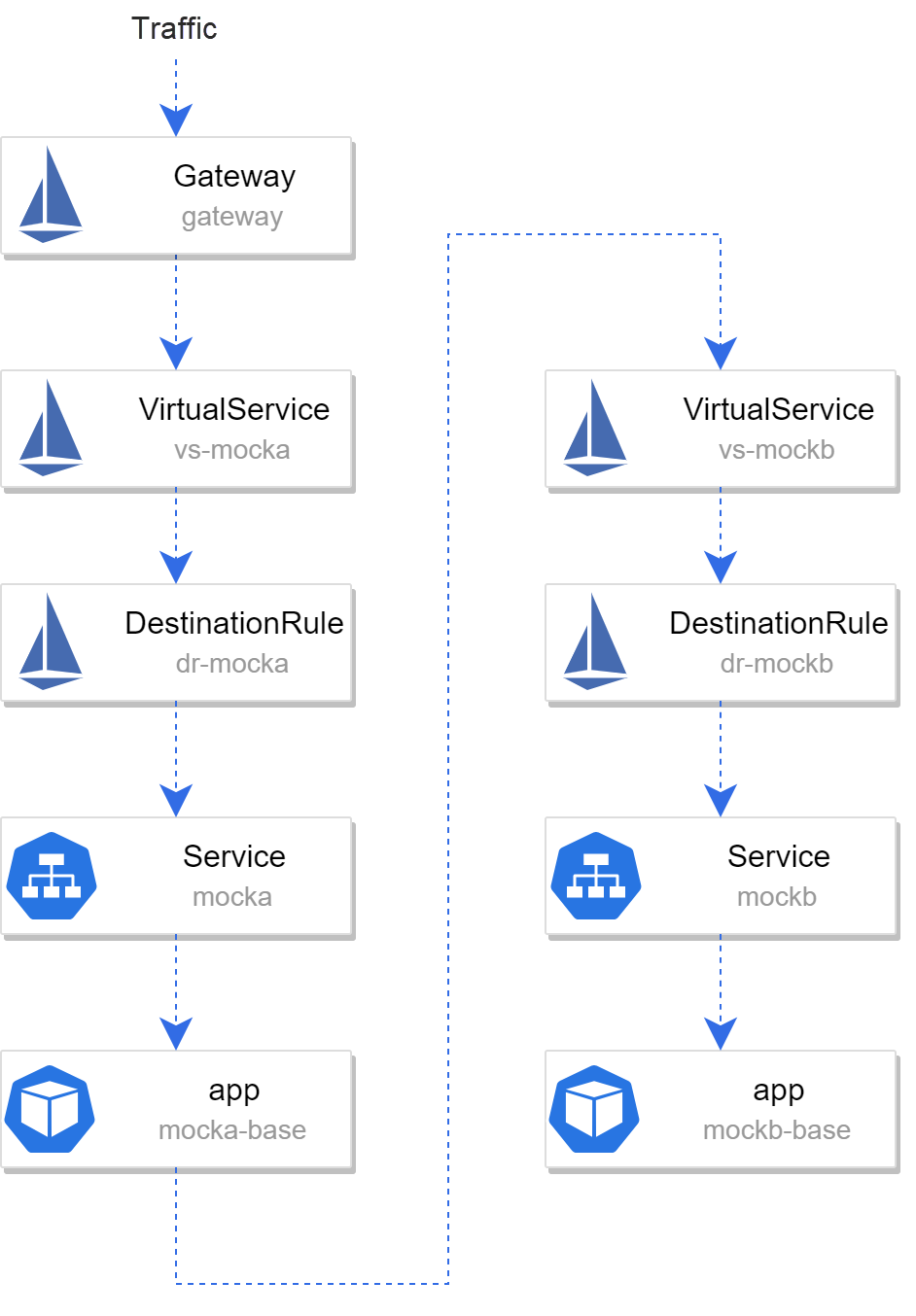

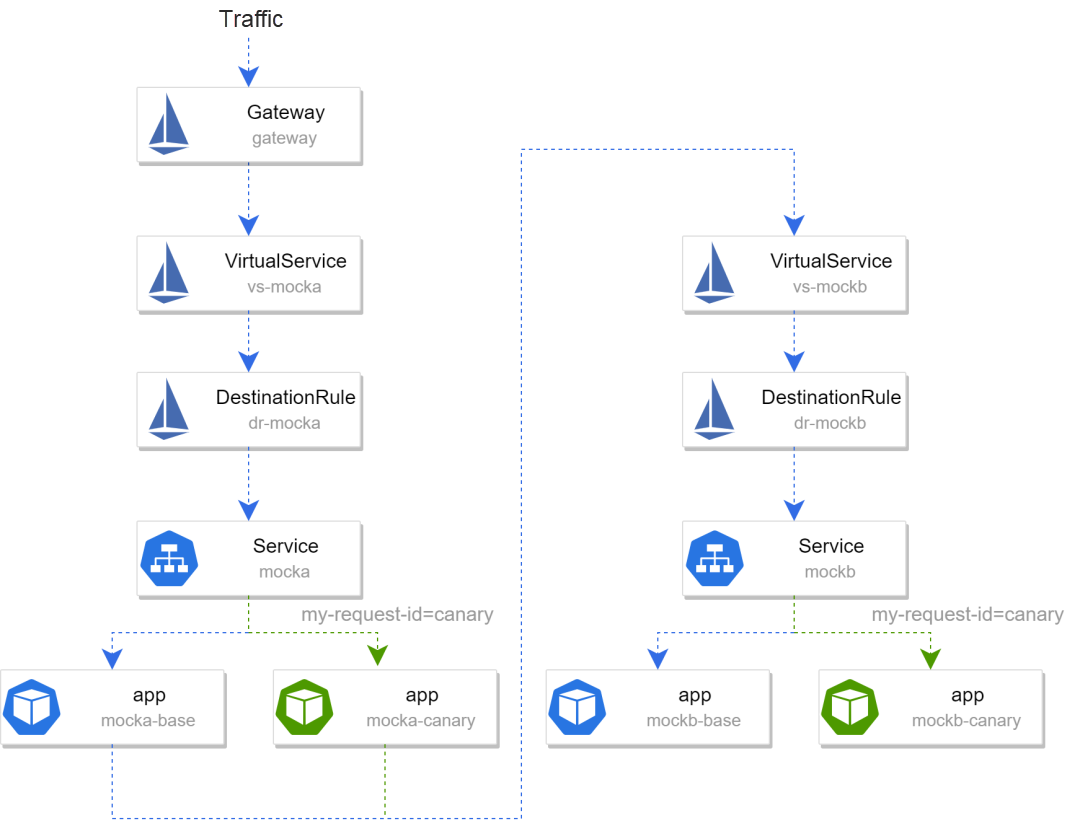

In Istio, you can use Istio Gateway, DestinationRule, and VirtualService to configure routing and external access.

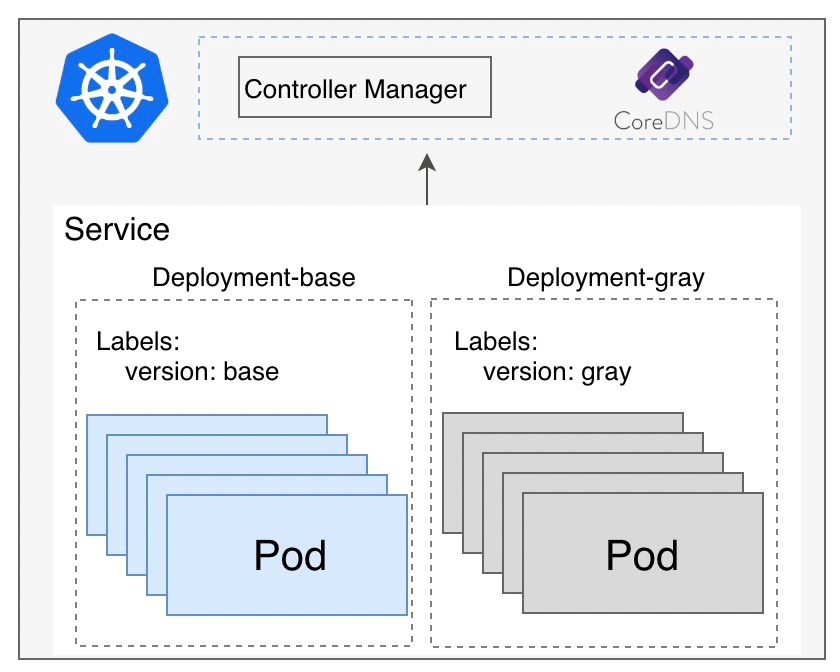

How do we attach different labels to service nodes? Simply add the labels to the pod template in the Deployment that describes the business application. In business systems that use Kubernetes for service discovery, a service provider exposes its service by submitting a Service resource to the API server. The service consumer watches the Endpoints resource associated with the Service, retrieves the related pods from it, reads their labels, and uses them as metadata for the nodes.

The technical details discussed above reveal that implementing end-to-end canary operations based on Istio is extremely complex and costly. Initially, creating a canary deployment and adding canary identifiers to the nodes is necessary. Additionally, configuring the traffic routing CRD of Istio, including the VirtualService and DestinationRule rules for each request to match the request identifiers, is crucial. While these technologies may sound acceptable in theory, their practical application is associated with high costs. Furthermore, errors during the configuration process could lead to issues with production traffic, significantly impacting business operations. To mitigate the cost of end-to-end canary practice, the introduction of Kruise Rollout is essential.

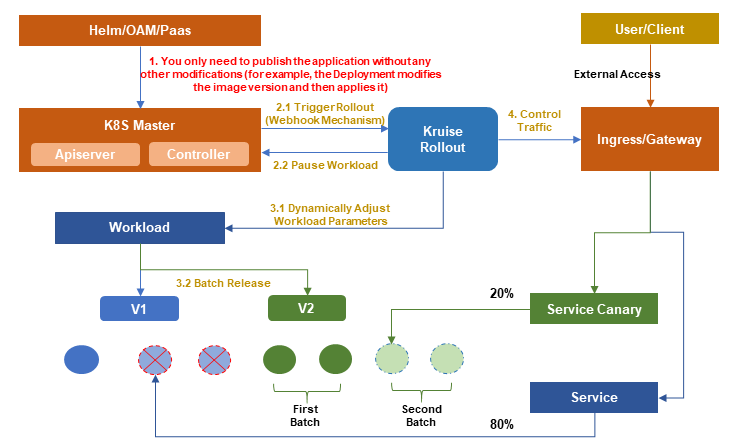

Kruise Rollout[1] is an open-source progressive delivery framework offered by OpenKruise. It provides a standardized set of bypass release components for Kubernetes that combine traffic release with instance canary release, support various release methods (such as canary, blue-green, and A/B testing), and automate the release process based on custom metrics (such as Prometheus metrics), while remaining non-intrusive to workloads and easy to extend.

As shown in the preceding figure, OpenKruise Rollout can automate the complex canary release, greatly reducing the implementation cost of canary release. Customers only need to configure the CRD of Kruise Rollout and then directly publish the application to achieve canary release.

We have discussed the details of implementation technologies. Now, let's practice the canary release based on Kruise Rollout and Istio.

Begin by deploying two services: mocka and mockb. The service mocka calls the service mockb. Note that mocka retains only the header my-request-id in the traffic it forwards and discards all other headers. You can also employ OpenTelemetry to facilitate dynamic traffic label pass-through.

The following is the configuration file for the service:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: mocka
  namespace: demo
  labels:
    app: mocka
    service: mocka
spec:
  ports:
  - port: 8000
    name: http
  selector:
    app: mocka
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mocka-base
  namespace: demo
  labels:
    app: mocka
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mocka
  template:
    metadata:
      labels:
        app: mocka
        version: base
    spec:
      containers:
      - name: default
        image: registry.cn-beijing.aliyuncs.com/aliacs-app-catalog/go-http-sample:1.0
        imagePullPolicy: Always
        env:
        - name: version
          value: base
        - name: app
          value: mocka
        - name: upstream_url
          value: "http://mockb:8000/"
        ports:
        - containerPort: 8000
---
apiVersion: v1
kind: Service
metadata:
  name: mockb
  namespace: demo
  labels:
    app: mockb
    service: mockb
spec:
  ports:
  - port: 8000
    name: http
  selector:
    app: mockb
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mockb-base
  namespace: demo
  labels:
    app: mockb
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mockb
  template:
    metadata:
      labels:
        app: mockb
        version: base
    spec:
      containers:
      - name: default
        image: registry.cn-beijing.aliyuncs.com/aliacs-app-catalog/go-http-sample:1.0
        imagePullPolicy: Always
        env:
        - name: version
          value: base
        - name: app
          value: mockb
        ports:
        - containerPort: 8000
```

The following is the code for processing headers:

```go
// All URLs are handled by this function.
// m, app, version, ip, url, and doReq are defined elsewhere in the sample.
m.HandleFunc("/", func(w http.ResponseWriter, r *http.Request) {
	requestId := r.Header.Get("my-request-id")
	fmt.Printf("receive request: my-request-id: %s\n", requestId)
	response := fmt.Sprintf("-> %s(version: %s, ip: %s)", app, version, ip)
	if url != "" {
		// Send only my-request-id for new requests.
		content := doReq(url, requestId)
		response = response + content
	}
	w.Write([]byte(response))
})
```

Then, deploy Istio Gateway, DestinationRule, and VirtualService to configure the routing and external access:

```yaml
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: simple-gateway
  namespace: demo
spec:
  selector:
    istio: ingressgateway
  servers:
  - hosts:
    - "*"
    port:
      name: http
      number: 80
      protocol: HTTP
---
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: dr-mocka
  namespace: demo
spec:
  host: mocka
  trafficPolicy:
    loadBalancer:
      simple: ROUND_ROBIN
  subsets:
  - labels:
      version: base
    name: version-base
---
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: dr-mockb
  namespace: demo
spec:
  host: mockb
  trafficPolicy:
    loadBalancer:
      simple: ROUND_ROBIN
  subsets:
  - labels:
      version: base
    name: version-base
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: vs-mocka
  namespace: demo
spec:
  gateways:
  - simple-gateway
  hosts:
  - "*"
  http:
  - route:
    - destination:
        host: mocka
        subset: version-base
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: vs-mockb
  namespace: demo
spec:
  hosts:
  - mockb
  http:
  - route:
    - destination:
        host: mockb
        subset: version-base
```

The following figure shows the entire structure after deployment:

In local clusters, you can run the following command to obtain the gateway ingress IP and port to access the service:

```shell
kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.spec.ports[?(@.name=="http2")].nodePort}'
kubectl get po -l istio=ingressgateway -n istio-system -o jsonpath='{.items[0].status.hostIP}'
```

Run `curl http://GATEWAY_IP:PORT`. The following output is returned:

```
-> mocka(version: base, ip: 10.244.1.36)-> mockb(version: base, ip: 10.244.1.37)
```

Then, deploy Rollout to control mocka and mockb. The following are the policies for configuring Rollout and TrafficRouting:

• A header rule that matches my-request-id=canary is added. Traffic that contains the specified header is routed to the canary environment.

• The labels istio.service.tag=gray and version=canary are added to the released pods.

Two labels are added to the pods of the new version in the configuration. The istio.service.tag=gray label identifies the pods that the canary subset in the DestinationRule selects; the Lua script automatically adds this subset to the DestinationRule. The version=canary label overwrites the version=base label inherited from the original version. If this label is not overwritten, the version-base subset in the original DestinationRule also selects the pods of the new version and routes stable traffic to them.
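Why the version label must be overwritten can be checked with a simplified model of subset selection. The sketch below is not Istio code; the `Subset` type and the `matches` and `subsetsFor` helpers are hypothetical, and they only assume that a subset selects every pod carrying all of its labels.

```go
package main

import "fmt"

// Subset mirrors the idea of a DestinationRule subset: a name plus the
// labels a pod must carry to belong to it. Simplified, illustrative model.
type Subset struct {
	Name   string
	Labels map[string]string
}

// matches reports whether the pod carries every label the subset requires.
func matches(pod map[string]string, s Subset) bool {
	for k, v := range s.Labels {
		if pod[k] != v {
			return false
		}
	}
	return true
}

// subsetsFor lists the names of all subsets that would select the pod.
func subsetsFor(pod map[string]string, subsets []Subset) []string {
	var names []string
	for _, s := range subsets {
		if matches(pod, s) {
			names = append(names, s.Name)
		}
	}
	return names
}

func main() {
	subsets := []Subset{
		{Name: "version-base", Labels: map[string]string{"version": "base"}},
		{Name: "canary", Labels: map[string]string{"istio.service.tag": "gray"}},
	}
	// Canary pod that kept the inherited version=base label.
	notOverwritten := map[string]string{"app": "mocka", "version": "base", "istio.service.tag": "gray"}
	// Canary pod whose version label was overwritten with version=canary.
	overwritten := map[string]string{"app": "mocka", "version": "canary", "istio.service.tag": "gray"}

	fmt.Println(subsetsFor(notOverwritten, subsets)) // [version-base canary]
	fmt.Println(subsetsFor(overwritten, subsets))    // [canary]
}
```

In the first case the new pod belongs to both subsets, so stable traffic would reach it; in the second case only the canary subset selects it.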

```yaml
apiVersion: rollouts.kruise.io/v1alpha1
kind: Rollout
metadata:
  name: rollouts-a
  namespace: demo
  annotations:
    rollouts.kruise.io/rolling-style: canary
    rollouts.kruise.io/trafficrouting: mocka-tr
spec:
  disabled: false
  objectRef:
    workloadRef:
      apiVersion: apps/v1
      kind: Deployment
      name: mocka-base
  strategy:
    canary:
      steps:
      - replicas: 1
      patchPodTemplateMetadata:
        labels:
          istio.service.tag: gray
          version: canary
---
apiVersion: rollouts.kruise.io/v1alpha1
kind: TrafficRouting
metadata:
  name: mocka-tr
  namespace: demo
spec:
  strategy:
    matches:
    - headers:
      - type: Exact
        name: my-request-id
        value: canary
  objectRef:
  - service: mocka
    customNetworkRefs:
    - apiVersion: networking.istio.io/v1alpha3
      kind: VirtualService
      name: vs-mocka
    - apiVersion: networking.istio.io/v1beta1
      kind: DestinationRule
      name: dr-mocka
---
apiVersion: rollouts.kruise.io/v1alpha1
kind: Rollout
metadata:
  name: rollouts-b
  namespace: demo
  annotations:
    rollouts.kruise.io/rolling-style: canary
    rollouts.kruise.io/trafficrouting: mockb-tr
spec:
  disabled: false
  objectRef:
    workloadRef:
      apiVersion: apps/v1
      kind: Deployment
      name: mockb-base
  strategy:
    canary:
      steps:
      - replicas: 1
      patchPodTemplateMetadata:
        labels:
          istio.service.tag: gray
          version: canary
---
apiVersion: rollouts.kruise.io/v1alpha1
kind: TrafficRouting
metadata:
  name: mockb-tr
  namespace: demo
spec:
  strategy:
    matches:
    - headers:
      - type: Exact
        name: my-request-id
        value: canary
  objectRef:
  - service: mockb
    customNetworkRefs:
    - apiVersion: networking.istio.io/v1alpha3
      kind: VirtualService
      name: vs-mockb
    - apiVersion: networking.istio.io/v1beta1
      kind: DestinationRule
      name: dr-mockb
```

Modify the environment variables in mocka and mockb to version=canary to start the release. Kruise Rollout automatically obtains the gateway resources and modifies them. In the VirtualService and DestinationRule resources below, each VirtualService defines a rule that routes traffic with the header my-request-id=canary to the canary version, and each DestinationRule gains a new subset that selects the label istio.service.tag=gray.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"networking.istio.io/v1beta1","kind":"VirtualService","metadata":{"annotations":{},"name":"vs-mocka","namespace":"demo"},"spec":{"gateways":["simple-gateway"],"hosts":["*"],"http":[{"route":[{"destination":{"host":"mocka","subset":"version-base"}}]}]}}
    rollouts.kruise.io/origin-spec-configuration: '{"spec":{"gateways":["simple-gateway"],"hosts":["*"],"http":[{"route":[{"destination":{"host":"mocka","subset":"version-base"}}]}]},"annotations":{"kubectl.kubernetes.io/last-applied-configuration":"{\"apiVersion\":\"networking.istio.io/v1beta1\",\"kind\":\"VirtualService\",\"metadata\":{\"annotations\":{},\"name\":\"vs-mocka\",\"namespace\":\"demo\"},\"spec\":{\"gateways\":[\"simple-gateway\"],\"hosts\":[\"*\"],\"http\":[{\"route\":[{\"destination\":{\"host\":\"mocka\",\"subset\":\"version-base\"}}]}]}}\n"}}'
  creationTimestamp: "2023-09-12T07:49:15Z"
  generation: 40
  name: vs-mocka
  namespace: demo
  resourceVersion: "98670"
  uid: c7da3a99-789c-4f1e-93a4-caaee41cbe06
spec:
  gateways:
  - simple-gateway
  hosts:
  - '*'
  http:
  # -- Rules automatically added by the lua script.
  - match:
    - headers:
        my-request-id:
          exact: canary
    route:
    - destination:
        host: mocka
        subset: canary
  # --
  - route:
    - destination:
        host: mocka
        subset: version-base
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"networking.istio.io/v1beta1","kind":"VirtualService","metadata":{"annotations":{},"name":"vs-mockb","namespace":"demo"},"spec":{"hosts":["mockb"],"http":[{"route":[{"destination":{"host":"mockb","subset":"version-base"}}]}]}}
    rollouts.kruise.io/origin-spec-configuration: '{"spec":{"hosts":["mockb"],"http":[{"route":[{"destination":{"host":"mockb","subset":"version-base"}}]}]},"annotations":{"kubectl.kubernetes.io/last-applied-configuration":"{\"apiVersion\":\"networking.istio.io/v1beta1\",\"kind\":\"VirtualService\",\"metadata\":{\"annotations\":{},\"name\":\"vs-mockb\",\"namespace\":\"demo\"},\"spec\":{\"hosts\":[\"mockb\"],\"http\":[{\"route\":[{\"destination\":{\"host\":\"mockb\",\"subset\":\"version-base\"}}]}]}}\n"}}'
  creationTimestamp: "2023-09-12T07:49:16Z"
  generation: 40
  name: vs-mockb
  namespace: demo
  resourceVersion: "98677"
  uid: 7c96ee2b-96ce-48e4-ba6d-cf94171ed854
spec:
  hosts:
  - mockb
  http:
  # -- Rules automatically added by the lua script.
  - match:
    - headers:
        my-request-id:
          exact: canary
    route:
    - destination:
        host: mockb
        subset: canary
  # --
  - route:
    - destination:
        host: mockb
        subset: version-base
---
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"networking.istio.io/v1beta1","kind":"DestinationRule","metadata":{"annotations":{},"name":"dr-mocka","namespace":"demo"},"spec":{"host":"mocka","subsets":[{"labels":{"version":"base"},"name":"version-base"}],"trafficPolicy":{"loadBalancer":{"simple":"ROUND_ROBIN"}}}}
    rollouts.kruise.io/origin-spec-configuration: '{"spec":{"host":"mocka","subsets":[{"labels":{"version":"base"},"name":"version-base"}],"trafficPolicy":{"loadBalancer":{"simple":"ROUND_ROBIN"}}},"annotations":{"kubectl.kubernetes.io/last-applied-configuration":"{\"apiVersion\":\"networking.istio.io/v1beta1\",\"kind\":\"DestinationRule\",\"metadata\":{\"annotations\":{},\"name\":\"dr-mocka\",\"namespace\":\"demo\"},\"spec\":{\"host\":\"mocka\",\"subsets\":[{\"labels\":{\"version\":\"base\"},\"name\":\"version-base\"}],\"trafficPolicy\":{\"loadBalancer\":{\"simple\":\"ROUND_ROBIN\"}}}}\n"}}'
  creationTimestamp: "2023-09-12T07:49:15Z"
  generation: 12
  name: dr-mocka
  namespace: demo
  resourceVersion: "98672"
  uid: a6f49044-e889-473c-b188-edbdb8ee347f
spec:
  host: mocka
  subsets:
  - labels:
      version: base
    name: version-base
  # -- Rules automatically added by the lua script.
  - labels:
      istio.service.tag: gray
    name: canary
  # --
  trafficPolicy:
    loadBalancer:
      simple: ROUND_ROBIN
---
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"networking.istio.io/v1beta1","kind":"DestinationRule","metadata":{"annotations":{},"name":"dr-mockb","namespace":"demo"},"spec":{"host":"mockb","subsets":[{"labels":{"version":"base"},"name":"version-base"}],"trafficPolicy":{"loadBalancer":{"simple":"ROUND_ROBIN"}}}}
    rollouts.kruise.io/origin-spec-configuration: '{"spec":{"host":"mockb","subsets":[{"labels":{"version":"base"},"name":"version-base"}],"trafficPolicy":{"loadBalancer":{"simple":"ROUND_ROBIN"}}},"annotations":{"kubectl.kubernetes.io/last-applied-configuration":"{\"apiVersion\":\"networking.istio.io/v1beta1\",\"kind\":\"DestinationRule\",\"metadata\":{\"annotations\":{},\"name\":\"dr-mockb\",\"namespace\":\"demo\"},\"spec\":{\"host\":\"mockb\",\"subsets\":[{\"labels\":{\"version\":\"base\"},\"name\":\"version-base\"}],\"trafficPolicy\":{\"loadBalancer\":{\"simple\":\"ROUND_ROBIN\"}}}}\n"}}'
  creationTimestamp: "2023-09-12T07:49:15Z"
  generation: 12
  name: dr-mockb
  namespace: demo
  resourceVersion: "98678"
  uid: 4bd0f6c5-efa1-4558-9e31-6f7615c21f9a
spec:
  host: mockb
  subsets:
  - labels:
      version: base
    name: version-base
  # -- Rules automatically added by the lua script.
  - labels:
      istio.service.tag: gray
    name: canary
  # --
  trafficPolicy:
    loadBalancer:
      simple: ROUND_ROBIN
```

Run `curl http://GATEWAY_IP:PORT`. The following output is returned. All traffic passes through the base version service.

```
-> mocka(version: base, ip: 10.244.1.36)-> mockb(version: base, ip: 10.244.1.37)
```

Run `curl http://GATEWAY_IP:PORT -H 'my-request-id: canary'`. The following output is returned. All traffic passes through the canary version service.

```
-> mocka(version: canary, ip: 10.244.1.41)-> mockb(version: canary, ip: 10.244.1.42)
```

The following figure shows the traffic of the entire service. Traffic that contains the header my-request-id=canary is routed to the canary environment, and other traffic to the base environment:

We can further simplify traffic dyeing by writing an EnvoyFilter. In the preceding example, the request carries the header my-request-id, which is passed through. To implement more general rules, for example routing all traffic that contains agent=pc to the canary environment and other traffic to the base environment, you can use an EnvoyFilter to dye the traffic at the ingress gateway. The following EnvoyFilter defines a Lua script that adds my-request-id=canary to traffic with agent=pc and adds my-request-id=base to all other traffic.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: http-request-labelling-according-source
  namespace: istio-system
spec:
  workloadSelector:
    labels:
      app: istio-ingressgateway
  configPatches:
  - applyTo: HTTP_FILTER
    match:
      context: GATEWAY
      listener:
        filterChain:
          filter:
            name: "envoy.filters.network.http_connection_manager"
            subFilter:
              name: "envoy.filters.http.router"
    patch:
      operation: INSERT_BEFORE
      value:
        name: envoy.lua
        typed_config:
          "@type": "type.googleapis.com/envoy.extensions.filters.http.lua.v3.Lua"
          inlineCode: |
            function envoy_on_request(request_handle)
              local header = "agent"
              headers = request_handle:headers()
              version = headers:get(header)
              if (version ~= nil) then
                if (version == "pc") then
                  headers:add("my-request-id","canary")
                else
                  headers:add("my-request-id","base")
                end
              else
                headers:add("my-request-id","base")
              end
            end
```

With other configurations unchanged, run `curl http://GATEWAY_IP:PORT`. The following output is returned. All traffic passes through the base version service.

-> mocka(version: base, ip: 10.244.1.36)-> mockb(version: base, ip: 10.244.1.37)Run curl -H http://GATEWAY_IP:PORT -Hagent:pc. The following output is returned. EnvoyFilter automatically adds headermy-request-id=canary for the traffic, thus all traffic passes through the new version of the service.

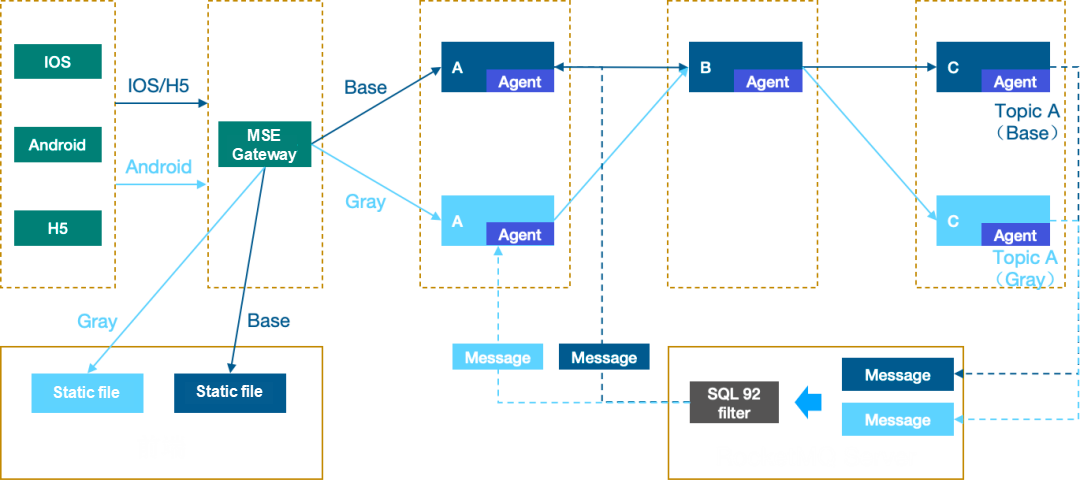

-> mocka(version: canary, ip: 10.244.1.41)-> mockb(version: canary, ip: 10.244.1.42)In addition to the open source Istio, Kruise Rollout also supports a complete end-to-end canary release solution with MSE. You can perform the following operations[2] to quickly implement a systematic end-to-end canary release.

The end-to-end canary release of MSE can effectively control the traffic flow across front-end, gateway, back-end microservices, and other components. Apart from RPC/HTTP traffic, asynchronous calls like MQ traffic also comply with end-to-end "traffic lane" call rules. You can use Kruise Rollout and MSE to easily implement end-to-end canary release of microservices and improve the efficiency and stability of microservice release.

[1] Kruise Rollout: https://openkruise.io/rollouts/introduction

[2] Operation document: https://www.alibabacloud.com/help/en/mse/user-guide/implement-mse-based-end-to-end-canary-release-by-using-kruise-rollouts
