By Wangde, a Senior Database Development Engineer at Alibaba Cloud

First, let's understand what a transaction really means. To implement complex semantics, databases allow users to flexibly perform add, delete, modify, and query operations. In many scenarios, a set of operations must take effect as a group, and this group is called a transaction. A transaction is the basic unit of database state changes, and it contains one or more operations (such as multiple SQL statements).

A typical transfer transaction involves three operations:

1) Check whether the balance of account A is sufficient.

2) If sufficient, 100 RMB is deducted from account A.

3) 100 RMB is added to account B.

Either all or none of these operations take effect. If an error occurs during transaction execution, all operations that have been performed are withdrawn. This is called the atomicity of transactions.

If some operations cannot be withdrawn after a failure occurs, the database enters an incorrect state. For example, suppose an error occurs after 100 RMB has been deducted from account A but before the balance of account B increases. If the deduction cannot be withdrawn, 100 RMB is lost. Atomicity can be achieved by logging the values before changes (undo logging) and restoring them on failure. Some databases instead cache transaction operations locally and simply discard the cached operations if a failure occurs.
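The logging approach can be illustrated with a minimal in-memory sketch of the transfer transaction. The `transfer` function, the `fail_before_credit` switch, and the dictionary of accounts are hypothetical names used only for illustration; a real database keeps its undo log on disk.

```python
class InsufficientFunds(Exception):
    pass

def transfer(accounts, src, dst, amount, fail_before_credit=False):
    """Move `amount` from src to dst atomically using an in-memory undo log."""
    undo_log = []  # list of (key, value before the change)
    try:
        # 1) Check whether the balance is sufficient.
        if accounts[src] < amount:
            raise InsufficientFunds(src)
        # 2) Deduct from the source, logging the old value first.
        undo_log.append((src, accounts[src]))
        accounts[src] -= amount
        if fail_before_credit:
            raise RuntimeError("simulated failure between the two updates")
        # 3) Credit the destination, again logging the old value first.
        undo_log.append((dst, accounts[dst]))
        accounts[dst] += amount
        return True
    except Exception:
        # Roll back in reverse order so that every change is withdrawn.
        for key, old_value in reversed(undo_log):
            accounts[key] = old_value
        return False

accounts = {"A": 150, "B": 20}
ok = transfer(accounts, "A", "B", 100)
failed = transfer(accounts, "A", "B", 30, fail_before_credit=True)
```

The second call fails after the deduction, and the undo log restores account A, so no money is lost.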

After the transaction is committed, the result cannot be changed. Even if the system breaks down, the database state after restart is the same as it was before the downtime. This is called the persistence (more commonly, durability) of transactions.

As long as data is stored on non-volatile storage media, downtime will not cause data loss. Therefore, the following methods are used to ensure data persistence:

1) Ensure that all changes are stored on disk before the transaction is complete.

2) Before the transaction is committed, store the transaction changes on disk as logs, and recover the in-memory state of the database system from the logs during restart.

Generally, the second method is used.
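The second method can be sketched as follows. This is a simplified illustration, not a real storage engine: the `log` list stands in for an append-only log file that is flushed to disk on commit, and the record layout and the `commit` and `recover` helpers are assumptions made for the example.

```python
import json

def commit(log_lines, txn_id, changes):
    """Append the changes and a commit marker to the log before acknowledging."""
    for key, value in changes.items():
        log_lines.append(json.dumps({"txn": txn_id, "key": key, "value": value}))
    log_lines.append(json.dumps({"txn": txn_id, "commit": True}))

def recover(log_lines):
    """Rebuild the in-memory state by replaying only committed transactions."""
    records = [json.loads(line) for line in log_lines]
    committed = {r["txn"] for r in records if r.get("commit")}
    state = {}
    for r in records:
        if "key" in r and r["txn"] in committed:
            state[r["key"]] = r["value"]
    return state

log = []  # stands in for an append-only file that is flushed on commit
commit(log, 1, {"A": 50, "B": 120})
# Transaction 2 wrote a change record but failed before its commit marker.
log.append(json.dumps({"txn": 2, "key": "A", "value": 0}))
state_after_restart = recover(log)
```

Because transaction 2 never logged a commit marker, its change is ignored during recovery.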

Databases allow concurrent execution of transactions to improve resource utilization and transaction execution efficiency and to reduce response time (RT). However, conflicts are inevitable if multiple transactions operate on the same object at the same time. Also, the intermediate state of a transaction may be exposed to other transactions. As a result, some transactions write incorrect values to the database based on the intermediate state of other transactions. A mechanism is required to ensure that concurrent transactions do not affect one another, so that each transaction appears to be the only one being executed. This is called the isolation of transactions.

Isolation lets users focus on the logic of a single transaction without considering the impact of concurrent transaction execution. The concurrency control mechanism is used to ensure database isolation. Because strict isolation places strong requirements on the execution sequence of transactions, many databases offer several options that represent different trade-offs between performance and isolation. These options are the transaction isolation levels.

Databases reflect the real world, so they must impose the restrictions we face in the real world. For example, whatever transfers are made between accounts, the total balance cannot change; age cannot be negative; and genders can only include male, female, and transgender. These constraints cannot be omitted during transaction execution in order to ensure that a transaction changes from one correct status to another correct status. This is called the consistency of transactions.

Unlike the atomicity, persistence, and isolation of transactions, all of which are guaranteed by database implementation, consistency depends on database implementation (atomicity, persistence, and isolation ensure consistency) as well as on the transaction logic written in the application.

The simplest way to ensure transaction isolation is to execute all transactions in sequence so that they do not interfere with each other. However, the efficiency of serial execution is very low. To increase throughput and reduce RT, a database usually allows multiple transactions to run simultaneously. Therefore, to meet the isolation requirements, the concurrency control module needs to ensure serializability: the effect of concurrent execution must be the same as that of some serial execution.

To simply describe how the concurrency control module ensures isolation, we use a simplified transaction model here. A transaction is composed of one or more operations, and each operation is split into a series of read and write operations. A sequence for executing all the read and write operations in a batch of concurrent transactions is defined as a schedule.

For example, when T1 and T2 are executed at the same time, the schedule may be as follows:

T1.read(A),T2.read(B),T1.write(A),T1.read(B),T2.write(A)

If the schedule for concurrent transaction execution is equivalent to a schedule for serial execution, serializability is achieved. If a schedule can be turned into a serial schedule by repeatedly swapping the order of adjacent read and write operations, it is a serializable schedule. However, some swaps may lead to different transaction execution results. If swapping two adjacent operations in a schedule changes the transaction execution result, the two operations conflict. If two operations conflict, they must:

1) Belong to different transactions.

2) Operate on the same object.

3) Include at least one write operation.

Therefore, common conflicts include:

1) Read-write conflicts.

2) Write-read conflicts.

3) Write-write conflicts.

As long as the schedule of concurrent transactions can be turned into a serial schedule by changing only the execution sequence of non-conflicting operations, while the execution sequence of conflicting operations remains unchanged, the two schedules are considered equivalent.

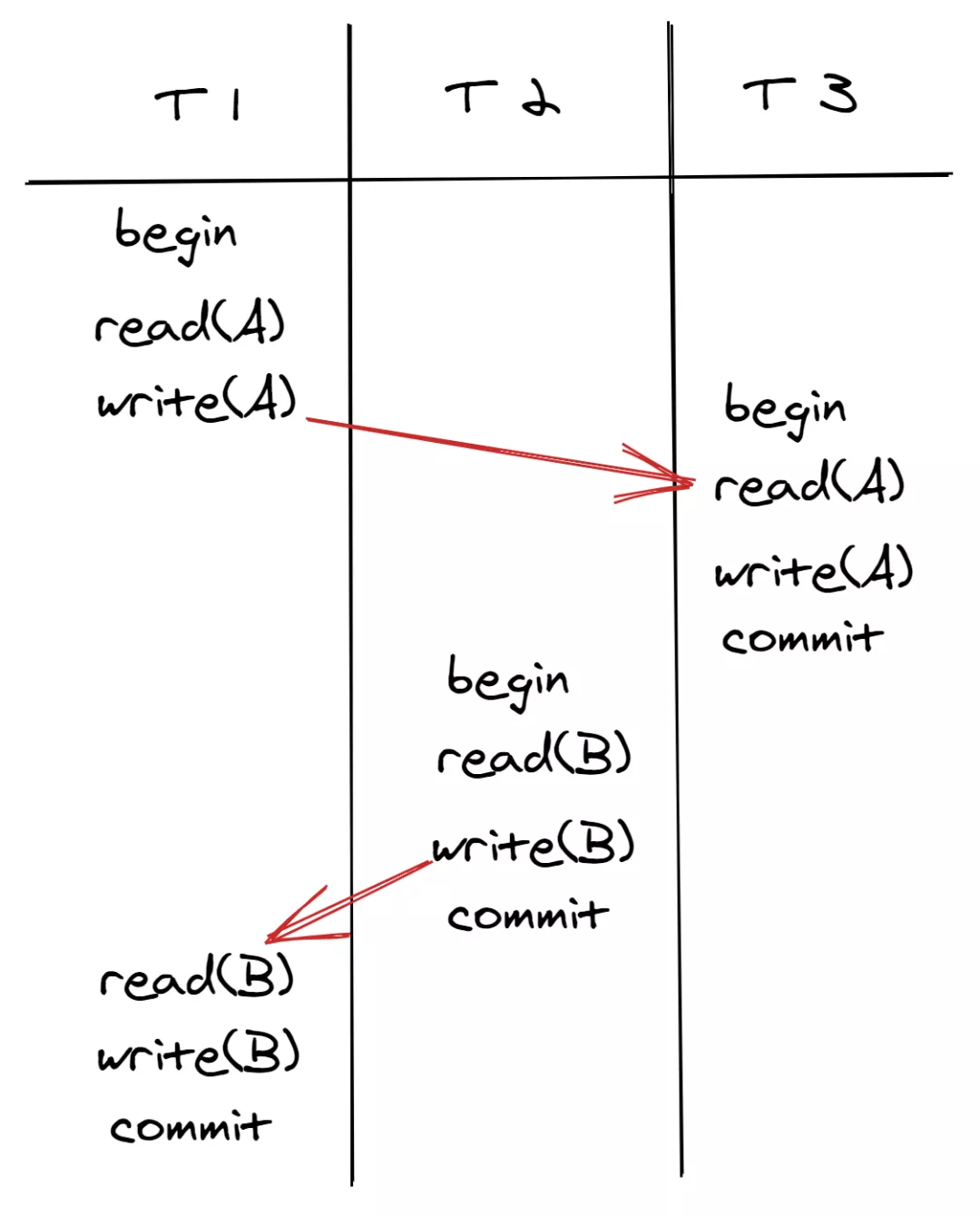

This equivalence judgment method is called conflict equivalence. Two schedules are conflict equivalent when the conflicting operations in both are executed in the same sequence. As shown in the following figures, T1 write(A) conflicts with T3 read(A), and T1 is executed before T3; T1 read(B) conflicts with T2 write(B), and T2 is executed before T1. Therefore, the schedule in the first figure below is equivalent to the serial schedule (in the sequence of T2, T1, and T3) in the second figure below. The execution sequence in the first figure below meets the requirements of conflict serializability.

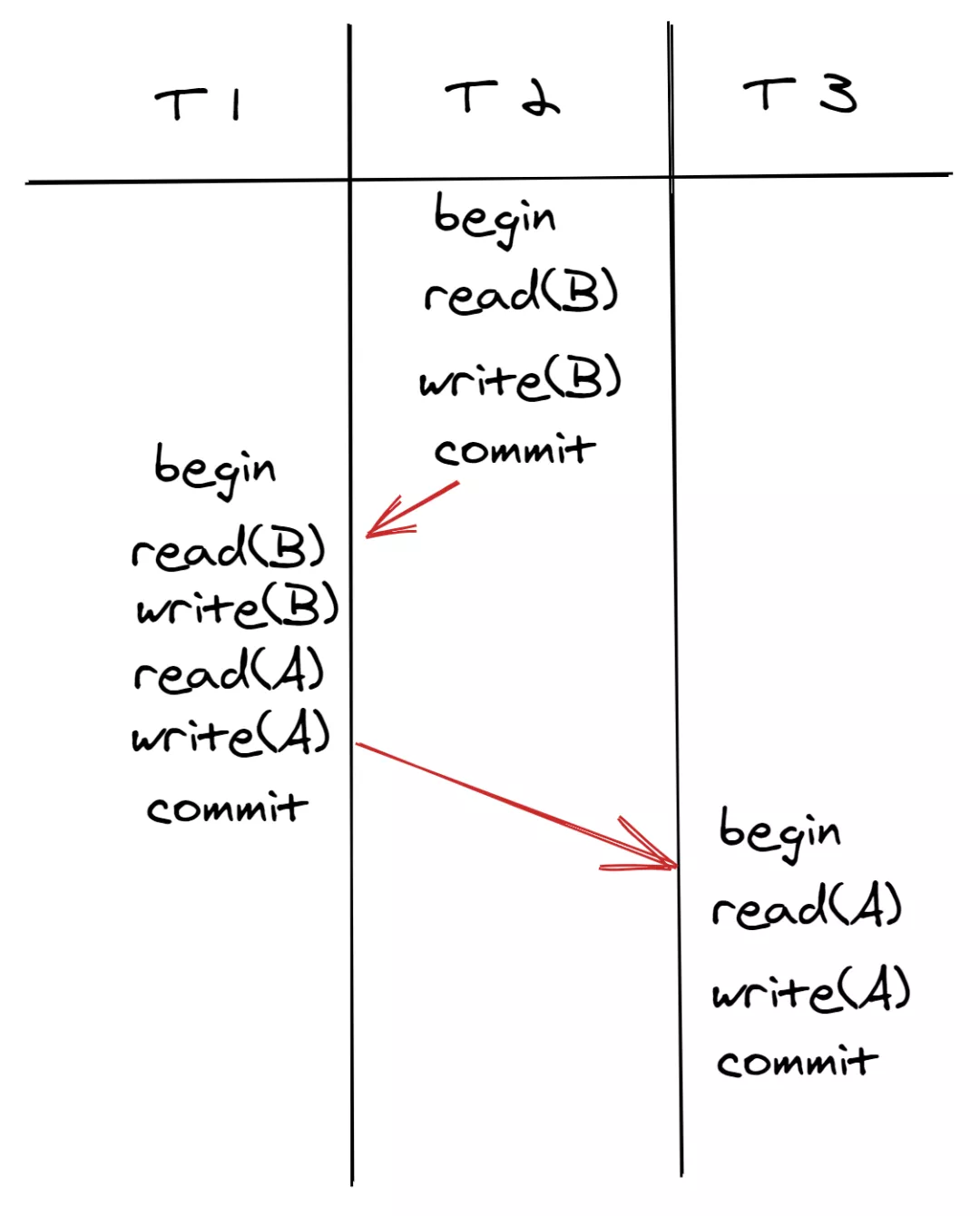

For another example, assume T1 read(A) conflicts with T2 write(A), and T1 is executed before T2; T2 write(A) conflicts with T1 write(A), and T2 is executed before T1. The schedule in the following figure is not equivalent to any serial schedule. Its execution sequence does not meet the requirements of conflict serializability and would result in a lost update.
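Conflict serializability can be checked mechanically. The sketch below uses a common textbook technique, the precedence graph, which is not described in this article: each pair of conflicting operations adds an edge from the earlier transaction to the later one, and the schedule is conflict serializable when the graph has no cycle. The tuple encoding `(transaction, "r" or "w", object)` and the function names are assumptions made for the example.

```python
from itertools import combinations

def conflicts(op1, op2):
    """Two operations conflict if they come from different transactions,
    touch the same object, and at least one of them is a write."""
    (t1, kind1, obj1), (t2, kind2, obj2) = op1, op2
    return t1 != t2 and obj1 == obj2 and "w" in (kind1, kind2)

def precedence_edges(schedule):
    """Edge (Ti, Tj) means a conflicting operation of Ti precedes one of Tj."""
    return {(a[0], b[0]) for a, b in combinations(schedule, 2) if conflicts(a, b)}

def is_conflict_serializable(schedule):
    """A schedule is conflict serializable if its precedence graph is acyclic."""
    edges = precedence_edges(schedule)
    nodes = {op[0] for op in schedule}
    # Repeatedly remove transactions that no remaining transaction must precede.
    while nodes:
        free = {n for n in nodes
                if not any(dst == n and src in nodes for src, dst in edges)}
        if not free:
            return False  # every remaining transaction is stuck behind a cycle
        nodes -= free
    return True

# First example: T2 before T1 (on B) and T1 before T3 (on A).
first = [("T2", "w", "B"), ("T1", "r", "B"), ("T1", "w", "A"), ("T3", "r", "A")]
# Second example: T1 before T2 and T2 before T1 on the same object A.
second = [("T1", "r", "A"), ("T2", "w", "A"), ("T1", "w", "A")]
first_ok = is_conflict_serializable(first)
second_ok = is_conflict_serializable(second)
```

The first schedule yields the order T2, T1, T3, while the second produces a cycle between T1 and T2 and is rejected.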

In general, serializability requirements are high. To improve the concurrency performance of the database system, many users are willing to reduce isolation requirements to achieve better performance. A database system provides multiple isolation levels. Now that we understand the requirements of concurrency control, we must find a way to meet them. The following section describes common methods of concurrency control based on conflict detection results.

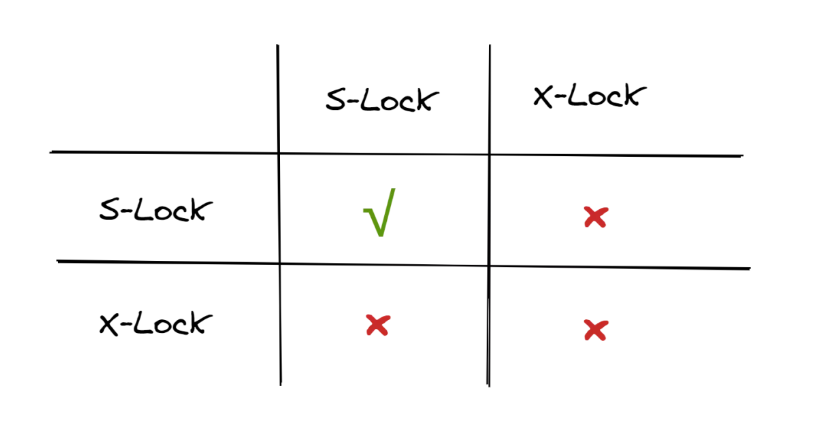

The easiest way to ensure that operations are performed in the correct order is to lock the access object. The lock manager of the database system acquires and releases locks on access objects, ensuring that only transactions holding the appropriate locks operate on the corresponding objects. Locks are divided into S-Locks and X-Locks. S-Locks are shared locks for read requests, and X-Locks are exclusive locks for write requests. Only read requests are compatible with each other, so only reads can be executed on the same object at the same time. Read-write and write-write operations are executed in sequence due to lock conflicts.

Two-phase locking (2PL) is the most common lock-based concurrency control protocol in databases. It consists of two phases:

Phase 1 - Growing: A transaction requests all the locks it needs from the lock manager (locking may fail).

Phase 2 - Shrinking: The transaction releases the locks obtained in the Growing phase and cannot request new locks.

Why are locking and lock releasing implemented in two phases?

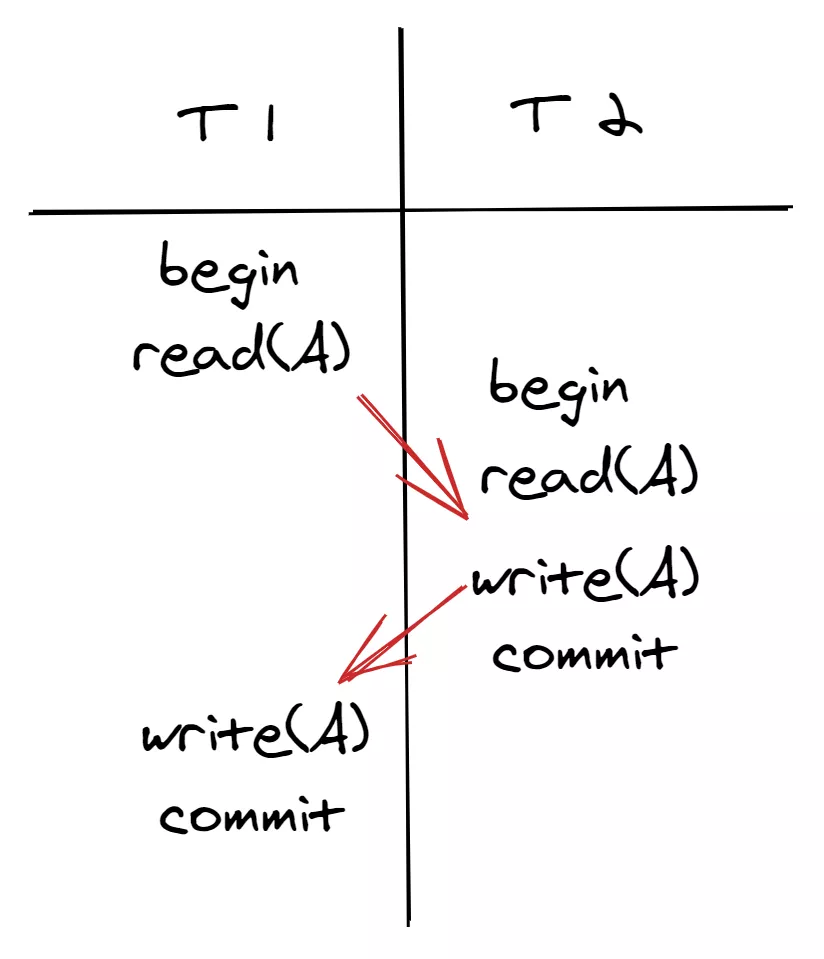

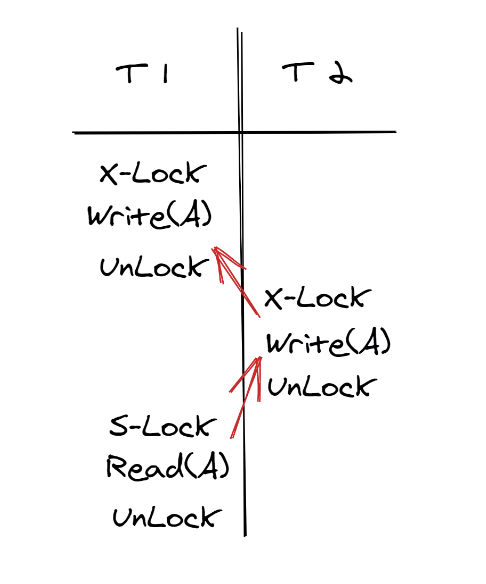

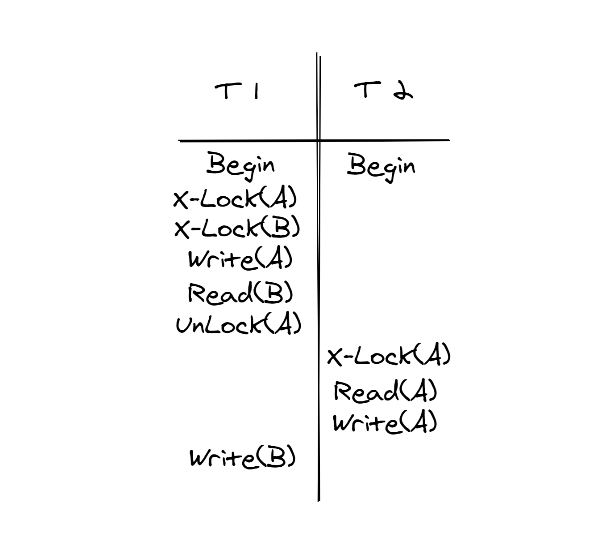

2PL-based concurrency control aims to achieve serializability. If a transaction does not acquire all the required locks before it starts releasing them, and can instead request locks again after releasing some, an object may be modified by other transactions in the interval between two operations on the object (as shown in the following figure). In this case, conflict serializability cannot be achieved, and inconsistencies may occur. The following is an example of a lost update.

2PL ensures conflict serializability because a transaction must obtain all the locks it needs before it releases any of them. For example, if transaction A, which is currently being executed, conflicts with transaction B, the conflicting operations of transaction B must either have already been executed or be waiting to be executed. Therefore, the execution sequence of these conflicting operations is consistent with that of the conflicting operations when transactions A and B are executed in series (either as BA or AB).
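The two-phase rule itself is small enough to sketch. The hypothetical `Transaction` class below only enforces the phase discipline (no new lock after the first release); it deliberately omits the lock manager, lock modes, and waiting.

```python
class TwoPhaseViolation(Exception):
    pass

class Transaction:
    """Tracks the locks of one transaction and enforces the two phases."""

    def __init__(self, name):
        self.name = name
        self.held = set()
        self.shrinking = False

    def lock(self, obj):
        # Growing phase only: locking is forbidden once any lock was released.
        if self.shrinking:
            raise TwoPhaseViolation(
                f"{self.name} cannot lock {obj} after releasing a lock")
        self.held.add(obj)

    def unlock(self, obj):
        # The first release moves the transaction into the Shrinking phase.
        self.shrinking = True
        self.held.discard(obj)

t = Transaction("T1")
t.lock("A")
t.lock("B")      # still in the Growing phase
t.unlock("A")    # the Shrinking phase begins
try:
    t.lock("C")
    violated = False
except TwoPhaseViolation:
    violated = True
```

Rejecting the late request for C is what prevents another transaction from modifying an object between two operations of T1.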

Is 2PL alone enough to guarantee transaction consistency and isolation? Let's take a look at the example in the following figure.

The execution sequence in the figure complies with 2PL, but T2 reads uncommitted data. If T1 rolls back at this time, it triggers a cascading rollback (the changes of T1 must not be visible to any transaction). Therefore, databases often use strong strict two-phase locking (SS2PL). Unlike 2PL, SS2PL releases locks in the Shrinking phase only after the transaction has finished executing, which prevents uncommitted data from being read.

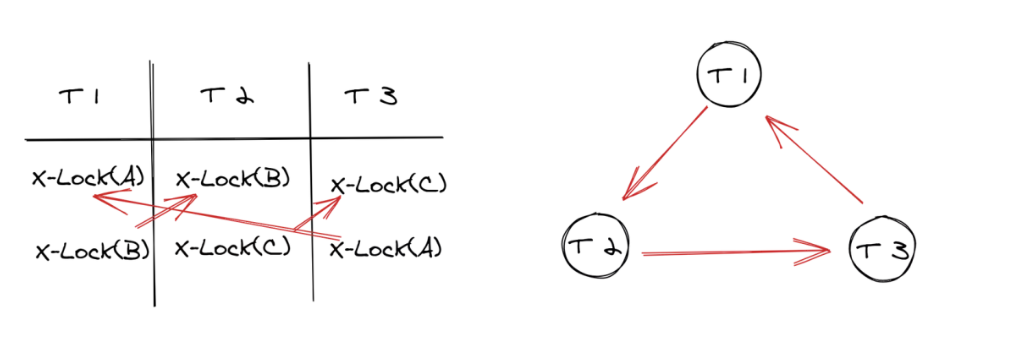

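Deadlocks may occur when concurrent transactions acquire and release locks. For example, a deadlock occurs when transaction 1 holds lock A and is waiting for lock B, while transaction 2 holds lock B and is waiting for lock A. Currently, two solutions are available for deadlocks:

1) Deadlock detection: the database system tracks which transactions are waiting for which, and when it finds a cycle of waiting transactions, it aborts one of them to break the cycle.

2) Deadlock prevention: when a transaction requests a lock that is already held, the database system aborts one of the two transactions instead of allowing a wait that could lead to a deadlock.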
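One widely used way to find the kind of deadlock described above is to look for a cycle in a waits-for graph. The sketch below is a generic depth-first search, not the algorithm of any particular database; the `find_deadlock` name and the dictionary encoding of the graph are assumptions made for the example.

```python
def find_deadlock(waits_for):
    """Return a list of transactions that form a waiting cycle, or None.
    `waits_for` maps a transaction to the set of transactions it waits on."""
    visiting, done = [], set()

    def dfs(txn):
        if txn in done:
            return None
        if txn in visiting:
            # Found a transaction already on the current path: a cycle.
            return visiting[visiting.index(txn):]
        visiting.append(txn)
        for other in sorted(waits_for.get(txn, ())):
            cycle = dfs(other)
            if cycle:
                return cycle
        visiting.pop()
        done.add(txn)
        return None

    for txn in sorted(waits_for):
        cycle = dfs(txn)
        if cycle:
            return cycle
    return None

# Transaction 1 waits for transaction 2, which waits for transaction 1.
deadlock = find_deadlock({"T1": {"T2"}, "T2": {"T1"}})
no_deadlock = find_deadlock({"T1": {"T2"}, "T2": set()})
```

Once a cycle is found, the system can abort one of the transactions in it so that the others can proceed.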

Locks are used for protecting access objects, such as attributes, tuples, pages, and tables, in databases. Accordingly, locks are divided into row locks, page locks, and table locks. Locks on attributes and smaller objects are not implemented in practice, because in an online transaction processing (OLTP) database the minimum operating unit is a row. The fewer locks a transaction needs to acquire, the better.

If there are only row locks, a transaction needs to acquire 100 million row locks to update 100 million records, which consumes a large amount of memory. With a table lock, a single lock may be enough for the same update.

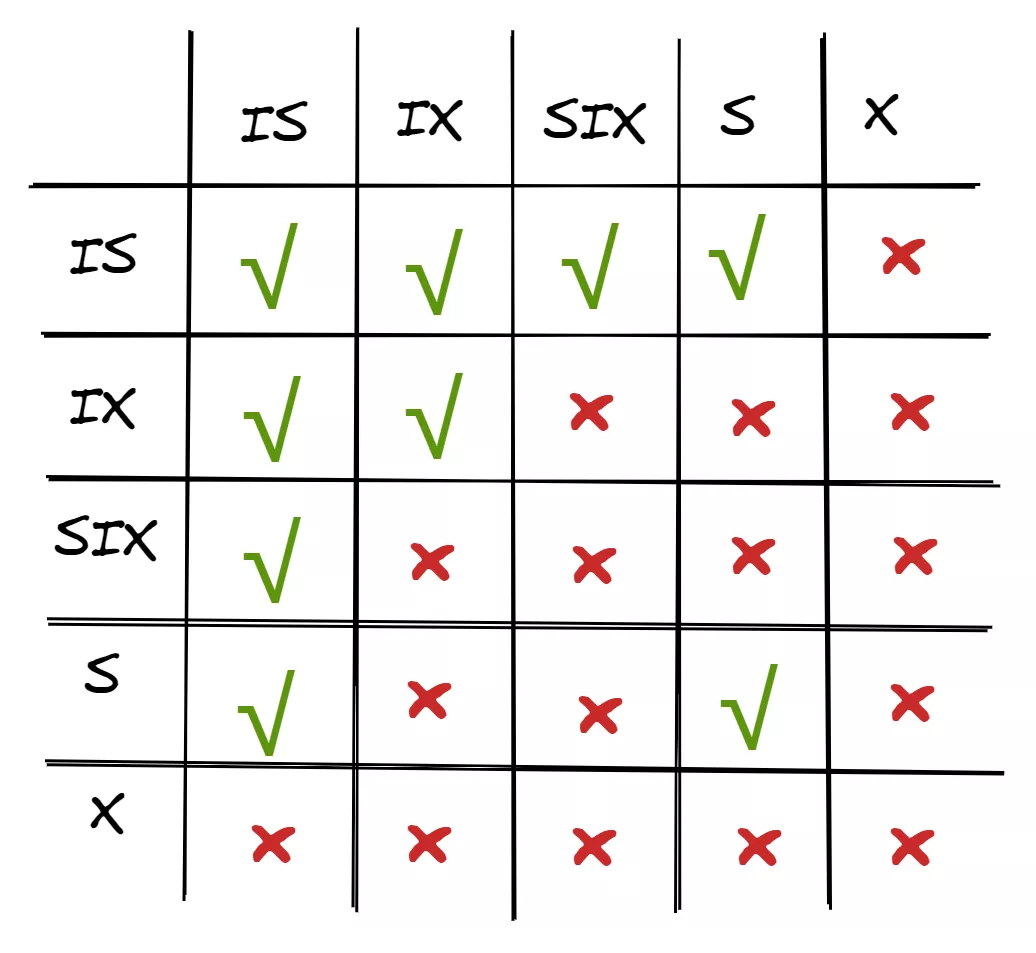

A higher-level lock, such as a table lock, effectively reduces resource usage and the number of lock checks but severely limits concurrency. A lower-level lock, such as a row lock, facilitates concurrent execution. However, a large number of lock checks are required for a large number of request objects. The database system introduces intention locks to solve the concurrency limit problems of high-level locks.

The following figure shows the compatibility relationship between intention locks and ordinary locks.

When a table holds an IX-Lock, some rows in the table are being modified.

1) To initiate a data definition language (DDL) operation on the table, the X-Lock of the table needs to be requested. If the table holds the IX-Lock, the transaction immediately waits without checking row locks row by row. This effectively reduces the check overheads.

2) The read and write requests from other transactions are not blocked because an IX-Lock rather than an X-Lock is added to the table (add an IX-Lock to the table and then an S- or X-Lock to the record). If the transaction does not involve any rows with the X-Lock, the transaction is executed normally, which increases the concurrency of the system.
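The two cases above follow from the standard textbook compatibility matrix for intention and ordinary locks, sketched below as a lookup table. The matrix covers only IS, IX, S, and X (other modes such as SIX are omitted), and the `can_grant` helper is a hypothetical name used for illustration.

```python
# For each held mode, the set of modes that other transactions may still acquire.
COMPATIBLE = {
    "IS": {"IS", "IX", "S"},
    "IX": {"IS", "IX"},
    "S":  {"IS", "S"},
    "X":  set(),
}

def can_grant(requested, held_modes):
    """A table-level request is granted only if it is compatible with every held lock."""
    return all(requested in COMPATIBLE[held] for held in held_modes)

# Another transaction already holds IX on the table (it is modifying some rows).
ddl_allowed = can_grant("X", ["IX"])            # DDL must wait
other_writer_allowed = can_grant("IX", ["IX"])  # row-level writers can proceed
table_scan_allowed = can_grant("S", ["IX"])     # a table-level S-Lock must wait
```

The DDL request is rejected by checking a single table-level entry, without inspecting any row lock.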

Each transaction is assigned a timestamp to determine the transaction execution sequence. If the timestamp of transaction 1 is earlier than that of transaction 2, the database system must ensure that transaction 1 is executed before transaction 2. Timestamps can be assigned to transactions by a physical clock, logical clock, or hybrid clock.

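For T/O-based concurrency control, read and write operations do not need to be locked. Instead, each record stores the timestamps of the last transactions that modified and read it. When the timestamp of a transaction is earlier than the recorded timestamp, the transaction must be aborted and then re-executed because future data cannot be read. Assume that WTS(X) and RTS(X) are the write and read timestamps on record X, and the timestamp of the transaction is TTS. Visibility judgment is as follows:

Read: if TTS < WTS(X), the transaction is aborted and restarted with a new timestamp. Otherwise, the read is allowed, and RTS(X) is set to max(TTS, RTS(X)).

Write: if TTS < WTS(X) or TTS < RTS(X), the transaction is aborted and restarted. Otherwise, the write is allowed, and WTS(X) is set to TTS.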

TTS must not be less than RTS(X), which prevents read-write conflicts. Suppose a read request with timestamp RTS has read record X, so RTS(X) is set to RTS. If a transaction whose TTS is less than RTS(X) were allowed to update X, the update would become visible when the reader reads the record again, which violates repeatable read. Storing the latest read and write timestamps in the record therefore ensures conflict serializability.
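The timestamp checks can be sketched in a few lines. This follows the standard textbook rules of basic T/O (abort a read when TTS < WTS(X), abort a write when TTS < RTS(X) or TTS < WTS(X)); the `Record` class, the `Abort` exception, and the function names are hypothetical.

```python
class Abort(Exception):
    pass

class Record:
    def __init__(self, value):
        self.value = value
        self.rts = 0  # timestamp of the latest reader
        self.wts = 0  # timestamp of the latest writer

def read(record, tts):
    if tts < record.wts:
        raise Abort("record was written by a later transaction")
    record.rts = max(record.rts, tts)
    return record.value

def write(record, tts, value):
    if tts < record.rts or tts < record.wts:
        raise Abort("record was read or written by a later transaction")
    record.value = value
    record.wts = tts

x = Record(10)
seen = read(x, tts=2)            # allowed, RTS(X) becomes 2
try:
    write(x, tts=1, value=99)    # TTS 1 < RTS(X) 2, so the writer aborts
    early_write_aborted = False
except Abort:
    early_write_aborted = True
write(x, tts=3, value=20)        # allowed, WTS(X) becomes 3
```

The aborted writer would be restarted with a new, larger timestamp.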

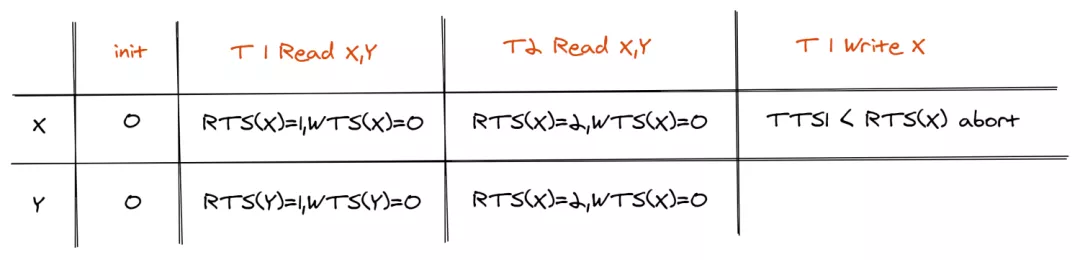

This method also avoids write skew. For example, assume that in the initial state, there are records X and Y such that: X = -3, Y = 5, X + Y > 0, and RTS(X) = RTS(Y) = WTS(X) = WTS(Y) = 0. The TTS1 timestamp of transaction T1 is 1, and the TTS2 timestamp of transaction T2 is 2.

This method has the following defects:

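When a transaction is being executed, it maintains its own write operations locally (in contrast, basic T/O writes data to the database during transaction execution) along with the corresponding RTS and WTS timestamps. Before the transaction is committed, it determines whether its updates conflict with data in the database. If not, it writes the updates to the database. Optimistic concurrency control (OCC) is divided into three phases:

1) Read and write phase: the transaction reads data and buffers its writes locally.

2) Validation phase: the transaction checks whether it conflicts with other transactions.

3) Write phase: if validation succeeds, the buffered writes are written to the database and the transaction is committed.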

After the read and write phase, the transaction enters the validation phase, indicating that transaction preparation is completed and the transaction is about to be committed. The time when the transaction enters the validation phase is used as the timestamp of the record row for ordering. The transaction start time is not used because transaction execution may last for a long period of time, and other transactions that start later may be committed first. Ordering by start time would therefore increase the probability of transaction conflicts: when a transaction with a smaller timestamp tries to write to the database later, it is aborted.

Assume that there are only two transactions, T1 and T2, that they modify the same rows of data, and that the timestamp of T1 is less than that of T2 (T1 is executed before T2).

1) T1 is in the validation phase, while T2 is still in the read and write phase. In this case, data can be committed as long as the read and write operations of T1 and T2 do not conflict with each other.

2) After the validation phase, T1 enters the write phase and stays in it until it is committed. After that, the commit is irreversible. The read and write operations of T2 before T1 enters the write phase certainly do not conflict with the operations of T1 (because T1 validation succeeded). The read and write operations of T2 after T1 enters the write phase may conflict with the T1 operations to be committed. Therefore, these operations must be checked when T2 enters the validation phase.
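The read, validate, and write flow can be illustrated with a simplified single-threaded sketch. It uses backward validation with a commit counter rather than the per-record timestamps described above, and it treats validation and the write phase as one indivisible step; the `OccStore` class and its methods are hypothetical.

```python
class OccStore:
    def __init__(self, data=None):
        self.data = dict(data or {})
        self.committed = []   # list of (commit number, keys written)
        self.commit_no = 0

    def begin(self):
        # Remember which commits were already visible when the transaction started.
        return {"start": self.commit_no, "reads": set(), "writes": {}}

    def read(self, txn, key):
        txn["reads"].add(key)
        # A transaction sees its own buffered writes first.
        return txn["writes"].get(key, self.data.get(key))

    def write(self, txn, key, value):
        txn["writes"][key] = value  # buffered locally, not yet in the database

    def commit(self, txn):
        # Validation: fail if a transaction that committed after we started
        # wrote something that we read.
        for commit_no, keys in self.committed:
            if commit_no > txn["start"] and keys & txn["reads"]:
                return False
        # Write phase: publish the buffered writes.
        self.commit_no += 1
        self.data.update(txn["writes"])
        self.committed.append((self.commit_no, set(txn["writes"])))
        return True

store = OccStore({"x": 1})
t1, t2 = store.begin(), store.begin()
store.write(t1, "x", store.read(t1, "x") + 1)
store.write(t2, "x", store.read(t2, "x") + 2)
t1_committed = store.commit(t1)
t2_committed = store.commit(t2)  # fails validation: T1 overwrote what T2 read
```

T2 would be re-executed from the beginning, this time reading the value written by T1.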

The database maintains multiple physical versions of a record. During data writing in a transaction, a new version of the written data is created. Read requests obtain the latest version of data visible to the snapshot taken at the beginning of the transaction or statement. When this method is used, writing does not block reading, and reading does not block writing. Read requests never fail (as they can in single-version T/O) or wait (as they can in single-version 2PL) because of such conflicts. In databases, read requests generally outnumber write requests. Therefore, almost all mainstream databases use this optimization technology.
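A minimal sketch of snapshot reads over a version chain is shown below. It assumes that every write commits immediately and that a snapshot is just the value of a logical clock; the `VersionedStore` class is a hypothetical illustration and omits transactions, locking, and garbage collection.

```python
class VersionedStore:
    def __init__(self):
        self.versions = {}  # key -> list of (commit timestamp, value), oldest first
        self.clock = 0

    def write(self, key, value):
        """Commit a new version; older versions remain for existing snapshots."""
        self.clock += 1
        self.versions.setdefault(key, []).append((self.clock, value))
        return self.clock

    def snapshot(self):
        """A snapshot is the logical time at which a reader starts."""
        return self.clock

    def read(self, key, snapshot_ts):
        """Return the newest version committed at or before the snapshot."""
        for commit_ts, value in reversed(self.versions.get(key, [])):
            if commit_ts <= snapshot_ts:
                return value
        return None

store = VersionedStore()
store.write("x", 1)
old_snapshot = store.snapshot()
store.write("x", 2)                           # does not block the older reader
old_view = store.read("x", old_snapshot)
new_view = store.read("x", store.snapshot())
```

The reader that started before the second write keeps seeing the old version, so neither side has to wait for the other.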

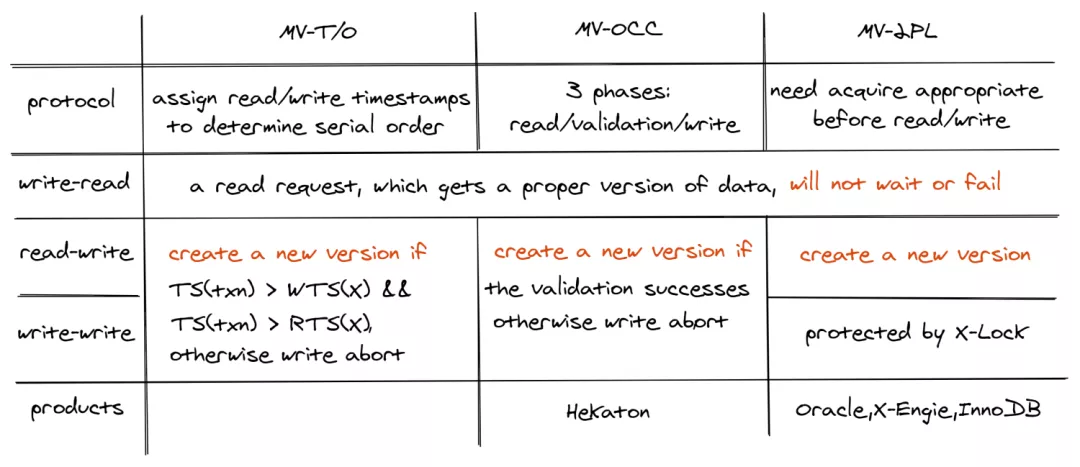

Multiversion concurrency control (MVCC) is an optimization technique for read and write requests. It does not completely solve the concurrency problem of databases, so it must be combined with one of the preceding concurrency control techniques for complete concurrency control. Common combinations include multiversion two-phase locking (MV2PL), multiversion timestamp ordering (MVTO), and multiversion optimistic concurrency control (MVOCC).

When MVCC is used, the storage of multiversion data and the recycling of redundant multiversion data must be considered.

Multiversion data is stored in two modes:

1) In the append-only mode, the data of old and new versions are stored in the same tablespace, for example, a storage engine based on Log-Structured Merge-Tree (LSM Tree).

2) The primary tablespace records data of the latest version, and pre-images are recorded in other tablespaces or data segments. For example, the multiversion information of InnoDB is recorded in undo logs.

The recycling of multiversion data is also called garbage collection (GC). Earlier-version records that can no longer be read by any read request should be deleted promptly.

This article introduces the lock-based (conflict prevention before the transaction starts), T/O-based (conflict judgment during transaction execution), and OCC-based (conflict validation before the transaction is committed) concurrency control mechanisms, which are classified by when conflicts are handled.

Different implementation methods are suitable for different workloads. A more optimistic concurrency control method is suitable for workloads with fewer concurrency conflicts. MVCC can remove the interference between read-only and read-write transactions and improve the read concurrency of transactions. Therefore, most mainstream database systems use it.
