By Qingshan Lin (Longji)

The development of message middleware has spanned over 30 years, from the emergence of the first generation of open-source message queues to the explosive growth of PC Internet, mobile Internet, and now IoT, cloud computing, and cloud-native technologies.

As digital transformation deepens, customers often encounter cross-scenario applications when using message technology, such as processing IoT messages and microservice messages simultaneously, and performing application integration, data integration, and real-time analysis. This requires enterprises to maintain multiple message systems, resulting in higher resource costs and learning costs.

In 2022, RocketMQ 5.0 was officially released, featuring a more cloud-native architecture that covers more business scenarios compared to RocketMQ 4.0. To master the latest version of RocketMQ, you need a more systematic and in-depth understanding.

Today, Qingshan Lin, who is in charge of Alibaba Cloud's messaging product line and an Apache RocketMQ PMC Member, will provide an in-depth analysis of RocketMQ 5.0's core principles and share best practices in different scenarios.

Today, we're going to explore the fundamentals of Message Queue for Apache RocketMQ 5.0. As mentioned earlier, RocketMQ 5.0 is a real-time data processing platform that integrates messages, events, and streams. We often refer to RocketMQ as the de facto standard in the business messaging field, and many Internet companies use it in business messaging scenarios, while using Kafka in big data scenarios.

Two key terms come up repeatedly here: message and business message. Both refer to the decoupling of distributed applications, which is the core scenario RocketMQ was designed for. In this course, you'll learn more about the advantages of RocketMQ 5.0 in business messaging scenarios and why it has become the de facto standard in this field.

This course is divided into three parts. The first part discusses the application decoupling scenario of business messages and what exactly is decoupled. The second part covers the basic features of RocketMQ in business messaging scenarios. Many message queues in the industry can achieve application decoupling, so the third part explains what enhancements RocketMQ has made on top of these basic features.

The classic scenario of RocketMQ in the business messaging field is application decoupling, which was also the core scenario of Alibaba e-commerce's distributed Internet architecture when RocketMQ was first developed. It mainly handles the asynchronous integration of distributed applications (microservices) to achieve application decoupling, the ultimate goal of all software architectures.

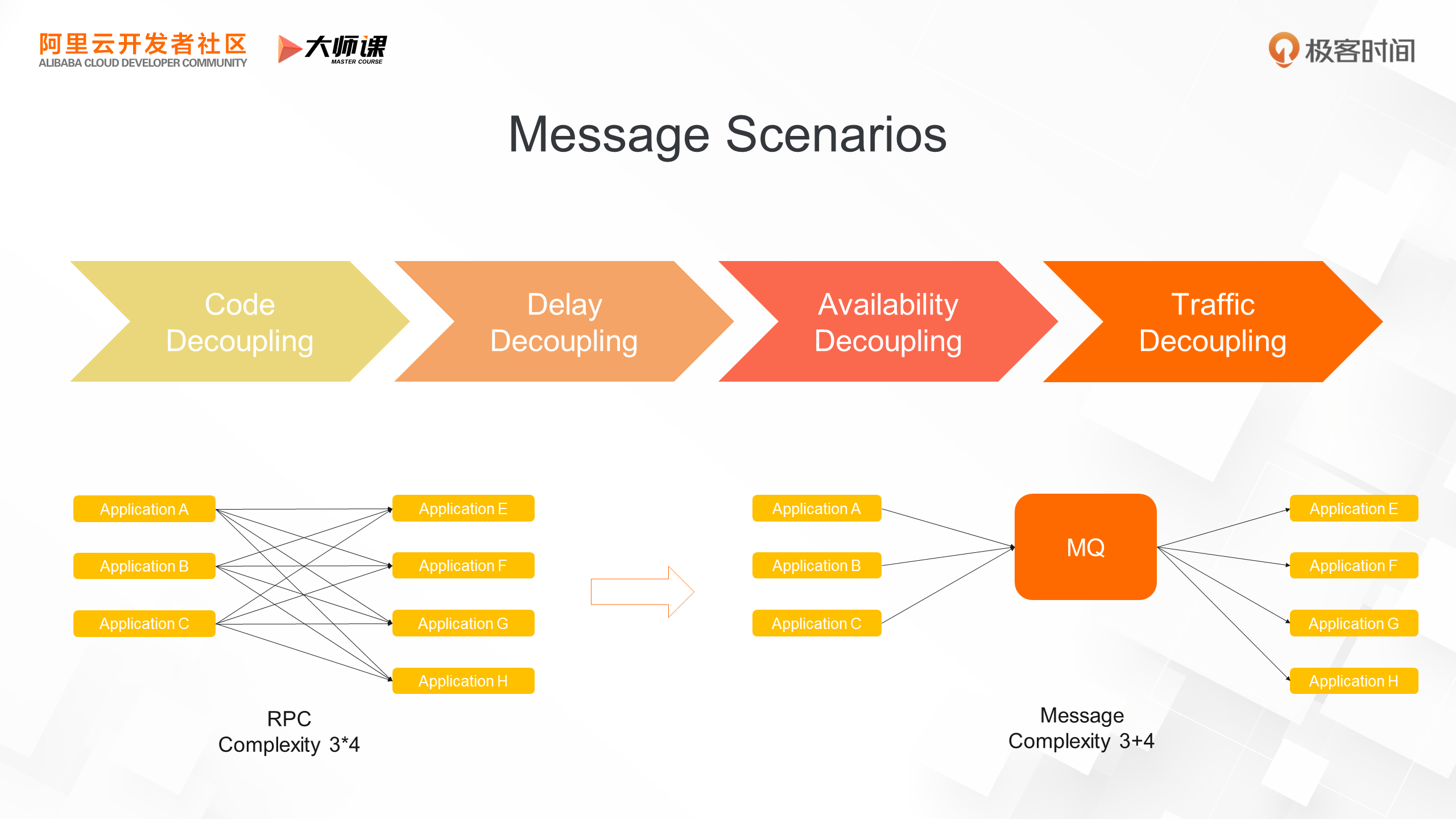

The figure compares synchronous RPC with asynchronous messaging for distributed applications (microservices). Suppose a business system has three upstream applications and four downstream applications. With synchronous RPC, each upstream application may call each downstream application directly, so the dependency complexity is 3 × 4. With asynchronous messaging, every application depends only on the message queue, so the complexity drops to 3 + 4: multiplication becomes addition. Introducing a message queue for asynchronous integration of applications brings four decoupling advantages.
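The "3 × 4 versus 3 + 4" comparison can be checked by counting dependency edges. The following is a minimal sketch; the application names are hypothetical and only the counts (three upstream, four downstream) come from the text.

```python
from itertools import product

# Hypothetical application names; only the 3-vs-4 split comes from the article.
upstream = ["trade", "refund", "cart"]
downstream = ["member", "payment", "logistics", "search"]

# Synchronous RPC: every upstream app may depend directly on every downstream app.
rpc_edges = list(product(upstream, downstream))

# Asynchronous messaging: every app depends only on the message queue.
mq_edges = [(app, "mq") for app in upstream] + [("mq", app) for app in downstream]

print(len(rpc_edges), len(mq_edges))
```

Adding a fifth downstream application adds three edges in the RPC model but only one in the messaging model.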

The first is code decoupling, which significantly improves business agility. With synchronous invocation, each time you extend the business logic, the upstream application must explicitly call the new downstream interface, which couples the code directly. This slows business iteration, because the upstream application has to be changed and released each time. With a message queue, you only need to add a downstream application that subscribes to the topic. The upstream and downstream applications are transparent to each other and can iterate independently, flexibly, and quickly.
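To make the code-decoupling point concrete, here is a toy in-process publish-subscribe sketch. `InMemoryBus`, `create_order`, and the topic name `order_created` are all hypothetical names for illustration. This is not the RocketMQ client API, and delivery here is synchronous, so it only illustrates the dependency structure, not asynchronous delivery.

```python
from collections import defaultdict

class InMemoryBus:
    """Toy stand-in for a message queue; delivery is synchronous and in-process."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, message):
        for handler in self._subscribers[topic]:
            handler(message)

bus = InMemoryBus()
points, cart_removed, shipments = [], [], []

def create_order(order_id):
    # Upstream code knows only the topic name, never the downstream apps.
    bus.publish("order_created", {"order_id": order_id})

bus.subscribe("order_created", lambda m: points.append(m["order_id"]))
bus.subscribe("order_created", lambda m: cart_removed.append(m["order_id"]))
create_order("A1")

# A new downstream app is added later; create_order is not modified.
bus.subscribe("order_created", lambda m: shipments.append(m["order_id"]))
create_order("A2")
```

The third subscriber starts receiving orders without any change or release on the upstream side.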

The second is latency decoupling. With synchronous calls, the number of remote calls behind a single user operation grows as business logic is added, so responses become slower, performance degrades, and the business cannot keep growing this way. With a message queue, no matter how many downstream services are added, the upstream application can respond to the online user after calling the message queue's send interface once, with a roughly constant latency that is typically within 5 ms.

The third is availability decoupling. With synchronous calls, if any downstream service is unavailable, the entire link fails. Availability in this structure behaves like a series circuit: it decays as the number of calls increases. Even a partial call failure leaves the system in an inconsistent state. With RocketMQ for asynchronous integration, users' business operations are available as long as the RocketMQ service is available. The RocketMQ service is provided by a Broker cluster consisting of multiple pairs of primary and secondary nodes, which ensures high availability.
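The decay of availability along a synchronous call chain can be estimated with a simple product. This is a sketch under two assumptions that are not in the original: failures are independent, and every service has the same availability (99.9% is an illustrative figure).

```python
def serial_availability(per_service: float, calls: int) -> float:
    """Availability of a chain in which every one of `calls` services must succeed.

    Assumes independent failures and identical per-service availability.
    """
    return per_service ** calls

# Illustrative figure only: each service is assumed to be 99.9% available.
for calls in (1, 5, 10, 20):
    print(calls, round(serial_availability(0.999, calls), 4))
```

Under the same assumptions, the asynchronous design depends on one send to the message queue, so the user-facing availability stays close to that of a single call however many subscribers exist.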

The last is traffic decoupling, also known as peak-load shifting. With synchronous calls, upstream and downstream capacities must be aligned; otherwise, failures cascade along the link. Full capacity alignment requires significant effort for full-link stress testing, as well as additional machine costs. With RocketMQ, downstream businesses that do not need real-time processing can consume messages at their own pace, which keeps the system stable and reduces machine, R&D, and O&M costs.
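Peak-load shifting can be illustrated with a small discrete-time simulation: a burst arrives faster than the consumer can process, the queue absorbs the difference, and the backlog drains once the burst is over. The arrival numbers and the capacity of 4 messages per tick are made up for illustration.

```python
def simulate_backlog(arrivals, capacity_per_tick):
    """Return the queue backlog at the end of each tick."""
    backlog = 0
    history = []
    for arrived in arrivals:
        backlog += arrived                          # producer writes to the queue
        backlog -= min(capacity_per_tick, backlog)  # consumer drains at its own pace
        history.append(backlog)
    return history

# Hypothetical traffic: a short burst of 10 messages per tick for two ticks.
arrivals = [2, 10, 10, 2, 0, 0, 0, 0]
history = simulate_backlog(arrivals, capacity_per_tick=4)
print(history)
```

The consumer never processes more than 4 messages per tick, so it can be sized for average load rather than peak load, at the price of temporary delay while the backlog drains.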

Next, we will take Alibaba's e-commerce business as an example to understand the advantages of RocketMQ's application decoupling.

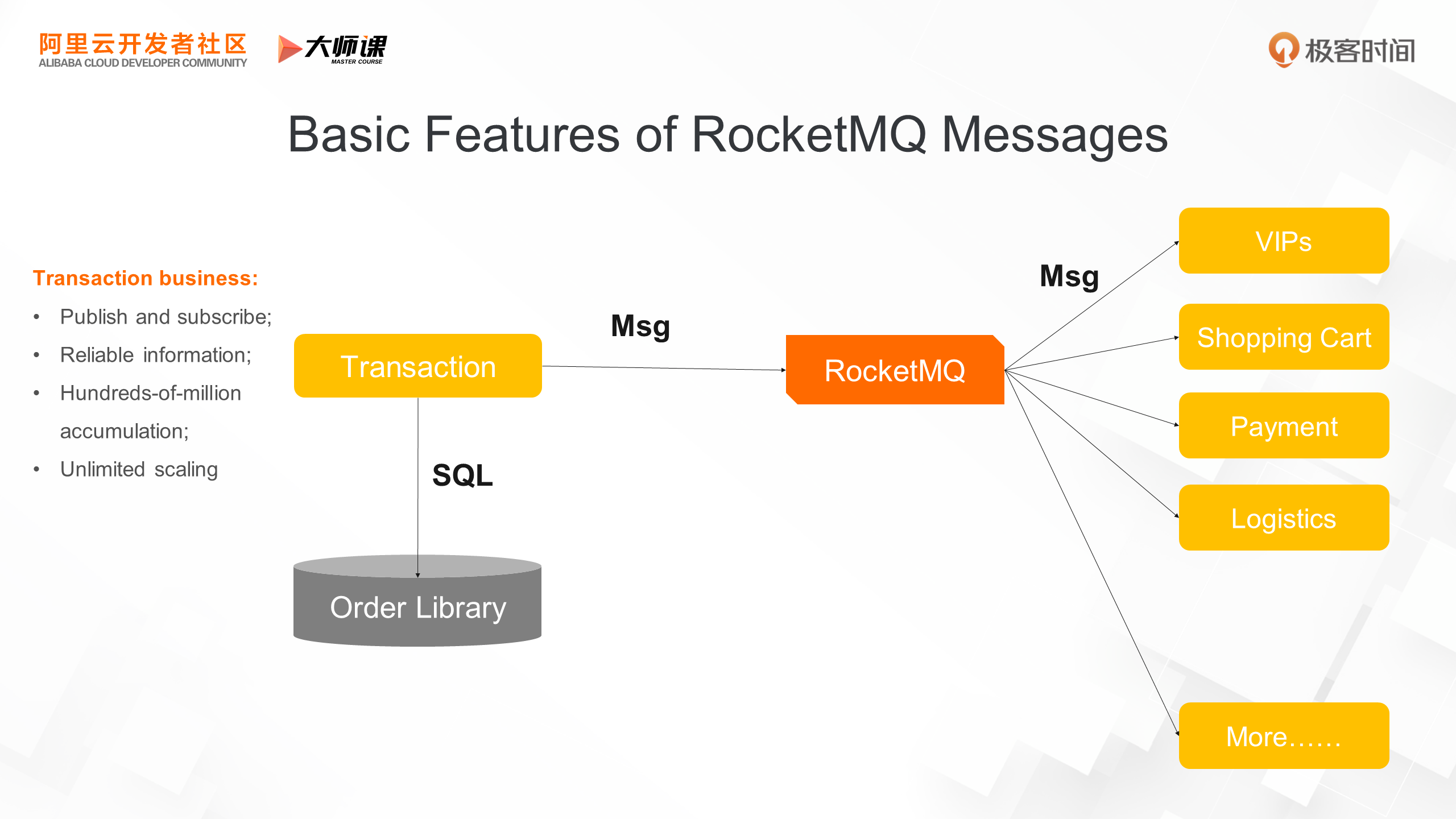

This is a simplified diagram of Alibaba's transaction architecture. On the left is the transaction application. When a user places an order on Taobao, the transaction application is called to create the order. It writes the order to the database, sends an order creation message to RocketMQ, and returns an order-created response to the end user. After the transaction completes, RocketMQ delivers the order creation message to the downstream applications. Upon receiving the message, the member application issues points and gold coins to the buyer, triggering the user incentive business. The shopping cart application removes the purchased goods from the shopping cart to prevent repeated purchases. Meanwhile, the payment system and the logistics system advance the payment link and the fulfillment link based on the change in order status.

The e-commerce architecture based on RocketMQ greatly improves the agility of Alibaba's e-commerce business. The upstream core transaction system does not need to know which applications subscribe to transaction messages, and the latency and availability of the transaction application stay at a high level. Its dependencies converge on a small number of core systems and RocketMQ, so it is not affected by the hundreds of downstream applications. The downstream businesses vary widely, and many of them do not require real-time consumption of transaction data, such as logistics scenarios that can tolerate some delay. RocketMQ's ability to accumulate hundreds of millions of messages greatly reduces machine costs. RocketMQ's shared-nothing architecture scales out horizontally. It has supported the rapid growth of Double 11 message peaks for 10 consecutive years, reaching hundreds of millions of TPS a few years ago.

Next, let's take a look at the differentiated advantages and enhancements of RocketMQ compared with other message queues in classic scenarios.

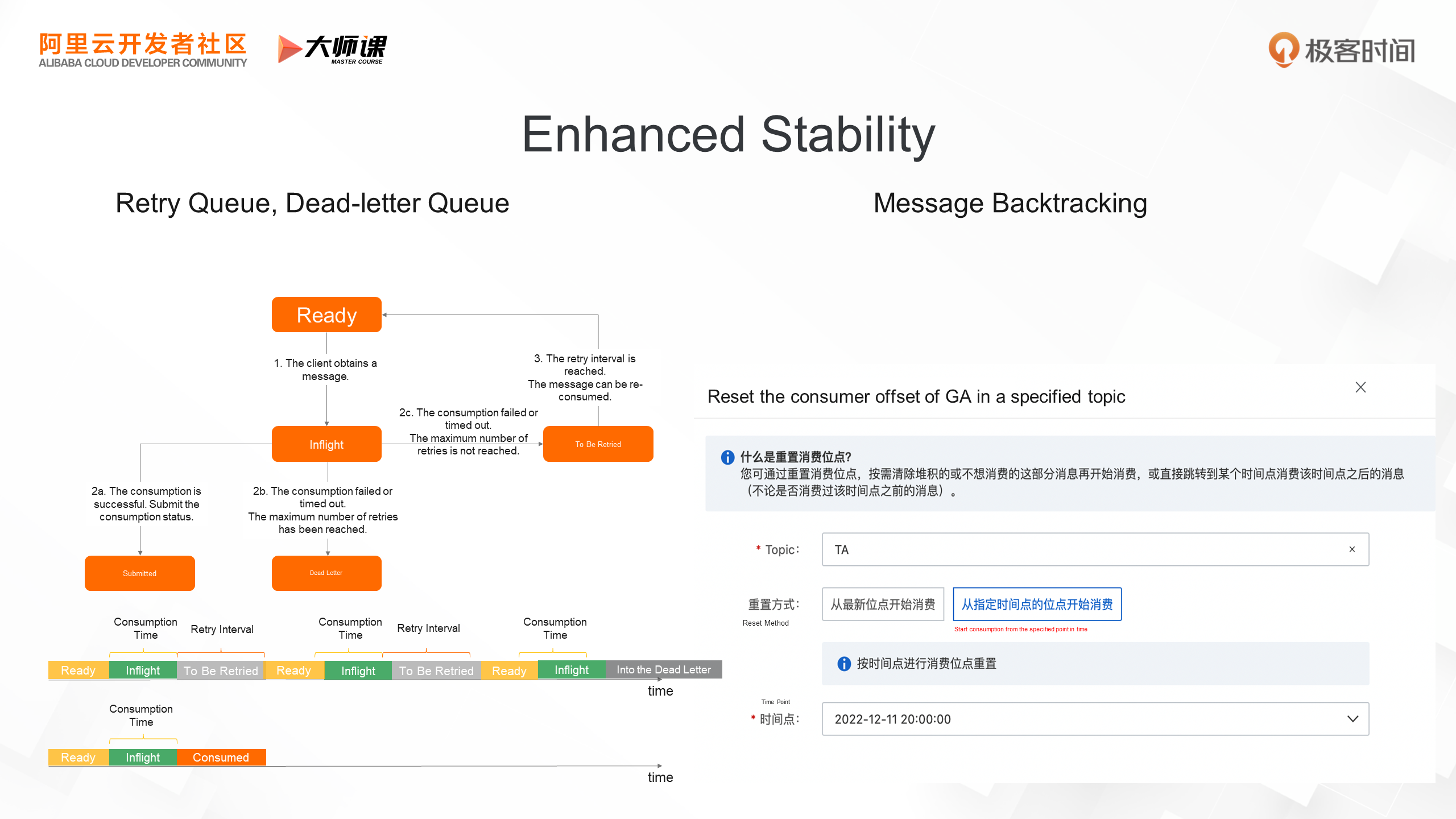

The first enhancement is stability, which is a critical requirement for trading and financial scenarios. RocketMQ's stability comes not only from its high-availability architecture but also from a full range of product capabilities. Take the retry queue as an example. If a downstream consumer fails to consume a message because the business data is not yet available or for other reasons, RocketMQ does not block consumption. Instead, it moves the message to the retry queue and retries it at increasing intervals. If the message still cannot be consumed after multiple retries, RocketMQ transfers it to a dead-letter queue so that users can handle these failed messages by other means. This is a must-have in the financial industry. Even if a message was consumed successfully but the business logic produced the wrong result because of a code bug, RocketMQ can replay messages by time, so the service can reprocess them with the correct logic after the bug is fixed.
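The retry-then-dead-letter flow described above can be sketched with a toy scheduler. Everything here is hypothetical: the names (`Message`, `run`), the limit of three attempts, and the doubling delay are illustrative assumptions, not RocketMQ's actual retry policy or API.

```python
import heapq
from dataclasses import dataclass
from itertools import count

@dataclass
class Message:
    body: str
    attempts: int = 0

def run(messages, handler, max_attempts=3, base_delay=1.0):
    """Deliver messages; failed ones are retried later instead of blocking others."""
    seq = count()  # tie-breaker so the heap never compares Message objects
    ready = [(0.0, next(seq), m) for m in messages]
    heapq.heapify(ready)
    consumed, dead_letters = [], []
    while ready:
        now, _, msg = heapq.heappop(ready)
        msg.attempts += 1
        if handler(msg):
            consumed.append(msg.body)
        elif msg.attempts >= max_attempts:
            dead_letters.append(msg.body)  # give up: park it for manual handling
        else:
            delay = base_delay * 2 ** (msg.attempts - 1)  # assumed backoff: 1, 2, 4, ...
            heapq.heappush(ready, (now + delay, next(seq), msg))
    return consumed, dead_letters

def handler(msg):
    if msg.body == "poison":
        return False              # always fails
    if msg.body == "flaky":
        return msg.attempts >= 2  # fails once, then succeeds
    return True

consumed, dead_letters = run(
    [Message("poison"), Message("ok"), Message("flaky")], handler
)
```

Note that `ok` is consumed before `poison` is retried: a failing message is rescheduled rather than holding up the messages behind it.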

RocketMQ's consumption mechanism uses an adaptive pull mode, which prevents consumers from being overwhelmed by heavy traffic in extreme scenarios. In addition, the consumer SDK enforces thresholds on the number of locally cached messages and on the memory they occupy, which guards consumer applications against memory risks.
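One way to picture the client-side protection is a local cache that stops pulling once either a message-count or a byte threshold is reached. This is a simplified sketch; `LocalMessageCache` and the threshold values are hypothetical and do not reflect the SDK's real class names or defaults.

```python
class LocalMessageCache:
    """Toy consumer-side cache bounded by message count and total bytes."""

    def __init__(self, max_count, max_bytes):
        self._max_count = max_count
        self._max_bytes = max_bytes
        self._messages = []
        self._bytes = 0

    def __len__(self):
        return len(self._messages)

    def has_room(self):
        return len(self._messages) < self._max_count and self._bytes < self._max_bytes

    def add(self, body: bytes):
        self._messages.append(body)
        self._bytes += len(body)

    def take(self) -> bytes:
        body = self._messages.pop(0)
        self._bytes -= len(body)
        return body

# Hypothetical backlog on the broker: 50 messages of 100 bytes each.
backlog = [b"x" * 100 for _ in range(50)]
cache = LocalMessageCache(max_count=10, max_bytes=600)

# Pull only while the cache is under both thresholds.
while backlog and cache.has_room():
    cache.add(backlog.pop(0))

cached_after_pull = len(cache)  # pulling stopped at the byte threshold
cache.take()                    # business logic finishes one message
room_after_take = cache.has_room()
```

Here the byte threshold is hit first, so pulling pauses with most of the backlog still on the broker and resumes once the application has processed a message.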

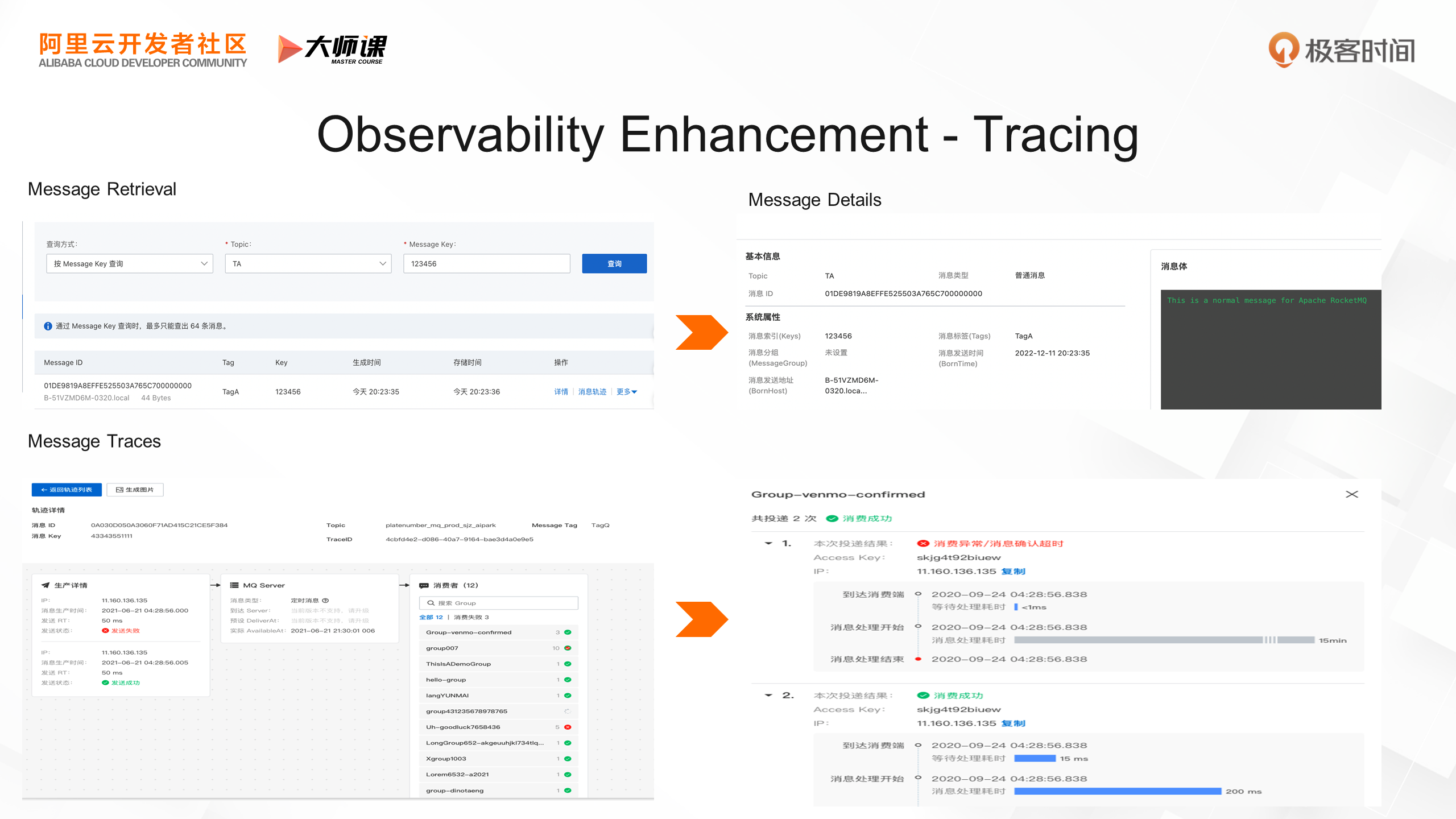

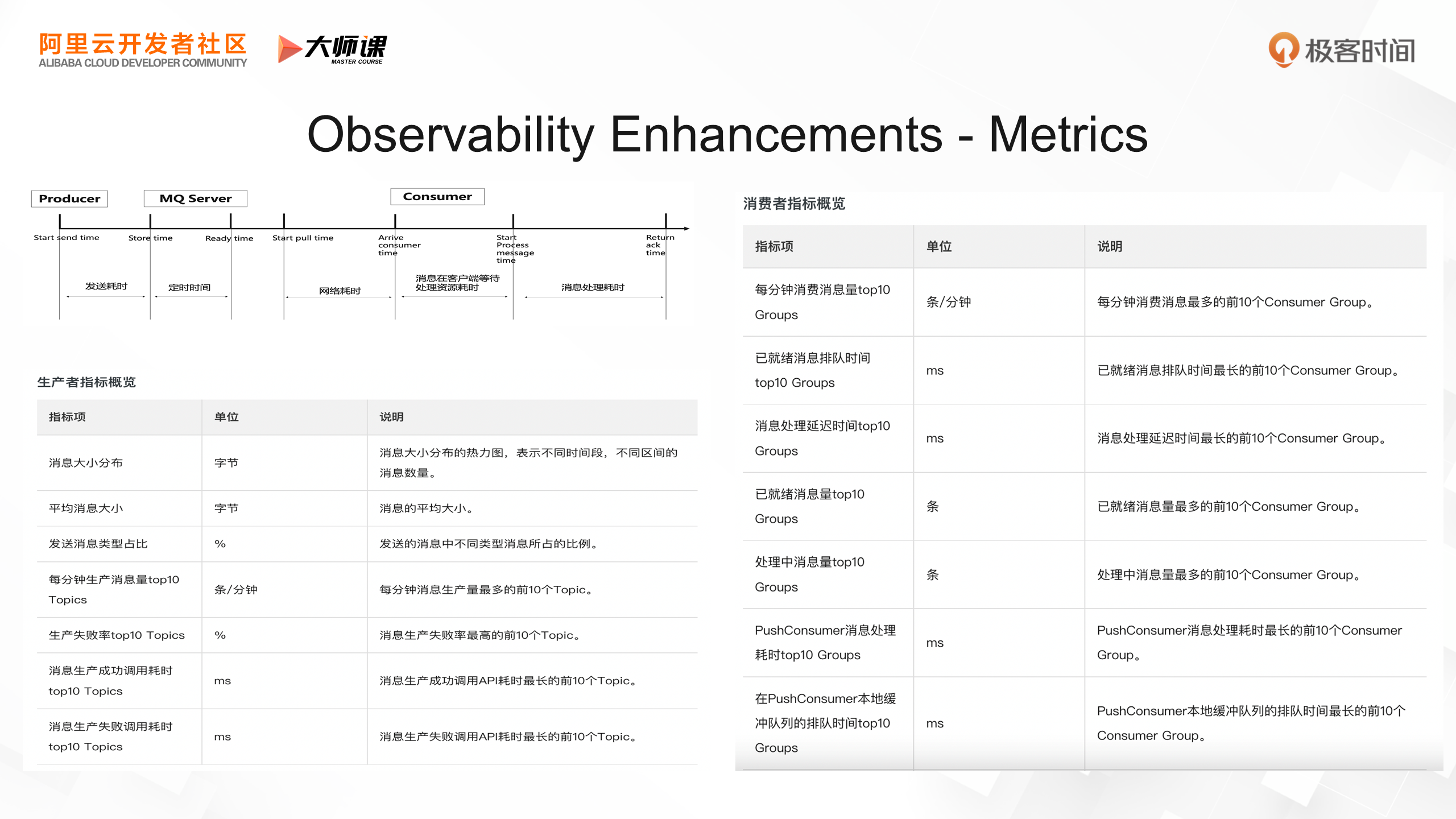

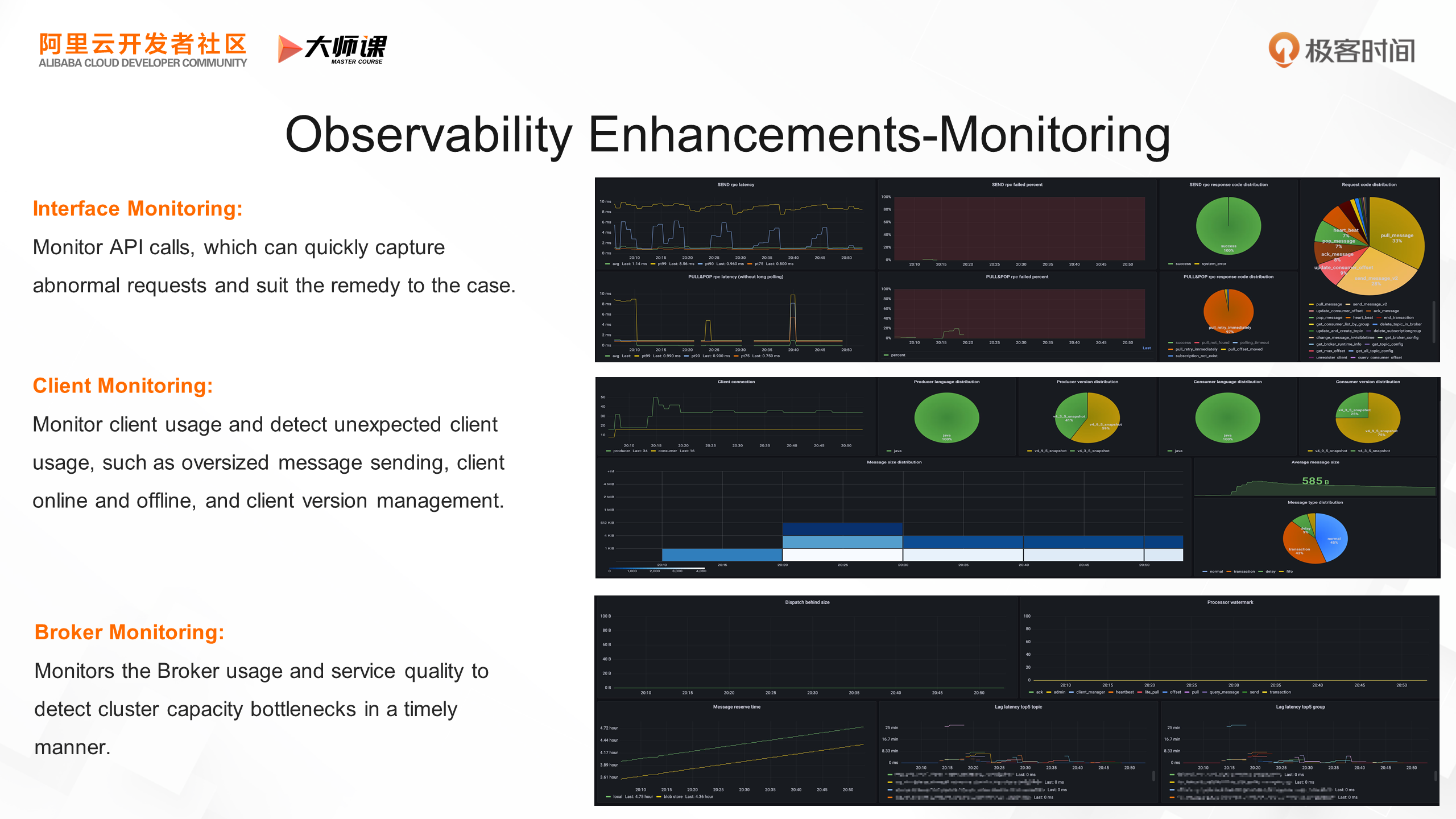

• Tracing: RocketMQ provides excellent observability, which is an important aid to stability. RocketMQ is the first message queue in the industry to provide message-level observability. Each message can carry a business primary key. For example, in a transaction scenario, you can use the order ID as the business primary key of the message. If you need to troubleshoot an order, you can query the message by order ID to see when it was generated and what it contains. You can also drill down into the observable data of the message: the message trace shows which producer sent the message, which consumer consumed it at what time, and whether consumption succeeded or failed.

• Metrics: RocketMQ also supports dozens of core metrics, including cluster producer traffic distribution, slow consumer ranking, average consumption latency, consumption accumulation, and consumption success rate.

• Monitoring: Based on these rich metrics, users can build a more complete monitoring and alarm system to further reinforce stability.

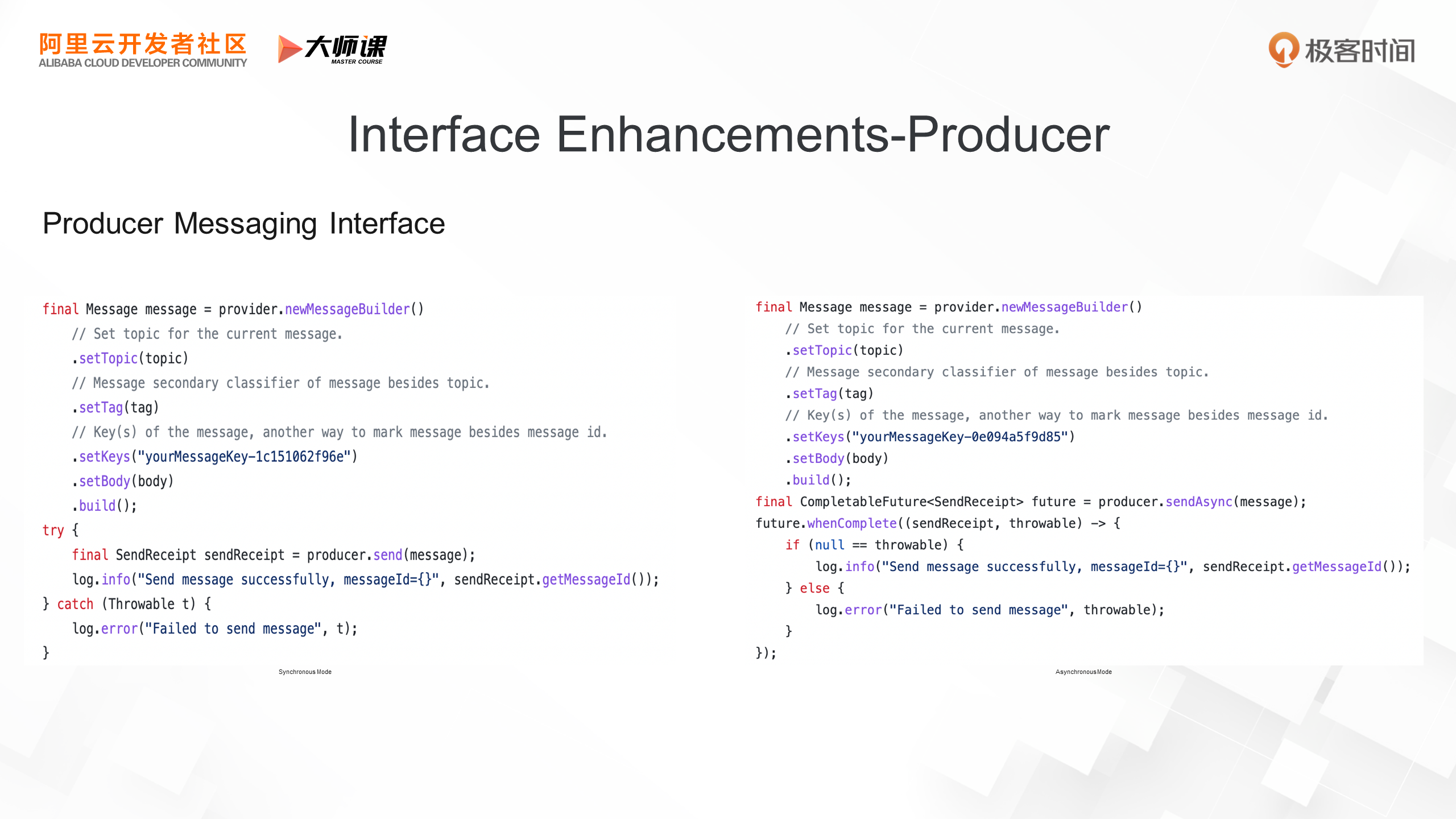

To support more flexible application architectures, RocketMQ provides multiple modes for key interfaces such as production and consumption. Let's start with the producer interface. RocketMQ offers both synchronous and asynchronous sending interfaces. Synchronous sending is the most commonly used mode, in which the workflow is orchestrated serially: the application sends a message, the Broker stores it and returns a success response, and only then does the application execute the next step. However, in some scenarios, completing a service involves multiple remote calls. To further reduce latency and improve performance, the application can adopt a fully asynchronous mode, issuing the remote calls concurrently (they can be a combination of multiple messages or RPCs) and collecting the future results asynchronously to drive the business logic forward.
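The difference between serial and fully asynchronous orchestration can be sketched with standard-library futures. `remote_call` is a hypothetical placeholder that simulates a message send or an RPC with a short sleep; it is not a RocketMQ API.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def remote_call(name, seconds=0.05):
    """Hypothetical remote call (message send or RPC), simulated with a sleep."""
    time.sleep(seconds)
    return f"{name}:ok"

calls = ["send_message", "rpc_inventory", "rpc_coupon"]

def run_serial():
    # Synchronous mode: each call waits for the previous one to return.
    return [remote_call(c) for c in calls]

def run_concurrent():
    # Asynchronous mode: issue all calls first, then collect the futures.
    with ThreadPoolExecutor(max_workers=len(calls)) as pool:
        futures = [pool.submit(remote_call, c) for c in calls]
        return [f.result() for f in futures]

serial_results = run_serial()
concurrent_results = run_concurrent()
```

Both versions return the same results; the serial version takes roughly the sum of the call latencies, while the concurrent version takes roughly the latency of the slowest call.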

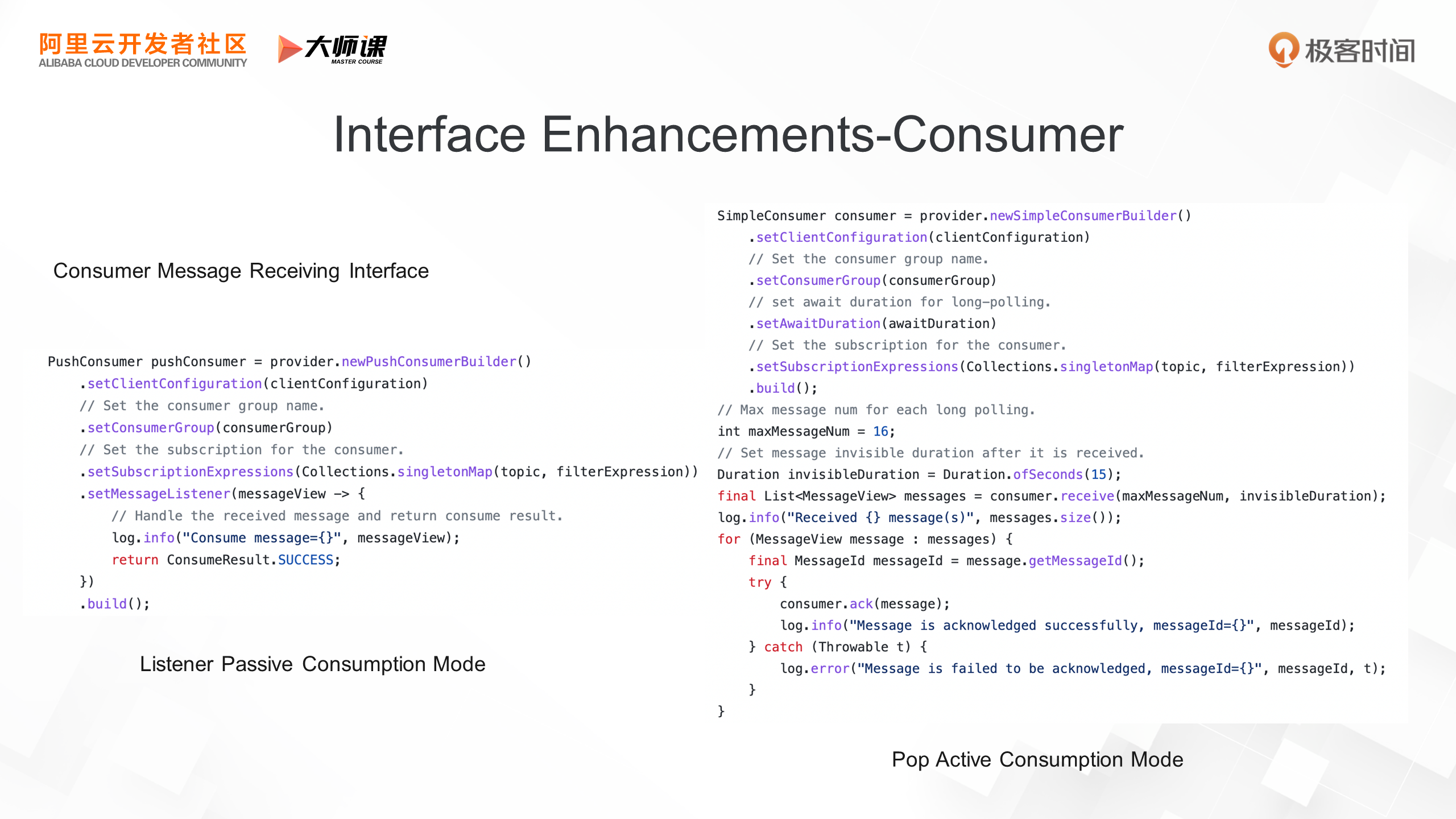

Two types of consumer interfaces are also provided. The first is passive consumption in listener mode, which is currently the most widely used. You do not need to worry about when the client pulls messages from the Broker, when the consumption acknowledgment is sent to the Broker, or how details such as the consumption thread pool and the local message cache are maintained. You only need to write the business logic of the message listener and return Success or Failure based on the result. This is a fully managed mode: users focus on the business logic, and the implementation details are left entirely to the RocketMQ client.
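The division of labor in listener mode can be sketched as follows: the client owns the thread pool and the acknowledge-or-retry decision, while the user supplies only a listener function. `ToyPushConsumer` and `on_message` are hypothetical names for illustration, not the RocketMQ client classes.

```python
from concurrent.futures import ThreadPoolExecutor
from enum import Enum

class ConsumeResult(Enum):
    SUCCESS = "success"
    FAILURE = "failure"

class ToyPushConsumer:
    """Toy managed consumer: owns the threads, calls the user's listener."""

    def __init__(self, listener, threads=4):
        self._listener = listener
        self._threads = threads

    def _invoke(self, message):
        try:
            return self._listener(message)
        except Exception:
            # An exception in business code is treated as a failed consumption.
            return ConsumeResult.FAILURE

    def dispatch(self, messages):
        with ThreadPoolExecutor(max_workers=self._threads) as pool:
            results = list(pool.map(self._invoke, messages))
        acked = [m for m, r in zip(messages, results) if r is ConsumeResult.SUCCESS]
        retry = [m for m, r in zip(messages, results) if r is not ConsumeResult.SUCCESS]
        return acked, retry

# The only code the user writes: business logic plus a result.
def on_message(message):
    if message["amount"] < 0:
        raise ValueError("negative amount")
    return ConsumeResult.SUCCESS

consumer = ToyPushConsumer(on_message)
acked, retry = consumer.dispatch([{"id": 1, "amount": 10}, {"id": 2, "amount": -5}])
```

In a real client the `retry` list would be handed back to the broker for redelivery; here it is simply returned so the outcome can be inspected.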

In addition to the listener mode, RocketMQ provides an active consumption mode called SimpleConsumer, which gives users more autonomy. In this mode, the user decides when to read messages from the Broker and when to acknowledge consumption. You can also control the threads that execute the business logic: after reading messages, you can hand the consumption logic to a custom thread pool. In some scenarios, the processing duration and priority of messages vary. With SimpleConsumer, users can dispatch messages a second time based on their properties and size, isolating them into different business thread pools. This mode also lets you set the consumption timeout at message granularity. For messages that take a long time to process, users can call the ChangeInvisibleDuration interface to extend the consumption time and avoid timeout retries.
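The receive, extend, and acknowledge cycle of the active mode can be sketched with a toy in-memory broker and a logical clock. `ToyBroker` and its method names are hypothetical stand-ins that mirror the concepts in the text (invisible duration, acknowledgment, ChangeInvisibleDuration); they are not the RocketMQ SimpleConsumer API, and the real client differs in details such as receipt handling.

```python
from dataclasses import dataclass
from itertools import count
from typing import Optional

@dataclass
class _Entry:
    body: str
    visible_at: float = 0.0
    receipt: Optional[int] = None

class ToyBroker:
    """Toy broker: a received message is hidden until its invisible duration ends."""

    def __init__(self, bodies):
        self._entries = [_Entry(b) for b in bodies]
        self._receipts = count(1)

    def _find(self, receipt):
        for entry in self._entries:
            if entry.receipt == receipt:
                return entry
        raise KeyError(receipt)

    def receive(self, now, invisible_duration):
        for entry in self._entries:
            if entry.visible_at <= now:
                entry.visible_at = now + invisible_duration
                entry.receipt = next(self._receipts)
                return entry.receipt, entry.body
        return None

    def change_invisible_duration(self, receipt, now, invisible_duration):
        self._find(receipt).visible_at = now + invisible_duration

    def ack(self, receipt):
        self._entries.remove(self._find(receipt))

broker = ToyBroker(["order-1", "order-2"])
r1, body1 = broker.receive(now=0, invisible_duration=10)
r2, body2 = broker.receive(now=0, invisible_duration=10)

# order-1 is slow: extend its timeout at t=8 so it stays hidden until t=38.
broker.change_invisible_duration(r1, now=8, invisible_duration=30)

# order-2 was never acknowledged, so it becomes visible again after t=10.
redelivered = broker.receive(now=12, invisible_duration=10)

broker.ack(r1)
broker.ack(redelivered[0])
remaining = broker.receive(now=100, invisible_duration=10)
```

At t=12 only `order-2` is redelivered: the extension kept `order-1` hidden, which is the timeout-retry avoidance the text describes.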

In this course, we first explored the classic scenario of RocketMQ, application decoupling, which covers code, latency, availability, and traffic decoupling. Then, we looked at the basic features of RocketMQ as a reliable, consistent, and high-performance publish-subscribe system.

Finally, we learned about its enhancements in business messaging. Beyond high availability of data and services, RocketMQ builds stability into its product features, such as retry queues, dead-letter queues, and message replay. It also provides rich observability to further reinforce stability. To accommodate diverse application architectures, it supports a range of interfaces, including synchronous and asynchronous sending, and managed and semi-managed consumption.

In the next course, we will continue to learn the advanced features of RocketMQ 5.0 in business messaging scenarios.
