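This topic describes common issues that you may encounter when using the backup center, together with their solutions.

Index

The issues in this topic are grouped into the following categories:

Obtaining error information

Console

General

Backup

Storage class conversion (an optional step during restore)

Restore

Other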

通用操作

若您通過kubectl命令列工具使用備份中心,在問題排查之前,請先將備份服務元件migrate-controller升級至最新版本,組件升級不會影響已有的備份。關於升級操作,請參見管理組件。

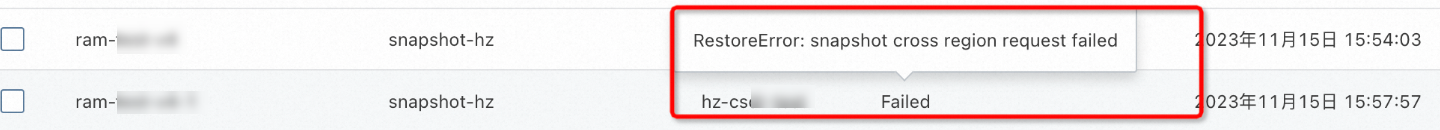

當您的備份任務、儲存類轉換、恢複任務的狀態為Failed或PartiallyFailed時,您可以通過以下方式擷取相關報錯提示資訊。

將滑鼠懸浮至任務狀態列的Failed或PartiallyFailed上,擷取錯誤的概況資訊,例如

RestoreError: snapshot cross region request failed。

如需擷取更詳細的錯誤資訊,您可以執行以下命令查詢任務對應資源的Event記錄,例如

RestoreError: process advancedvolumesnapshot failed avs: snapshot-hz, err: transition canceled with error: the ECS-snapshot related ram policy is missing。備份任務

kubectl -n csdr describe applicationbackup <backup-name>儲存類轉換任務

kubectl -n csdr describe converttosnapshot <backup-name>恢複任務

kubectl -n csdr describe applicationrestore <restore-name>
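The three describe commands above differ only in the resource kind, so they can be wrapped in one small helper. The following is a minimal sketch; the function name `describe_task` and the short task-type keywords (`backup`, `convert`, `restore`) are hypothetical conveniences, not part of the backup center.

```shell
# Hypothetical helper: map a task type to the csdr resource kind listed above
# and describe it, so that the Events section can be inspected.
describe_task() {
  task_type=$1
  task_name=$2
  case "$task_type" in
    backup)  kind=applicationbackup ;;
    convert) kind=converttosnapshot ;;
    restore) kind=applicationrestore ;;
    *)
      echo "unknown task type: $task_type" >&2
      return 1
      ;;
  esac
  kubectl -n csdr describe "$kind" "$task_name"
}
```

For example, `describe_task restore <restore-name>` runs the restore variant of the command.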

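The console displays "The component is running abnormally" or "Failed to pull current data"

Symptom

The console displays a message stating that the component is running abnormally or that the current data could not be pulled.

Cause

The backup center component was not installed correctly.

Solution

Check whether the cluster has any nodes. If it has none, the backup center cannot be deployed.

Check whether the cluster uses the Flexvolume storage plug-in. If it does, migrate the cluster from Flexvolume to CSI. For details, see migrate-controller cannot start in a Flexvolume cluster.

If you use the backup center through kubectl, check the related YAML configuration for errors. For details, see Back up and restore cluster applications by using kubectl.

Check whether the Deployments csdr-controller and csdr-velero in the csdr namespace fail to deploy because of resource or scheduling limits, then identify and fix the problem.

The console displays "A resource with the same name already exists. Change the name and try again"

Symptom

When you create or delete a backup, storage class conversion, or restore task, the console reports that a resource with the same name already exists and asks you to change the name and try again.

Cause

When you delete a task in the console, a deleterequest resource is created in the cluster, and the component then performs a series of delete operations rather than deleting only the corresponding backup resource. The command-line workflow behaves similarly; see Back up and restore by using the command-line tool.

If the delete operation is performed incorrectly, or an error occurs while the deleterequest resource is processed, some resources in the cluster cannot be deleted and the same-name message appears.

Solution

Delete the resource named in the message. For example, if the message is:

    deleterequests.csdr.alibabacloud.com "xxxxx-dbr" already exists

delete it with the following command:

    kubectl -n csdr delete deleterequests xxxxx-dbr

Then create the task again with a new name.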
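The cleanup above can be sketched as a helper that extracts the resource kind and name from the message and deletes that resource. This is only an illustration: the function name is hypothetical, and it assumes that other same-name messages follow the same `<kind>.<group> "<name>" already exists` pattern as the example.

```shell
# Hypothetical helper: parse '<kind>.<group> "<name>" already exists'
# and delete the named resource from the csdr namespace.
delete_conflicting_resource() {
  msg=$1
  pattern='^\([a-z]*\)\.[^ ]* "\([^"]*\)" already exists.*$'
  kind=$(printf '%s\n' "$msg" | sed -n "s/$pattern/\1/p")
  name=$(printf '%s\n' "$msg" | sed -n "s/$pattern/\2/p")
  if [ -z "$kind" ] || [ -z "$name" ]; then
    echo "unrecognized message: $msg" >&2
    return 1
  fi
  kubectl -n csdr delete "$kind" "$name"
}
```

Review the parsed kind and name before relying on this in a real cluster, because deleting the wrong resource cannot be undone.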

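Cannot select an existing backup when restoring an application across clusters

Symptom

When you restore an application across clusters, you cannot select a backup task to restore from.

Cause

Cause 1: The backup vault is not associated with the current cluster, that is, the vault has not been initialized.

Initializing the vault delivers the basic information of the backup vault (the OSS Bucket) to the current cluster and then initializes, in the cluster, the backups in the vault that completed successfully. Only after initialization finishes can you select backups from that vault for restore in the current cluster.

Cause 2: Vault initialization failed, that is, the backuplocation resource in the current cluster is in the Unavailable state.

Cause 3: The backup task has not completed or has failed.

Solution

Solution 1:

On the Create Restore Task page, click Initialize Vault to the right of the backup vault. After initialization completes, select the task to restore.

Solution 2:

Run the following command to check the state of the backuplocation resource.

    kubectl get -n csdr backuplocation <backuplocation-name>

Expected output:

    NAME                    PHASE       LAST VALIDATED   AGE
    <backuplocation-name>   Available   3m36s            38m

If the state is Unavailable, follow the solution in Task status is Failed and the message contains "VaultError: xxx".

Solution 3:

In the console of the backup cluster, confirm that the backup task succeeded, that is, its status is Completed. If the backup status is abnormal, troubleshoot the cause. For details, see Index.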
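The check in Solution 2 can be scripted by reading the PHASE column from the table output shown there. The following is a minimal sketch; the function name is hypothetical, and it assumes the column layout matches the expected output above.

```shell
# Hypothetical helper: print the PHASE column (second column) of the
# backuplocation table, skipping the header row.
backuplocation_phase() {
  kubectl get -n csdr backuplocation "$1" | awk 'NR > 1 { print $2 }'
}
```

A result other than `Available` indicates that the vault still needs attention before backups in it can be selected.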

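The console reports that the service role required by the component has not been authorized

Symptom

When you open the application backup console, it reports that the service role required by the current component has not been authorized. The error code is AddonRoleNotAuthorized.

Cause

In v1.8.0, the backup center component migrate-controller optimized the cloud resource authentication logic for ACK managed clusters. When a cluster under an Alibaba Cloud account installs or upgrades to this version for the first time, the Alibaba Cloud account must complete the cloud resource authorization.

Solution

If you are logged on with the Alibaba Cloud account, click Authorize to complete the authorization.

If you are logged on as a RAM user (sub-account), click Copy Authorization Link and send the link to the Alibaba Cloud account owner to complete the authorization.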

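The console reports that the current account lacks the cluster RBAC permissions required for the operation

Symptom

When you open the application backup console, it reports that the current account has not been granted the cluster RBAC permissions required for this operation and asks you to contact the primary account or a permission administrator. The error code is APISERVER.403.

Cause

The console interacts with the apiserver to submit backup and restore tasks and to fetch task status in real time. The default permission lists for cluster O&M personnel and developers lack some permissions that the backup center component requires, so the primary account or a permission administrator must grant them.

Solution

Refer to Use custom RBAC to restrict operations on cluster resources, and grant backup center operators the following ClusterRole permissions:

    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: csdr-console
    rules:
      - apiGroups: ["csdr.alibabacloud.com","velero.io"]
        resources: ['*']
        verbs: ["get","create","delete","update","patch","watch","list","deletecollection"]
      - apiGroups: [""]
        resources: ["namespaces"]
        verbs: ["get","list"]
      - apiGroups: ["storage.k8s.io"]
        resources: ["storageclasses"]
        verbs: ["get","list"]

Backup center component upgrade or uninstall fails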

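Symptom

Upgrading or uninstalling the backup center component fails, and the csdr namespace stays in the Terminating state.

Cause

The component exited abnormally while running and left tasks stuck in the InProgress state in the csdr namespace. The finalizers field of the cluster resources that correspond to these tasks can prevent the resources from being deleted, which keeps the csdr namespace in the Terminating state.

Solution

Run the following command to find out why the csdr namespace is Terminating:

    kubectl describe ns csdr

After confirming that the stuck tasks are no longer needed, delete their finalizers.

After confirming that the csdr namespace has been deleted:

For the upgrade scenario, reinstall the backup center component migrate-controller.

For the uninstall scenario, the component should now be fully uninstalled.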
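The text above does not say how to delete the finalizers. One common Kubernetes approach is a merge patch that sets `metadata.finalizers` to null, sketched below. The function name is hypothetical, and the resource kind and name are whatever `kubectl describe ns csdr` reports as blocking deletion. Only use this on tasks you have confirmed are no longer needed.

```shell
# Hypothetical helper: clear the finalizers of one stuck resource in the
# csdr namespace by using a standard Kubernetes merge patch.
clear_finalizers() {
  kind=$1
  name=$2
  kubectl -n csdr patch "$kind" "$name" --type=merge \
    -p '{"metadata":{"finalizers":null}}'
}
```

For example, `clear_finalizers applicationbackup <backup-name>` removes the finalizers of a stuck backup task.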

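Task status is Failed and the message contains "internal error"

Symptom

The task status is Failed and the message contains "internal error".

Cause

The component or an underlying cloud service hit an unexpected error, for example, the cloud service is not available in the current region.

Solution

If the message is "HBR backup/restore internal error", first check in the Cloud Backup console whether container backup is available in the region.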

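Task status is Failed and the message contains "create cluster resources timeout"

Symptom

The task status is Failed and the message contains "create cluster resources timeout".

Cause

During a storage class conversion or restore task, temporary Pods, persistent volume claims (PVCs), persistent volumes (PVs), and other resources may be created. If these resources stay unavailable for a long time after creation, the "create cluster resources timeout" error is reported.

Solution

Run the following command and use the Events to locate the abnormal resource and the reason for the failure.

    kubectl -n csdr describe <applicationbackup/converttosnapshot/applicationrestore> <task-name>

Expected output:

    ……wait for created tmp pvc default/demo-pvc-for-convert202311151045 for convertion bound time out

This output shows that the PVC used for storage class conversion did not reach the Bound state for a long time. The PVC is in the default namespace and is named demo-pvc-for-convert202311151045.

Run the following command to query the state of the PVC and the reason for the failure.

    kubectl -ndefault describe pvc demo-pvc-for-convert202311151045

The following are common causes in backup center scenarios. For more information, see Troubleshoot storage issues.

Cluster or node resources are insufficient or in an abnormal state.

The restore cluster does not have the corresponding storage class. Use the storage class conversion feature to select a storage class that exists in the restore cluster, then restore.

The underlying storage associated with the storage class is unavailable, for example, the specified disk type is not supported in the current zone.

The CNFS associated with alibabacloud-cnfs-nas is abnormal. See Manage NAS file systems through CNFS (recommended).

When restoring to a multi-zone cluster, a storage class whose volumeBindingMode is Immediate was selected.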

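Task status is Failed and the message contains "addon status is abnormal"

Symptom

The task status is Failed and the message contains "addon status is abnormal".

Cause

A component in the csdr namespace is running abnormally.

Solution

See Cause 1 and solution: a component in the csdr namespace is running abnormally.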

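Task status is Failed and the message contains "VaultError: xxx"

Symptom

A backup, restore, or storage class conversion task is in the Failed state, and the message is VaultError: backup vault is unavailable: xxx.

Cause

The OSS Bucket does not exist.

The cluster's OSS permissions are not configured.

The OSS Bucket cannot be reached over the network.

Solution

Log on to the OSS console and confirm that the OSS Bucket bound to the backup vault exists.

If the OSS Bucket is missing, create a Bucket and bind it again. For details, see Create a bucket in the console.

Confirm that the cluster's OSS permissions are configured.

ACK Pro clusters: no OSS permissions need to be configured. Confirm that the name of the OSS Bucket associated with the backup vault starts with cnfs-oss-.

ACK dedicated clusters and registered clusters: OSS permissions must be configured. For details, see Install the backup service component and configure permissions.

ACK managed clusters whose component was installed or upgraded to v1.8.0 or later by a method other than the console may lack the OSS permissions. Run the following command to check.

    kubectl get secret -n kube-system | grep addon.aliyuncsmanagedbackuprestorerole.token

Expected output:

    addon.aliyuncsmanagedbackuprestorerole.token          Opaque                      1      62d

If this output appears, the cluster only needs to use a Bucket whose name starts with cnfs-oss- and requires no OSS permission configuration.

If this output does not appear, complete the authorization in one of the following ways.

Configure OSS permissions in the same way as for ACK dedicated clusters and registered clusters. For details, see Install the backup service component and configure permissions.

With the Alibaba Cloud account, click Authorize to complete the authorization. This needs to be done only once per Alibaba Cloud account.

Note: A backup vault cannot be re-created with the same name, and cannot be bound to an OSS Bucket whose name does not start with cnfs-oss-. If you have already bound such a Bucket, create a backup vault with a different name and bind a Bucket that meets the naming requirement.

Run the following command to check the cluster's network setting.

    kubectl get backuplocation <backuplocation-name> -n csdr -o yaml | grep network

The output is similar to the following:

    network: internal

When network is internal, the backup vault accesses the OSS Bucket over the internal network.

When network is public, the backup vault accesses the OSS Bucket over the public network. In this case, if the specific error is an access timeout, check whether the cluster can access the public network. For how to handle this, see Enable public network access for a cluster.

In the following three situations, the backup vault must access the OSS Bucket over the public network.

The cluster and the OSS Bucket are not in the same region.

The current cluster is an ACK Edge cluster.

The current cluster is a registered cluster that is not connected to a cloud VPC through CEN, Express Connect, VPN, or similar, or it is connected to a cloud VPC but has no route to the internal OSS CIDR block of the region. In the latter case you need to configure a route to the internal OSS CIDR block of the region.

For how to connect an on-premises IDC to a cloud VPC, see Overview of access methods.

For the mapping between OSS internal domain names and VIP CIDR blocks, see Access OSS through a Bucket domain name.

If the OSS Bucket must be accessed over the public network, run the following commands to switch the access mode to public. In the commands, <backuplocation-name> is the name of the backup vault and <region-id> is the region of the OSS Bucket, for example cn-hangzhou.

    kubectl patch -n csdr backuplocation/<backuplocation-name> --type='json' -p '[{"op":"add","path":"/spec/config","value":{"network":"public","region":"<region-id>"}}]'
    kubectl patch -n csdr backupstoragelocation/<backuplocation-name> --type='json' -p '[{"op":"add","path":"/spec/config","value":{"network":"public","region":"<region-id>"}}]'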
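Because the same JSON patch is applied to both the backuplocation and the backupstoragelocation resource, the two commands above can be wrapped in one helper so that the vault name and region are typed only once. This is a minimal sketch; the function name is hypothetical.

```shell
# Hypothetical helper: switch a backup vault to public-network access by
# applying the JSON patch shown above to both resources.
set_public_network() {
  location=$1
  region=$2
  patch=$(printf '[{"op":"add","path":"/spec/config","value":{"network":"public","region":"%s"}}]' "$region")
  for kind in backuplocation backupstoragelocation; do
    kubectl patch -n csdr "$kind/$location" --type=json -p "$patch" || return 1
  done
}
```

For example, `set_public_network <backuplocation-name> cn-hangzhou` patches both resources for a Bucket in cn-hangzhou.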

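Task status is Failed and the message contains "HBRError: check HBR vault error"

Symptom

A backup, restore, or storage class conversion task is in the Failed state, and the message contains "HBRError: check HBR vault error".

Cause

The Cloud Backup service is not activated, or its authorization is abnormal.

Solution

Confirm that Cloud Backup is activated. See Activate Cloud Backup.

If your cluster is in a region such as China (Ulanqab), China (Heyuan), or China (Guangzhou), you must additionally authorize API Gateway after activating Cloud Backup. See (Optional) Step 3: Grant Cloud Backup permission to use API Gateway.

If your cluster is an ACK dedicated cluster or a registered cluster, confirm that the required Cloud Backup RAM permissions have been granted. For the permission content and how to grant it, see Install the migrate-controller backup service component and configure permissions.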

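Task status is Failed and the message contains "HBRError: ... code: 400, Illegal request. Please modify the parameters"

Symptom

A backup, restore, or storage class conversion task is in the Failed state, and the message contains "HBRError: ... code: 400, Illegal request. Please modify the parameters".

Cause

The Cloud Backup vault ack-backup-data in the region of the failing cluster has been deleted.

The first time the backup center is used for a backup in a region, the component automatically creates a vault with the fixed name ack-backup-data in that region to hold backups created through the backup center. Backups are cleaned up automatically when the validity period set at creation expires.

Solution

After the Cloud Backup vault is deleted, backups created earlier can no longer be used for restore. The following steps only create a new Cloud Backup vault for subsequent backup and restore tasks.

In every cluster in the current region that uses the backup center, run the following commands to clear the records of initialized backup vaults.

    kubectl -ncsdr delete backuplocation --all
    kubectl -ncsdr delete backupstoragelocation --all

Return to the cluster that needs to be backed up and create a backup as usual. The component automatically creates the ack-backup-data Cloud Backup vault and associates it with the backup vault.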

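Task status is Failed and the message contains "hbr task finished with unexpected status: FAILED, errMsg ClientNotExist"

Symptom

A backup, restore, or storage class conversion task is in the Failed state, and the message contains "hbr task finished with unexpected status: FAILED, errMsg ClientNotExist".

Cause

The Cloud Backup client is not deployed correctly on the node, that is, the replica of the DaemonSet hbr-client in the csdr namespace is running abnormally on that node.

Solution

Run the following command to check whether any hbr-client Pod in the cluster is running abnormally.

    kubectl -n csdr get pod -lapp=hbr-client

If a Pod is in an abnormal state, first check whether it is caused by insufficient Pod IPs, memory, CPU, or other resources. If the Pod is in the CrashLoopBackOff state, run the following command to view its logs.

    kubectl -n csdr logs -p <hbr-client-pod-name>

If the output contains "SDKError:\n StatusCode: 403\n Code: MagpieBridgeSlrNotExist\n Message: code: 403, AliyunServiceRoleForHbrMagpieBridge doesn't exist, please create this role. ", authorize the Cloud Backup service as described in (Optional) Step 3: Grant Cloud Backup permission to use API Gateway.

If the log contains another type of SDK error, troubleshoot with the EC error code (Error Code) in the response. See Troubleshoot by using EC error codes.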

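Task stays in the InProgress state for a long time

Cause 1 and solution: a component in the csdr namespace is running abnormally

Check the running state of the components and find out why they are abnormal.

Run the following command to check whether any component in the csdr namespace is restarting or failing to start.

    kubectl get pod -n csdr

Run the following command to find out why the component is restarting or failing to start.

    kubectl describe pod <pod-name> -n csdr

If the cause is an OOM restart

If the OOM occurs during restore, the Pod is csdr-velero-***, and the restore cluster already runs a large number of applications (for example, dozens of production namespaces), the likely reason is that Velero uses the Informer Cache by default to speed up restore, and the cache consumes memory.

If the number of cluster resources to restore is small, or you can accept some performance impact during restore, disable the Informer Cache with the following command.

    kubectl -nkube-system edit deploy migrate-controller

In the args of the migrate-controller container, add the parameter --disable-informer-cache=true:

    name: migrate-controller
    args:
    - --disable-informer-cache=true

In other cases, or if you do not want to slow down resource restore, run the following command to raise the memory limit of the corresponding Deployment. For csdr-controller-***, <deploy-name> is csdr-controller; for csdr-velero-***, <deploy-name> is csdr-velero.

    kubectl patch deploy <deploy-name> -n csdr -p '{"spec":{"template":{"spec":{"containers":[{"name":"<container-name>","resources":{"limits":{"memory":"<new-limit-memory>"}}}]}}}}'

If the cause is that the component cannot start because HBR permissions are not configured

Confirm that Cloud Backup is activated for the cluster.

Not activated: activate Cloud Backup. For details, see Cloud Backup.

Activated: go to the next step.

Confirm that Cloud Backup permissions are configured for ACK dedicated clusters and registered clusters.

Not configured: configure Cloud Backup permissions. For details, see Install the backup service component and configure permissions.

Configured: go to the next step.

Run the following command to check whether the Token required by the Cloud Backup client component exists.

    kubectl describe pod <hbr-client-***> -n csdr

If the Events report couldn't find key HBR_TOKEN, the Token is missing. Handle it with the following steps.

Run the following command to find the node that hosts hbr-client-***.

    kubectl get pod <hbr-client-***> -n csdr -owide

Run the following command to change the node label csdr.alibabacloud.com/agent-enable from true to false.

    kubectl label node <node-name> csdr.alibabacloud.com/agent-enable=false --overwrite

Important: When you run a backup or restore again, the Token is created automatically and hbr-client is started.

An hbr-client started from a Token copied from another cluster does not work. Delete the copied Token and the hbr-client-*** Pod started from it, then perform the preceding steps.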

Cause 2 and solution: cluster snapshot permissions are not configured for disk data backup

If a task stays in the InProgress state for a long time when you back up data of an application that mounts disk volumes, run the following command to query the VolumeSnapshot resources newly created in the cluster.

    kubectl get volumesnapshot -n <backup-namespace>

Partial expected output:

    NAME                    READYTOUSE   SOURCEPVC   SOURCESNAPSHOTCONTENT          ...
    <volumesnapshot-name>   true                     <volumesnapshotcontent-name>   ...

If READYTOUSE stays false for all volumesnapshots for a long time, follow these steps.

Log on to the ECS console and check whether the disk snapshot service is enabled.

Not enabled: enable disk snapshots in the corresponding region. For details, see Activate snapshots.

Enabled: go to the next step.

Confirm that the cluster's CSI component is running normally.

    kubectl -nkube-system get pod -l app=csi-provisioner

Check whether permissions to use disk snapshots are configured.

Managed clusters

Log on to the RAM console as a RAM administrator.

In the left navigation pane, open the Roles page.

On the Roles page, search for AliyunCSManagedBackupRestoreRole and check whether the role's policy contains the following content.

    {
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "hbr:CreateVault", "hbr:CreateBackupJob", "hbr:DescribeVaults",
            "hbr:DescribeBackupJobs2", "hbr:DescribeRestoreJobs",
            "hbr:SearchHistoricalSnapshots", "hbr:CreateRestoreJob",
            "hbr:AddContainerCluster", "hbr:DescribeContainerCluster",
            "hbr:CancelBackupJob", "hbr:CancelRestoreJob", "hbr:DescribeRestoreJobs2"
          ],
          "Resource": "*"
        },
        {
          "Effect": "Allow",
          "Action": [
            "ecs:CreateSnapshot", "ecs:DeleteSnapshot", "ecs:DescribeSnapshotGroups",
            "ecs:CreateAutoSnapshotPolicy", "ecs:ApplyAutoSnapshotPolicy",
            "ecs:CancelAutoSnapshotPolicy", "ecs:DeleteAutoSnapshotPolicy",
            "ecs:DescribeAutoSnapshotPolicyEX", "ecs:ModifyAutoSnapshotPolicyEx",
            "ecs:DescribeSnapshots", "ecs:DescribeInstances", "ecs:CopySnapshot",
            "ecs:CreateSnapshotGroup", "ecs:DeleteSnapshotGroup"
          ],
          "Resource": "*"
        },
        {
          "Effect": "Allow",
          "Action": [
            "oss:PutObject", "oss:GetObject", "oss:DeleteObject", "oss:GetBucket",
            "oss:ListObjects", "oss:ListBuckets", "oss:GetBucketStat"
          ],
          "Resource": "acs:oss:*:*:cnfs-oss*"
        }
      ],
      "Version": "1"
    }

If the AliyunCSManagedBackupRestoreRole role is missing, go to the RAM quick authorization page to authorize it.

If the AliyunCSManagedBackupRestoreRole role exists but its policy is incomplete, grant the role the permissions above. For details, see Create a custom policy and Manage the permissions of a RAM role.

Dedicated clusters

Log on to the Container Service console and click Clusters in the left navigation pane.

On the Clusters page, click the name of the target cluster, then choose Cluster Information in the left navigation pane.

On the Cluster Information page, find the Master RAM Role parameter and click the link on the right.

On the Permissions tab, check whether the disk snapshot permissions are in place.

If the k8sMasterRolePolicy-Csi-*** policy does not exist or does not include the permissions listed in the policy document under Managed clusters, grant those permissions to the Master RAM role as a disk snapshot policy. For details, see Create a custom policy and Manage the permissions of a RAM role.

Registered clusters

Disk snapshots can be used only in registered clusters whose nodes are all Alibaba Cloud ECS instances. Check whether the required authorization was completed when the CSI storage plug-in was installed. For details, see Configure RAM permissions for the CSI component.

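Cause 3 and solution: volumes of a type other than disk are used

Starting from v1.7.7, the backup center component migrate-controller supports cross-region restore of disk data backups. Cross-region restore of other data types is not yet supported. If you use a storage product that supports public network access, such as Alibaba Cloud OSS, you can statically create the PVC and PV first and then restore the application. For details, see Use an ossfs 1.0 statically provisioned volume.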

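Backup status is Failed and the message contains "backup already exists in OSS bucket"

Symptom

The backup status is Failed and the message contains "backup already exists in OSS bucket".

Cause

A backup with the same name that was created earlier actually exists in the OSS Bucket associated with the backup vault.

An existing backup can be invisible in the current cluster for the following reasons:

Backups that are in progress or have failed are not synchronized to other clusters.

Deleting a backup from a cluster other than the backup cluster only marks it and does not delete it from the OSS Bucket. Marked backups are no longer synchronized to newly associated clusters.

The current cluster is not associated with the backup vault used by the backup, that is, the vault is not initialized.

Solution

Create the backup again with a new name.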

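Backup status is Failed and the message contains "get target namespace failed"

Symptom

The backup status is Failed and the message contains "get target namespace failed".

Cause

This usually occurs in backup tasks created by a schedule. The cause depends on how namespaces are selected.

With the include mode, all selected namespaces have been deleted.

With the exclude mode, the cluster has no namespaces other than the selected ones.

Solution

Backup plans can be edited. Adjust the namespace selection mode and the corresponding namespaces.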

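Backup status is Failed and the message contains "velero backup process timeout"

Symptom

The backup status is Failed and the message contains "velero backup process timeout".

Cause

Cause 1: A subtask of the application backup timed out. The time a subtask needs depends on factors such as the number of cluster resources and the latency of the cluster API server. Starting from v1.7.7 of the migrate-controller component, the default timeout is 60 minutes.

Cause 2: The storage class of the Bucket used by the backup vault is Archive, Cold Archive, or Deep Cold Archive. To keep the backup process consistent, the component must update the metadata files on the OSS server during the process, and files that have not been restored (unfrozen) do not support this operation.

Solution

Solution 1: Change the global timeout setting for backup subtasks in the backup cluster.

Run the following command and add the velero_timeout_minutes item, in minutes, under applicationBackup.

    kubectl edit -n csdr cm csdr-config

For example, to set the timeout to 100 minutes, make the following change:

    apiVersion: v1
    data:
      applicationBackup: |
        ... # omitted
        velero_timeout_minutes: 100

After the change, run the following command to restart csdr-controller so that the setting takes effect.

    kubectl -n csdr delete pod -l control-plane=csdr-controller

Solution 2: Change the storage class of the Bucket used by the backup vault to Standard.

If you want to keep backup data in Archive storage, configure lifecycle rules to convert the storage class automatically and restore (unfreeze) the data before a restore task. For more information, see Convert storage classes.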

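Backup status is Failed and the message contains "HBR backup request failed"

Symptom

The backup task status is Failed and the message contains "HBR backup request failed".

Cause

Cause 1: The storage plug-in used by the cluster is not yet compatible.

Cause 2: Cloud Backup does not yet support backing up volumes whose volumeMode is Block. For more information, see the description of volumeMode.

Cause 3: The Cloud Backup client is abnormal, which causes backup or restore tasks for file-system data (for example OSS, NAS, CPFS, or local volume data) to time out or fail.

Solution

Solution 1: If your cluster uses a non-Alibaba Cloud CSI storage plug-in, or your volumes are not a Kubernetes general-purpose type such as NFS or LocalVolume, and you run into compatibility problems, submit a ticket.

Solution 2: Usually only disk storage needs Block mode volumes. When the cluster storage plug-in is CSI, data is backed up with disk snapshots by default, and disk snapshots support Block mode volumes. If the problem is caused by a mismatched storage plug-in type, switch the storage plug-in to CSI, reinstall the backup component, and back up again.

Solution 3: Follow these steps:

In the left navigation pane of the Cloud Backup console, choose Backup > Container Backup, then click the Backup Jobs tab.

In the upper-left corner of the top menu bar, select the region.

On the Backup Jobs tab, click the drop-down list in front of the search box, select Job Name, and search for <backup-name>-hbr to view the state of the backup job and the corresponding reason. For more information, see Container Service ACK backup.

Note: To query a storage class conversion or backup task, search by the corresponding backup name.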

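Backup status is Failed and the message contains "hbr task finished with unexpected status: FAILED, errMsg SOURCE_NOT_EXIST"

Symptom

The backup status is Failed and the message contains "hbr task finished with unexpected status: FAILED, errMsg SOURCE_NOT_EXIST".

Cause

For CSI from other cloud vendors, or self-managed storage such as NFS or Ceph:

In hybrid cloud scenarios, the backup center uses the standard Kubernetes volume mount path as the data backup path by default. For a standard CSI storage driver, the default mount path is /var/lib/kubelet/pods/<pod-uid>/volumes/kubernetes.io~csi/<pv-name>/mount. The same applies to storage drivers officially supported by Kubernetes, such as NFS and FlexVolume. Here /var/lib/kubelet is the default kubelet root path. If your Kubernetes cluster changed this path, the Cloud Backup service may be unable to access the data to back up.

For HostPath storage:

HostPath volumes do not create a mount path under the kubelet root path. The Pod mounts the specified node path directly. By default the backup component cannot read data on node paths, so the backup fails.

Solution

For CSI from other cloud vendors, or self-managed storage such as NFS or Ceph:

Log on to the node where the volume is mounted and troubleshoot with the following steps.

Check whether the kubelet root path of the node has been changed

Run the following command to view the kubelet startup command.

    ps -elf | grep kubelet

If the startup command has the --root-dir parameter, its value is the kubelet root path.

If the startup command has the --config parameter, its value is the kubelet configuration file. Check the configuration file. If it contains the root-dir field, that value is the kubelet root path.

If the startup command contains no root path information, check the content of the kubelet service unit file /etc/systemd/system/kubelet.service. If it contains the EnvironmentFile field, for example:

    EnvironmentFile=-/etc/kubernetes/kubelet

then the environment variable file is /etc/kubernetes/kubelet. Check that file. If it contains the following content:

    ROOT_DIR="--root-dir=/xxx"

then /xxx is the kubelet root path.

If none of these changes are found, the kubelet root path is the default /var/lib/kubelet.

Run the following command to check whether the kubelet root path is a symbolic link to another path:

    ls -al <root-dir>

If the output contains something like:

    lrwxrwxrwx   1 root root   26 Dec  4 10:51 kubelet -> /var/lib/container/kubelet

then the actual root path is /var/lib/container/kubelet.

Verify that the data of the target volume exists under the root path.

Confirm that the volume mount path <root-dir>/pods/<pod-uid>/volumes exists and contains a subpath for the target volume type, such as kubernetes.io~csi or kubernetes.io~nfs.

Add the environment variable KUBELET_ROOT_PATH = /var/lib/container/kubelet/pods to the Deployment csdr/csdr-controller, where /var/lib/container/kubelet is the actual kubelet root path obtained from the configuration and symbolic link checks above.

For HostPath storage:

Submit a ticket.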
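For the non-HostPath case, part of the root-path check above can be sketched in shell. This is an illustration under stated assumptions: the function names are hypothetical, the first helper covers only the `--root-dir` command-line flag (not the `--config` file or the EnvironmentFile), `readlink -f` is assumed to be available on the node, and `kubectl set env` is used here as one way to add the environment variable.

```shell
# Print the value of --root-dir from a kubelet command line (as shown by ps),
# or print nothing if the flag is absent.
kubelet_root_from_cmdline() {
  printf '%s\n' "$1" | sed -n 's/.*--root-dir[= ]\([^ ]*\).*/\1/p'
}

# Follow symbolic links so that the actual kubelet root path is used.
resolve_kubelet_root() {
  readlink -f "$1"
}

# Set KUBELET_ROOT_PATH=<real-root>/pods on the csdr-controller Deployment.
set_kubelet_root_env() {
  kubectl -n csdr set env deployment/csdr-controller "KUBELET_ROOT_PATH=$1/pods"
}
```

A possible sequence is to pass the output of `ps -elf | grep kubelet` to the first helper, fall back to /var/lib/kubelet when it prints nothing and the other checks find no change, resolve the result with the second helper, and pass the resolved path to the third.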

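Backup status is Failed and the message contains "check backup files in OSS bucket failed", "upload backup files to OSS bucket failed", or "download backup files from OSS bucket failed"

Symptom

The backup status is Failed and the message contains one of the messages above, for example "upload backup files to OSS bucket failed".

Cause

The OSS server returned an error when the component checked, uploaded to, or downloaded from the OSS Bucket associated with the backup vault. Possible causes:

Cause 1: Data encryption is configured on the OSS Bucket, but the related KMS permissions have not been added.

Cause 2: For ACK dedicated clusters and registered clusters, some read or write permissions were missed when the component was installed and permissions were configured.

Cause 3: For ACK dedicated clusters and registered clusters, the credentials of the RAM user used to configure permissions have been revoked.

Solution

Solution 1: See Does the backup center support data encryption on the associated OSS Bucket? How do I add the required permissions when KMS server-side encryption is enabled?

Solution 2: Check the policy of the RAM user used to configure permissions. For the policy that the component requires, see Step 1: Configure permissions.

Solution 3: Check whether the credentials of the RAM user used to configure permissions are enabled. If they have been revoked, obtain new credentials, update the content of the Secret alibaba-addon-secret in the csdr namespace, and restart the component with the following command:

    kubectl -nkube-system delete pod -lapp=migrate-controller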

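Backup status is PartiallyFailed and the message contains "PROCESS velero partially completed"

Symptom

The backup status is PartiallyFailed and the message contains "PROCESS velero partially completed".

Cause

When the velero component backed up the application (in-cluster resources), some resources failed to be backed up.

Solution

Run the following command to identify the failed resources and the reasons.

    kubectl -n csdr exec -it $(kubectl -n csdr get pod -l component=csdr | tail -n 1 | cut -d ' ' -f1) -- ./velero describe backup <backup-name>

Fix the problem according to the Errors and Warnings fields in the output.

If the reason is not shown directly, run the following command to obtain the related error logs.

    kubectl -n csdr exec -it $(kubectl -n csdr get pod -l component=csdr | tail -n 1 | cut -d ' ' -f1) -- ./velero backup logs <backup-name>

Backup status is PartiallyFailed and the message contains "PROCESS hbr partially completed"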

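Symptom

The backup status is PartiallyFailed and the message contains "PROCESS hbr partially completed".

Cause

When the Cloud Backup service backed up file-system data (for example OSS, NAS, CPFS, or local volume data), some resources failed to be backed up. Possible causes:

Cause 1: The storage plug-in used by some volumes is not yet supported.

Cause 2: Cloud Backup does not guarantee data consistency. If files are deleted during the backup, the backup may fail.

Solution

In the left navigation pane of the Cloud Backup console, choose Backup > Container Backup, then click the Backup Jobs tab.

In the upper-left corner of the top menu bar, select the region.

On the Backup Jobs tab, click the drop-down list in front of the search box, select Job Name, and search for <backup-name>-hbr to find out why volumes failed or partially failed. For more information, see Container Service ACK backup.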

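Storage class conversion status is Failed and the message contains "storageclass xxx not exists"

Symptom

The storage class conversion status is Failed and the message contains "storageclass xxx not exists".

Cause

The target storage class selected for the conversion does not exist in the current cluster.

Solution

Run the following command to reset the storage class conversion task.

    cat << EOF | kubectl apply -f -
    apiVersion: csdr.alibabacloud.com/v1beta1
    kind: DeleteRequest
    metadata:
      name: reset-convert
      namespace: csdr
    spec:
      deleteObjectName: "<backup-name>"
      deleteObjectType: "Convert"
    EOF

Create the missing storage class in the current cluster.

Run the restore task again and configure the storage class conversion.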

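Storage class conversion status is Failed and the message contains "only support convert to storageclass with CSI diskplugin or nasplugin provisioner"

Symptom

The storage class conversion status is Failed and the message contains "only support convert to storageclass with CSI diskplugin or nasplugin provisioner".

Cause

The target storage class selected for the conversion is not an Alibaba Cloud CSI disk or NAS type.

Solution

The current version supports creating and restoring snapshots only for disk, NAS, and OSS types by default. If you have other restore requirements, submit a ticket.

If you use a storage product that supports public network access, such as Alibaba Cloud OSS, you can create the PVC and PV through static mounting and then restore the application directly, without the storage class conversion step. For details, see Use an ossfs 1.0 statically provisioned volume.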

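Storage class conversion status is Failed and the message contains "current cluster is multi-zoned"

Symptom

The storage class conversion status is Failed and the message contains "current cluster is multi-zoned".

Cause

The current cluster is a multi-zone cluster, and the target storage class selected when converting to a disk storage class has volumeBindingMode set to Immediate. In a multi-zone cluster, this kind of storage class can leave the Pod in the Pending state after the volume is created, because the Pod cannot be scheduled to the required node. For a description of the volumeBindingMode field, see Storage classes.

Solution

Run the following command to reset the storage class conversion task.

    cat << EOF | kubectl apply -f -
    apiVersion: csdr.alibabacloud.com/v1beta1
    kind: DeleteRequest
    metadata:
      name: reset-convert
      namespace: csdr
    spec:
      deleteObjectName: "<backup-name>"
      deleteObjectType: "Convert"
    EOF

If you need to convert to a disk storage class:

In the console, select alicloud-disk. alicloud-disk uses the alicloud-disk-topology-alltype storage class by default.

On the command line, select alicloud-disk-topology-alltype, which is the storage class provided by default by the CSI storage plug-in. You can also define your own storage class with volumeBindingMode set to WaitForFirstConsumer.

Run the restore task again and configure the storage class conversion.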

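Restore task status is Failed and the message contains "multi-node writing is only supported for block volume"

Symptom

A restore or storage class conversion task is in the Failed state, and the message contains "multi-node writing is only supported for block volume. For Kubernetes users, if unsure, use ReadWriteOnce access mode in PersistentVolumeClaim for disk volume".

Cause

To avoid the risk of a disk being forcibly detached because another node mounts it while it is already mounted, CSI validates the AccessModes setting of disk volumes at mount time and rejects ReadWriteMany and ReadOnlyMany.

The backed-up application mounts a volume whose AccessMode is ReadWriteMany or ReadOnlyMany (mostly network storage that supports multi-attach, such as OSS or NAS). When it is restored to Alibaba Cloud disk storage, which does not support multi-attach by default, CSI may raise this error.

Specifically, the following three scenarios can trigger the error:

Scenario 1: The CSI version of the backup cluster is old (or the cluster uses the FlexVolume storage plug-in). Early CSI versions did not validate the AccessModes field of Alibaba Cloud disk volumes at mount time, so the original disk volume fails when restored in a cluster with a newer CSI version.

Scenario 2: The custom storage class used by the backed-up volume does not exist in the restore cluster, and according to the matching rules the volume is restored as an Alibaba Cloud disk volume by default in the new cluster.

Scenario 3: During restore, the storage class conversion feature was used to manually restore the backed-up volume as an Alibaba Cloud disk volume.

Solution

Scenario 1: Starting from v1.8.4, the backup component automatically converts the AccessModes field of disk volumes to ReadWriteOnce. Upgrade the backup center component and restore again.

Scenario 2: Letting the component restore the storage class automatically in the target cluster carries a risk of inaccessible or overwritten data. Create a storage class with the same name in the target cluster before restoring, or use the storage class conversion feature to specify the storage class to use during restore.

Scenario 3: When restoring a network storage volume as disk storage, also set the convertToAccessModes parameter to convert AccessModes to ReadWriteOnce. For details, see convertToAccessModes: list of target AccessModes.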

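Restore task status is Failed and the message contains "only disk type PVs support cross-region restore in current version"

Symptom

The restore task status is Failed and the message contains "only disk type PVs support cross-region restore in current version".

Cause

Starting from v1.7.7, the backup center component migrate-controller supports cross-region restore of disk data backups. Cross-region restore of other data types is not yet supported.

Solution

If you use a storage product that supports public network access, such as Alibaba Cloud OSS, create the PVC and PV through static mounting first and then restore the application. For details, see Use an ossfs 1.0 statically provisioned volume.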

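Restore task status is Failed and the message contains "ECS snapshot cross region request failed"

Symptom

The restore task status is Failed and the message contains "ECS snapshot cross region request failed".

Cause

Starting from v1.7.7, the backup center component migrate-controller supports cross-region restore of disk data backups, but the ECS disk snapshot permissions have not been granted.

Solution

If your cluster is an ACK dedicated cluster, or a registered cluster that connects a self-managed Kubernetes cluster built on ECS, you need to additionally grant the ECS disk snapshot policy. For details, see Registered clusters.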

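Restore task status is Failed and the message contains "accessMode of PVC xxx is xxx"

Symptom

The restore task status is Failed and the message contains "accessMode of PVC xxx is xxx".

Cause

The AccessMode of the disk volume to restore is set to ReadOnlyMany (read-only multi-attach) or ReadWriteMany (read-write multi-attach).

When a disk volume is restored, it must be mounted through the CSI storage plug-in. In the current CSI version:

Multi-instance mounting is supported only for volumes with the multiAttach feature enabled.

Volumes whose VolumeMode is Filesystem (mounted with a file system such as ext4 or xfs) support only read-only multi-attach.

For more information about disk storage, see Use dynamically provisioned disk volumes.

Solution

If you are using storage class conversion to convert volumes that support multi-attach, such as OSS or NAS, into disks, and different replicas of the workload need to keep sharing the volume data, create a restore task and select alibabacloud-cnfs-nas as the conversion target so that NAS volumes managed by CNFS are used. For more information, see Manage NAS file systems through CNFS (recommended).

If the backed-up disk volume was created with an old CSI version that did not check AccessMode, the volume itself does not meet the creation requirements of the current CSI. Consider reworking the original workload to use dynamically provisioned disk volumes first, to avoid the risk of the disk being forcibly detached when the Pod is scheduled to another node.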

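Restore task status is Completed, but some resources are not created in the restore cluster

Symptom

The restore task status is Completed, but some resources are not created in the restore cluster.

Cause

Cause 1: The resource was not backed up.

Cause 2: The resource was excluded by the configuration at restore time.

Cause 3: The application restore subtask partially failed.

Cause 4: The resource was restored successfully but was later reclaimed because of its ownerReferences configuration or business logic.

Solution

Solution 1:

Run the following command to view the backup details.

    kubectl -n csdr exec -it $(kubectl -n csdr get pod -l component=csdr | tail -n 1 | cut -d ' ' -f1) -- ./velero describe backup <backup-name> --details

Confirm whether the target resource was backed up. If it was not, check whether it was excluded by the backup task's included or excluded namespaces, resources, or other settings, and back up again. Cluster-level resources of applications (Pods) running in namespaces that were not selected are not backed up by default. To back up all cluster-level resources, see Cluster-level backup.

Solution 2:

If the target resource was not restored, check whether it was excluded by the restore task's included or excluded namespaces, resources, or other settings, and restore again.

Solution 3:

Run the following command to identify the failed resources and the reasons.

    kubectl -n csdr exec -it $(kubectl -n csdr get pod -l component=csdr | tail -n 1 | cut -d ' ' -f1) -- ./velero describe restore <restore-name>

Fix the problem according to the Errors and Warnings fields in the output.

Solution 4:

Check the audit records of the resource to confirm whether it was deleted unexpectedly after creation.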

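migrate-controller cannot start in a Flexvolume cluster

The backup center component migrate-controller does not support Flexvolume clusters. To use the backup center, migrate the Flexvolume plug-in to CSI.

For other cases, join the DingTalk user group (group number: 35532895) for help.

If, during the migration from Flexvolume to CSI, you need to back up in the Flexvolume cluster and restore in the CSI cluster, see Migrate applications from an earlier-version Kubernetes cluster by using the backup center.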

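Can a backup vault be modified?

The backup center does not support modifying a backup vault. To change one, you can only delete it and create a new one with a different name.

A backup vault is shared, so an existing vault may be in use by a backup or restore at any time. Changing any parameter at that point could cause a backup or restore to fail to find its data. For this reason, a backup vault cannot be modified or re-created with the same name.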

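Can a backup vault be associated with an OSS Bucket whose name does not follow the "cnfs-oss-*" format?

For clusters other than ACK dedicated clusters and registered clusters, the backup center component has read and write permissions on OSS Buckets named cnfs-oss-* by default. To avoid backups overwriting existing data in a Bucket, we recommend that you create a dedicated OSS Bucket for the backup center that follows the cnfs-oss-* naming rule.

To associate a backup vault with an OSS Bucket whose name does not follow the "cnfs-oss-*" format, configure permissions for the component. For details, see ACK dedicated clusters.

After the permissions are configured, run the following commands to restart the backup service component.

    kubectl -n csdr delete pod -l control-plane=csdr-controller
    kubectl -n csdr delete pod -l component=csdr

If you have already created a backup vault associated with such an OSS Bucket, wait for the connectivity check to finish and the state to become Available before backing up or restoring. The connectivity check runs about every five minutes. Run the following command to query the state of the backup vault.

    kubectl -n csdr get backuplocation

Expected output:

    NAME                    PHASE       LAST VALIDATED   AGE
    a-test-backuplocation   Available   7s               6d1h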

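How do I fill in the backup cycle when creating a backup plan?

The backup cycle accepts either a Crontab expression (for example 1 4 * * *) or an interval (for example 6h30m, which means one backup every 6 hours and 30 minutes).

A Crontab expression is parsed as shown below. Each field accepts the values in its listed range (for example 0-59 for minute), and * means any valid value for that field. Examples:

1 4 * * *: back up once a day at 4:01 AM.

0 2 15 * 1: back up at 2:00 AM on the 15th of each month. Note that in standard cron implementations, an expression that restricts both day of month and day of week also matches every Monday.

    * * * * *
    | | | | |
    | | | | ·----- day of week (0 - 6) (Sun to Sat)
    | | | ·-------- month (1 - 12)
    | | ·----------- day of month (1 - 31)
    | ·-------------- hour (0 - 23)
    ·----------------- minute (0 - 59)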
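To make the field order in the diagram above concrete, the following sketch splits a five-field Crontab expression and labels each field. The function name is hypothetical, and the helper only checks the number of fields; it does not validate value ranges or reflect how the backup center itself parses the expression.

```shell
# Hypothetical helper: label the five fields of a Crontab expression.
# The body runs in a subshell so that 'set -f' (which stops '*' from being
# expanded to file names) does not leak into the caller.
cron_fields() (
  set -f
  set -- $1
  if [ "$#" -ne 5 ]; then
    echo "expected 5 fields, got $#" >&2
    exit 1
  fi
  printf 'minute=%s hour=%s day-of-month=%s month=%s day-of-week=%s\n' \
    "$1" "$2" "$3" "$4" "$5"
)
```

For example, `cron_fields '1 4 * * *'` prints `minute=1 hour=4 day-of-month=* month=* day-of-week=*`.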

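Which default adjustments are made to the backed-up YAML resources during restore?

During restore, the YAML resources are adjusted as follows:

Adjustment 1:

If the capacity of a disk volume is less than 20 GiB, it is adjusted to 20 GiB during restore.

Adjustment 2:

Service resources are adapted according to their type:

NodePort Services: the port number is kept by default when restoring across clusters.

LoadBalancer Services: when ExternalTrafficPolicy is Local, HealthCheckNodePort uses a random port number by default. To keep the port number, set spec.preserveNodePorts: true when creating the restore task.

For a Service that specifies an existing SLB in the backup cluster, the restore uses the original SLB and disables forced listener override by default. You need to configure the listeners in the SLB console.

For a Service whose SLB is managed by CCM in the backup cluster, CCM creates a new SLB instance during restore. For more information, see Considerations for configuring load balancing for Services.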

如何查看備份的具體資源?

叢集應用備份的資源

叢集中的YAML儲存在備份倉庫關聯的OSS Bucket中。您可以通過以下任一方式查看具體的備份資源。

在任意同步備份的叢集中,執行以下命令查看資源。

kubectl -n csdr get pod -l component=csdr | tail -n 1 | cut -d ' ' -f1 kubectl -n csdr exec -it csdr-velero-xxx -c velero -- ./velero describe backup <backup-name> --details通過Container Service控制台查看。

登入Container Service管理主控台,在左側導覽列選擇叢集列表。

在叢集列表頁面,單擊目的地組群名稱,然後在左側導覽列,選擇。

在應用備份頁面,單擊備份記錄頁簽,在備份記錄列單擊待查看的目標備份記錄。

雲端硬碟儲存卷備份的資源

登入ECS管理主控台。

在左側導覽列,選擇。

在頁面左側頂部,選擇目標資源所在的資源群組和地區。

在快照頁面,根據云盤ID查詢快照。

非雲端硬碟儲存卷備份的資源

在左側導覽列,選擇。

在頂部功能表列左上方,選擇所在地區。

查看容器備份。

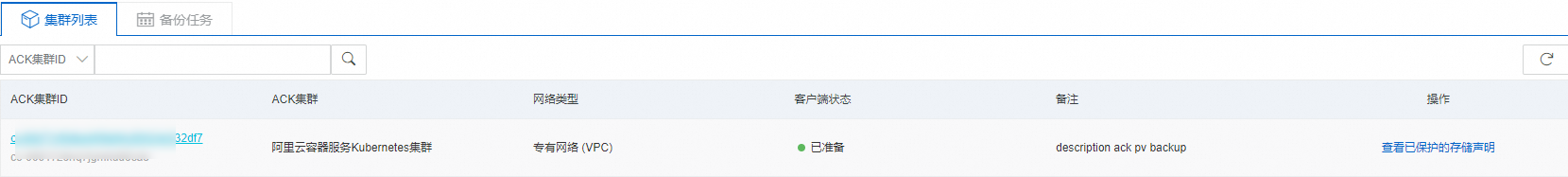

集群列表:展示已進行備份保護的叢集列表。單擊ACK集群ID可查看已保護的儲存卷聲明。更多介紹,請參見儲存卷聲明。

如果客户端状态顯示異常,則說明雲備份服務在Container Service叢集中運行異常。請前往Container Service管理主控台守護進程集,進行定位處理。

备份任务:展示備份任務的運行狀態。

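Can I back up in an earlier-version cluster and restore to a later-version cluster?

Yes.

During backup, all supported apiVersion versions of a resource are backed up by default. For example, Deployment in a 1.16 cluster supports extensions/v1beta1, apps/v1beta1, apps/v1beta2, and apps/v1, so the backup vault stores all four versions of the Deployment (regardless of the version you used at deployment, based on the Kubernetes conversion capability).

During restore, a resource is restored with the apiVersion recommended by the restore cluster's version. For example, the recommended version for Deployment in a 1.28 cluster is apps/v1, so the backup above is restored as an apps/v1 Deployment.

If a resource has no apiVersion supported by both versions, you must deploy it manually during restore. For example, Ingress in a 1.16 cluster supports extensions/v1beta1 and networking.k8s.io/v1beta1 and cannot be restored directly to clusters of version 1.22 or later, which require networking.k8s.io/v1. For more on official Kubernetes API version migrations, see the official documentation. Because of apiVersion compatibility issues, we do not recommend using the backup center to migrate applications from a later-version cluster to an earlier-version cluster, or to migrate clusters earlier than 1.16 to later-version clusters.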

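Is load balancer traffic switched automatically during restore?

No.

Service resources are adapted according to their type during restore:

NodePort Services: the port number is kept by default when restoring across clusters.

LoadBalancer Services: when ExternalTrafficPolicy is Local, HealthCheckNodePort uses a random port number by default. To keep the port number, set spec.preserveNodePorts: true when creating the restore task.

For a Service that specifies an existing SLB in the backup cluster, the restore uses the original SLB and disables forced listener override by default. You need to configure the listeners in the SLB console.

For a Service whose SLB is managed by CCM in the backup cluster, CCM creates a new SLB instance during restore. For more information, see Considerations for configuring load balancing for Services.

By default, neither disabling forced listener override nor using a new SLB instance switches the traffic of the backup cluster. If you use service discovery from other cloud products or third parties and do not want automatic service discovery to switch traffic to the new SLB instance, consider excluding Service resources from the backup and deploying them manually when you are ready to switch.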

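Why are resources in namespaces such as csdr, ack-csi-fuse, kube-system, kube-public, and kube-node-lease not backed up by default?

csdr is the working namespace of the backup center. Backing it up and restoring it directly would make the component malfunction in the restore cluster. In addition, the backup center has its own backup synchronization logic, so you do not need to migrate backups to a new cluster manually.

ack-csi-fuse is the working namespace of the CSI storage component and runs the FUSE client Pods maintained by CSI. When storage is restored in a new cluster, the CSI of the new cluster automatically synchronizes the corresponding clients, so no manual backup or restore is needed.

kube-system, kube-public, and kube-node-lease are the default system namespaces of a Kubernetes cluster. Because cluster parameters and configurations differ, they cannot simply be restored between clusters. In addition, the backup center focuses on backing up and restoring business applications. Before a restore task, install and configure the required system components in the restore cluster in advance. For example:

ACR credential-free component: re-authorize the restore cluster and configure acr-configuration.

ALB Ingress component: configure ALBConfig and related resources in advance.

Backing up system components in kube-system directly to a new cluster may cause them to run abnormally.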

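Does the backup center always use ECS disk snapshots to back up disk data? What is the default snapshot type?

In the following scenario, the backup center uses ECS disk snapshots to back up disk data by default.

The cluster is an ACK managed cluster or an ACK dedicated cluster.

The cluster version is 1.18 or later, and the CSI storage plug-in is version 1.18 or later.

In other cases, the backup center uses Cloud Backup to back up disk data by default.

Disk snapshots created by the backup center have the instant access feature enabled by default, and the snapshot retention period matches the validity period configured for the backup by default. Since 11:00 on October 12, 2023, Alibaba Cloud ECS no longer charges instant access storage fees or instant access usage fees for snapshots in any region. For more information, see Snapshot instant access.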

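Why does the retention period of ECS disk snapshots created by a backup differ from the one configured for the backup?

Creating disk snapshots depends on the cluster's csi-provisioner component (or the managed-csiprovisioner component). When csi-provisioner is earlier than version 1.20.6, it does not support specifying a retention period or enabling instant access when creating the snapshot resource (VolumeSnapshot). As a result, the validity period configured for the backup does not take effect on ECS disk snapshots.

Therefore, when you use the volume data backup feature for disks, upgrade csi-provisioner to version 1.20.6 or later.

If your cluster cannot be upgraded to that csi-provisioner version, configure a default snapshot retention period as follows:

Upgrade the backup center component migrate-controller to v1.7.10 or later.

Run the following command to check whether the cluster has a snapshot class that configures a default 30-day snapshot retention period.

    kubectl get volumesnapshotclass csdr-disk-snapshot-with-default-ttl

If it does not exist, create the csdr-disk-snapshot-with-default-ttl snapshot class with the YAML below.

If it already exists, set retentionDays in the csdr-disk-snapshot-with-default-ttl snapshot class to 30.

    apiVersion: snapshot.storage.k8s.io/v1
    deletionPolicy: Retain
    driver: diskplugin.csi.alibabacloud.com
    kind: VolumeSnapshotClass
    metadata:
      name: csdr-disk-snapshot-with-default-ttl
    parameters:
      retentionDays: "30"

After this configuration, every backup in the cluster that involves disk volumes creates disk snapshots whose retention matches the retentionDays field above.

Important: If you want the retention period of ECS disk snapshots created by backups to always match the backup configuration, upgrade csi-provisioner to version 1.20.6 or later.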

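In application backup, which scenarios are suitable for backing up volume data?

What is backing up volume data?

The data in a volume is backed up to cloud storage through ECS disk snapshots or the HBR Cloud Backup service, and during restore the data is written to a new disk or NAS for the restored application to use. The restored application and the original application do not share a data source and do not affect each other.

If you do not need to copy data, or you need a shared data source, you can choose not to back up volume data. Make sure that the excluded resource list of the backup does not contain PVC or PV resources. During restore, the volumes are deployed to the new cluster directly from their original YAML.

Which scenarios are suitable for backing up volume data?

Data disaster recovery and version history.

The storage type is disk, because a regular disk can be mounted to only one node.

Cross-region backup and restore. Storage types other than OSS usually cannot be accessed across regions.

The data of the backed-up application and the restored application must be isolated.

The storage plug-ins or versions of the backup cluster and the restore cluster differ significantly, so the YAML cannot be restored directly.

What are the risks of not backing up volume data for stateful applications?

If volume data is not backed up and the backup contains stateful applications, the restore behaves as follows:

For volumes whose reclaim policy is Delete:

This is similar to deploying a PVC for the first time. If the restore cluster has the corresponding storage class, CSI creates a new PV automatically. For disk storage, this means a newly created empty disk is mounted to the restored application. For statically provisioned volumes with no storage class, or if the restore cluster has no corresponding storage class, the restored PVC and Pod stay in the Pending state until you manually create the corresponding PV or storage class.

For volumes whose reclaim policy is Retain:

The restore follows the original YAML, PV first and then PVC. For storage that supports multi-attach, such as NAS and OSS, the original file system or Bucket is reused directly. For disks, there is a risk of forced detachment.

Run the following command to query the reclaim policy of volumes:

    kubectl get pv -o=custom-columns=CLAIM:.spec.claimRef.name,NAMESPACE:.spec.claimRef.namespace,NAME:.metadata.name,RECLAIMPOLICY:.spec.persistentVolumeReclaimPolicy

Expected output:

    CLAIM        NAMESPACE   NAME                                       RECLAIMPOLICY
    www-web-0    default     d-2ze53mvwvrt4o3xxxxxx                     Delete
    essd-pvc-0   default     d-2ze5o2kq5yg4kdxxxxxx                     Delete
    www-web-1    default     d-2ze7plpd4247c5xxxxxx                     Delete
    pvc-oss      default     oss-e5923d5a-10c1-xxxx-xxxx-7fdf82xxxxxx   Retain

In data protection, how do I choose the nodes that can run file system backup operations?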

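By default, volumes other than Alibaba Cloud disks are backed up and restored with Cloud Backup, which requires running the Cloud Backup task on a node. The default scheduling policy of ACK Scheduler is consistent with the community Kubernetes scheduler. You can also restrict tasks to specific nodes according to your business needs.

Cloud Backup tasks currently cannot be scheduled to virtual nodes.

By default, backup tasks are low-priority tasks. For a given backup task, a node runs the backup of at most one volume at a time.

Backup center node scheduling policies

exclude policy (default): all nodes can be used for backup and restore by default. To keep Cloud Backup tasks off certain nodes, add the csdr.alibabacloud.com/agent-excluded="true" label to those nodes.

    kubectl label node <node-name-1> <node-name-2> csdr.alibabacloud.com/agent-excluded="true"

include policy: unlabeled nodes cannot be used for backup and restore by default. Add the csdr.alibabacloud.com/agent-included="true" label to nodes that are allowed to run Cloud Backup tasks.

    kubectl label node <node-name-1> <node-name-2> csdr.alibabacloud.com/agent-included="true"

prefer policy: all nodes can be used for backup and restore by default, with the following scheduling priority:

Nodes with the csdr.alibabacloud.com/agent-included="true" label are preferred.

Nodes with no special label come next.

Nodes with the csdr.alibabacloud.com/agent-excluded="true" label are used last.

Switching the node selection policy

Run the following command to edit the csdr-config ConfigMap.

    kubectl -n csdr edit cm csdr-config

Add the node_schedule_policy setting to the applicationBackup configuration.

Run the following command to restart the csdr-controller Deployment so that the configuration takes effect.

    kubectl -n csdr delete pod -lapp=csdr-controller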

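What are the use cases for application backup and data protection?

Application backup:

The backup target is the workloads running in the cluster, including applications, services, configuration files, and other cluster resources.

Optionally, the data in the volumes mounted by the applications can be backed up at the same time.

Note: In application backup, data in volumes that are not mounted by any Pod is not backed up.

To back up applications together with all volume data, also create a data protection backup.

It is used for cluster migration and for fast application recovery in disaster recovery scenarios.

Data protection:

The backup target is volume data. The resources include only PVCs and PVs.

The restore target is a PVC that can be mounted directly by an application, and the data it points to is independent of the backup data. If a PVC is deleted by accident and restored through the backup center, a new disk is created whose data matches the data at backup time. Apart from pointing to a different disk instance, the mount parameters of the PVC are unchanged, so the application can mount the restored PVC directly.

It is used for data replication and data disaster recovery.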

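How do I skip backup and restore of the data in some volumes?

In production, the data in some volumes can be lost during migration or disaster recovery, for example log data.

Some storage sources are massive, accessible across zones or even regions, and have built-in multi-replica disaster recovery (for example OSS). For some low-priority workloads, you can also consider skipping data backup and restore for them.

Assume that the business namespace to back up has volume A, whose data does not need to be backed up, and volume B, whose data does.

Backup procedure

Use the data protection feature and select volume B. Data protection backs up both the YAML of volume B and its data. See Back up and restore applications within a cluster.

Note: Backing up data means creating an independent backup source from the data source that volume B points to, through snapshots or the Cloud Backup service. A volume restored from the backup source has the same content as the original volume, but they are two separate copies that do not affect each other.

Use the application backup feature and select the namespace of the application to back up. Do not enable the volume backup option. Application backup then backs up the YAML of volume A and volume B by default. See Back up and restore applications within a cluster.

Note: If volume A no longer needs to be deployed in the new cluster, that is, its YAML does not need to be restored, specify pvc, pv in the excluded resources of the advanced configuration.

Restore procedure

As when deploying a new application in a cluster, restore the volumes and data first, then the workloads on top of them.

Restore the data protection backup in the target cluster. This restores the YAML and data of volume B. See Back up and restore applications within a cluster.

Restore the application backup in the target cluster. This restores the YAML of volume A and the other resources of the application, and CSI dynamically creates a new storage source or reuses the old one according to the reclaim policy of volume A's PV. See What are the risks of not backing up volume data for stateful applications? under In application backup, which scenarios are suitable for backing up volume data?

At this point, the application, volume A, and volume B (with B's data) have been restored.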

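Does the backup center support data encryption on the associated OSS Bucket? How do I add the required permissions when KMS server-side encryption is enabled?

OSS Bucket data encryption is either server-side or client-side. The backup center currently supports only server-side encryption. You can manually enable server-side encryption and configure the encryption method for your bound Bucket, for example through the OSS console. For more information about server-side encryption of OSS Buckets and the procedure, see Server-side encryption.

If you use KMS-managed keys for encryption and decryption with Bring Your Own Key (BYOK), that is, a specific CMK ID is configured, you need to add some KMS permissions for the backup center as follows:

Create the following custom policy. For details, see Create a custom policy.

    {
      "Version": "1",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "kms:List*",
            "kms:DescribeKey",
            "kms:GenerateDataKey",
            "kms:Decrypt"
          ],
          "Resource": [
            "acs:kms:*:141661496593****:*"
          ]
        }
      ]
    }

This policy allows all KMS keys under the Alibaba Cloud account ID to be called. For finer-grained Resource configuration, see Authorization information.

For ACK dedicated clusters and registered clusters, grant the policy to the RAM user used during installation. For details, see Manage the permissions of a RAM user. For other clusters, grant it to the AliyunCSManagedBackupRestoreRole role. For details, see Manage the permissions of a RAM role.

If you use the default KMS key managed by OSS, or keys fully managed by OSS, no additional authorization is needed.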

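How do I change the images used by applications in a backup during restore?

Assume that the image used by the application in the backup is docker.io/library/app1:v1.

Change the image registry address (registry)

In hybrid cloud scenarios, a prerequisite for deploying applications across cloud providers or moving IDC applications to the cloud is usually moving the images to the cloud, that is, uploading them to a repository in Alibaba Cloud Container Registry (ACR).

For this requirement, configure an image registry address mapping (the imageRegistryMapping field). For example, the following mapping changes the image to registry.cn-beijing.aliyuncs.com/my-registry/app1:v1.

    docker.io/library/: registry.cn-beijing.aliyuncs.com/my-registry/

Change the image repository (repository), tag, and so on

This kind of change is an advanced configuration. Define the adjustment policy in a ConfigMap before restoring.

Assume that the image needs to be changed to app2:v2. Create the following configuration:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: <config-name>
      namespace: csdr
      labels:
        velero.io/plugin-config: ""
        velero.io/change-image-name: RestoreItemAction
    data:
      "case1": "app1:v1,app2:v2"
      # To change only the repository
      # "case1": "app1,app2"
      # To change only the tag
      # "case1": "v1:v2"
      # To change only images under a specific registry
      # "case1": "docker.io/library/app1:v1,registry.cn-beijing.aliyuncs.com/my-registry/app2:v2"

If you need several adjustments, continue with case2, case3, and so on under data.

After the ConfigMap is created, create the restore task as usual and leave the imageRegistryMapping field empty.

Note: The configuration applies to all restore tasks in the cluster. Follow the comments above to make the adjustment policy as specific as possible, for example by limiting it to a particular registry. Delete the configuration promptly when it is no longer needed.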