JMeter is an open source performance testing tool developed by Apache. JMeter supports features such as parameterization and assertion. With its extensive open-source ecosystem, Apache JMeter provides a wide range of protocol and controller extensions, along with the ability to write custom scripts for parameter handling. On PTS, you can directly use JMeter for performance testing. Seamless resource expansion and integration with cloud monitoring enhance the capabilities of JMeter in simulating high concurrency and identifying bottlenecks and issues. This topic describes the benefits and procedure of JMeter performance testing on PTS.

Step 1: Create a JMeter scenario

Upload a JMeter test file.

Log on to the PTS console, choose , and then click JMeter.

In the Create a JMeter Scenario dialog box, enter a Scenario Name.

In the Scenario Settings section, upload a JMeter test file in XML format. The file name must end with .jmx and cannot contain spaces. After the file is uploaded, PTS automatically completes plug-ins. For more information, see Automatic Completion of JMeter Plug-ins.

Click Upload File to upload other files, such as data files in CSV format and plug-ins in JAR format.

Important: These files may overwrite a previously uploaded file that has the same name. To decide whether to overwrite, view the MD5 hash value of the file in the Actions column and compare it with the MD5 hash value of your on-premises file.
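The comparison described above needs the MD5 hash of the on-premises file. The following is a minimal sketch that uses only the Python standard library; the function names and the idea of pasting the console value into `console_md5` are illustrative assumptions, not part of PTS.

```python
import hashlib


def md5_of_file(path, chunk_size=1 << 20):
    """Return the hex MD5 digest of a local file, read in chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_console(path, console_md5):
    """Compare the local digest with a value copied from the console (assumed hex)."""
    return md5_of_file(path) == console_md5.strip().lower()
```

If `matches_console` returns `True`, the uploaded copy and the local copy have the same content, so overwriting changes nothing.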

Limits:

JMX script file: The file size cannot exceed 2 MB. You can upload multiple JMX script files. However, you can use only one JMX script file for a JMeter test. You must select one JMX script file before initiating a test.

JAR file: The file size cannot exceed 10 MB. Before you upload a JAR file, debug it in your local JMeter environment to make sure that it works.

CSV file: The size of a CSV file cannot exceed 60 MB. You can use the OSS Data Source feature to upload files larger than 60 MB. The number of non-JMX files cannot exceed 20 in total.

Important: Do not rename an XLSX file to give it a .csv extension. We recommend that you export a CSV file from software such as Excel or Numbers, or generate one with Apache Commons CSV.
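The limits above can be checked locally before you upload. The following sketch is only an illustration: `check_uploads` is a hypothetical helper, and it assumes that 1 MB means 1,048,576 bytes, which the limits do not state.

```python
import os

MB = 1024 * 1024  # assumption: the limits use binary megabytes
SIZE_LIMITS = {".jmx": 2 * MB, ".jar": 10 * MB, ".csv": 60 * MB}
MAX_NON_JMX_FILES = 20


def check_uploads(files):
    """files: list of (file_name, size_in_bytes). Return a list of problems found."""
    problems = []
    non_jmx_count = 0
    for name, size in files:
        suffix = os.path.splitext(name)[1].lower()
        if suffix == ".jmx":
            if " " in name:
                problems.append(f"{name}: JMX file name contains spaces")
        else:
            non_jmx_count += 1
        limit = SIZE_LIMITS.get(suffix)
        if limit is not None and size > limit:
            problems.append(f"{name}: larger than {limit // MB} MB")
    if non_jmx_count > MAX_NON_JMX_FILES:
        problems.append(f"{non_jmx_count} non-JMX files, limit is {MAX_NON_JMX_FILES}")
    return problems
```

An empty list means that the file set passes these checks. It does not confirm that a JAR file works in JMeter or that a CSV file is well formed.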

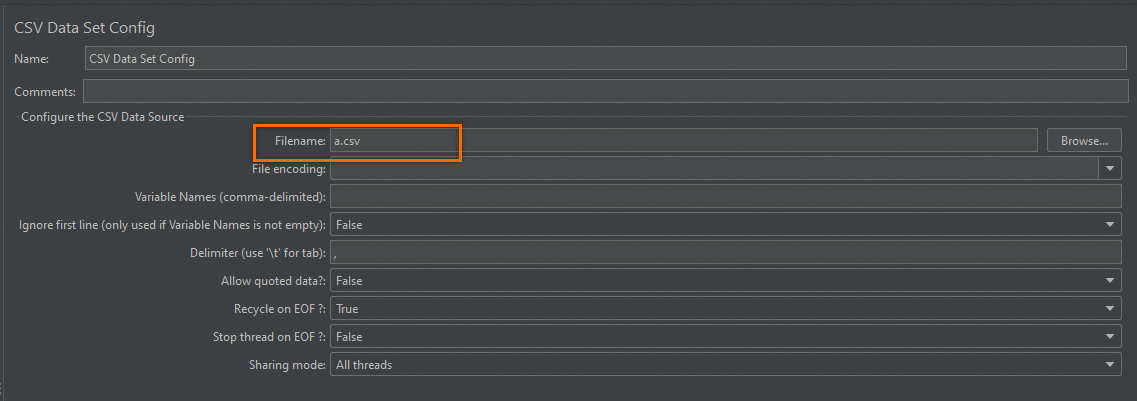

If a data file is associated with a JMX script, enter only the file name, without the file path, in the Filename field of the CSV Data Set Config, as shown in the figure. Otherwise, the data file cannot be read. Similarly, if you use __CSVRead to read data from CSV files or specify files in JAR packages, enter only the file name.
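A script can be scanned for Filename values that still contain a path before it is uploaded. The sketch below assumes the usual JMX layout, in which each CSV Data Set Config is a `CSVDataSet` element with a `stringProp` named `filename`; the helper name is hypothetical, and the sketch does not inspect `__CSVRead` calls.

```python
import xml.etree.ElementTree as ET
from pathlib import PureWindowsPath


def csv_filenames_with_paths(jmx_text):
    """Return (configured_value, bare_file_name) pairs for entries that include a path."""
    root = ET.fromstring(jmx_text)
    offenders = []
    for config in root.iter("CSVDataSet"):
        for prop in config.findall("stringProp"):
            if prop.get("name") == "filename" and prop.text:
                # PureWindowsPath splits on both "/" and "\" on any platform.
                bare = PureWindowsPath(prop.text).name
                if bare != prop.text:
                    offenders.append((prop.text, bare))
    return offenders
```

Each returned pair shows the value found in the script and the bare file name that should replace it.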

If you have uploaded multiple JMX files, you must select one JMX file as the JMeter script used to initiate a JMeter test.

(Optional) Select Split File for a CSV file to ensure that the data in the file is unique for each load generator. Otherwise, the same data is used in different generators. For more information, see Use CSV parameter files in JMeter.
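To see why splitting keeps data unique per load generator, consider the sketch below. It is a conceptual illustration only: the text does not describe how PTS actually partitions a file, so the contiguous-slice strategy and the `split_rows` name are assumptions.

```python
def split_rows(rows, generator_count):
    """Divide rows into disjoint contiguous slices, one per load generator."""
    base, extra = divmod(len(rows), generator_count)
    slices, start = [], 0
    for index in range(generator_count):
        size = base + (1 if index < extra else 0)
        slices.append(rows[start:start + size])
        start += size
    return slices


rows = ["user1", "user2", "user3", "user4", "user5"]
with_split = split_rows(rows, 2)   # each generator gets its own rows
without_split = [rows, rows]       # every generator reads the whole file
```

With splitting, no row appears on two generators. Without it, both generators read `user1` first, so the same account may be used concurrently.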

(Optional) If the script that you upload contains distributed adaptation components, such as timers and controllers, you can configure these components based on different IP addresses of multiple JMeter tests. This helps ensure accurate and effective performance testing.

Set the Synchronous Timer. If the uploaded JMeter script contains a Synchronizing Timer, you must set it to Global or Single Generator.

Set the Constant Throughput Timer. If the uploaded JMeter script contains a Constant Throughput Timer, you must set it to Global or Single Generator. For more information, see Example on Constant Throughput Distributed Usage.

Specify whether to enable Use Dependency Environment. For more information, see JMeter Environment Management.

If you select Yes, you must select an existing environment.

If you select No, you must select a JMeter version. Apache JMeter 5.0 and Java 11 are supported.

Load configuration

For more information about how to set loads, see Configure load models and levels.

(Optional) Advanced settings.

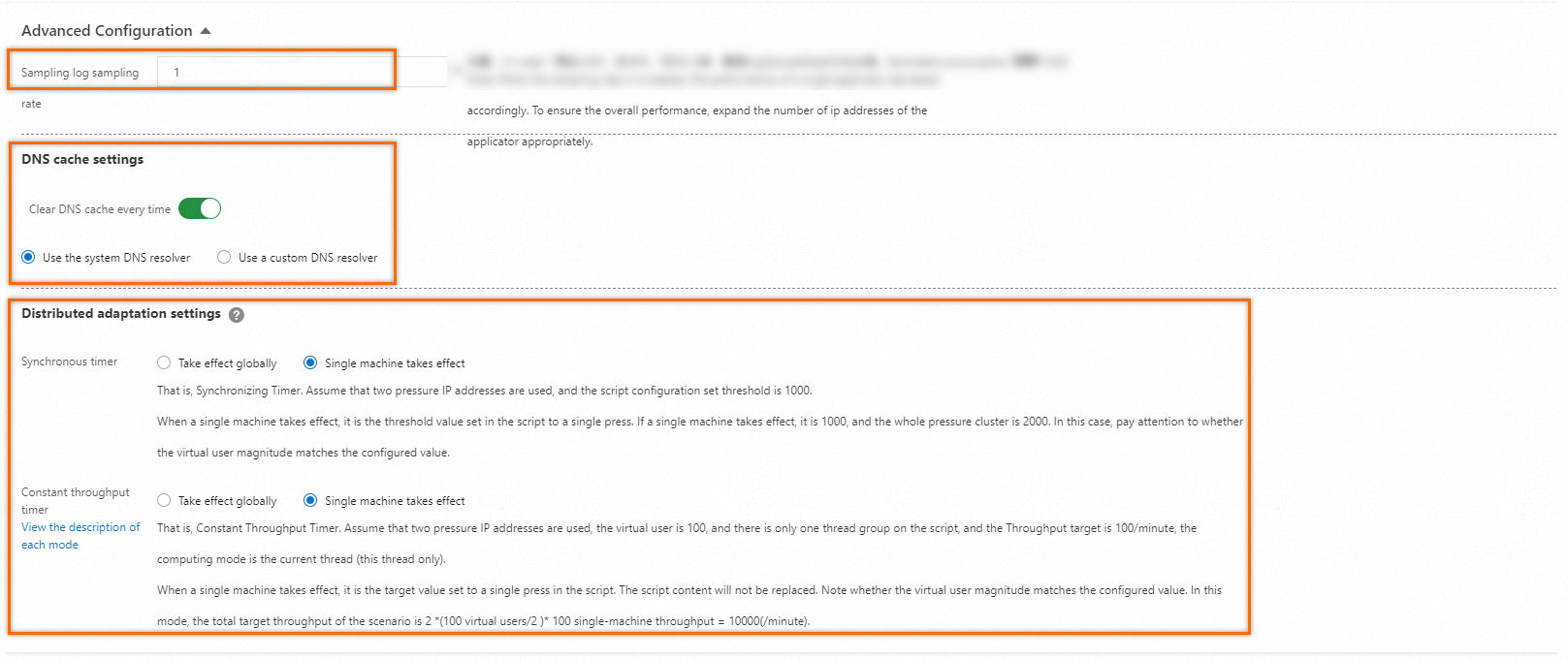

Advanced settings include log sampling rate settings, DNS configuration, and distributed adaptation component settings. By default, this feature is disabled. You can enable this feature to perform configurations.

Status with advanced settings disabled

In this case, the default settings apply: the log sampling rate is 1%, the DNS cache is not automatically cleared in each cycle, the default DNS resolver is used, and both the synchronizing timer and the constant throughput timer take effect for a single test.

Status with advanced settings enabled

Log sampling rate settings

The default sampling rate is 1%. You can specify a different log sampling rate. To reduce the sampling rate, enter a value within the (0,1] interval. To increase the sampling rate, enter a number that is divisible by 10 within the (1,50] interval, for example, 20.

Important: If you specify a sampling rate greater than 1%, an additional fee is charged. For example, if you set the sampling rate to 20%, an additional fee of 20% × VUM is charged. For more information, see Pay-as-you-go.
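The sampling rate rule and the extra charge can be expressed as a short check. This is a sketch under the assumption that the fee is exactly the sampling percentage multiplied by the VUM consumed, as in the 20% example; the function names are hypothetical, and actual billing is defined by the Pay-as-you-go topic.

```python
def is_valid_sampling_rate(rate_percent):
    """Valid rates: any value in (0, 1], or a multiple of 10 in (1, 50]."""
    if 0 < rate_percent <= 1:
        return True
    return 1 < rate_percent <= 50 and rate_percent % 10 == 0


def extra_fee_vum(rate_percent, vum):
    """Additional VUM charged for a sampling rate above 1% (assumed: rate% x VUM)."""
    if not is_valid_sampling_rate(rate_percent):
        raise ValueError(f"unsupported sampling rate: {rate_percent}%")
    return vum * rate_percent / 100 if rate_percent > 1 else 0.0
```

For example, `extra_fee_vum(20, 1000)` evaluates to 200.0, while any rate of 1% or lower adds nothing.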

DNS Cache Settings

You can choose whether to clear the DNS cache for each access request. You can use the default DNS resolver or custom DNS resolver to clear DNS cache.

Situations where you might need to use a custom DNS resolver:

Performance testing on the Internet

To test a service on the Internet, you can bind the public domain name of the service to the IP address of the testing environment to isolate the testing traffic from online traffic.

Performance testing in an Alibaba Cloud VPC

To test services in an Alibaba Cloud VPC, you can bind the domain names of APIs to the internal addresses of the VPC. For more information, see Performance testing in an Alibaba Cloud VPC.

Distributed adaptation component settings

If the script that you upload contains distributed adaptation components, such as timers and controllers, you can configure these components based on different IP addresses of multiple JMeter tests. This helps ensure accurate and effective performance testing.

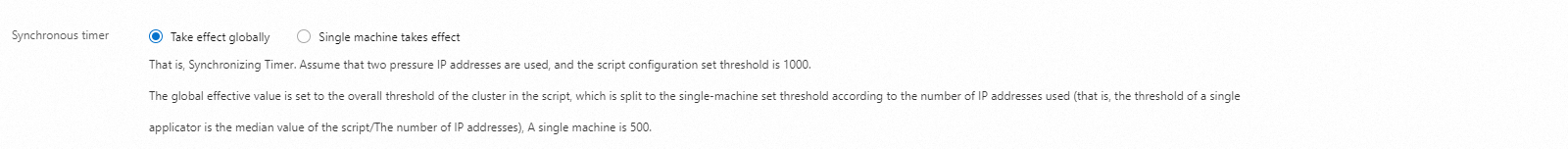

Set the Synchronous Timer. If the uploaded JMeter script contains a Synchronizing Timer, you must set it to Global or Single Generator.

Global specifies that the threshold in the script applies as the total threshold of all load generators. Threshold for a single generator = (Threshold in the script)/(Number of IP addresses). For example, two IP addresses are used, and the threshold is 1,000 in the script. In this example, the threshold for a single generator is 500.

Single Generator specifies that the threshold in the script applies to each load generator, which does not overwrite the script. Note that the concurrent users must match the configured value. For example, two IP addresses are used, and the threshold is 1,000 in the script. In this example, the threshold for a single generator and for all generators are 1,000 and 2,000, respectively.
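The two modes differ only in how the script threshold is distributed. The sketch below reproduces the arithmetic of the two examples above; the function names and the mode strings `"global"` and `"single"` are illustrative, not PTS identifiers.

```python
def per_generator_threshold(script_threshold, generator_count, mode):
    """Threshold that each load generator applies."""
    if mode == "global":
        # The script value is the total and is divided among the generators.
        return script_threshold / generator_count
    if mode == "single":
        # The script value is applied unchanged on every generator.
        return script_threshold
    raise ValueError(f"unknown mode: {mode}")


def total_threshold(script_threshold, generator_count, mode):
    """Threshold summed over all load generators."""
    return per_generator_threshold(script_threshold, generator_count, mode) * generator_count
```

With two IP addresses and a script threshold of 1,000, Global gives 500 per generator and 1,000 in total, and Single Generator gives 1,000 per generator and 2,000 in total.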

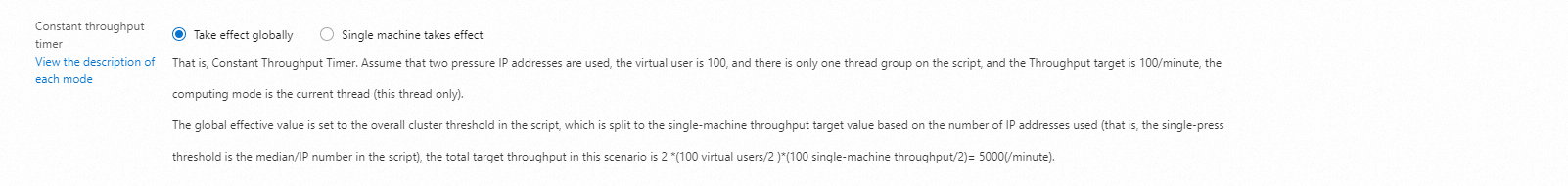

Set the Constant Throughput Timer. If the uploaded JMeter script contains a Constant Throughput Timer, you must set it to Global or Single Generator.

Global specifies that the threshold in the script applies as the total threshold of all load generators. Threshold for a single generator = (Threshold in the script)/(Number of IP addresses). Assume that a performance test uses two IP addresses to simulate 100 concurrent users in a thread group. In the script, the target throughput is set to 100 requests per minute in This Thread Only mode. In this case, the total target throughput of the scenario is 2 × (100 concurrent users/2) × (100/2) = 5,000 requests per minute.

Single Generator specifies that the threshold in the script applies to each load generator, which does not overwrite the script. Note that the number of concurrent users must match the configured value. Assume that a performance test uses two IP addresses to simulate 100 concurrent users in a thread group. In the script, the target throughput is set to 100 requests per minute in This Thread Only mode. In this case, the total target throughput of the scenario is 2 × (100 concurrent users/2) × 100 = 10,000 requests per minute.
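The two Constant Throughput Timer examples follow one formula: generators × users per generator × per-thread target. The sketch below reproduces that arithmetic for This Thread Only mode, assuming that concurrent users are spread evenly across generators; the function name and mode strings are illustrative.

```python
def total_target_per_minute(concurrent_users, script_target, generator_count, mode):
    """Total target throughput (requests per minute) in This Thread Only mode."""
    users_per_generator = concurrent_users / generator_count
    if mode == "global":
        # The script target is divided among the generators.
        per_thread_target = script_target / generator_count
    elif mode == "single":
        # The script target is applied unchanged on every generator.
        per_thread_target = script_target
    else:
        raise ValueError(f"unknown mode: {mode}")
    return generator_count * users_per_generator * per_thread_target
```

With 100 concurrent users, a script target of 100 requests per minute, and two IP addresses, the function returns 5,000 for Global and 10,000 for Single Generator.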

(Optional) Add cloud resource monitoring.

You can enable this feature to quickly view the corresponding monitoring data in the test report during the test. By default, this feature is disabled. You need to enable this feature manually.

(Optional) Add additional information.

You can add the owner of the performance test and related remarks. By default, this feature is disabled. You need to enable this feature manually.

Step 2: Start testing

To ensure a smooth performance test, you can debug the scenario to check the network connectivity, plug-in integrity, and script configuration. To debug a scenario, click Debug Scenario. For more information, see Debug scenarios.

Click Save and Start. On the Tips page, select Execute Now, select The test is permitted and complies with the applicable laws and regulations, and then click Start.

(Optional) You can monitor the performance testing data and adjust test speed.

Data Information

| Data Information | Description |
| --- | --- |
| Real-time VUM | The total resources consumed by the performance test. Unit: VUM (per virtual user per minute). |
| Request Success Rate (%) | The success rate of the requests that all agents send for the whole scenario in a statistical period. |
| Average RT (Successful requests/Failed requests) | Successful RT Avg (ms): the average RT of all successful requests. Failed RT Avg (ms): the average RT of all failed requests. |
| TPS | The total number of requests sent by all agents in a statistical period divided by the duration of the period in seconds. |
| Number of Exceptions | The number of request exceptions. Request exceptions occur due to a variety of causes, including connection timeout and request timeout. |
| Traffic (request/response) | The traffic consumed by a load generator to send requests and receive responses. |
| Concurrency (current/maximum) | The concurrency of the load test. If Adjust Speed is configured for the test, the current concurrency and the configured maximum concurrency are displayed. If the configured maximum concurrency is not reached during the warm-up phase, the current concurrency is used as the configured concurrency after the warm-up is completed. To change the concurrency during the test, click Speed Regulation and enter the required number of concurrent requests. |
| Total Requests | The total number of requests sent during performance testing for a scenario. |

Note: The monitoring data is collected by Backend Listener and then aggregated. Both the sampling period and the aggregation period are 15 seconds, so the displayed data may lag behind the test.
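The TPS and Request Success Rate definitions can be checked against raw counts for one statistical period. This is a minimal sketch that assumes a 15-second period, matching the aggregation period mentioned in the note; `window_metrics` is a hypothetical helper, not a PTS API.

```python
def window_metrics(successes, failures, window_seconds=15):
    """Return (tps, success_rate_percent) for one statistical period."""
    total = successes + failures
    tps = total / window_seconds
    success_rate = 100.0 * successes / total if total else 0.0
    return tps, success_rate


# 1,470 successful and 30 failed requests across all agents in one period
tps, success_rate = window_metrics(1470, 30)
```

Here 1,500 requests in 15 seconds give a TPS of 100 and a success rate of 98%.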

Settings

This page lists the basic information in a scenario configuration, including the load source, configuration duration, traffic model, and number of specified IP addresses.

Load testing

On the Test Details tab, click View Chart to the right of a link to view real-time data such as TPS, success rate, response time, and traffic.

Click the Load Generator Performance tab to view the time series curves of CPU utilization, Load 5, memory utilization, and network traffic for all load generators. You can view the performance by generator type.

If you have added ECS monitoring, SLB monitoring, RDS monitoring, and ARMS monitoring, you can click the Cloud Resource Monitoring tab to view the monitoring information for each generator.

Sampling Log

On the Sampling Logs tab, you can filter logs based on the Sampler, response status, and RT range during testing, and view log details.

Filter logs based on the Sampler and response status during testing. Then click View Details in the Actions column of a log.

On the General tab of the Log Details dialog box, view the log field and field values. In the upper right corner of the dialog box, switch the display format: Common or HTTP Protocol Style.

If Embedded Resources from HTML Files is configured in the JMeter script, the Sub-request Details tab appears in the log details.

You can select a specific sub-request to filter the corresponding request logs.

The Timing Waterfall tab displays the time consumed by the whole request and by each sub-request.

The Trace View tab shows the upstream and downstream traces of the tested API.

Step 3: View a testing report

After a test is stopped, the system automatically collects the data generated during the test and creates a report. The report covers the JMeter scenarios and the dynamic trends of these scenarios.

Log on to the PTS console and choose .

On the Report List page, select a scenario from the drop-down list. Find the report that you want to view and click View Report in the Actions column. For more information, see View JMeter test reports.

Note: In the trend chart, each point covers a statistical sampling period of 15 seconds, so the data at the beginning of the final report may be delayed. Report data is retained for up to 30 days. If necessary, you can export the report to your computer.

(Optional) Step 4: Export a testing report

You can export the current testing report to your computer.

On the Report Details page, click Export Report.

Select Watermark Version or No Watermark Version, and download the testing report (PDF) to your computer.

Sample scenarios

You can use JMeter performance testing in the following scenarios:

The scenario where high-concurrency distributed testing is required.

The scenario where real-time high-precision monitoring is required during testing, and a test report must be generated automatically after the test.

The scenario where JMeter scripts and the dependency files of JMeter environment need to be managed.

For more information about how to use JMeter, see Use JMeter to simulate upload.