After a Performance Testing Service (PTS)-based stress test is complete, the system automatically obtains the stress testing data, such as the metrics for stress testing scenarios, service details, monitoring details, and sampling logs of APIs, to generate a PTS-based stress testing report. This topic describes the details of a PTS-based stress testing report and the metrics.

Procedure

Log on to the PTS console and choose .

Find the performance testing report that you want to view and click View Report in the Actions column.

Note: A performance testing report generated in the JMeter performance testing mode is marked with a JMeter tag icon.

Important: In the PTS console, the sampling logs of a PTS-based stress testing report are retained for 30 days. After the 30-day retention period ends, you can no longer view the sampling logs. To prevent data loss, we recommend that you export the stress testing report and save it to your on-premises device as soon as possible.

Interpretation of report details

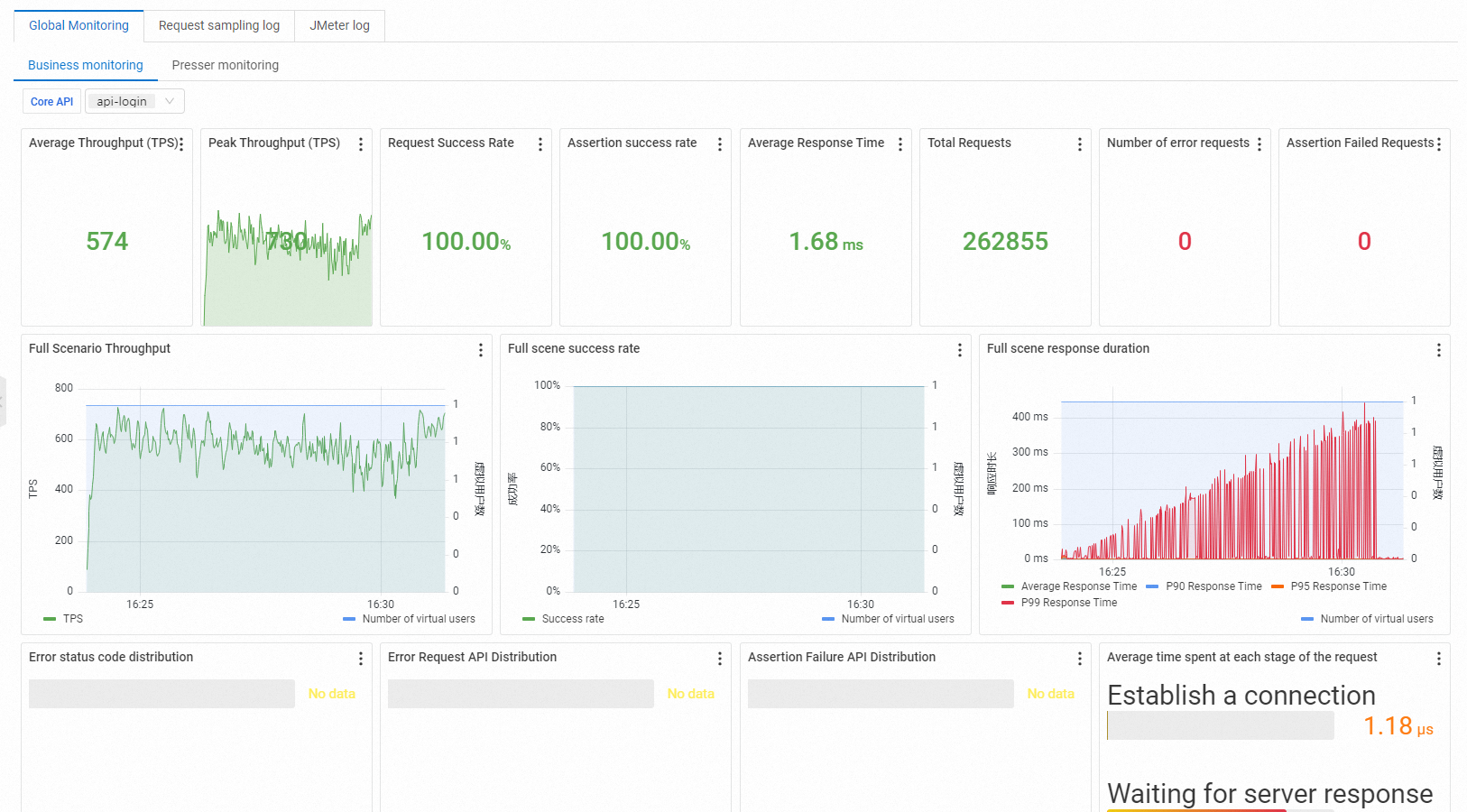

On the report details page, choose to view the basic information, business metric overview, and business details. For more information, see Test metrics.

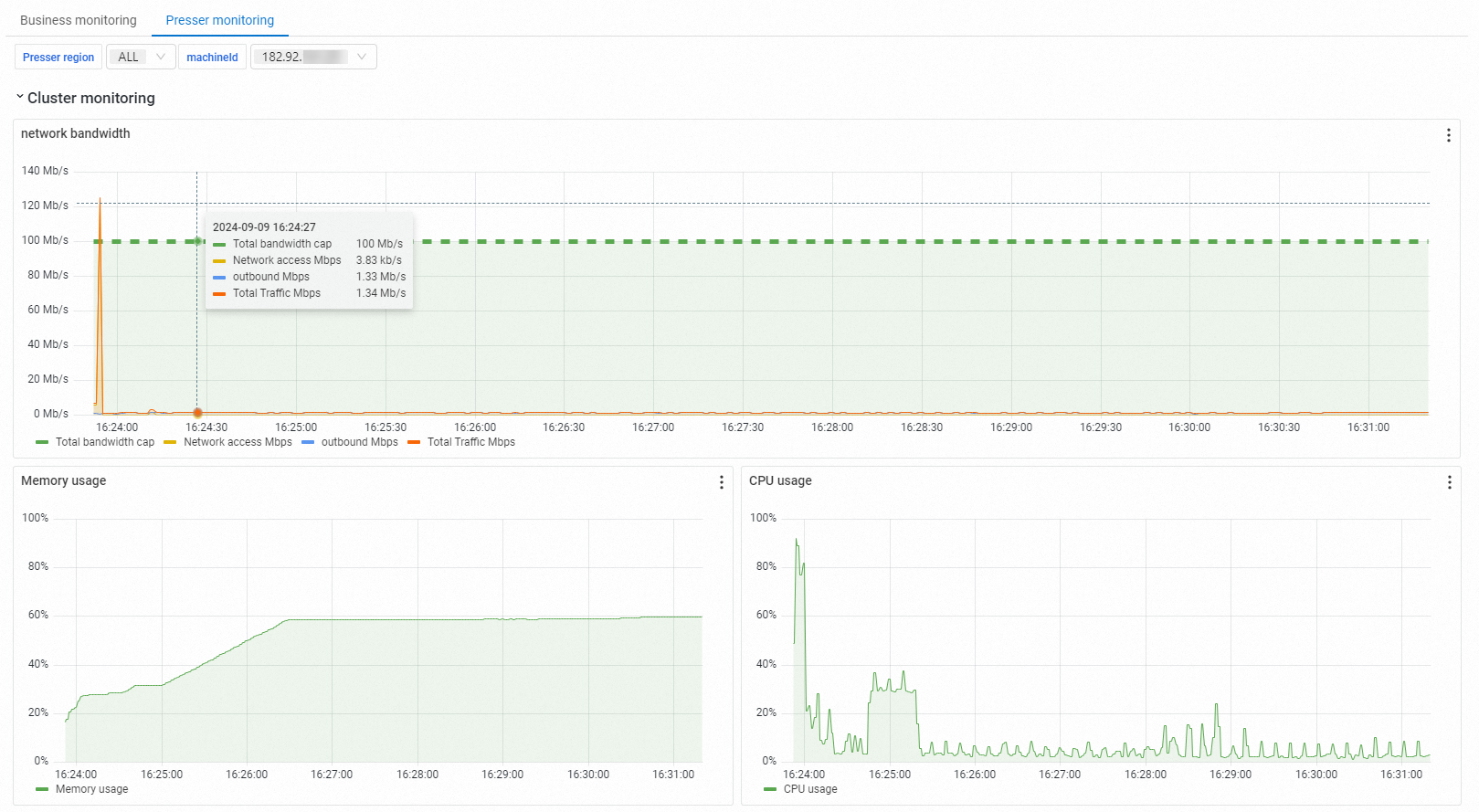

On the report details page, choose to view the details of the performance testing load generator, including its location, network bandwidth, CPU, and memory.

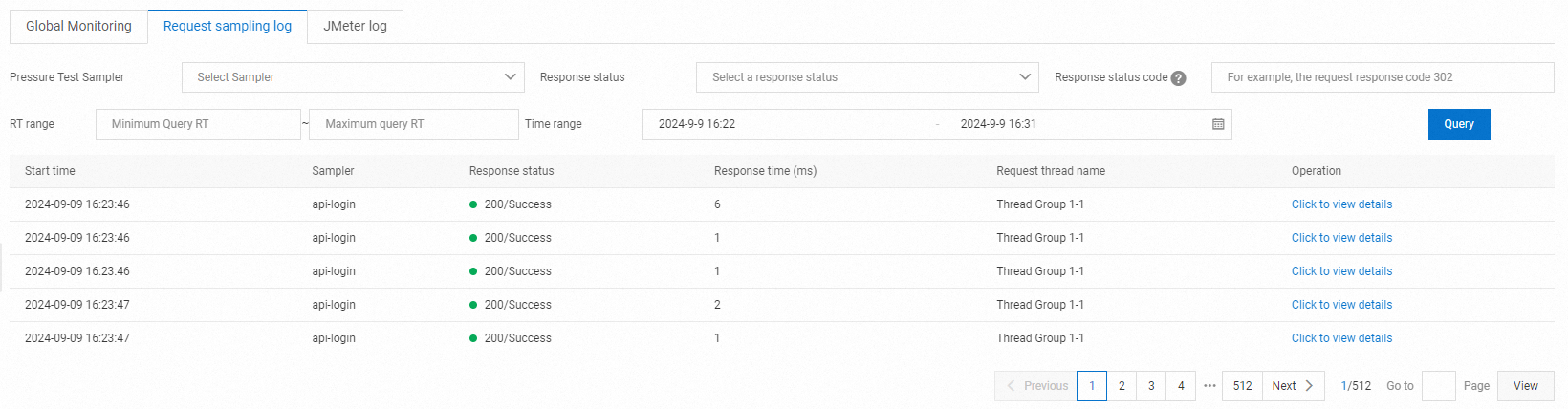

On the report details page, click Request Sampling Log and then View Details to view the General information and Timing Waterfall of each request. Sampling logs are available both during performance testing and in performance testing reports, and they help you quickly locate issues.

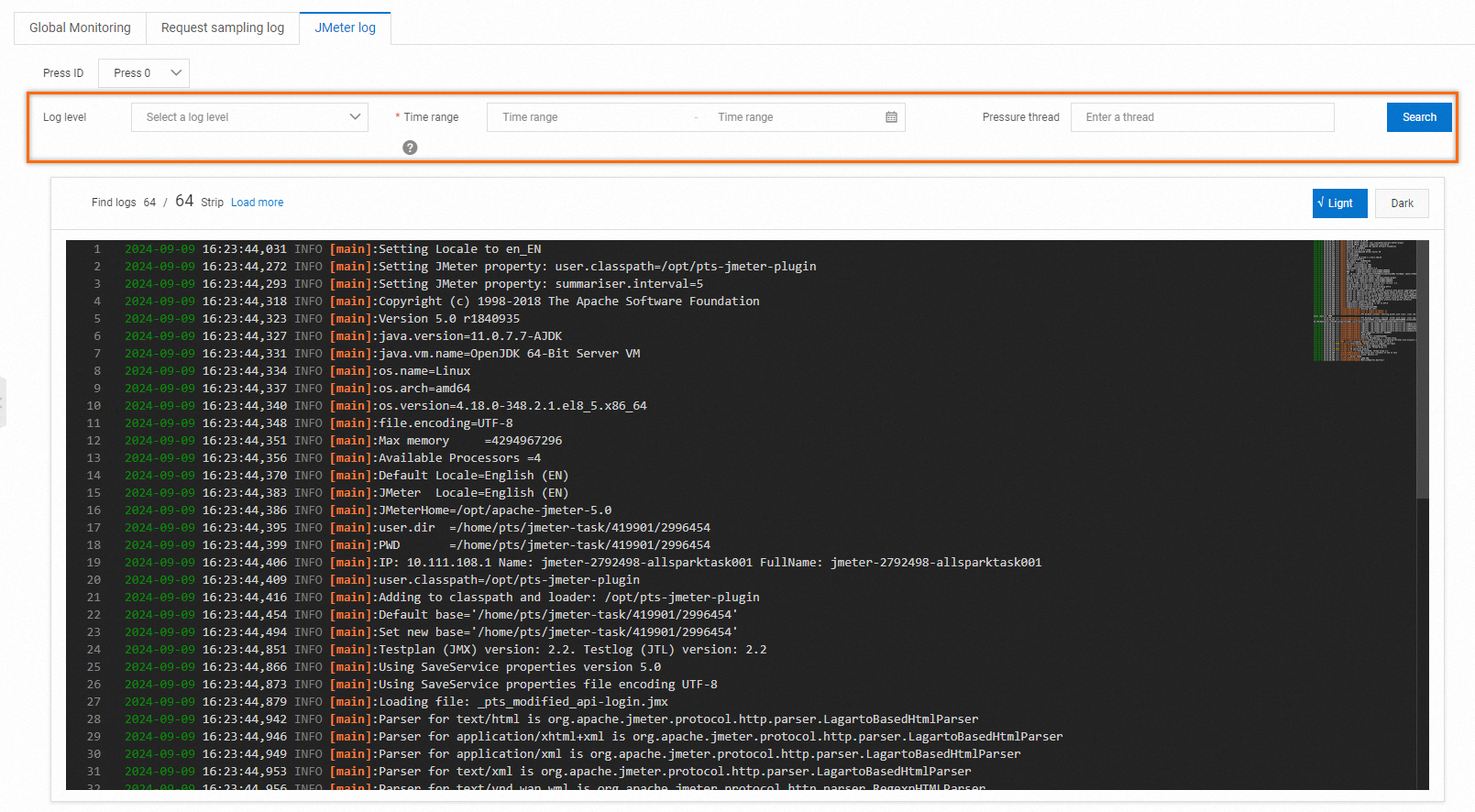

On the report details page, click JMeter Logs to view and retrieve the log information.

Key metrics

| Information | Description |
| --- | --- |
| VUM | Total resources consumed by this performance test. Unit: VUM (per virtual user per minute). |
| Scenario Concurrency | The concurrent load currently applied. During the warm-up phase, this load may not have reached the configured concurrency level. After the warm-up phase is complete, it matches the configured concurrency level. |
| Scenario TPS (s) | Total number of requests in all Agent statistical periods divided by the elapsed time. |
| Total Requests | Total number of requests sent during performance testing in the entire scenario. |
| Successful RT Avg (ms) | Average response time (RT) of all successful requests. |
| Failed RT Avg (ms) | Average RT of all failed requests. |
| Success Rate | Success rate of requests across the entire scenario in all Agent statistical periods. |
| Load Source | Source of the performance testing traffic, including the Internet inside the Chinese mainland and Alibaba Cloud VPC. |
| Specified IP Addresses | Number of source IP addresses configured in the scenario load configuration. |
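
To make the definitions in the table above concrete, the following minimal sketch derives the request-level metrics (Total Requests, Scenario TPS, Success Rate, and the two RT averages) from a list of raw samples. The `Sample` record and its field names are hypothetical and do not reflect any PTS export format. The calculation is a plain reading of the table, not the PTS implementation.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Sample:
    """One request record. Field names are hypothetical, not a PTS schema."""
    elapsed_ms: float
    success: bool


def summarize(samples, duration_s):
    """Compute overview-style metrics from raw samples over a test of duration_s seconds."""
    ok = [s.elapsed_ms for s in samples if s.success]
    failed = [s.elapsed_ms for s in samples if not s.success]
    total = len(samples)
    return {
        "total_requests": total,
        # TPS: total requests divided by the elapsed time in seconds.
        "tps": total / duration_s if duration_s > 0 else 0.0,
        "success_rate": len(ok) / total if total else 0.0,
        # Averages are taken separately for successful and failed requests.
        "successful_rt_avg_ms": mean(ok) if ok else None,
        "failed_rt_avg_ms": mean(failed) if failed else None,
    }


samples = [Sample(120, True), Sample(80, True), Sample(400, False), Sample(100, True)]
report = summarize(samples, duration_s=2)
print(report)
```

Keeping the successful and failed averages separate matters because failed requests often return very quickly (for example, a refused connection) or very slowly (a timeout), and either would distort a single combined average.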

| Business metrics | Description |
| --- | --- |
| Sampler Name | Names of the entire scenario and all Samplers. |
| Total Requests | Total number of requests sent during performance testing in the entire scenario. |
| Average TPS | Average TPS value of the current scenario during the performance testing period. TPS = Total requests during the Sampler performance testing / Performance testing duration. |
| Success Rate | Success rate of this Sampler in the performance testing. |
| Average Response Time | Average response time of this Sampler in the performance testing. Click Details to view the maximum, minimum, and percentile response times. |
| Traffic (Send/Receive) | Displays the amount of traffic sent and received, respectively. |
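
The Average TPS formula and the response-time details in the table above can be illustrated per Sampler with a short sketch. The input format (a list of `(sampler_name, elapsed_ms)` pairs) is hypothetical. The percentile uses the nearest-rank method, which is an assumption: the source does not state how PTS computes percentiles.

```python
import math
from collections import defaultdict


def nearest_rank_percentile(sorted_values, pct):
    """Nearest-rank percentile of an ascending list (assumed method, not confirmed for PTS)."""
    rank = max(1, math.ceil(pct / 100 * len(sorted_values)))
    return sorted_values[rank - 1]


def per_sampler_stats(records, duration_s):
    """Group (sampler_name, elapsed_ms) pairs by Sampler and compute per-Sampler metrics."""
    by_name = defaultdict(list)
    for name, elapsed_ms in records:
        by_name[name].append(elapsed_ms)

    stats = {}
    for name, values in by_name.items():
        values.sort()
        stats[name] = {
            "total_requests": len(values),
            # Average TPS = total requests of the Sampler / test duration.
            "average_tps": len(values) / duration_s,
            "avg_rt_ms": sum(values) / len(values),
            "min_rt_ms": values[0],
            "max_rt_ms": values[-1],
            "p90_rt_ms": nearest_rank_percentile(values, 90),
        }
    return stats


records = [
    ("login", 100), ("login", 200), ("login", 300), ("login", 400),
    ("search", 50), ("search", 150),
]
stats = per_sampler_stats(records, duration_s=2)
print(stats["login"])
```

Reading a percentile alongside the average is useful because a small number of slow requests can leave the average almost unchanged while still affecting a visible share of users.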

The monitoring data is produced by a simple aggregate calculation based on Backend Listener. The statistical sampling period of the Agent in the load configuration is 15 seconds, and the data aggregation period is also 15 seconds, so the displayed data may be delayed.
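
As a rough illustration of why fixed 15-second periods introduce delay, the sketch below groups request timestamps into 15-second windows and computes one TPS value per window. The function name and input format are hypothetical, and this is not the actual Backend Listener or PTS aggregation logic. It only shows that a window's value can be known once the window has closed.

```python
from collections import Counter

WINDOW_S = 15  # sampling and aggregation period stated in the text


def tps_per_window(timestamps_s, window_s=WINDOW_S):
    """Map each window start (seconds since test start) to the TPS observed in that window."""
    # Floor each timestamp to the start of its window, then count requests per window.
    counts = Counter(int(t // window_s) * window_s for t in timestamps_s)
    return {start: n / window_s for start, n in sorted(counts.items())}


# Request timestamps in seconds since the start of the test (made-up values).
timestamps = [0.5, 3.0, 14.9, 15.0, 20.0, 44.0]
series = tps_per_window(timestamps)
print(series)
```

Under this model, a request sent at second 16 contributes only to the point for the window that starts at second 15, and that point is complete only after second 30.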