The kube-apiserver component exposes the Kubernetes RESTful APIs, which allow external clients and other components in a Container Service for Kubernetes (ACK) cluster to interact with the cluster. This topic describes the monitoring metrics of kube-apiserver, how to use the monitoring dashboards, and how to handle metric anomalies.

Usage notes

Access method

For more information, see View cluster control plane component monitoring dashboards.

Metrics

Metrics can indicate the status and parameter settings of a component. The following table describes the metrics supported by kube-apiserver.

Metric | Type | Description |

apiserver_request_duration_seconds_bucket | Histogram | The latency between a request sent to the API server and a response returned by the API server. Requests are classified based on the following dimensions:

The buckets in the histogram of the API server include |

apiserver_request_total | Counter | The number of different requests received by the API server. Requests are classified based on verbs, groups, versions, resource, scope, component, HTTP content types, HTTP status code, and clients. |

apiserver_request_no_resourceversion_list_total | Counter | The number of LIST requests that are sent to the API server and for which the resourceVersion parameter is not specified. |

apiserver_current_inflight_requests | Gauge | The number of requests that are being processed by the API server. Requests are classified into the following types: readOnly (read requests) and mutating (write requests). |

apiserver_dropped_requests_total | Counter | The number of requests that are dropped when throttling is performed on the API server. A request is dropped if the |

etcd_request_duration_seconds_bucket | Histogram | The latency between a request sent from the API server and a response returned by etcd. Requests are classified based on operations and operation types. The buckets in the histogram include |

apiserver_flowcontrol_request_concurrency_limit | Gauge | The request concurrency limit of the API Priority and Fairness (APF) feature, which is the maximum number of requests that a priority queue theoretically allows to be processed at the same time. This metric helps you understand how the API server uses throttling policies to allocate resources to different priority queues so that high-priority requests are processed promptly. This metric is deprecated in Kubernetes 1.30 and will be removed in Kubernetes 1.31. For clusters that run Kubernetes 1.31 or later, we recommend that you use the apiserver_flowcontrol_nominal_limit_seats metric instead. |

apiserver_flowcontrol_current_executing_requests | Gauge | The number of requests currently being executed in a priority queue, which is the actual concurrent load of the queue. This metric helps you understand the actual load of the API server and determine whether it is approaching the maximum concurrency limit, so that you can prevent overload. |

apiserver_flowcontrol_current_inqueue_requests | Gauge | The number of requests currently waiting in a priority queue, which indicates the request backlog in that queue. This helps you understand the traffic pressure on the API server and whether the queue is overloaded. |

apiserver_flowcontrol_nominal_limit_seats | Gauge | APF nominal concurrency limit seats, which is the nominal maximum concurrent processing capacity configured for each priority level. Unit: seats. This metric helps you understand how the API server uses throttling policies to allocate resources to different priority queues. |

apiserver_flowcontrol_current_limit_seats | Gauge | APF current concurrency limit seats. The current concurrency limit of a priority queue, which is the actual maximum number of concurrent seats allowed after dynamic adjustment. This reflects the actual concurrent capacity of the current queue, which may vary due to system load or other factors. Unlike nominal_limit_seats, this value may be affected by global throttling policies. |

apiserver_flowcontrol_current_executing_seats | Gauge | APF current executing seats. The number of seats occupied by the requests currently being executed in a priority queue, which reflects the concurrency resources that the queue is consuming. This metric helps you understand the actual load of the queue. If current_executing_seats is close to current_limit_seats, the concurrency resources of the queue may soon be exhausted. In this case, you can increase the maxMutatingRequestsInflight and maxRequestsInflight parameters of the API server. For more information about how to configure these parameters and their valid values, see Customize the parameters of control plane components in ACK Pro clusters. |

apiserver_flowcontrol_current_inqueue_seats | Gauge | APF current queue seats. The number of seats corresponding to the requests currently waiting to be processed in a priority queue, which reflects the resources occupied by the requests waiting to be processed in the current queue. This helps you understand the backlog situation of the queue. |

apiserver_flowcontrol_request_execution_seconds_bucket | Histogram | The actual execution time of requests, measured from when a request starts execution to when it completes. The buckets of the histogram are 0, 0.005, 0.02, 0.05, 0.1, 0.2, 0.5, 1, 2, 5, 10, 15, and 30. Unit: seconds. |

apiserver_flowcontrol_request_wait_duration_seconds_bucket | Histogram | The distribution of the time that requests wait in the queue, measured from when a request enters the queue to when it starts execution. The buckets of the histogram are 0, 0.005, 0.02, 0.05, 0.1, 0.2, 0.5, 1, 2, 5, 10, 15, and 30. Unit: seconds. |

apiserver_flowcontrol_dispatched_requests_total | Counter | The number of requests successfully scheduled and processed, which reflects the total number of requests successfully handled by the API server. |

apiserver_flowcontrol_rejected_requests_total | Counter | The number of requests rejected due to exceeding concurrency limits or queue capacity. |

apiserver_admission_controller_admission_duration_seconds_bucket | Histogram | The processing latency of admission controllers. The histogram is labeled by the admission controller name, the operation (such as CREATE, UPDATE, or CONNECT), the API resource, the operation type (such as validate or admit), and whether the request is rejected. The buckets of the histogram include |

apiserver_admission_webhook_admission_duration_seconds_bucket | Histogram | The processing latency of admission webhooks. The histogram is labeled by the webhook name, the operation (such as CREATE, UPDATE, or CONNECT), the API resource, the operation type (such as validate or admit), and whether the request is rejected. The buckets of the histogram include |

apiserver_admission_webhook_admission_duration_seconds_count | Counter | The number of requests processed by admission webhooks. Requests are classified by the webhook name, the operation (such as CREATE, UPDATE, or CONNECT), the API resource, the operation type (such as validate or admit), and whether the request is rejected. |

cpu_utilization_core | Gauge | The number of used CPU cores. Unit: cores. |

memory_utilization_byte | Gauge | The amount of used memory. Unit: bytes. |

up | Gauge | Indicates whether kube-apiserver is available. 1: available. 0: unavailable. |

The following resource utilization metrics are deprecated. Remove any alerts and monitoring data that depend on these metrics at the earliest opportunity:

cpu_utilization_ratio: CPU utilization.

memory_utilization_ratio: Memory utilization.

Usage notes for dashboards

Dashboards are generated based on metrics and Prometheus Query Language (PromQL). The following sections describe the API server dashboards for key metrics, overview, resource analysis, queries per second (QPS) and latency, APF throttling, admission controller and webhook, and client analysis.

The dashboards consist of the following modules, which are typically used in the following order:

Key metrics: Quickly view key cluster metrics.

Overview: Analyze the response latency of the API server, the number of requests that are being processed, and whether request throttling is triggered.

Resource analysis: View the resource usage of the managed components.

QPS and latency: Analyze the QPS and response time based on multiple dimensions.

APF throttling: Confirm the API server request traffic distribution, throttling status, and system performance bottlenecks based on APF metrics.

Admission controller and webhook: Analyze the QPS and response time of the admission controller and webhook.

Client analysis: Analyze the client QPS based on multiple dimensions.

Filters

Multiple filters are displayed above the dashboards. You can use the following filters to filter requests sent to the API server based on verbs and resources, modify the quantile, and change the PromQL sampling interval.

To change the quantile, use the quantile filter. For example, if you select 0.9 (P90), each panel shows the value below which 90% of the samples fall. P90 filters out the impact of long-tail samples, which make up only a small portion of all samples. P99 (0.99) reflects long-tail samples.
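The quantile filter is passed to the PromQL histogram_quantile() function, which estimates a quantile from cumulative bucket counts by assuming a linear distribution inside the bucket that contains the target rank. The following Python sketch approximates that behavior for illustration only; it is not the Prometheus implementation. It assumes the buckets have already been summed by le and sorted, and that the last bucket is +Inf. The function name and the sample buckets are hypothetical.

```python
import math


def histogram_quantile(q, buckets):
    """Estimate the q-quantile from cumulative histogram buckets.

    Simplified sketch of PromQL histogram_quantile() semantics.
    buckets: list of (upper_bound, cumulative_count) sorted by upper_bound,
    where the last entry is (math.inf, total_count).
    """
    if not 0 < q <= 1:
        raise ValueError("this sketch only accepts 0 < q <= 1")
    total = buckets[-1][1]
    if total == 0:
        return math.nan  # No observations in the sampling interval.
    rank = q * total
    prev_bound, prev_count = 0.0, 0.0  # The lowest bucket starts at 0.
    for bound, count in buckets:
        if count >= rank:
            if math.isinf(bound):
                # The rank falls in the +Inf bucket: report the upper bound
                # of the highest finite bucket instead.
                return prev_bound
            # Linear interpolation inside the bucket that contains the rank.
            fraction = (rank - prev_count) / (count - prev_count)
            return prev_bound + (bound - prev_bound) * fraction
        prev_bound, prev_count = bound, count
    return prev_bound


# Hypothetical request latency buckets (seconds) for one sampling interval.
buckets = [(0.1, 50), (0.5, 90), (1.0, 98), (60.0, 100), (math.inf, 100)]
print(round(histogram_quantile(0.9, buckets), 3))   # 0.5  (P90)
print(round(histogram_quantile(0.99, buckets), 3))  # 30.5 (P99)

# Most samples above the highest finite bucket: the result is capped at 60.0.
print(histogram_quantile(0.9, [(1.0, 10), (60.0, 20), (math.inf, 30)]))
```

In this data, P90 is 0.5 seconds while P99 is 30.5 seconds, because the two slowest requests out of a hundred decide P99. The last call also shows why a latency longer than the highest finite bucket bound is reported as that bound.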

The following filters are used to specify the time range and update interval.

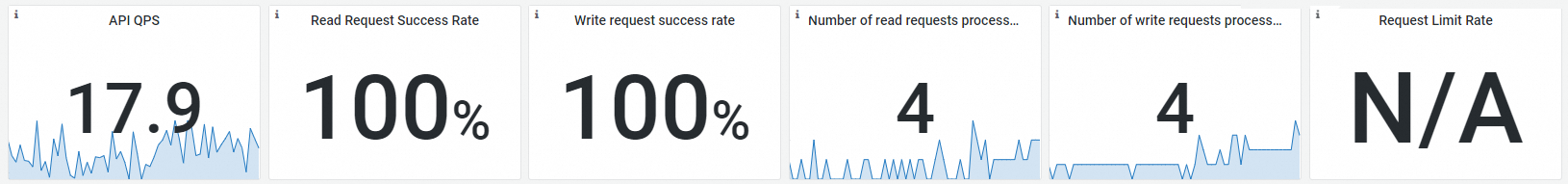

Key metrics


Name | PromQL | Description |

API QPS | sum(irate(apiserver_request_total[$interval])) | The QPS of the API server. |

Read Request Success Rate | sum(irate(apiserver_request_total{code=~"20.*",verb=~"GET|LIST"}[$interval]))/sum(irate(apiserver_request_total{verb=~"GET|LIST"}[$interval])) | The success rate of read requests sent to the API server. |

Write Request Success Rate | sum(irate(apiserver_request_total{code=~"20.*",verb!~"GET|LIST|WATCH|CONNECT"}[$interval]))/sum(irate(apiserver_request_total{verb!~"GET|LIST|WATCH|CONNECT"}[$interval])) | The success rate of write requests sent to the API server. |

Number of read requests processed | sum(apiserver_current_inflight_requests{request_kind="readOnly"}) | The number of read requests that are being processed by the API server. |

Number of write requests processed | sum(apiserver_current_inflight_requests{request_kind="mutating"}) | The number of write requests that are being processed by the API server. |

Request Limit Rate | sum(irate(apiserver_dropped_requests_total[$interval])) | The number of requests per second that the API server drops because of request throttling. |
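The success-rate panels divide the per-second rate of successful requests by the per-second rate of all selected requests. The following Python sketch reproduces that arithmetic on raw counter samples, assuming irate() semantics: the increase between the last two samples divided by the time between them, where a drop in value is treated as a counter reset. The sample data and function names are hypothetical; the verb and code patterns are copied from the PromQL statements above and are matched as fully anchored regular expressions, as PromQL does.

```python
import re


def irate(samples):
    """Per-second rate from the last two (timestamp_s, value) counter samples."""
    if len(samples) < 2:
        return None
    (t1, v1), (t2, v2) = samples[-2], samples[-1]
    # If the counter went down, it was reset; count the new value from zero.
    increase = v2 if v2 < v1 else v2 - v1
    return increase / (t2 - t1)


def success_rate(series, verb_selected):
    """Share of the request rate with a 20x code among the selected verbs."""
    ok = total = 0.0
    for labels, samples in series:
        if not verb_selected(labels["verb"]):
            continue
        rate = irate(samples)
        if rate is None:
            continue
        total += rate
        if re.fullmatch(r"20.*", labels["code"]):
            ok += rate
    return ok / total if total else None


def is_read(verb):
    return re.fullmatch(r"GET|LIST", verb) is not None


def is_write(verb):
    return re.fullmatch(r"GET|LIST|WATCH|CONNECT", verb) is None


# Hypothetical apiserver_request_total samples: (labels, [(timestamp_s, value), ...]).
series = [
    ({"verb": "GET", "code": "200"}, [(0, 100), (15, 250)]),  # 10 req/s
    ({"verb": "GET", "code": "404"}, [(0, 10), (15, 25)]),    # 1 req/s
    ({"verb": "LIST", "code": "200"}, [(0, 0), (15, 135)]),   # 9 req/s
    ({"verb": "PATCH", "code": "200"}, [(0, 0), (15, 60)]),   # 4 req/s
    ({"verb": "PATCH", "code": "409"}, [(0, 50), (15, 20)]),  # reset: 20/15 req/s
    ({"verb": "WATCH", "code": "200"}, [(0, 0), (15, 30)]),   # in neither panel
]

print(f"read success rate:  {success_rate(series, is_read):.2%}")   # 95.00%
print(f"write success rate: {success_rate(series, is_write):.2%}")  # 75.00%
```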

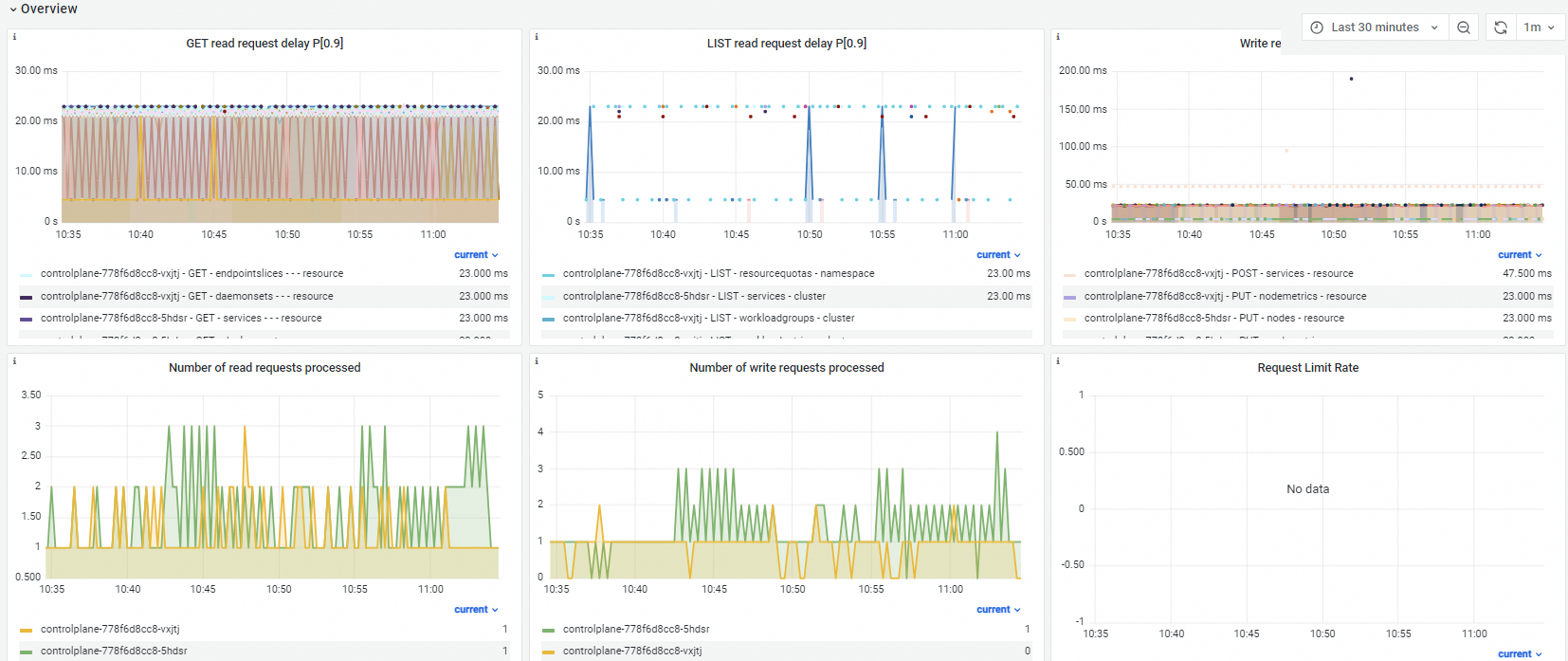

Overview


Metric | PromQL | Description |

GET read request delay P[0.9] | histogram_quantile($quantile, sum(irate(apiserver_request_duration_seconds_bucket{verb="GET",resource!="",subresource!~"log|proxy"}[$interval])) by (pod, verb, resource, subresource, scope, le)) | The response time of GET requests displayed based on the following dimensions: API server pods, GET verb, resources, and scope. |

LIST read request delay P[0.9] | histogram_quantile($quantile, sum(irate(apiserver_request_duration_seconds_bucket{verb="LIST"}[$interval])) by (pod_name, verb, resource, scope, le)) | The response time of LIST requests displayed based on the following dimensions: API server pods, LIST verb, resources, and scope. |

Write request delay P[0.9] | histogram_quantile($quantile, sum(irate(apiserver_request_duration_seconds_bucket{verb!~"GET|WATCH|LIST|CONNECT"}[$interval])) by (cluster, pod_name, verb, resource, scope, le)) | The response time of write (mutating) requests, that is, requests whose verbs are not GET, WATCH, LIST, or CONNECT, displayed based on the following dimensions: cluster, API server pods, verbs, resources, and scope. |

Number of read requests processed | apiserver_current_inflight_requests{request_kind="readOnly"} | The number of read requests that are being processed by the API server. |

Number of write requests processed | apiserver_current_inflight_requests{request_kind="mutating"} | The number of write requests that are being processed by the API server. |

Request Limit Rate | Read: sum(irate(apiserver_dropped_requests_total{request_kind="readOnly"}[$interval])) by (name); Write: sum(irate(apiserver_dropped_requests_total{request_kind="mutating"}[$interval])) by (name) | The throttling rate of the API server for read requests and write requests, respectively. |

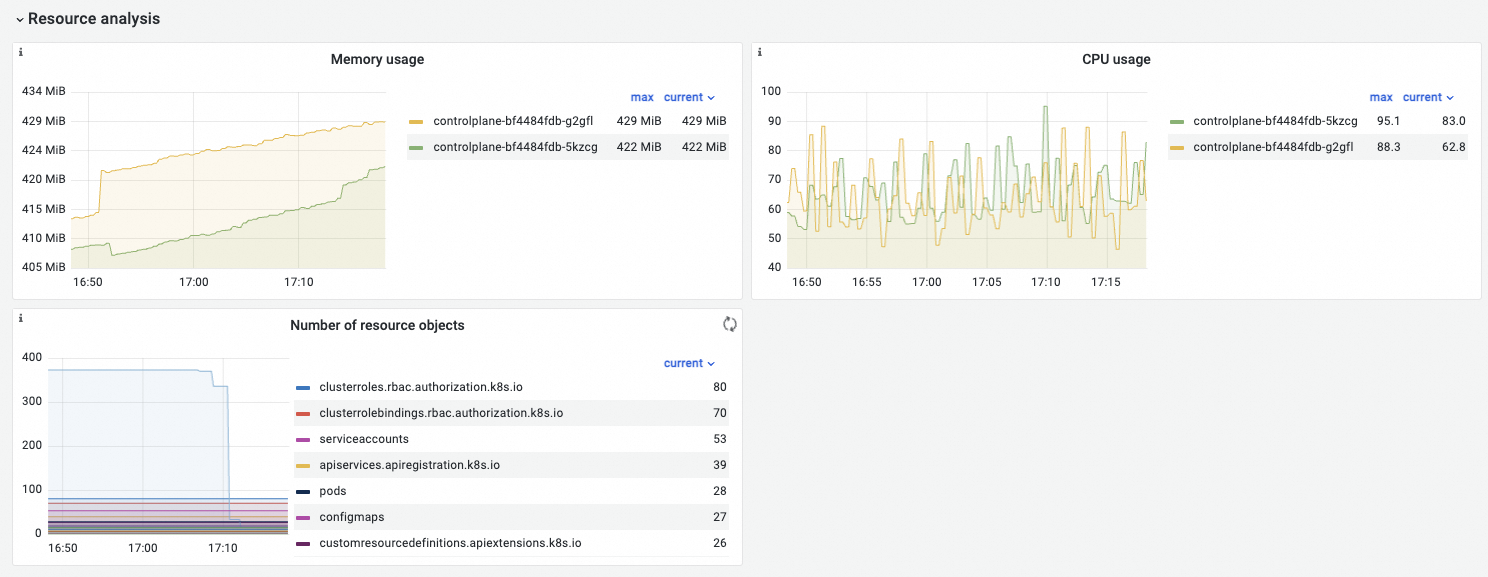

Resource analysis


Metric | PromQL | Description |

Memory Usage | memory_utilization_byte{container="kube-apiserver"} | The memory usage of API server. Unit: bytes. |

CPU Usage | cpu_utilization_core{container="kube-apiserver"}*1000 | The CPU usage of API server. Unit: millicores. |

Number of resource objects | | Note: For compatibility reasons, both the apiserver_storage_objects and etcd_object_counts metrics exist in Kubernetes 1.22. |

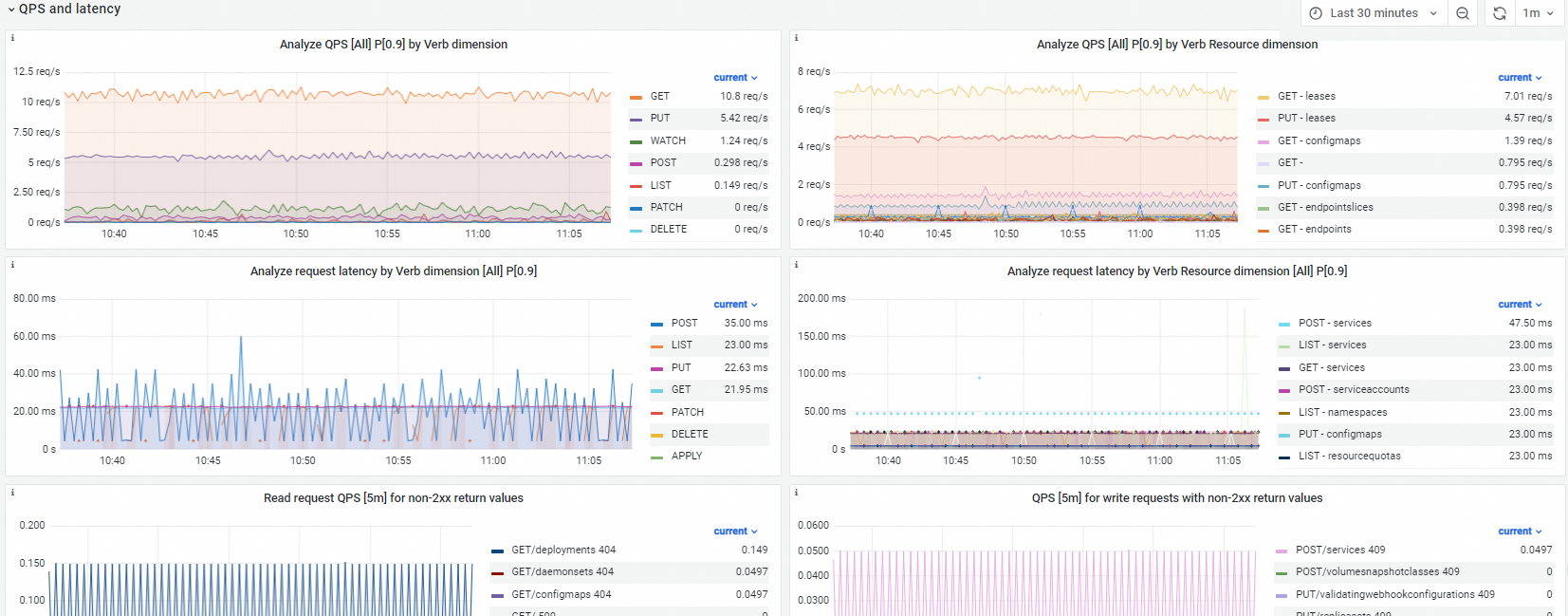

QPS and latency


Metric | PromQL | Description |

Analyze QPS [All] P[0.9] by Verb dimension | sum(irate(apiserver_request_total{verb=~"$verb"}[$interval]))by(verb) | The QPS calculated based on verbs. |

Analyze QPS [All] P[0.9] by Verb Resource dimension | sum(irate(apiserver_request_total{verb=~"$verb",resource=~"$resource"}[$interval]))by(verb,resource) | The QPS calculated based on verbs and resources. |

Analyze request latency by Verb dimension [All] P[0.9] | histogram_quantile($quantile, sum(irate(apiserver_request_duration_seconds_bucket{verb=~"$verb", verb!~"WATCH|CONNECT",resource!=""}[$interval])) by (le,verb)) | The response latency calculated based on verbs. |

Analyze request latency by Verb Resource dimension [All] P[0.9] | histogram_quantile($quantile, sum(irate(apiserver_request_duration_seconds_bucket{verb=~"$verb", verb!~"WATCH|CONNECT", resource=~"$resource",resource!=""}[$interval])) by (le,verb,resource)) | The response latency calculated based on verbs and resources. |

Read request QPS [5m] for non-2xx return values | sum(irate(apiserver_request_total{verb=~"GET|LIST",resource=~"$resource",code!~"2.*"}[$interval])) by (verb,resource,code) | The QPS of read requests for which HTTP status codes other than 2xx, such as 4xx or 5xx, are returned. |

QPS [5m] for write requests with non-2xx return values | sum(irate(apiserver_request_total{verb!~"GET|LIST|WATCH",verb=~"$verb",resource=~"$resource",code!~"2.*"}[$interval])) by (verb,resource,code) | The QPS of write requests for which HTTP status codes other than 2xx, such as 4xx or 5xx, are returned. |

Apiserver to etcd request latency [5m] | histogram_quantile($quantile, sum(irate(etcd_request_duration_seconds_bucket[$interval])) by (le,operation,type,instance)) | The latency of requests sent from API server to etcd. |

APF throttling

Monitoring of APF throttling metrics is in canary release.

Clusters that run Kubernetes 1.20 and later support APF-related metrics. For more information about how to upgrade clusters, see Manually upgrade a cluster.

APF-related metrics dashboards also depend on the upgrade of the following components. For more information, see Upgrade monitoring components.

Cluster monitoring component: 0.06 or later.

ack-arms-prometheus: 1.1.31 or later.

Managed probe: 1.1.31 or later.


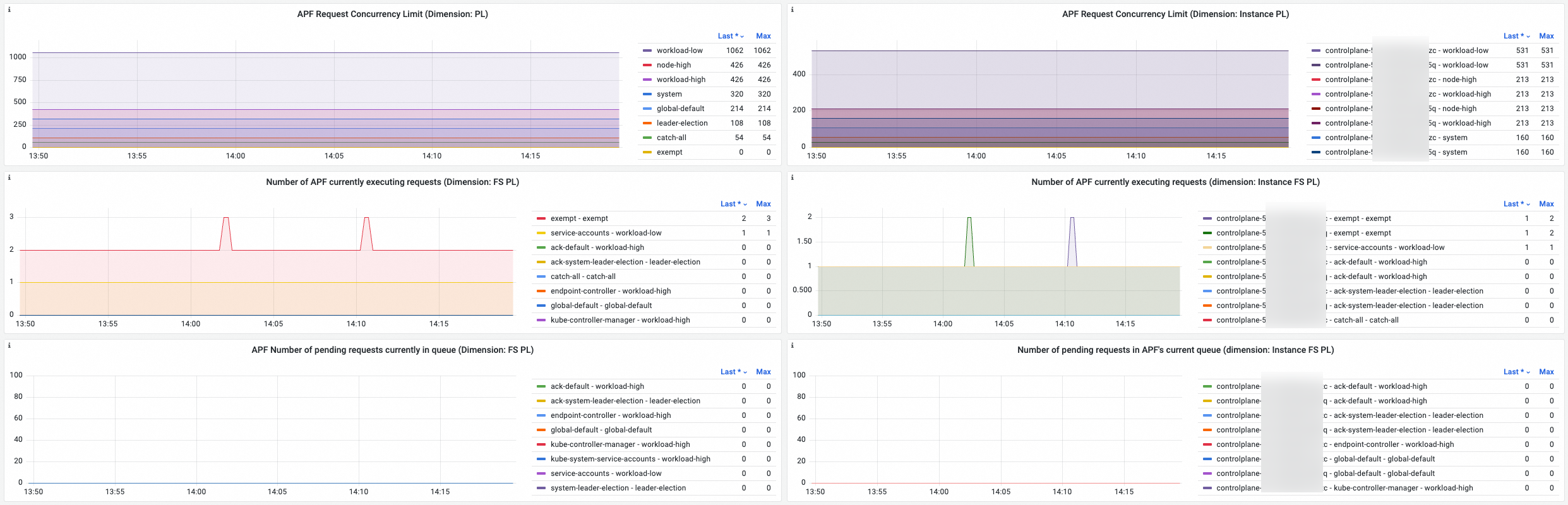

Some metrics in the following table are aggregated by the PL, Instance, and FS dimensions.

PL: priority level. Metrics are aggregated by priority level.

Instance: Metrics are aggregated by API server instance.

FS: flow schema, which classifies requests. Metrics are aggregated by flow schema.

For more information about APF and the above dimensions, see APF.

Metric | PromQL | Description |

APF Request Concurrent Limit (Dimension: PL) | sum by(priority_level) (apiserver_flowcontrol_request_concurrency_limit) | The APF request concurrency limit aggregated by PL, or by instance and PL, which is the maximum number of requests that a priority queue theoretically allows to be processed at the same time. apiserver_flowcontrol_request_concurrency_limit is deprecated in Kubernetes 1.30 and will be removed in Kubernetes 1.31. For clusters that run Kubernetes 1.31 or later, we recommend that you use the apiserver_flowcontrol_nominal_limit_seats metric instead. |

APF Request Concurrent Limit (Dimension: Instance PL) | sum by(instance,priority_level) (apiserver_flowcontrol_request_concurrency_limit) | |

Number of APF currently executing request (Dimension: FS PL) | sum by(flow_schema,priority_level) (apiserver_flowcontrol_current_executing_requests) | The number of requests currently being executed in APF, aggregated by FS and PL, or by instance, FS, and PL. |

Number of APF currently executing request (Dimension: Instance FS PL) | sum by(instance,flow_schema,priority_level)(apiserver_flowcontrol_current_executing_requests) | |

APF Number of pending requests currently in queue (Dimension: FS PL) | sum by(flow_schema,priority_level) (apiserver_flowcontrol_current_inqueue_requests) | The number of requests currently waiting in APF queues, aggregated by FS and PL, or by instance, FS, and PL. |

Number of pending requests in APF's current queue (Dimension: Instance FS PL) | sum by(instance,flow_schema,priority_level) (apiserver_flowcontrol_current_inqueue_requests) | |

APF Nominal Concurrency Limited Seats | sum by(instance,priority_level) (apiserver_flowcontrol_nominal_limit_seats) | The APF seat metrics aggregated by instance and PL: nominal concurrency limit seats, current concurrency limit seats, seats in execution, and seats in queue. |

APF Current Concurrency Limit Seats | sum by(instance,priority_level) (apiserver_flowcontrol_current_limit_seats) | |

Number of current APF seats in execution | sum by(instance,priority_level) (apiserver_flowcontrol_current_executing_seats) | |

Number of seats in APF's current queue | sum by(instance,priority_level) (apiserver_flowcontrol_current_inqueue_seats) | |

APF Request Execution Time | histogram_quantile($quantile, sum(irate(apiserver_flowcontrol_request_execution_seconds_bucket[$interval])) by (le,instance, flow_schema,priority_level)) | The time taken from when a request starts execution to when it is finally completed. |

APF Request Wait Time | histogram_quantile($quantile, sum(irate(apiserver_flowcontrol_request_wait_duration_seconds_bucket[$interval])) by (le,instance, flow_schema,priority_level)) | The time that a request waits from when it enters the queue to when it starts execution. |

Request QPS successfully scheduled and processed by APF | sum(irate(apiserver_flowcontrol_dispatched_requests_total[$interval]))by(instance,flow_schema,priority_level) | The QPS of requests that are successfully scheduled and processed. |

APF Deny Request QPS | sum(irate(apiserver_flowcontrol_rejected_requests_total[$interval]))by(instance,flow_schema,priority_level) | The QPS of requests that are rejected because they exceed the concurrency limit or the queue capacity. |
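The metrics table notes that when apiserver_flowcontrol_current_executing_seats approaches apiserver_flowcontrol_current_limit_seats, the seats of a priority queue may soon be exhausted. The following is a minimal Python sketch of that comparison, assuming the values of the seat panels have been read into dictionaries keyed by (instance, priority_level). The 0.9 threshold, the instance addresses, and the function name are assumptions for illustration, not ACK-defined values; workload-low and global-default are used only as example priority level names.

```python
def seat_pressure(executing, limits, queued=None, threshold=0.9):
    """Flag priority levels whose executing seats are close to the current limit.

    executing, limits, queued: dicts mapping (instance, priority_level) -> seats.
    threshold: assumed ratio that counts as "close to the limit".
    Returns (key, utilization, queued_seats) tuples, highest utilization first.
    """
    queued = queued or {}
    flagged = []
    for key, limit in limits.items():
        if limit <= 0:
            continue  # No seats to compare against.
        utilization = executing.get(key, 0) / limit
        if utilization >= threshold:
            flagged.append((key, utilization, queued.get(key, 0)))
    return sorted(flagged, key=lambda item: item[1], reverse=True)


# Hypothetical readings of current_limit_seats, current_executing_seats,
# and current_inqueue_seats for one API server instance.
limits = {("192.168.0.1", "workload-low"): 100, ("192.168.0.1", "global-default"): 50}
executing = {("192.168.0.1", "workload-low"): 96, ("192.168.0.1", "global-default"): 12}
queued = {("192.168.0.1", "workload-low"): 30}

for (instance, level), utilization, waiting in seat_pressure(executing, limits, queued):
    print(f"{instance} {level}: {utilization:.0%} of seats in use, {waiting} seats queued")
```

A nonzero queued-seat count next to high utilization indicates that requests at that priority level are already waiting for seats rather than merely running close to the limit.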

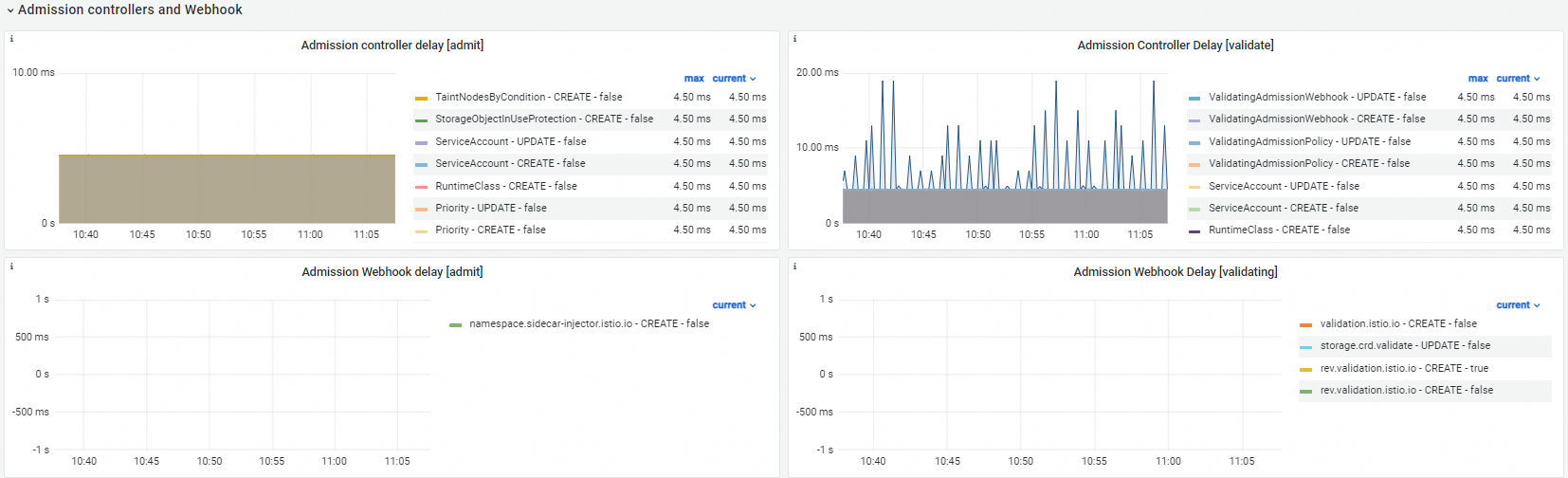

Admission controller and webhook


Metric | PromQL | Description |

Admission controller delay [admit] | histogram_quantile($quantile, sum by(operation, name, le, type, rejected) (irate(apiserver_admission_controller_admission_duration_seconds_bucket{type="admit"}[$interval])) ) | The statistics about the name of the admission controller of the admit type, the performed operations, whether the operations are denied, and the duration of the operations. The buckets of the histogram include |

Admission Controller Delay [validate] | histogram_quantile($quantile, sum by(operation, name, le, type, rejected) (irate(apiserver_admission_controller_admission_duration_seconds_bucket{type="validate"}[$interval])) ) | The statistics about the name of the admission controller of the validate type, the performed operations, whether the operations are denied, and the duration of the operations. The buckets of the histogram include |

Admission Webhook delay [admit] | histogram_quantile($quantile, sum by(operation, name, le, type, rejected) (irate(apiserver_admission_webhook_admission_duration_seconds_bucket{type="admit"}[$interval])) ) | The statistics about the name of the admission webhook of the admit type, the performed operations, whether the operations are denied, and the duration of the operations. The buckets of the histogram include |

Admission Webhook Delay [validating] | histogram_quantile($quantile, sum by(operation, name, le, type, rejected) (irate(apiserver_admission_webhook_admission_duration_seconds_bucket{type="validate"}[$interval])) ) | The statistics about the name of the admission webhook of the validate type, the performed operations, whether the operations are denied, and the duration of the operations. The buckets of the histogram include |

Admission Webhook Request QPS | sum(irate(apiserver_admission_webhook_admission_duration_seconds_count[$interval]))by(name,operation,type,rejected) | The QPS of the admission webhook. |

Client analysis


Metric | PromQL | Description |

Analyze QPS by Client dimension | sum(irate(apiserver_request_total{client!=""}[$interval])) by (client) | The QPS statistics based on clients. This can help you analyze the clients that access the API server and the QPS. |

Analyze QPS by Verb Resource Client dimension [All] | sum(irate(apiserver_request_total{client!="",verb=~"$verb", resource=~"$resource"}[$interval]))by(verb,resource,client) | The QPS statistics based on verbs, resources, and clients. |

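Analyze LIST request QPS by Verb Resource Client dimension (no resourceVersion field) | sum(irate(apiserver_request_no_resourceversion_list_total[$interval]))by(resource,client) | The QPS of LIST requests that do not specify the resourceVersion parameter, grouped by resource and client. |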

Common metric anomalies

If the metrics of kube-apiserver become abnormal, check whether the metric anomalies described in the following sections exist.

Success rate of read/write requests

Case description

Normal | Abnormal | Description |

The values of Read Request Success Rate and Write Request Success Rate are close to 100%. | The values of Read Request Success Rate and Write Request Success Rate are low, for example, less than 90%. | A large number of requests return HTTP status codes other than 2xx. |

Recommended solution

Check Read request QPS [5m] for non-2xx return values and QPS [5m] for write requests with non-2xx return values to identify the request types and resources for which kube-apiserver returns HTTP status codes other than 2xx. Evaluate whether these requests are expected and optimize them accordingly. For example, an entry of GET/deployment 404 indicates that GET requests for Deployments returned HTTP status code 404, which lowers Read Request Success Rate.
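The non-2xx panels group failing requests by verb, resource, and status code. The following Python sketch mimics `sum(...) by (verb,resource,code)` with the `code!~"2.*"` filter on per-series QPS values and ranks the result, so the requests that lower the success rate most appear first. The input format, the pod labels, and the function name are hypothetical; the verb filters mirror the read panel (GET|LIST) and the write panel (not GET|LIST|WATCH).

```python
import re
from collections import defaultdict


def top_non_2xx(series, read=True, limit=5):
    """Rank (verb, resource, code) groups with non-2xx responses by total QPS.

    series: iterable of (labels, qps) pairs, one per time series.
    read=True keeps GET|LIST (read panel); read=False drops GET|LIST|WATCH (write panel).
    """
    grouped = defaultdict(float)
    for labels, qps in series:
        verb = labels["verb"]
        if read and not re.fullmatch(r"GET|LIST", verb):
            continue
        if not read and re.fullmatch(r"GET|LIST|WATCH", verb):
            continue
        if re.fullmatch(r"2.*", labels["code"]):
            continue  # Successful responses do not lower the success rate.
        # Summing drops other labels (such as pod), like "sum ... by (...)".
        grouped[(verb, labels["resource"], labels["code"])] += qps
    return sorted(grouped.items(), key=lambda item: item[1], reverse=True)[:limit]


# Hypothetical per-series QPS values from two API server pods.
series = [
    ({"verb": "GET", "resource": "deployments", "code": "404", "pod": "apiserver-a"}, 0.5),
    ({"verb": "GET", "resource": "deployments", "code": "404", "pod": "apiserver-b"}, 0.7),
    ({"verb": "LIST", "resource": "pods", "code": "500", "pod": "apiserver-a"}, 0.3),
    ({"verb": "GET", "resource": "pods", "code": "200", "pod": "apiserver-a"}, 10.0),
    ({"verb": "PATCH", "resource": "configmaps", "code": "409", "pod": "apiserver-a"}, 0.2),
]

for (verb, resource, code), qps in top_non_2xx(series):
    print(f"{verb} {resource} {code}: {qps:.1f} req/s")
```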

Latency of GET/LIST requests and latency of write requests

Case description

Normal | Abnormal | Description |

The values of GET read request delay P[0.9], LIST read request delay P[0.9], and Write request delay P[0.9] vary with the amount of resources accessed in the cluster and the cluster size, so no fixed threshold can identify an anomaly. Any value is acceptable as long as your workloads are not adversely affected. For example, if more requests are sent to access a specific type of resource, the latency of LIST requests increases. In most cases, GET read request delay P[0.9] and Write request delay P[0.9] are less than 1 second, and LIST read request delay P[0.9] is less than 5 seconds. |

| Check whether the response latency increases because an admission webhook cannot respond promptly or because clients send more requests to access the resources. |

Recommended solution

Check GET read request delay P[0.9], LIST read request delay P[0.9], and Write request delay P[0.9] to identify the request types and resources with high response latency. Evaluate whether these requests are expected and optimize them accordingly.

The upper limit of the apiserver_request_duration_seconds_bucket metric is 60 seconds. Response latencies longer than 60 seconds are reported as 60 seconds. Pod access requests (POST pod/exec) and log retrieval requests create persistent connections, so their response latency exceeds 60 seconds. You can ignore these requests when you analyze latency.

Analyze whether the response latency of the API server increases due to the admission webhook that cannot promptly respond. For more information, see the Admission webhook latency section of this topic.

Number of read or write requests that are being processed and dropped requests

Case description

Normal | Abnormal | Description |

In most cases, no anomaly occurs if Number of read requests processed and Number of write requests processed are less than 100 and Request Limit Rate is 0. |

| The request queue is full. Check whether the issue is caused by temporary request spikes or by an admission webhook that cannot respond promptly. If the number of pending requests exceeds the queue length, the API server triggers request throttling, Request Limit Rate becomes greater than 0, and cluster stability is affected. |
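The case description above gives rough rules of thumb: fewer than 100 in-flight read or write requests and a Request Limit Rate of 0 are normally healthy. The following is a minimal Python sketch that applies those rules to dashboard readings. The function name and the input values are hypothetical, and 100 is the guideline from this topic, not a hard limit enforced by the API server.

```python
def inflight_findings(read_inflight, write_inflight, dropped_per_second, guideline=100):
    """Return findings for the in-flight request and Request Limit Rate panels.

    An empty list corresponds to the Normal case described in this topic.
    """
    findings = []
    if read_inflight >= guideline:
        findings.append(f"{read_inflight} read requests in flight (guideline: < {guideline})")
    if write_inflight >= guideline:
        findings.append(f"{write_inflight} write requests in flight (guideline: < {guideline})")
    if dropped_per_second > 0:
        findings.append(f"requests are being throttled ({dropped_per_second} dropped/s)")
    return findings


print(inflight_findings(read_inflight=35, write_inflight=12, dropped_per_second=0))  # []
for finding in inflight_findings(read_inflight=140, write_inflight=20, dropped_per_second=2.5):
    print(finding)
```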

Recommended solution

View the QPS and latency dashboard and the client analysis dashboard to check whether the top requests are necessary. If the requests are generated by your workloads, check whether you can reduce the number of similar requests.

Analyze whether the response latency of the API server increases due to the admission webhook that cannot promptly respond. For more information, see the Admission webhook latency section of this topic.

Admission webhook latency

Case description

Normal | Abnormal | Description |

The value of Admission Webhook Delay is smaller than 0.5 seconds. | The value of Admission Webhook Delay remains greater than 0.5 seconds. | If the admission webhook cannot promptly respond, the response latency of the API server increases. |

Recommended solution

Analyze the admission webhook logs and check whether the webhooks work as expected. If you no longer need a webhook, uninstall it.
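Because the abnormal case is a webhook delay that remains above 0.5 seconds, a single slow sample is not enough to flag a webhook. The following is a minimal Python sketch that flags a webhook only when every sample in a recent window exceeds the threshold. The five-sample window, the webhook names, and the delay values are assumptions for illustration.

```python
def slow_webhooks(delays_by_webhook, threshold=0.5, window=5):
    """Return webhooks whose last `window` delay samples all exceed `threshold`.

    delays_by_webhook: dict mapping webhook name -> delay samples in seconds,
    oldest first (for example, successive points of an Admission Webhook delay panel).
    window: assumed number of consecutive samples that counts as "remains".
    """
    slow = []
    for name, delays in delays_by_webhook.items():
        recent = delays[-window:]
        if len(recent) == window and all(delay > threshold for delay in recent):
            slow.append(name)
    return sorted(slow)


# Hypothetical P90 delays for three webhooks.
delays = {
    "policy.example.com": [0.7, 0.8, 0.9, 0.75, 0.8, 0.85],  # consistently slow
    "inject.example.com": [0.1, 0.9, 0.2, 0.1, 0.15, 0.1],   # one spike only
    "audit.example.com": [0.6, 0.7],                          # too few samples yet
}
print(slow_webhooks(delays))  # ['policy.example.com']
```

Requiring the whole window to exceed the threshold trades detection speed for fewer false alarms from short spikes; a shorter window flags slow webhooks sooner.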

References

For more information about the monitoring metrics and dashboards of other control plane components and how to handle metric anomalies, see the following topics:

For more information about how to obtain Prometheus monitoring data by using the console or calling API operations, see Use PromQL to query Prometheus monitoring data.

For more information about how to use a custom PromQL statement to create an alert rule in Managed Service for Prometheus, see Create an alert rule for a Prometheus instance.