Prometheus is an open source project used for monitoring cloud-native applications. This topic explains how to deploy Prometheus in a Container Service for Kubernetes (ACK) cluster.

Background information

This topic describes how to efficiently monitor system components and resource entities in a Kubernetes cluster. A monitoring system monitors the following types of objects:

- Resource monitoring: resource utilization of nodes and applications. In a Kubernetes cluster, the monitoring system monitors the resource usage of nodes, pods, and the cluster.

- Application monitoring: internal metrics of applications. For example, the monitoring system dynamically counts the number of online users using an application, collects monitoring metrics from application ports, and enables alerting based on the collected metrics.

In a Kubernetes cluster, the monitoring system monitors the following objects:

- Cluster components: The components of the Kubernetes cluster, such as kube-apiserver, kube-controller-manager, and etcd. To monitor cluster components, specify the monitoring methods in configuration files.

- Static resource entities: The status of resources on nodes and kernel events. To monitor static resource entities, specify the monitoring methods in configuration files.

- Dynamic resource entities: Entities of abstract workloads in Kubernetes, such as Deployments, DaemonSets, and pods. To monitor dynamic resource entities in a Kubernetes cluster, you can deploy Prometheus in the Kubernetes cluster.

- Custom objects in applications: For applications that require customized monitoring of data and metrics, specific configurations need to be set to meet unique monitoring requirements. This can be achieved by combining port exposure with the Prometheus monitoring solution.

Procedure

- Deploy the Prometheus monitoring solution.

  - Log on to the ACK console. In the left-side navigation pane, choose .

  - On the App Catalog page, click the Applications tab. Search for and click ack-prometheus-operator.

  - On the ack-prometheus-operator page, click Deploy.

  - In the Create panel, select a cluster and a namespace, and then click Next.

  - On the Parameters wizard page, configure the parameters and click OK.

- View the deployment result.

  - Run the following command to map Prometheus in the cluster to local port 9090.

        kubectl port-forward svc/ack-prometheus-operator-prometheus 9090:9090 -n monitoring

  - In a browser, visit localhost:9090 to view Prometheus.

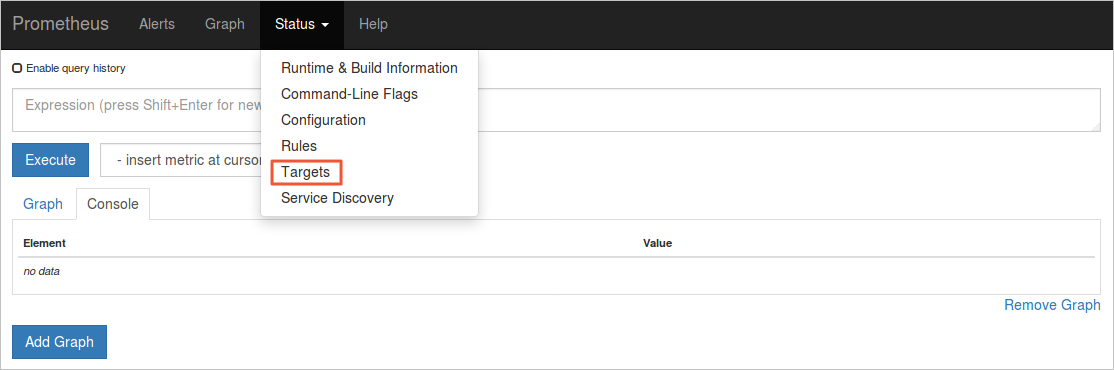

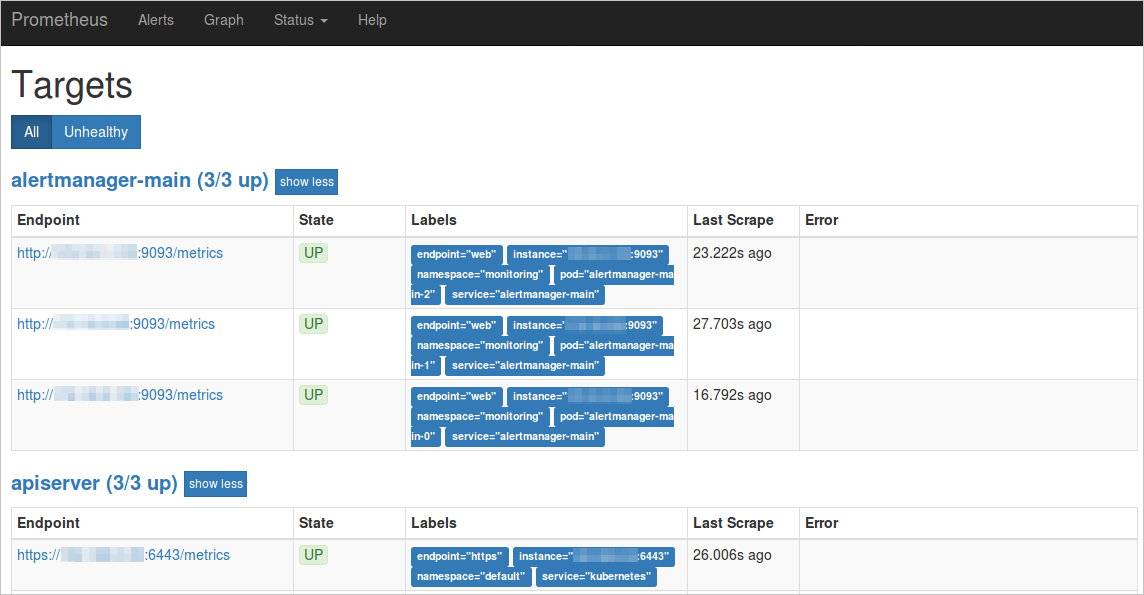

  - In the menu bar, choose to view all scraping tasks.

    If the status of all tasks is UP, all scraping tasks are operating as expected.

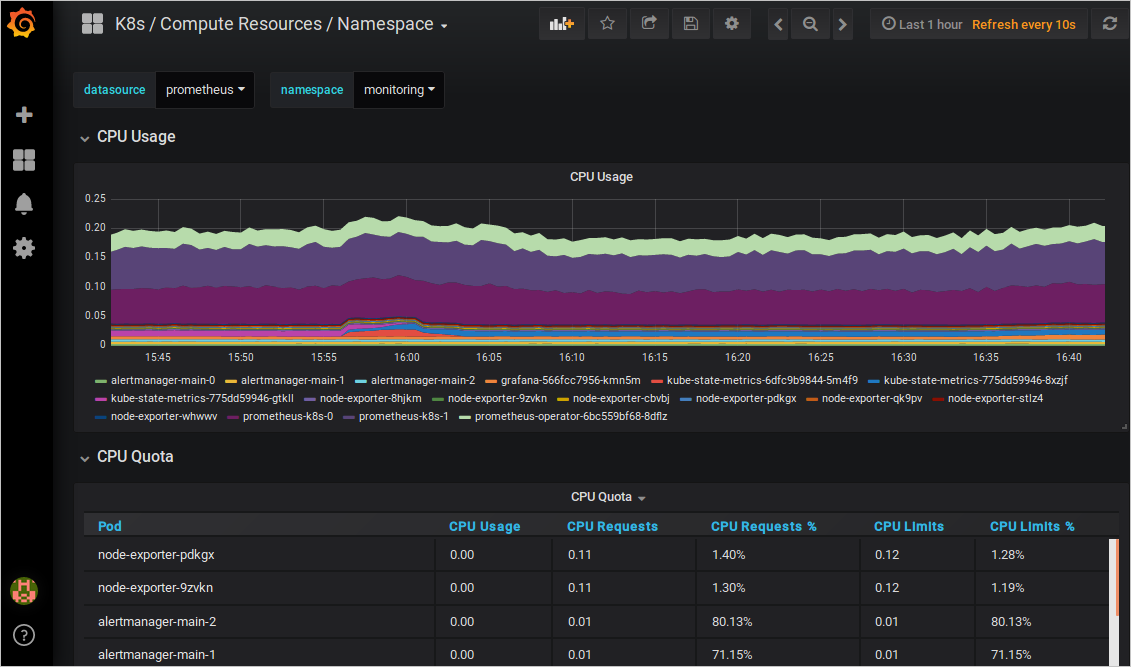
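The same check can be scripted against the Prometheus HTTP API while the port-forward to localhost:9090 is running. The following is a minimal sketch, not part of ack-prometheus-operator: the helper name count_unhealthy_targets is hypothetical, and it assumes that the /api/v1/targets response is compact JSON in which each target carries a field such as "health":"up".

```shell
#!/bin/sh
# Hypothetical helper: reads a /api/v1/targets JSON response on stdin and
# prints how many targets report a health value other than "up".
# Assumption: the JSON is compact (no spaces around the colon).
count_unhealthy_targets() {
  grep -o '"health":"[a-z]*"' | grep -vc '"health":"up"' || true
}

# Usage (requires the port-forward to localhost:9090 to be active):
#   curl -s http://localhost:9090/api/v1/targets | count_unhealthy_targets
```

A result of 0 corresponds to every scraping task showing UP on the page. Any other value is the number of targets to inspect.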

- View and display data aggregation.

  - Run the following command to map Grafana in the cluster to local port 3000.

        kubectl -n monitoring port-forward svc/ack-prometheus-operator-grafana 3000:80

  - In a browser, visit localhost:3000 and select the required dashboard to view the aggregated data.

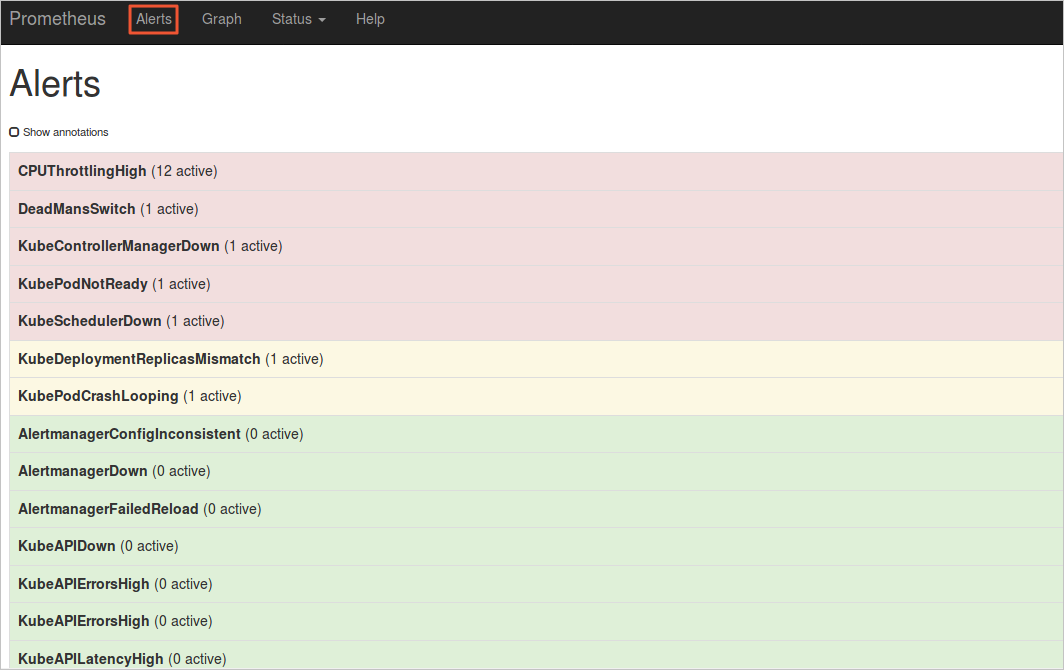

- View alert rules and set silent alerts.

  - View alert rules

    In a browser, navigate to localhost:9090 and select Alerts from the menu bar to see the active alert rules.

    - Red: Alerts are being triggered based on the alert rules shown in red.

    - Green: No alerts are being triggered based on the alert rules shown in green.

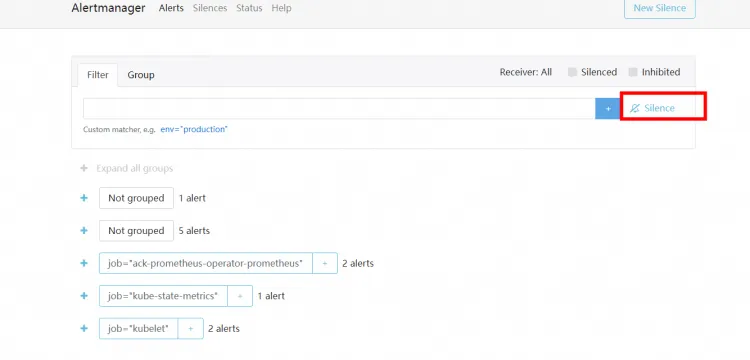

  - Set silent alerts

    Run the following command, and then navigate to localhost:9093 in your browser. Select Silenced to configure silent alerts.

        kubectl --namespace monitoring port-forward svc/alertmanager-operated 9093

Follow the preceding steps to deploy Prometheus in a cluster. The following examples describe how to configure Prometheus in different scenarios.

Alert configurations

To configure alert notification methods or notification templates, perform the following steps to configure the config field in the alertmanager section.

- Configure alert notification methods

  The prometheus-operator supports notifications via DingTalk and email. To enable these notifications, follow the steps below.

  - Configure DingTalk notifications

    To add DingTalk notifications, navigate to the ack-prometheus-operator page, click Deploy, and proceed to the Parameters wizard page. Locate the dingtalk section, set enabled to true, and enter your DingTalk chatbot's webhook URL in the token field. In the config field of alertmanager, find receiver and specify the name of your DingTalk notification in the receivers section. The default name is Webhook.

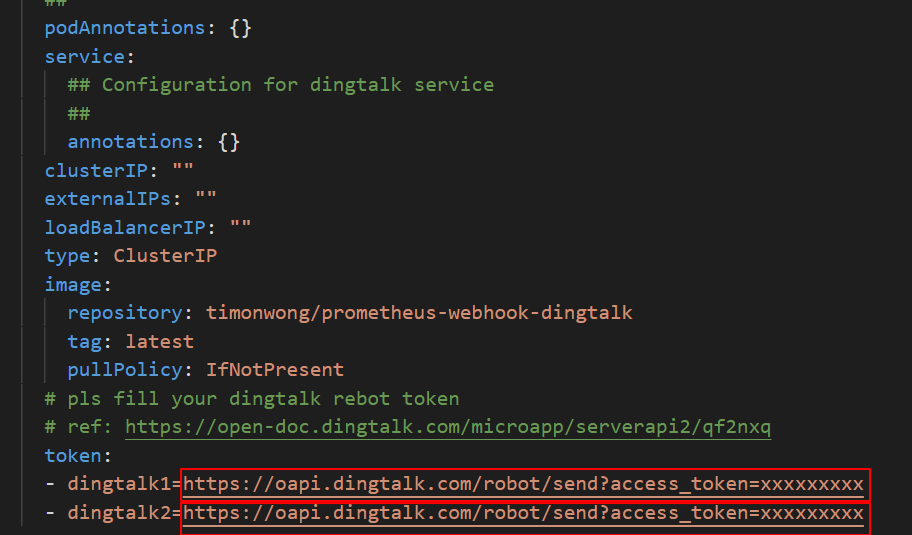

If you have two DingTalk chatbots, perform the following steps:

- Replace the parameter values in the token field with the webhook URLs of your DingTalk chatbots.

  Copy the webhook URLs of your DingTalk chatbots and replace the values of dingtalk1 and dingtalk2 in the token field with the copied URLs. That is, replace https://oapi.dingtalk.com/robot/send?access_token=xxxxxxxxxx with your webhook URLs.

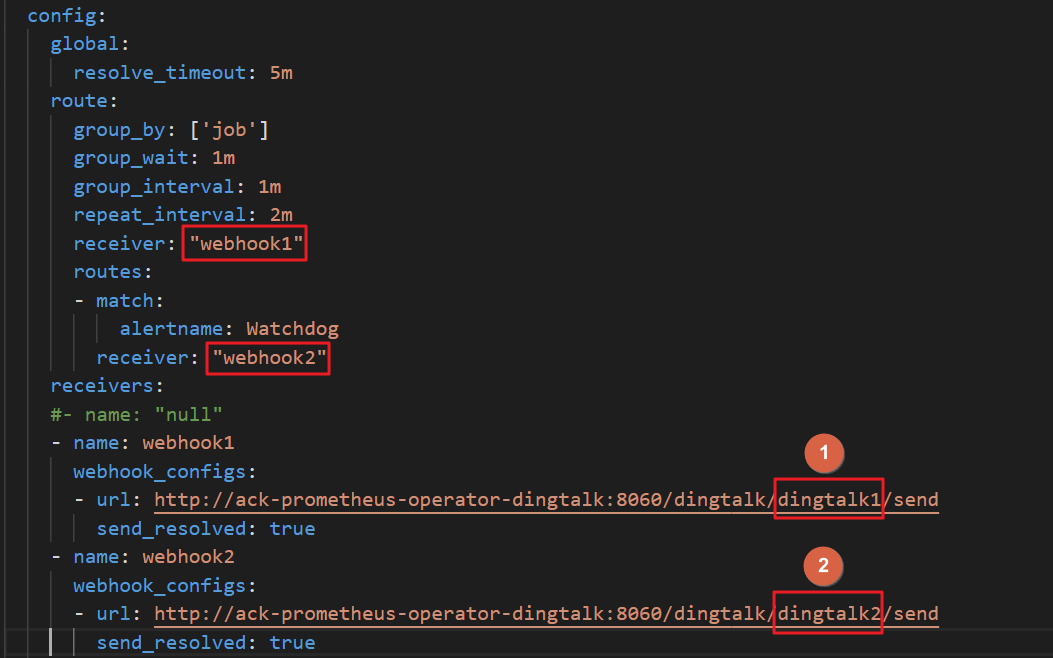

- Modify the value of the receiver parameter

  In the config field of alertmanager, find receiver and specify the name of the DingTalk notification in the receivers section. In this example, the names are webhook1 and webhook2.

- Modify the value of the url parameter

  Replace the parameter values in the url field with the actual values of dingtalk. In this example, the values are dingtalk1 and dingtalk2.

Note: To add more DingTalk chatbots, add more webhook URLs.

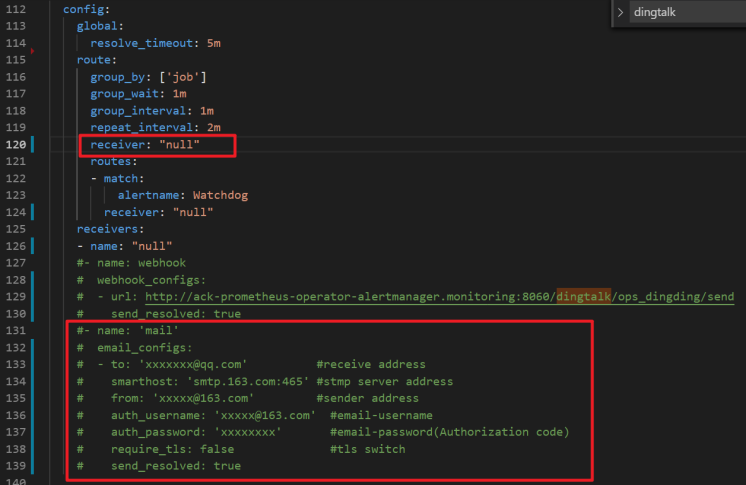
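To make the two-chatbot setup more concrete, the following sketch writes a values fragment to a local file for review. It is an illustration under stated assumptions, not the chart's actual defaults: the nesting of the dingtalk and alertmanager keys, the placeholder host <dingtalk-service>, port 8060, and the /dingtalk/<name>/send path are all assumed, and the file name dingtalk-values-sketch.yaml is hypothetical. Compare it with the values shown on the Parameters wizard page before you copy anything.

```shell
#!/bin/sh
# Writes a hypothetical values fragment for two DingTalk chatbots.
# Key nesting, service host, port, and URL path are assumptions.
cat > dingtalk-values-sketch.yaml <<'EOF'
dingtalk:
  enabled: true
  token:
    # Replace each placeholder with the webhook URL of one chatbot.
    dingtalk1: https://oapi.dingtalk.com/robot/send?access_token=xxxxxxxxxx
    dingtalk2: https://oapi.dingtalk.com/robot/send?access_token=xxxxxxxxxx
alertmanager:
  config:
    route:
      receiver: webhook1        # default receiver
    receivers:
      - name: webhook1
        webhook_configs:
          - url: http://<dingtalk-service>:8060/dingtalk/dingtalk1/send
      - name: webhook2
        webhook_configs:
          - url: http://<dingtalk-service>:8060/dingtalk/dingtalk2/send
EOF
```

In this sketch, only webhook1 is referenced by the route. To deliver alerts to webhook2 as well, a child route that names webhook2 as its receiver would be needed.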

- Configure email notifications

  To add email notifications, navigate to the ack-prometheus-operator page, click Deploy, and proceed to the Parameters wizard page. Fill in the email details within the highlighted red box. In the alertmanager section, locate the config field and enter the email notification name in the receiver field. The default name is mail.

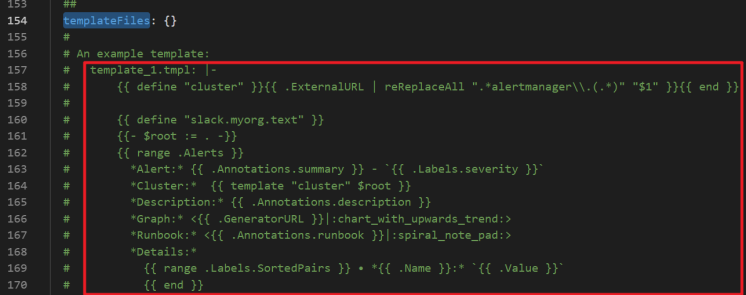
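As a rough illustration of the email settings, the sketch below writes an Alertmanager configuration fragment to a local file. The field names under global and email_configs are standard Alertmanager settings, but the nesting under alertmanager.config, the example.com addresses, the port, and the file name email-values-sketch.yaml are assumptions made for this example.

```shell
#!/bin/sh
# Writes a hypothetical values fragment for email notifications.
# Hosts, addresses, and the port are placeholders, not real endpoints.
cat > email-values-sketch.yaml <<'EOF'
alertmanager:
  config:
    global:
      # The SMTP endpoint must include a port number.
      smtp_smarthost: smtp.example.com:465
      smtp_from: alerts@example.com
      smtp_auth_username: alerts@example.com
      # Use the authorization code issued by the mail provider,
      # not the logon password of the mailbox.
      smtp_auth_password: <authorization-code>
    route:
      receiver: mail
    receivers:
      - name: mail
        email_configs:
          - to: oncall@example.com
EOF
```

The receiver name under route must match a name in receivers, which is why both are set to mail here.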

Configure alert notification templates

You can define alert notification templates in the templateFiles section of alertmanager. The following figure shows an example.

Mount a ConfigMap to Prometheus

The following methods describe how to mount a ConfigMap to the /etc/prometheus/configmaps/ directory in a pod.

Method 1: Deploy prometheus-operator for the first time

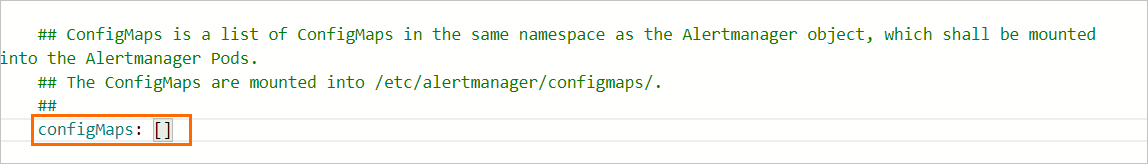

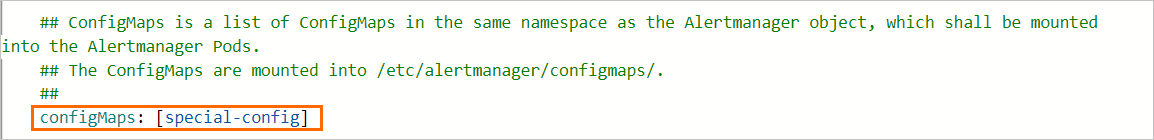

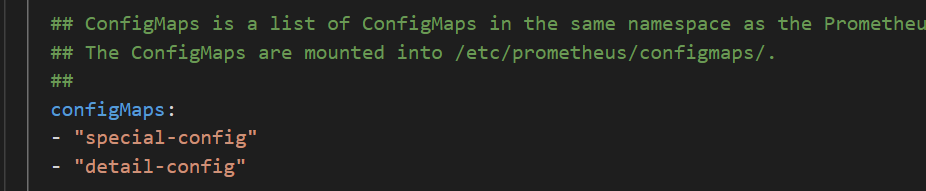

If this is your first time deploying prometheus-operator, follow the instructions in Step 1 to set up the Prometheus monitoring solution. On the Parameters wizard page, locate the ConfigMaps field for Prometheus and enter the name of your custom ConfigMap.

Method 2: prometheus-operator is deployed

If prometheus-operator has already been deployed in your cluster, follow these steps:

- Log on to the ACK console. In the left-side navigation pane, click Clusters.

- On the Clusters page, find the cluster that you want to manage and click its name. In the left-side navigation pane, choose .

- In the Actions column for the desired Helm application, click Update.

- In the Update Release panel, specify the name of your custom ConfigMap in the ConfigMaps field for Prometheus and Alertmanager, and then click OK.

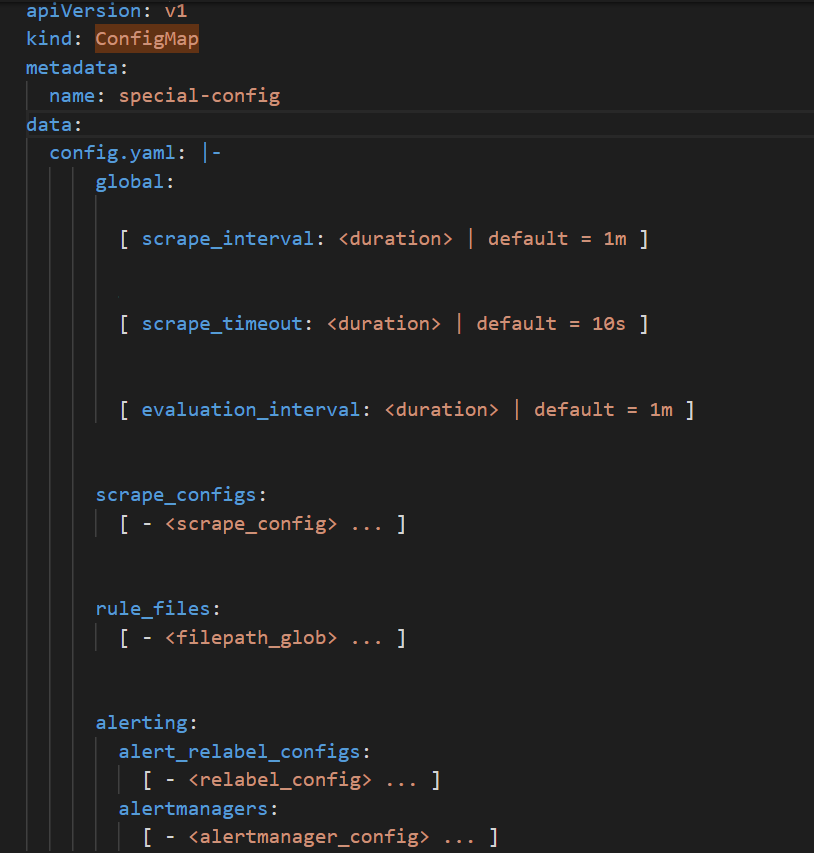

For example, if you define a ConfigMap named special-config that contains the config file of Prometheus, specify its name in the ConfigMaps field of Prometheus. The ConfigMap is then mounted to the pod. The mount path is /etc/prometheus/configmaps/.

The following figure shows an example of the YAML definition of special-config.
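For readers without access to the figure, here is a minimal sketch of what such a ConfigMap could look like. The data key example.yaml and its content are hypothetical placeholders, and the monitoring namespace is an assumption based on the namespace used by the port-forward commands in this topic. The script only writes the manifest to a local file. Applying it is left as a commented command.

```shell
#!/bin/sh
# Writes a hypothetical manifest for the special-config ConfigMap.
# The namespace and the data key are assumptions for illustration.
cat > special-config.yaml <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: special-config
  namespace: monitoring
data:
  # Hypothetical key and content; replace with your own file.
  example.yaml: |
    placeholder: value
EOF

# To create the ConfigMap in the cluster (requires cluster access):
#   kubectl apply -f special-config.yaml
```

With the Prometheus Operator's configMaps mechanism, each key of the ConfigMap is expected to appear as a file under /etc/prometheus/configmaps/special-config/ in the pod.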

The image below illustrates the configuration of the ConfigMaps field for Prometheus.

Configure Grafana

- Mount the dashboard configuration to Grafana

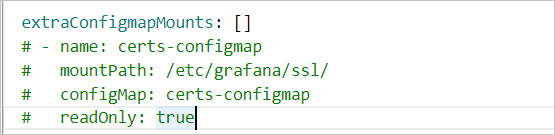

  If you want to mount a dashboard file to a Grafana pod using a ConfigMap, navigate to the ack-prometheus-operator page, click Deploy, and proceed to the Parameters wizard page. Locate extraConfigmapMounts and enter the mount settings in the respective fields.

  Note:

  - Ensure that a ConfigMap containing the dashboard configuration exists in your cluster. The labels added to this ConfigMap must match those added to other ConfigMaps.

  - In the extraConfigmapMounts field of Grafana, specify the details of the ConfigMap and the mount settings.

    - Set mountPath to /tmp/dashboards/.

    - Set configMap to the name of the ConfigMap.

    - Set name to the name of the JSON file that stores the dashboard configuration.

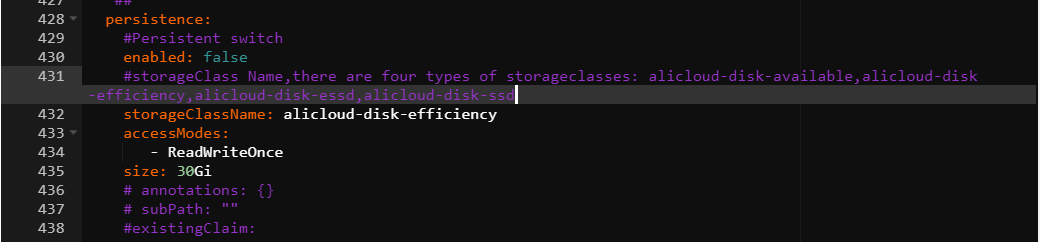
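The three settings above could be combined as in the following sketch, which writes a values fragment to a local file. The names my-dashboard and my-dashboard-config are hypothetical, and placing extraConfigmapMounts under a top-level grafana key is an assumption about the chart layout.

```shell
#!/bin/sh
# Writes a hypothetical Grafana values fragment for mounting a dashboard.
cat > grafana-mount-sketch.yaml <<'EOF'
grafana:
  extraConfigmapMounts:
    - name: my-dashboard              # hypothetical; name of your dashboard JSON file
      mountPath: /tmp/dashboards/
      configMap: my-dashboard-config  # hypothetical; name of your ConfigMap
EOF
```

The list form allows more than one dashboard ConfigMap to be mounted by adding further entries.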

- Enable data persistence for dashboards

  To enable data persistence for Grafana dashboards, follow these steps:

  - Log on to the ACK console. In the left-side navigation pane, click Clusters.

  - On the Clusters page, find the cluster that you want to manage and click its name. In the left-side pane, choose .

  - Locate ack-prometheus-operator and click Update on the right.

  - In the Update Release panel, locate the persistence option within the grafana section and configure it to enable data persistence for Grafana dashboards.

You can export data from Grafana dashboards in JSON format to your local machine. For more information, see the referenced document.

Uninstall open source Prometheus

Follow the steps that match the version of your Helm chart to uninstall open source Prometheus. To avoid residual resources and unexpected behavior, you must manually clear the related resources, including the Helm release, namespace, CRDs, and kubelet Service.

When you uninstall ack-prometheus-operator, the associated kubelet Service is not automatically deleted due to a known issue in the community. You must manually uninstall the Service. For more information about the issue, see #1523.

Chart v12.0.0

Console

- Log on to the ACK console. In the left-side navigation pane, click Clusters.

- On the Cluster List page, click the name of the desired cluster. Then, use the left-side navigation pane to open the page for each of the following operations.

  - Uninstall the Helm release: Navigate to . In the Actions column of the Helm release list, locate ack-prometheus-operator and click Delete. Follow the on-screen instructions to complete the deletion and remove the release record.

  - Delete the namespace: Click Namespaces & Quotas. Locate monitoring in the namespace list, select it, and follow the on-screen instructions to complete the deletion.

  - Delete the CRDs: Select , click the Resource Definition (customresourcedefinition) tab, and follow the on-screen instructions to locate and delete all CRD resources within the monitoring.coreos.com API group, including the following:

    - AlertmanagerConfig

    - Alertmanager

    - PodMonitor

    - Probe

    - Prometheus

    - PrometheusRule

    - ServiceMonitor

    - ThanosRuler

  - Delete the kubelet Service: Navigate to Network > Services. Then, follow the on-screen instructions to locate and remove ack-prometheus-operator-kubelet from the kube-system namespace.

kubectl

- Uninstall the Helm release

      helm uninstall ack-prometheus-operator -n monitoring

- Delete the namespace

      kubectl delete namespace monitoring

- Delete the CRDs

      kubectl delete crd alertmanagerconfigs.monitoring.coreos.com
      kubectl delete crd alertmanagers.monitoring.coreos.com
      kubectl delete crd podmonitors.monitoring.coreos.com
      kubectl delete crd probes.monitoring.coreos.com
      kubectl delete crd prometheuses.monitoring.coreos.com
      kubectl delete crd prometheusrules.monitoring.coreos.com
      kubectl delete crd servicemonitors.monitoring.coreos.com
      kubectl delete crd thanosrulers.monitoring.coreos.com

- Delete the kubelet Service

      kubectl delete service ack-prometheus-operator-kubelet -n kube-system

Chart v65.1.1

Console

- Log on to the ACK console. In the left-side navigation pane, click Clusters.

- On the Cluster List page, click the name of the desired cluster. Then, use the left-side navigation pane to open the page for each of the following operations.

  - Uninstall the Helm release: Navigate to . In the Actions column of the Helm release list, locate ack-prometheus-operator and click Delete. Then, follow the on-screen instructions to complete the deletion and remove the release record.

  - Delete the namespace: Click Namespaces & Quotas. Locate the monitoring namespace in the list, select it, and follow the on-screen instructions to complete the deletion.

  - Delete the CRDs: Select , click the Resource Definition (customresourcedefinition) tab, and follow the on-screen instructions to locate and delete all CRD resources within the monitoring.coreos.com API group, which includes the following:

    - AlertmanagerConfig

    - Alertmanager

    - PodMonitor

    - Probe

    - PrometheusAgent

    - Prometheus

    - PrometheusRule

    - ScrapeConfig

    - ServiceMonitor

    - ThanosRuler

  - Delete the kubelet Service: Select Network > Services. Then, follow the on-screen instructions to locate and remove ack-prometheus-operator-kubelet from the kube-system namespace.

kubectl

- Uninstall the Helm release

      helm uninstall ack-prometheus-operator -n monitoring

- Delete the namespace

      kubectl delete namespace monitoring

- Delete the CRDs

      kubectl delete crd alertmanagerconfigs.monitoring.coreos.com
      kubectl delete crd alertmanagers.monitoring.coreos.com
      kubectl delete crd podmonitors.monitoring.coreos.com
      kubectl delete crd probes.monitoring.coreos.com
      kubectl delete crd prometheusagents.monitoring.coreos.com
      kubectl delete crd prometheuses.monitoring.coreos.com
      kubectl delete crd prometheusrules.monitoring.coreos.com
      kubectl delete crd scrapeconfigs.monitoring.coreos.com
      kubectl delete crd servicemonitors.monitoring.coreos.com
      kubectl delete crd thanosrulers.monitoring.coreos.com

- Delete the kubelet Service

      kubectl delete service ack-prometheus-operator-kubelet -n kube-system
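Because the set of CRDs differs between chart versions, it can help to list what is actually present in the monitoring.coreos.com group before deleting anything. The sketch below is one possible way to do that. The helper name filter_monitoring_crds is hypothetical, and it assumes that kubectl get crd -o name prints one resource name per line ending in the API group.

```shell
#!/bin/sh
# Hypothetical helper: reads resource names on stdin and keeps only those
# that belong to the monitoring.coreos.com API group.
filter_monitoring_crds() {
  grep '\.monitoring\.coreos\.com$' || true
}

# Usage (requires cluster access). Review the list first, because deleting
# a CRD also deletes every custom resource of that kind:
#   kubectl get crd -o name | filter_monitoring_crds
#   kubectl get crd -o name | filter_monitoring_crds | xargs kubectl delete
```

Listing before deleting also makes it easy to confirm that no other installation in the cluster still depends on these CRDs.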

FAQ

- What do I do if I fail to receive DingTalk alert notifications?

  - Obtain the webhook URL of your DingTalk chatbot. For more information, see event monitoring.

  - Locate the dingtalk section, set enabled to true, and then enter the webhook URL of your DingTalk chatbot in the token field. For more information, see Configure DingTalk notifications in Alert configurations.

- What do I do if an error message appears when I deploy prometheus-operator in a cluster?

  The error message is:

      Can't install release with errors: rpc error: code = Unknown desc = object is being deleted: customresourcedefinitions.apiextensions.k8s.io "xxxxxxxx.monitoring.coreos.com" already exists

  The error message indicates that the cluster failed to clear the custom resource definition (CRD) objects of the previous deployment. Run the following commands to delete the CRD objects. Then, deploy prometheus-operator again.

      kubectl delete crd prometheuses.monitoring.coreos.com
      kubectl delete crd prometheusrules.monitoring.coreos.com
      kubectl delete crd servicemonitors.monitoring.coreos.com
      kubectl delete crd alertmanagers.monitoring.coreos.com

- What do I do if I fail to receive email alert notifications?

  The issue may occur because you specified your logon password instead of the authorization code in the smtp_auth_password field. Also make sure that the SMTP server endpoint includes a port number.

- What do I do if the message The current cluster is temporarily unavailable. Try again later or submit a ticket appears when I click YAML Update?

  This issue occurs because the configuration file of tiller is too large, which causes the cluster to be unavailable. You can delete some comments and mount the configuration file to a pod as a ConfigMap. Currently, prometheus-operator supports mounting ConfigMaps only to the prometheus and alertmanager pods. For more information, see Method 2 in Mount a ConfigMap to Prometheus.

- How do I enable the features of prometheus-operator after I deploy it in a cluster?

  After you deploy prometheus-operator, navigate to the cluster information page, select , click Update next to ack-prometheus-operator, locate the switch for the feature that you want to enable, adjust the settings, and then click OK.

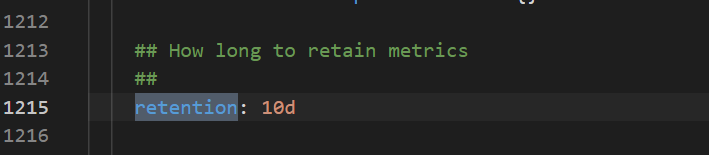

- How do I select data storage: TSDB or disks?

  TSDB is available only in some regions, whereas disks are available in all regions. For more information about the data retention policy, see the following configuration.

- What do I do if a Grafana dashboard fails to display data properly?

  Navigate to the cluster information page, select , click Update next to ack-prometheus-operator, and verify that clusterVersion matches the version of your cluster. If the Kubernetes version of your cluster is earlier than 1.16, change clusterVersion to 1.14.8-aliyun.1. If it is 1.16 or later, change clusterVersion to 1.16.6-aliyun.1.

- What do I do if I fail to install ack-prometheus after I delete the ack-prometheus namespace?

  After you delete the ack-prometheus namespace, the related resource configurations may be retained. In this case, you may fail to install ack-prometheus again. You can perform the following operations to delete the residual resource configurations.

  - Delete role-based access control (RBAC)-related resource configurations.

    - Delete the ClusterRoles.

          kubectl delete ClusterRole ack-prometheus-operator-grafana-clusterrole
          kubectl delete ClusterRole ack-prometheus-operator-kube-state-metrics
          kubectl delete ClusterRole psp-ack-prometheus-operator-kube-state-metrics
          kubectl delete ClusterRole psp-ack-prometheus-operator-prometheus-node-exporter
          kubectl delete ClusterRole ack-prometheus-operator-operator
          kubectl delete ClusterRole ack-prometheus-operator-operator-psp
          kubectl delete ClusterRole ack-prometheus-operator-prometheus
          kubectl delete ClusterRole ack-prometheus-operator-prometheus-psp

    - Delete the ClusterRoleBindings.

          kubectl delete ClusterRoleBinding ack-prometheus-operator-grafana-clusterrolebinding
          kubectl delete ClusterRoleBinding ack-prometheus-operator-kube-state-metrics
          kubectl delete ClusterRoleBinding psp-ack-prometheus-operator-kube-state-metrics
          kubectl delete ClusterRoleBinding psp-ack-prometheus-operator-prometheus-node-exporter
          kubectl delete ClusterRoleBinding ack-prometheus-operator-operator
          kubectl delete ClusterRoleBinding ack-prometheus-operator-operator-psp
          kubectl delete ClusterRoleBinding ack-prometheus-operator-prometheus
          kubectl delete ClusterRoleBinding ack-prometheus-operator-prometheus-psp

  - Delete the CRDs.

        kubectl delete crd alertmanagerconfigs.monitoring.coreos.com
        kubectl delete crd alertmanagers.monitoring.coreos.com
        kubectl delete crd podmonitors.monitoring.coreos.com
        kubectl delete crd probes.monitoring.coreos.com
        kubectl delete crd prometheuses.monitoring.coreos.com
        kubectl delete crd prometheusrules.monitoring.coreos.com
        kubectl delete crd servicemonitors.monitoring.coreos.com
        kubectl delete crd thanosrulers.monitoring.coreos.com