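ACK clusters are deeply integrated with Alibaba Cloud Simple Log Service (SLS), which provides log collection components to simplify collecting and managing container logs. This topic describes how to install a log collection component and configure log collection, covering automatic collection, query, and analysis of logs, to improve O&M efficiency and reduce management costs.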

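Scenario guide

The log collection components can collect container logs in the following two ways.

DaemonSet: suited to clusters whose logs are clearly classified and whose workloads serve a single, focused purpose. This topic describes this method.

Sidecar: suited to large, mixed-workload clusters. For details, see Collect Kubernetes container text logs in Sidecar mode.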

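Index

Step | Link |

Step 1: Install a log collection component | Install one of the two log collection components: LoongCollector (recommended) or Logtail. |

Step 2: Create a collection configuration | Choose text logs or standard output based on your collection needs. |

Step 3: Query and analyze logs | Query and analyze logs in the console. |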

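Step 1: Install a log collection component

Install LoongCollector (recommended)

LoongCollector (formerly Logtail): Logtail is the log collection agent provided by Simple Log Service. It collects logs from servers such as Alibaba Cloud ECS instances, self-managed IDCs, and servers from other cloud providers. Logtail collects from log files, so you do not need to modify application code, and collection does not affect the running application. LoongCollector is the next-generation collection agent from Simple Log Service. It is an upgraded version of Logtail that remains compatible with Logtail while delivering better performance.

Install the loongcollector component in an existing ACK cluster

Log on to the Container Service management console, and in the left-side navigation pane, choose Clusters.

On the Clusters page, click the name of the target cluster, and then in the left-side navigation pane, choose Operations > Add-ons.

On the Logs and Monitoring tab, find loongcollector, and then click Install.

Note: The LoongCollector component and the logtail-ds component cannot coexist. If logtail-ds is already installed in the cluster, see Upgrade from Logtail to LoongCollector for the upgrade procedure.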

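After installation, Simple Log Service automatically creates a Project named k8s-log-${your_k8s_cluster_id} and creates the following resources in it. You can view them in the Simple Log Service console.

Resource type | Resource name | Purpose | Example |

Machine group | k8s-group- | Machine group for loongcollector-ds, mainly used for log collection. | k8s-group-my-cluster-123 |

Machine group | k8s-group- | Machine group for loongcollector-cluster, mainly used for metric collection. | k8s-group-my-cluster-123-cluster |

Machine group | k8s-group- | Single-instance machine group, mainly used for certain single-instance collection configurations. | k8s-group-my-cluster-123-singleton |

Logstore | config-operation-log | Collects and stores the logs of the loongcollector-operator component. Important: Do not delete this Logstore. | config-operation-log |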
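As a rough sketch, the naming pattern in the table above can be written down in a few lines of Python, for example to list which resources a script should expect after installation. The machine group suffixes are inferred only from the Example column, because the Resource name column is truncated in this table. The helper name `expected_sls_resources` and the cluster ID `my-cluster-123` are hypothetical.

```python
def expected_sls_resources(cluster_id: str) -> dict:
    """Return the resource names this topic lists for a loongcollector install.

    Assumption: machine group names follow the pattern visible in the
    Example column, that is k8s-group-<cluster_id> plus an optional
    -cluster or -singleton suffix.
    """
    group = f"k8s-group-{cluster_id}"
    return {
        "project": f"k8s-log-{cluster_id}",
        "machine_groups": [group, f"{group}-cluster", f"{group}-singleton"],
        "logstores": ["config-operation-log"],
    }


# Hypothetical cluster ID, taken from the Example column.
resources = expected_sls_resources("my-cluster-123")
print(resources["project"])
for name in resources["machine_groups"]:
    print(name)
```

The function only builds strings. It does not call Simple Log Service, so the real names should still be confirmed in the console.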

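Install Logtail

Logtail collection: Logtail is the log collection agent provided by Simple Log Service. It collects logs from servers such as Alibaba Cloud ECS instances, self-managed IDCs, and servers from other cloud providers. Logtail collects from log files in a non-intrusive way, so you do not need to modify application code, and collection does not affect your running application.

Install the Logtail component in an existing ACK cluster

Log on to the Container Service management console, and in the left-side navigation pane, choose Clusters.

On the Clusters page, click the name of the target cluster, and then in the left-side navigation pane, click Add-ons.

On the Logs and Monitoring tab, find logtail-ds, and then click Install. If logtail-ds is not listed, install the LoongCollector component instead.

The LoongCollector component is the upgraded version of logtail-ds. The two components cannot coexist, and LoongCollector is recommended.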

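Install the Logtail component when you create an ACK cluster

Log on to the Container Service management console, and in the left-side navigation pane, choose Clusters.

Click Create Cluster, and on the Component Configurations page, select Enable Log Service.

This topic describes only the settings related to Simple Log Service. For the other settings, see Create an ACK managed cluster.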

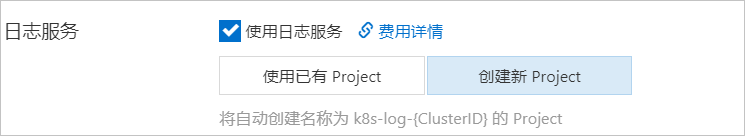

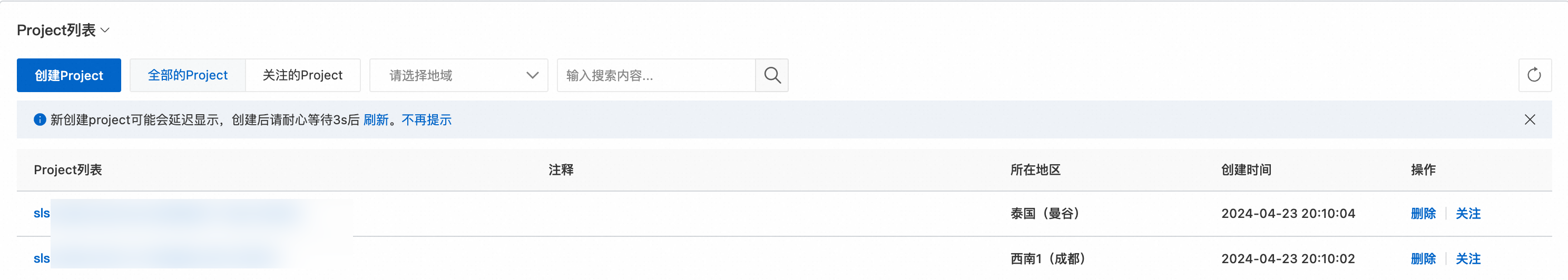

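After you select Enable Log Service, you are prompted to create a Project.

Use an existing Project

Select an existing Project to manage the collected container logs.

Create a new Project

Simple Log Service automatically creates a Project to manage the collected container logs. Here, ClusterID is the unique identifier of the newly created Kubernetes cluster.

On the Component Configurations page, control plane component logs are enabled by default. With this option on, the Project is automatically configured to collect the cluster's control plane component logs, and that collection is billed on a pay-as-you-go basis, so decide whether to enable it based on your needs. For more information, see Manage control plane component logs.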

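After installation, a Project named k8s-log-<YOUR_CLUSTER_ID> is created automatically, with the following resources in it. You can view them in the Simple Log Service console.

Resource type | Resource name | Purpose | Example |

Machine group | k8s-group- | Machine group for logtail-daemonset, mainly used for log collection. | k8s-group-my-cluster-123 |

Machine group | k8s-group- | Machine group for logtail-statefulset, mainly used for metric collection. | k8s-group-my-cluster-123-statefulset |

Machine group | k8s-group- | Single-instance machine group, mainly used for certain single-instance collection configurations. | k8s-group-my-cluster-123-singleton |

Logstore | config-operation-log | Stores the alibaba-log-controller logs of the Logtail component. Avoid creating collection configurations in this Logstore. You can delete this Logstore, after which the runtime logs of alibaba-log-controller are no longer collected. It is billed at the same rates as a regular Logstore. For details, see Billable items of the pay-by-ingested-data billing mode. | None |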

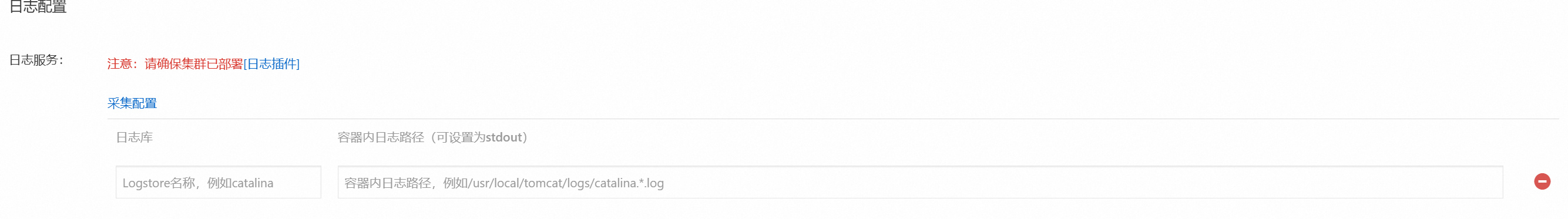

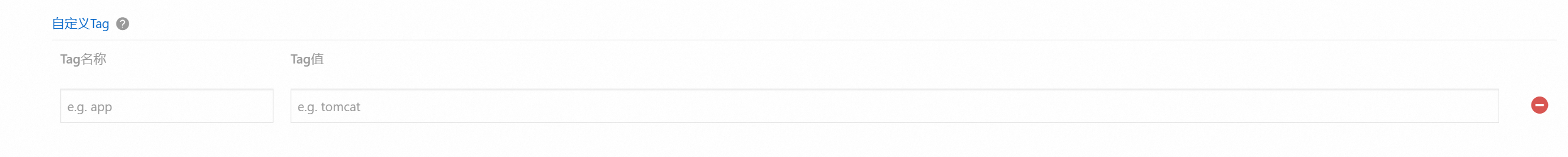

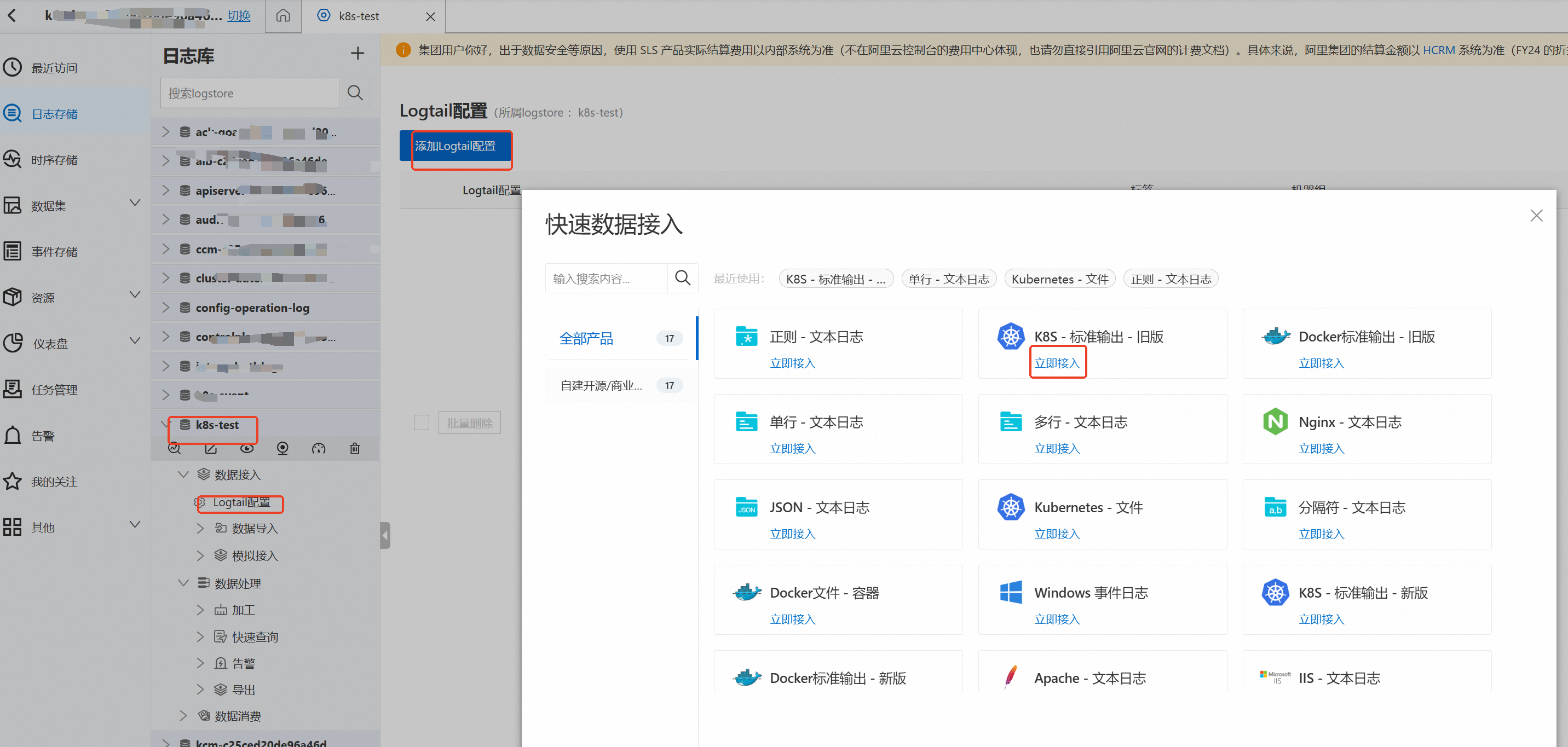

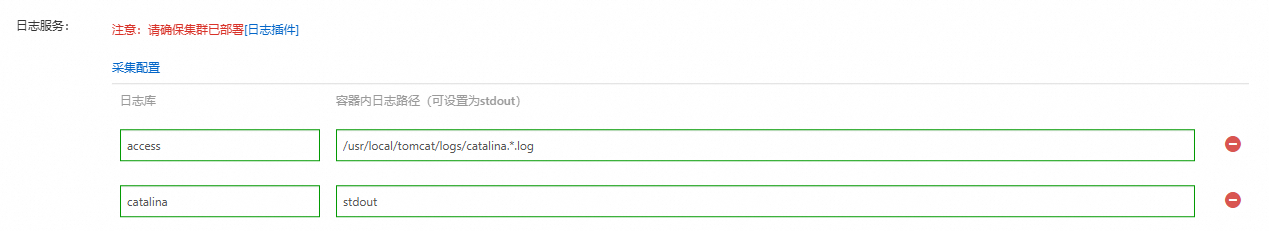

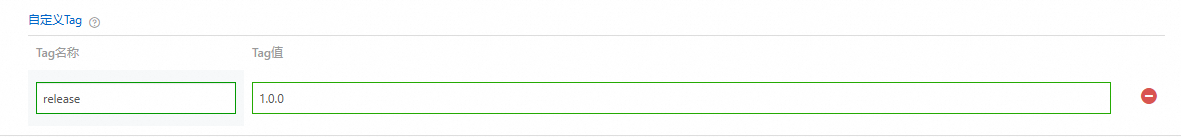

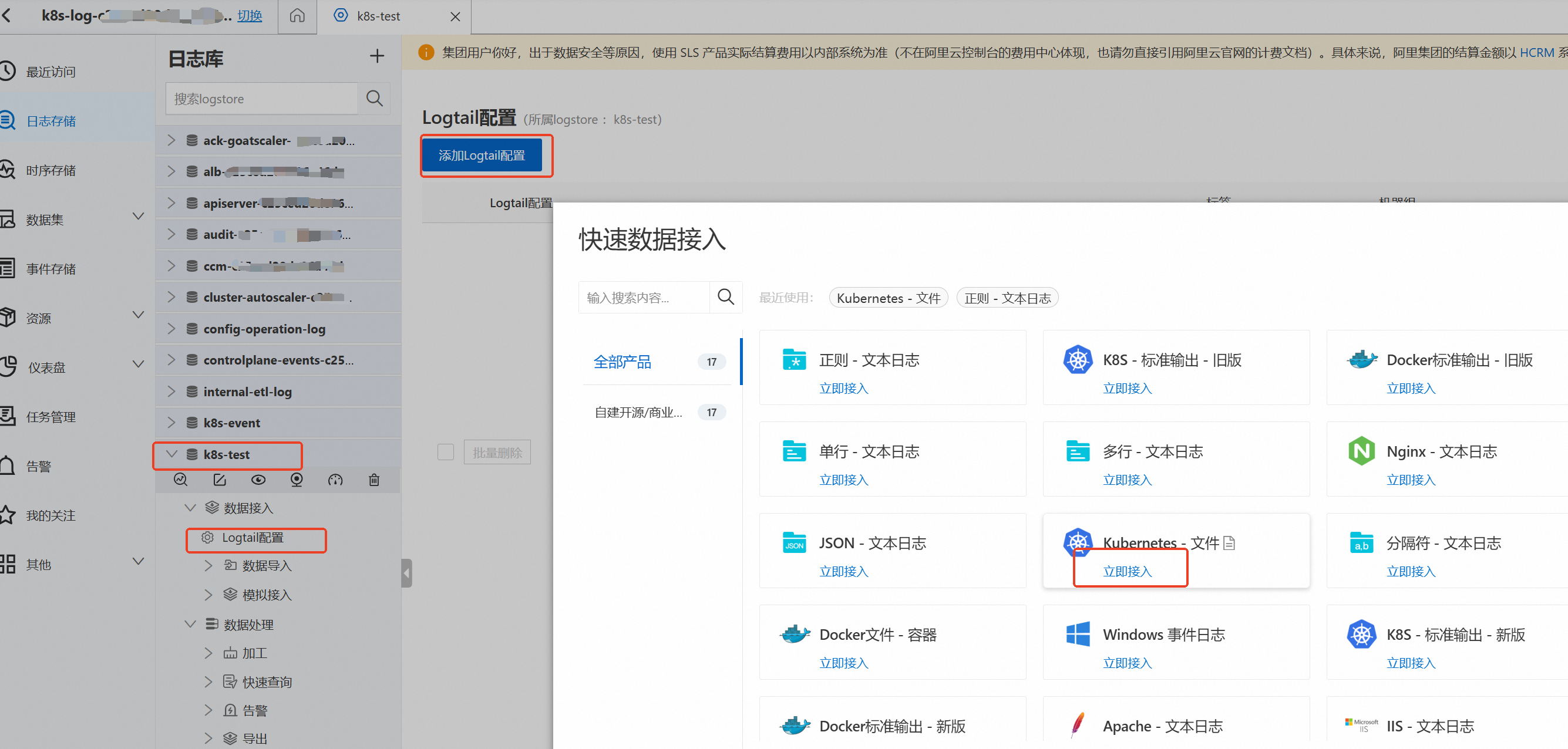

Step 2: Create a collection configuration

Step 3: Query and analyze logs

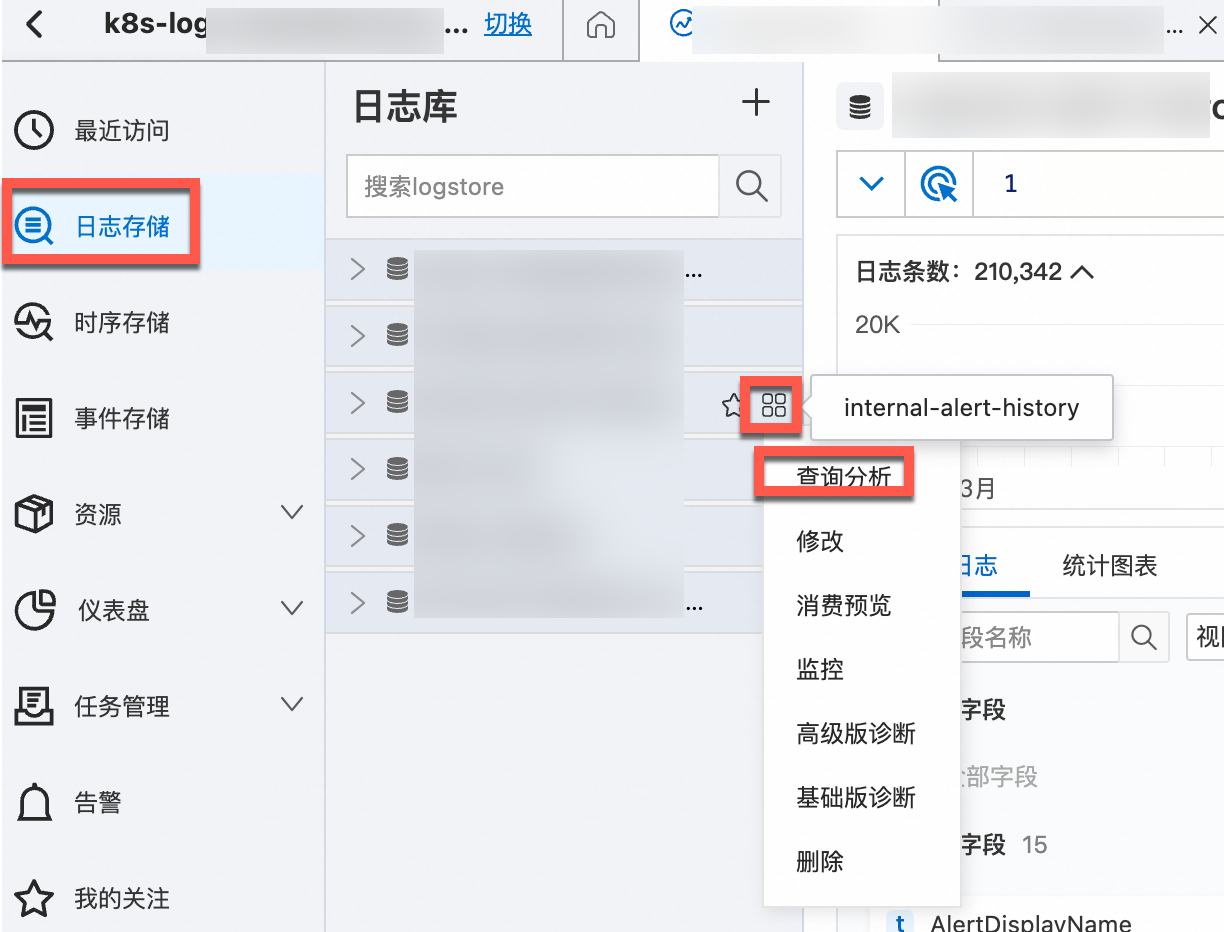

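Log on to the Simple Log Service console.

In the Project list, click the target Project to open its details page.

Click the icon to the right of the target Logstore, and then select Search & Analysis to view the logs output by the Kubernetes cluster.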

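Default log fields

Text logs

Each Kubernetes container text log contains the following fields by default.

Field name | Description |

__tag__:__hostname__ | Name of the host that runs the container. |

__tag__:__path__ | Path of the log file inside the container. |

__tag__:_container_ip_ | IP address of the container. |

__tag__:_image_name_ | Name of the image used by the container. Note: If several images share the same hash but differ in name or tag, the collection configuration picks one of the names based on the hash, and the chosen name is not guaranteed to match the one defined in the YAML file. |

__tag__:_pod_name_ | Name of the Pod. |

__tag__:_namespace_ | Namespace that the Pod belongs to. |

__tag__:_pod_uid_ | Unique identifier (UID) of the Pod. |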
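To illustrate how these tag fields can be used once logs are exported for offline processing, the following minimal Python sketch counts text log records per Pod within one namespace. It assumes each record is a flat dictionary keyed by the field names in the table above. That record layout, the sample values, and the helper name `logs_per_pod` are assumptions made for illustration and are not an SLS API.

```python
from collections import Counter

# Hypothetical records: one flat dict per log, keyed by the default tag fields.
logs = [
    {"__tag__:__hostname__": "node-a", "__tag__:__path__": "/var/log/app.log",
     "__tag__:_namespace_": "prod", "__tag__:_pod_name_": "web-0"},
    {"__tag__:__hostname__": "node-a", "__tag__:__path__": "/var/log/app.log",
     "__tag__:_namespace_": "prod", "__tag__:_pod_name_": "web-0"},
    {"__tag__:__hostname__": "node-b", "__tag__:__path__": "/var/log/app.log",
     "__tag__:_namespace_": "prod", "__tag__:_pod_name_": "web-1"},
    {"__tag__:__hostname__": "node-b", "__tag__:__path__": "/var/log/job.log",
     "__tag__:_namespace_": "staging", "__tag__:_pod_name_": "job-0"},
]


def logs_per_pod(records, namespace):
    """Count records per Pod name, restricted to a single namespace."""
    return Counter(
        r["__tag__:_pod_name_"]
        for r in records
        if r.get("__tag__:_namespace_") == namespace
    )


for pod, count in sorted(logs_per_pod(logs, "prod").items()):
    print(pod, count)
```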

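Standard output

Each log from a Kubernetes cluster is uploaded with the following default fields.

Field name | Description |

_time_ | Time when the log was collected. |

_source_ | Type of the log source: stdout or stderr. |

_image_name_ | Name of the image. |

_container_name_ | Name of the container. |

_pod_name_ | Name of the Pod. |

_namespace_ | Namespace that the Pod belongs to. |

_pod_uid_ | Unique identifier of the Pod. |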
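As a small example of working with these fields, the sketch below tallies how many standard output records of each container came from stderr, which can be a quick signal of a misbehaving container. It assumes records are flat dictionaries keyed by the field names above. The sample data and the helper name `stderr_counts` are hypothetical.

```python
from collections import defaultdict

# Hypothetical records keyed by the default standard output fields.
records = [
    {"_container_name_": "api", "_source_": "stdout", "_namespace_": "prod"},
    {"_container_name_": "api", "_source_": "stderr", "_namespace_": "prod"},
    {"_container_name_": "api", "_source_": "stderr", "_namespace_": "prod"},
    {"_container_name_": "worker", "_source_": "stdout", "_namespace_": "prod"},
]


def stderr_counts(logs):
    """Return {container: (stderr_count, total_count)} for the given records."""
    counts = defaultdict(lambda: [0, 0])
    for log in logs:
        entry = counts[log["_container_name_"]]
        entry[1] += 1  # every record counts toward the total
        if log["_source_"] == "stderr":
            entry[0] += 1  # only stderr records count here
    return {name: tuple(pair) for name, pair in counts.items()}


for name, (errors, total) in sorted(stderr_counts(records).items()):
    print(f"{name}: {errors} of {total} records from stderr")
```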

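Related documents

After logs are collected, you can use the query and analysis features of Simple Log Service to understand your logs. See Quick guide to query and analysis.

After logs are collected, you can use the visualization features of Simple Log Service to view log statistics at a glance. See Quickly create a dashboard.

After logs are collected, you can use the alerting features of Simple Log Service to be notified automatically of anomalies in your logs. See Quickly set up log alerts.

Simple Log Service collects only incremental logs. To collect historical log files, see Import historical log files.

Troubleshooting container log collection:

Check whether the console reports any errors. For the procedure, see How to view Logtail collection errors.

If the console reports no errors, check the machine group heartbeat, the Logtail collection configuration, and related settings. For the procedure, see How to troubleshoot container log collection errors.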

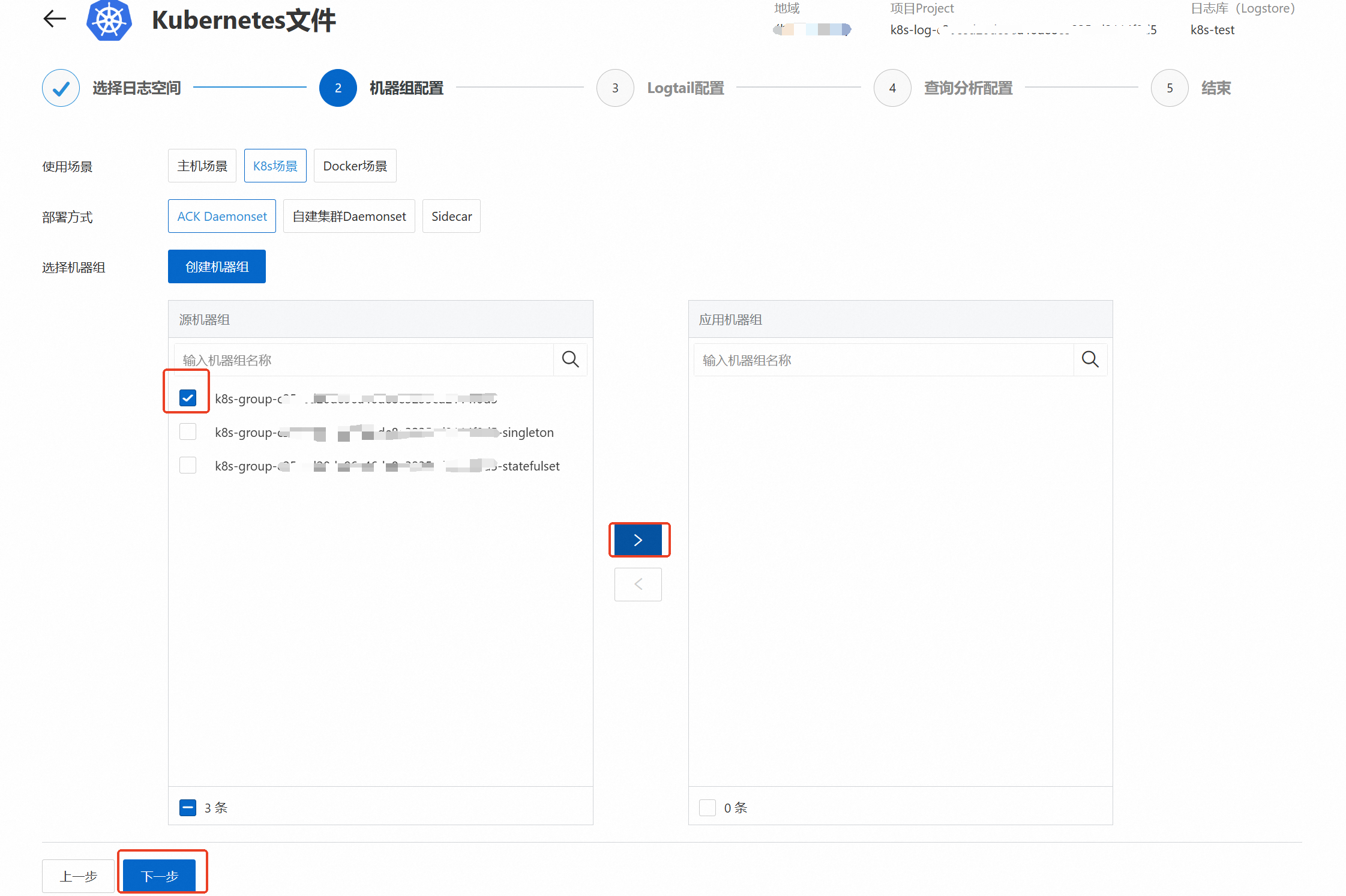

Add the machine group to the applied machine groups, and then click Next.