Container Service for Kubernetes (ACK) is deeply integrated with Simple Log Service (SLS). ACK provides log collection components to help you simplify container log collection and management. This topic describes how to install a log collection component, create a collection configuration, and query and analyze the collected logs. Automating these tasks helps you improve O&M efficiency and reduce operating costs.

Scenarios

Log collection components collect logs in the following modes:

DaemonSet mode: suitable for clusters in which logs are clearly classified or that serve a single function. This topic describes this mode.

Sidecar mode: suitable for large-scale and hybrid clusters. For more information, see Collect text logs from Kubernetes containers in Sidecar mode.

For differences between the two modes, see Collection method. For details about how SLS is billed, see Billing overview.

Table of contents

Procedure | References |

Step 1: Install a log collection component | Install one of the following log collection components: LoongCollector (recommended) or Logtail. |

Step 2: Create a collection configuration | Collect text logs or stdout. |

Step 3: Query and analyze logs | You can query and analyze logs in the SLS console. |

Step 1: Install a log collection component

Install LoongCollector (Recommended)

LoongCollector-based data collection: LoongCollector is a new-generation log collection agent that is provided by Simple Log Service. LoongCollector is an upgraded version of Logtail. LoongCollector is expected to integrate the capabilities of specific collection agents of Application Real-Time Monitoring Service (ARMS), such as Managed Service for Prometheus-based data collection and Extended Berkeley Packet Filter (eBPF) technology-based non-intrusive data collection.

Install the loongcollector component in an existing ACK cluster

Log on to the ACK console. In the left-side navigation pane, click Clusters.

On the Clusters page, click the cluster that you want to manage. In the left-side navigation pane, choose Operations > Add-ons.

On the Logs and Monitoring tab of the Add-ons page, find the loongcollector component and click Install.

Note: The LoongCollector component and the logtail-ds component cannot coexist. If the logtail-ds component is already installed on the cluster, see Upgrade from Logtail to LoongCollector (server scenario) for the upgrade solution.

After LoongCollector is installed, Simple Log Service automatically generates a project named k8s-log-${your_k8s_cluster_id} and resources in the project. You can log on to the Simple Log Service console to view the resources. The following table describes the resources.

Resource type | Resource name | Description | Example |

Machine group | k8s-group- | The machine group of loongcollector-ds, which is used in log collection scenarios. | k8s-group-my-cluster-123 |

Machine group | k8s-group- | The machine group of loongcollector-cluster, which is used in metric collection scenarios. | k8s-group-my-cluster-123-cluster |

Machine group | k8s-group- | The machine group of a single instance, which is used to create a LoongCollector configuration for the single instance. | k8s-group-my-cluster-123-singleton |

Logstore | config-operation-log | The logstore is used to collect and store loongcollector-operator logs. Important Do not delete the | config-operation-log |
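As a quick way to check which resources to look for in the Simple Log Service console, the following Python sketch derives the expected names from a cluster ID. It is only an illustration: the function name `expected_sls_resources` is hypothetical, and the machine group naming pattern (`k8s-group-` followed by the cluster ID and an optional suffix) is an assumption inferred from the Example column of the table, not a documented rule.

```python
def expected_sls_resources(cluster_id: str) -> dict:
    """Return the resource names that are expected after LoongCollector is installed.

    Assumption: machine group names follow the pattern shown in the Example
    column of the table (k8s-group-<cluster_id> plus an optional suffix).
    """
    group = f"k8s-group-{cluster_id}"
    return {
        # Project name pattern stated in this topic: k8s-log-${your_k8s_cluster_id}
        "project": f"k8s-log-{cluster_id}",
        "machine_groups": [
            group,                  # loongcollector-ds (log collection)
            f"{group}-cluster",     # loongcollector-cluster (metric collection)
            f"{group}-singleton",   # single instance
        ],
        "logstore": "config-operation-log",
    }


resources = expected_sls_resources("my-cluster-123")
print(resources["project"])
for name in resources["machine_groups"]:
    print(name)
```

Compare the printed names with the resources that are displayed in the console. If the names differ, trust the console.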

Install Logtail

Logtail-based data collection: Logtail is a log collection agent that is provided by Simple Log Service. You can use Logtail to collect logs from multiple data sources, such as Alibaba Cloud Elastic Compute Service (ECS) instances, servers in data centers, and servers from third-party cloud service providers. Logtail supports non-intrusive log collection based on log files. You do not need to modify your application code, and log collection does not affect the operation of your applications.

Install Logtail components in an existing ACK cluster

Log on to the ACK console. In the left navigation pane, click Clusters.

On the Clusters page, find the cluster that you want to manage and click its name. In the left navigation pane, click Add-ons.

On the Logs and Monitoring tab of the Add-ons page, find the logtail-ds component and click Install.

Install Logtail components when you create an ACK cluster

Log on to the ACK console. In the left navigation pane, click Clusters.

On the Clusters page, click Create Kubernetes Cluster. In the Component Configurations step of the wizard, select Enable Log Service.

This topic describes only the settings related to Simple Log Service. For more information about other settings, see Create an ACK managed cluster.

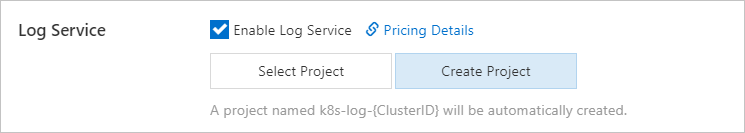

After you select Enable Log Service, the system prompts you to create a Simple Log Service project. You can use one of the following methods to create a project:

Select Project

You can select an existing project to manage the collected container logs.

Create Project

Simple Log Service automatically creates a project to manage the collected container logs.

ClusterID indicates the unique identifier of the created Kubernetes cluster.

In the Component Configurations step of the wizard, Enable is selected for the Control Plane Component Logs parameter by default. If Enable is selected, the system automatically configures collection settings and collects logs from the control plane components of a cluster, and you are charged for the collected logs based on the pay-as-you-go billing method. You can determine whether to select Enable based on your business requirements. For more information, see Collect logs of control plane components in ACK managed clusters.

After the Logtail components are installed, Simple Log Service automatically generates a project named k8s-log-<YOUR_CLUSTER_ID> and resources in the project. You can log on to the Simple Log Service console to view the resources.

Resource type | Resource name | Description | Example |

Machine group | k8s-group- | The machine group of logtail-daemonset, which is used in log collection scenarios. | k8s-group-my-cluster-123 |

Machine group | k8s-group- | The machine group of logtail-statefulset, which is used in metric collection scenarios. | k8s-group-my-cluster-123-statefulset |

Machine group | k8s-group- | The machine group of a single instance, which is used to create a Logtail configuration for the single instance. | k8s-group-my-cluster-123-singleton |

Logstore | config-operation-log | The logstore is used to store logs of the alibaba-log-controller component. We recommend that you do not create a Logtail configuration for the logstore. You can delete the logstore. After the logstore is deleted, the system no longer collects the operational logs of the alibaba-log-controller component. You are charged for the logstore in the same manner as you are charged for regular logstores. For more information, see Billable items for the pay-by-ingested-data mode. | None |

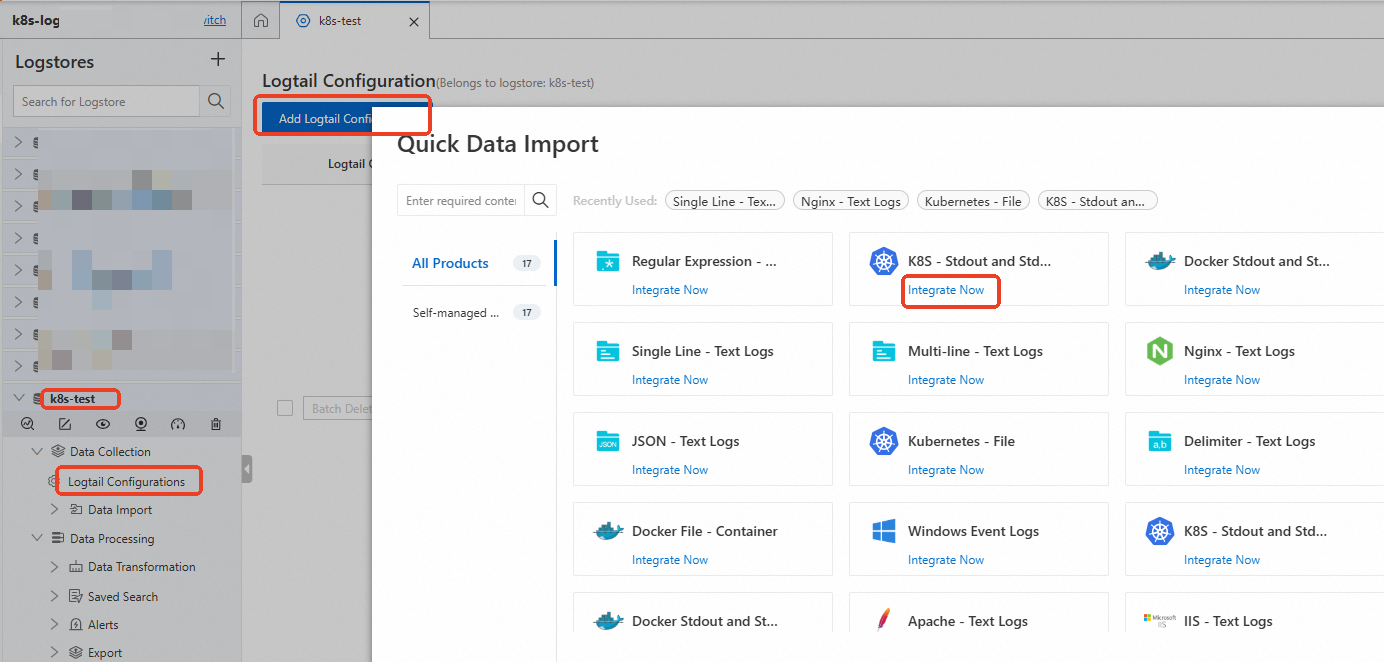

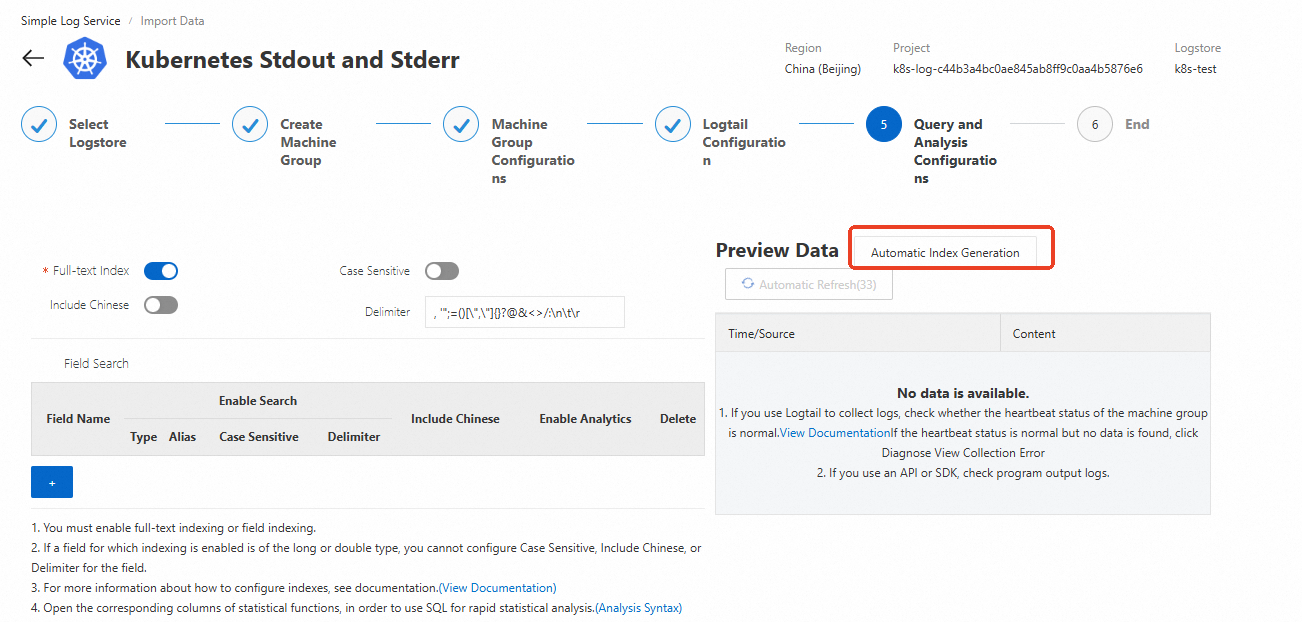

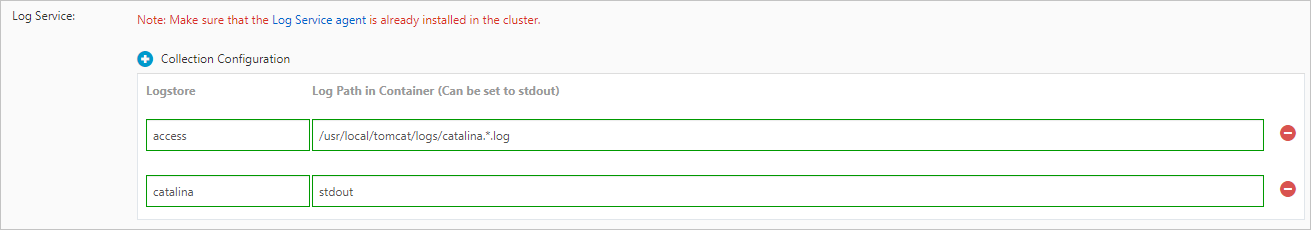

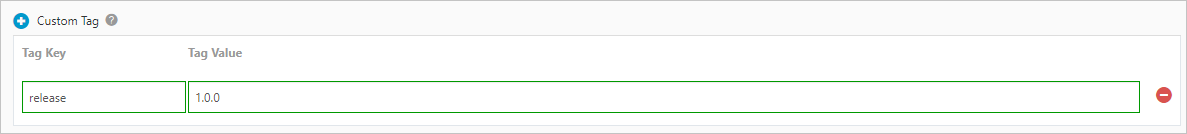

Step 2: Create a collection configuration

Step 3: Query and analyze logs

Log on to the Simple Log Service console.

In the Projects section, click the project that you want to manage to go to its details page.

In the left-side navigation pane, click the icon of the logstore that you want to manage. In the drop-down list, select Search & Analysis to view the logs that are collected from your Kubernetes cluster.

Default log fields

Text logs

The following table describes the default fields that are included in each container text log in Kubernetes.

Field | Description |

__tag__:__hostname__ | The name of the container host. |

__tag__:__path__ | The log file path in the container. |

__tag__:_container_ip_ | The IP address of the container. |

__tag__:_image_name_ | The name of the image used by the container. Note: If multiple images have the same hash but different names or tags, the collection configuration selects one of the names based on the hash. The selected name is not guaranteed to match the name defined in the YAML file. |

__tag__:_pod_name_ | The name of the pod. |

__tag__:_namespace_ | The namespace to which the pod belongs. |

__tag__:_pod_uid_ | The unique identifier (UID) of the pod. |
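To illustrate how these tag fields can be used once logs are exported or fetched from a logstore, the following minimal Python sketch groups text log records by namespace and pod. The sample records, the `content` field, and all values are hypothetical. Only the `__tag__:` field names are taken from the table above.

```python
from collections import defaultdict

# Hypothetical sample records. Only the __tag__ field names come from the table.
records = [
    {"__tag__:_namespace_": "default", "__tag__:_pod_name_": "web-0",
     "__tag__:__path__": "/var/log/app.log", "content": "service started"},
    {"__tag__:_namespace_": "default", "__tag__:_pod_name_": "web-0",
     "__tag__:__path__": "/var/log/app.log", "content": "request handled"},
    {"__tag__:_namespace_": "kube-system", "__tag__:_pod_name_": "dns-1",
     "__tag__:__path__": "/var/log/dns.log", "content": "cache refreshed"},
]


def group_by_pod(logs):
    """Group log records by (namespace, pod name)."""
    grouped = defaultdict(list)
    for log in logs:
        key = (log.get("__tag__:_namespace_"), log.get("__tag__:_pod_name_"))
        grouped[key].append(log)
    return dict(grouped)


by_pod = group_by_pod(records)
for (namespace, pod), items in sorted(by_pod.items()):
    print(f"{namespace}/{pod}: {len(items)} log(s)")
```

Grouping on both the namespace and the pod name avoids mixing pods that have the same name in different namespaces.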

stdout

The following table describes the fields that are uploaded by default with each stdout log in a Kubernetes cluster.

Field | Description |

_time_ | The time when the log was collected. |

_source_ | The type of the log source. Valid values: stdout and stderr. |

_image_name_ | The name of the image. |

_container_name_ | The name of the container. |

_pod_name_ | The name of the pod. |

_namespace_ | The namespace of the pod. |

_pod_uid_ | The unique identifier of the pod. |
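Because `_source_` distinguishes stdout from stderr, a common first step is to count stderr lines per container. The following Python sketch shows one way to do this on records that have already been fetched. The sample records, the `content` field, and the function name `count_stderr_by_container` are hypothetical. Only the underscore-delimited field names come from the table above.

```python
from collections import Counter

# Hypothetical sample records. Only the field names come from the table.
records = [
    {"_source_": "stdout", "_namespace_": "default", "_pod_name_": "web-0",
     "_container_name_": "nginx", "content": "GET /index.html 200"},
    {"_source_": "stderr", "_namespace_": "default", "_pod_name_": "web-0",
     "_container_name_": "nginx", "content": "upstream timed out"},
    {"_source_": "stderr", "_namespace_": "default", "_pod_name_": "job-7",
     "_container_name_": "worker", "content": "retrying task"},
    {"_source_": "stderr", "_namespace_": "default", "_pod_name_": "job-7",
     "_container_name_": "worker", "content": "task failed"},
]


def count_stderr_by_container(logs):
    """Count stderr records per (namespace, pod name, container name)."""
    counts = Counter()
    for log in logs:
        if log.get("_source_") == "stderr":
            key = (log.get("_namespace_"), log.get("_pod_name_"),
                   log.get("_container_name_"))
            counts[key] += 1
    return counts


stderr_counts = count_stderr_by_container(records)
for (namespace, pod, container), count in stderr_counts.most_common():
    print(f"{namespace}/{pod}/{container}: {count} stderr line(s)")
```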

References

After you collect logs, you can use the query and analysis feature of Simple Log Service to understand the logs. For more information, see Query and analyze logs.

After you collect logs, you can use the visualization feature of Simple Log Service to visually analyze and understand the logs. For more information, see Create a dashboard.

After you collect logs, you can use the alerting feature of Simple Log Service to automatically receive notifications about exceptions in the logs. For more information, see Configure alert rules.

By default, Simple Log Service only collects incremental logs. To collect historical logs, see Import historical logs from log files.

Troubleshoot collection errors:

Check whether error messages are displayed in the console. For more information, see View Logtail collection errors.

If no error messages are displayed in the console, check the heartbeat of the machine group and the Logtail configuration. For more information, see Troubleshoot errors when collecting logs from containers.