This article introduces the technical challenges brought by cloud technology to traditional enterprises, and explains in depth the best practices of cloud architecture.

Cloud computing has received much attention since its birth as an innovative application model in the field of information technology. Thanks to features such as low costs, elasticity, ease of use, high reliability, and service on demand, cloud computing has been regarded as the core of next-generation information technological reform. Cloud computing has been actively embraced by many businesses, and is transforming industries such as the Internet, gaming, Internet of Things, and other emerging industries. Most enterprise users, however, are often restricted by traditional IT technical architecture and lack the motivation as well as the technical expertise for migration to the cloud.

Typically, the most important element in an enterprise is a database management system that can meet the needs for real-time transactions and analytics. Traditional stand-alone databases use a "scale-up" approach, which usually only supports storage and processing of several terabytes of data, far from being sufficient to satisfy actual needs.

The online transaction processing (OLTP) cluster-based system is gradually becoming the default approach for achieving higher performance and larger data storage capacity. As shown in Figure 1, common enterprise database clusters such as Oracle RAC usually use the Share-Everything (Share-Disk) mode. Database servers share resources such as disks and caches.

When the performance fails to meet requirements, database servers (usually minicomputers) have to be upgraded in terms of CPU, memory, and disk in order to improve the service performance of single-node databases. In addition, the number of database server nodes can be increased to improve performance and overall system availability by implementing parallel multiple nodes and server load balancing. However, when the number of database server nodes increases, the communication between nodes becomes a bottleneck, and the data access control of each node will be subject to the consistency requirements for transaction processing. Actual case studies show that an RAC with more than four nodes is very rare.

In addition, according to the popular reading of Moore's Law, processor performance doubles roughly every 18 months, while DRAM performance doubles only about once every 10 years, creating a widening gap between processor and memory performance. Although processor performance is rising rapidly, disk storage performance has improved slowly because of the physical limitations of disks. Factors such as mechanical rotational speed and the seek time of the disk arm limit the IOPS of hard disks. Hard disk performance has barely improved over the past 10 years; HDD rotation speed has been stuck between 7,200 and 15,000 RPM. HDD-based disk array storage is increasingly becoming a performance bottleneck for centralized storage architecture. All-flash arrays, on the other hand, are limited by high costs and a short rewrite service life, which keeps them far from meeting the requirements of large-scale commercial applications.
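To make the size of that gap concrete, the short calculation below compounds the two doubling periods quoted above over a 10-year window. It takes the 18-month and 10-year figures at face value as the article's own numbers, not as measured data; the function name `growth_factor` is only illustrative.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Factor by which a quantity grows if it doubles every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


YEARS = 10
cpu = growth_factor(YEARS, 1.5)    # processor: doubles every 18 months
dram = growth_factor(YEARS, 10.0)  # DRAM: doubles every 10 years
gap = cpu / dram                   # how far the processor pulls ahead of memory

print(f"After {YEARS} years: CPU x{cpu:.0f}, DRAM x{dram:.0f}, gap x{gap:.0f}")
```

Under these assumptions the processor improves by about two orders of magnitude while DRAM merely doubles, which is why memory and storage, rather than compute, tend to become the bottleneck in a scale-up design.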

Therefore, IoE's centralized storage (Share-Everything) approach is costly and has restrictions in performance, capacity and scalability. The high concurrency and big data processing requirements brought about by the Internet, the rapid development of x86 and open source database technology, and the increasing maturity of NoSQL, Hadoop and other distributed system technologies drive the evolution from the centralized scale-up system architecture to the distributed scale-out architecture.

Gartner's IT experts placed Web-scale IT as one of the top 10 IT trends in 2015 that would have a significant impact on the industry over the next three years. It is predicted that more and more companies will adopt Web-scale IT by building apps and architecture similar to those of Amazon, Google, and Facebook. This will enable Web-scale IT to become a commercial hardware platform, introducing new development models, cloud optimization methods, and software-defined methods to existing infrastructure. Development-operation collaboration models such as DevOps are the first step towards the development of Web-scale IT. Despite the potential benefits of Web-scale IT, traditional IT systems still face technical constraints in the following areas.

■ Performance

User experience is an important factor influencing conversion rate. According to statistics, if a website fails to be loaded within four seconds, around 60% of customers will be lost. Poor user experience will cause customers to abandon the service or purchase services from competitors. Therefore, it is vital to work out a solution that improves user experience by ensuring low-latency responses for highly concurrent access scenarios.

■ Scalability

The access behavior of Internet and mobile Internet users is dynamic. Traffic at hot spots may surge to more than 10 times the average. It is vital to work out a solution that can respond quickly to the resource requirements of bursty traffic and still provide a consistent user experience.

■ Fault tolerance and maximum availability

The Internet is deployed on a distributed computing architecture, and is based on a large number of x86 servers and universal network devices. Even though these devices are designed to be reliable, the probability of malfunction is high due to the sheer number of devices. In addition to mechanical faults, there will also be bugs in software development.

How should we automate the handling when hardware malfunctions?

How should we perform systematic damage control?

How should we limit traffic to the server and client based on standalone server QPS and concurrency to achieve dynamic traffic allocation?

How can we identify chain-dependent risks between services and important functional point dependencies of the system?

How can we assess the maximum possible risk points, detect the maximum availability faults of a distributed system, isolate faulty modules, and implement rollback for unfinished transactions?

How do we ensure that core functionality stays available by sacrificing non-critical features through graceful degradation?
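Two of the questions above, limiting traffic based on per-server QPS and degrading gracefully, can be illustrated together. The following is a minimal sketch, assuming a token-bucket limiter in front of a single server; the names `TokenBucket` and `handle` and the response strings are hypothetical and not taken from any particular product.

```python
import time


class TokenBucket:
    """Allow about `rate` requests per second, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)  # start with a full bucket
        self.clock = clock             # injectable so the limiter can be tested
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def handle(request_id: int, limiter: TokenBucket) -> str:
    """Serve the full response while under the limit, otherwise a degraded one."""
    if limiter.allow():
        return f"full:{request_id}"
    # Graceful degradation: keep the core path alive with a cheaper response
    # (for example cached or simplified content) instead of failing outright.
    return f"degraded:{request_id}"


if __name__ == "__main__":
    limiter = TokenBucket(rate=2, capacity=2)
    print([handle(i, limiter) for i in range(4)])
```

The same idea extends to concurrency limits (count in-flight requests instead of tokens) and to client-side limiting, where the client backs off when it receives the degraded response.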

■ Capacity management

As the business expands, system performance will inevitably reach a bottleneck. How can we assess and expand capacity more scientifically, and automate the calculation that maps front-end requests to the number of back-end servers so that we can predict hardware and software capacity requirements?
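The mapping from front-end requests to back-end servers can be sketched as a simple calculation. The example below is an illustration under stated assumptions: the per-server throughput of 500 QPS, the 30% headroom, and the 2,000 QPS average are made-up figures, and `servers_needed` is a hypothetical helper. Only the tenfold burst multiplier comes from the scalability discussion in this article.

```python
import math


def servers_needed(peak_qps: float, qps_per_server: float, headroom: float = 0.3) -> int:
    """Back-end servers required to serve `peak_qps` while keeping `headroom` spare."""
    if not 0 <= headroom < 1:
        raise ValueError("headroom must be in [0, 1)")
    usable_qps = qps_per_server * (1 - headroom)  # capacity we are willing to use
    return math.ceil(peak_qps / usable_qps)


AVERAGE_QPS = 2_000
BURST_MULTIPLIER = 10  # hot-spot traffic at ten times the average

print("average load:", servers_needed(AVERAGE_QPS, 500), "servers")
print("burst load:  ", servers_needed(AVERAGE_QPS * BURST_MULTIPLIER, 500), "servers")
```

In practice the per-server figure would come from load testing rather than a constant, and the result would feed an auto-scaling policy instead of a print statement.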

■ Service-orientation

How can we abstract business logic functionality into atomic services to encapsulate and assemble services, and deploy services in a distributed system environment, increasing business flexibility? How can we clarify the relationships between these services from a business perspective? How can we trace and present single service call chains in a large-scale distributed system to discover service call exceptions in a timely manner?
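Tracing a single call chain usually relies on propagating one trace identifier through every service a request touches. The sketch below shows the idea in-process with a hypothetical `Tracer`; the service names are invented for illustration, and a real system would carry the IDs in request headers and ship spans to a collector.

```python
import uuid
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Span:
    trace_id: str             # shared by every call in one request
    span_id: str              # unique to this call
    parent_id: Optional[str]  # the caller's span, None for the entry point
    service: str


@dataclass
class Tracer:
    spans: List[Span] = field(default_factory=list)

    def start(self, service: str, parent: Optional[Span] = None) -> Span:
        # A child keeps its parent's trace ID so the whole chain can be regrouped.
        trace_id = parent.trace_id if parent else uuid.uuid4().hex
        parent_id = parent.span_id if parent else None
        span = Span(trace_id, uuid.uuid4().hex[:8], parent_id, service)
        self.spans.append(span)
        return span

    def chain(self, trace_id: str) -> List[str]:
        """Service names recorded for one trace, in call order."""
        return [s.service for s in self.spans if s.trace_id == trace_id]


tracer = Tracer()
root = tracer.start("gateway")
order = tracer.start("order-service", parent=root)
tracer.start("inventory-service", parent=order)
tracer.start("payment-service", parent=order)
print(tracer.chain(root.trace_id))
```

Because each span records its parent, the flat list can also be rebuilt into a tree, which is what lets an operator see which downstream call caused an exception.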

■ Cost

As the evolutionary performance metrics of the system continue to change, how can we ensure that the specific access traffic requirements can be met at minimum cost?

■ Automated O&M management

Evolving large-scale systems require constant maintenance, rapid iteration, and optimization. How can we deal with thousands or even tens of thousands of servers for O&M? How can we use automation tools and processes to manage large-scale hardware and software clusters and deploy, upgrade, expand, and maintain systems quickly?
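One common way to upgrade a large fleet safely is a rolling upgrade: change a small batch of servers, check their health, and continue only if the batch is healthy. The following is a minimal sketch of that loop; `rolling_upgrade`, the host names, and the `upgrade` and `healthy` callbacks are placeholders for whatever deployment tooling is actually in use.

```python
from typing import Callable, List, Tuple


def rolling_upgrade(
    hosts: List[str],
    batch_size: int,
    upgrade: Callable[[str], None],
    healthy: Callable[[str], bool],
) -> Tuple[List[str], List[str]]:
    """Upgrade hosts batch by batch and stop at the first unhealthy batch.

    Returns (hosts upgraded successfully, the batch that failed or []).
    """
    done: List[str] = []
    for start in range(0, len(hosts), batch_size):
        batch = hosts[start:start + batch_size]
        for host in batch:
            upgrade(host)
        if not all(healthy(host) for host in batch):
            # Halt here: the remaining hosts stay on the old version.
            return done, batch
        done.extend(batch)
    return done, []


if __name__ == "__main__":
    fleet = [f"web-{i:02d}" for i in range(1, 6)]
    upgraded, failed = rolling_upgrade(
        fleet,
        batch_size=2,
        upgrade=lambda host: print("upgrading", host),
        healthy=lambda host: host != "web-03",  # simulate one bad host
    )
    print("upgraded:", upgraded, "failed batch:", failed)
```

Stopping at the first failed batch limits the damage of a bad release to `batch_size` servers, which is the property that makes the approach workable at the scale of thousands of machines.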

After analyzing the traditional IDC application architecture, we found that many pain points are caused by the system architecture itself. To solve these pain points, we decided to migrate to the cloud, and we started to think about how cloud services could address each of the pain points we had encountered, for example:

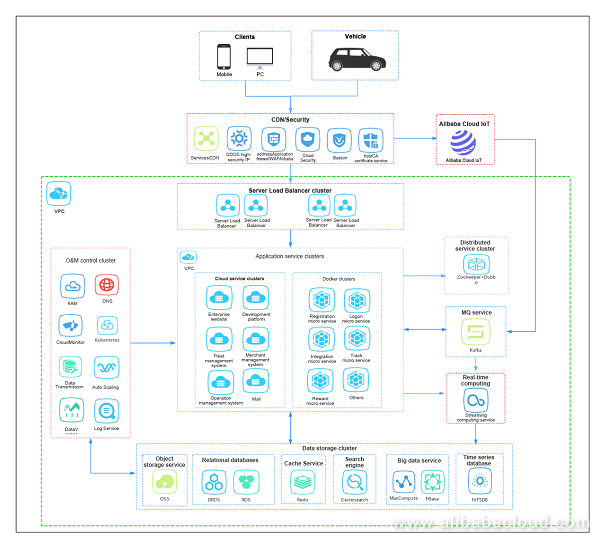

Our new application architecture on the cloud is compatible with some features of the previous application architecture. We also use new cloud technologies and services to solve our pain points and bottlenecks. In addition, the new architecture satisfies our business development plan for the next two to three years and supports an application system at the ten-million-user level. The following figure shows our application architecture on the cloud:

This article explains how to know when to migrate from a single-cloud architecture to a multi-cloud architecture.

A multi-cloud computing architecture offers several advantages: more reliability, better opportunities for optimizing costs, and an ability to address region-specific compliance concerns, to name just a few benefits.

But a multi-cloud architecture is also more expensive and difficult to maintain. For that reason, you may be hesitant to make the switch to a multi-cloud strategy without having a good reason to do so.

This article explains how to know when it makes sense to migrate from a single-cloud architecture to a multi-cloud architecture. It discusses the financial, technical and geographical considerations that make multi-cloud strategies worth the extra cost and management.

The following are the main reasons why it might make sense for an organization to adopt a multi-cloud computing architecture.

If your workloads are all relatively consistent in size and type (if you can run all of them on a basic computing instance, for example), then keeping them on a single cloud is probably the best choice from a management perspective.

Yet if you find yourself having to run many different types of workloads, a multi-cloud strategy could be a better fit, for two reasons. First, it can help you save money by running each type of workload on the cloud that is most cost-efficient. Second, you’ll be able to choose from a wider array of services, which can help you to choose the best solutions from a technical perspective.

For example, perhaps you want to use the cloud to run virtual machines, containers and Big Data analytics. These are all distinct types of workloads. One cloud provider might offer better pricing for container hosting than another, yet the situation is reversed when it comes to Big Data solutions. By having multiple clouds at your disposal, you can optimize the cost and performance of each type of workload.
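The cost argument can be sketched as a small placement calculation: for each workload type, pick whichever provider is cheapest. The provider names and hourly prices below are invented purely for illustration and do not reflect any real price list.

```python
from typing import Dict

# Hypothetical hourly prices per workload type; not real provider pricing.
PRICES: Dict[str, Dict[str, float]] = {
    "cloud_a": {"vm": 0.10, "container": 0.04, "analytics": 0.30},
    "cloud_b": {"vm": 0.09, "container": 0.06, "analytics": 0.22},
}


def cheapest_placement(prices: Dict[str, Dict[str, float]]) -> Dict[str, str]:
    """Map each workload type to the provider offering it at the lowest price."""
    workloads = {w for offers in prices.values() for w in offers}
    placement = {}
    for workload in sorted(workloads):
        candidates = [p for p in prices if workload in prices[p]]
        placement[workload] = min(candidates, key=lambda p: prices[p][workload])
    return placement


print(cheapest_placement(PRICES))
```

With these made-up numbers, containers land on one provider and analytics on the other, which is exactly the reversed-pricing situation described above. A real decision would also weigh data transfer fees and the operational cost of running two platforms.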

Even the best-maintained cloud servers sometimes fail. It’s a fact of life.

It’s also a fact of life that one of the best strategies for maintaining uptime is spreading out your services across as wide an array of host infrastructure as possible.

Therefore, by using multiple clouds, you help to ensure that an infrastructure failure from a cyberattack that impacts one provider (such as the Dyn outage that shut down a number of major websites in 2016) will not make all of your services unavailable. They’ll still be accessible from another host if one provider is impacted.

Of course, this functionality requires you to run the same types of workloads in multiple clouds, so if availability is part of your reason for adopting a multi-cloud architecture, you should distribute your workloads accordingly.
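When the same workload runs in more than one cloud, a client or gateway can try each deployment in turn. The sketch below assumes a list of hypothetical endpoints (the `example.com` URLs are placeholders) and takes the actual network call as a parameter, so the failover logic can be shown without depending on any particular HTTP library.

```python
from typing import Callable, List, Tuple


def fetch_with_failover(
    endpoints: List[Tuple[str, str]],
    fetch: Callable[[str], str],
) -> Tuple[str, str]:
    """Try each (provider, url) in order and return the first successful response."""
    errors = {}
    for provider, url in endpoints:
        try:
            return provider, fetch(url)
        except Exception as exc:  # any failure moves us on to the next provider
            errors[provider] = exc
    raise RuntimeError(f"all providers failed: {sorted(errors)}")


# Placeholder endpoints for the same service deployed in two clouds.
ENDPOINTS = [
    ("cloud_a", "https://cloud-a.example.com/health"),
    ("cloud_b", "https://cloud-b.example.com/health"),
]


def simulated_fetch(url: str) -> str:
    """Stand-in for a real HTTP call: pretend the first provider is down."""
    if "cloud-a" in url:
        raise ConnectionError("provider unreachable")
    return "ok"


print(fetch_with_failover(ENDPOINTS, simulated_fetch))
```

In production this decision is usually made by DNS or a global load balancer rather than by each client, but the ordering and fall-through logic is the same.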

Although all of the major public cloud providers offer hosting infrastructure in most parts of the world, some have a more extensive presence in certain regions than others. If you need to deliver content quickly to certain regions, or address legal challenges related to operating in specific regions, it can make sense to adopt multiple clouds so that you have infrastructure based in each region where you need to operate.

For example, AWS’ availability in China is limited to two regions (Beijing and Ningxia). (AWS has plans to launch a third region in Hong Kong soon.) Google Cloud has no presence in China. In contrast, Alibaba Cloud has seven deployment regions in China (on top of regions in other parts of the world). If you want to reach the China market, Alibaba Cloud can be simpler, because it doesn’t require you to work with a subsidiary (which is the case with a provider like Azure), and you don’t have to worry about slow content delivery due to host infrastructure that is not based locally.

(If you’d like to give Alibaba Cloud a try, you can take advantage of the organization’s current offer of $300 in free credits.)

How should we build the architecture of a big data platform? This article studies the case of OpSmart Technology to elaborate on the business and data architecture of the IoT for enterprises.

How should we build the architecture of a big data platform? Are there any good use cases for this architecture? This article studies the case of OpSmart Technology to elaborate on the business and data architecture of the Internet of Things for enterprises, as well as considerations during the technology selection process.

Based on the "Internet + big data + airport" model, OpSmart Technology provides on-the-go wireless network connectivity to 640 million users every year. As the business expanded, OpSmart Technology faced the challenge of ever-increasing amounts of data. To cope with this, OpSmart Technology took the lead in building an industry-leading big data platform in 2016 with Alibaba Cloud products.

Below are some tips shared by OpSmart Technology's big data platform architect:

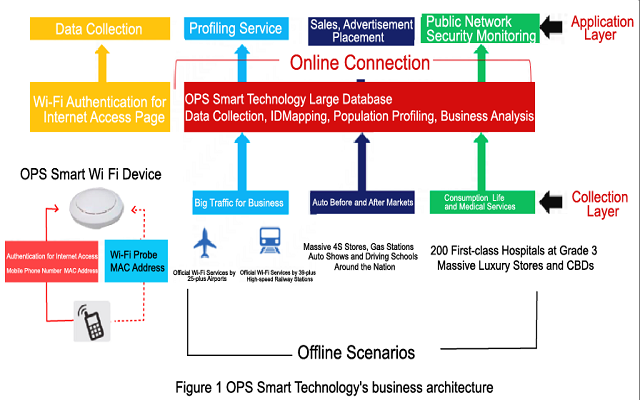

OpSmart Technology's business architecture is shown in the figure above. Our primary business model is to use our own devices to explore value in the data, and then apply the data to our business.

On the collection layer, we founded the first official Wi-Fi brand for airports in China, "Airport-Free-WiFi", covering 25 hub airports and 39 hub high-speed rail stations nationwide and providing wireless network services on-the-go to 640 million people each year. We also have the nation's largest Wi-Fi network for driving schools and our driving school Wi-Fi network is expected to cover 1,500-plus driving schools by the end of 2017. We are also the Wi-Fi provider of China's four major auto shows (Beijing, Shanghai, Guangzhou, and Chengdu) to serve more than 1.2 million people. In addition, we are also running the Wi-Fi network for 2,000-plus gas stations and 600-plus automobile 4S (sales, spare parts, service, survey) stores across the country.

On the data application layer, we connected online and offline behavioral data for user profiling to provide more efficient and precise advertisement targeting including SSP, DSP, DMP and RTB. We also worked with the Ministry of Public Security to eliminate public network security threats.

OpSmart Technology's big data and advertising platforms also offer technical capabilities for enterprises to help them establish their own big data platforms and improve their operation management efficiency with a wealth of quantitative data.

Through this course, you will learn the core services of Alibaba Cloud Elastic Architecture (Auto Scaling, CDN, VPC, ApsaraDB for Redis and Memcache). By studying some classic use cases, you can understand how to build an elastic architecture in Alibaba Cloud.

Through this course, you will learn the core services of Alibaba Cloud Analysis Architecture (E-MapReduce, MaxCompute, Table Store). By studying some classic use cases, you can understand how to build an analysis architecture in Alibaba Cloud.

This course helps you understand the components and services in Alibaba Cloud that will help you build a hybrid cloud solution. The course also introduces four hybrid cloud reference architectures.

The objective of this course is to introduce the core services of Alibaba Cloud Fundamental Architecture (ECS, SLB, OSS and RDS) and to show you some classic use cases.

The objective of this course is to introduce the core services of Alibaba Cloud Analysis Architecture (E-MapReduce, MaxCompute, Table Store) and to show you some classic use cases.

With the development of cloud computing, a variety of network virtualization techniques have been developed to meet the increasing demands for virtual networks with higher scalability, security, reliability, privacy, and connectivity.

Earlier solutions combined the virtual network with the physical network to form a flat network, for example, the large layer-2 network. However, as virtual networks grow in scale, problems such as ARP spoofing, broadcast storms, and host scanning become more serious. To resolve these problems, various network isolation techniques have been developed to completely isolate the physical network from the virtual network. One of these techniques isolates users with VLANs. However, the VLAN ID is a 12-bit field, so at most 4,096 VLANs can exist, which is insufficient for the large number of users in the public cloud.

Based on the tunneling technique, VPCs isolate virtual networks. Each VPC has a unique tunnel ID, and each tunnel ID corresponds to only one VPC. A tunnel encapsulation carrying a unique tunnel ID is added to each data packet transmitted over the physical network between ECS instances in a VPC. In different VPCs, ECS instances with different tunnel IDs are located on two different routing planes. Therefore, these ECS instances cannot communicate with each other.
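The isolation rule described here can be modeled in a few lines. The sketch below is a simplified illustration, not Alibaba Cloud's implementation: `Instance`, `Encapsulated`, `encapsulate`, and `deliver` are hypothetical names, and the tunnel IDs and addresses are arbitrary. It shows that a packet tagged with one VPC's tunnel ID is never delivered to an instance in another VPC, even when both VPCs reuse the same private IP address.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Instance:
    name: str
    ip: str
    tunnel_id: int  # ID of the VPC this instance belongs to


@dataclass(frozen=True)
class Encapsulated:
    tunnel_id: int  # added by the sender's host before the packet hits the wire
    dst_ip: str
    payload: bytes


def encapsulate(sender: Instance, dst_ip: str, payload: bytes) -> Encapsulated:
    """Wrap a packet with the tunnel ID of the sender's VPC."""
    return Encapsulated(sender.tunnel_id, dst_ip, payload)


def deliver(packet: Encapsulated, receiver: Instance) -> Optional[bytes]:
    """Hand over the payload only if the receiver is in the same VPC and is addressed."""
    if packet.tunnel_id != receiver.tunnel_id or packet.dst_ip != receiver.ip:
        return None  # different routing plane, or not the destination: drop
    return packet.payload


# Two VPCs that happen to reuse the same private address 10.0.0.2.
web_a = Instance("web-a", "10.0.0.1", tunnel_id=1001)
db_a = Instance("db-a", "10.0.0.2", tunnel_id=1001)
db_b = Instance("db-b", "10.0.0.2", tunnel_id=2002)

packet = encapsulate(web_a, "10.0.0.2", b"SELECT 1")
print(deliver(packet, db_a))  # same VPC: payload is delivered
print(deliver(packet, db_b))  # other VPC: dropped
```

Because the tunnel ID rather than the IP address decides reachability, tenants can choose overlapping private address ranges without interfering with each other.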

Based on the tunneling and Software Defined Network (SDN) techniques, Alibaba Cloud has developed VPCs that are integrated with gateways and VSwitches.

Cloud Security Scanner consists of the following three modules:

Data middle platform: This module stores asset information from across the Internet, including worldwide IP affiliations, worldwide domains, WHOIS data, Internet Secure Sockets Layer (SSL) certificates, Internet port fingerprints, Internet Web fingerprints, Internet Content Provider (ICP) filing information, and employee password databases.

Asset analysis: This module resolves and associates domains, and analyzes and recognizes port and Web fingerprints of assets based on Alibaba Cloud big data resources.

Scan task: This module is based on the elastic and scalable scan worker clusters deployed on Alibaba Cloud. In this module, you can configure scan tasks, schedule and dispatch tasks, detect vulnerabilities including sensitive content, drive-by downloads, and website defacement by using the vulnerability database and rule components, and output detection results.
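The scan task flow (configure tasks, dispatch them to workers, match content against rules, output results) can be sketched as follows. This is an illustrative toy, not the product's behavior: the two regular-expression rules, the round-robin dispatch, and the names `scan` and `dispatch` are all assumptions, and real vulnerability rules are far richer.

```python
import re
from collections import deque
from typing import Dict, List, Tuple

# Hypothetical rule components standing in for a real vulnerability database.
RULES = {
    "sensitive_content": re.compile(r"password\s*=", re.IGNORECASE),
    "defacement": re.compile(r"hacked by", re.IGNORECASE),
}


def scan(page_text: str) -> List[str]:
    """Return the names of all rules that match the page content."""
    return sorted(name for name, pattern in RULES.items() if pattern.search(page_text))


def dispatch(tasks: List[Tuple[str, str]], worker_count: int) -> Dict[str, List[str]]:
    """Spread (url, page_text) tasks round-robin over workers and collect findings."""
    queues = [deque() for _ in range(worker_count)]
    for index, task in enumerate(tasks):
        queues[index % worker_count].append(task)
    results = {}
    for queue in queues:  # each queue stands for one scan worker
        for url, page_text in queue:
            results[url] = scan(page_text)
    return results


TASKS = [
    ("https://a.example.com", "<h1>Hacked by someone</h1>"),
    ("https://b.example.com", "<p>Welcome</p>"),
    ("https://c.example.com", "db PASSWORD = hunter2"),
]
print(dispatch(TASKS, worker_count=2))
```

Here the workers run one after another in a single process; an elastic worker cluster would run the queues in parallel and scale `worker_count` with the backlog.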
