By Shanlie, an Alibaba Cloud Intelligence Technology Expert with 13 years of experience in the IT industry and a deep understanding of internet cloud-native architecture and large-scale distributed technologies. Shanlie has extensive hands-on experience helping Alibaba Cloud customers complete comprehensive cloud-native transformations of their system architectures.

Microservices are essential in large distributed IT architectures. Microservice architecture is a style that splits a large system into multiple applications with independent lifecycles. These applications communicate with each other through lightweight mechanisms, are built around specific business capabilities, and can be deployed, iterated, and horizontally scaled independently according to their service loads. The microservice concept and related technologies have brought a series of profound changes to IT architecture:

As the saying goes, "There is no such thing as a free lunch." Microservice technology makes IT systems more agile, robust, and performant, but it also increases architectural complexity. To manage a microservice architecture well, developers must handle challenges such as continuous integration, service discovery, inter-application communication, configuration management, and traffic protection. Fortunately, a series of excellent open-source components and tools have emerged to address these common challenges and make it easier to build microservice applications. After several years of development, frameworks such as SpringCloud and Dubbo have become de facto standards in the microservice field and significantly lower the barrier to adopting microservices. However, these frameworks still cannot solve the two biggest challenges developers face.

A perfect solution for lifecycle management and service governance is urgently needed. In a frequently iterated system, every application releases new versions often, which requires centralized management of processes such as launch, deprecation, upgrade, and rollback. In addition, fine-grained gray release is needed to reduce the impact of version iteration on the business.

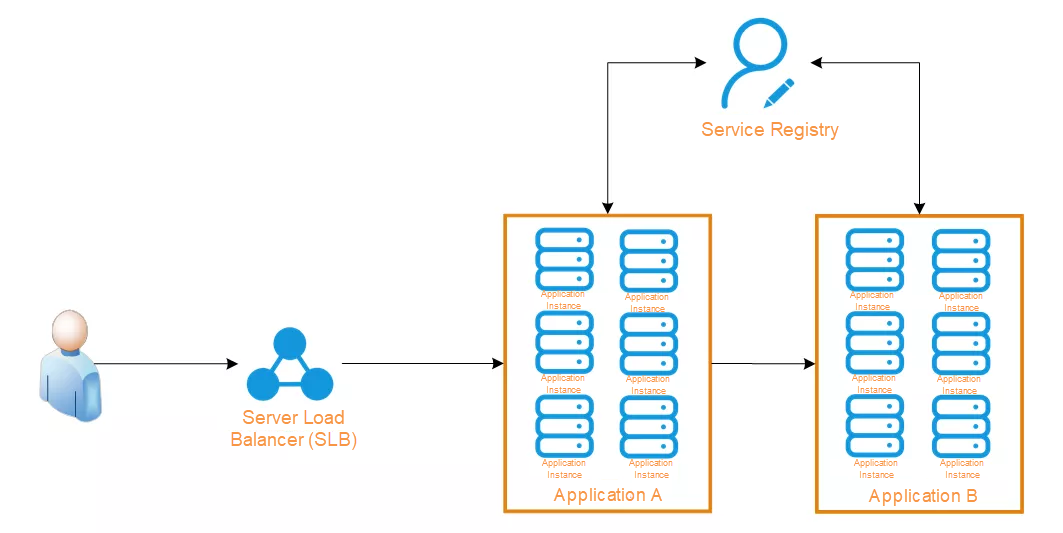

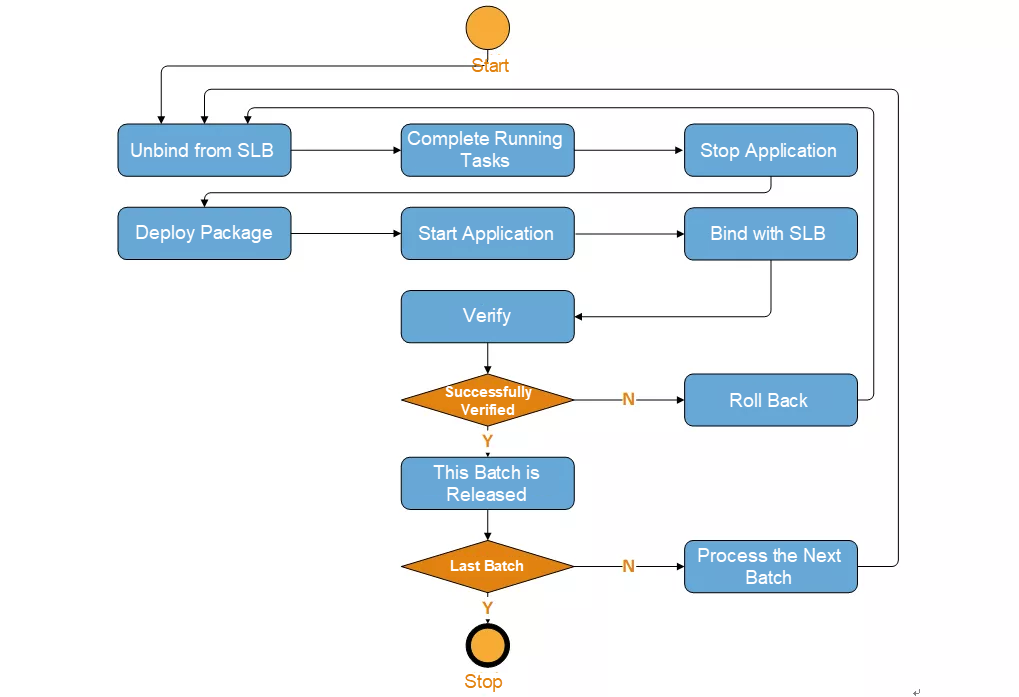

In a simple microservice architecture, if an application sits at the entry point of the entire request path, a Server Load Balancer (SLB) is usually placed in front of it to receive business requests from end users. Lifecycle management of this type of application is more complex. The following steps are required to keep applications balanced and stable while new versions are released:

The process above does not even involve advanced gray release for fine-grained traffic control, yet its complexity and operational difficulty are already relatively high. Relying only on release scripts makes management very inefficient and can easily threaten system stability.
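To make the manual effort concrete, here is a minimal, hypothetical sketch of a script-driven release for instances behind a load balancer. It assumes a generic drain, redeploy, health-check, and re-register sequence performed one instance at a time; `Instance`, `LoadBalancer`, and `release` are illustrative names, not an SLB or Alibaba Cloud API, and the deployment and health check are only simulated.

```python
from dataclasses import dataclass, field


@dataclass
class Instance:
    """Hypothetical application instance; `healthy` simulates a health-check result."""
    name: str
    version: str
    healthy: bool = True


@dataclass
class LoadBalancer:
    """Hypothetical stand-in for the SLB backend server list."""
    backends: set = field(default_factory=set)

    def remove(self, inst: Instance) -> None:
        self.backends.discard(inst.name)

    def add(self, inst: Instance) -> None:
        self.backends.add(inst.name)


def release(lb: LoadBalancer, instances: list, new_version: str) -> list:
    """Upgrade instances one at a time so the rest keep serving traffic."""
    log = []
    for inst in instances:
        lb.remove(inst)                      # drain: stop routing new requests here
        log.append(f"drained {inst.name}")
        inst.version = new_version           # stop old process, deploy new package (simulated)
        log.append(f"deployed {new_version} to {inst.name}")
        if not inst.healthy:                 # health check before re-registering
            # A real script would roll back here; this sketch just halts the release.
            log.append(f"health check failed on {inst.name}; release halted")
            break
        lb.add(inst)                         # put the instance back behind the LB
        log.append(f"re-registered {inst.name}")
    return log


if __name__ == "__main__":
    fleet = [Instance("app-1", "v1"), Instance("app-2", "v1")]
    slb = LoadBalancer({"app-1", "app-2"})
    for line in release(slb, fleet, "v2"):
        print(line)
```

Even this toy version has to get ordering right (drain before stopping, check health before re-registering), and it still has no gray release or rollback logic.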

A perfect solution for horizontal scaling is urgently needed. When application performance hits a bottleneck, more instances and new computing resources must be added. The question is, "Where can we obtain new computing resources?"

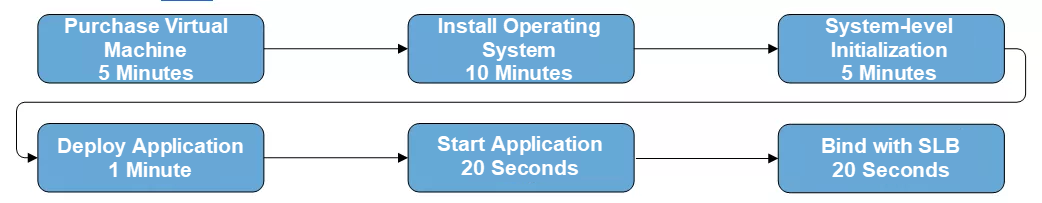

In an on-premises Internet Data Center (IDC), computing resources are allocated in advance, and scaling out is difficult due to various constraints. This problem no longer exists in the era of cloud computing, where adding computing resources for an application is much easier. However, the new computing resources still have to be prepared for the application, and the application still has to be deployed into the microservice system.

As the process above shows, scaling out a single application instance takes at least 20 minutes, most of which is spent on purchasing resources, system-level initialization, and application deployment. Moreover, this approach does not work when system traffic surges and an emergency scale-out must be completed within two minutes.

Developers have made various attempts to solve these two challenges, and new concepts and technical frameworks have emerged one after another over the past five years. Container technologies such as Docker, backed by Kubernetes, have become mainstream in the industry and are an essential element in building cloud-native applications. They allow developers to tap deeper into the value of cloud computing and help address the two problems.

Kubernetes provides a relatively comprehensive mechanism for application lifecycle management and service governance. By defining Deployment resources and combining them with preStop and postStart hooks, it supports rolling updates and graceful startup and shutdown of applications. Although fine-grained traffic control during gray release is still not directly available, this is a significant improvement over the simple script method. Introducing service mesh technology can enhance traffic control, but that is beyond the scope of this article.
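As a minimal sketch of what such a Deployment might look like, the function below builds one as a Python dict and prints it as JSON, which `kubectl apply -f` accepts. The field names (`strategy.rollingUpdate`, `readinessProbe`, `lifecycle.postStart`, `lifecycle.preStop`) are standard `apps/v1` fields; the application name, image, port, `/health` path, and hook commands are hypothetical placeholders.

```python
import json


def rolling_deployment(name: str, image: str, replicas: int = 4) -> dict:
    """Build an apps/v1 Deployment that rolls out one new Pod at a time."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "strategy": {
                "type": "RollingUpdate",
                # Start one extra Pod before removing an old one; never run below `replicas`.
                "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "terminationGracePeriodSeconds": 30,
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": 8080}],
                        # Traffic is routed to the Pod only after this probe succeeds.
                        "readinessProbe": {"httpGet": {"path": "/health", "port": 8080}},
                        "lifecycle": {
                            # Hypothetical hook run right after the container starts.
                            "postStart": {"exec": {"command": ["sh", "-c", "echo started > /tmp/started"]}},
                            # Delay SIGTERM so the Pod leaves Service endpoints before shutting down.
                            "preStop": {"exec": {"command": ["sh", "-c", "sleep 10"]}},
                        },
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = rolling_deployment("order-service", "registry.example.com/order-service:1.1.0")
    print(json.dumps(manifest, indent=2))
```

Setting `maxUnavailable` to 0 trades rollout speed for capacity: serving capacity never drops during the update, at the cost of one extra Pod's resources while it runs.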

In terms of horizontal scaling, container technologies reduce the time spent on system installation and system-level initialization. However, purchasing virtual machines (VMs) is still unavoidable, so rapid horizontal scaling during traffic surges remains out of reach.

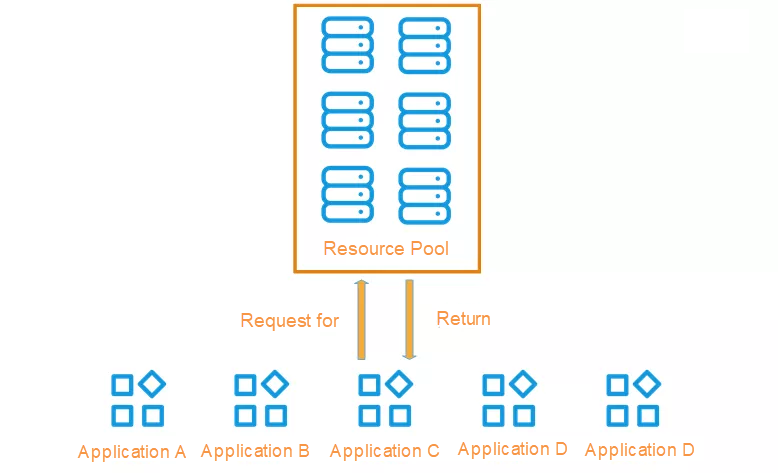

One option is to reserve a portion of computing resources in a resource pool. When an application needs to be scaled out, it requests resources from the pool; when the business load drops, it returns the unused computing resources.

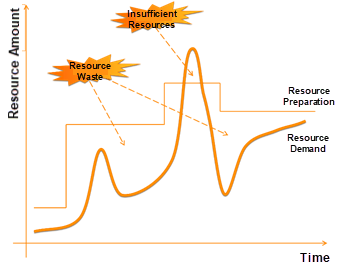

This solution is not perfect, because every computing resource has a cost. Although a resource pool makes computing resources available quickly, it causes huge waste. Sizing the pool is also difficult: the larger the pool, the greater the waste, while a smaller pool may not meet scaling needs.
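The sizing dilemma can be made concrete with a small simulation. This is a sketch under simplifying assumptions: demand is measured in whole instances per hour, the demand and baseline figures are made up, and reserved pool instances are paid for whether or not they are used.

```python
def evaluate_pool(demand: list[int], baseline: int, pool_size: int) -> tuple[int, int]:
    """Return (idle instance-hours, unmet instance-hours) for a reserved pool.

    Each hour, demand above the baseline is served from the pool. Reserved
    instances that go unused are still paid for (idle); demand the pool
    cannot cover is unmet.
    """
    idle = unmet = 0
    for d in demand:
        extra = max(0, d - baseline)
        used = min(extra, pool_size)
        idle += pool_size - used
        unmet += extra - used
    return idle, unmet


if __name__ == "__main__":
    hourly_demand = [4, 4, 6, 10, 6, 4]  # hypothetical instances needed per hour
    for size in (0, 2, 4, 6):
        idle, unmet = evaluate_pool(hourly_demand, baseline=4, pool_size=size)
        print(f"pool={size}: idle={idle} instance-hours, unmet={unmet} instance-hours")
```

With this made-up demand, a pool of 2 leaves 4 instance-hours of demand unserved, while a pool of 6 covers every peak but pays for 26 idle instance-hours: no fixed pool size avoids both problems.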

Some developers may think scaling is a pseudo-demand: their business runs very stably, with no obvious surges in user traffic, and they expect no such demand in the future. This assumption misunderstands internet businesses, which are never free of scaling demands.

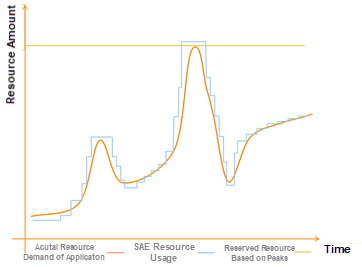

First of all, as long as a system serves people, there will be traffic peaks and troughs. A system that runs 24/7 cannot keep user traffic constant. As the Pareto Principle predicts, for many business systems, 80% of user traffic arrives in just 20% of the day. Even systems with relatively balanced traffic see low traffic in the early morning hours. If idle computing resources can be released during these troughs, resource utilization improves and resource costs can be reduced significantly.
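The 80/20 pattern translates directly into utilization numbers. The arithmetic below assumes traffic is spread evenly within the busy 20% of the day (about 4.8 hours) and within the remaining 80%, and that capacity is fixed at the level needed for the peak. Under those assumptions the peak rate is 0.8 / 0.2 = 4 times the daily average, so capacity sized for the peak averages only 25% utilization, and during off-peak hours it sits at 6.25%.

```python
def pareto_load(traffic_share: float = 0.8, time_share: float = 0.2) -> dict:
    """Load ratios when `traffic_share` of daily traffic arrives in `time_share` of the day."""
    avg = 1.0  # normalize the daily average request rate to 1
    peak = traffic_share / time_share * avg
    off_peak = (1 - traffic_share) / (1 - time_share) * avg
    return {
        "peak_vs_avg": peak,
        "off_peak_vs_avg": off_peak,
        # Fixed capacity must cover the peak, so utilization is load / peak.
        "avg_utilization_if_sized_for_peak": avg / peak,
        "off_peak_utilization_if_sized_for_peak": off_peak / peak,
    }


if __name__ == "__main__":
    for key, value in pareto_load().items():
        print(f"{key}: {value:.4f}")
```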

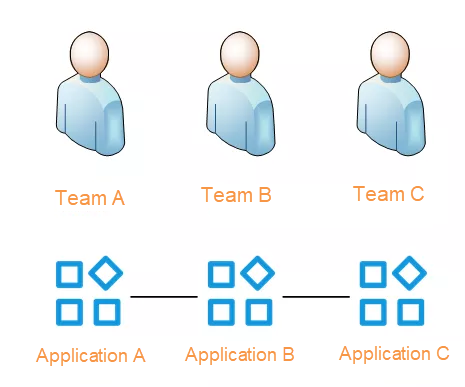

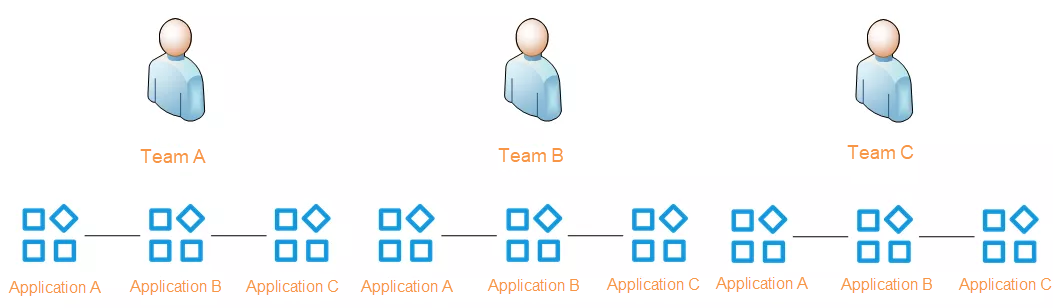

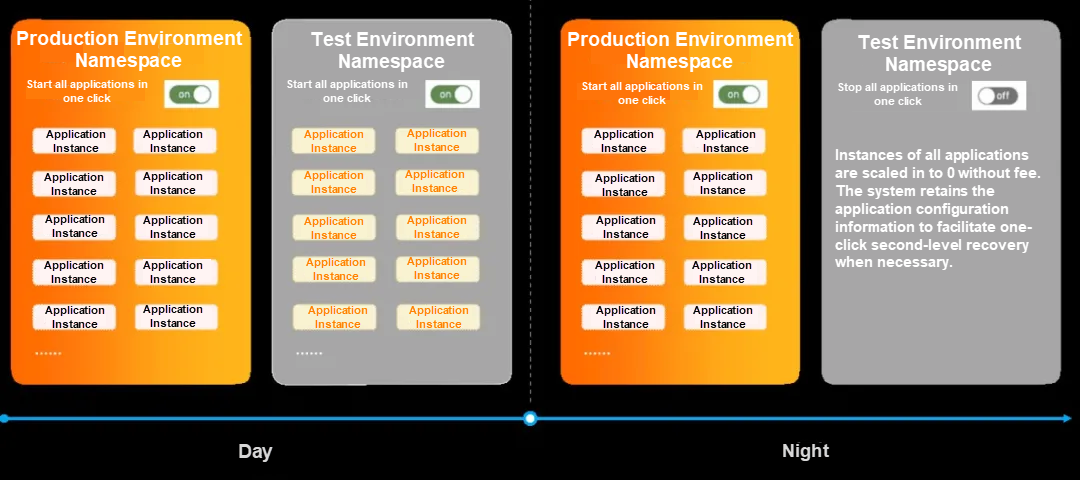

In addition, development and test environments have even more urgent scaling demands than the production environment. A set of microservice applications is developed by different teams, and ideally, all teams share one test environment:

However, because each team iterates its applications at its own pace, each team prefers an independent end-to-end test environment that isolates it from other teams. As a result, multiple test environments are likely to be created:

As applications, teams, and business functions increase, the number of required development and test environments multiplies, resulting in huge resource waste. The utilization of computing resources in test environments is much lower than in production. For example, verifying even a simple feature may require a whole new set of microservice applications, because the team wants to test the feature in isolation without affecting others. This waste has troubled many enterprises for years.

Therefore, the microservice architecture has a strong demand for elastic scaling, and during elastic scaling, resource utilization plays a decisive role in the final resource cost. This holds both for horizontal scaling of a single application and for starting and stopping an entire environment: the higher the resource utilization, the lower the resource costs for enterprises. However, the resource utilization of most microservice applications is very low. To see this, export the CPU utilization of all servers every five minutes and calculate the daily average, which gives a general picture of resource utilization. If the servers in development and test environments are included, the rate is likely to be even lower.
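The five-minute sampling check can be sketched in a few lines. This assumes the exported data has already been grouped into one list of CPU utilization percentages per server for a single day (24 × 60 / 5 = 288 samples each); how the data is exported depends on your monitoring tool and is not shown.

```python
from statistics import mean

SAMPLES_PER_DAY = 24 * 60 // 5  # one sample every five minutes


def daily_average_utilization(samples_by_server: dict[str, list[float]]) -> float:
    """Average CPU utilization (%) across all servers over one day."""
    if not samples_by_server:
        raise ValueError("no servers given")
    for server, samples in samples_by_server.items():
        if len(samples) != SAMPLES_PER_DAY:
            raise ValueError(f"{server}: expected {SAMPLES_PER_DAY} samples, got {len(samples)}")
    # Every server has the same number of samples, so the mean of per-server
    # means equals the mean over all samples.
    return mean(mean(samples) for samples in samples_by_server.values())


if __name__ == "__main__":
    # Hypothetical fleet: one busier server and one mostly idle test server.
    fleet = {
        "prod-1": [15.0] * 200 + [60.0] * 88,
        "test-1": [3.0] * SAMPLES_PER_DAY,
    }
    print(f"daily average CPU utilization: {daily_average_utilization(fleet):.1f}%")
```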

The root cause of low resource utilization lies in how server-based applications are deployed. Developers deploy packaged applications on servers, where each program stays running to respond to many user events. To ensure prompt responses and avoid service crashes under heavy load, programs are kept on the servers for long periods with conservative resource planning. Because the load varies over time, overall resource utilization ends up low.

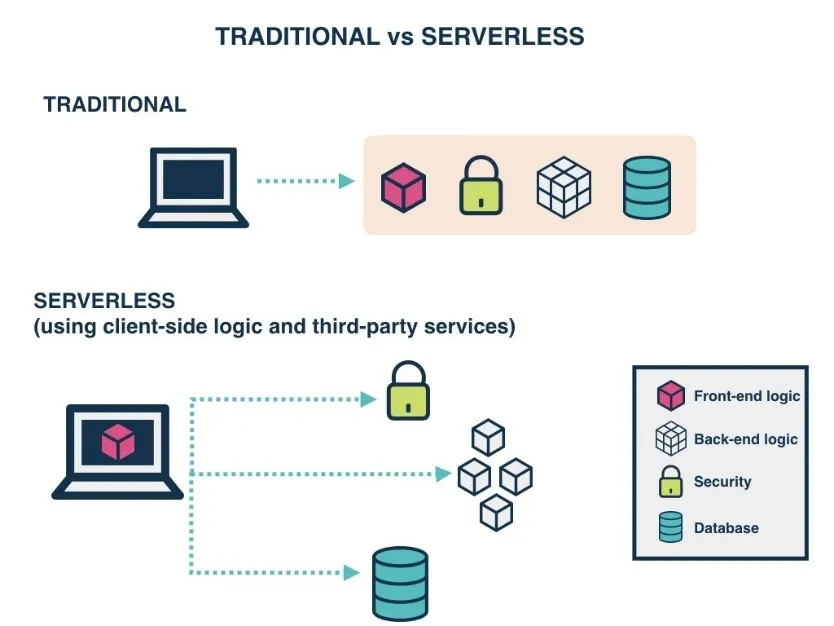

Serverless technology offers a new way to improve resource utilization. Serverless is a complete approach to building and managing microservice-based architectures that lets developers deploy applications directly without considering server resources. Unlike traditional architectures, serverless applications are managed by third-party services, triggered by events, and run in stateless compute containers. Building serverless applications lets developers focus on product code instead of managing and operating server resources, so they can truly deploy applications without building infrastructure.

Serverless technology takes many forms. The most typical is Function as a Service (FaaS), such as Alibaba Cloud Function Compute (FC). In Function Compute, all computing resources are requested and scheduled when specific business events are triggered, and they are released immediately once the task for the event is completed. This approach allocates computing resources on demand and significantly improves resource utilization; it is the ultimate form of Serverless technology.
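A deliberately simplified model shows why per-event allocation improves utilization. It assumes one instance handles events one after another and that on-demand resources are billed for exactly the time events run, with no cold starts and no minimum billing granularity; this is an illustration, not how Function Compute actually meters usage.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def compare_billing(busy_durations_s: list[float]) -> dict:
    """Compare billed time for an always-on instance vs. per-event allocation."""
    busy = sum(busy_durations_s)
    if busy > SECONDS_PER_DAY:
        raise ValueError("more work than one instance can do in a day")
    return {
        "always_on_billed_s": SECONDS_PER_DAY,   # the server runs all day regardless
        "on_demand_billed_s": busy,              # resources exist only while events run
        "always_on_utilization": busy / SECONDS_PER_DAY,
    }


if __name__ == "__main__":
    # Hypothetical workload: 100 events per day, each taking 2 seconds.
    result = compare_billing([2] * 100)
    print(result)
    print(f"always-on utilization: {result['always_on_utilization']:.2%}")
```

For this made-up workload, the always-on server is billed for 86,400 seconds to do 200 seconds of work, a utilization of about 0.23%.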

Another form is Serverless container technology. Serverless container instances run in isolated environments, where each computing node is fully isolated by lightweight sandbox virtualization technology. Users can deploy containerized applications without purchasing servers, maintaining nodes, or planning cluster capacity, and they pay for the CPU and memory configured for their applications on a pay-as-you-go basis. When a microservice application needs to be scaled out, computing resources can be obtained without purchasing another server. This helps developers cut computing costs, reduce idle resources, and cope with traffic peaks. Alibaba Cloud Serverless Kubernetes (ASK) is a typical product of Serverless container technology.

Serverless technology matches the development direction of cloud computing and cloud-native application architecture. However, for microservice application developers, FaaS and Serverless Kubernetes have limitations.

Not every business can be built on FaaS. Businesses with long call chains and many upstream and downstream dependencies, in particular, cannot be transformed to FaaS. Even when the FaaS transformation of a business system is feasible, it requires effort, and the system cannot be migrated seamlessly.

The Serverless Kubernetes architecture can adapt to all business scenarios. However, developers must master a series of complex Kubernetes concepts to build a complete Kubernetes system, which can be very difficult. Considerable work is also needed to deploy the various components of the Kubernetes ecosystem and adapt them to the network and storage layers.

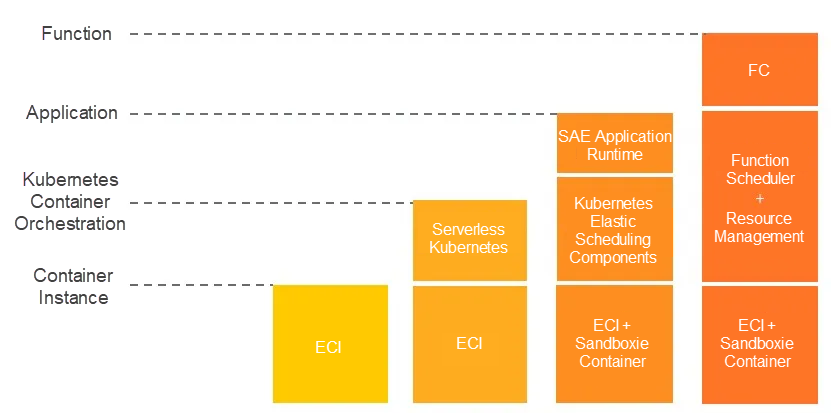

The reason for these limitations is simple. In microservice technologies represented by SpringCloud, everything, whether version updates or horizontal scaling, revolves around applications or individual services. The core of the Serverless Kubernetes architecture is the Pod, which sits closer to the bottom layer of the system, so users must spend more effort managing the underlying resources of their applications. The core of the FaaS architecture is the function, which sits at the upper layer of the system; it is less flexible and therefore cannot adapt to all business scenarios.

For developers who build microservice applications on the mainstream SpringCloud or Dubbo systems, any solution for reducing resource costs must meet two requirements:

Is there a technology between FaaS and Serverless containers that meets all of the requirements above? The answer is application-layer Serverless technology, represented by Alibaba Cloud Serverless App Engine (SAE).

Figure: Serverless Technologies at Different Levels

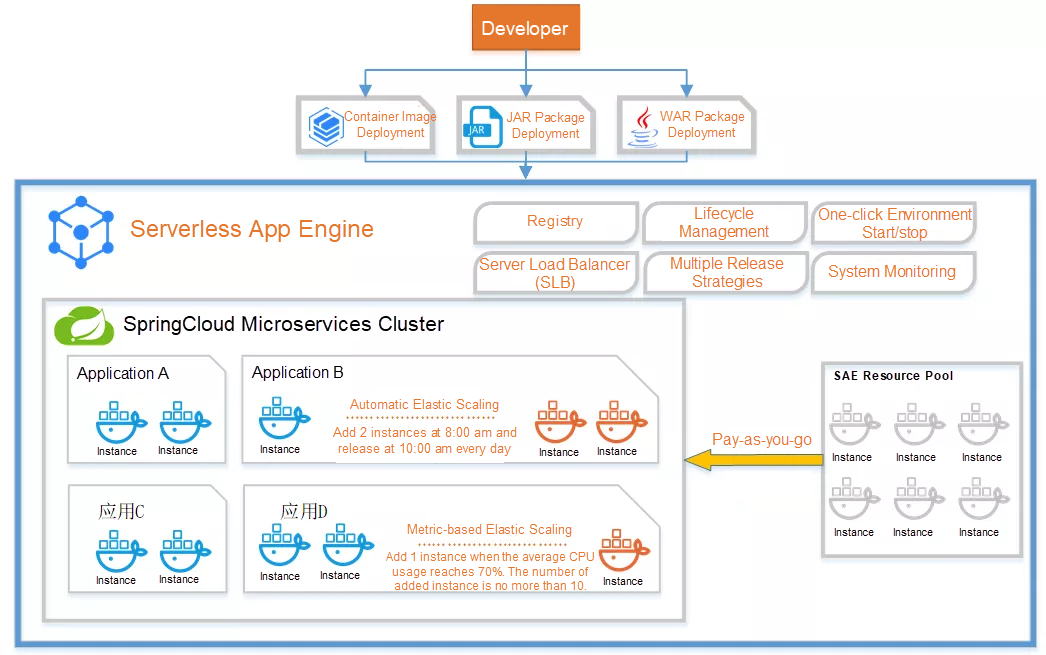

SAE integrates Serverless architecture with microservice architecture. It is seamlessly compatible with mainstream microservice frameworks such as SpringCloud and Dubbo, so adopting it involves almost no transformation cost. Computing resources are used on demand and charged on a pay-as-you-go basis, which reduces idle resources and eliminates O&M work at the IaaS layer, effectively improving development and O&M efficiency.

Take SpringCloud as an example. Only two steps are needed to deploy a new application:

The steps above involve no capacity evaluation, server purchasing, operating system installation, or resource initialization, yet a microservice application with multiple peer instances is up and running. In Serverless, the concept of server resources no longer exists: the application runs in sandboxed containers scheduled by SAE, and each instance is charged only for the time it is in use.

Developers do not need to know whether their applications run on physical machines, virtual machines, or containers, or which version of the underlying operating system is used. They only need to pay attention to the computing resources each application instance occupies. A single command scales an application out from four instances to six or scales it in by two instances, and the bindings to SLB are added or removed automatically. All these features bring great value to developers.
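To show what that single command takes off the developer's hands, here is a hypothetical model of an application whose instances sit behind an SLB. `ElasticApp` and `scale_to` are illustrative names, not the SAE API or CLI; the point is the ordering the platform handles automatically: bind a new instance to the load balancer only after it is up, and unbind an instance before releasing it.

```python
class ElasticApp:
    """Hypothetical model of an application whose instances sit behind an SLB."""

    def __init__(self, name: str, replicas: int):
        self.name = name
        self._next_id = 0
        self.instances: list[str] = []
        self.lb_backends: set[str] = set()
        self.scale_to(replicas)

    def _launch(self) -> str:
        # Stand-in for obtaining resources and starting a new instance.
        inst = f"{self.name}-{self._next_id}"
        self._next_id += 1
        return inst

    def scale_to(self, replicas: int) -> None:
        """Scale out or in to exactly `replicas` instances."""
        if replicas < 0:
            raise ValueError("replicas must be >= 0")
        while len(self.instances) < replicas:
            inst = self._launch()
            self.instances.append(inst)
            self.lb_backends.add(inst)       # bind only after the instance is up
        while len(self.instances) > replicas:
            inst = self.instances.pop()
            self.lb_backends.discard(inst)   # unbind before the instance is released


if __name__ == "__main__":
    app = ElasticApp("order-service", 4)
    app.scale_to(6)   # scale out from four to six instances
    print(sorted(app.lb_backends))
    app.scale_to(4)   # scale in by two instances
    print(sorted(app.lb_backends))
```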

Using SAE to deploy microservice applications can ensure 100% compatibility with the existing technical architecture and business functions. SAE only changes the carrier in which the application runs, and the migration cost is negligible.

In addition to manual scaling commands, SAE supports two automatic elasticity mechanisms to flexibly and horizontally scale microservice applications and further utilize the elasticity of cloud computing.

These two elasticity mechanisms enable fine-grained management of system capacity and adjust resource usage in step with changing business traffic. As a result, resource utilization increases significantly, and resource costs drop.
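One common form of automatic elasticity scales on a monitored metric. The sketch below is an assumed stand-in, not SAE's actual rule engine: it borrows the formula used by the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current × currentMetric / targetMetric), with a tolerance band to avoid flapping and clamping to configured minimum and maximum instance counts. All parameter values are hypothetical.

```python
import math


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Metric-based scaling rule modeled on the Kubernetes HPA formula."""
    if current <= 0 or target_metric <= 0:
        raise ValueError("current and target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current  # close enough to target: leave capacity unchanged
    else:
        desired = math.ceil(current * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Hypothetical policy: keep average CPU utilization near 60%.
    print(desired_replicas(4, current_metric=90, target_metric=60))   # traffic peak
    print(desired_replicas(6, current_metric=30, target_metric=60))   # traffic trough
```

With 4 instances at 90% CPU against a 60% target, the rule asks for 6 instances; with 6 instances at 30%, it scales in to 3.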

SAE includes several optimizations for scheduling and starting computing resources. A newly scaled-out instance starts in only a few seconds, which matters greatly in burst scenarios that require urgent, rapid scaling.

SAE's elasticity is fully demonstrated in development and test environments. With its resource scheduling capability, SAE can start and stop a whole set of microservice applications with one click. A test environment can be started even just to run smoke tests on a simple new feature: it is built within seconds, put to use immediately, and released as soon as the test is complete. Because new environments can be put into use quickly and at very low cost, multiple teams can collaborate on microservice development without interfering with each other.

SAE is charged according to resource usage. The cost consists of two parts, CPU and memory, which are calculated from metered usage and deducted hourly. The methods for calculating the resource usage of all applications are listed below:

The price of CPU is 0.0021605 yuan per core per minute, and the price of memory is 0.0005401 yuan per GB per minute. SAE also provides prepaid resource packages, which are similar to buying computing resources wholesale in advance. As long as these resources are consumed within the validity period, costs can be reduced further. When a resource package runs out, the system automatically switches the billing mode to pay-as-you-go.

The following real case further illustrates how SAE helps microservice applications reduce resource costs. The microservice system contains 87 application instances, each configured with a 2-core CPU, 4 GB of memory, and 20 GB of disk space. On average, each instance runs eight hours a day.
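Applying the pay-as-you-go prices above to this case is simple arithmetic. The sketch assumes, as the pricing paragraph implies, that only CPU and memory are billed, so the 20 GB disk is left out, and it does not model prepaid resource packages or any non-SAE baseline. Each instance costs 2 × 0.0021605 + 4 × 0.0005401 = 0.0064814 yuan per minute, about 3.11 yuan for eight hours, or roughly 270.66 yuan per day for all 87 instances.

```python
CPU_PRICE_PER_CORE_MIN = 0.0021605  # yuan per core per minute (from the pricing above)
MEM_PRICE_PER_GB_MIN = 0.0005401    # yuan per GB per minute (from the pricing above)


def pay_as_you_go_cost(instances: int, cores: float, memory_gb: float, minutes: float) -> float:
    """CPU + memory cost in yuan; disk and prepaid packages are not modeled."""
    per_instance_min = cores * CPU_PRICE_PER_CORE_MIN + memory_gb * MEM_PRICE_PER_GB_MIN
    return instances * per_instance_min * minutes


if __name__ == "__main__":
    daily = pay_as_you_go_cost(instances=87, cores=2, memory_gb=4, minutes=8 * 60)
    print(f"Per day: {daily:.2f} yuan")
    print(f"Per 30-day month: {daily * 30:.2f} yuan")
```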

Based on this comparison, the resource cost of microservice applications can be greatly reduced if SAE's elasticity is used appropriately.

In addition to simplifying O&M work and reducing resource costs, SAE provides a series of additional functions for microservice applications that bring developers extra value. These out-of-the-box features can be fully leveraged to make it easier to build microservice applications:

What about applications written in languages other than Java, or Java applications that do not use a microservice framework such as Spring Cloud? Can SAE support these applications and help enterprises reduce resource costs? Yes, it can! SAE supports deployment from container images, so an application written in any programming language can be deployed on SAE as long as it can be released as a container image.

Whether an application is a Java microservice, an ordinary Java application, or a non-Java application, SAE's elasticity works the same way, improving system resource utilization through Serverless technology. However, some additional functions provided by SAE, such as the free microservice registry, only support SpringCloud or Dubbo.

The following figure shows the great value brought by Serverless technology:

Q1: If an application instance does not have fixed resources or a fixed IP address, how can we log on to it via SSH for troubleshooting?

A: Fixed resources and IP addresses are not necessary for troubleshooting. In the cloud computing era, logging on to servers through SSH is not a good way to troubleshoot. Instead, SAE provides complete monitoring capabilities and integrates easily with next-generation monitoring and diagnostic products such as Cloud Monitoring and ARMS, which makes troubleshooting more convenient. If users insist on logging on to an instance, SSH is still feasible, and the Webshell provided by SAE simplifies this process.

Q2: Is the disk billed? What if a large-capacity disk is required? Is the data in the disk still retained after the application restarts?

A: In the microservice field, applications are usually stateless and do not need to store large amounts of local data. For special scenarios, NAS can be integrated for centralized storage.

Q3: How can we view the application logs?

A: The SAE console lets users view logs in real time, effectively serving as a free distributed log collection platform. We strongly recommend integrating with Alibaba Cloud Log Service (SLS) to make further use of application logs.
