By Yuan Yi

This article introduces two ways to use Logtail to collect logs from NAS volumes mounted in Kubernetes (K8s). Both methods work on the same principle: Logtail and the business container mount the same NAS, so the log files written by the business container are also visible to Logtail, which collects them. The two methods have the following characteristics:

1. Sidecar mode. Logtail runs as a sidecar container in each business pod. This mode is flexible and scales horizontally, so it suits scenarios with a large volume of data.

2. Separate Logtail deployment. A single Logtail instance collects the logs of the whole cluster. Resource consumption is relatively low, but flexibility and scalability are limited, so this mode suits clusters with a small overall log volume (we recommend no more than 10 MB per second in total).
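To apply the 10 MB/s guideline you need an estimate of the cluster's total log volume. The following is a rough sizing sketch with hypothetical helper names; only the 10 MB/s limit comes from this article. The average line size is an assumption you should measure yourself (200 bytes below is just a guess for an nginx access log line), and 1 MB is taken as 1,000,000 bytes.

```python
# Rough sizing sketch for choosing a collection mode (hypothetical helpers).
# Only the 10 MB/s limit comes from the article; average_line_bytes is an
# estimate you must supply, and 1 MB is taken as 1,000,000 bytes.

SEPARATE_DEPLOYMENT_LIMIT_MB_PER_SEC = 10.0


def cluster_log_rate_mb_per_sec(pods: int, logs_per_sec: float,
                                average_line_bytes: float) -> float:
    """Estimate the total volume all business pods write to NAS, in MB/s."""
    return pods * logs_per_sec * average_line_bytes / 1_000_000


def suggest_mode(pods: int, logs_per_sec: float, average_line_bytes: float) -> str:
    """Return "separate" if the estimate is within the recommended limit, else "sidecar"."""
    rate = cluster_log_rate_mb_per_sec(pods, logs_per_sec, average_line_bytes)
    return "separate" if rate <= SEPARATE_DEPLOYMENT_LIMIT_MB_PER_SEC else "sidecar"


if __name__ == "__main__":
    # Two mock_log pods at --logs-per-sec=100, assuming ~200-byte lines.
    print(cluster_log_rate_mb_per_sec(2, 100, 200))  # 0.04
    print(suggest_mode(2, 100, 200))                  # separate
    print(suggest_mode(600, 100, 200))                # sidecar (12 MB/s)
```

The estimate is only as good as the line-size guess, so treat the result as a starting point rather than a hard rule.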

For the Sidecar mode, first mount the NAS through a PV and a PVC named nas-pvc (see link).
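The linked PV/PVC setup is not reproduced here. As a hedged sketch, the hypothetical Python helper below builds a static PV and a PVC bound to it as JSON, which `kubectl apply -f` also accepts. It assumes the cluster uses the FlexVolume driver `alicloud/nas` with the same `server`/`path`/`vers` options as the test-nginx-2 pod later in this article; if your cluster uses the CSI NAS plugin instead, the volume source is different. The `-pv` suffix, the 5Gi size and the storage class name `nas` are illustrative placeholders.

```python
import json

# Hypothetical helper: a static NAS PersistentVolume plus a PVC bound to it.
# Assumes the FlexVolume driver "alicloud/nas" with the same server/path/vers
# options as the test-nginx-2 pod in this article. The "-pv" suffix, the 5Gi
# size and the storage class name "nas" are illustrative placeholders.


def nas_pv_and_pvc(name: str, server: str, path: str,
                   size: str = "5Gi", namespace: str = "default"):
    pv_name = f"{name}-pv"
    pv = {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": pv_name},  # PVs are cluster-scoped: no namespace
        "spec": {
            "capacity": {"storage": size},
            # ReadWriteMany lets pods on different nodes mount the same NAS.
            "accessModes": ["ReadWriteMany"],
            "storageClassName": "nas",
            "flexVolume": {
                "driver": "alicloud/nas",
                "options": {"server": server, "path": path, "vers": "4.0"},
            },
        },
    }
    pvc = {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "accessModes": ["ReadWriteMany"],
            "storageClassName": "nas",
            "volumeName": pv_name,  # bind explicitly to the PV above
            "resources": {"requests": {"storage": size}},
        },
    }
    return pv, pvc


if __name__ == "__main__":
    pv, pvc = nas_pv_and_pvc("nas-pvc", "mount address of NAS", "/")
    # kubectl apply -f also accepts a JSON List of objects.
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [pv, pvc]}, indent=2))
```

Redirect the output to a file and run `kubectl apply -f` on it. For the separate Logtail deployment, a call such as `nas_pv_and_pvc("pvc-test-nginx", server, "/nginx", namespace="kube-system")` would produce the claim that the Logtail Deployment references.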

Example YAML for the Sidecar mode:

apiVersion: batch/v1

kind: Job

metadata:

name: nginx-log-sidecar1-demo

spec:

template:

metadata:

name: nginx-log-sidecar-demo

spec:

# Volume configuration

volumes:

- name: nginx-log

persistentVolumeClaim:

claimName: nas-pvc

containers:

# Master container configuration

- name: nginx-log-demo

image: registry.cn-hangzhou.aliyuncs.com/log-service/docker-log-test:latest

command: ["/bin/mock_log"]

args: ["--log-type=nginx", "--stdout=false", "--stderr=true", "--path=/var/log/nginx/access.log", "--total-count=1000000000", "--logs-per-sec=100"]

volumeMounts:

- name: nginx-log

mountPath: /var/log/nginx

# Sidecar container configuration of Logtail

- name: logtail

image: registry.cn-hangzhou.aliyuncs.com/log-service/logtail:latest

env:

# user id

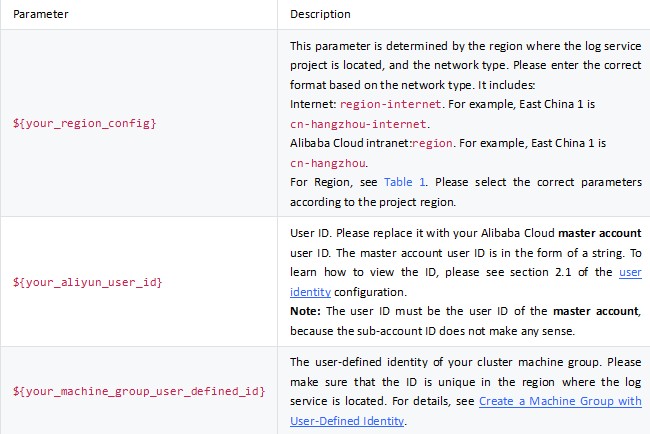

- name: "ALIYUN_LOGTAIL_USER_ID"

value: "${your_aliyun_user_id}"

# user defined id

- name: "ALIYUN_LOGTAIL_USER_DEFINED_ID"

value: "${your_machine_group_user_defined_id}"

# config file path in logtail's container

- name: "ALIYUN_LOGTAIL_CONFIG"

value: "/etc/ilogtail/conf/${your_region_config}/ilogtail_config.json"

# env tags config

- name: "ALIYUN_LOG_ENV_TAGS"

value: "_pod_name_|_pod_ip_|_namespace_|_node_name_|_node_ip_"

- name: "_pod_name_"

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: "_pod_ip_"

valueFrom:

fieldRef:

fieldPath: status.podIP

- name: "_namespace_"

valueFrom:

fieldRef:

fieldPath: metadata.namespace

- name: "_node_name_"

valueFrom:

fieldRef:

fieldPath: spec.nodeName

- name: "_node_ip_"

valueFrom:

fieldRef:

fieldPath: status.hostIP

# Share the volume with the master container

volumeMounts:

- name: nginx-log

mountPath: /var/log/nginx

# Health check

livenessProbe:

exec:

command:

- /etc/init.d/ilogtaild

- status

initialDelaySeconds: 30

periodSeconds: 30

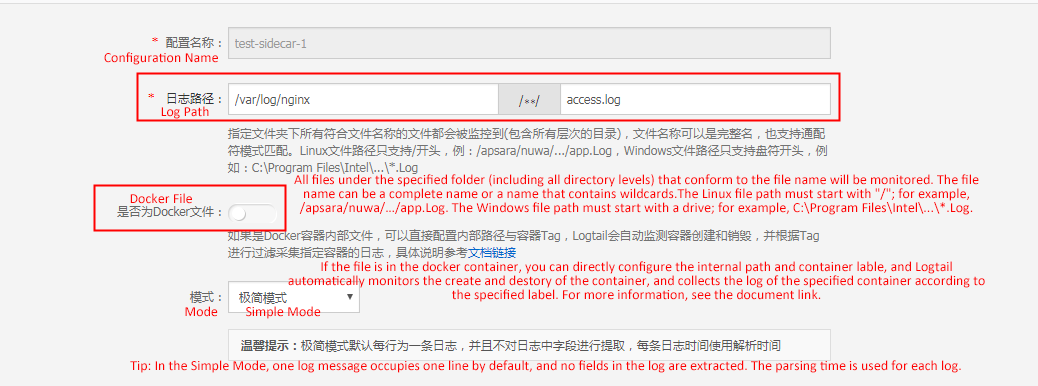

restartPolicy: "Never"The SLS console collection configuration settings are as follows:

The default system fields in the collected logs have the following meanings:

__source__: internal IP address of the pod

__tag__:__hostname__: pod name

__tag__:__path__: log file path

__tag__:__receive_time__: collection time

__tag__:__user_defined_id__: user-defined identity (ALIYUN_LOGTAIL_USER_DEFINED_ID)

__tag__:_namespace_: namespace to which the pod belongs

__tag__:_node_ip_: IP address of the node on which the pod runs

__tag__:_node_name_: name of the node on which the pod runs

__tag__:_pod_ip_: internal IP address of the pod

__tag__:_pod_name_: pod name
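The `__tag__:_pod_name_` through `__tag__:_node_ip_` fields come from ALIYUN_LOG_ENV_TAGS in the Sidecar template: its value lists environment variable names, and Kubernetes fills each of those variables through a `fieldRef` (the Downward API). The sketch below is a simplified model of that mapping, not Logtail's actual implementation; the helper name and the sample values are made up.

```python
# Simplified model (not Logtail's actual implementation) of how the
# ALIYUN_LOG_ENV_TAGS variable becomes __tag__:<name> fields: every name in the
# pipe-separated list is looked up as an environment variable of the Logtail
# container, whose value Kubernetes filled in through a fieldRef.


def env_tags(environ: dict) -> dict:
    names = environ.get("ALIYUN_LOG_ENV_TAGS", "")
    tags = {}
    for name in names.split("|"):
        # In this model, empty names and names with no matching variable are skipped.
        if name and name in environ:
            tags[f"__tag__:{name}"] = environ[name]
    return tags


if __name__ == "__main__":
    # Made-up values standing in for what the fieldRefs would resolve to.
    sample_env = {
        "ALIYUN_LOG_ENV_TAGS": "_pod_name_|_pod_ip_|_namespace_|_node_name_|_node_ip_",
        "_pod_name_": "nginx-log-sidecar1-demo-abcde",
        "_pod_ip_": "172.20.0.10",
        "_namespace_": "default",
        "_node_name_": "node-1",
        "_node_ip_": "192.168.0.1",
    }
    for key, value in env_tags(sample_env).items():
        print(key, value)
```

This is why every name in ALIYUN_LOG_ENV_TAGS also needs its own `env` entry in the template: a listed name without a value has nothing to attach.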

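Note: For the separate Logtail deployment, spec.replicas (the number of replicas) must be 1. Every replica mounts the same NAS directory and collects the same files independently, so with more than one replica each log is collected repeatedly.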

First, create a Deployment for Logtail. The template used here is:

apiVersion: apps/v1

kind: Deployment

metadata:

name: logtail-deployment

namespace: kube-system

labels:

k8s-app: nas-logtail-collecter

spec:

replicas: 1

selector:

matchLabels:

k8s-app : nas-logtail-collecter

template:

metadata:

name: logtail-deployment

labels:

k8s-app : nas-logtail-collecter

spec:

containers:

# Logtail configuration

- name: logtail

image: registry.cn-hangzhou.aliyuncs.com/log-service/logtail:latest

env:

# aliuid

- name: "ALIYUN_LOGTAIL_USER_ID"

value: "${your_aliyun_user_id}"

# user defined id

- name: "ALIYUN_LOGTAIL_USER_DEFINED_ID"

value: "${your_machine_group_user_defined_id}"

# config file path in logtail's container

- name: "ALIYUN_LOGTAIL_CONFIG"

value: "/etc/ilogtail/conf/${your_region_config}/ilogtail_config.json"

volumeMounts:

- name: nginx-log

mountPath: /var/log/nginx

# Volume configuration

volumes:

- name: nginx-log

persistentVolumeClaim:

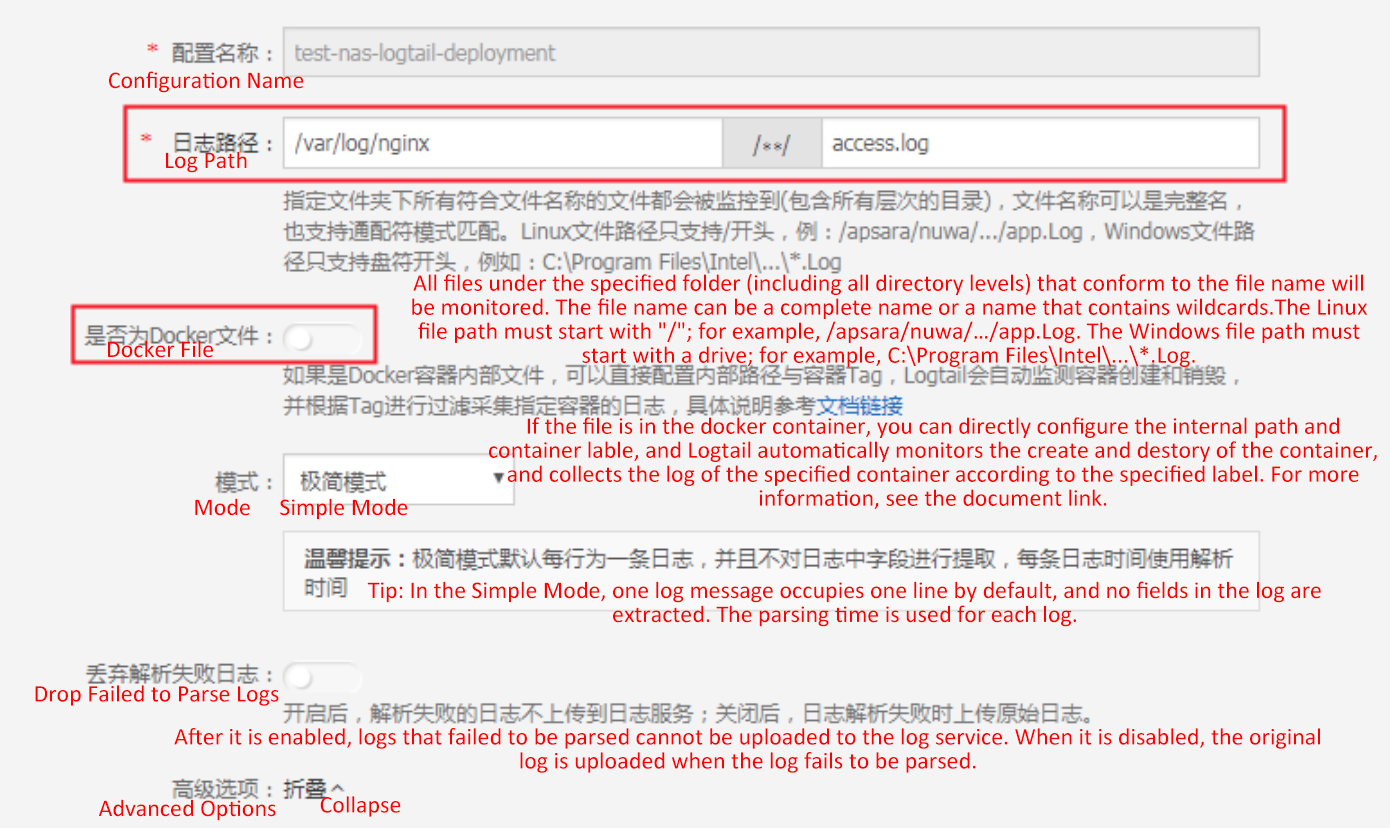

claimName: pvc-test-nginxAfter Logtail runs successfully, you can create a corresponding machine group on the SLS console, based on ALIYUN_LOGTAIL_USER_DEFINED_ID in the template. For more information, see the table description in Solution 1.

Next, create two pods to test whether collection works. The template for one of them is:

apiVersion: v1
kind: Pod
metadata:
  name: "test-nginx-2"
spec:
  containers:
  - name: "nginx-log-demo"
    image: "registry.cn-hangzhou.aliyuncs.com/log-service/docker-log-test:latest"
    command: ["/bin/mock_log"]
    args: ["--log-type=nginx", "--stdout=false", "--stderr=true", "--path=/var/log/nginx/access.log", "--total-count=1000000000", "--logs-per-sec=100"]
    volumeMounts:
    - name: "nas2"
      mountPath: "/var/log/nginx"
  volumes:
  - name: "nas2"
    flexVolume:
      driver: "alicloud/nas"
      options:
        server: "mount address of NAS"
        path: "/nginx/test2"
        vers: "4.0"

The other pod mounts its /var/log/nginx to the /nginx/test1 directory of the NAS. The two pods are therefore mounted to /nginx/test1 and /nginx/test2 respectively, while Logtail is mounted to /nginx, the parent directory of both.
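The article shows only the template for test-nginx-2. The hypothetical generator below produces both test pods from one function, and a second helper maps a NAS path to the path Logtail sees. It assumes, as stated above, that pvc-test-nginx exposes the NAS directory /nginx, and that the Logtail Deployment mounts it at /var/log/nginx; the volume name `nas1` for the first pod simply mirrors `nas2`.

```python
import json
import posixpath

# Hypothetical generator for the two test pods: pod i mounts the NAS
# subdirectory /nginx/test<i> at /var/log/nginx. The volume name nas<i> and
# the server placeholder mirror the test-nginx-2 template.
IMAGE = "registry.cn-hangzhou.aliyuncs.com/log-service/docker-log-test:latest"


def make_test_pod(index: int, server: str = "mount address of NAS") -> dict:
    volume = f"nas{index}"
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": f"test-nginx-{index}"},
        "spec": {
            "containers": [{
                "name": "nginx-log-demo",
                "image": IMAGE,
                "command": ["/bin/mock_log"],
                "args": ["--log-type=nginx", "--stdout=false", "--stderr=true",
                         "--path=/var/log/nginx/access.log",
                         "--total-count=1000000000", "--logs-per-sec=100"],
                "volumeMounts": [{"name": volume, "mountPath": "/var/log/nginx"}],
            }],
            "volumes": [{
                "name": volume,
                "flexVolume": {
                    "driver": "alicloud/nas",
                    "options": {"server": server,
                                "path": f"/nginx/test{index}",
                                "vers": "4.0"},
                },
            }],
        },
    }


def logtail_path(nas_path: str, nas_root: str = "/nginx",
                 mount_path: str = "/var/log/nginx") -> str:
    """Map a NAS path to where Logtail sees it, assuming its PVC exposes
    NAS nas_root at mount_path inside the Logtail container."""
    rel = posixpath.relpath(nas_path, nas_root)
    if rel == ".." or rel.startswith("../"):
        raise ValueError(f"{nas_path} is outside Logtail's NAS mount {nas_root}")
    return posixpath.normpath(posixpath.join(mount_path, rel))


if __name__ == "__main__":
    print(json.dumps(make_test_pod(1), indent=2))
    # Each pod writes /var/log/nginx/access.log, i.e. NAS /nginx/test<i>/access.log.
    for i in (1, 2):
        print(logtail_path(f"/nginx/test{i}/access.log"))
```

Under these assumptions, the two pods' logs appear to Logtail as /var/log/nginx/test1/access.log and /var/log/nginx/test2/access.log.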

Finally, create the Logtail collection configuration. Because the NAS path is already mounted into the Logtail container, Logtail only needs to collect logs from its own mount directory, so leave the "Docker File" option switched OFF.
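The following local simulation (plain Python, not Logtail) illustrates why one collection configuration rooted at Logtail's mount directory is enough: each test pod writes access.log into its own subdirectory of the shared NAS, and a recursive scan from the mount root finds both files. It assumes the collection configuration points at the mount directory and is allowed to descend into subdirectories; the temporary directory stands in for /var/log/nginx in the Logtail container, and `matching_logs` is a hypothetical helper name.

```python
import fnmatch
import os
import tempfile


def matching_logs(log_dir: str, pattern: str = "access.log") -> list:
    """List files under log_dir, including subdirectories, whose names match pattern."""
    found = []
    for root, _dirs, files in os.walk(log_dir):
        for name in fnmatch.filter(files, pattern):
            found.append(os.path.relpath(os.path.join(root, name), log_dir))
    return sorted(found)


if __name__ == "__main__":
    # Stand-in for Logtail's mount directory: each test pod writes its
    # access.log into its own subdirectory of the shared NAS.
    with tempfile.TemporaryDirectory() as mount:
        for sub in ("test1", "test2"):
            os.makedirs(os.path.join(mount, sub))
            with open(os.path.join(mount, sub, "access.log"), "w") as f:
                f.write("mock nginx access log line\n")
        print(matching_logs(mount))
```

On Linux this prints `['test1/access.log', 'test2/access.log']`; the `__tag__:__path__` field then tells you which pod's directory each log came from.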
