By Tianke from F(x) Team

This article shows how to use Pipcook 1.0 to quickly train a form recognition model, and how to use that model to speed up form development.

When implementing web pages from design drafts on the frontend, you may have run into this pain point. The designer draws a form in the design draft. You then find a similar form in Ant Design or Fusion, copy its code, and modify it to match. This is inefficient and tedious.

Can this be faster? Can form code be generated from screenshots? The answer is yes.

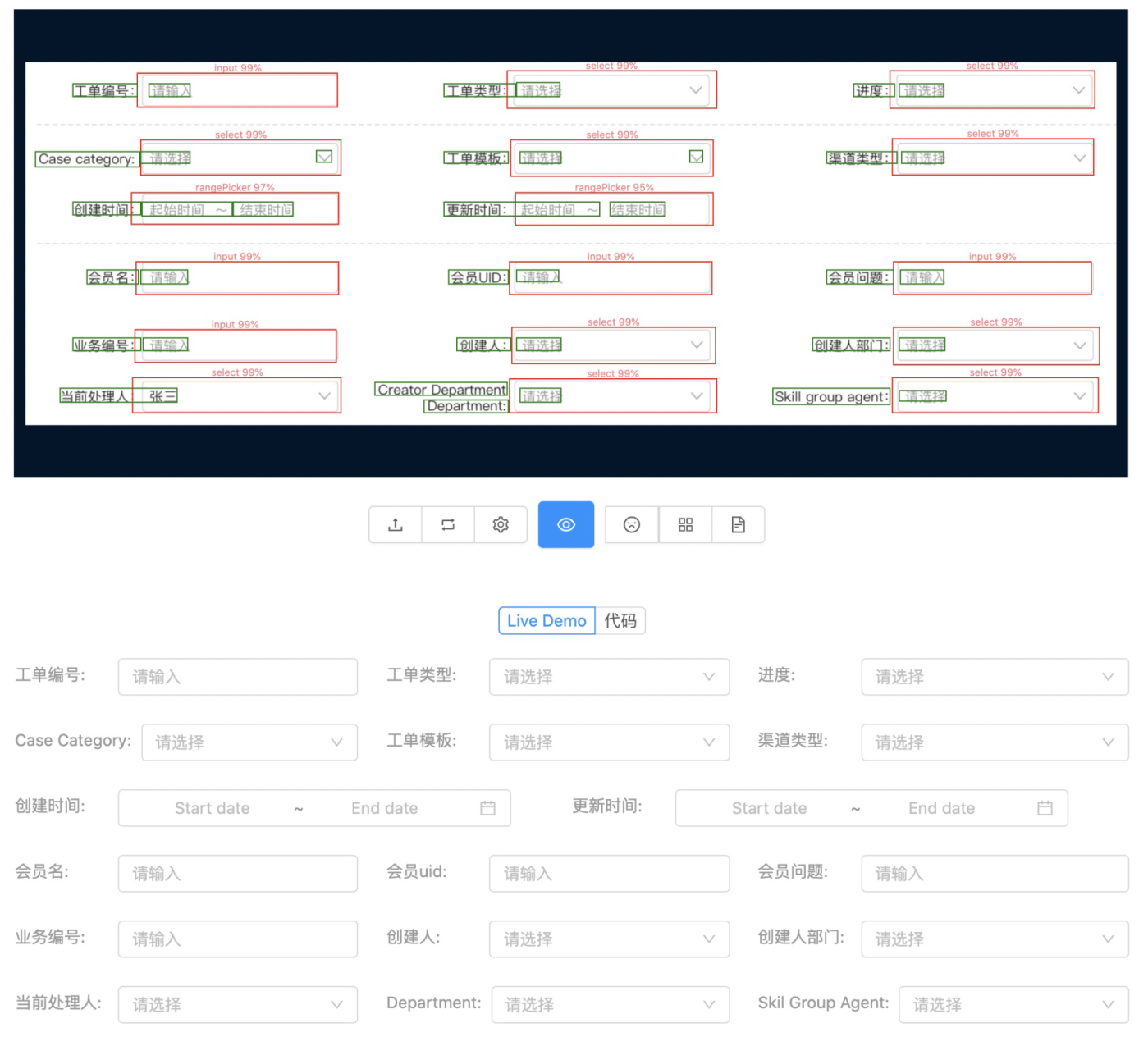

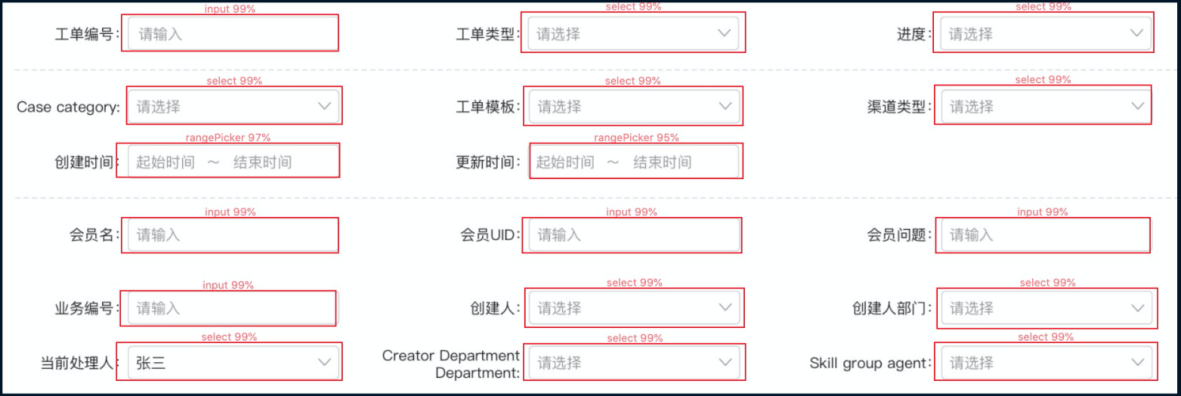

You can train an object detection model whose input is a screenshot and whose output is the type and coordinates of every form item. Taking a screenshot of a form in the design draft therefore gives you all of its form items. Combining them with the labels produced by text recognition lets you generate the form code. For example, I have implemented a feature that generates form code from screenshots.

In this figure, the red boxes are the form items detected by the object detection model, and the green boxes are the text recognized by a text recognition API. After some further calculation, the form protocol or code can be generated.
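The article does not spell out that calculation. As a minimal sketch, assuming each label sits on the same row as its form item and to its left, you could pair every detected form item with the nearest OCR text box on its left and emit a simple schema. The input shapes (`items` with `type`/`box`, `texts` with `text`/`box`), the `buildFormSchema` helper, and the output schema are all hypothetical; they are not Pipcook's format or the author's implementation.

```javascript
// Hypothetical input shapes (not defined by Pipcook):
//   items: [{ type: 'input', box: [xmin, ymin, xmax, ymax] }, ...]  (detector output)
//   texts: [{ text: 'Name', box: [xmin, ymin, xmax, ymax] }, ...]   (OCR output)

// Vertical center of a box.
function centerY(box) {
  return (box[1] + box[3]) / 2;
}

// Two boxes share a row if their vertical ranges overlap.
function sameRow(a, b) {
  return a[1] < b[3] && b[1] < a[3];
}

// Pair each form item with the closest text box that ends to its left
// on the same row, and produce a minimal form schema.
function buildFormSchema(items, texts) {
  // Read items top-to-bottom, then left-to-right.
  const sorted = [...items].sort(
    (a, b) => centerY(a.box) - centerY(b.box) || a.box[0] - b.box[0]
  );
  return sorted.map((item) => {
    let label = null;
    let bestGap = Infinity;
    for (const t of texts) {
      // Horizontal distance from the text's right edge to the item's left edge.
      const gap = item.box[0] - t.box[2];
      if (gap >= 0 && gap < bestGap && sameRow(item.box, t.box)) {
        bestGap = gap;
        label = t.text;
      }
    }
    return { type: item.type, label };
  });
}

// Example with made-up coordinates.
const items = [
  { type: 'input', box: [120, 10, 300, 40] },
  { type: 'select', box: [120, 60, 300, 90] },
];
const texts = [
  { text: 'Name', box: [20, 15, 80, 35] },
  { text: 'City', box: [20, 65, 80, 85] },
];
const schema = buildFormSchema(items, texts);
// schema → [{ type: 'input', label: 'Name' }, { type: 'select', label: 'City' }]
```

Real designs also place labels above inputs or use multi-column layouts, so a production version would need more rules than this nearest-left heuristic.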

Text recognition is a general-purpose capability, so I will not cover it here. How, then, is form item detection implemented? The rest of this article walks through the overall steps in detail.

The form recognition samples are ordinary object detection samples. For how to label them, please see the previous section. For convenience, a ready-made dataset of form recognition samples is provided:

http://ai-sample.oss-cn-hangzhou.aliyuncs.com/pipcook/datasets/mid/mid_base.zip

Next, I will show you how to use Pipcook to run the sample pages to generate a large number of samples and train an object detection model.

Pipcook is a machine learning application framework for frontend developers, developed by the D2C Team of Tao Technology. We hope Pipcook becomes a platform where frontend engineers can learn and practice machine learning and advance frontend intelligence. Pipcook is open source, and you are welcome to build it together with us.

Make sure that your Node.js version is 12 or later. Then install the Pipcook CLI:

    # Install cnpm as well, to speed up package downloads
    npm i @pipcook/pipcook-cli cnpm -g --registry=https://registry.npm.taobao.org

Next, initialize Pipcook and start its daemon:

    pipcook init --tuna -c cnpm
    pipcook daemon start

Form recognition is an object detection task, so create a new configuration file in JSON format (this article names it form.json). Don't worry: you do not need to modify most of its parameters.

    {
      "plugins": {
        "dataCollect": {
          "package": "@pipcook/plugins-object-detection-pascalvoc-data-collect",
          "params": {
            "url": "http://ai-sample.oss-cn-hangzhou.aliyuncs.com/pipcook/datasets/mid/mid_base.zip"
          }
        },
        "dataAccess": {
          "package": "@pipcook/plugins-coco-data-access"
        },
        "modelDefine": {
          "package": "@pipcook/plugins-detectron-fasterrcnn-model-define"
        },
        "modelTrain": {
          "package": "@pipcook/plugins-detectron-model-train",
          "params": {
            "steps": 20000
          }
        },
        "modelEvaluate": {
          "package": "@pipcook/plugins-detectron-model-evaluate"
        }
      }
    }

The parameter you need to set is in dataCollect.params:

url: the address of your samples

If you use the sample dataset above, you can also run this configuration file directly to train a form detection model.
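If you keep several sample sets, a small Node.js script can generate the configuration instead of editing JSON by hand. This is a minimal sketch that reproduces the configuration above with only the sample URL and training steps parameterized; the `buildConfig` helper and its parameters are hypothetical and not part of Pipcook.

```javascript
const fs = require('fs');

// Hypothetical helper: builds the same pipeline configuration shown above,
// with the sample dataset URL and the number of training steps as parameters.
function buildConfig(url, steps = 20000) {
  return {
    plugins: {
      dataCollect: {
        package: '@pipcook/plugins-object-detection-pascalvoc-data-collect',
        params: { url },
      },
      dataAccess: { package: '@pipcook/plugins-coco-data-access' },
      modelDefine: { package: '@pipcook/plugins-detectron-fasterrcnn-model-define' },
      modelTrain: {
        package: '@pipcook/plugins-detectron-model-train',
        params: { steps },
      },
      modelEvaluate: { package: '@pipcook/plugins-detectron-model-evaluate' },
    },
  };
}

// Write form.json so it can be passed to `pipcook run form.json --tuna`.
const config = buildConfig(
  'http://ai-sample.oss-cn-hangzhou.aliyuncs.com/pipcook/datasets/mid/mid_base.zip'
);
fs.writeFileSync('form.json', JSON.stringify(config, null, 2));
```

To train on your own samples, pass your own dataset address as the first argument.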

Training an object detection model is computationally expensive, so you will probably need a GPU machine. Otherwise, training will take several weeks.

    pipcook run form.json --tuna

Training may take a while, so go have lunch or write some business code in the meantime.

When training completes, the model is generated in the output directory under the current directory. This directory is a brand-new npm package. First, install its dependencies:

    cd output
    # BOA_TUNA=1 is mainly used to speed up downloads within China
    BOA_TUNA=1 npm install

After the installation, go back to the root directory, download a test image, and save it as test.jpg:

    cd ..
    curl https://img.alicdn.com/tfs/TB1bWO6b7Y2gK0jSZFgXXc5OFXa-1570-522.jpg --output test.jpg

Finally, we can run a prediction:

    const predict = require('./output');

    (async () => {
      const v1 = await predict('./test.jpg');
      console.log(v1);
      // {
      //   boxes: [
      //     [83, 31, 146, 71], // xmin, ymin, xmax, ymax
      //     [210, 48, 256, 78],
      //     [403, 30, 653, 72],
      //     [717, 41, 966, 83]
      //   ],
      //   classes: [
      //     0, 1, 2, 2 // class index
      //   ],
      //   scores: [
      //     0.95, 0.93, 0.96, 0.99 // scores
      //   ]
      // }
    })();

Note: The result consists of three parts:

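boxes: the bounding box of each detected form item, as [xmin, ymin, xmax, ymax] in image coordinates.
classes: the class index of each detected form item.
scores: the confidence score of each detection.

(Figure: visualized boxes, scores, and classes)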
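The three arrays are parallel: the i-th box, class, and score describe the same detection. A natural next step is to drop low-confidence detections and attach readable type names. This is a minimal sketch; the `CLASS_NAMES` mapping, the `toFormItems` helper, and the thresholds are hypothetical, because the class order depends on how your dataset was labeled.

```javascript
// Hypothetical mapping from class index to form item type;
// the real order depends on the labels in your dataset.
const CLASS_NAMES = ['input', 'select', 'radio'];

// Turn the parallel boxes/classes/scores arrays into one object per detection,
// keeping only detections whose score reaches the threshold.
function toFormItems(result, threshold = 0.9) {
  return result.boxes
    .map(([xmin, ymin, xmax, ymax], i) => ({
      type: CLASS_NAMES[result.classes[i]] || `class-${result.classes[i]}`,
      score: result.scores[i],
      x: xmin,
      y: ymin,
      width: xmax - xmin,
      height: ymax - ymin,
    }))
    .filter((item) => item.score >= threshold);
}

// The sample output printed in the prediction example.
const sample = {
  boxes: [[83, 31, 146, 71], [210, 48, 256, 78], [403, 30, 653, 72], [717, 41, 966, 83]],
  classes: [0, 1, 2, 2],
  scores: [0.95, 0.93, 0.96, 0.99],
};

const items = toFormItems(sample, 0.94);
// items has 3 entries: the detection scored 0.93 is dropped.
// items[0] → { type: 'input', score: 0.95, x: 83, y: 31, width: 63, height: 40 }
```

Converting to x/y/width/height here is only a convenience for laying items out; keep [xmin, ymin, xmax, ymax] if your downstream code expects that form.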
