By Laisi

As one of the four major technical directions of the Frontend Committee of Alibaba, the frontend intelligent project created tremendous value during the 2019 Double 11 Shopping Festival. The project automatically generated 79.34% of the code for Taobao's and Tmall's new modules. Along the way, the R&D team ran into many difficulties and gave a lot of thought to how to solve them. In the series "Intelligently Generate Frontend Code from Design Files," we discuss the technologies and ideas behind the frontend intelligent project.

A business module, also called a business component, is a unit of code providing a certain business feature. The promotion pages in our mobile apps contain a lot of business modules. If we can recognize and extract the business modules from these pages, we can use the business modules for various purposes, such as code reuse and business field binding. Therefore, the business module recognition service is a fundamental part of the frontend intelligent project.

Unlike basic component recognition and form recognition, which target mid-end and backend systems, the business module recognition service targets frontend pages. It recognizes business modules in the visual design files of frontend pages displayed in mobile apps. Design files usually provide a lot of directly recognizable information, such as text content and image sizes.

Because a business module's UI structure is often complex, we did not use deep learning on images to recognize business modules. Instead, we extract predefined feature values from the domain-specific languages (DSLs) of design files and apply traditional machine learning multiclass classification. The service returns information about the recognized business modules, including their categories and their positions in the visual design files.

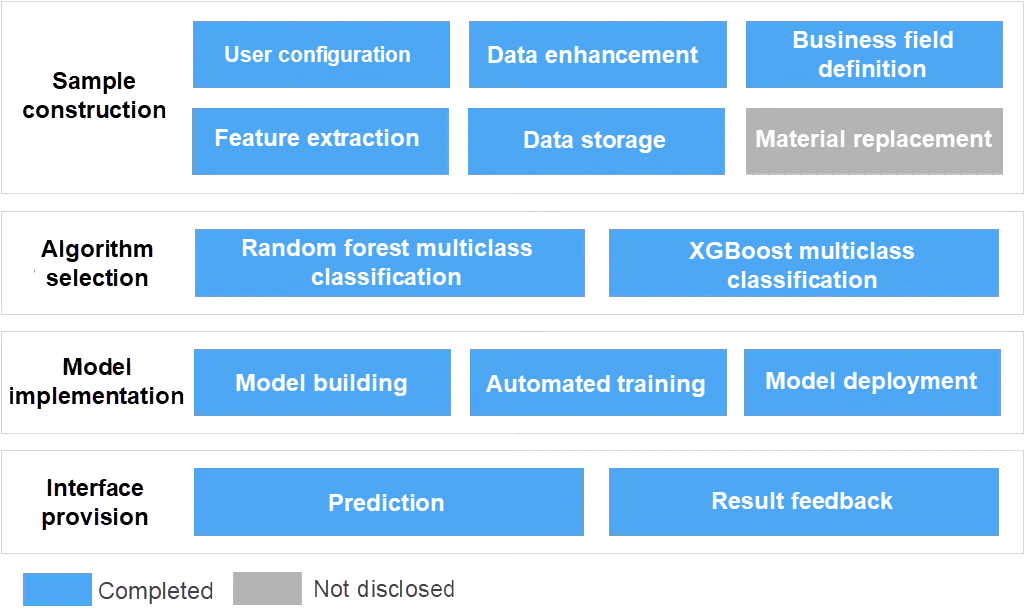

The following figure shows the overall process of implementing business module recognition. It involves the following steps:

Fig: Overall process

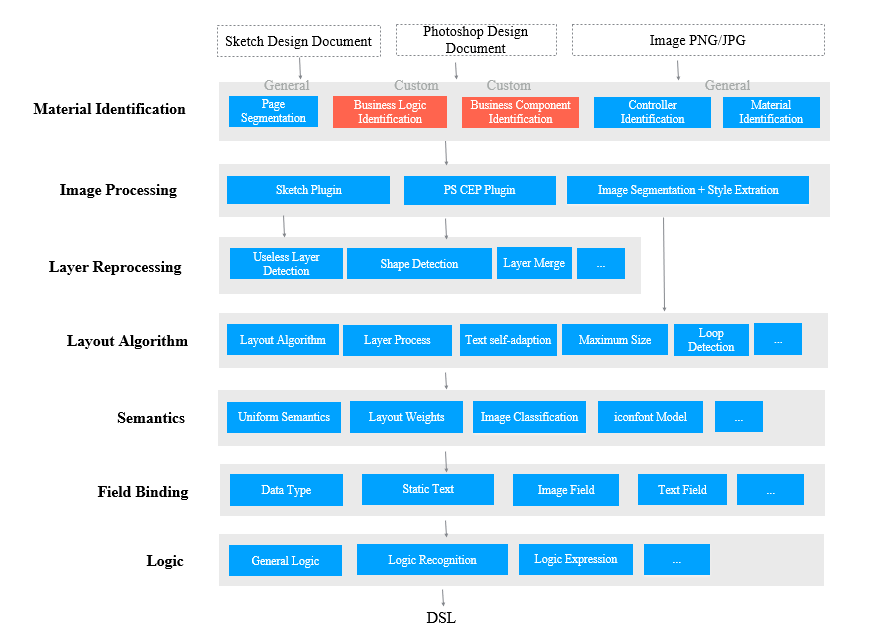

As the following figure shows, the business module recognition service sits in the material recognition layer of the hierarchical Design to Code (D2C) architecture. It recognizes business modules from the DSLs of design files, and downstream services such as business field binding and business logic generation use the recognized modules during code generation.

Fig: Hierarchical architecture of D2C technology

Machine learning relies on training with a large amount of real data, and a good sample library makes model training more effective with less effort. Our training samples for business module recognition come from design files. However, a business module may have only a few design files, which limits the number of samples we can obtain. The first problem we had to solve was therefore the shortage of samples.

We used data enhancement to solve this problem. Data enhancement is driven by a set of default and configurable rules. Based on how each element in a design file may change in real scenarios, we can adjust its attributes, such as whether the element can be hidden or how many characters it may contain, and define custom parameters from these attributes. This lets us control how the constructed samples differ from each other.

We can permute and combine attributes using these parameters to generate a large number of different DSLs of design files. The DSLs of these design files are different from each other, both randomly and regularly, allowing us to obtain a large number of samples.
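The rule-driven enhancement described above can be sketched in Python. This is a minimal sketch, not the platform's implementation: the flat element list, the `RULES` format, and the `hideable` and `text_length` parameters are hypothetical stand-ins for the configurable attributes. Each element's rule is expanded into concrete settings, and `itertools.product` combines them across elements, so the number of samples grows multiplicatively with the number of options.

```python
import copy
import itertools
import random

# Hypothetical DSL: a flat list of elements (real design-file DSLs are trees).
BASE_DSL = [
    {"id": "title", "type": "text", "text": "Limited-time offer", "hidden": False},
    {"id": "price", "type": "text", "text": "99.00", "hidden": False},
    {"id": "badge", "type": "image", "hidden": False},
]

# Hypothetical enhancement rules: the options each element may take.
RULES = {
    "title": {"text_length": [6, 12, 18]},  # character counts to try
    "badge": {"hideable": [False, True]},   # keep or hide the element
}


def expand(rule):
    """Expand one element's rule into every concrete setting it allows."""
    keys = sorted(rule)
    return [dict(zip(keys, combo)) for combo in itertools.product(*(rule[k] for k in keys))]


def apply_settings(dsl, settings, rng):
    """Return a modified deep copy of the DSL with per-element settings applied."""
    out = copy.deepcopy(dsl)
    for elem in out:
        s = settings.get(elem["id"], {})
        if s.get("hideable"):
            elem["hidden"] = True
        if "text_length" in s:
            # Random filler text of the configured length (the random part of enhancement).
            elem["text"] = "".join(rng.choice("abcdefghij") for _ in range(s["text_length"]))
    return out


def augment(dsl, rules, seed=0):
    """Combine the settings of all configured elements (the regular part of enhancement)."""
    rng = random.Random(seed)
    ids = sorted(rules)
    per_element = [expand(rules[i]) for i in ids]
    return [
        apply_settings(dsl, dict(zip(ids, combo)), rng)
        for combo in itertools.product(*per_element)
    ]


samples = augment(BASE_DSL, RULES)  # 3 title lengths x 2 badge states = 6 variants
```

Deep-copying the base DSL keeps every variant independent, so the original design file is never mutated.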

The following figure shows the page for configuring data enhancement. The DSL tree is on the left, the rendering area is in the middle, and the data enhancement configuration area is on the right. The parameters are divided into the following categories:

Fig: Page for configuring data enhancement

How do we generate samples once we have a large number of enhanced visual DSLs? Traditional machine learning requires tabular input, so each sample must be a feature vector. We therefore need to extract features from the DSLs.

Based on previous model training experience, we found that certain UI information is vital for determining module categories. We therefore abstracted this UI information into features, such as the width, height, layout direction, number of images, and number of characters in a DSL. In total, we obtained more than 40 visual features.
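To make the visual features concrete, the sketch below computes a handful of them from a DSL subtree. The node fields (`type`, `width`, `height`, `direction`, `text`, `children`) are an assumed, simplified schema rather than the actual imgcook DSL, and the eight features stand in for the 40-plus used in the project.

```python
def walk(node):
    """Yield every node of a DSL tree in depth-first order."""
    yield node
    for child in node.get("children", []):
        yield from walk(child)


def visual_features(root):
    """Turn one module's DSL subtree into a flat dict of numeric features."""
    nodes = list(walk(root))
    texts = [n for n in nodes if n["type"] == "text"]
    return {
        "width": root["width"],
        "height": root["height"],
        "aspect_ratio": round(root["width"] / root["height"], 3),
        # Layout direction encoded as 1 for horizontal (row), 0 otherwise.
        "is_row_layout": 1 if root.get("direction") == "row" else 0,
        "image_count": sum(1 for n in nodes if n["type"] == "image"),
        "text_count": len(texts),
        "char_count": sum(len(n.get("text", "")) for n in texts),
        "node_count": len(nodes),
    }


# Hypothetical module: an image on the left, a title and a price on the right.
module = {
    "type": "view", "width": 750, "height": 300, "direction": "row",
    "children": [
        {"type": "image", "width": 300, "height": 300},
        {"type": "view", "width": 450, "height": 300, "direction": "column",
         "children": [
             {"type": "text", "text": "Sneakers"},
             {"type": "text", "text": "199.00"},
         ]},
    ],
}

features = visual_features(module)
```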

In addition to the visual features, we added custom business features. According to specific business rules, we defined some elements as business-related, such as price and popularity, and then abstracted these elements into ten business features. The custom business rules used in this process can be implemented with regex matching.

The visual features and business features form a feature vector. We obtain a sample by adding a classification label to the feature vector.
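A minimal sketch of regex-based business features and sample assembly follows, assuming a module's text content is available as a list of strings. The two rules and their patterns are hypothetical examples for price and popularity; the fixed, sorted column order makes every sample line up with the same table columns.

```python
import re

# Hypothetical business rules: each feature is 1 if any text in the module matches.
BUSINESS_RULES = {
    "has_price": re.compile(r"^[¥$]?\d+(\.\d{1,2})?$"),
    "has_popularity": re.compile(r"\d+(\.\d+)?k?\+?\s*(sold|likes)", re.IGNORECASE),
}


def business_features(texts):
    """Evaluate every regex rule against the module's text content."""
    return {
        name: int(any(pattern.search(t) for t in texts))
        for name, pattern in BUSINESS_RULES.items()
    }


def make_sample(visual, business, label):
    """Concatenate features in a fixed column order and append the class label."""
    columns = sorted(visual) + sorted(business)
    merged = {**visual, **business}
    return [merged[c] for c in columns] + [label], columns + ["label"]


texts = ["Sneakers", "¥199.00", "1200+ sold"]
row, header = make_sample({"width": 750, "height": 300}, business_features(texts), "product_card")
```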

The input of our algorithm is the standardized DSL extracted from a design file, and the goal is to recognize which business module the DSL belongs to, which is a multiclass classification problem. With this in mind, we extracted features from a large number of enhanced DSLs, generated a dataset to train models, and built multiclass classification models using components provided by our algorithm platform.

We first built a random forest model for multiclass classification because it runs fast and fits smoothly into an automated process, requiring almost no additional work to meet algorithm engineering requirements. Moreover, the random forest model places few requirements on feature processing and handles continuous and discrete variables automatically. The following table describes the rules for automatically parsing data types.

| Original data type | Parsed data type |
|---|---|
| string / boolean / datetime | Discrete |
| double / bigint | Continuous |

Table: Rules for automatically parsing data types in the random forest model

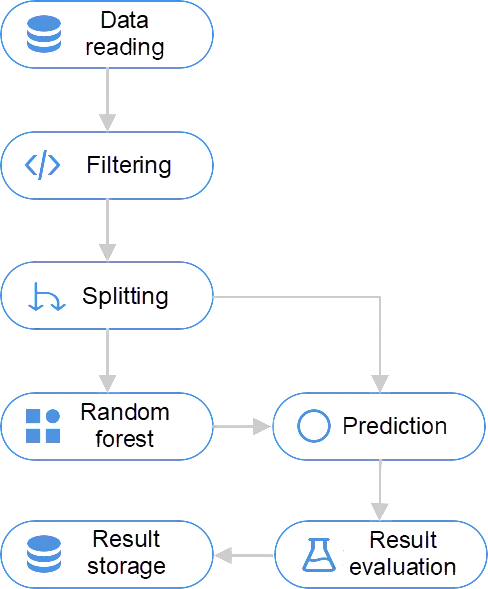

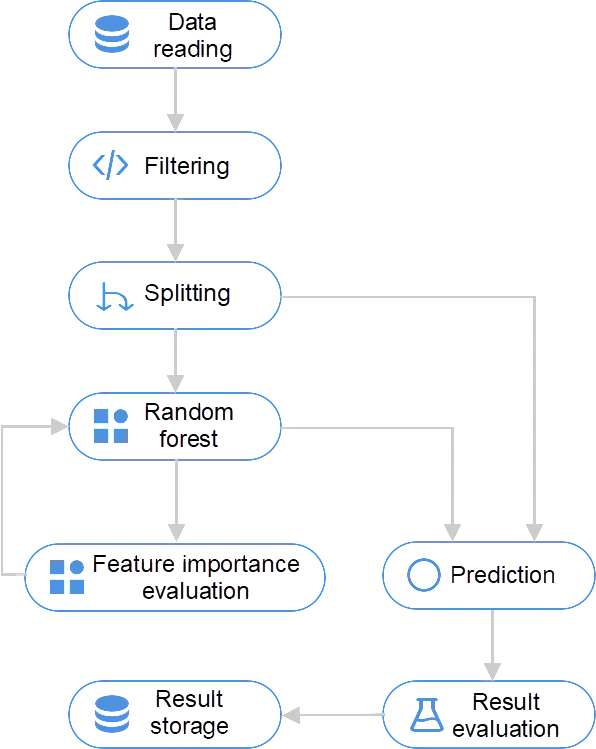

Therefore, we can quickly build a simple model, as shown in the following figure.

Fig: Random forest model used online

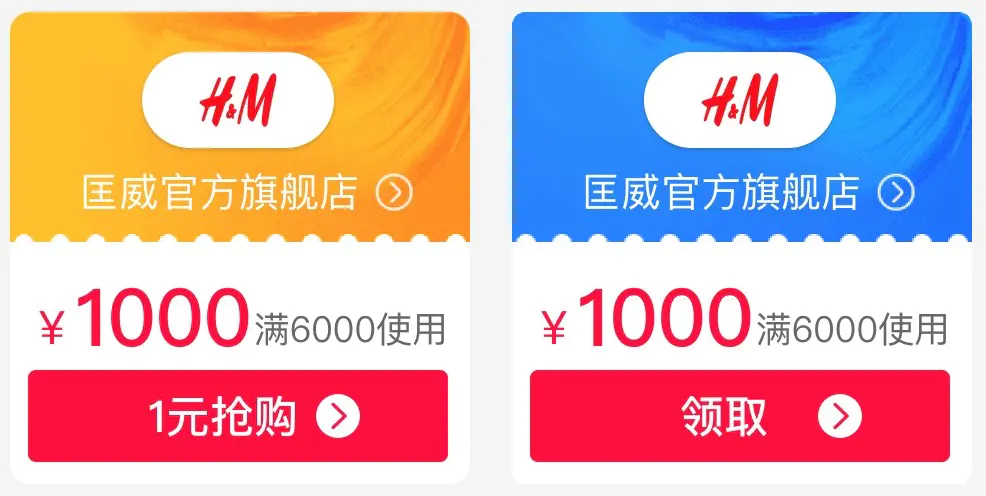

We found that the random forest model sometimes lacked confidence even on data from the sample library: true positives received low confidence scores, so correct predictions were rejected by the confidence threshold. This was especially common for samples that look alike. For example, the random forest model had trouble classifying the two similar modules shown in the following figure.

Fig: Similar modules
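Why look-alike modules hurt confidence can be seen from how a forest scores a sample: each tree votes, and the class probability is roughly the share of trees that agree. The sketch below uses hard votes for simplicity (some implementations, such as scikit-learn's `RandomForestClassifier`, average per-tree class probabilities instead, with a similar effect). The 20 votes and the 0.7 threshold are hypothetical.

```python
from collections import Counter


def forest_confidence(tree_votes):
    """Turn per-tree class votes into class probabilities (share of votes)."""
    counts = Counter(tree_votes)
    return {label: n / len(tree_votes) for label, n in counts.items()}


THRESHOLD = 0.7  # hypothetical acceptance threshold

# Hypothetical votes of a 20-tree forest on one of two look-alike modules.
votes = ["coupon_a"] * 11 + ["coupon_b"] * 9
probs = forest_confidence(votes)
best = max(probs, key=probs.get)
accepted = probs[best] >= THRESHOLD  # correct class wins, but only with 0.55
```

When two modules share most features, the trees split their votes, so even a correct top class can fall below the threshold and be discarded.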

To address this lack of confidence, we tuned the random forest model's parameters, including the number of random samples per tree, the maximum tree depth, and the ratio of ID3, CART, and C4.5 trees. We also pre-integrated the feature selection component. However, the performance was still unsatisfactory. As the following figure shows, we obtained ideal results only after manually feeding the feature importance evaluation results back into feature selection and model training. Because this manual feedback step cannot be integrated into the automated training process, we decided not to pursue this approach.
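The manual feedback step amounts to ranking features by importance and retraining on the top ones. A hypothetical sketch of the selection part: keep the highest-ranked features until they account for a chosen share (`coverage`) of the total importance. The scores and the 0.8 cutoff are made up for illustration.

```python
def select_by_importance(importances, coverage=0.8):
    """Keep the most important features until they cover `coverage` of total importance."""
    ranked = sorted(importances.items(), key=lambda kv: kv[1], reverse=True)
    total = sum(importances.values())
    kept, acc = [], 0.0
    for name, score in ranked:
        if acc >= coverage * total:
            break
        kept.append(name)
        acc += score
    return kept


# Hypothetical importance scores reported by a trained forest.
importances = {
    "width": 0.30, "height": 0.25, "image_count": 0.20,
    "char_count": 0.15, "has_price": 0.07, "node_count": 0.03,
}
selected = select_by_importance(importances)
```

The retraining itself would then use only the `selected` columns; choosing the cutoff is the part that still needs human judgment.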

Fig: Random forest model used in the parameter tuning process

Although the random forest model can process discrete variables automatically, it cannot process discrete values not included in the training set. To solve this problem, we have to ensure that all the values of each discrete feature are included in the training set. Because there are multiple discrete features, this problem cannot be solved by simple stratified sampling. This is also a pain point when applying the random forest model.
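One way to meet this requirement is a greedy split: walk the shuffled samples, force any sample carrying a not-yet-seen (feature, value) pair into the training set, and split the remainder normally. This is a sketch of that idea, not the method used in the project; the column names and `test_ratio` are hypothetical. The trade-off is that rare values end up only in training, so the test set may under-represent them.

```python
import random


def coverage_split(rows, discrete_cols, test_ratio=0.2, seed=0):
    """Split rows so the training set contains every value of every discrete column."""
    rng = random.Random(seed)
    shuffled = rows[:]
    rng.shuffle(shuffled)
    covered, train, rest = set(), [], []
    for row in shuffled:
        pairs = {(col, row[col]) for col in discrete_cols}
        if pairs - covered:  # row brings a value not yet in training
            covered |= pairs
            train.append(row)
        else:
            rest.append(row)
    n_test = min(len(rest), round(test_ratio * len(rows)))
    return train + rest[n_test:], rest[:n_test]


def unseen_values(train, other, discrete_cols):
    """(column, value) pairs in `other` that never occur in `train`."""
    seen = {(c, r[c]) for r in train for c in discrete_cols}
    return {(c, r[c]) for r in other for c in discrete_cols} - seen


# Hypothetical samples with two discrete features.
rows = [{"direction": ("row", "column")[i % 2], "font": "abc"[i % 3], "width": i} for i in range(30)]
train, test = coverage_split(rows, ["direction", "font"])
```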

That sums up our work with the random forest model. The random forest model is simple, easy to use, and quickly produces results. It met the recognition needs of most business scenarios and became version 1.0 of the algorithm for the business module recognition service. Because of the shortcomings described above, however, we later introduced another model: XGBoost.

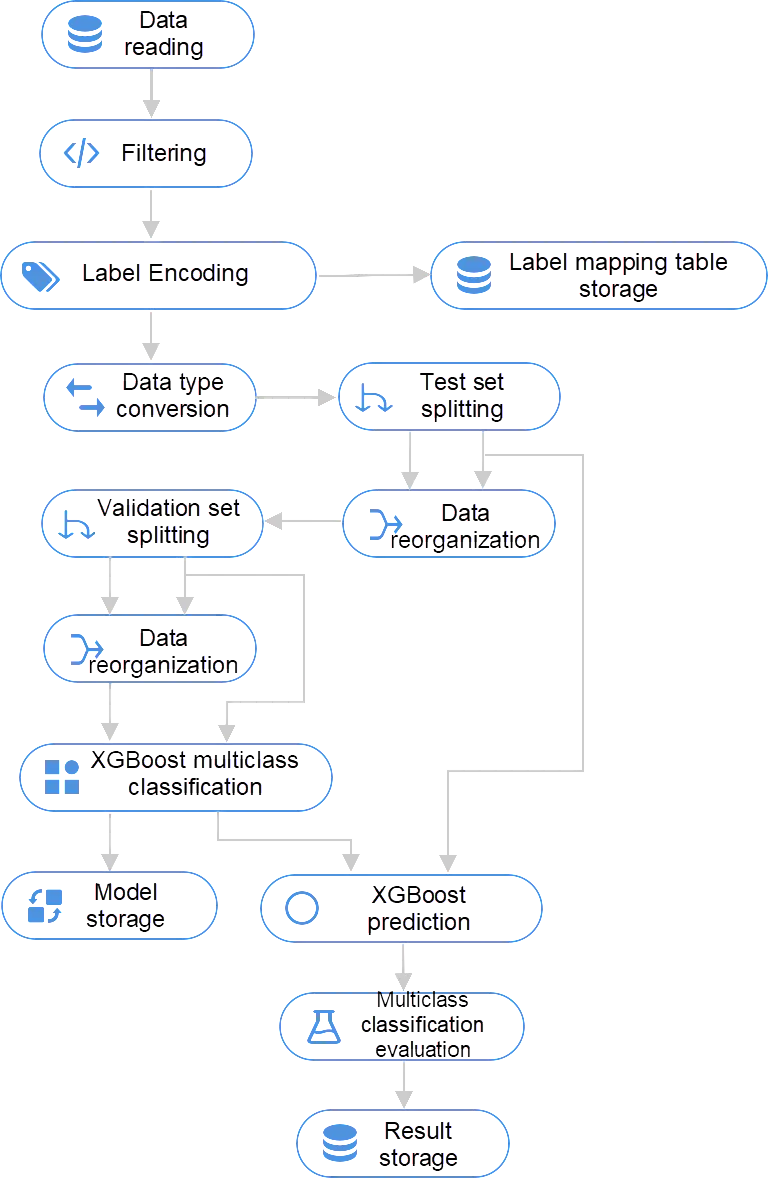

XGBoost uses gradient boosting to improve the accuracy of tree ensembles, and it performed better than the random forest model on our dataset. However, the XGBoost component on the algorithm platform involves many non-standard processes. To implement an automated workflow, we built the model shown in the following figure.

Fig: XGBoost model

The XGBoost model requires more pre-processing, including:

The XGBoost model shows sufficient confidence on the test data, which makes thresholds easier to set, and its predictions meet our needs for recognizing business modules correctly. It also supports automation. Therefore, XGBoost became the traditional machine learning model we prioritize going forward.
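For context on where that confidence comes from: XGBoost's multiclass objective `multi:softprob` grows one tree per class in each boosting round, sums each class's tree outputs into a raw margin, and converts the margins into probabilities with a softmax. The sketch below reproduces only that last step in plain Python; the leaf values are invented, and the base score XGBoost adds to each margin is omitted for brevity.

```python
import math


def softprob(margins):
    """Convert per-class raw margins into probabilities with a numerically stable softmax."""
    top = max(margins.values())
    exps = {k: math.exp(v - top) for k, v in margins.items()}
    z = sum(exps.values())
    return {k: e / z for k, e in exps.items()}


# Hypothetical leaf outputs: one tree per class in each of three boosting rounds.
leaf_outputs = {
    "coupon_a": [0.9, 0.6, 0.4],
    "coupon_b": [0.1, -0.2, -0.1],
    "banner": [-0.5, -0.4, -0.3],
}
margins = {k: sum(v) for k, v in leaf_outputs.items()}
probs = softprob(margins)
```

Because each round adds a correction on top of the previous margins, a consistent gap between classes is amplified by the softmax instead of being capped by a vote share.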

It is worth noting that we cannot collect every possible visual sample outside the current module library. That would be like trying to collect facial photos of 7 billion people to build an internal face recognition system at Alibaba. Because data outside the sample pool is missing, we leave out a hidden class: the negative sample class. This leads to the out-of-distribution (OOD) problem, that is, inaccurate predictions on data outside the sample library. The symptom is an excessive number of false positives in the classification results.

This problem is hard to solve in our scenario because it is difficult to collect all negative samples. This section describes some measures that we took to alleviate this problem.

We use the confidence level (prob) provided by the classification model as the reference for determining whether the classification result is valid. If the confidence level is higher than a threshold, the classification result is deemed valid. This method is empirically meaningful and has effectively avoided most OOD false positives in practice.
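A sketch of threshold-based acceptance follows; the 0.8 default and the optional per-class overrides are assumptions, not values from the project. Returning `None` means the module is treated as unrecognized rather than forced into its closest class.

```python
def accept(probs, threshold=0.8, per_class=None):
    """Return the top label if its probability clears the threshold, else None."""
    label = max(probs, key=probs.get)
    limit = (per_class or {}).get(label, threshold)  # hypothetical per-class override
    return label if probs[label] >= limit else None
```

For example, `accept({"card": 0.92, "banner": 0.08})` returns `"card"`, while a split such as `{"card": 0.55, "banner": 0.45}` is rejected.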

We can also use logical relationships to identify some of the OOD false positives produced by the model. In our view, the same module cannot appear more than once on a single path of a DSL tree, because that would mean the module is nested inside itself. So, if the same module is recognized multiple times on one path, we choose among the results by confidence level. This logic filters out most false positives.
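The path rule can be implemented in several ways. The sketch below treats it like non-maximum suppression on a tree: detections are processed from highest to lowest confidence, and a detection is dropped if an already-kept detection with the same label is its ancestor or descendant. The node structure (`id`, `pred`, `children`) is hypothetical.

```python
def collect(node, ancestors, out):
    """Gather (node_id, label, prob, ancestor_ids) for every recognized node."""
    pred = node.get("pred")
    if pred is not None:
        out.append((node["id"], pred[0], pred[1], frozenset(ancestors)))
    for child in node.get("children", []):
        collect(child, ancestors + [node["id"]], out)


def dedupe_on_paths(root):
    """Keep at most one detection per label on any root-to-leaf path."""
    detections = []
    collect(root, [], detections)
    detections.sort(key=lambda d: d[2], reverse=True)  # most confident first
    kept = []
    for node_id, label, prob, ancestors in detections:
        clash = any(
            k_label == label and (k_id in ancestors or node_id in k_ancestors)
            for k_id, k_label, _, k_ancestors in kept
        )
        if not clash:
            kept.append((node_id, label, prob, ancestors))
    return {node_id: (label, prob) for node_id, label, prob, _ in kept}


tree = {"id": "root", "children": [
    {"id": "A", "pred": ("card", 0.90), "children": [
        {"id": "B", "pred": ("card", 0.95)},
        {"id": "C", "pred": ("card", 0.60)},
    ]},
    {"id": "D", "pred": ("banner", 0.85)},
]}
result = dedupe_on_paths(tree)  # B replaces its ancestor A; C and D survive
```

Processing by confidence means a strong child can suppress a weaker parent without the parent's removal also wiping out detections in sibling branches.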

We provide a feedback service that lets users upload DSLs with recognition errors, which are stored as negative samples. Retraining the model with these negative samples helps alleviate the OOD problem.

At present, we can only rely on logic and user feedback to circumvent the OOD problem, but it has not been solved at the algorithm level. This is something we plan to solve in the next phase.

The algorithm platform allows users to expose a model as an online interface, that is, to deploy the model as a prediction service. Users can use imgcook to call the interface and deploy the model in one click. To automate the training and deployment processes, we have also worked on algorithm engineering, which is not described here.

The input of the prediction service is the DSL, in JSON format, extracted from a design file. The output is information about the recognized business modules, including their IDs and positions in the design file.

Before calling the algorithm platform's prediction interface, we added logical filtering, including:

Size filtering: If a DSL's size deviates significantly from the expected module size, the DSL skips the prediction logic and is treated as a mismatch.
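A size filter of this kind might compare the candidate DSL's width and height with a reference size per module and skip prediction when no module is within a relative tolerance. `MODULE_SIZES` and the 20% tolerance below are hypothetical.

```python
# Hypothetical reference sizes (width, height in design pixels) of known modules.
MODULE_SIZES = {"product_card": (345, 480), "banner": (750, 300)}


def size_candidates(width, height, tolerance=0.2):
    """Modules whose reference size is within a relative tolerance of the input size."""
    return [
        name
        for name, (w, h) in MODULE_SIZES.items()
        if abs(width - w) / w <= tolerance and abs(height - h) / h <= tolerance
    ]


def should_predict(width, height):
    """Skip the prediction service when no module has a compatible size."""
    return bool(size_candidates(width, height))
```

Running this cheap check before the remote prediction call both saves requests and removes an obvious source of false positives.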

The result feedback process includes automatic result detection and user feedback. Currently, users can only upload samples with wrong prediction results.

The business module recognition service was first used online during the Taobao 99 Mega Sale. Together, the model, pre-processing, and OOD avoidance processes achieved up to 100% recognition accuracy in business scenarios. (The accuracy of the model alone was not calculated.)

Solving difficult problems: As mentioned earlier, the OOD problem is a difficult one without any ideal solution. We have some ideas about how to solve this problem and plan to try them in the future.

DNN-based loss function optimization: Build a Deep Neural Network (DNN) based on the manual UI features. By optimizing the loss function, we can increase the distance between different categories while decreasing the distance within the same category. We can set a distance threshold on the optimized model to identify OOD data.
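The distance-threshold idea can be illustrated without a DNN. The sketch below assumes embeddings have already been produced by such a network (the 2-D vectors are made up), computes one centroid per class, and labels an input as OOD when even the nearest centroid is farther away than `max_distance`. The nearest-centroid rule is a simple stand-in for whatever decision rule a trained model would use.

```python
import math


def centroid(vectors):
    """Mean vector of one class's embeddings."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def classify_or_ood(embedding, centroids, max_distance):
    """Nearest class centroid, or "OOD" if even that centroid is too far away."""
    label, dist = min(
        ((name, euclidean(embedding, c)) for name, c in centroids.items()),
        key=lambda pair: pair[1],
    )
    return label if dist <= max_distance else "OOD"


# Made-up 2-D embeddings; a trained DNN would output higher-dimensional vectors.
train_embeddings = {
    "card": [[1.0, 1.0], [1.2, 0.8], [0.8, 1.2]],
    "banner": [[5.0, 5.0], [5.2, 4.8], [4.8, 5.2]],
}
centroids = {name: centroid(v) for name, v in train_embeddings.items()}
```

The tighter the loss makes each class cluster, the smaller `max_distance` can be without rejecting in-distribution samples.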

Optimization of automatic negative sample generation: On top of the XGBoost algorithm, add a preliminary binary classification model that distinguishes data inside the training set's distribution from data outside it, and use it to adjust the random range for generating negative samples. We need to do more research to find a concrete method.

Deep learning: Although manual feature extraction is fast and effective, it does not generalize as well as deep learning methods such as Convolutional Neural Networks (CNNs). Therefore, we will try image-based algorithms to extract UI feature vectors. We can then compute vector distances, or use a binary classification model, to measure the similarity between the input data and the corresponding UI components.

In addition to the algorithms we mentioned above, we will try more in the deep learning field.

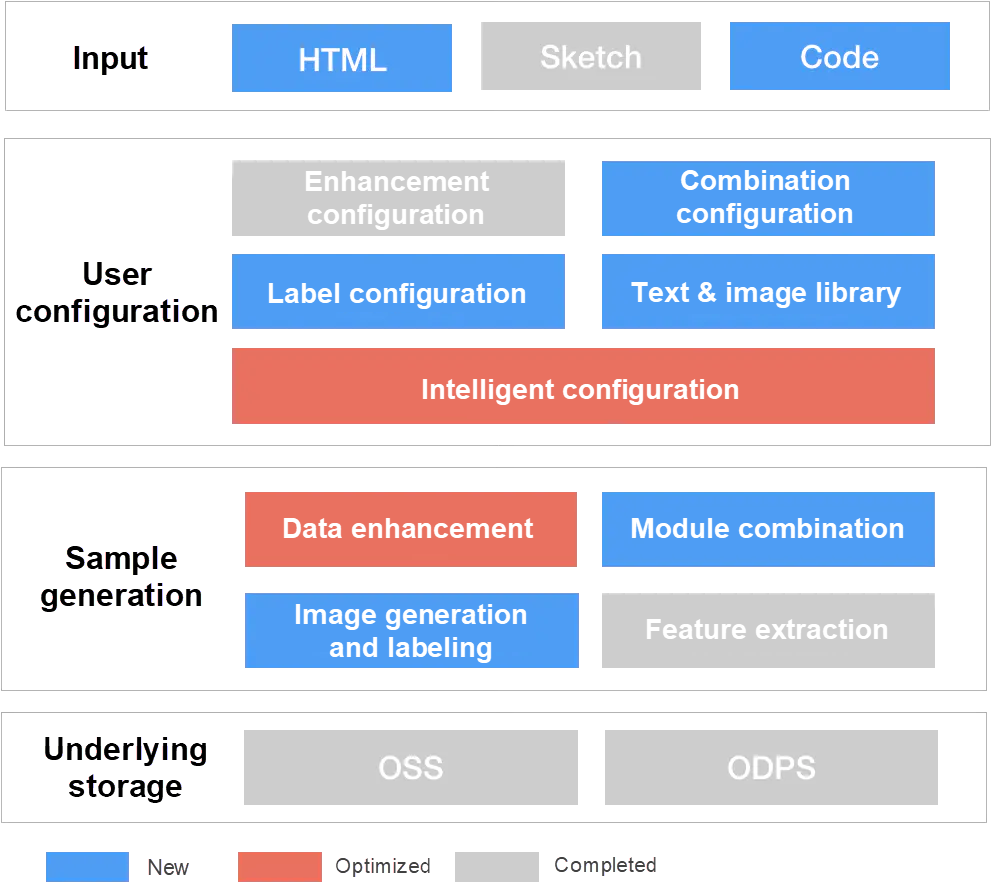

Currently, our sample generation has problems such as low configuration efficiency and few supported algorithms. Therefore, we plan to optimize the design and add more features for sample generation. The following figure shows the main features of the proposed sample generation platform.

Fig: Features on the sample generation platform

Source expansion: Currently, we generate samples by extracting DSLs from design files and saving the features as MaxCompute table data. In future business scenarios, we also want to generate samples from HTML and frontend code. Whatever the input source, the data enhancement layer has much in common, so we will design a general data enhancement algorithm and release it to the public.

Algorithm expansion: The final samples can be either table data with feature values for multiclass classification, or images with label data in Pascal VOC and COCO formats for object detection models.
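Pascal VOC and COCO describe the same bounding box differently: VOC stores corner coordinates (`xmin`, `ymin`, `xmax`, `ymax`) in per-image XML files, while COCO stores `[x, y, width, height]` in a JSON annotation list. A minimal sketch of emitting both from one box follows; the category ID, image ID, and module name are hypothetical, and the snippet ignores the off-by-one pixel conventions that some converters correct for.

```python
import xml.etree.ElementTree as ET


def to_coco(ann_id, image_id, category_id, box):
    """Corner box (xmin, ymin, xmax, ymax) -> COCO annotation with [x, y, w, h]."""
    xmin, ymin, xmax, ymax = box
    w, h = xmax - xmin, ymax - ymin
    return {
        "id": ann_id,
        "image_id": image_id,
        "category_id": category_id,
        "bbox": [xmin, ymin, w, h],
        "area": w * h,
        "iscrowd": 0,
    }


def to_voc_object(name, box):
    """Corner box -> Pascal VOC <object> element for the image's XML file."""
    obj = ET.Element("object")
    ET.SubElement(obj, "name").text = name
    bndbox = ET.SubElement(obj, "bndbox")
    for tag, value in zip(("xmin", "ymin", "xmax", "ymax"), box):
        ET.SubElement(bndbox, tag).text = str(value)
    return obj


# Hypothetical module box on a rendered page screenshot.
box = (20, 40, 370, 520)
coco_ann = to_coco(ann_id=1, image_id=7, category_id=3, box=box)
voc_obj = to_voc_object("product_card", box)
```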

Enhanced intelligence: Currently, users may find the sample generation feature complicated to configure and difficult to use. In some cases, samples generated cannot be used due to incorrect operations. Therefore, we expect to improve the intelligence of data enhancement to minimize user operations and help them quickly generate valid samples.

In summary, optimizing the algorithm and providing the sample generation platform as a product will be our main focus in the next phase.

Intelligently Generate Frontend Code from Design Files: Form and Table Recognition

Intelligently Generate Frontend Code from Design Files: Image Segmentation
