By Wang Qingcan and Zhang Kai

The Burgeoning Kubernetes Scheduling System series presents our experiences, technical thinking, and implementation details to Kubernetes users and developers. We hope the articles can help you understand the powerful capabilities and future trends of the Kubernetes scheduling system.

The first two articles in this series are The Burgeoning Kubernetes Scheduling System – Part 1: Scheduling Framework and The Burgeoning Kubernetes Scheduling System – Part 2: Coscheduling and Gang Scheduling That Support Batch Jobs.

These two articles introduce the Kubernetes Scheduling Framework and show how to implement the Coscheduling and Gang Scheduling policies by extending it. When running batch jobs in a cluster, we also need to pay attention to resource utilization. GPU cards in particular are too expensive to leave idle. This article describes how to use Binpack to reduce resource fragmentation and improve GPU utilization during batch job scheduling.

By default, Kubernetes uses the LeastRequestedPriority resource scheduling policy. Nodes with the lowest resource consumption are preferred, so workloads are distributed evenly across all nodes in the cluster. However, this policy often leaves resource fragments on individual nodes.

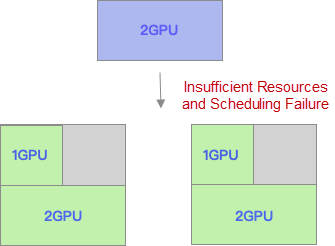

The following is a simple example. As shown in the figure, resources are used evenly among nodes. Each of the two nodes has three GPUs in use and one GPU remaining. A new job that requests two GPUs is then submitted to the scheduler. Because no single node has two free GPUs, scheduling fails.

In this case, each node has one idle GPU that cannot be used, which wastes expensive resources. The Binpack scheduling policy solves this fragmentation problem. Binpack fills up one node before scheduling pods to the next. With this policy, the job that requests two GPUs can be scheduled to a node successfully, which improves the resource utilization of the cluster.

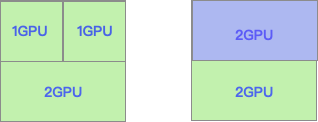
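The difference between the two policies can be illustrated with a small simulation. This is only a sketch and not the scheduler's actual code: the node names, the `GPUS_PER_NODE = 4` capacity, and the `place` and `simulate` helpers are assumptions made for illustration. Six single-GPU pods are placed first, then a job that requests two GPUs is submitted.

```python
from typing import Dict, Optional

GPUS_PER_NODE = 4  # assumed capacity of every node (hypothetical)


def place(nodes: Dict[str, int], request: int, binpack: bool) -> Optional[str]:
    """Place a pod that requests `request` GPUs and return the chosen node.

    `nodes` maps a node name to the number of GPUs already allocated on it.
    Returns None when no single node has enough free GPUs.
    """
    # Keep only nodes that can still fit the request; sort for stable ties.
    feasible = sorted(n for n, used in nodes.items()
                      if used + request <= GPUS_PER_NODE)
    if not feasible:
        return None
    # Binpack prefers the most-used node, spreading prefers the least-used one.
    pick = max if binpack else min
    chosen = pick(feasible, key=lambda n: nodes[n])
    nodes[chosen] += request
    return chosen


def simulate(binpack: bool) -> Optional[str]:
    """Place six 1-GPU pods, then try to place one 2-GPU job."""
    nodes = {"node-a": 0, "node-b": 0}
    for _ in range(6):
        place(nodes, 1, binpack)
    return place(nodes, 2, binpack)


if __name__ == "__main__":
    print("spreading:", simulate(binpack=False))
    print("binpack:  ", simulate(binpack=True))
```

Under these assumptions, spreading ends with three GPUs used on each node, so the two-GPU job cannot be placed and `simulate` returns `None`. With Binpack, the first node is filled completely and the job fits on `node-b`.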

The Binpack implementation has been abstracted into a Score plug-in of the Kubernetes Scheduling Framework called RequestedToCapacityRatio. It scores nodes in the preference phase according to user-defined configurations. The implementation can be divided into two parts: constructing the scoring function and scoring.

Constructing the scoring function is simple. Users define the scores that correspond to different utilization rates, and these scores determine the scheduling decision.

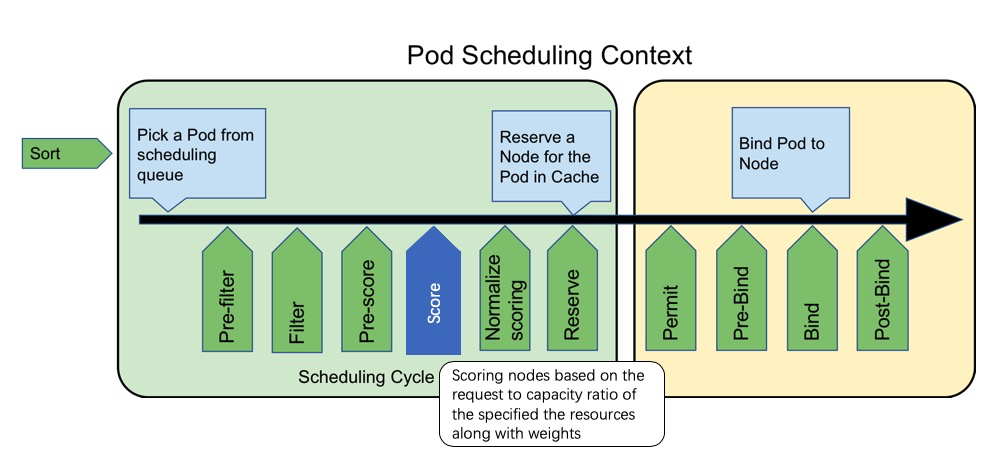

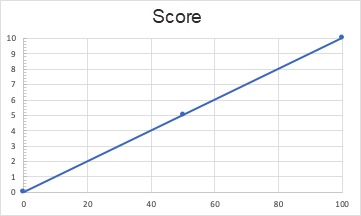

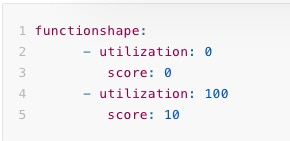

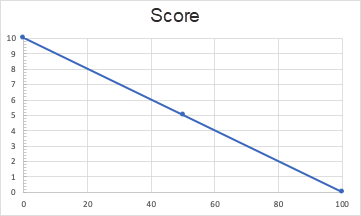

If users define the function as shown in the following figure, higher resource utilization rates receive higher scores. For example, a utilization rate of 0 scores 0, and a utilization rate of 100 scores 10. This is the Binpack resource allocation method.

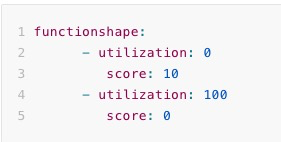

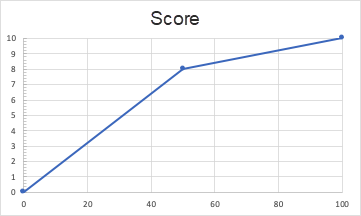

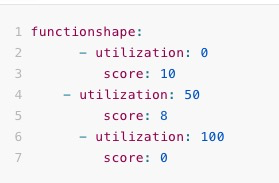

Users can also assign 10 points to a utilization rate of 0 and 0 points to a utilization rate of 100. In this case, the lower the resource utilization rate, the higher the score. This is the spreading resource allocation method.

Users can add more points, and the relationship doesn't have to be linear. For example, a utilization rate of 50 can be assigned a score of 8. The scoring function is then divided into two intervals: 0-50 and 50-100.
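A scoring function of this kind can be sketched as linear interpolation between the user-defined points. The `line` helper and the three shapes below are illustrative assumptions rather than the plug-in's own code. The custom shape assumes the endpoints 0 and 10 around the score of 8 at a utilization rate of 50, and the sketch uses floating-point arithmetic for readability.

```python
from typing import List, Tuple

# A shape is a list of (utilization %, score) points sorted by utilization.
Shape = List[Tuple[int, int]]

BINPACK: Shape = [(0, 0), (100, 10)]           # higher utilization, higher score
SPREADING: Shape = [(0, 10), (100, 0)]         # lower utilization, higher score
CUSTOM: Shape = [(0, 0), (50, 8), (100, 10)]   # two intervals: 0-50 and 50-100


def line(shape: Shape, utilization: float) -> float:
    """Return the score for `utilization` by interpolating between points."""
    # Clamp values below the first point.
    if utilization <= shape[0][0]:
        return float(shape[0][1])
    # Find the interval that contains the utilization and interpolate in it.
    for (u0, s0), (u1, s1) in zip(shape, shape[1:]):
        if utilization <= u1:
            return s0 + (s1 - s0) * (utilization - u0) / (u1 - u0)
    # Clamp values above the last point.
    return float(shape[-1][1])


if __name__ == "__main__":
    for name, shape in [("binpack", BINPACK),
                        ("spreading", SPREADING),
                        ("custom", CUSTOM)]:
        print(name, [line(shape, u) for u in (0, 25, 50, 75, 100)])
```

With the custom shape, the score rises quickly up to a utilization rate of 50 and more slowly afterward, which shows how extra points let users bend the curve.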

Users can define which resources are considered in the Binpack calculation and the weight of each resource. For example, they can set weights for the GPU and CPU.

    resourcetoweightmap:
      "cpu": 1
      "nvidia.com/gpu": 1

Then, during the scoring process, the utilization rate of each configured resource is calculated as (pod.Request + node.Allocated) / node.Total. Each utilization rate is passed to the scoring function to obtain the corresponding score. The final score is the weighted average of the scores of all resources.

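The scoring step can be sketched as follows. This is a simplified illustration, not the plug-in's implementation: the `line`, `utilization`, and `node_score` helpers, the node capacities, and the allocation figures are all assumptions, and the sketch ignores details such as integer rounding.

```python
from typing import Dict, List, Tuple

Shape = List[Tuple[int, int]]  # (utilization %, score) points, sorted

BINPACK: Shape = [(0, 0), (100, 10)]
WEIGHTS: Dict[str, int] = {"cpu": 1, "nvidia.com/gpu": 1}


def line(shape: Shape, utilization: float) -> float:
    """Piecewise-linear scoring function defined by the shape points."""
    if utilization <= shape[0][0]:
        return float(shape[0][1])
    for (u0, s0), (u1, s1) in zip(shape, shape[1:]):
        if utilization <= u1:
            return s0 + (s1 - s0) * (utilization - u0) / (u1 - u0)
    return float(shape[-1][1])


def utilization(pod_request: float, allocated: float, total: float) -> float:
    """(pod.Request + node.Allocated) / node.Total, as a percentage."""
    return 100.0 * (pod_request + allocated) / total


def node_score(pod: Dict[str, float], allocated: Dict[str, float],
               total: Dict[str, float]) -> float:
    """Weighted average of the per-resource scores for one node."""
    weighted_sum = 0.0
    weight_total = 0
    for resource, weight in WEIGHTS.items():
        u = utilization(pod.get(resource, 0), allocated.get(resource, 0),
                        total[resource])
        weighted_sum += line(BINPACK, u) * weight
        weight_total += weight
    return weighted_sum / weight_total


if __name__ == "__main__":
    pod = {"cpu": 1, "nvidia.com/gpu": 1}
    total = {"cpu": 8, "nvidia.com/gpu": 4}        # hypothetical node size
    busy = {"cpu": 3, "nvidia.com/gpu": 3}         # hypothetical busy node
    idle = {"cpu": 1, "nvidia.com/gpu": 0}         # hypothetical idle node
    print("busy node:", node_score(pod, busy, total))
    print("idle node:", node_score(pod, idle, total))
```

With the Binpack shape, the busier node receives the higher score, so the pod is packed onto it and the idle node keeps its free GPUs for larger requests.
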
    Score = (line(resource1_utilization) * weight1 + line(resource2_utilization) * weight2 + ...) / (weight1 + weight2 + ...)

Here, line() is the scoring function constructed earlier.

1. Create the /etc/kubernetes/scheduler-config.yaml file. Users can configure other priority policies according to their needs.

    apiVersion: kubescheduler.config.k8s.io/v1alpha1
    kind: KubeSchedulerConfiguration
    leaderElection:
      leaderElect: false
    clientConnection:
      kubeconfig: "REPLACE_ME_WITH_KUBE_CONFIG_PATH"
    plugins:
      score:
        enabled:
        - name: RequestedToCapacityRatio
          weight: 100
        disabled:
        - name: LeastRequestedPriority
    pluginConfig:
    - name: RequestedToCapacityRatio
      args:
        functionshape:
        - utilization: 0
          score: 0
        - utilization: 100
          score: 100
        resourcetoweightmap: # Resources used in the Binpack calculation. Weights can be set for multiple resources.
          "cpu": 1
          "nvidia.com/gpu": 1

The following demonstrates the effect of Binpack by running a distributed TensorFlow job. The test cluster contains two machines with four GPUs each.

1. Use Kubeflow's Arena to deploy the tf-operator in an existing Kubernetes cluster.

Arena is a subproject of Kubeflow, an open-source community for machine learning systems on Kubernetes. Arena manages the main lifecycle stages of machine learning jobs through command lines and SDKs, including environment installation, data preparation, model development, model training, and model prediction. This improves the work efficiency of data scientists.

    git clone https://github.com/kubeflow/arena.git
    kubectl create ns arena-system
    kubectl create -f arena/kubernetes-artifacts/jobmon/jobmon-role.yaml
    kubectl create -f arena/kubernetes-artifacts/tf-operator/tf-crd.yaml
    kubectl create -f arena/kubernetes-artifacts/tf-operator/tf-operator.yaml

Check the deployment status:

    $ kubectl get pods -n arena-system
    NAME                                READY   STATUS    RESTARTS   AGE
    tf-job-dashboard-56cf48874f-gwlhv   1/1     Running   0          54s
    tf-job-operator-66494d88fd-snm9m    1/1     Running   0          54s

2. The user submits a distributed TensorFlow job that contains 1 PS and 4 Workers to the cluster. Each Worker requires 1 GPU.

    apiVersion: "kubeflow.org/v1"
    kind: "TFJob"
    metadata:
      name: "tf-smoke-gpu"
    spec:
      tfReplicaSpecs:
        PS:
          replicas: 1
          template:
            metadata:
              creationTimestamp: null
              labels:
                pod-group.scheduling.sigs.k8s.io/name: tf-smoke-gpu
                pod-group.scheduling.sigs.k8s.io/min-available: "5"
            spec:
              containers:
              - args:
                - python
                - tf_cnn_benchmarks.py
                - --batch_size=32
                - --model=resnet50
                - --variable_update=parameter_server
                - --flush_stdout=true
                - --num_gpus=1
                - --local_parameter_device=cpu
                - --device=cpu
                - --data_format=NHWC
                image: registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/tf-benchmarks-cpu:v20171202-bdab599-dirty-284af3
                name: tensorflow
                ports:
                - containerPort: 2222
                  name: tfjob-port
                resources:
                  limits:
                    cpu: '1'
                workingDir: /opt/tf-benchmarks/scripts/tf_cnn_benchmarks
              restartPolicy: OnFailure
        Worker:
          replicas: 4
          template:
            metadata:
              creationTimestamp: null
              labels:
                pod-group.scheduling.sigs.k8s.io/name: tf-smoke-gpu
                pod-group.scheduling.sigs.k8s.io/min-available: "5"
            spec:
              containers:
              - args:
                - python
                - tf_cnn_benchmarks.py
                - --batch_size=32
                - --model=resnet50
                - --variable_update=parameter_server
                - --flush_stdout=true
                - --num_gpus=1
                - --local_parameter_device=cpu
                - --device=gpu
                - --data_format=NHWC
                image: registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/tf-benchmarks-gpu:v20171202-bdab599-dirty-284af3
                name: tensorflow
                ports:
                - containerPort: 2222
                  name: tfjob-port
                resources:
                  limits:
                    nvidia.com/gpu: 1
                workingDir: /opt/tf-benchmarks/scripts/tf_cnn_benchmarks
              restartPolicy: OnFailure

3. With Binpack enabled, all 4 Workers are scheduled to the same GPU node, cn-shanghai.192.168.0.129, after the job is submitted.

    $ kubectl get pods -o wide
    NAME                    READY   STATUS    AGE   IP             NODE
    tf-smoke-gpu-ps-0       1/1     Running   15s   172.20.0.210   cn-shanghai.192.168.0.129
    tf-smoke-gpu-worker-0   1/1     Running   17s   172.20.0.206   cn-shanghai.192.168.0.129
    tf-smoke-gpu-worker-1   1/1     Running   17s   172.20.0.207   cn-shanghai.192.168.0.129
    tf-smoke-gpu-worker-2   1/1     Running   17s   172.20.0.209   cn-shanghai.192.168.0.129
    tf-smoke-gpu-worker-3   1/1     Running   17s   172.20.0.208   cn-shanghai.192.168.0.129

4. Without Binpack, the 4 Workers are spread across two nodes, cn-shanghai.192.168.0.129 and cn-shanghai.192.168.0.130, after the job is submitted. Thus, resource fragmentation occurs.

    $ kubectl get pods -o wide
    NAME                    READY   STATUS    AGE   IP             NODE
    tf-smoke-gpu-ps-0       1/1     Running   7s    172.20.1.72    cn-shanghai.192.168.0.130
    tf-smoke-gpu-worker-0   1/1     Running   8s    172.20.0.214   cn-shanghai.192.168.0.129
    tf-smoke-gpu-worker-1   1/1     Running   8s    172.20.1.70    cn-shanghai.192.168.0.130
    tf-smoke-gpu-worker-2   1/1     Running   8s    172.20.0.215   cn-shanghai.192.168.0.129
    tf-smoke-gpu-worker-3   1/1     Running   8s    172.20.1.71    cn-shanghai.192.168.0.130

This article introduces how to use the native scheduling policy of Kubernetes, RequestedToCapacityRatio, to enable Binpack scheduling. Doing so reduces resource fragments and improves the GPU utilization rate. The policy is efficient, clear, and simple to use. Upcoming articles in this series will continue to discuss improving GPU resource utilization, with a focus on GPU sharing scheduling.
