By Xunjian, from Alibaba Cloud Storage team

In the era of GPT-style large models, vector retrieval technology is developing rapidly. Compared with traditional keyword-based retrieval, vector retrieval captures the semantic relationships between data more accurately, which greatly improves the effectiveness of information retrieval. In natural language processing and computer vision in particular, vectors allow multimodal data to be represented and retrieved in the same space, which has driven the wide adoption of applications such as AI-powered recommendation, content retrieval, RAG, and knowledge bases.

The search index of Tablestore provides vector retrieval capabilities. Tablestore is a serverless, distributed structured data storage service that was created in 2009, when Alibaba Cloud was founded. It is distributed, serverless, ready to use out of the box, and billed on a pay-as-you-go basis, and it offers horizontal scaling, rich query features, and excellent performance.

We will introduce two classic vector database scenarios here. One is the emerging RAG application and the other is the traditional multimodal search. By integrating vector databases, users can efficiently and flexibly access large-scale data.

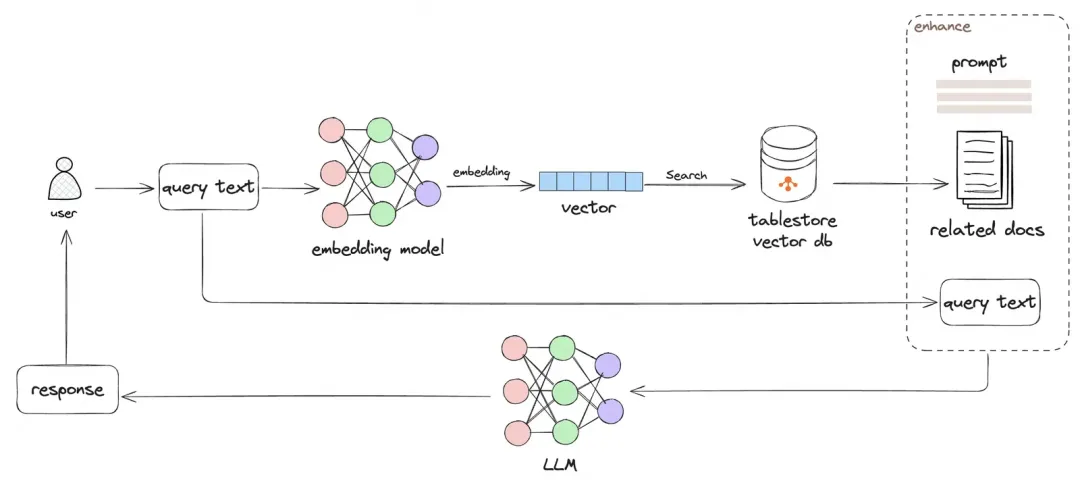

RAG (Retrieval-Augmented Generation) is a method that combines retrieval and generation, mainly used for natural language processing tasks. It works by first retrieving the relevant corpus from the knowledge base and then inputting the information into a generative model for dialog or text generation. The advantage of this method is the ability to combine both factual or private domain information and generative models to generate more accurate and richer texts.

Let's take an intelligent customer service assistant as an example. The core of the whole system is a RAG model based on a vector database. When a customer asks a question through the online chat box, the system first converts the question into a vector representation and uses it to search the vector database. The vector database quickly finds documents, FAQs, and chat history related to the question. This data is fed into the generative model, which generates a concise and clear reply based on the retrieved information. Compared with a traditional customer service robot based on fixed rules, a RAG model based on a vector database can handle more complex and open-ended questions and provide personalized answers according to the user's requirements.
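The retrieve-then-generate flow described above can be sketched as follows. This is a minimal illustration, not the Tablestore implementation: `embed` is a toy bag-of-words stand-in for a real embedding model, the knowledge base is a hypothetical in-memory list rather than a vector database, and the final call to a generative model is left out, so the sketch stops at building the prompt.

```python
import math
from collections import Counter

def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question: str, corpus: list[str], k: int = 2) -> list[str]:
    """Return the k passages most similar to the question."""
    q = embed(question)
    ranked = sorted(corpus, key=lambda doc: cosine(q, embed(doc)), reverse=True)
    return ranked[:k]

def build_prompt(question: str, passages: list[str]) -> str:
    """Assemble the text that would be sent to a generative model."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {question}"

# Hypothetical knowledge base entries.
KNOWLEDGE_BASE = [
    "Refunds are processed within 7 days of the return request.",
    "Shipping to Europe takes 10 to 14 business days.",
    "You can reset your password from the account settings page.",
]

question = "how long do refunds take"
passages = retrieve(question, KNOWLEDGE_BASE, k=1)
prompt = build_prompt(question, passages)
print(prompt)
```

In a real system, `embed` would call an embedding model and `retrieve` would be a vector query against the database, but the shape of the pipeline stays the same.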

RAG demonstrates great advantages in fields that require a lot of background information and contextual understanding, including question-answering systems, content creation, language translation, information retrieval, and dialogue systems. Compared with the traditional full-text-based and rule-based retrieval methods, RAG is more accurate, more natural, and in line with the context.

Searching images, videos, and text is a classic multimodal retrieval problem, and it is most common in systems such as cloud drives. In the past, users could only rely on keyword search and often failed to find what they wanted because their keywords were inaccurate. Therefore, many cloud drives have begun to introduce multimodal search solutions based on vector databases.

In this solution, the user enters a description or uploads an image, and the system converts these inputs into vectors. The vector database can retrieve images and videos with high similarity to the user input from massive amounts of data. For example, when a user uploads a landscape photo, the search engine can identify elements such as mountains and lakes in the photo, quickly find other similar landscape images and videos, and display them in the search results. In addition, users can also use natural language expressions when querying, such as "looking for scenery with autumn as the theme". The system will search for multiple images and videos related to autumn in the vector database to provide more accurate search results.

In the field of vector databases, there are two main technical solutions: HNSW-based graph algorithms and clustering methods. Both methods are widely used in high-dimensional data retrieval and storage, especially when dealing with large-scale datasets, and they each have their own advantages and limitations.

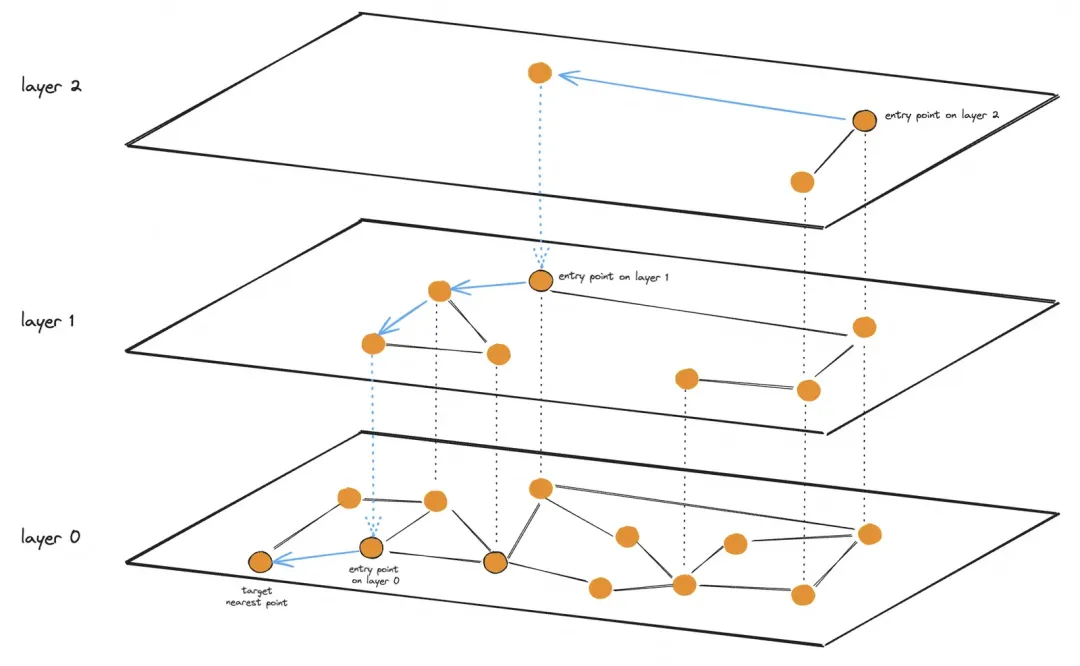

HNSW is a graph-based data structure for efficient nearest neighbor search. It implements fast queries by building a multi-level graph. In this structure, data exists in the form of nodes, and the edges between nodes indicate their similarity. HNSW arranges nodes in layers, and the number of nodes in each layer decreases toward the top. A query starts from the sparse top layer and quickly narrows the search scope as it descends, which greatly increases the search speed.
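The layered descent can be illustrated with a deliberately simplified toy. The sketch below is not real HNSW construction (which assigns levels randomly and links each node to its nearest neighbors); it assumes points on a regular 2D grid and builds each upper layer by keeping every `stride`-th point, purely to show how starting in a sparse layer reduces the number of hops. All names are hypothetical.

```python
import math

def build_layer(points, stride):
    """Keep every `stride`-th grid point and link each one to its axis neighbours."""
    nodes = {p for p in points if p[0] % stride == 0 and p[1] % stride == 0}
    graph = {}
    for (x, y) in nodes:
        candidates = [(x + stride, y), (x - stride, y), (x, y + stride), (x, y - stride)]
        graph[(x, y)] = [c for c in candidates if c in nodes]
    return graph

def greedy_search(graph, entry, query):
    """Move to the neighbour closest to the query until no neighbour is closer."""
    current, hops = entry, 0
    while True:
        best = min(graph[current], key=lambda n: math.dist(n, query), default=current)
        if math.dist(best, query) >= math.dist(current, query):
            return current, hops
        current, hops = best, hops + 1

def layered_search(layers, entry, query):
    """Search the sparse top layer first, then refine in each denser layer."""
    total_hops = 0
    for graph in layers:
        entry, hops = greedy_search(graph, entry, query)
        total_hops += hops
    return entry, total_hops

points = [(x, y) for x in range(33) for y in range(33)]
layers = [build_layer(points, stride) for stride in (8, 4, 2, 1)]  # top to bottom
query = (29.2, 30.6)

nearest, hops_layered = layered_search(layers, (0, 0), query)
_, hops_flat = greedy_search(layers[-1], (0, 0), query)  # bottom layer only
print(nearest, hops_layered, hops_flat)
```

Both searches end at the same grid point, but the layered search needs far fewer hops because the upper layers cover long distances in a few steps.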

The biggest advantages of the HNSW-based graph algorithm are its high recall rate and good performance. However, its disadvantages are also obvious. First, all data must be fully loaded into memory, which is costly. Second, the scale is limited by memory, and it is difficult to support more than 10 million vectors.

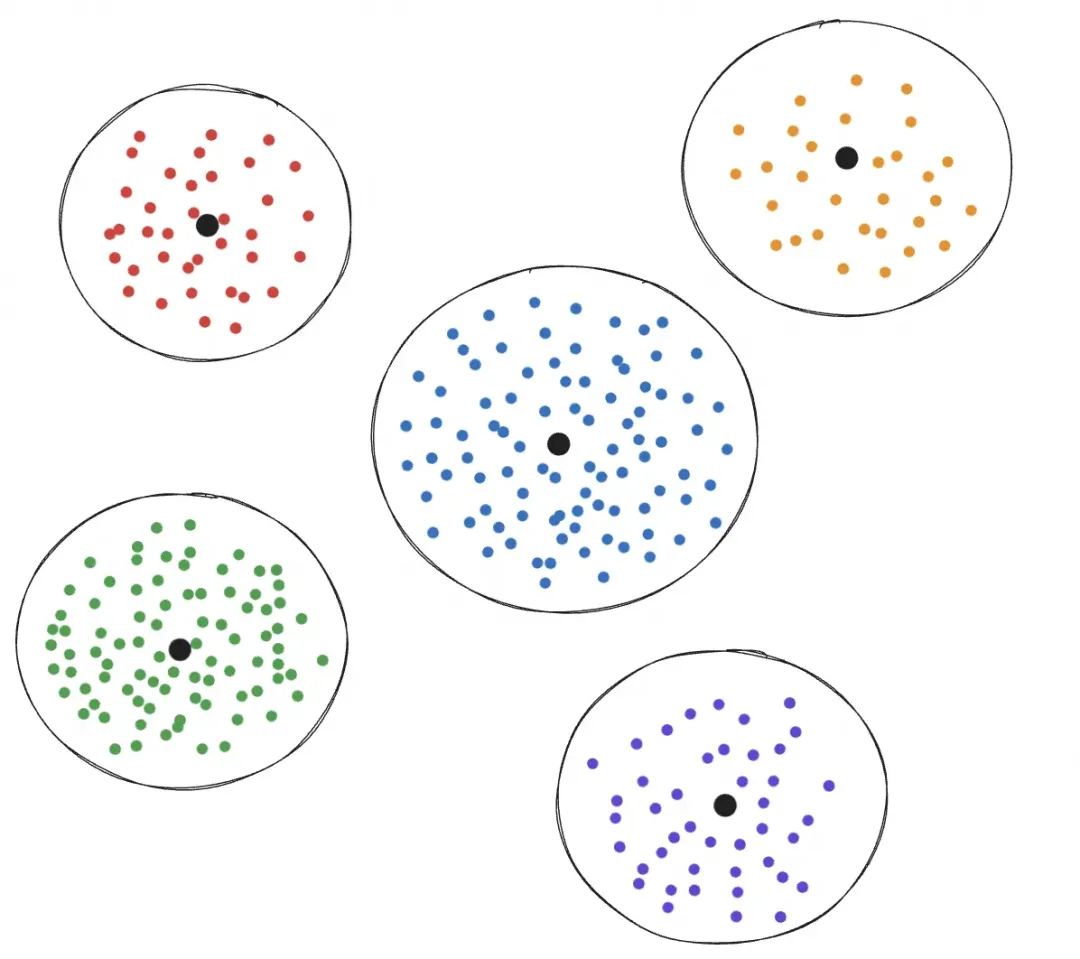

Clustering is the process of grouping similar objects in a dataset. By clustering the data, similar vectors can be grouped into the same group. During retrieval, you can first determine which cluster the data point belongs to, and then perform a detailed search within the cluster. The advantage of this method is that it reduces the amount of data that needs to be accessed, thereby increasing the retrieval speed.
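A minimal sketch of this two-step search is shown below. It assumes the cluster centers are already known (in practice they would come from an algorithm such as K-means), and the names `build_ivf`, `ivf_search`, and `nprobe` are illustrative choices, not the API of any particular product.

```python
import math
import random

def nearest_centroids(vec, centroids, n):
    """Indexes of the n centroids closest to vec."""
    order = sorted(range(len(centroids)), key=lambda i: math.dist(vec, centroids[i]))
    return order[:n]

def build_ivf(vectors, centroids):
    """Assign every vector ID to the list of its nearest centroid."""
    lists = {i: [] for i in range(len(centroids))}
    for vid, vec in enumerate(vectors):
        lists[nearest_centroids(vec, centroids, 1)[0]].append(vid)
    return lists

def ivf_search(query, vectors, centroids, lists, k, nprobe):
    """Scan only the nprobe closest clusters; return top-k IDs and the scan count."""
    candidates = [vid for c in nearest_centroids(query, centroids, nprobe) for vid in lists[c]]
    candidates.sort(key=lambda vid: math.dist(query, vectors[vid]))
    return candidates[:k], len(candidates)

rng = random.Random(0)
centroids = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0), (10.0, 10.0)]  # assumed precomputed
vectors = [(cx + rng.gauss(0, 1), cy + rng.gauss(0, 1))
           for cx, cy in centroids for _ in range(250)]
lists = build_ivf(vectors, centroids)

query = (9.5, 0.5)
top, scanned = ivf_search(query, vectors, centroids, lists, k=5, nprobe=1)
print(top, f"scanned {scanned} of {len(vectors)} vectors")
```

Raising `nprobe` scans more clusters, which trades speed for recall; with `nprobe` equal to the number of clusters the search degenerates into a full brute-force scan.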

The biggest advantages of clustering are its low cost and good indexing performance, and its obvious disadvantage is a low recall rate. Common clustering algorithms include K-means, hierarchical clustering, and DBSCAN. In high-dimensional space, clustering algorithms differ in how well they adapt to the distribution of the data, so it is important to choose an appropriate algorithm and appropriate parameters. A poor choice of the number of clusters or of the cluster centers leads to unsatisfactory vector retrieval results.

There is a wide variety of open-source vector retrieval products, which can be divided into "dedicated vector databases" and "traditional databases with added vector retrieval". Dedicated vector databases are still relatively immature in their general database features, so there is a lot of room for improvement. Therefore, most users tend to choose a traditional database with an added vector retrieval feature. The following are some commonly used products.

• PostgreSQL: integrates vector retrieval capabilities through the pgvector plug-in. It supports exact search through a linear brute-force scan (FLAT), the clustering-based IVFFlat index, and the graph-based HNSW index.

• Redis®*: provides vector retrieval capabilities through Redis Stack. Its indexing capabilities include FLAT indexing, which performs a linear brute-force scan, and graph-based HNSW.

• Elasticsearch: provides vector retrieval capabilities through Lucene. Its indexing capabilities include FLAT indexing to provide linear brute force scanning and graph-based HNSW.

Although there are many implementation schemes for vector databases, there are still some challenges, especially in the three core aspects of vector retrieval: cost, scale, and recall rate.

Cost is an important factor in the selection of a vector database. In a vector database, building and maintaining a vector index requires huge resources, whose costs are mainly reflected in computing resources (CPU) and I/O resources (memory).

In terms of computing resources, graph-based algorithms require extremely high computing resources when building and maintaining graphs, while clustering-based algorithms are simple to implement and use relatively few computing resources. However, for both types of algorithm, after many insert or delete operations the maintenance cost of the index structure becomes particularly high and the recall rate drops.

In terms of I/O resources, most vector index workloads involve random I/O, and the problem is especially pronounced for graph indexes such as HNSW. The common practice in the industry is to load the entire index into memory using MMAP, which results in extremely high memory costs.

Therefore, when considering adding vector capabilities to large amounts of unstructured and semi-structured data, cost becomes the biggest obstacle.

In the future, the scale of data will continue to grow, so the demand for the vector database scale will be higher. Especially in the fields of enterprise cloud drives, semantic search, video analysis, and industry knowledge base, the data scale is expected to reach tens of millions or even billions. This trend makes vector databases face higher requirements when dealing with large-scale data. The stand-alone system is still effective in the personal knowledge base and small-scale document processing, but in a more complex big data environment, the database scalability must be considered to meet the growing data needs in the future.

In a vector retrieval system, supporting large-scale data requires a distributed architecture. With the distributed system, data can be distributed and stored to improve retrieval efficiency and system reliability. However, it is not easy to implement a distributed architecture, which includes issues such as data consistency, load balance, and high availability. In addition, the hybrid storage and retrieval of vector data and scalar data also require a reasonable design to ensure the stable operation of the system under high concurrency and large-scale conditions.

Large scale inevitably brings higher cost. The high cost of vector retrieval limits the scalability of many traditional databases, making it difficult for them to handle large volumes of vector data. Take traditional stand-alone databases such as MySQL and PostgreSQL as an example. To maintain optimal performance, a single table is usually kept small, and vector scenarios in particular require higher machine specifications with more CPU and memory. Elasticsearch, an industry leader in the search field, also supports vector retrieval through brute-force scanning and the HNSW algorithm. Although Elasticsearch can support scalar data at a scale of hundreds of billions, its memory-based implementation makes it difficult for vector data to reach a particularly large scale.

Recall rate is an important metric for measuring the query quality of vector databases. The HNSW-based graph algorithm usually performs relatively well. However, it cannot guarantee a high recall rate in every case. Noise caused by larger data scale and uneven data distribution, as well as local optima caused by unsuitable query parameters, can all reduce the recall rate.
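Recall is commonly measured as recall@k: the fraction of the true top-k nearest neighbors (found by an exact brute-force scan) that also appear in the approximate top-k result. The helper below is a small sketch of that definition; the function name and the sample ID lists are made up for illustration.

```python
def recall_at_k(approx_ids, exact_ids, k):
    """Share of the exact top-k neighbours that the approximate search returned."""
    return len(set(approx_ids[:k]) & set(exact_ids[:k])) / k

exact = [11, 42, 7, 93]    # hypothetical ground truth from a brute-force scan
approx = [11, 42, 7, 58]   # hypothetical result of an approximate index
print(recall_at_k(approx, exact, k=4))
```

Here three of the four true neighbors were found, so recall@4 is 0.75.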

The recall rate of clustering-based solutions varies depending on how they are used. In the first phase, if the right cluster centers are selected, the recall rate is very high. However, relying too heavily on cluster centers can lead to "excessively fine clustering", which reduces the recall rate. Conversely, if the number of clusters is small, each cluster holds too many vectors, which results in poor query performance. Generally speaking, even with acceleration from costly GPU hardware, the recall rate of clustering is lower than that of the HNSW-based graph algorithm.

In some high recall rate scenarios, although clustering can solve the scale and cost problems, it cannot solve the recall rate problem. Is there a system that can support large-scale data and a high recall rate at a low cost?

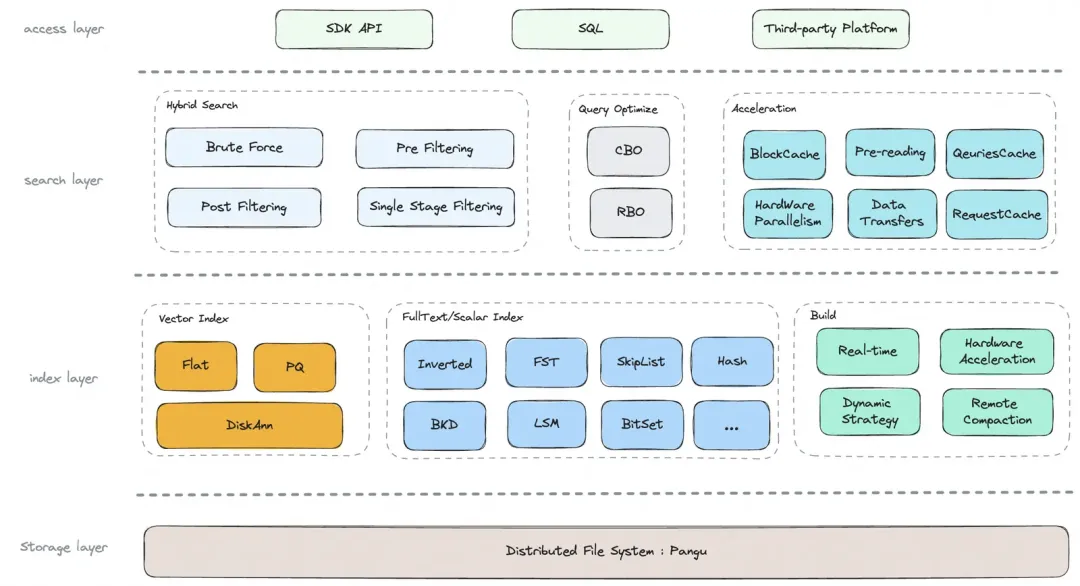

In response to the challenges in the field of vector retrieval, such as cost, scale, and recall rate, Tablestore released a vector retrieval service with low cost, large scale, high performance, and high recall rate, which can support the storage and retrieval of hundreds of billions of vector data at a relatively low cost. The following section will introduce why these advantages can be achieved.

Tablestore vector retrieval is built on an optimized version of the original DiskANN algorithm to provide large-scale, high-performance vector retrieval services. Earlier, we also offered users an HNSW-based graph algorithm but found obvious performance issues. HNSW has high memory requirements and struggles to support large-scale data. When memory is insufficient, HNSW performs very poorly because it has to access disks. Most Tablestore users have a relatively large amount of data, and the scale and cost characteristics of HNSW do not match the positioning of our product. We want more data to gain vector capabilities at a low cost. Therefore, we abandoned the HNSW algorithm and re-implemented the vector retrieval capability with DiskANN.

DiskANN is an algorithm designed for disks. It uses a disk-friendly graph structure and can process datasets that far exceed the memory capacity. Within Tablestore, we only need to keep 10% of the graph index data in memory and the remaining 90% on disk to achieve a recall rate and performance similar to those of HNSW, while the memory cost is only 10% of that of HNSW. This is the reason for the low cost. In terms of scale, by combining DiskANN with Tablestore's algorithm and distributed framework optimizations, the service can support the storage and retrieval of hundreds of billions of vectors.
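To get a feel for what the 10% figure means, here is a back-of-the-envelope calculation. The dataset (100 million 768-dimensional float32 vectors) is a hypothetical example, and the sketch counts only raw vector bytes, ignoring graph edges and other index overhead, so the numbers are rough lower bounds rather than measurements.

```python
def raw_vector_bytes(num_vectors: int, dim: int, bytes_per_value: int = 4) -> int:
    """Bytes needed to store the raw vectors (float32 by default)."""
    return num_vectors * dim * bytes_per_value

def resident_bytes(index_bytes: int, in_memory_fraction: float) -> float:
    """Bytes that must stay in memory for a given in-memory share of the index."""
    return index_bytes * in_memory_fraction

GB = 10**9
index_bytes = raw_vector_bytes(100_000_000, 768)  # hypothetical dataset

all_in_memory = resident_bytes(index_bytes, 1.0)   # fully memory-resident, as with HNSW
ten_percent = resident_bytes(index_bytes, 0.10)    # the 10% share quoted in the text

print(f"fully in memory: {all_in_memory / GB:.1f} GB")
print(f"10% in memory:   {ten_percent / GB:.1f} GB")
```

Under these assumptions the fully memory-resident index needs roughly 307 GB of RAM, while keeping 10% in memory needs roughly 31 GB, with the rest served from disk.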

However, DiskANN has high I/O requirements and places heavy demands on disks. Most disk-based DiskANN implementations end up limited by I/O capability. How do we solve this problem? The answer lies in our storage-computing separation architecture.

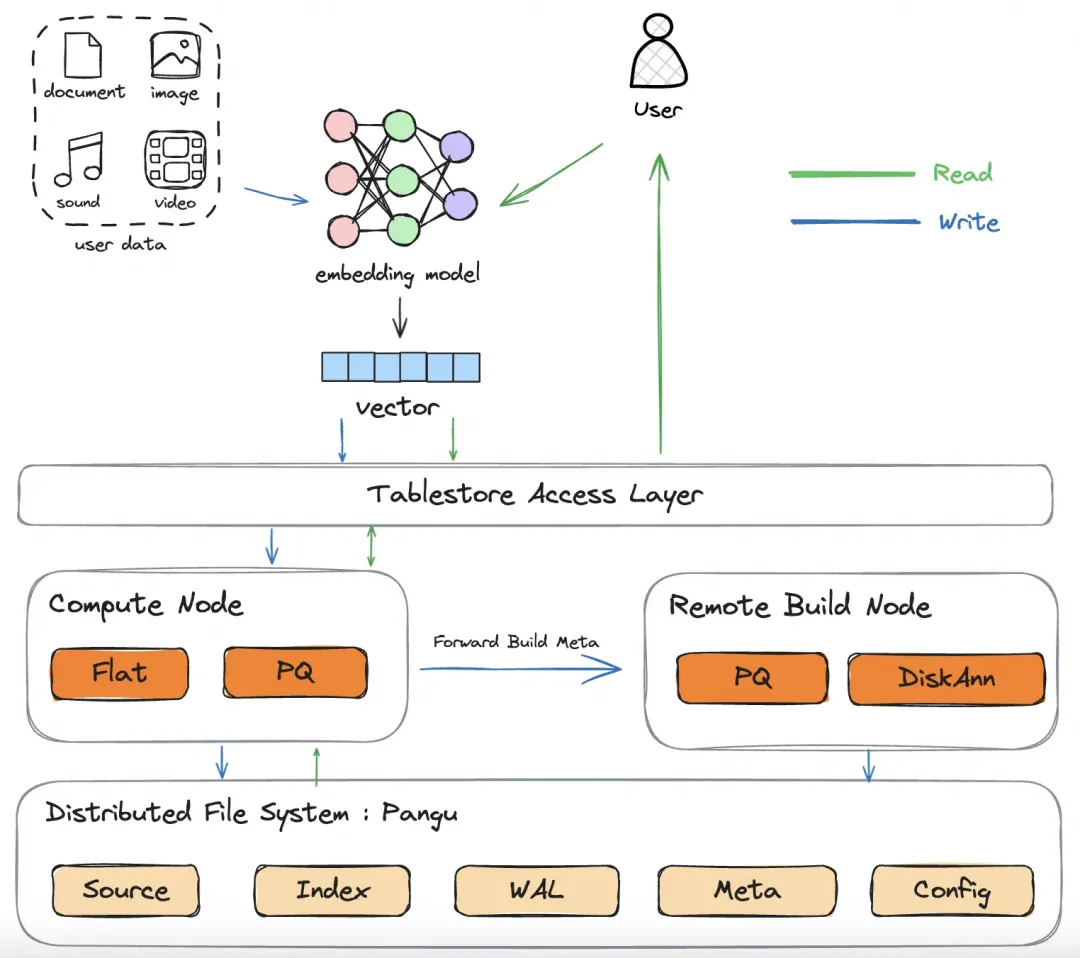

Tablestore uses a storage-computing separation architecture. The underlying layer uses Apsara Distributed File System, a highly reliable, high-performance distributed file system developed in-house by Alibaba Cloud. Computing and storage can be scaled independently and automatically, and three-zone capabilities are supported by default at no additional cost, providing users with high elasticity and disaster recovery capabilities.

Traditional vector databases that use local disks as media have I/O bottlenecks and limited IOPS when accessing graph indexes on disks, resulting in single-disk hot-spotting issues. This makes it difficult to exploit the true strength of DiskANN. The distributed file system used by Tablestore does not have this problem, because all I/O is discrete, and there will be no hot spots, which solves the I/O bottleneck problem of DiskANN.

In addition, Tablestore built a self-managed, controllable BlockCache system on top of Apsara Distributed File System to cache index files. It uses efficient caching mechanisms and data prefetching strategies to reduce I/O and improve overall performance. By contrast, with the traditional MMAP loading method based on local disks, resource usage is hard to control, which makes isolation and management inconvenient and causes cache contention and pollution problems.
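The difference from MMAP is that an application-level cache has an explicit capacity and eviction policy. The sketch below shows one common way to build such a cache, a fixed-size LRU keyed by block ID. It is only an illustration of the idea: the source does not describe the internal design of Tablestore's BlockCache, and the class, the `read_block` callback, and the LRU policy here are all assumptions.

```python
from collections import OrderedDict

class BlockCache:
    """Hypothetical fixed-capacity LRU cache for index file blocks."""

    def __init__(self, capacity_blocks, read_block):
        self.capacity = capacity_blocks
        self.read_block = read_block      # callback that loads a block from storage
        self.blocks = OrderedDict()       # block_id -> data, oldest first
        self.hits = 0
        self.misses = 0

    def get(self, block_id):
        if block_id in self.blocks:
            self.blocks.move_to_end(block_id)   # mark as most recently used
            self.hits += 1
            return self.blocks[block_id]
        self.misses += 1
        data = self.read_block(block_id)
        self.blocks[block_id] = data
        if len(self.blocks) > self.capacity:
            self.blocks.popitem(last=False)     # evict the least recently used block
        return data

# Stand-in for a remote read; a real system would fetch from the file system.
cache = BlockCache(capacity_blocks=2, read_block=lambda block_id: f"block-{block_id}")
for block_id in [1, 2, 1, 3, 2]:
    cache.get(block_id)
print(cache.hits, cache.misses, list(cache.blocks))
```

Because the capacity is explicit, memory use is bounded and can be accounted for per tenant or per index, which is hard to do when the operating system's page cache decides what stays resident.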

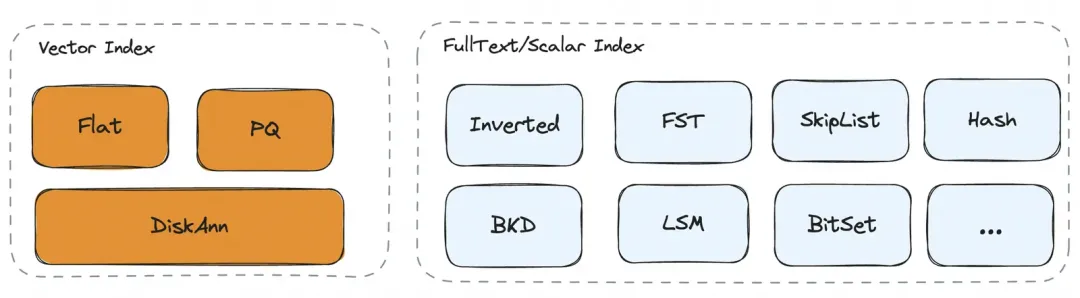

Tablestore vector retrieval supports multiple indexes: Flat, PQ, DiskANN, and inverted indexes. These index types do not need to be selected by users. The optimal solution is adaptively selected based on the user's write mode and data scale. This implements efficient vector retrieval, greatly reduces the user's troubles in parameter selection, and avoids the problems of insufficient performance or low recall rate caused by parameter errors. Whether it is a small-scale data volume application with high real-time requirements or a large-scale data volume application that is sensitive to storage and computing resources, our solution can provide excellent retrieval capabilities.

• Flat: The simplest and most direct index. It stores the original vectors of users and enables data to be retrieved later by using linear brute force scanning. This method is only suitable for small-scale data. Although the time complexity is higher, it can provide 100% accurate results when the data volume is small.

• PQ (Product Quantization): PQ can compress high-dimensional data into low-dimensional features, significantly reducing memory usage and speeding up retrieval through quantization. Especially when processing large-scale data, PQ can more effectively balance accuracy and performance.

• DiskANN: An index designed for large-scale data. It stores vectors on disk and implements approximate nearest neighbor search through efficient graph indexing and clustering algorithms. This method greatly reduces memory usage and improves the retrieval speed, which is especially suitable for scenarios with large-scale data volume.

• Scalar index: An index used to query scalar data that is not part of a vector. The search index of Tablestore has outstanding capabilities in traditional scalar index scenarios. It supports various types of indexes, such as strings, numbers, dates, geographic locations, arrays, and nested JSON indexes. It can perform a variety of functions, such as queries based on columns, Boolean queries, geo queries, full-text search, fuzzy queries, prefix queries, nested queries, deduplication, and sorting.
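To make the PQ entry in the list above more concrete, here is a minimal sketch of product quantization. It assumes tiny hand-written codebooks (real codebooks are trained, typically with K-means on each subspace), and the function names are illustrative. Each vector is split into sub-vectors, and each sub-vector is replaced by the index of its nearest codeword, so only a few small integers are stored per vector.

```python
import math

def split(vec, m):
    """Split a vector into m equal-length sub-vectors."""
    step = len(vec) // m
    return [tuple(vec[i * step:(i + 1) * step]) for i in range(m)]

def encode(vec, codebooks):
    """Replace each sub-vector with the index of its nearest codeword."""
    codes = []
    for sub, book in zip(split(vec, len(codebooks)), codebooks):
        codes.append(min(range(len(book)), key=lambda j: math.dist(sub, book[j])))
    return codes

def decode(codes, codebooks):
    """Rebuild an approximate vector from the stored codes."""
    return [x for code, book in zip(codes, codebooks) for x in book[code]]

def approx_distance(query, codes, codebooks):
    """Distance between an uncompressed query and a compressed vector."""
    total = 0.0
    for sub, code, book in zip(split(query, len(codebooks)), codes, codebooks):
        total += sum((a - b) ** 2 for a, b in zip(sub, book[code]))
    return math.sqrt(total)

# Two subspaces, four hypothetical codewords each.
book = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]
codebooks = [book, book]

vector = (0.1, 0.9, 0.8, 0.2)
codes = encode(vector, codebooks)
print(codes, decode(codes, codebooks))
print(approx_distance((0.0, 1.0, 1.0, 0.0), codes, codebooks))
```

The four floats are stored as two small codes, at the price of a reconstruction error, which is the accuracy and memory trade-off that PQ makes.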

Tablestore has high write throughput for vector indexes. This capability is supported by different compaction policies and remote compaction capabilities.

The Tablestore vector index uses different index compaction policies, which are selected automatically for different scales and scenarios, so users do not need to worry about parameter selection. These policies reduce write amplification in the LSM-tree architecture, which improves write throughput and saves user costs.

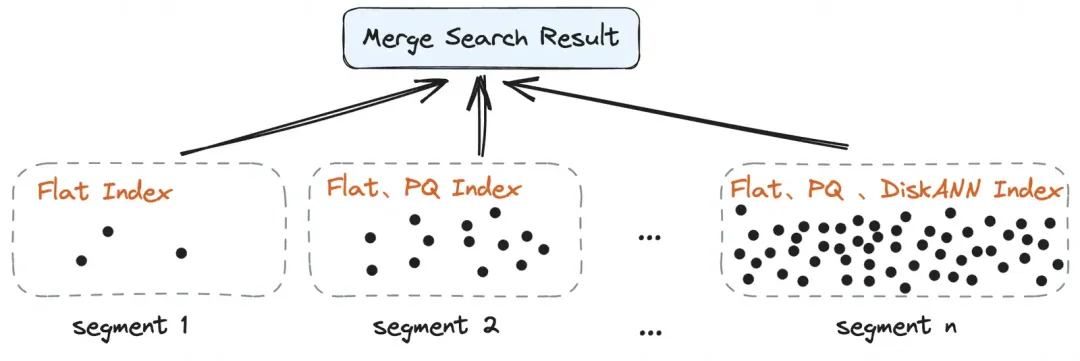

• If the data volume is small, only the Flat index is built.

• If the data volume is medium, only Flat and PQ indexes are built.

• If the data volume is large, multiple indexes such as Flat, PQ, and DiskANN are built.

• Compaction in the background: multiple indexes such as Flat, PQ, and DiskANN are built.

Each cluster has an independent resource group for remote compaction, so vector index compaction tasks can be assigned to remote nodes for execution. Compaction of vector indexes is a CPU-intensive task, and local compute nodes cannot devote all of their CPU resources to building vector indexes. Otherwise, regular writes and queries are affected and the stability of the compute nodes suffers. Executing vector compaction remotely is safer and more flexible. The compaction resources for vector indexes can also be separated from the resources of compute nodes to enable auto scaling and multi-tenant sharing, which improves compaction performance and reduces compaction costs.

By using remote compaction, the following significant benefits are achieved:

Within an index, data is organized into segments, which are the smallest unit. As described above, the vector retrieval service builds indexes adaptively, so the vector index types can differ from segment to segment. When the data volume is small, only the Flat index over the original vectors is available. When the data volume is relatively large, a PQ index is built in addition. When the data volume is large, a DiskANN index is built as well. Therefore, at query time, the index is selected per segment based on which indexes exist, and existing indexes are given priority. If all indexes exist, the choice is made dynamically based on the user's query mode. For more information, please refer to the Hybrid Query Optimization section below.
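The per-segment behavior can be sketched as two small functions. The size thresholds and the preference order below are hypothetical values chosen for illustration; the source does not publish the actual cutoffs or the dynamic selection logic used by Tablestore.

```python
def indexes_for_segment(num_vectors, pq_threshold=10_000, diskann_threshold=1_000_000):
    """Index types built for a segment, by size (thresholds are hypothetical)."""
    built = ["Flat"]                      # the original vectors are always kept
    if num_vectors >= pq_threshold:
        built.append("PQ")
    if num_vectors >= diskann_threshold:
        built.append("DiskANN")
    return built

# Assumed preference when no query-specific information is available.
PREFERENCE = ("DiskANN", "PQ", "Flat")

def pick_index(available):
    """Pick the first preferred index type that exists in the segment."""
    for name in PREFERENCE:
        if name in available:
            return name
    raise ValueError("segment has no vector index")

for size in (500, 50_000, 5_000_000):
    built = indexes_for_segment(size)
    print(size, built, "->", pick_index(built))
```

A query fans out to every segment and each segment answers with whatever index it has, which is why small, freshly written segments can serve queries before any expensive index has been built.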

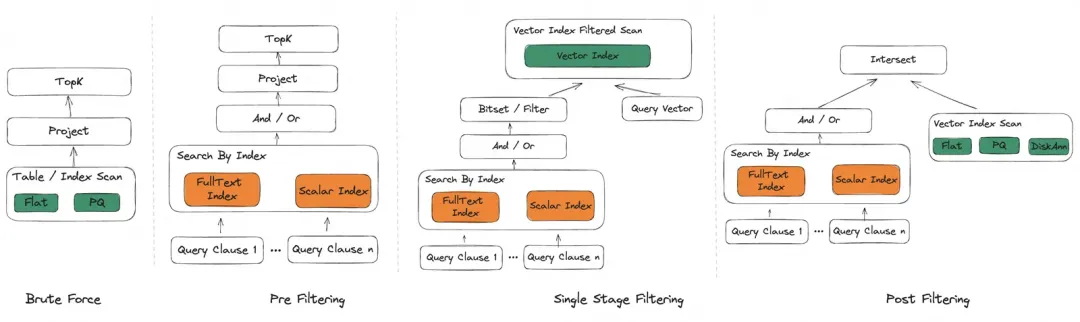

Tablestore allows you to query scalars and vectors at the same time. It provides various query policies to improve user experience and reduce usage complexity. The system analyzes the index characteristics, data scale, query hit scale, and query mode, so as to adaptively select the optimal query policy. The following four query policies are supported:

• Brute Force: linear brute force scanning.

• Pre Filtering: pre-filtering scalar.

• Post Filtering: post-filtering scalar.

• Single Stage Filtering: traversing the graph index while filtering the scalar.
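The difference between pre-filtering and post-filtering in the list above can be shown with a small example. This is a sketch under simplifying assumptions: the rows are hypothetical, and a brute-force scan stands in for the approximate vector index, so only the order of the filtering and ranking steps is being illustrated.

```python
import math

# Hypothetical rows: a scalar column plus a 2D vector.
ROWS = [
    {"id": 1, "category": "shoes", "vec": (0.1, 0.0)},
    {"id": 2, "category": "hats", "vec": (0.2, 0.0)},
    {"id": 3, "category": "hats", "vec": (0.3, 0.0)},
    {"id": 4, "category": "shoes", "vec": (0.4, 0.0)},
    {"id": 5, "category": "hats", "vec": (0.5, 0.0)},
    {"id": 6, "category": "shoes", "vec": (0.6, 0.0)},
]

def top_k(query, rows, k):
    """Brute-force nearest neighbours; stands in for a vector index lookup."""
    return sorted(rows, key=lambda r: math.dist(query, r["vec"]))[:k]

def pre_filter(query, rows, predicate, k):
    """Apply the scalar filter first, then rank only the matching rows."""
    return top_k(query, [r for r in rows if predicate(r)], k)

def post_filter(query, rows, predicate, k, oversample=1):
    """Rank by vector first, then drop rows that fail the scalar filter."""
    candidates = top_k(query, rows, k * oversample)
    return [r for r in candidates if predicate(r)][:k]

def is_shoes(row):
    return row["category"] == "shoes"

query = (0.0, 0.0)
pre_ids = [r["id"] for r in pre_filter(query, ROWS, is_shoes, k=2)]
post_ids = [r["id"] for r in post_filter(query, ROWS, is_shoes, k=2)]
post_ids_wide = [r["id"] for r in post_filter(query, ROWS, is_shoes, k=2, oversample=2)]
print(pre_ids, post_ids, post_ids_wide)
```

Post-filtering without oversampling returns fewer than `k` rows when the filter is selective, while pre-filtering always fills the result but has to rank every matching row. This trade-off is why the best policy depends on how many rows the scalar filter hits.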

| Product | Tablestore | An open-source vector database | An open-source search engine |
|---|---|---|---|
| top10 recall@99.5 | QPS: 2000 Latency: 23ms | QPS: 359.31 Latency: 66.5ms | QPS: 244.81 Latency: 98.40ms |

You can use the Tablestore vector retrieval service through the console or SDK.

• Console: Log on to the Tablestore console, create instances and tables, and then create a search index that contains vector fields. After data is imported through the console, you can use the search function in the index management interface to query data online. For more information, please refer to Introduction and Usage of Vector Retrieval.

• SDK: You can use the vector retrieval feature by using the Java SDK, Go SDK, Python SDK, or Node.js SDK. For more information, please refer to Introduction and Usage of Vector Retrieval.

If you want to convert text into a vector by using the word embedding model, please refer to the following documentation:

• Use Open-source Models to Convert Tablestore Data into Vectors

• Use Cloud Services to Convert Tablestore Data into Vectors

*Redis is a registered trademark of Redis Ltd. Any rights therein are reserved to Redis Ltd. Any use by Alibaba Cloud is for referential purposes only and does not indicate any sponsorship, endorsement or affiliation between Redis and Alibaba Cloud.

Disclaimer: The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
