By Wang Shaoxuan (Dasha) and Xiao Yunfeng (Hechong), senior technical experts at Alibaba DAMO Academy's System AI laboratory

What retrieval technology is behind Taobao's search and recommendation and behind video search? What problems do unstructured data retrieval, vector retrieval, and multi-modal retrieval solve?

In this article, we invited scientists at Alibaba DAMO Academy to address these questions and explain some of DAMO Academy's internal technologies. In particular, we discuss the vector retrieval engine Proxima and describe the current state, challenges, and future of vector retrieval.

Artificial Intelligence (AI) is a field that dates back almost to the invention of the computer. One of its core goals is to make computers work like the human brain. Using mathematical tools such as probability theory, statistics, and linear algebra, researchers analyze and design algorithms that allow computers to learn automatically.

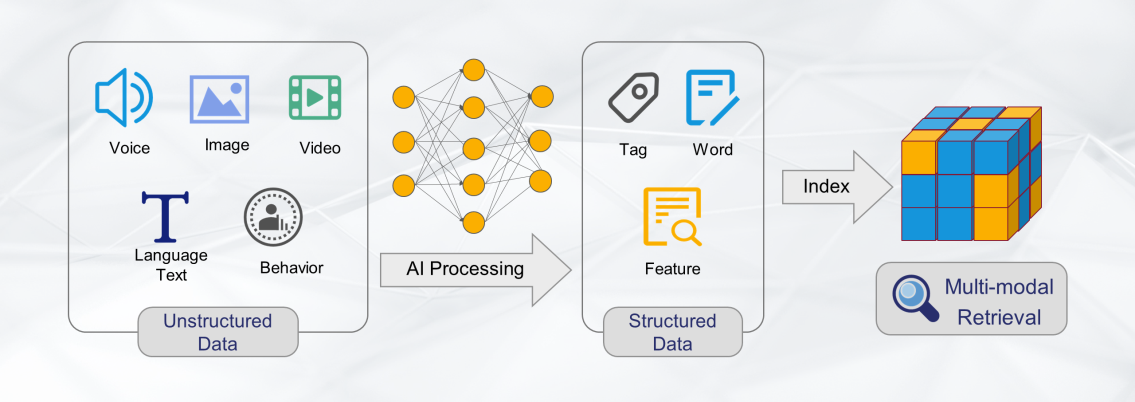

AI algorithms can abstract all kinds of unstructured data, such as speech, pictures, videos, language, human behavior, and objects and scenes in the physical world, into multidimensional vectors. Like coordinates in a mathematical space, these vectors capture the relationships between entities. This process is called embedding, as shown in the following figure. Unstructured retrieval means searching these vectors to find the corresponding entities.

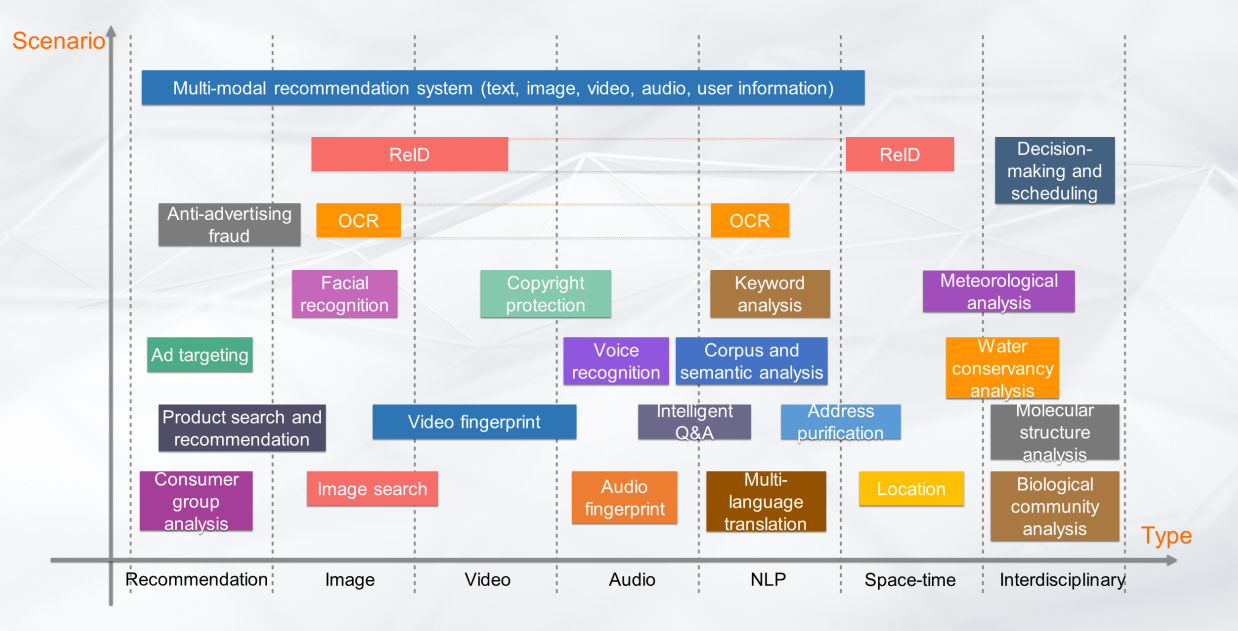

Unstructured retrieval is essentially vector retrieval. Its main applications include image recognition, recommendation systems, image search, video fingerprinting, speech processing, natural language processing, and file search. With the widespread use of AI and the continuous growth of data, vector retrieval has become an indispensable part of the AI pipeline, and its multi-modal search capability complements traditional search technology.

Vector retrieval was first applied to the most common kinds of unstructured data that people deal with: speech, images, and videos. Traditional search engines index only the names and descriptions of such multimedia files; they do not interpret or index the content itself. As a result, their retrieval results are quite limited.

Advances in AI now make it possible to analyze unstructured data quickly and at low cost, so unstructured data can be retrieved directly, and vector retrieval is a key part of that process.

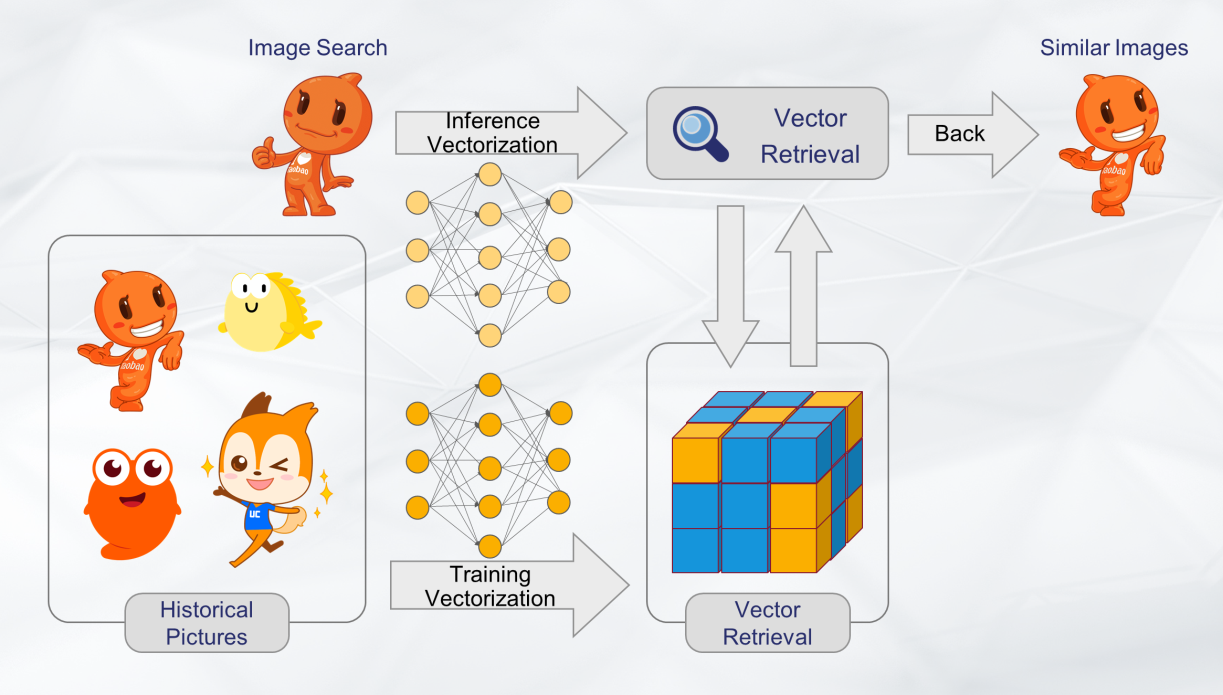

The following figure uses image search as an example. First, all historical images are analyzed offline with machine learning models. Each image (or each figure within an image) is abstracted into a multidimensional feature vector, and these vectors are then organized into efficient vector indexes.

When a new query image arrives, it is analyzed with the same machine learning method to produce a feature vector, which is then used to find the most similar results in the previously built vector index. This completes a retrieval based on image content.
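The offline/online flow above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not DAMO Academy's pipeline: `extract_features` is a hypothetical stand-in for a trained model (it only computes a normalized grayscale histogram from a flat list of pixel values), and the "index" is a plain list that is scanned exhaustively rather than an ANN structure.

```python
import math

def extract_features(pixels, bins=4):
    """Hypothetical stand-in for a learned image model: a unit-length
    histogram of grayscale pixel values in [0, 255]."""
    hist = [0.0] * bins
    for p in pixels:
        hist[min(p * bins // 256, bins - 1)] += 1
    norm = math.sqrt(sum(h * h for h in hist)) or 1.0
    return [h / norm for h in hist]

def build_index(images):
    """Offline step: embed every historical image once."""
    return [(image_id, extract_features(pixels)) for image_id, pixels in images.items()]

def search(index, query_pixels, k=1):
    """Online step: embed the query with the SAME extractor, then rank the
    indexed images by cosine similarity (dot product of unit vectors)."""
    q = extract_features(query_pixels)
    scored = [(sum(a * b for a, b in zip(q, vec)), image_id) for image_id, vec in index]
    scored.sort(reverse=True)
    return [image_id for _, image_id in scored[:k]]

images = {
    "dark": [10, 20, 30, 40, 50],
    "bright": [200, 210, 220, 230, 240],
    "mixed": [10, 100, 150, 250, 60],
}
index = build_index(images)
print(search(index, [15, 25, 35, 45]))  # ['dark']
```

The essential design point is that queries and indexed images pass through the same feature extractor; otherwise their vectors would not be comparable.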

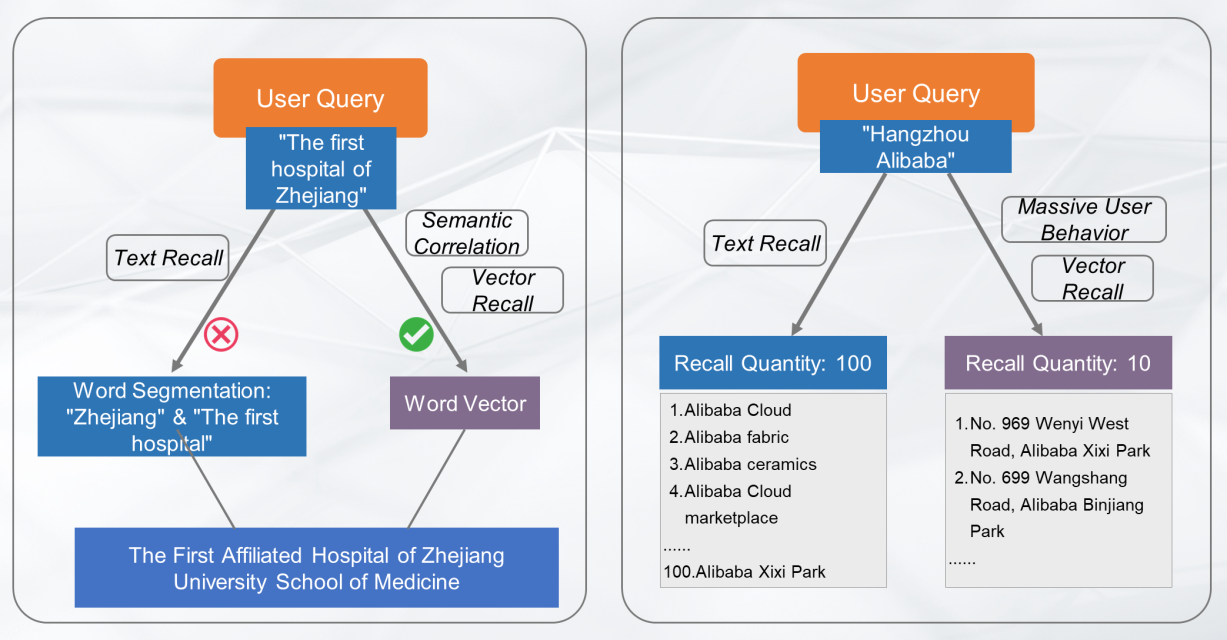

Vector retrieval has also long been used in ordinary full-text search. Address retrieval is a good example of how important vector retrieval is in text retrieval.

In the example on the left of the following figure, a user searches the standard address library for "The first hospital of Zhejiang". That string does not appear in the library, because the standard address of this hospital is "The First Affiliated Hospital of Zhejiang University School of Medicine". Searching for "The first hospital" and "Zhejiang" separately does not find the right entry either. However, by analyzing historical language usage, and even past click associations, a semantic correlation model can be built that represents every address as a high-dimensional feature vector. In that space, "The first hospital of Zhejiang" and "The First Affiliated Hospital of Zhejiang University School of Medicine" are closely related, so the query retrieves the correct address.

The example on the right side of the figure is also about address retrieval. If a user searches the standard address library for "Hangzhou Alibaba", text recall alone almost never finds a similar result. By analyzing the click behavior of a large number of users and combining it with the address text, high-dimensional vectors can be built so that the most-clicked addresses are recalled and ranked first.

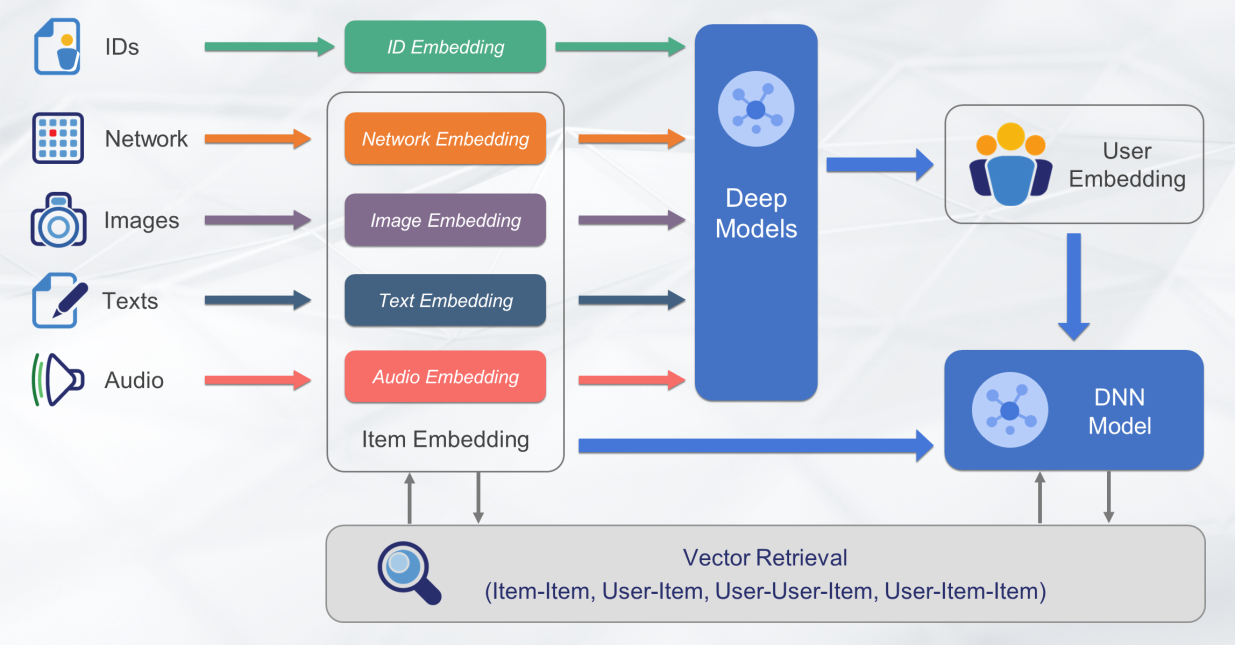

In e-commerce scenarios such as search, recommendation, and advertising, a common requirement is to find similar products of the same type and recommend them to users. Most of these requirements are met through item-based and user-based collaborative filtering. The new generation of search and recommendation systems incorporates deep learning embeddings and achieves fast retrieval through vector recall methods, including Item-Item (i2i), User-Item (u2i), User-User-Item (u2u2i), and User-Item-Item (u2i2i).

By abstracting users' browsing and purchasing behavior, along with the similarities and correlations between items, algorithm engineers represent items as high-dimensional feature vectors and store them in a vector engine. Similar items for a given item (i2i) can then be retrieved from the vector engine quickly and efficiently.
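A minimal sketch of i2i and u2i2i recall, assuming item vectors already exist. The item names and 2-D vectors are made up for illustration, similarity is cosine, an exhaustive scan stands in for the vector engine, and the function names are hypothetical.

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def i2i(item_vectors, seed, k=2):
    """Item-to-item recall: the k items most similar to the seed item."""
    scores = [(cosine(item_vectors[seed], vec), item)
              for item, vec in item_vectors.items() if item != seed]
    scores.sort(reverse=True)
    return scores[:k]

def u2i2i(item_vectors, user_history, k=2):
    """User-item-item recall: expand every item the user interacted with via i2i,
    keep the best score per candidate, and skip items the user has already seen."""
    best = {}
    for seen in user_history:
        for score, item in i2i(item_vectors, seen, k):
            if item not in user_history:
                best[item] = max(score, best.get(item, -1.0))
    return sorted(best, key=best.get, reverse=True)[:k]

items = {  # hypothetical item embeddings
    "phone": [1.0, 0.1],
    "phone_case": [0.9, 0.2],
    "charger": [0.8, 0.3],
    "sofa": [0.1, 1.0],
    "lamp": [0.3, 0.9],
}
print([item for _, item in i2i(items, "phone")])  # ['phone_case', 'charger']
print(u2i2i(items, ["phone", "sofa"]))            # ['phone_case', 'lamp']
```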

In fact, the vector retrieval scenarios are far more diverse than the preceding types. The following figure covers most business scenarios where AI can be applied.

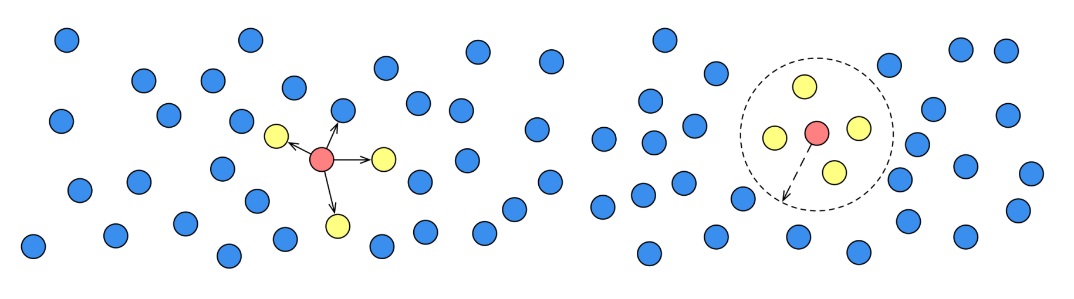

At its core, vector retrieval solves the k-Nearest Neighbor (KNN) and Radius Nearest Neighbor (RNN) problems. KNN finds the K points closest to the query point, while RNN finds all points (or N points) within a specified radius of the query point. For large datasets, solving KNN or RNN exactly is expensive, so Approximate Nearest Neighbor (ANN) search is used instead. In short, retrieval over large amounts of data comes down to solving the ANN problem.
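The exact versions of both problems, and the recall metric commonly used to judge an ANN method against them, can be sketched by brute force. This assumes Euclidean distance, and `knn`, `rnn`, and `recall_at_k` are illustrative names. Scanning every point costs O(n·d) per query, which is exactly the cost ANN methods try to avoid at scale.

```python
import math

def knn(data, query, k):
    """Exact k-nearest neighbors by scanning every point."""
    order = sorted(range(len(data)), key=lambda i: math.dist(data[i], query))
    return order[:k]

def rnn(data, query, radius, limit=None):
    """Exact radius search: all points within `radius`, nearest first,
    optionally truncated to the first `limit` points."""
    hits = [i for i in range(len(data)) if math.dist(data[i], query) <= radius]
    hits.sort(key=lambda i: math.dist(data[i], query))
    return hits if limit is None else hits[:limit]

def recall_at_k(approx_ids, exact_ids):
    """Fraction of the true top-k that an approximate method returned."""
    return len(set(approx_ids) & set(exact_ids)) / len(exact_ids)

points = [(0, 0), (1, 0), (0, 2), (3, 3), (5, 5)]
print(knn(points, (0, 0), 2))          # [0, 1]
print(rnn(points, (0, 0), 2.0))        # [0, 1, 2]
print(recall_at_k([0, 3], [0, 1]))     # 0.5
```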

Many algorithms have been proposed for the ANN problem. A commonly used one dates back to the KD-Tree, proposed in 1975. Working in Euclidean space, it uses a multidimensional binary tree to solve the ANN retrieval problem.
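A minimal KD-tree sketch, assuming Euclidean distance and small in-memory point lists. It splits on one coordinate per level and prunes a subtree whenever the splitting plane is farther away than the best match found so far. As written it returns the exact nearest neighbor; approximate variants typically cap how many nodes are checked during backtracking.

```python
import math

def build_kdtree(points, depth=0):
    """Recursively split on one coordinate per level, cycling through dimensions."""
    if not points:
        return None
    axis = depth % len(points[0])
    points = sorted(points, key=lambda p: p[axis])
    mid = len(points) // 2
    return {
        "point": points[mid],
        "axis": axis,
        "left": build_kdtree(points[:mid], depth + 1),
        "right": build_kdtree(points[mid + 1:], depth + 1),
    }

def nearest(node, query, best=None):
    """1-NN search: descend toward the query first, then visit the far subtree
    only if the splitting plane is closer than the current best match."""
    if node is None:
        return best
    point, axis = node["point"], node["axis"]
    if best is None or math.dist(query, point) < math.dist(query, best):
        best = point
    diff = query[axis] - point[axis]
    near, far = (node["left"], node["right"]) if diff < 0 else (node["right"], node["left"])
    best = nearest(near, query, best)
    if abs(diff) < math.dist(query, best):
        best = nearest(far, query, best)
    return best

tree = build_kdtree([(2, 3), (5, 4), (9, 6), (4, 7), (8, 1), (7, 2)])
print(nearest(tree, (9, 2)))  # (8, 1)
```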

In the late 1980s, ideas based on spatial coding and hashing emerged, mainly represented by fractal curves and Locality-Sensitive Hashing (LSH). Both belong to the family of spatial coding and transformation methods, which also includes Product Quantization (PQ). These quantization algorithms map a high-dimensional problem to a low-dimensional one, which improves retrieval efficiency.
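The sketch below shows the hashing idea for Euclidean data with p-stable (Gaussian) projections, h(v) = floor((a·v + b) / w). It is an illustration under assumed parameters (`num_tables`, `num_hashes`, and the bucket width `w` are arbitrary here), not a tuned implementation: nearby points tend to land in the same bucket, so only the colliding candidates need exact distance computation.

```python
import math
import random

class PStableLSH:
    """Euclidean LSH: h(v) = floor((a . v + b) / w), with a ~ N(0, I) and b ~ U[0, w).
    Each table concatenates `num_hashes` functions into one bucket key, and a point
    becomes a candidate if it shares a bucket with the query in ANY table."""

    def __init__(self, dim, num_tables=4, num_hashes=3, w=4.0, seed=0):
        rng = random.Random(seed)
        self.w = w
        self.points = []
        self.tables = []
        for _ in range(num_tables):
            funcs = [([rng.gauss(0.0, 1.0) for _ in range(dim)], rng.uniform(0.0, w))
                     for _ in range(num_hashes)]
            self.tables.append((funcs, {}))

    def _key(self, funcs, v):
        return tuple(math.floor((sum(ai * vi for ai, vi in zip(a, v)) + b) / self.w)
                     for a, b in funcs)

    def add(self, v):
        idx = len(self.points)
        self.points.append(v)
        for funcs, buckets in self.tables:
            buckets.setdefault(self._key(funcs, v), []).append(idx)

    def candidates(self, q):
        found = set()
        for funcs, buckets in self.tables:
            found.update(buckets.get(self._key(funcs, q), []))
        return found

    def query(self, q, k=1):
        # Only the colliding candidates are ranked exactly, not the whole dataset.
        return sorted(self.candidates(q), key=lambda i: math.dist(self.points[i], q))[:k]

rng = random.Random(7)
data = [[rng.uniform(0, 10) for _ in range(8)] for _ in range(500)]
lsh = PStableLSH(dim=8)
for v in data:
    lsh.add(v)
print(lsh.query(data[17]), len(lsh.candidates(data[17])))  # an indexed point always collides with itself
```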

At the beginning of the 21st century, methods that solve the ANN problem with neighbor graphs also emerged. They rest on the assumption that "a neighbor's neighbor is likely also a neighbor". The neighbor relationships in the dataset are established in advance to form a neighbor graph with certain properties. At query time, the search walks the graph, moving step by step toward points closer to the query until it converges on a result.
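A minimal sketch of neighbor-graph search, assuming a brute-force k-nearest-neighbor graph built offline and a single-layer best-first walk similar in spirit to one layer of HNSW (not the full hierarchical algorithm). The candidate-pool size `ef` and the grid data are assumptions for illustration.

```python
import heapq
import math

def build_knn_graph(points, degree):
    """Offline step: link each point to its `degree` nearest neighbors (brute force here)."""
    graph = {}
    for i, p in enumerate(points):
        others = sorted((j for j in range(len(points)) if j != i),
                        key=lambda j: math.dist(p, points[j]))
        graph[i] = others[:degree]
    return graph

def graph_search(points, graph, query, entry=0, ef=8, k=1):
    """Best-first walk: expand the closest unexpanded node, follow its neighbor links,
    and stop once that node is farther than the worst of the `ef` best results."""
    def dist(i):
        return math.dist(points[i], query)

    visited = {entry}
    candidates = [(dist(entry), entry)]   # min-heap: nodes still to expand
    results = [(-dist(entry), entry)]     # max-heap via negation: best `ef` nodes so far
    while candidates:
        d, node = heapq.heappop(candidates)
        if d > -results[0][0]:
            break                         # converged: nothing left can improve the results
        for nb in graph[node]:
            if nb in visited:
                continue
            visited.add(nb)
            nd = dist(nb)
            if len(results) < ef or nd < -results[0][0]:
                heapq.heappush(candidates, (nd, nb))
                heapq.heappush(results, (-nd, nb))
                if len(results) > ef:
                    heapq.heappop(results)
    return [i for _, i in sorted((-neg, i) for neg, i in results)][:k]

points = [(x, y) for x in range(10) for y in range(10)]
graph = build_knn_graph(points, degree=8)
print(graph_search(points, graph, (7.2, 3.1)))  # [73], i.e. grid point (7, 3)
```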

There are many vector retrieval algorithms, and none of them is universal: different algorithms suit different data dimensions and distributions. Broadly, they fall into three categories: spatial partitioning, spatial coding and transformation, and neighbor graphs. Spatial partitioning, represented by KD-Tree and clustering search, divides the data space into small subsets. At query time, the relevant subsets can be located quickly, which reduces the number of data points scanned and improves retrieval efficiency.
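Clustering search can be sketched as an inverted-file layout: k-means assigns each point to a centroid, and a query scans only the lists of its `nprobe` nearest centroids. This is an illustrative single-layer version with assumed parameters, not the multi-layer clustering used in production engines. Raising `nprobe` scans more points and moves the result toward the exact answer.

```python
import math
import random

def kmeans(points, k, iters=10, seed=0):
    """Plain Lloyd's k-means; returns k centroids."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: math.dist(p, centroids[c]))
            groups[nearest].append(p)
        # Move each centroid to its group mean; keep it in place if the group is empty.
        centroids = [tuple(sum(xs) / len(g) for xs in zip(*g)) if g else centroids[c]
                     for c, g in enumerate(groups)]
    return centroids

class ClusterIndex:
    """Each point is stored in the list of its nearest centroid; a query scans
    only the lists belonging to its `nprobe` closest centroids."""

    def __init__(self, points, num_clusters, seed=0):
        self.points = points
        self.centroids = kmeans(points, num_clusters, seed=seed)
        self.lists = [[] for _ in self.centroids]
        for i, p in enumerate(points):
            self.lists[self._closest_centroids(p, 1)[0]].append(i)

    def _closest_centroids(self, v, n):
        order = sorted(range(len(self.centroids)), key=lambda c: math.dist(v, self.centroids[c]))
        return order[:n]

    def search(self, query, k=1, nprobe=1):
        """Return (top-k ids, number of points scanned)."""
        scanned = [i for c in self._closest_centroids(query, nprobe) for i in self.lists[c]]
        top = sorted(scanned, key=lambda i: math.dist(self.points[i], query))[:k]
        return top, len(scanned)

rng = random.Random(1)
data = [(rng.random(), rng.random()) for _ in range(1000)]
index = ClusterIndex(data, num_clusters=16)
for nprobe in (1, 4, 16):
    ids, scanned = index.search((0.5, 0.5), k=5, nprobe=nprobe)
    print(nprobe, scanned, ids)
```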

Spatial coding and transformation methods, such as p-Stable LSH and PQ, re-encode or transform the dataset and map it into a smaller data space, which reduces the cost of computing over the scanned points. Neighbor graph methods include Hierarchical Navigable Small World (HNSW), Space Partition Tree and Graph (SPTAG), ONNG, and others. They build relationship graphs in advance so that retrieval converges faster and scans fewer data points.
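Product quantization can be sketched as follows, assuming vectors whose dimension is divisible by the number of subspaces `m`. Each sub-vector is replaced by the id of its nearest codebook centroid, and queries use asymmetric distance computation (ADC) through per-subspace lookup tables. To keep the sketch short, codebooks are sampled from the data instead of being trained with k-means, so accuracy is lower than in a real PQ index.

```python
import math
import random

def train_pq(vectors, m, ks, seed=0):
    """Give each of the m subspaces a codebook of ks centroids. For brevity the
    centroids are sampled from the data; real PQ trains them with k-means."""
    dim = len(vectors[0])
    assert dim % m == 0, "dimension must be divisible by m"
    sub = dim // m
    rng = random.Random(seed)
    return [rng.sample([tuple(v[j * sub:(j + 1) * sub]) for v in vectors], ks)
            for j in range(m)]

def encode(codebooks, v):
    """Replace each sub-vector with the index of its nearest centroid (m small ints)."""
    sub = len(v) // len(codebooks)
    return tuple(
        min(range(len(cb)), key=lambda c: math.dist(v[j * sub:(j + 1) * sub], cb[c]))
        for j, cb in enumerate(codebooks)
    )

def adc_search(codebooks, codes, query, k=1):
    """Build one query-to-centroid distance table per subspace; after that,
    each database distance costs only m table lookups."""
    sub = len(query) // len(codebooks)
    tables = [[math.dist(query[j * sub:(j + 1) * sub], c) ** 2 for c in cb]
              for j, cb in enumerate(codebooks)]
    approx = [sum(tables[j][code[j]] for j in range(len(code))) for code in codes]
    return sorted(range(len(codes)), key=lambda i: approx[i])[:k]

rng = random.Random(42)
vectors = [[rng.random() for _ in range(8)] for _ in range(300)]
codebooks = train_pq(vectors, m=4, ks=16)
codes = [encode(codebooks, v) for v in vectors]  # 4 small ints instead of 8 floats each
print(codes[0], adc_search(codebooks, codes, vectors[0], k=3))
```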

Over the course of vector retrieval's development, several excellent open-source products have emerged, such as the Fast Library for Approximate Nearest Neighbors (FLANN) and Facebook AI Similarity Search (Faiss). They provide unified, optimized implementations of commonly used and effective ANN algorithms and package them as runtime libraries that serve as engineering retrieval solutions. Building on and improving these libraries, the industry has also developed service-oriented engines such as Milvus and Vearch.

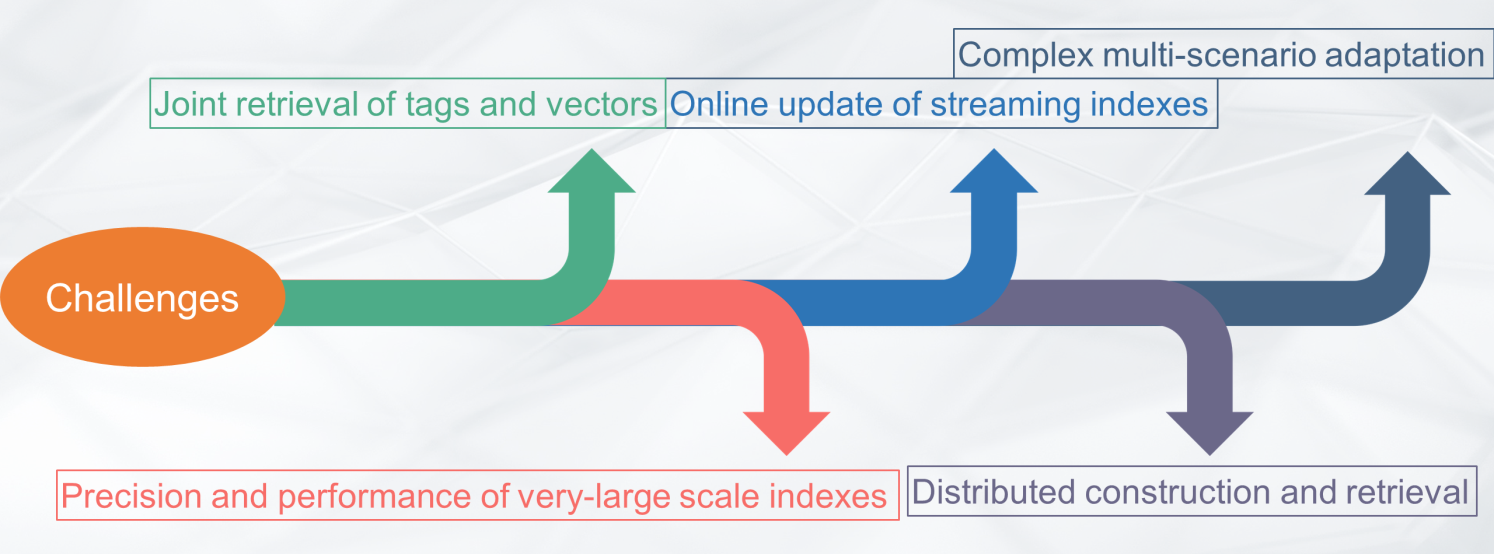

Although vector retrieval has been developed for many years and has gradually become the mainstream method of unstructured search, there are still many technical challenges and problems.

Because unstructured data is abundant and complex, vector retrieval must support large-scale data. However, many retrieval algorithms still struggle with hundreds of millions or even billions of vectors. Engineering implementations also face problems such as high index construction costs and low retrieval efficiency.

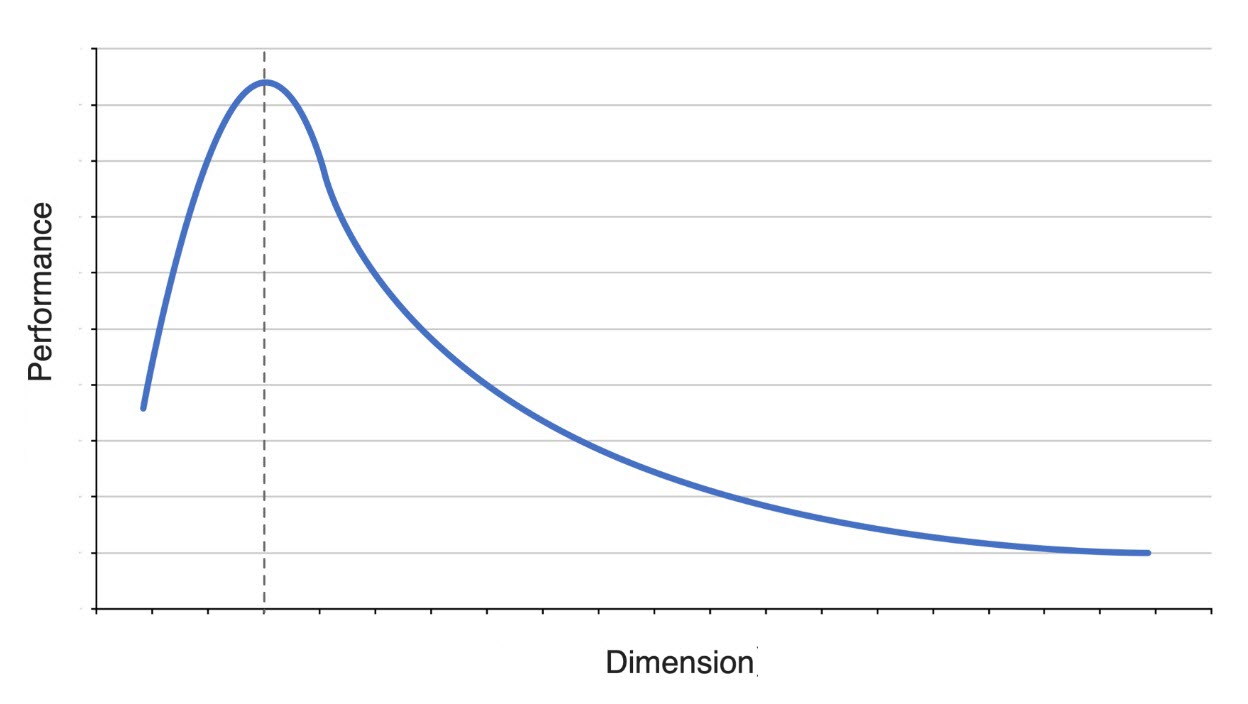

In addition, as dimensionality increases, some vector retrieval methods become less efficient, and some become ineffective in high-dimensional space altogether, which increases computation and storage costs in engineering. Second, the algorithms lack generality: no single algorithm retrieves consistently well across all data; that is, none is effective for every data distribution.
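The efficiency loss in high dimensions is often explained by distance concentration: for random data, the nearest and farthest points become almost equally distant, so partitioning and pruning discard fewer candidates. The sketch below illustrates this on uniform random data; it is a generic demonstration with assumed sizes, not a measurement of any particular engine.

```python
import math
import random

def contrast(dim, n=500, seed=0):
    """Ratio of the farthest to the nearest distance from one random query to
    n random points in the unit hypercube. It shrinks toward 1 as dim grows."""
    rng = random.Random(seed)
    points = [[rng.random() for _ in range(dim)] for _ in range(n)]
    query = [rng.random() for _ in range(dim)]
    dists = [math.dist(p, query) for p in points]
    return max(dists) / min(dists)

for dim in (2, 10, 100, 1000):
    print(dim, round(contrast(dim), 2))
```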

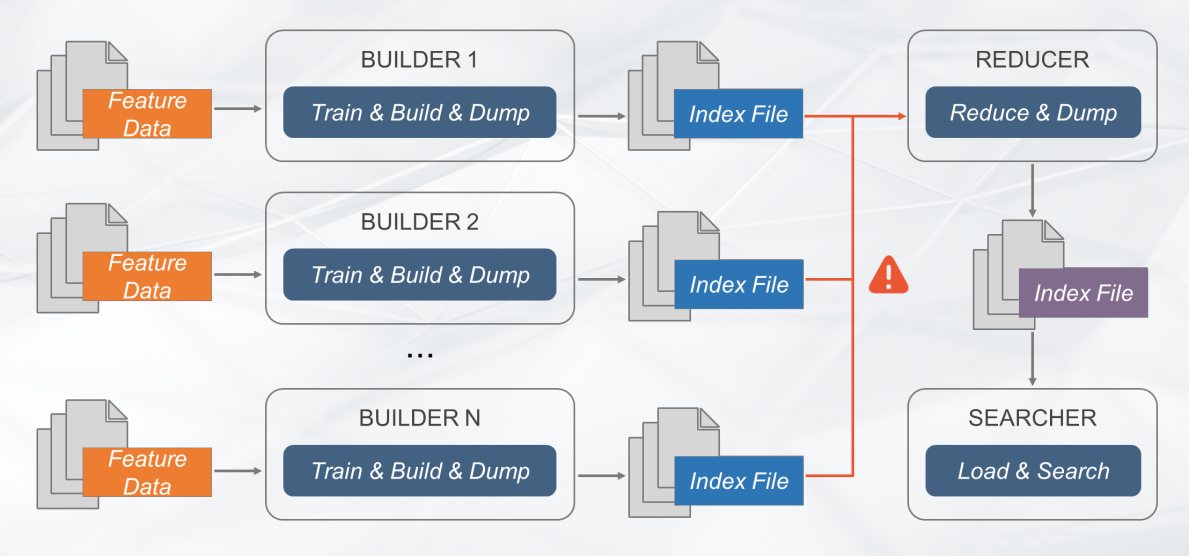

At present, the industry still cannot handle billions of high-dimensional vectors well. The data is often split across multiple indexes that are searched separately and then combined, which increases the actual computing cost.

Most vector retrieval systems today scale horizontally through data sharding. However, too many shards increase the computing workload and reduce retrieval efficiency. In distributed mode, vector indexes are still difficult to merge, so once data is sharded, the shards cannot be combined into a single, more efficient index through a MapReduce-style computation.
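The scatter-gather pattern behind sharded search can be sketched as follows, assuming hypothetical round-robin sharding and exact per-shard search. Each shard returns its own top-k and a coordinator merges the sorted lists, so the number of candidates transferred and merged grows linearly with the shard count even though only k are kept.

```python
import heapq
import math

def make_shards(vectors, num_shards):
    """Hypothetical round-robin sharding: vector i goes to shard i % num_shards."""
    shards = [[] for _ in range(num_shards)]
    for gid, vec in enumerate(vectors):
        shards[gid % num_shards].append((gid, vec))
    return shards

def search_shard(shard, query, k):
    """Top-k inside one shard as (distance, global_id) pairs, nearest first."""
    return heapq.nsmallest(k, ((math.dist(vec, query), gid) for gid, vec in shard))

def scatter_gather(shards, query, k):
    """Query every shard, then merge the per-shard sorted lists.
    Returns (global top-k ids, number of candidates the coordinator received)."""
    per_shard = [search_shard(s, query, k) for s in shards]
    merged = list(heapq.merge(*per_shard))[:k]
    return [gid for _, gid in merged], sum(len(r) for r in per_shard)

vectors = [(float(i), 0.0) for i in range(20)]
print(scatter_gather(make_shards(vectors, 4), (3.2, 0.0), k=3))  # ([3, 4, 2], 12)
```

With exact per-shard search the merged result equals the global top-k; with ANN inside each shard, the merged recall is bounded by the per-shard recall.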

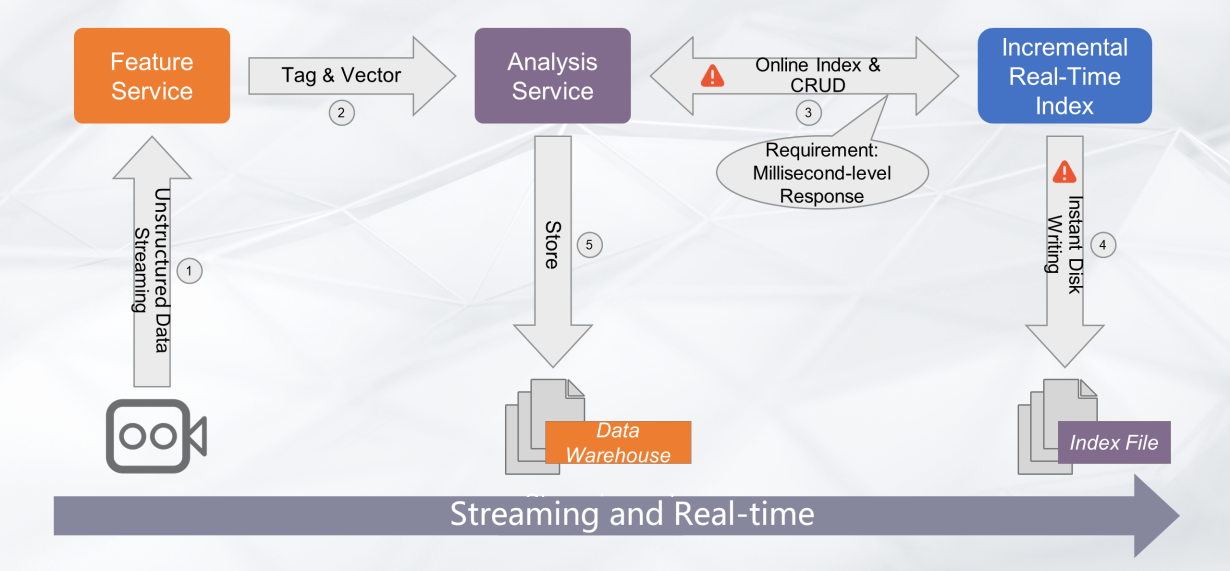

Create, Read, Update, and Delete (CRUD) operations are easy to implement with traditional retrieval methods. Vector retrieval, however, depends on the data distribution and the distance metric, and some methods also require training on the dataset, so even a small change to a single data point can cause large changes to the index. As a result, both the algorithms and the engineering still face challenges in building vector indexes from scratch in fully streaming mode, making newly added data searchable immediately, writing to disk instantly, and updating indexes dynamically in real time.

Currently, training-free retrieval methods can easily support online addition and querying for fully in-memory indexes. However, writing to disk immediately, coping with insufficient memory, and updating or deleting vectors online remain costly and cannot yet be done in real time.
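A minimal sketch of why training-free, fully in-memory indexes make online additions easy: a flat index needs no training, so a vector is searchable as soon as it is added. Deletions here use tombstones that are skipped at query time and reclaimed by a later compaction, a common technique assumed for illustration. The class and method names are hypothetical, and the hard parts mentioned above (disk persistence, updates inside graph or clustered structures) are not shown.

```python
import math

class StreamingFlatIndex:
    """Hypothetical training-free in-memory index with tombstone deletes."""

    def __init__(self):
        self.vectors = {}      # id -> vector
        self.deleted = set()   # tombstoned ids, skipped at query time

    def add(self, vid, vec):
        self.vectors[vid] = vec
        self.deleted.discard(vid)   # re-adding an id revives it

    def update(self, vid, vec):
        self.add(vid, vec)          # a flat index can simply overwrite in place

    def delete(self, vid):
        if vid in self.vectors:
            self.deleted.add(vid)

    def search(self, query, k=1):
        live = ((math.dist(v, query), vid) for vid, v in self.vectors.items()
                if vid not in self.deleted)
        return [vid for _, vid in sorted(live)[:k]]

    def compact(self):
        """Physically drop tombstoned vectors, e.g. in a background job."""
        for vid in self.deleted:
            self.vectors.pop(vid, None)
        self.deleted.clear()

index = StreamingFlatIndex()
index.add("a", (0.0, 0.0))
index.add("b", (1.0, 1.0))
print(index.search((0.1, 0.1)))  # ['a']
index.delete("a")
print(index.search((0.1, 0.1)))  # ['b']: the tombstoned vector is skipped
```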

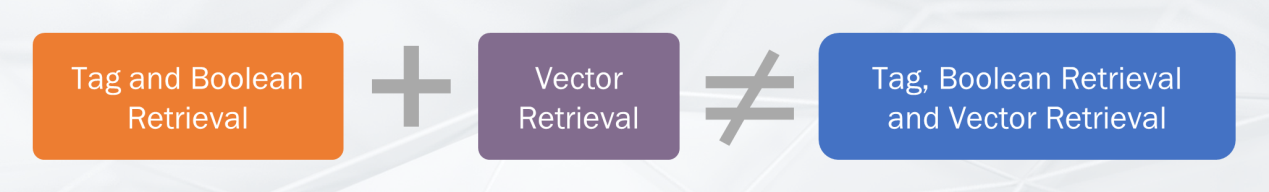

In most business scenarios, both tag retrieval and similarity retrieval are required, such as querying similar images under certain attribute conditions. This type of retrieval is called "vector retrieval with conditions".

Currently, the industry uses the K-way merge method: tags and vectors are retrieved separately, and the results are then merged. This solves some problems, but the results are unsatisfactory in most cases. The main reason is that vector retrieval has no precise scope; it only tries to make its Top-K results as accurate as possible. When K is large, accuracy may decline, so the merged results can be inaccurate or even empty.
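The failure mode can be reproduced with a toy example. `post_filter` mimics the merge approach by taking the vector Top-K first and then keeping only the items that carry the tag, while `filtered_scan` applies the tag condition during the scan. Both functions and the data are hypothetical illustrations, not the industry implementations referred to above.

```python
import math

def post_filter(items, query, tag, k):
    """Merge-style: vector Top-K first, then keep the items that have the tag.
    When matching items are rare, few or none survive."""
    top = sorted(items, key=lambda it: math.dist(it["vec"], query))[:k]
    return [it["id"] for it in top if tag in it["tags"]]

def filtered_scan(items, query, tag, k):
    """Apply the tag condition while scanning, so every result satisfies it
    (as long as at least k matching items exist)."""
    matching = (it for it in items if tag in it["tags"])
    return [it["id"] for it in sorted(matching, key=lambda it: math.dist(it["vec"], query))[:k]]

# 100 items on a line; only the 10 farthest from the query are tagged "red".
items = [{"id": i, "vec": (float(i),), "tags": {"red"} if i >= 90 else {"blue"}}
         for i in range(100)]
print(post_filter(items, (0.0,), "red", 10))    # []
print(filtered_scan(items, (0.0,), "red", 10))  # [90, 91, ..., 99]
```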

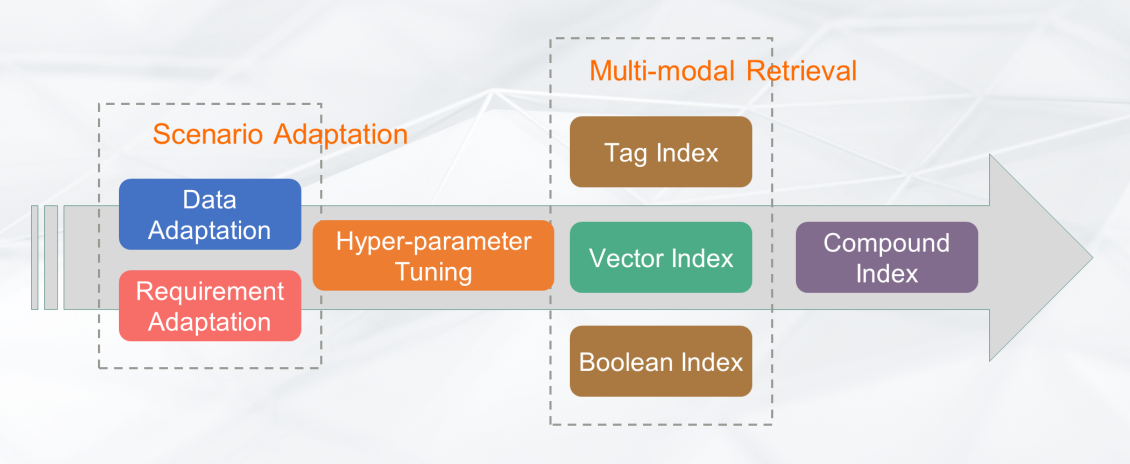

Although vector retrieval is widely applied, no algorithm yet adapts to every scenario and every dataset. Even the same algorithm may need different parameter settings for different data. For example, in multi-layer clustering search, the clustering algorithm, the number of layers and clusters, and the convergence threshold all vary across scenarios and datasets. This hyperparameter tuning greatly raises the barrier to entry for users.

To make vector retrieval easier to use, scenario adaptation must be considered, covering both data adaptation and requirement adaptation. Data adaptation concerns data scale, distribution, dimensionality, and so on. Requirement adaptation concerns recall rate, throughput, latency, streaming, real-time performance, and so on. Appropriate algorithms and parameters are then selected according to the data distribution to meet actual business needs.

Proxima is a proprietary vector retrieval kernel developed by Alibaba DAMO Academy. Its core capabilities are widely used across Alibaba Group and Ant Financial, in scenarios such as Taobao search and recommendation, Ant Financial facial payment, Youku video search, and Alimama advertising search. Proxima is also deeply integrated into a variety of big data and database products, such as Alibaba Cloud Hologres, Elasticsearch, ZSearch, and MaxCompute (formerly ODPS), to provide vector retrieval capabilities.

Proxima is a general-purpose vector retrieval engine. It delivers high-performance similarity search over big data on multiple hardware platforms, including ARM64, x86, and GPU. It runs on both embedded devices and high-performance servers, spanning edge computing to cloud computing, and it supports high-accuracy, high-performance construction and retrieval of a single index containing billions of vectors.

As shown in the preceding figure, Proxima has the following core features:

Faiss (Facebook AI Similarity Search), a vector retrieval library developed by the Facebook AI team, is commonly used in the industry. Faiss is excellent and forms the core of many service-based engines. However, it still has limitations in large-scale, general-purpose retrieval scenarios, such as real-time streaming computing, offline distributed computing, online heterogeneous acceleration, joint retrieval of tags and vectors, cost control, and service deployment.

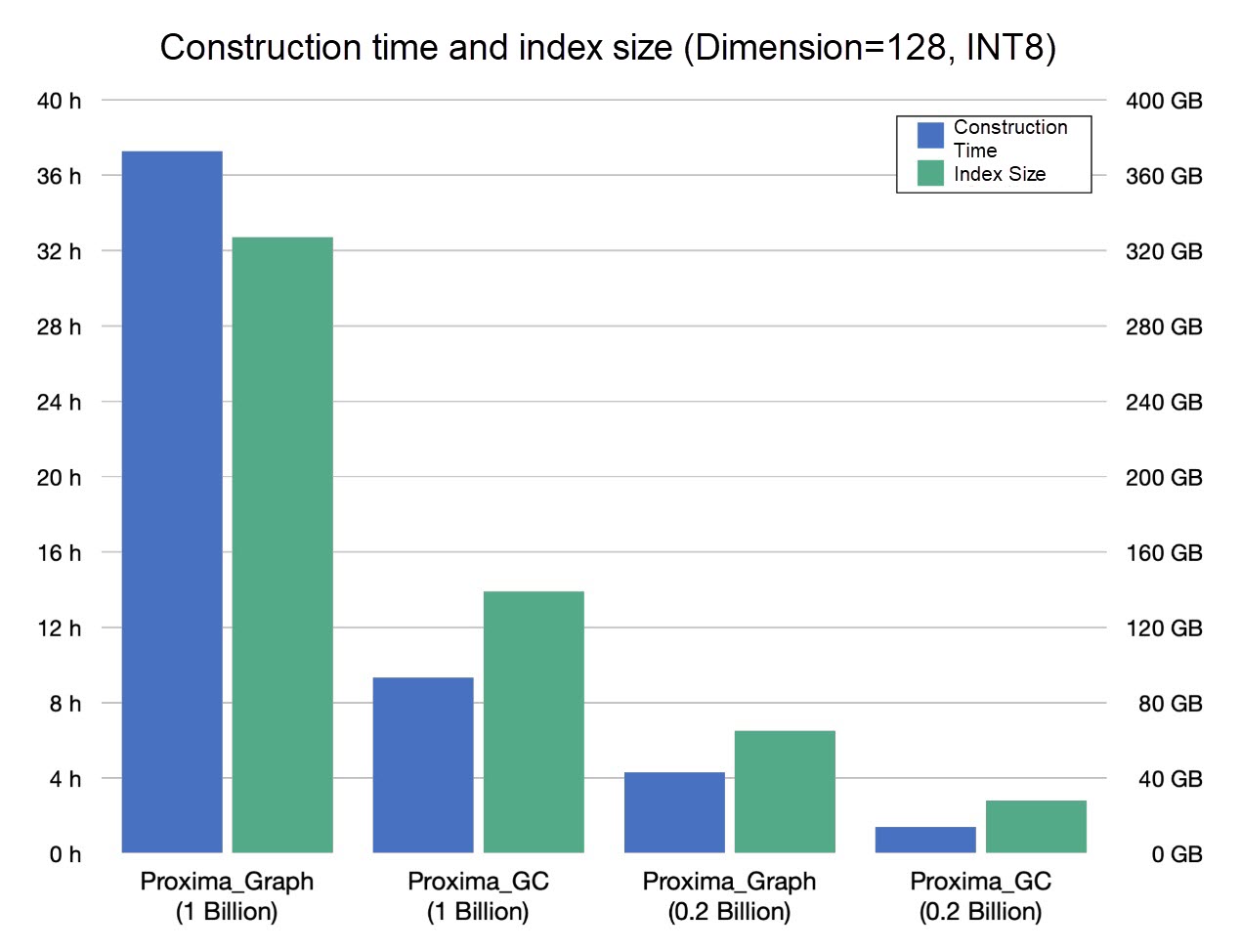

For example, with the public dataset ANN_SIFT1B (from corpus-texmex.irisa.fr), which contains one billion vectors, on a server with an Intel(R) Xeon(R) Platinum 8163 CPU and 512 GB of memory, Faiss requires more computing resources than are available to build and search an index over the full billion vectors. Under the same environment and data volume, Proxima can build and search the billion-vector index on a single machine.
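To put the billion scale in perspective, here is a back-of-the-envelope estimate of raw storage. It assumes the SIFT1B base set's 128 dimensions with one byte per component, a float32 conversion, and a hypothetical 16-byte compressed code per vector. It ignores index overhead and is not offered as the explanation of the Faiss result.

```python
def raw_vector_bytes(num_vectors, dim, bytes_per_component):
    """Storage for the raw vectors alone, ignoring any index structure."""
    return num_vectors * dim * bytes_per_component

GB = 10**9                                # decimal gigabytes
n, d = 10**9, 128                         # SIFT1B: one billion 128-dimensional vectors
print(raw_vector_bytes(n, d, 1) / GB)     # 128.0: one uint8 byte per component
print(raw_vector_bytes(n, d, 4) / GB)     # 512.0: converted to float32
print(raw_vector_bytes(n, 16, 1) / GB)    # 16.0: hypothetical 16-byte code per vector
```

The float32 figure alone matches the server's 512 GB of memory before any graph links, centroids, or working buffers are counted, which is why compression and partially in-memory layouts matter at this scale.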

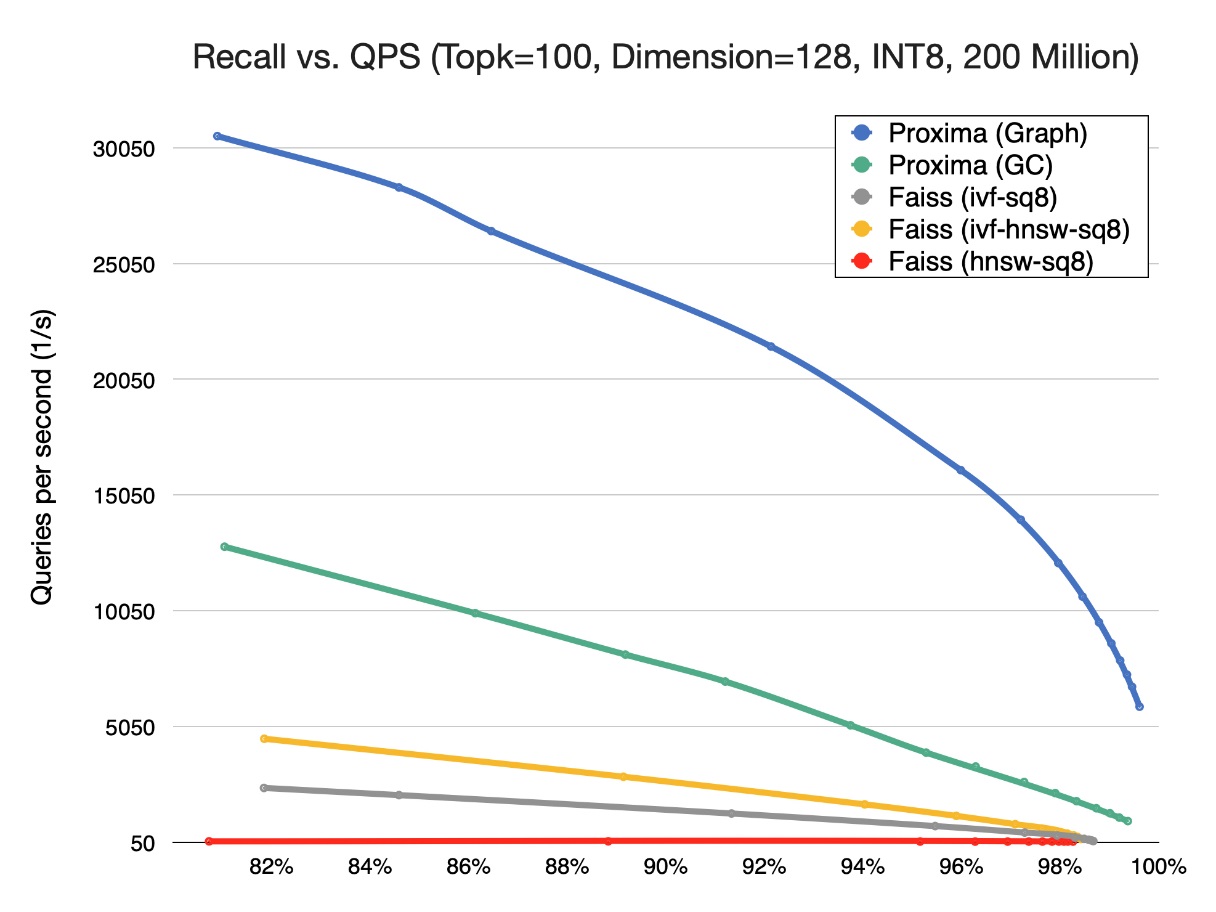

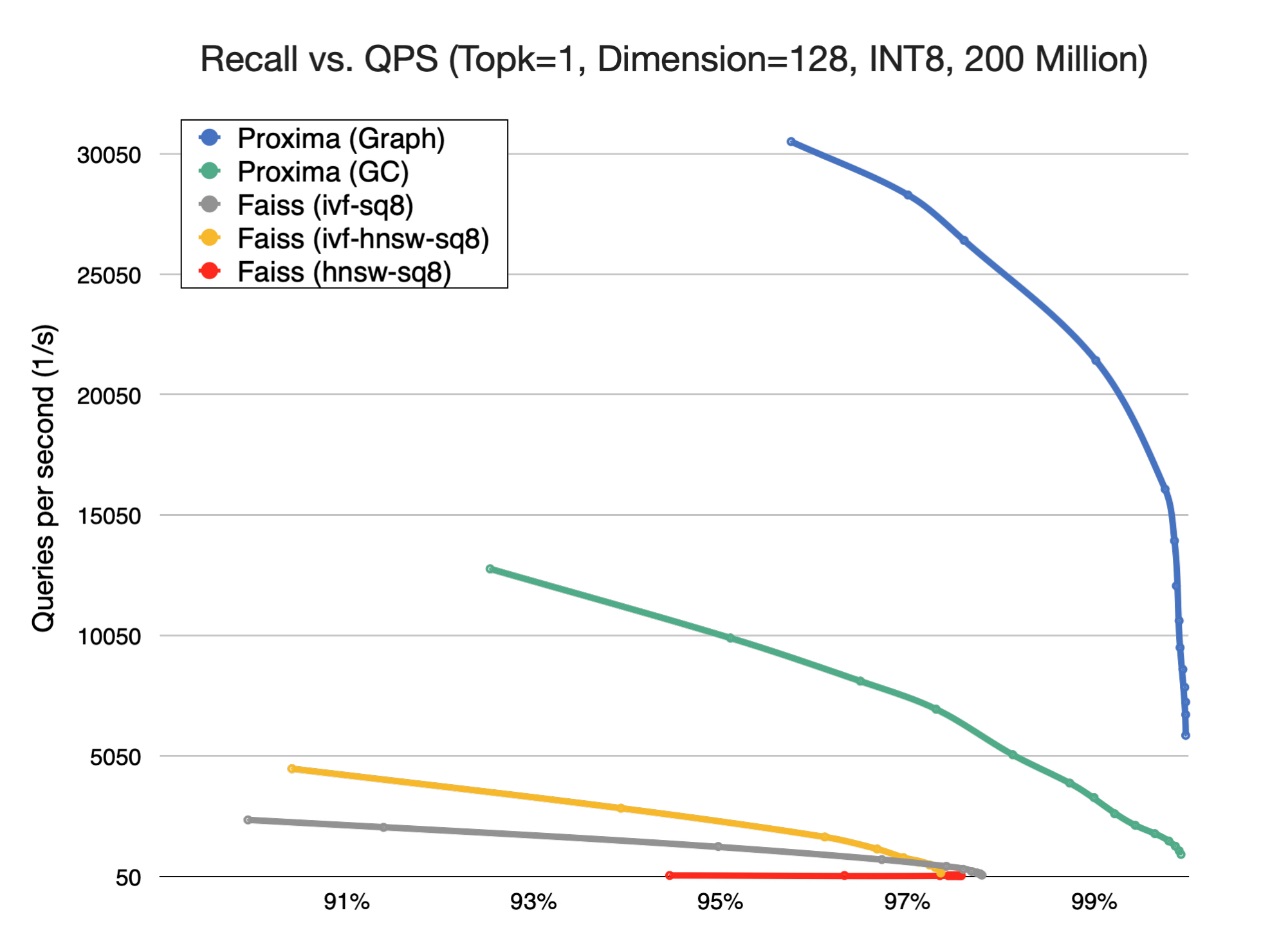

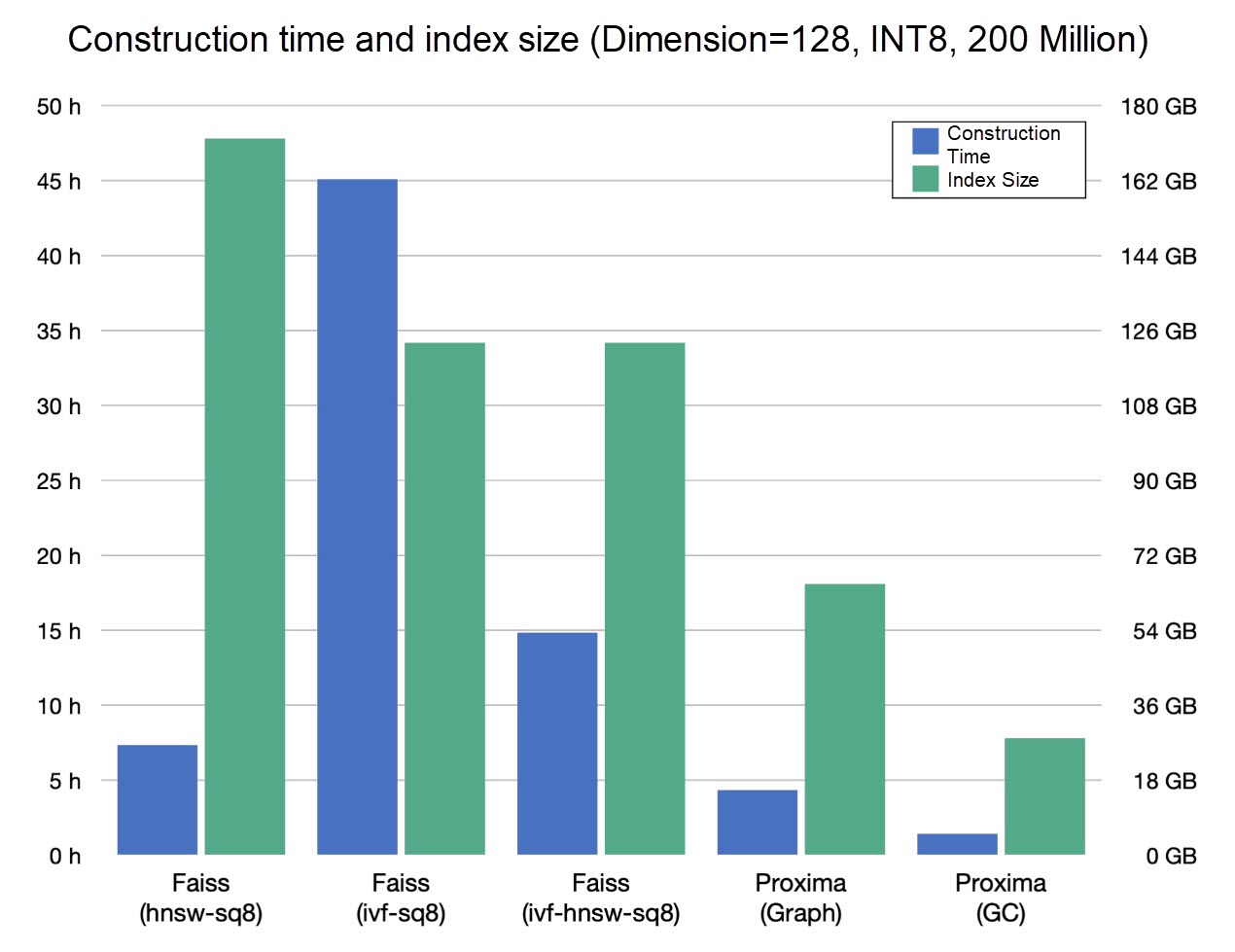

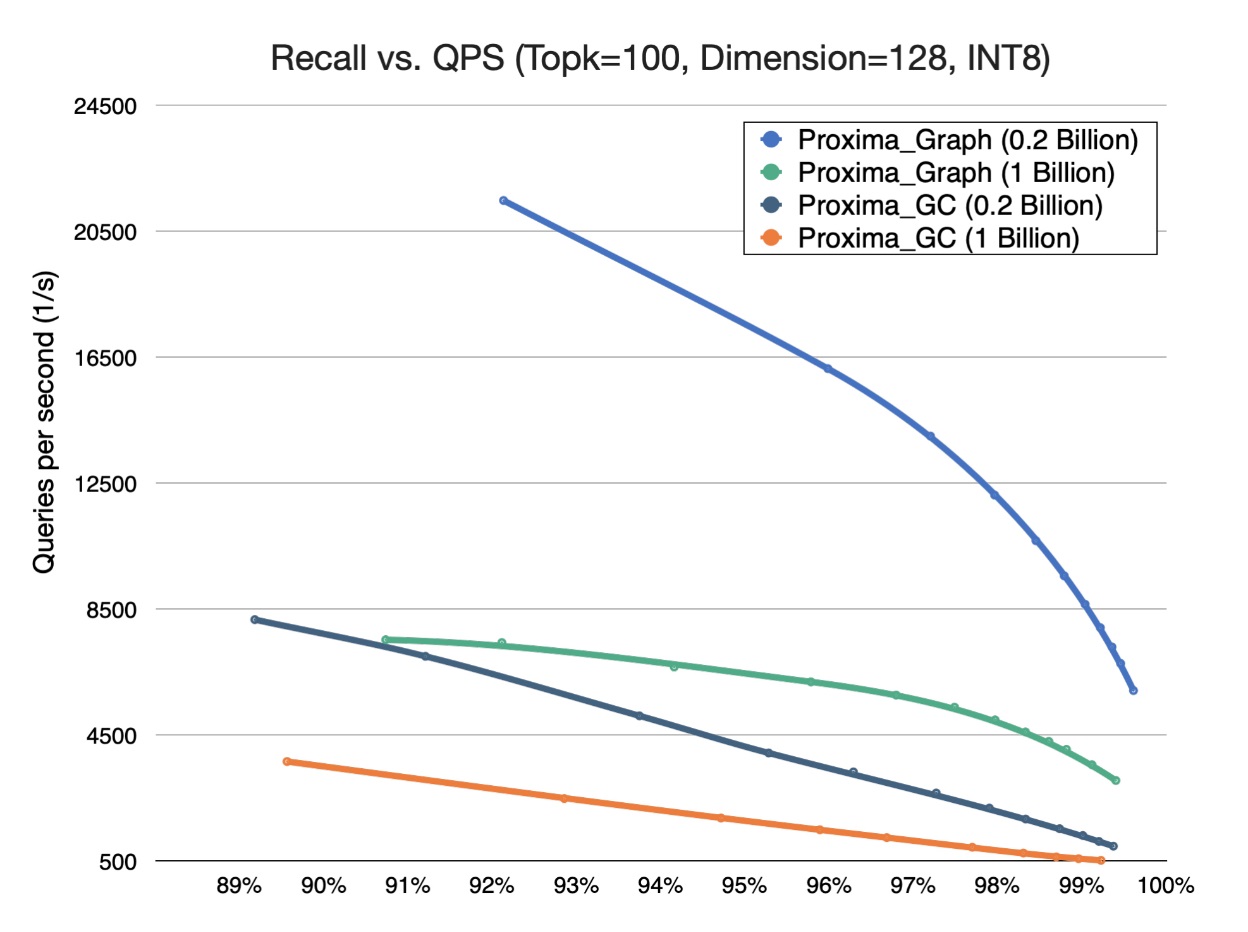

Because a head-to-head test at the billion scale was not feasible, the DAMO Academy team compared Faiss and Proxima on index construction and retrieval over 200 million vectors. The team also compared the single-GPU heterogeneous computing capabilities of Faiss and Proxima on 20 million vectors. At the one-billion scale, Proxima was tested on its own. The results are as follows.

Proxima's retrieval performance is several times that of Faiss, and it achieves higher recall precision, with an even larger advantage in Top-K retrieval. In addition, some of Faiss's algorithm implementations, such as its HNSW implementation, have design defects, and its retrieval performance on large-scale indexes is low.

For 200 million vectors, Faiss takes 45 hours to build the index, or 15 hours with HNSW optimization. Proxima finishes construction within two hours using the same resources, with a smaller index and higher precision (see the retrieval comparison).

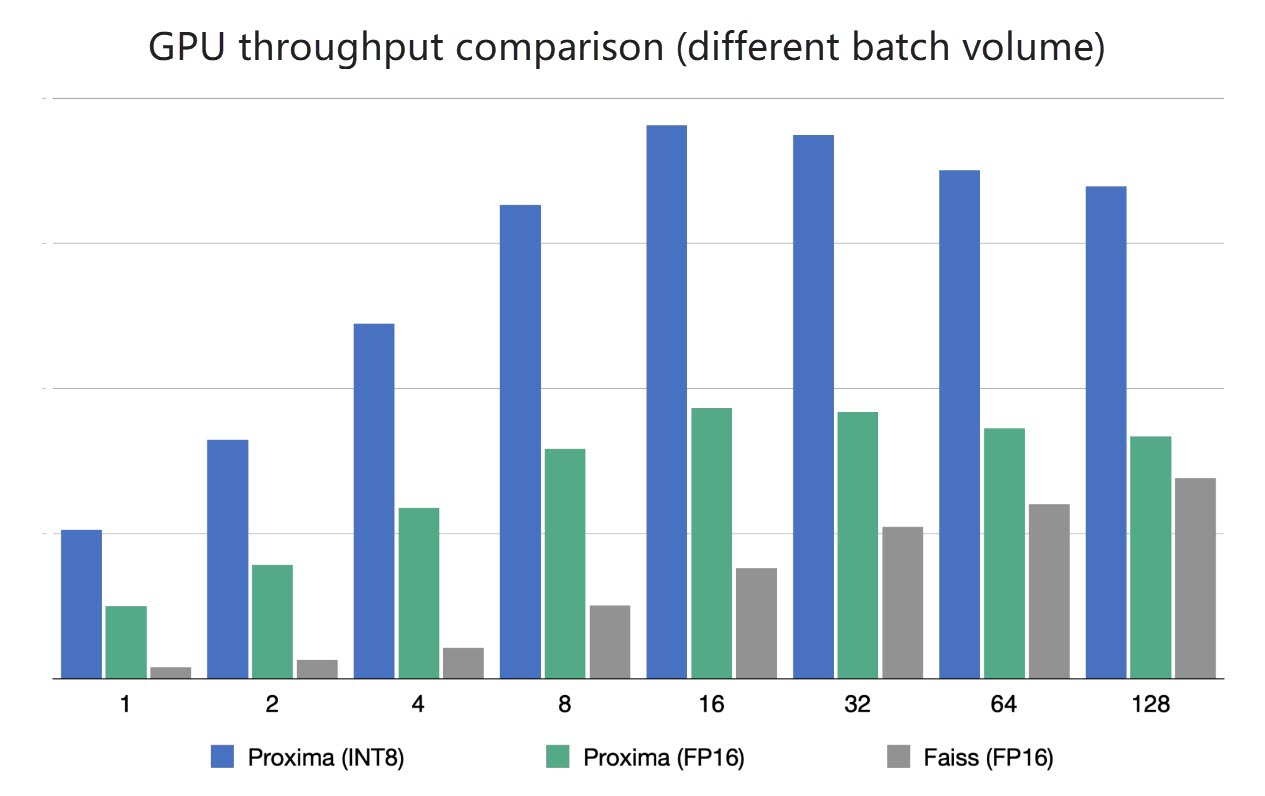

Proxima uses a different GPU computing approach from Faiss. It is optimized for online retrieval scenarios characterized by "small batches, low latency, and high throughput".

Proxima shows significant advantages in small-batch scenarios, delivering low latency and high throughput while making full use of GPU resources. This retrieval solution is now widely used in Alibaba's search and recommendation scenarios.

Proxima supports streaming index construction and half-memory retrieval, which allows it to build billion-scale indexes and deliver high-performance, high-precision retrieval with limited resources. Its high performance and low cost provide solid foundational support for large-scale AI offline training and online retrieval.

With the wide application of AI and the continuous growth of data, vector retrieval, as a mainstream method in deep learning, will develop further thanks to its broad retrieval and multi-modal search capabilities. Vectorization characterizes and combines the entities and features of the physical world and maps them into the digital world. Computers then compute and retrieve over these vectors to uncover latent logic and implicit relationships, providing more intelligent services to society.

In the future, vector retrieval will face not only continuously growing data volumes but also algorithmic problems such as hybrid-space retrieval, sparse-space retrieval, ultra-high dimensionality, and generalized consistency. On the engineering side, it will have to handle increasingly broad and complex scenarios. Building a powerful, systematic solution that spans these scenarios and applications will be key to the future development of vector retrieval.
