This article looks at some of the typical questions that arise when working with databases. In our discussion, we will not stick to analyzing only a single type of database. Instead, we will be focusing on the concepts and principles involved in resolving some basic questions. These questions cover three core functionalities of databases, which are highlighted before each question.

Q: Can traditional relational databases achieve strong consistency between the master database and the slave database without the shared storage mechanism?

A: Unfortunately, no. A database can achieve high consistency between the master and slave databases only by using shared storage. This holds true for Oracle, MySQL, PostgreSQL and OceanBase as well.

Q: How can we achieve strong consistency between the master and slave databases?

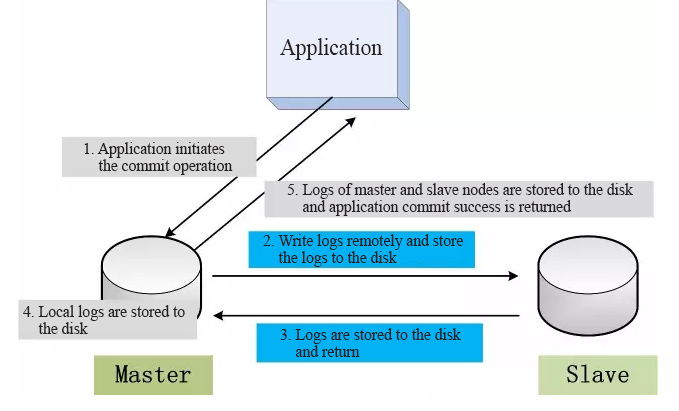

A: WAL (Write-Ahead-Logging), a well-known and important technology in database, is needed for this purpose-. When the system updates the operation write logs (Oracle Redo Log and MySQL Binlog among others) or commits a transaction, one should first ensure the flushing of the logs generated by the transaction to the disk. This would ensure no data loss occurs.

The method for achieving the strong data consistency between the master and slave databases is also simple:

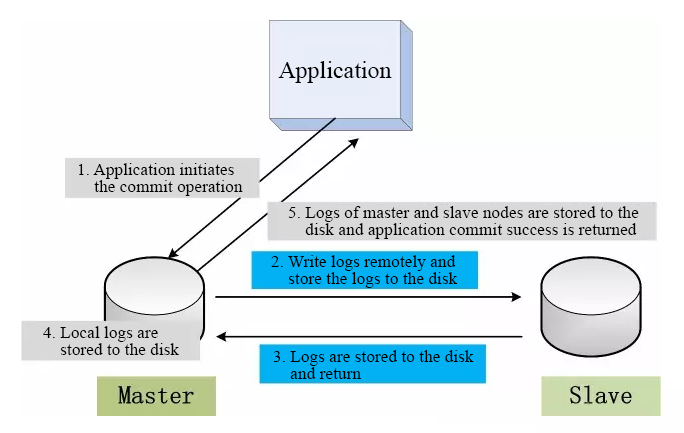

The logic of the entire transaction committing is as shown in the figure below:

As shown in the figure above, the logs generated by the transaction exist on both the master database and the slave database. Once the transaction committing operation returns to the application, the system achieves a stable synchronization.

If the master database crashes at a certain point in time, the slave database continues providing the service with data that is consistent with that in the master database. There is no loss of transaction data and strong consistency between the master and slave databases is achieved. If you have used Oracle, you should be familiar with Data Guard. It has three modes, namely Maximum Performance, Maximum Availability, and Maximum Protection. Among them, the Maximum Protection mode follows a similar idea with the logic diagram above.

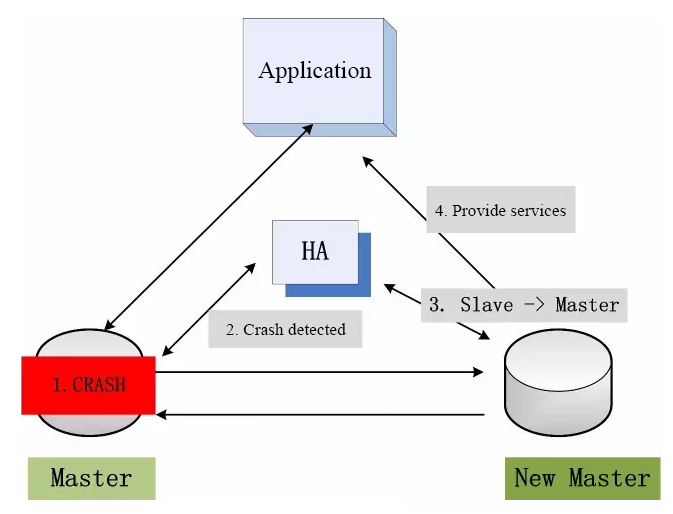

After realizing the strong synchronization of data, we can start to consider the issue of availability. Now we already have the master and slave databases with identical data. The significance of the slave database is to take over requests from applications when the master database fails to ensure continuous service. When the master database crashes, the system promotes the slave database to the master database position to provide external services. The issue that may arise in this case is that of decision-making. Who will take charge of the master database-slave database switch? A manual switch is a possibility but is too inefficient, not to mention the unpredictability of crashes.

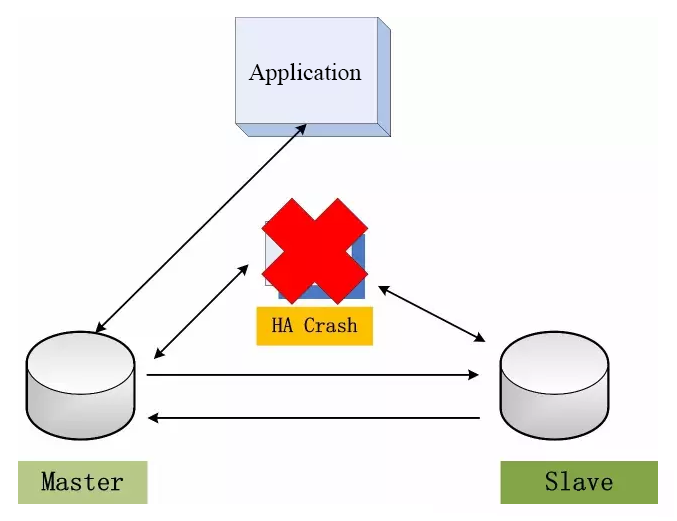

An automated mechanism is ideal to avoid the above-mentioned issues. An HA (High Availability) is a testing tool best suited to eliminate errors. The HA tool is usually deployed on the third server connected to the master and slave databases at the same time. When it detects any connection failures to the master database, it switches the database service provider to the slave database. The processing logic is quite simple, as shown in the figure below:

The master and slave databases implement the strong data synchronization mechanism through logs, thus ensuring data security and preservation.

Q: Does synchronization of log-based data and the HA software for automated master database-slave database switch guarantees the success of handovers?

A: This mechanism can solve more than 90% of problems but also causes some major problems under certain circumstances.

Firstly, a prominent problem may arise. After the master database crashes and the slave database assumes the role of the master database, the database now becomes a single point and this situation continues until such a time that the original master database restarts and resumes service. If the new storage system crashes again during this period, the whole system will be unavailable. Adding replicas and additional slave databases should be able to solve this problem.

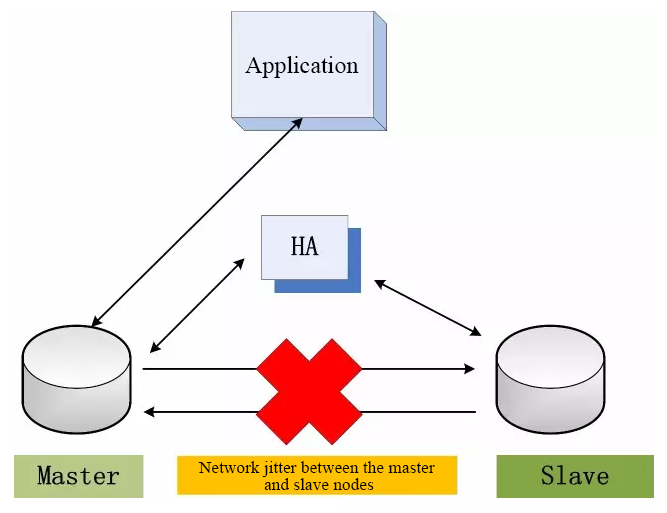

Secondly, in the master database-slave environment, it is easy to handle the failure of the master database. One just needs to switch to the slave database in case the master database crashes. However, cases arise when the master database does not crash, but the network has some problems, as shown in the figure below:

It is possible for the network between the master database and slave nodes to fail due to factors such as network disconnection and jitter. In case of network failures, how would the database handle it? Will the master database continue to provide service? Since the slave database is not synchronizing logs, will there be data loss? What would happen if the master database stops providing services?

The application will be unavailable. In Oracle, if you set the mode to Maximum Availability, the master database will continue to provide service and data inconsistency is permissible. If you set the mode to Maximum Protection, the master database will stop providing service. Therefore, if you set the mode to Maximum Protection in Oracle, we usually suggest you should set two or more slave databases. If any of the slave databases successfully synchronizes the logs, the master database will continue to provide service and system availability.

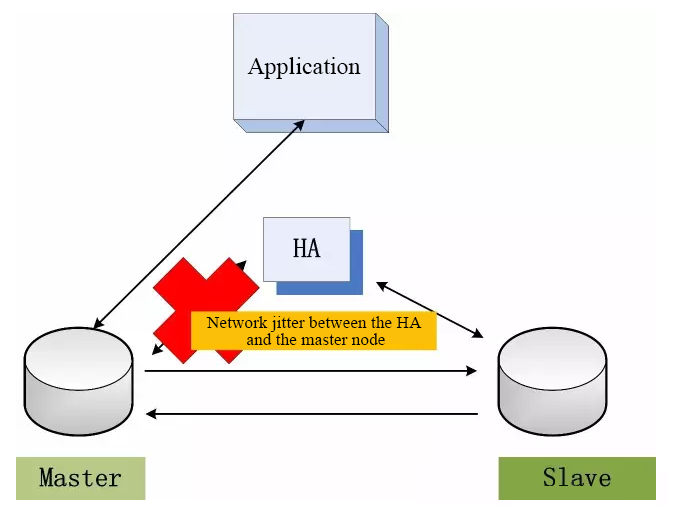

Network problems can happen not only between the master and slave databases but also between the HA and the master database, or between the HA and the slave database. Consider the following situation:

When the network between the HA and the master database fails, the HA faces two potential problems:

The HA software itself may also have problems, as shown in the figure below:

Through deploying the HA, we can ensure that the database system is continuously available in various scenarios. However, how can we ensure the continuous availability of the HA? Configuring a master database-slave mechanism for the HA and deploying another HA layer for the original HA is a possible solution but an inefficient one.

In fact, the problems mentioned above are about the consistency in a typical distributed environment (consensus). The Lamport's Paxos protocol which has been a hit in recent years and the Raft protocol recently released by the Stanford University, both target such problems.

Q: How can we ensure that the database is continuously available in various situations? Can we introduce distributed consistency protocols such as Paxos and Raft to solve the various problems earlier mentioned?

A: The answer is yes. We can introduce protocols similar to Paxos and Raft to solve the various challenges to ensure the continuous availability of the entire database system. These protocols still use a strong consistency system composed of two databases as the master and slave databases, and HA for monitoring and switching between the master and slave databases.

Q: How can we ensure the availability of the HA software itself? If the HA software cannot access the master database, how can we identify whether it is because the master database has crashed or because the HA software's network connection to the master database has failed?

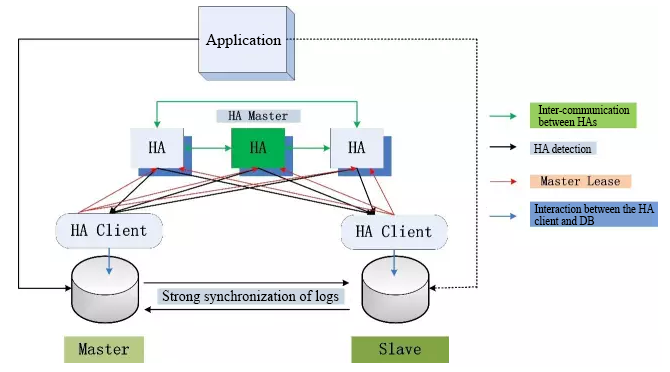

A: The new system for solving these problems is as shown below:

Compared with the previous system, we can see that this new system is much more complicated. The database system still adopts one-master-and-one-slave mechanism with strong data synchronization. Apart from this, there are also many other changes, including:

Q: Can these changes solve the two problems above?

First, let's see how we can ensure the availability of the HA software itself.

The increase from one HA host to three HA hosts aims to solve this problem. The HA service is stateless in itself. The three HA hosts can elect their master automatically through the Paxos/Raft protocol. In all, the deployment of three HA hosts and the introduction of Paxos/Raft protocol can ensure the high availability of the HA service. It thus ensures the HA software's availability.

Having solved the first problem, let's move on to the second problem: identifying whether the current problem is a result of a network failure or the master database crashing. How can we ensure that there is always one master database in the database system providing external services in any circumstances?

Solving the second problem can occur entirely through deployment of HA clients on the database server and introduction of the lease mechanism from the HA client to the HA master. The so-called lease mechanism from the HA client to the HA master refers to the database instance in the figure, which does not always hold the permission of being the master database (or the slave database). The current master database acts as the master database for a limited time range, such as 10 seconds. The HA client must initiate a new lease to the HA master once every 10 seconds to renew the master database state. As long as it receives the confirmation for continuing the lease from the HA master once every 10 seconds, the system will not downgrade the current master database to a slave database.

We can further divide the second question into three scenarios:

Scenario 1: The master database crashes, but the location server of the master database runs normally, and the HA client runs normally.

The master database crashes and the HA client runs normally. In this scenario, the HA client will send a request for giving up the master database lease to the HA master. The HA master receives the request and directly promotes the slave database to the master database. The original master database serves as the slave database after itreboots.

Scenario 2: The host location of the master database crashes. (The master database and the HA client crash at the same time).

Because the HA client and the master database crash at the same time, the communication from the HA master to the HA client fails. The HA master cannot promote the slave database to the master database instantly because it cannot distinguish the cause, whether it is the case in Scenario 2 or Scenario 3, discussed next (network issue). Therefore, the HA master will wait for the lease term to expire (such as 12 seconds). If no lease renewal request is received before the lease term expires, the HA master will promote the slave database to the master database to provide external services. The original master database serves as a slave database after its host restarts.

Scenario 3: The master database is normal, but the network connection between the master database and the HA master fails.

The HA master cannot distinguish Scenario 2 from Scenario 3. Therefore, the HA master will process Scenario 3 in the same logic as it does for Scenario 2. It waits until the lease term expires. If it does not receive any renewal request, it promotes the original slave database to the master database. But before the promotion, the HA client of the original master database needs to perform some additional functions. The HA client of the original master database has not received a response for its lease renewal request to the HA master because of the network issue, and the lease term has expired. It will automatically downgrade the local master database to a slave database. In this way, when the HA master promotes the original slave database to the master database, the original master database has also been downgraded to the slave database by the HA client. The process eliminates the dual master databases situation and no dual writes will occur for the application.

One can also solve the second problem in this architecture through the analysis of the above three scenarios. In the process of solving the second problem, the system should wait for the set lease term time at most. If the lease is set to 10 seconds, the service downtime in case of various problems will be 10 seconds at most, basically ensuring continuous availability. The service downtime is completely dependent on the lease term settings.

By now we can say that achieving a continuously available (ensured availability of partitions) database system with strong data consistency of master-slave databases is not impossible. It is also feasible to renovate the existing database system. But considering the actual implementations, the renovation is a highly complicated process. We implement the master-slave switch of the database within the database system. In such a scenario, the system promotes the HA master to the master database and downgrades the HA client to the slave database. The original master database resumes its position as the slave database following the successful recovery of the crashed database subject to the implementation of the lease mechanism of the master database through the HA client, and achieve the availability of HA hosts. All these are very difficult to implement on the existing database.

Ideally, the strong synchronization of logs ensures data consistency for any data. The introduction of Paxos/Raft alongside other distributed consistency protocols based on strong data synchronization guarantees the system's continuous availability, also termed partition availability. Next, let us take a look at a question that many readers would be interested in.

Q: How to ensure a high performance while ensuring the strong synchronization?

A: Back to the first figure in this article:

When an application initiates a request to commit a transaction, it must synchronize the transaction logs to the slave database to ensure the robust synchronization of data and store the logs to the disk. Compared with the asynchronous writings to the slave, the synchronous mode has one more network interaction from the master database to the slave database and one more sync operation to the disk on the slave database. In the application layer, one commit operation will take a longer time.

How much longer? It depends on the network latency from the master database to the slave database and the disk performance of the slave database.

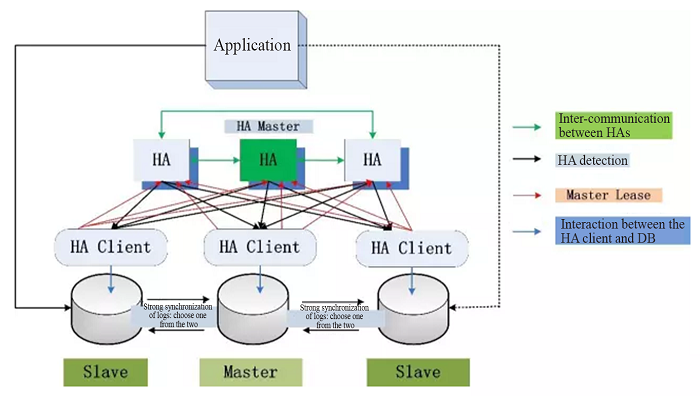

The first simple idea to improve the performance is to deploy multiple slave databases. As long as one slave database has completed the log synchronization and returns the response, and the local master database logs have been stored to the disk, it is possible to return commit operation successfully. The deployment of multiple slave databases is very helpful for eliminating instant network jitters. In the official suggestion of Oracle, enabling the Maximum Protection mode necessitates engagement of multiple slave databases to minimize the impact of network jitters. In cases where one chooses to deploy two slave databases, the new deployment architecture diagram is as shown below:

There is an additional slave database, and three copies of data are generated. As long as one of the two slave databases completes the log synchronization, the transaction can be committed, significantly reducing the impact of network jitters on one database. The added copy can also solve the data security issue after the master database crashes. Even if the master database crashes, two copies can still provide services without creating a single point of failure.

However, the introduction of a third copy of the data creates another problem: when the master database crashes, which slave database should assume the role of the new master database? Of course, the HA master still has the right to execute master election. But how will the HA master determine the selection?

We can use the following criteria to judge and solve the problem easily:

After the election of the new master database, the first task is to synchronize the logs of the new master database with the remaining slave database. Once the two have consistent logs, the master database starts to provide services for the application. At this time, the tri-replica problem degenerates to a dual-replica problem. The advantage of tri-replica against network jitters disappears. But thanks to the strong data synchronization of the two replicas, the system can still guarantee data reliability and consistency.

Of course, there are more optimization options available apart from this simple tri-replica optimization. The idea of optimization is generally the synchronous-to-asynchronous processing, such as the log writes for transaction committing, adoption of a finer lock and adoption of lockless programming for critical paths.

The secure synchronization of multiple replicas will not necessarily cause system performance loss if well handled. So, what does "well-handled" look like? Here is a possible solution.

After such an elaborate discussion, here are a few general questions for further information:

Q: Can various mainstream relational database systems implement high consistency of master and slave databases, and can we ensure data consistency without dependency on storage?

A: Yes. Oracle, MySQL 5.7, Alibaba Cloud RDS, Netease RDS all provide similar features.

Q: At present, can the various relational database systems provide continuous availability and high performance of the system based on strong data consistency between the master and slave databases?

A: Yes, it can. The presence of 2 databases functioning at the same time results in the data being captured by the master database as well as the slave database. This will result in a continuous availability of the system database.

In this article, we discussed on the main concepts of effective database synchronization. Among the focus areas have been the means with which to achieve consistency between master and slave databases and how to ensure continuous database availability under various strenuous conditions. We've also seen that strong synchronization of logs ensures data consistency, an aspect crucial to performance optimization.

2,593 posts | 793 followers

FollowAlibaba Clouder - September 3, 2019

Apache Flink Community - August 14, 2025

Rupal_Click2Cloud - December 12, 2022

Alibaba Clouder - August 14, 2020

ApsaraDB - November 12, 2024

Apache Flink Community - February 20, 2025

2,593 posts | 793 followers

Follow Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn More Oracle Database Migration Solution

Oracle Database Migration Solution

Migrate your legacy Oracle databases to Alibaba Cloud to save on long-term costs and take advantage of improved scalability, reliability, robust security, high performance, and cloud-native features.

Learn More Database Migration Solution

Database Migration Solution

Migrating to fully managed cloud databases brings a host of benefits including scalability, reliability, and cost efficiency.

Learn More DBStack

DBStack

DBStack is an all-in-one database management platform provided by Alibaba Cloud.

Learn MoreMore Posts by Alibaba Clouder