
By Wang Feng (Mowen), at Alibaba

Apache Flink is a widely recognized, next-generation computing engine for big data. Its pipelined runtime can run both batch and stream processing programs. Flink has become one of the most active projects in the Apache Software Foundation and the GitHub community. At Flink Forward Asia 2019, Wang Feng, a senior technical expert and head of real-time computing at Alibaba, summarized Flink's development and evolution in China in 2019, as well as Alibaba's contributions to the Flink community and the future development of Flink.

This article is based on the paper he presented at Flink Forward Asia 2019.

You can download Flink from GitHub.

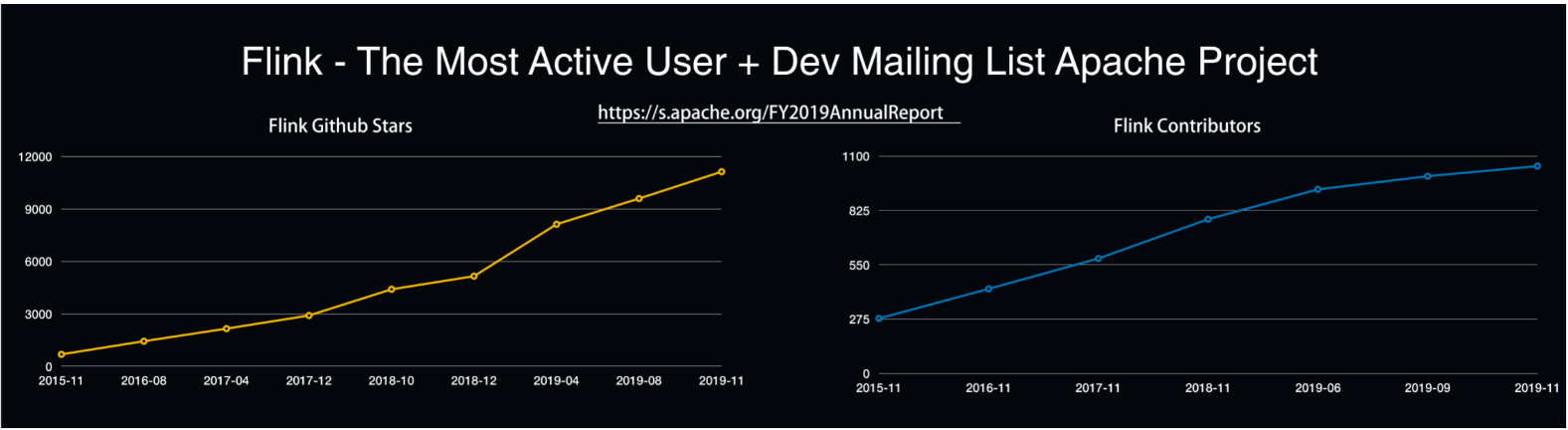

Let's take a brief look at the development of the Flink community. Since Flink was contributed to the open-source community in 2014, it has developed rapidly. Currently, Flink is one of the most active projects in the Apache Software Foundation. On GitHub, Flink is among the top three Apache projects. Notably, Flink doubled its star count on GitHub in 2019 alone, and its number of contributors also continued to grow. These figures show that more and more enterprises and developers are joining the Flink community and contributing to the development of Flink. Among them, Chinese developers have made a great number of contributions.

Let's give Flink a star on GitHub.

As the Flink community has developed rapidly, its technology has gradually matured. In 2019, many local Internet companies in China began to use Apache Flink as a real-time computing solution. At the same time, several big Internet companies both in China and abroad, including Uber, Netflix, Microsoft, and Amazon, started using Apache Flink, too.

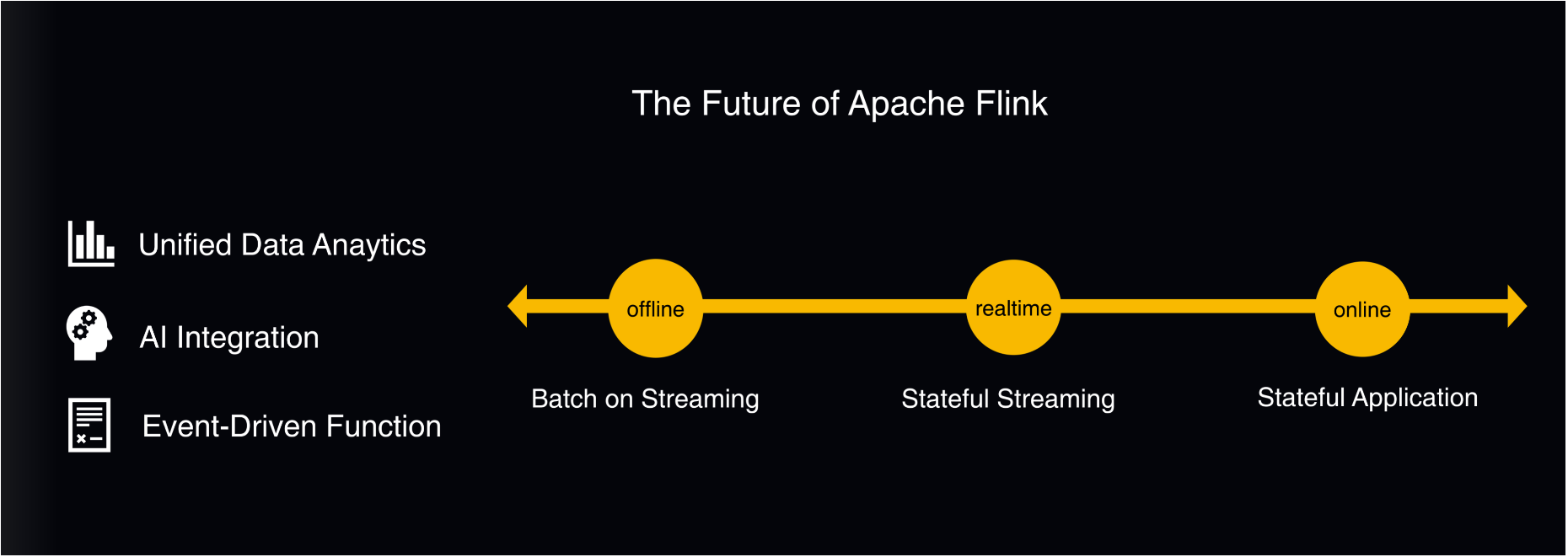

Today, Flink is mainly used for data analysis, especially real-time data analysis. In essence, Flink is a stream processing engine designed for real-time data analysis, real-time risk control, and real-time ETL processing. But, in the future, the community hopes that Flink will continue to evolve into a full-fledged and unified data engine.

In terms of offline data processing, at Alibaba we hope that Flink will build on its stream processing capabilities to further unify batch and stream processing and provide a unified solution for data processing and analysis. We also see that Flink is evolving towards online data analysis and processing. In this area, Flink leverages its core advantages, its Event-Driven Function capability, and its built-in state management to implement online function computing.

In recent years, AI scenarios have been developing rapidly, and the relevant computing scales are growing. Therefore, the Flink community, especially the community in China, also hopes to actively embrace and support AI scenarios through Flink machine learning. The community is even combining Flink with AI-native deep learning engines, in pairings such as Flink + TensorFlow and Flink + PyTorch, to provide an end-to-end solution for big data + AI.

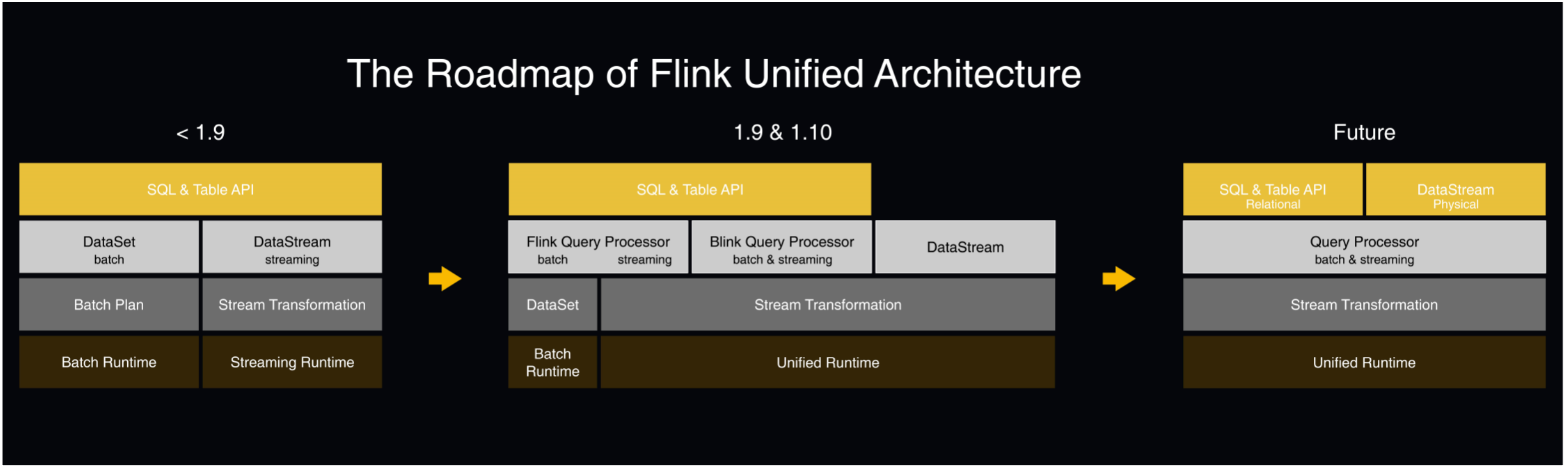

The following figure shows the development roadmap of Apache Flink's unified batch and stream processing operations. Before version 1.9, Flink's batch and stream processing belonged to two different code paths: DataSet and DataStream were two independent APIs, and two different runtime environments existed. A high level of unified batch and stream processing had not yet been achieved. So, in Flink version 1.9 released in 2019 and the upcoming version 1.10, the community worked hard to unify batch and stream processing in the Flink architecture. After one year of hard work, Flink 1.10 finally achieved a high level of unified batch and stream processing in the Flink Task runtime environment, execution engine layer, and SQL and Table layers. However, the Flink architecture has not yet fully achieved the unification of batch and stream processing. In the future, the community hopes to further unify batch and stream processing at the level of the DataSet and DataStream APIs.

SQL has a well-deserved reputation as the best language for big data processing. It is also the most widely used language. Some unified SQL functions have been released in Flink 1.9, and more new functions will be released in Flink 1.10. For example, the new version will support unified batch and stream query processors and the complete Data Definition Language (DDL) function. In addition, Flink has also passed the test and validation sets of TPC-H and TPC-DS, and achieved production-level availability. Flink 1.10 also provides enhanced Python support. Currently, Flink SQL allows the convenient use of user-defined functions (UDFs) in Python. In addition, Flink has actively embraced the Hive ecosystem and made Flink SQL compatible with Hive. This allows users to try out new Flink technologies at a very low cost.

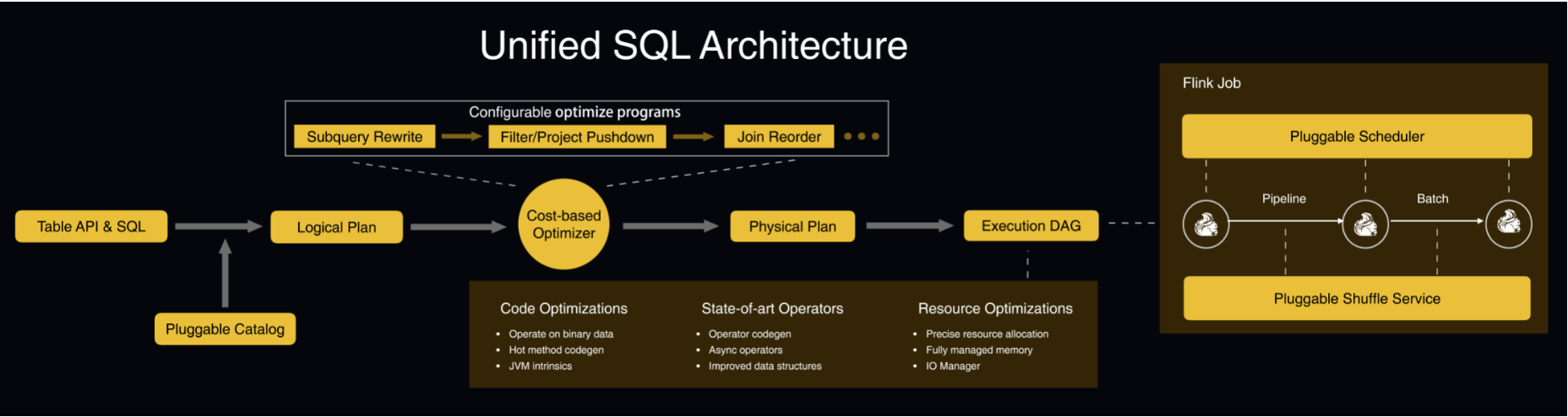

The following section describes how the Flink Unified SQL architecture unifies batch and stream processing operations at the technical level. For a user-entered SQL statement, batch and stream processing may read data in the same way, but the result may be emitted either once or continuously. In Flink, you can use a unified processor to parse, compile, and optimize user-entered SQL statements and finally submit a Flink job to a Flink cluster to be run.
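The difference between a one-time result and a continuous result can be illustrated with a small toy sketch. This is plain Python, not Flink code: the record shape and the names `sum_by_key`, `run_batch`, and `run_streaming` are hypothetical and exist only to show one query definition serving both modes.

```python
from typing import Dict, Iterable, Iterator, Tuple

# Hypothetical record shape for illustration: (key, amount).
Event = Tuple[str, int]


def sum_by_key(events: Iterable[Event]) -> Iterator[Tuple[str, int]]:
    """One query definition, roughly SELECT key, SUM(amount) GROUP BY key.

    Yields an updated (key, running_total) row after every input event.
    """
    totals: Dict[str, int] = {}
    for key, amount in events:
        totals[key] = totals.get(key, 0) + amount
        yield key, totals[key]


def run_batch(events: Iterable[Event]) -> Dict[str, int]:
    """Bounded input: consume everything, then return the final result once."""
    final: Dict[str, int] = {}
    for key, total in sum_by_key(events):
        final[key] = total  # later updates overwrite earlier ones
    return final


def run_streaming(events: Iterable[Event]) -> Iterator[Tuple[str, int]]:
    """Unbounded input: forward every update as soon as it is produced."""
    return sum_by_key(events)


orders = [("a", 10), ("b", 5), ("a", 7)]
batch_result = run_batch(orders)                    # one-time output
stream_updates = list(run_streaming(iter(orders)))  # continuous output
```

Both runners share the same `sum_by_key` logic. Only the way results are delivered differs, which is the property the unified query processor is described as providing.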

The new Flink versions provide many technical optimizations for query processing, such as the optimization of execution plans and policies, execution operators, binary data structures, automatic code generation, and the Java virtual machine (JVM). These optimizations make SQL-compiled jobs more efficient. In terms of Runtime, the Flink execution engine has also been restructured. The core underlying functions have been abstracted into pluggable scheduling policies and the Shuffle Service. This makes Runtime very flexible. You can freely adapt to the batch and streaming job modes, and even convert between streaming operators and batch operators in the same job.

In order to allow everyone to truly use Flink SQL, we need to consider more than excellent kernel technology and comprehensive functions. We also need to look at migration costs. Ideally, you should be able to enjoy the new technological achievements of Flink SQL without modifying your existing systems, data, or metadata. Therefore, one of Flink SQL's major achievements in 2019 was to better connect with the Hive ecosystem.

In Flink 1.10, SQL with unified batch and stream processing can be seamlessly connected to the Hive metastore so that you can directly share metadata with Hive. Flink Connector can directly read partition table data in Hive without causing any negative effects. Flink is also compatible with Hive UDFs and can run directly in a Hive cluster without the need to define additional clusters. Due to these changes, users can easily switch between Hive SQL and Flink SQL at a very low cost. Another inherent advantage of Flink SQL is its support for streaming data. That is, the same set of business logic can process real-time data from Kafka and other message queues while processing Hive data.

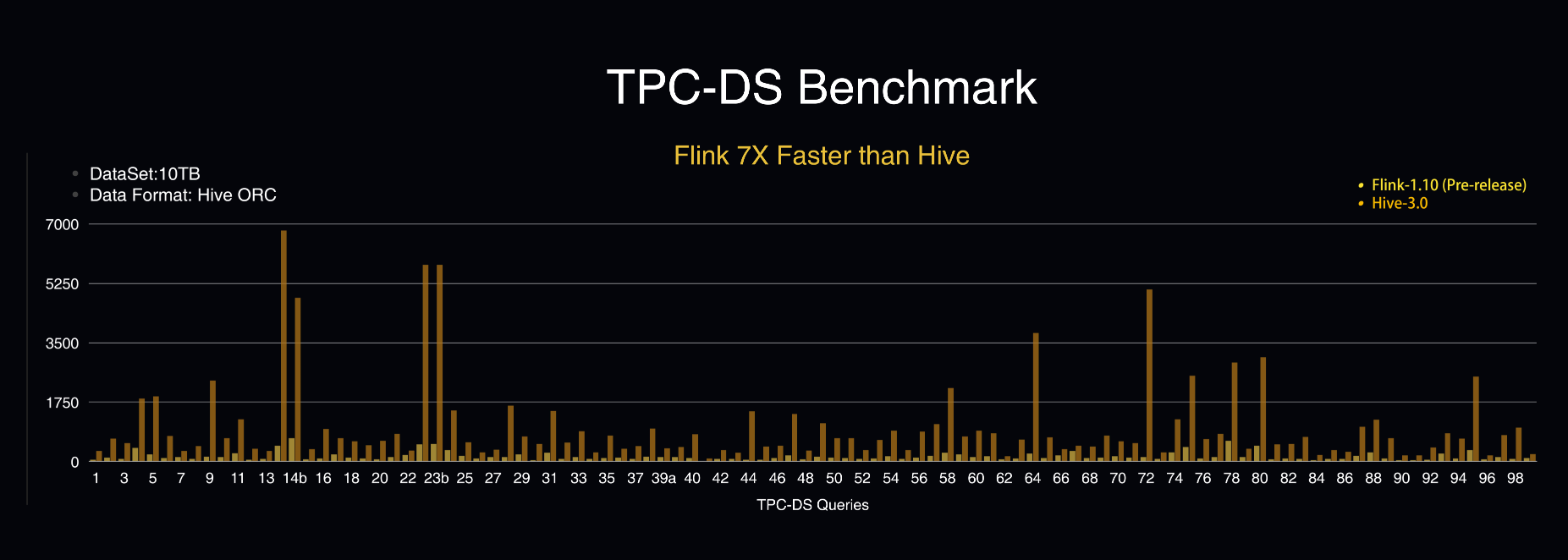

The following figure shows Flink's performance in the TPC-DS benchmark test. Here, the dataset size is 10 TB and the data format is Hive ORC. Hive 3.0 and the Flink 1.10 pre-release were tested against each other.

The results show that Flink not only ran all 99 TPC-DS queries, but also performed seven times better than Hive. The benchmark test shows us that Flink SQL is among the industry leaders in aspects such as functional completeness and performance, and that it has achieved production-level availability.

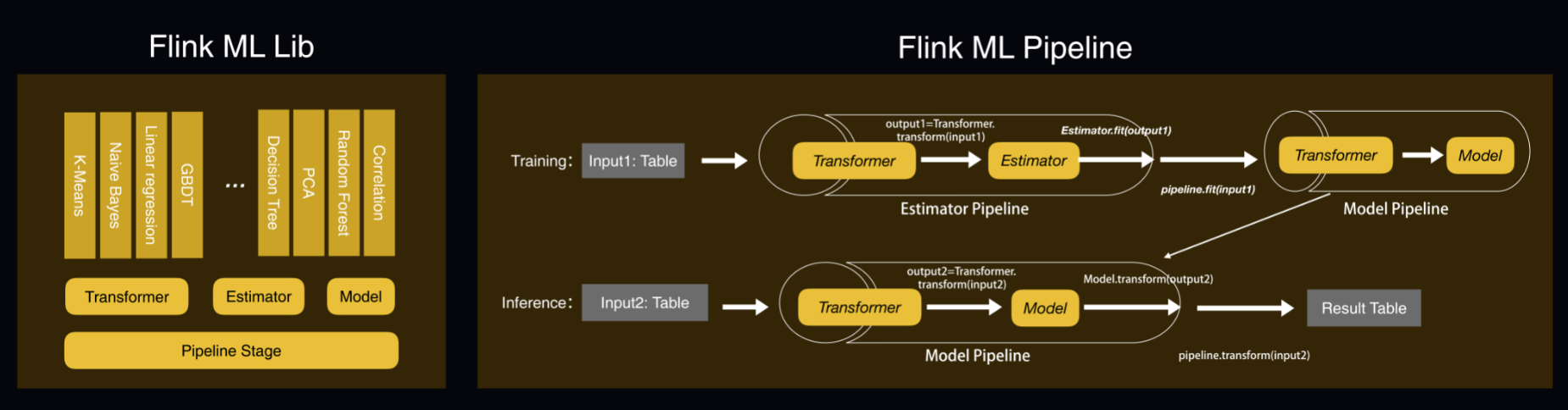

In 2019, AI was the most popular topic in technology circles. In addition to data processing, Flink also hopes to better embrace AI scenarios. In 2019, Flink first laid the foundation for machine learning in the AI field. Its first step was to implement the basic APIs of the Flink Machine Learning library (Flink ML Lib). This was called ML Pipeline.

The core of ML Pipeline is the machine learning process, and its main concepts include Transformer, Estimator, and Model. Developers of Flink machine learning algorithms can use these APIs to develop different Transformers, Estimators, and Models, allowing them to implement a variety of typical machine learning algorithms. This is very convenient. ML Pipeline-based APIs can freely combine components to build machine learning training and prediction processes.
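To make the relationship between these three concepts concrete, here is a minimal toy sketch in plain Python. It does not use the actual Flink ML Pipeline interfaces: the class names mirror the concepts only, and `Scale`, `MeanCenter`, `Pipeline`, and `PipelineModel` are hypothetical examples written for this illustration.

```python
from typing import List, Sequence


class Transformer:
    """Maps a dataset to a new dataset."""

    def transform(self, data: List[float]) -> List[float]:
        raise NotImplementedError


class Model(Transformer):
    """A Transformer that was produced by fitting an Estimator."""


class Estimator:
    """Learns from a dataset and returns a Model."""

    def fit(self, data: List[float]) -> Model:
        raise NotImplementedError


class Scale(Transformer):
    """Stateless transformer: multiplies every value by a fixed factor."""

    def __init__(self, factor: float) -> None:
        self.factor = factor

    def transform(self, data: List[float]) -> List[float]:
        return [x * self.factor for x in data]


class MeanCenterModel(Model):
    """Subtracts the mean that was learned during training."""

    def __init__(self, mean: float) -> None:
        self.mean = mean

    def transform(self, data: List[float]) -> List[float]:
        return [x - self.mean for x in data]


class MeanCenter(Estimator):
    """Learns the mean of the training data."""

    def fit(self, data: List[float]) -> Model:
        return MeanCenterModel(sum(data) / len(data))


class PipelineModel(Model):
    """Applies the fitted stages in order."""

    def __init__(self, stages: Sequence[Transformer]) -> None:
        self.stages = list(stages)

    def transform(self, data: List[float]) -> List[float]:
        for stage in self.stages:
            data = stage.transform(data)
        return data


class Pipeline(Estimator):
    """Chains Transformers and Estimators into one trainable unit."""

    def __init__(self, stages: Sequence[object]) -> None:
        self.stages = list(stages)

    def fit(self, data: List[float]) -> Model:
        fitted: List[Transformer] = []
        for stage in self.stages:
            if isinstance(stage, Estimator):
                stage = stage.fit(data)  # an Estimator becomes a Model
            data = stage.transform(data)  # feed the output to the next stage
            fitted.append(stage)
        return PipelineModel(fitted)


pipeline = Pipeline([Scale(2.0), MeanCenter()])
model = pipeline.fit([1.0, 2.0, 3.0])  # training: the learned mean is 4.0
predictions = model.transform([4.0])   # prediction reuses the fitted stages
```

Training turns each Estimator into a Model, so the fitted pipeline consists only of Transformers and can be applied to new data unchanged.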

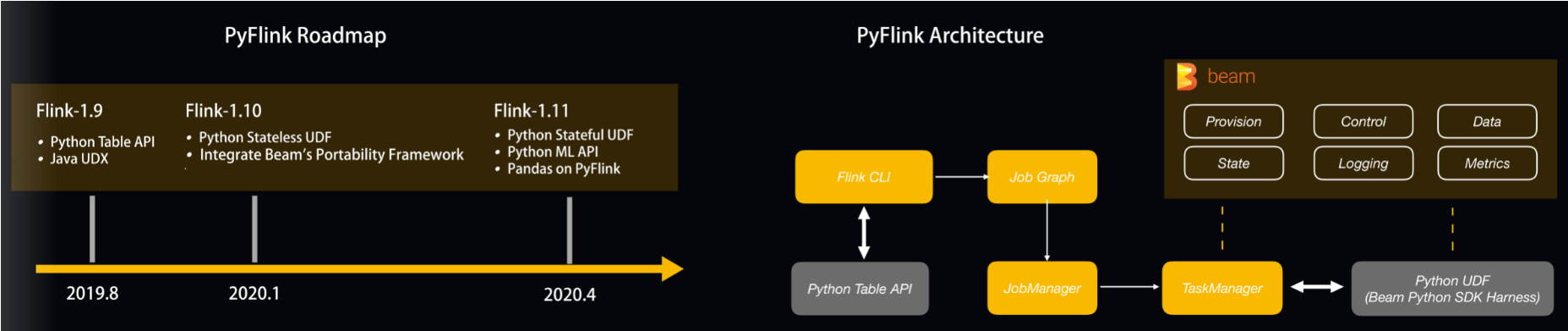

AI developers prefer Python to SQL. Therefore, the support for Python is particularly important for Flink. In 2019, the Flink community invested a lot of resources to improve Flink's Python ecosystem. These efforts gave birth to the PyFlink project. Flink 1.9 added Python support for the Table API. However, this is not enough. Flink 1.10 will also provide support for Python UDFs. Two technical solutions could achieve this goal. The first was to implement communication between Java and Python from scratch, while the second was to directly use a mature framework. Fortunately, the Beam community provides strong support for Python. This led the Flink community to cooperate with the Beam community. Flink uses the Python resources of Beam, including SDKs, frameworks, and data communication formats. In the future, Flink will further improve its support for Python APIs and UDFs, increase support for Python in ML Pipeline, and introduce more mature Python libraries.

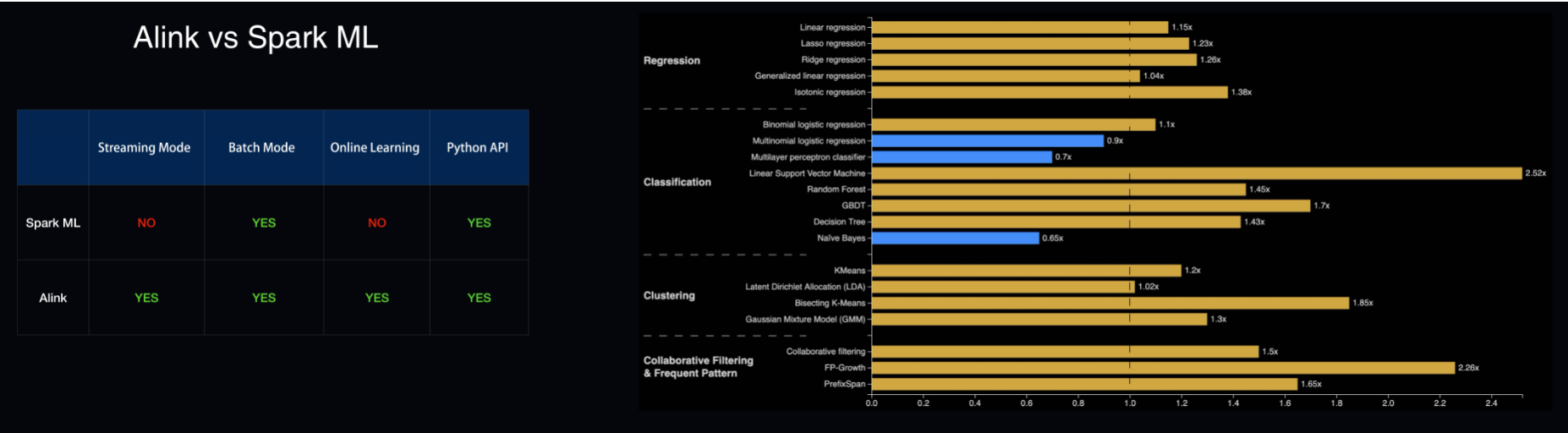

As we all know, Alibaba launched Blink, its internal Flink version, in 2018. Alink is a Flink-based machine learning algorithm library developed by the PAI team of Alibaba Cloud. Alink is a distributed machine learning algorithm library with unified batch and stream processing. It not only makes full use of Flink's unified batch and stream computing capabilities, but also provides some advantages in machine learning infrastructure. Alink has been integrated with Alibaba business scenarios. Currently, we are contributing hundreds of machine learning algorithms in Alink to the Flink community. We hope that they will become a new-generation FlinkML. To provide everyone with the technical benefits of Alink, Alibaba decided to open source the Alink project.

In contrast to mainstream machine learning algorithm libraries, Alink supports both batch training and online machine learning scenarios. In offline machine learning scenarios, Alink is comparable to the mainstream Spark ML. The two libraries cover basically the same set of algorithms and provide similar performance in offline training scenarios. However, the advantage of Alink is that some of its algorithms can use stream computing to better implement online machine learning.

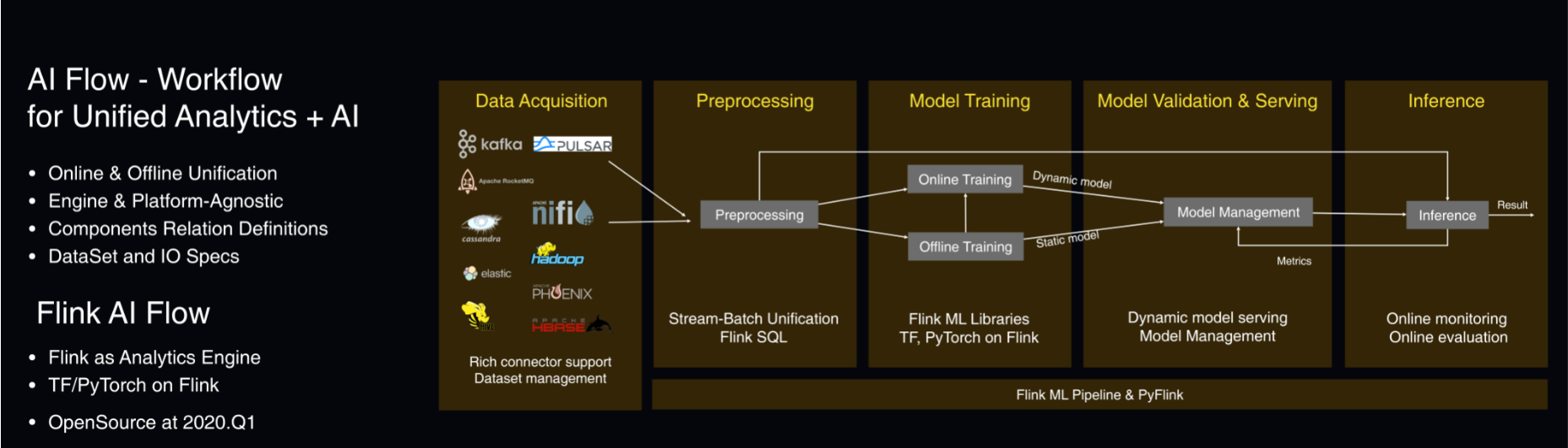

AI Flow, a new AI project, also deserves our attention. AI Flow is a processing workflow platform for big data and AI. By defining metadata formats and the relationships between different data in AI Flow, you can easily build a set of big data and AI processing workflows. These workflows are not bound to an engine or platform. This means that you can use Flink's unified batch and stream processing to build your own big data and AI solutions. Currently, we are still getting the AI Flow project ready. We expect to open source it by following the Alink model in the first quarter of 2020.

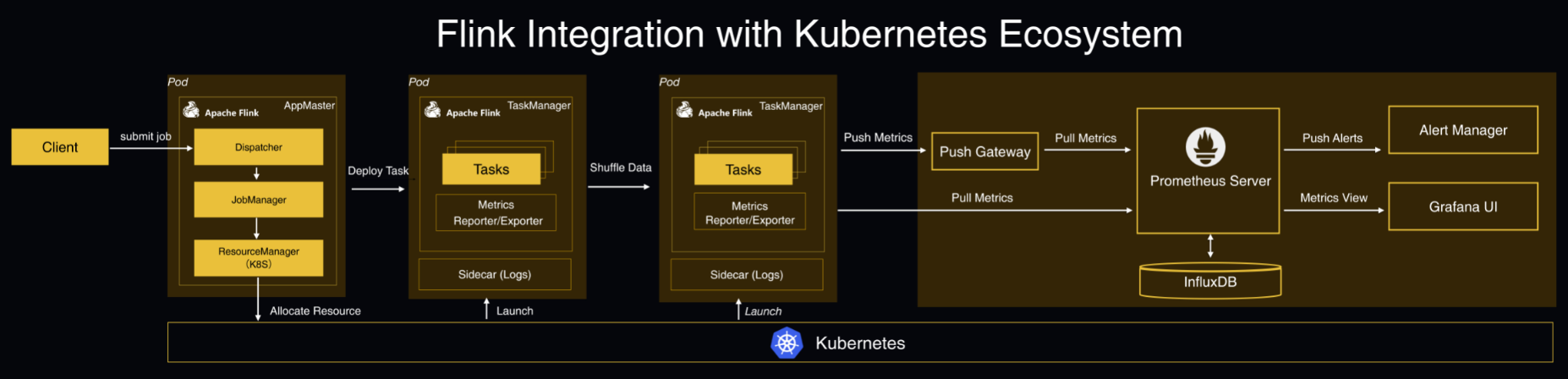

Flink 1.10 will provide a function to integrate Flink with the Kubernetes ecosystem, enabling Flink to run natively on the Kubernetes management platform. Placing Flink on Kubernetes provides the following advantages:

Blink was officially open sourced in March 2019. At that time, Alibaba wanted to contribute Blink's capabilities back to Flink to help build the Flink community. Flink has basically completed this process through its 1.9 version and the upcoming 1.10 version. Over the past 10 months, Alibaba has contributed more than 1 million lines of code to the Flink community, returning much of the architectural optimization work done in Blink to the Flink community. Our contributions cover many aspects such as Runtime, SQL, PyFlink, and ML.

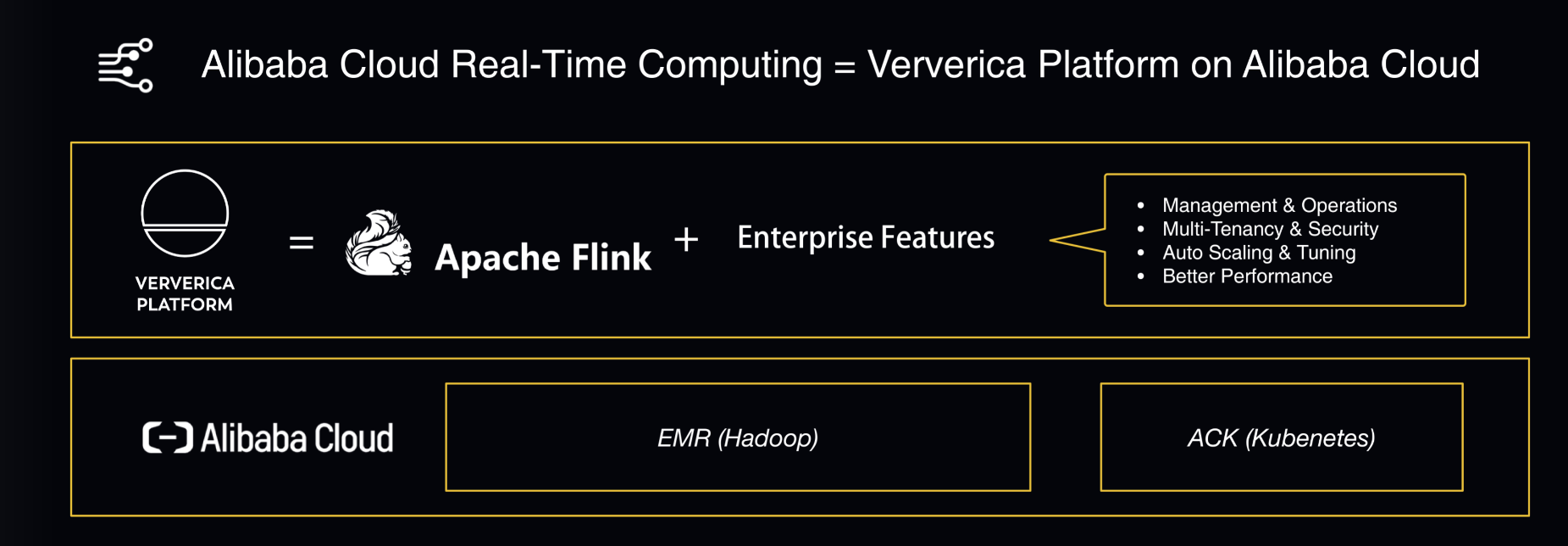

After gradually contributing the achievements we made in Blink to the Flink community, Alibaba decided to gradually merge the two kernels into one in 2020. We will merge the Blink kernel into the Flink kernel to fully support the development of the open-source community. In the future, Alibaba Cloud products and internal services will be implemented based on the open-source Flink kernel. In addition, the Alibaba technical team and the Flink founding team worked together to build the Flink Enterprise Edition named Ververica Platform. This new Enterprise Edition will support Alibaba's internal and cloud businesses. Alibaba will also invest more in the development of open-source Flink and the construction of the Flink community. We hope to work with colleagues in the industry to encourage the development of the Chinese Flink community.
