By Huolang, from Alibaba Cloud Storage Team

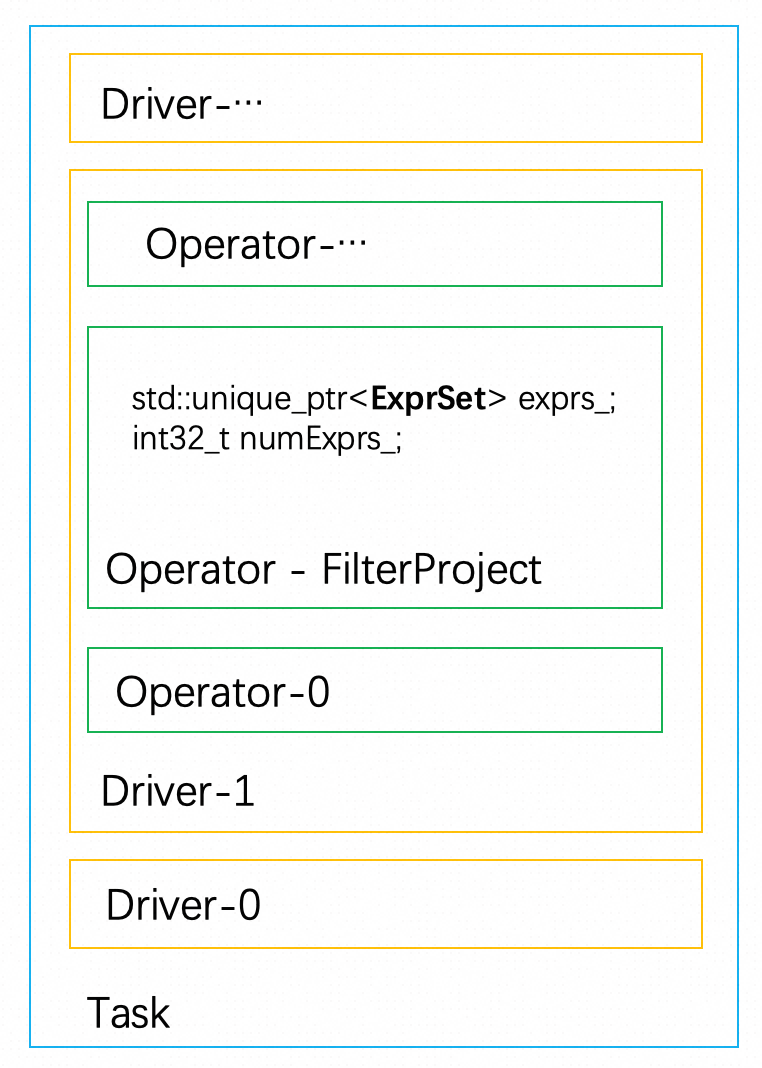

Velox is a unified computing engine developed by Meta, used primarily with Presto and Spark. It is a vectorized engine implemented in C++. Its execution model is built from three nested concepts, Task, Driver, and Operator: a Task contains Drivers, and each Driver runs a pipeline of Operators. A Driver corresponds to a running thread, and its Operators execute in sequence following the Volcano (pull) model.

Velox converts a plan into a tree of PlanNodes and then converts the PlanNodes into Operators. The Operator base class mainly defines interfaces such as addInput, isBlocked, and getOutput, which handle data processing and data flow.

Take the FilterProject operator as an example: its member std::unique_ptr<ExprSet> exprs_ performs the filtering and projection computations. Since ExprSet is the core component of FilterProject, this article focuses on how ExprSet performs computations.

ExprSet is an encapsulation of Expr, which represents executable expressions in Velox.

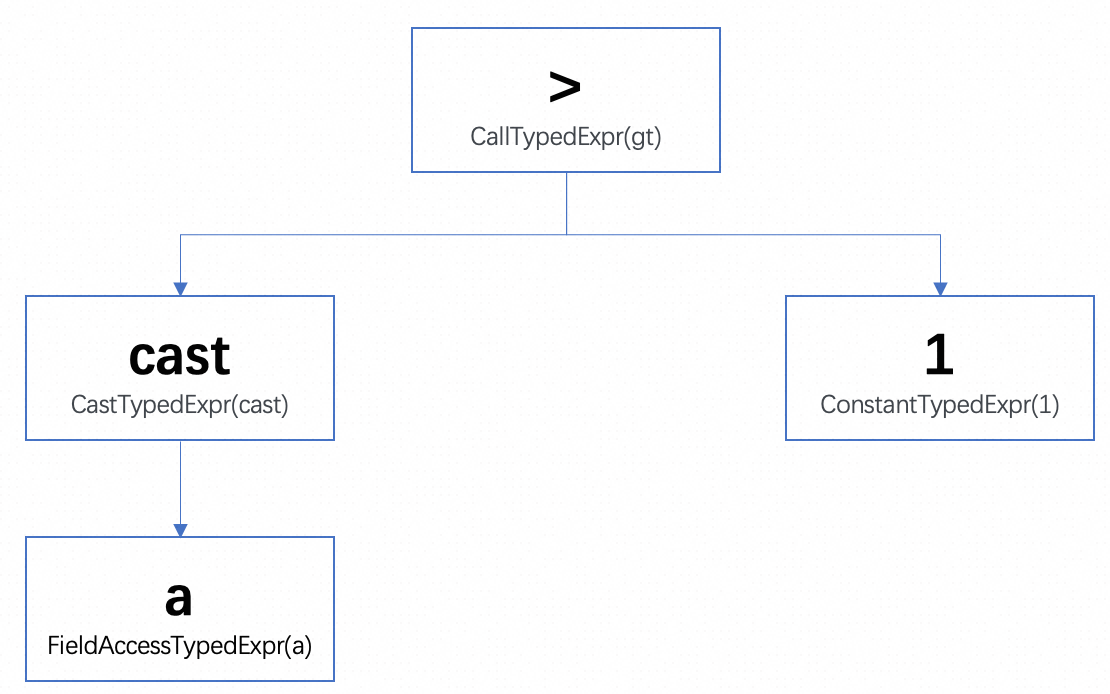

This article uses the expression cast(a as bigint) > 1 as an example to illustrate the execution process of Velox expressions, with some source code references included.

Velox is a vectorized computing engine, and the computing target of a Velox expression is a Vector. To optimize performance and memory usage, Velox provides vectors with different encodings for different scenarios, such as FlatVector, ConstantVector, and DictionaryVector.

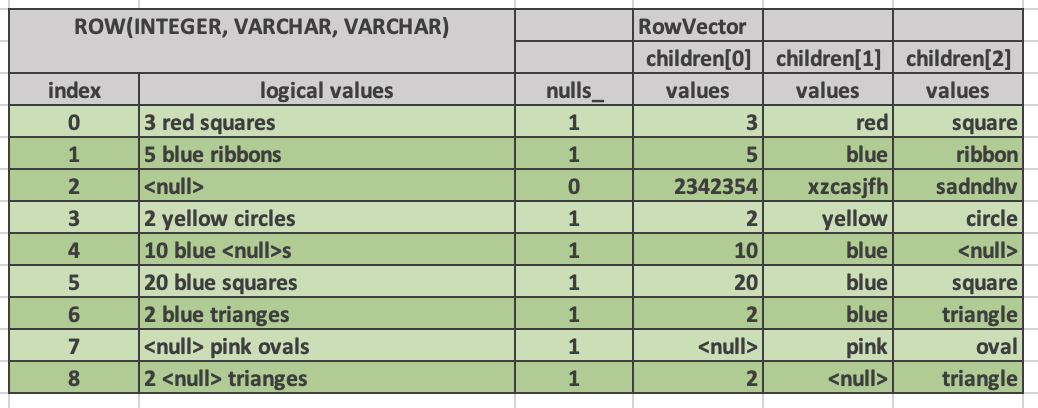

Velox has a structure called RowVector that represents multiple columns. Logically, a RowVector is a columnar table; physically, it holds an array of child column vectors. The number of children equals the number of columns, and each child can be, for example, a FlatVector or a DictionaryVector.

The following example shows the format of the RowVector used throughout this article. It has three columns, a (VARCHAR), b (INTEGER), and c (VARCHAR), with the following logical values:

| a<string> | b<int> | c<string> |
| --- | --- | --- |
| "2" | 3 | "a" |
| "a5" | 0 | "b" |
| null | 4 | "c" |
| "-1" | 4 | "d" |

In this article, we will use the given expression: cast(a as bigint) > 1 to investigate the internal implementation of Velox.

Next, I'll raise a few questions and answer them step by step through the source code.

• How is an expression represented, and how is it executed?

• Is it executed row by row or in column batches?

• The input has three columns: A, B, and C. Will columns B and C be used during computation? That is, will B and C take up extra memory?

• If column A is dictionary-encoded, will it be materialized before the expression is computed? Are there optimizations for different encodings?

• Will expression computation crash if the conversion fails for a value in column A? Is an exception thrown, or is the result null?

• What optimizations does Velox apply during execution?

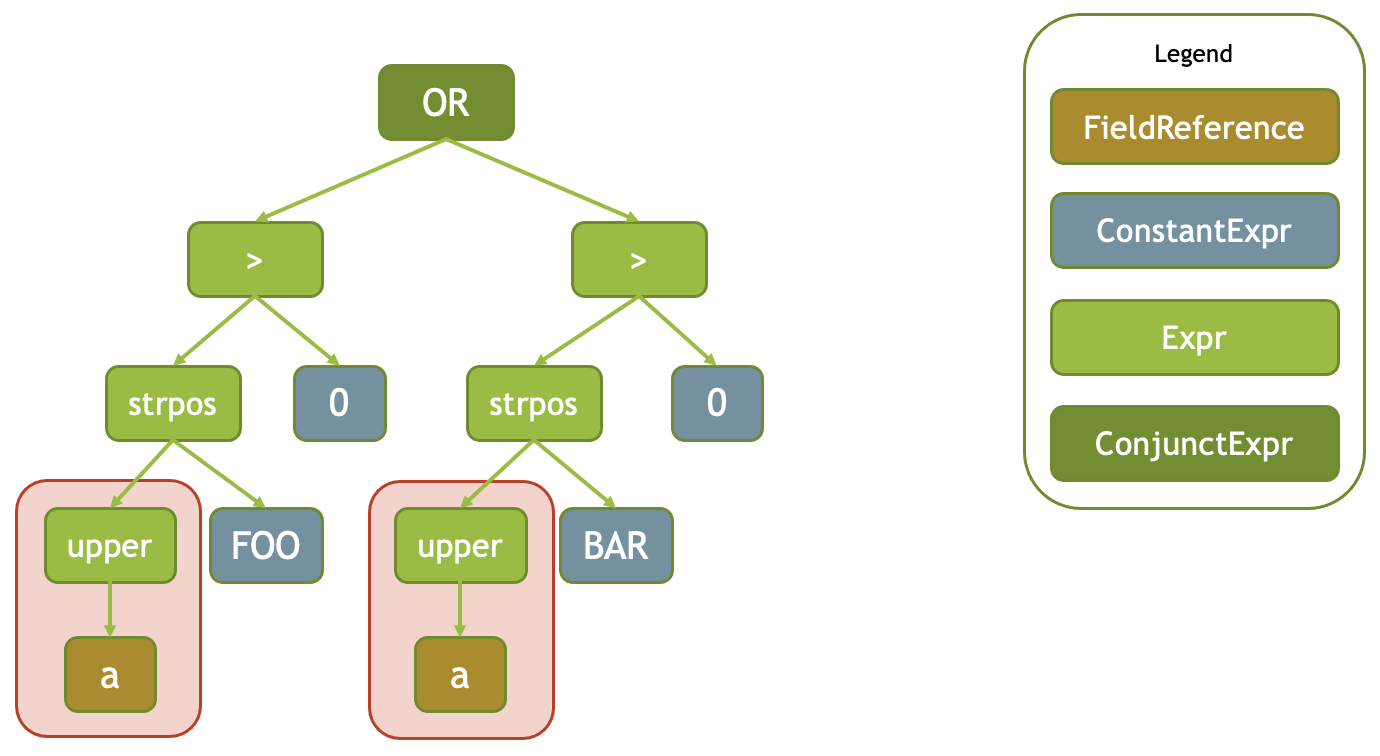

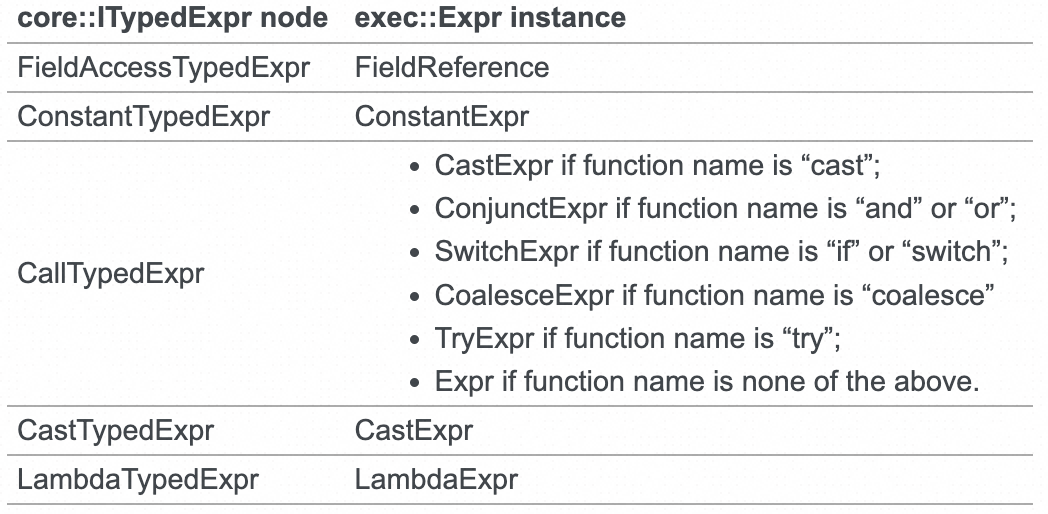

Like expressions in other languages, a Velox expression is usually described as a tree. The static nodes of the expression tree derive from core::ITypedExpr and come in five types:

| Node type | Description |
| --- | --- |
| FieldAccessTypedExpr | Represents a column in a RowVector and acts as a leaf node of the expression. |
| ConstantTypedExpr | Represents a constant value and acts as a leaf node. |
| CallTypedExpr | Represents a function call, whose child nodes are the input arguments. Also represents special forms such as if, and, or, switch, cast, try, and coalesce. |
| CastTypedExpr | Converts the type of an expression. |
| LambdaTypedExpr | Represents a lambda expression and acts as a leaf node. |

For the cast(a as bigint) > 1 expression, its corresponding expression tree (before compilation) is as follows:
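The tree itself appears only as a figure. As a rough textual stand-in, the sketch below models its shape: a comparison call at the root whose children are a cast of field a and the constant 1. The TypedExprNode struct, the NodeKind enum, and the function name "gt" are hypothetical simplifications for illustration, not Velox's actual core::ITypedExpr classes.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical node kinds, loosely following the node types listed above.
enum class NodeKind { FieldAccess, Constant, Call, Cast };

struct TypedExprNode {
  NodeKind kind;
  std::string type;   // result type, e.g. "VARCHAR", "BIGINT", "BOOLEAN"
  std::string label;  // field name, constant text, or function name
  std::vector<std::shared_ptr<TypedExprNode>> children;
};

using NodePtr = std::shared_ptr<TypedExprNode>;

NodePtr makeNode(NodeKind kind, std::string type, std::string label,
                 std::vector<NodePtr> children = {}) {
  return std::make_shared<TypedExprNode>(TypedExprNode{
      kind, std::move(type), std::move(label), std::move(children)});
}

// Prints the tree in prefix form, e.g. "f(x, y)".
std::string render(const NodePtr& node) {
  std::string out = node->label;
  if (!node->children.empty()) {
    out += "(";
    for (size_t i = 0; i < node->children.size(); ++i) {
      if (i > 0) out += ", ";
      out += render(node->children[i]);
    }
    out += ")";
  }
  return out;
}

// cast(a as bigint) > 1 before compilation (constant type assumed BIGINT):
//   Call "gt" (BOOLEAN)
//     Cast (BIGINT)
//       FieldAccess "a" (VARCHAR)
//     Constant 1 (BIGINT)
NodePtr buildExample() {
  auto a = makeNode(NodeKind::FieldAccess, "VARCHAR", "a");
  auto cast = makeNode(NodeKind::Cast, "BIGINT", "cast", {a});
  auto one = makeNode(NodeKind::Constant, "BIGINT", "1");
  return makeNode(NodeKind::Call, "BOOLEAN", "gt", {cast, one});
}
```

Calling render(buildExample()) yields gt(cast(a), 1), which reads the tree in prefix order.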

Evaluating a Velox expression involves two phases: compilation and execution. Compilation is similar to converting a PlanNode into an Operator: a static expression in the execution plan is converted into an executable expression instance.

Before compilation, an expression is represented as core::ITypedExpr; after compilation, as exec::Expr.

Execution uses depth-first traversal, because each parent node depends on the results of its child nodes.

In Expr, type_ is the return type and inputs_ holds the child nodes. If the expression is a function call, vectorFunction_ points to the corresponding function.

```cpp
class Expr {
  // ...
 private:
  const TypePtr type_;
  const std::vector<std::shared_ptr<Expr>> inputs_;
  const std::string name_;
  const std::shared_ptr<VectorFunction> vectorFunction_;
  const bool specialForm_;
  const bool supportsFlatNoNullsFastPath_;
  std::vector<VectorPtr> inputValues_;
};
```

Execution is mainly performed through the Expr::eval method, whose parameters are:

• rows specifies which rows participate in the computation.

• context holds the evaluation context, including the input RowVector and the memory pool.

• result receives the output of the expression, as a VectorPtr.

```cpp
class Expr {
  // ...
 public:
  void eval(
      const SelectivityVector& rows,
      EvalCtx& context,
      VectorPtr& result,
      const ExprSet* FOLLY_NULLABLE parentExprSet = nullptr);
  // ...
};
```

The main members of the EvalCtx class are as follows:

```cpp
class EvalCtx {
  const RowVector* FOLLY_NULLABLE row_;
  bool inputFlatNoNulls_;
  // Corresponds 1:1 to children of 'row_'. Set to an inner vector
  // after removing dictionary/sequence wrappers.
  std::vector<VectorPtr> peeledFields_;
  // Set if peeling was successful, that is, common encodings from inputs were
  // peeled off.
  std::shared_ptr<PeeledEncoding> peeledEncoding_;
};
```

Returning to the Expr::eval method, its main call stack is as follows:

```
evalAllImpl
evalSpecialFormWithStats(rows, context, result);
```

As the call sequence shows, Velox expressions are generally executed by post-order traversal: the child nodes are evaluated first, and then applyFunction is called on the current node.
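To make the post-order idea concrete, here is a toy evaluator under simplifying assumptions: columns are plain std::vector<int64_t> values with no nulls or encodings, and ToyExpr, Column, and Row are hypothetical names rather than Velox types. Each node evaluates its children first, collects their results in inputValues (mirroring inputValues_), and then invokes its function once on whole columns.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <memory>
#include <vector>

// Hypothetical stand-ins: a column is a plain vector of int64 values,
// and a row batch is a list of columns (no nulls, no encodings).
using Column = std::vector<int64_t>;
using Row = std::vector<Column>;

// A vector function receives whole columns, never single rows.
using VectorFn = std::function<Column(const std::vector<Column>&)>;

struct ToyExpr {
  int fieldIndex = -1;  // >= 0 means this node is a field reference (leaf)
  VectorFn fn;          // set for function-call nodes
  std::vector<std::shared_ptr<ToyExpr>> inputs;

  // Post-order evaluation: children first, then this node's function.
  Column eval(const Row& row) const {
    if (fieldIndex >= 0) {
      return row[static_cast<size_t>(fieldIndex)];
    }
    std::vector<Column> inputValues;  // mirrors Expr::inputValues_
    inputValues.reserve(inputs.size());
    for (const auto& child : inputs) {
      inputValues.push_back(child->eval(row));
    }
    return fn(inputValues);
  }
};
```

Because fn receives entire columns, a filter such as b > 1 over a four-row batch invokes the function once, not four times.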

In theory, the per-node logic of a post-order traversal is simple: evaluate each child recursively and put its result directly into inputValues_. So why are there intermediate steps such as evalEncodings and evalWithNulls? The reason is that Velox is heavily optimized for specific scenarios to achieve the best performance. The following sections explain expression execution by walking through these optimizations together with the questions raised earlier.

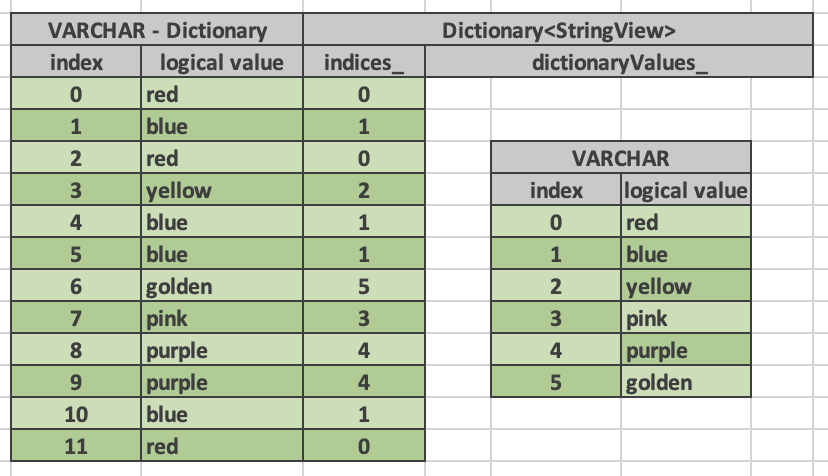

To explain evalEncodings, I will first introduce DictionaryVector and then explain how encodings are peeled from a DictionaryVector.

DictionaryVector is a heavily used vector type in Velox that represents dictionary encoding. It contains an inner vector, dictionaryValues_, and indices_ records, for each row, the position of that row's value in the inner vector. This makes the encoding useful when values repeat.

• Advantages: it uses little memory, and computation can run on dictionaryValues_ alone, avoiding repeated work on duplicate values.

• Disadvantages: reading a value through the outer vector requires decoding, that is, looking up the inner vector through indices_. Moreover, DictionaryVector supports multi-layer nesting, in which case reading one row means traversing every layer down to the innermost vector, so per-row access can be slow.

To make reading values from a DictionaryVector easier, Velox provides the DecodedVector class, which supports materializing a DictionaryVector. Its implementation peels off each layer of the DictionaryVector to obtain the innermost vector.
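The following sketch illustrates both the cost described above and the idea behind decoding. ToyVector, Decoded, and decode are hypothetical names; the real DecodedVector also handles nulls, constant vectors, and other encodings. valueAt walks every dictionary layer for each row, while decode composes the index layers once so that each row maps directly into the innermost flat vector.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <memory>
#include <string>
#include <vector>

// Hypothetical toy vector: flat when 'inner' is null, otherwise one
// dictionary layer whose 'indices' point into 'inner'.
struct ToyVector {
  std::vector<std::string> flatValues;  // payload of a flat vector
  std::vector<int32_t> indices;         // per-row positions in 'inner'
  std::shared_ptr<ToyVector> inner;     // wrapped vector (dictionary only)

  bool isDictionary() const { return inner != nullptr; }
  size_t size() const {
    return isDictionary() ? indices.size() : flatValues.size();
  }

  // Naive access: walks through every dictionary layer for each row.
  const std::string& valueAt(size_t row) const {
    const ToyVector* v = this;
    while (v->isDictionary()) {
      row = static_cast<size_t>(v->indices[row]);
      v = v->inner.get();
    }
    return v->flatValues[row];
  }
};

// Decoding in the spirit of DecodedVector: compose all index layers once,
// so that every outer row maps straight into the innermost flat vector.
struct Decoded {
  const ToyVector* base = nullptr;
  std::vector<int32_t> indices;
};

Decoded decode(const ToyVector& vector) {
  Decoded decoded;
  decoded.indices.resize(vector.size());
  for (size_t i = 0; i < decoded.indices.size(); ++i) {
    decoded.indices[i] = static_cast<int32_t>(i);  // start with identity
  }
  const ToyVector* v = &vector;
  while (v->isDictionary()) {
    for (auto& idx : decoded.indices) {
      idx = v->indices[static_cast<size_t>(idx)];  // step one layer inward
    }
    v = v->inner.get();
  }
  decoded.base = v;
  return decoded;
}
```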

In expression computation, suppose column A is of type Dict(Flat). Its innermost vector has length 3 with values ["2", "3", "5"], while A itself has length 1000 with values ["2", "3", "3", "3", "5", ...], so every value of A is one of "2", "3", and "5".

When executing cast(a as bigint), the intuitive approach is to traverse A, looping 1000 times and casting each value. However, this is not the most efficient approach.

In fact, only the inner vector needs to be computed, which takes three iterations instead of 1000 over materialized values. This is why evalEncodings exists. With multiple layers, such as Dict(Dict(Dict(Flat))), materializing before computing wastes even more computing resources. ConstantVector encoding has similar problems. Here is an example of peeling a DictionaryVector:

The main task of evalEncodings is to compute on the values behind a special encoding such as DictionaryVector, instead of directly on the logical values of the outer layer, to avoid materialization costs. The process is as follows:

• Check whether each field is of the Flat type. If it is not, peel its encoding to obtain the peeled vector and the encoding.

• Compute the result on the innermost vectors obtained by peeling.

• Wrap the result with the encoding obtained in the first step.
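The three steps can be sketched for the single-layer Dict(Flat) case from the example above. DictColumn, DictResult, castPeeled, and valueAt are hypothetical names, std::stoll stands in for Velox's cast, and the sketch simply copies the indices where a real engine could share the buffer. The counter shows that the cast runs once per dictionary entry rather than once per row.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical Dict(Flat) input column: distinct values plus per-row indices.
struct DictColumn {
  std::vector<std::string> dictionaryValues;
  std::vector<int32_t> indices;
};

// The result keeps the same dictionary shape, with BIGINT inner values.
struct DictResult {
  std::vector<int64_t> dictionaryValues;
  std::vector<int32_t> indices;
};

// Step 1 (peel) is trivial for a single layer: use dictionaryValues directly.
// Step 2: run the cast only on the inner values; 'calls' counts how often.
// Step 3: re-wrap the inner result with the original indices.
DictResult castPeeled(const DictColumn& input, int& calls) {
  DictResult result;
  result.dictionaryValues.reserve(input.dictionaryValues.size());
  for (const std::string& s : input.dictionaryValues) {
    ++calls;
    result.dictionaryValues.push_back(std::stoll(s));
  }
  result.indices = input.indices;  // copied here; a real engine can share it
  return result;
}

// Logical value of one row of the wrapped result.
int64_t valueAt(const DictResult& r, size_t row) {
  return r.dictionaryValues[static_cast<size_t>(r.indices[row])];
}
```

With 1000 rows drawn from ["2", "3", "5"], castPeeled performs three conversions, yet valueAt still answers for every one of the 1000 rows.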

Peeling is mainly performed by the PeeledEncoding::peel method, which ultimately produces an array of VectorPtr containing the inner vectors.

```cpp
std::vector<VectorPtr> peeledVectors;
auto peeledEncoding = PeeledEncoding::peel(
    vectorsToPeel, rowsToPeel, localDecoded, propagatesNulls_, peeledVectors);
```

The implementation is a do-while loop that peels fields one by one (line 5) and layer by layer (line 20) until the innermost layer is no longer DICTIONARY-encoded (line 13). The complete implementation also handles constant (Const) vectors; those details are omitted here to focus on the main logic.

```cpp
do {
  peeled = true;
  BufferPtr firstIndices;
  maybePeeled.resize(numFields);
  for (int fieldIndex = 0; fieldIndex < numFields; fieldIndex++) {
    auto leaf = peeledVectors.empty() ? vectorsToPeel[fieldIndex]
                                      : peeledVectors[fieldIndex];
    if (leaf == nullptr) {
      continue;
    }
    // ...
    auto encoding = leaf->encoding();
    if (encoding == VectorEncoding::Simple::DICTIONARY) {
      // ...
      setPeeled(leaf->valueVector(), fieldIndex, maybePeeled);
    } else {
      // ...
    }
  }
  if (peeled) {
    ++numLevels;
    peeledVectors = std::move(maybePeeled);
  }
} while (peeled && nonConstant);
```

The resulting peeledVectors array, ordered by field index, is eventually stored in the peeledFields_ of EvalCtx.
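A single re-wrap of the result is only valid if all peeled fields were wrapped by the same dictionary layers, which is consistent with the EvalCtx comment that peeling removes common encodings. The sketch below is a simplified, hypothetical model of that loop (Col, PeelResult, and peelCommon are illustrative names, and constant vectors and nulls are ignored): it peels one layer per iteration for all fields at once and stops at a flat vector or when the fields' indices differ.

```cpp
#include <cassert>
#include <cstdint>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical toy column: flat when 'inner' is null, otherwise a dictionary
// layer whose 'indices' point into 'inner'.
struct Col;
using ColPtr = std::shared_ptr<const Col>;
using IndicesPtr = std::shared_ptr<const std::vector<int32_t>>;

struct Col {
  std::vector<int64_t> values;  // flat payload
  IndicesPtr indices;           // dictionary indices
  ColPtr inner;                 // wrapped column
};

struct PeelResult {
  std::vector<ColPtr> fields;        // innermost vectors reached
  std::vector<IndicesPtr> wrappers;  // removed layers, outermost first
};

// Peels one dictionary layer per iteration, for all fields at once, but only
// while every field is wrapped by the same indices buffer, so that a single
// re-wrap of the result is valid afterwards.
PeelResult peelCommon(std::vector<ColPtr> fields) {
  PeelResult result;
  bool peeled = true;
  while (peeled && !fields.empty()) {
    IndicesPtr firstIndices;
    for (const auto& field : fields) {
      if (!field->inner) {  // reached a flat vector: stop peeling
        peeled = false;
        break;
      }
      if (!firstIndices) {
        firstIndices = field->indices;
      } else if (field->indices != firstIndices) {  // wrappers differ
        peeled = false;
        break;
      }
    }
    if (peeled) {  // every field shares this layer: remove it
      for (auto& field : fields) field = field->inner;
      result.wrappers.push_back(firstIndices);
    }
  }
  result.fields = std::move(fields);
  return result;
}
```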

How does EvalCtx use the peeled vectors? EvalCtx has a getField method that returns the vector of a given column for computing. Let's look at it first and then find where it is called.

```cpp
const VectorPtr& EvalCtx::getField(int32_t index) const {
  const VectorPtr* field;
  if (!peeledFields_.empty()) {
    field = &peeledFields_[index];
  } else {
    field = &row_->childAt(index);
  }
  // ...
  return *field;
}
```

Go back to the beginning of the expression execution process. evalAllImpl contains the following code:

```cpp
if (isSpecialForm()) {
  evalSpecialFormWithStats(rows, context, result);
  return;
}
```

In the cast(a as bigint) > 1 expression, the executable expression corresponding to a is a FieldReference, which is a special form, so isSpecialForm() returns true:

```cpp
class FieldReference : public SpecialForm {
  // ...
};
```

Therefore, when FieldReference is executed (it is a leaf node, so post-order traversal reaches it first), evalSpecialForm is called, and its implementation calls context.getField(index_) (line 12 of the following excerpt). This shows that the peeled inner vector is what gets used whenever a field of the RowVector is read.

```cpp
if (inputs_.empty()) {
  row = context.row();
} else {
  // ...
}
if (index_ == -1) {
  auto rowType = dynamic_cast<const RowType*>(row->type().get());
  VELOX_CHECK(rowType);
  index_ = rowType->getChildIdx(field_);
}
VectorPtr child =
    inputs_.empty() ? context.getField(index_) : row->childAt(index_);
// ...
```

• At the beginning of eval, evalEncodings performs the peeling and stores the peeled results in the context.

• Then evalAllImpl traverses the tree. When a FieldReference leaf is executed, it already reads the peeled result.

• This also answers the third question raised earlier: does cast(a as bigint) > 1 use columns B and C? No. Only the column at index_ is read. Are B and C touched during peeling? Also no, because distinctFields_ is computed from the expression, not from the input. The expression references only A, so only A is peeled.

• After computing on the peeled data, Velox wraps the result in the original encoding. For example, cast(a as bigint) is actually executed only three times, but the caller needs 1000 results, so the result must be wrapped with the dictionary encoding.
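The claim that only A is peeled follows from computing the referenced fields from the expression tree rather than from the input. A minimal sketch, assuming a hypothetical Node struct in which a non-empty field marks a field reference, is a simple tree walk; it is an illustration of the idea, not Velox's own distinctFields_ computation.

```cpp
#include <cassert>
#include <memory>
#include <set>
#include <string>
#include <vector>

// Hypothetical minimal node: a non-empty 'field' marks a field reference;
// every other node (call, cast, constant) only contributes its children.
struct Node {
  std::string field;
  std::vector<std::shared_ptr<Node>> children;
};

// Collects the distinct columns an expression reads, using only the tree.
void collectFields(const Node& node, std::set<std::string>& out) {
  if (!node.field.empty()) {
    out.insert(node.field);
  }
  for (const auto& child : node.children) {
    collectFields(*child, out);
  }
}
```

For cast(a as bigint) > 1 the result is {"a"}, so columns b and c are never looked at, regardless of how many columns the input RowVector carries.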

As the name suggests, evalWithNulls handles null values. Why is special handling needed? Most SQL functions return null whenever an input is null; for example, 1 + null is null.

In such cases, it is enough to check whether an input row contains a null, without actually computing the expression for that row. Here is how Velox's evalWithNulls implements this:

• Check whether any input column may contain nulls (line 6).

• If so (line 11), remove the rows that are certainly null (line 13) and pass the remaining rows to evalAll, which processes only non-null rows (line 16).

• After processing, add the nulls back into the result (line 19).

```cpp
if (propagatesNulls_ && !skipFieldDependentOptimizations()) {
  bool mayHaveNulls = false;
  for (auto* field : distinctFields_) {
    const auto& vector = context.getField(field->index(context));
    //...
    if (vector->mayHaveNulls()) {
      mayHaveNulls = true;
      break;
    }
  }
  if (mayHaveNulls) {
    LocalSelectivityVector nonNullHolder(context);
    if (removeSureNulls(rows, context, nonNullHolder)) {
      ScopedVarSetter noMoreNulls(context.mutableNullsPruned(), true);
      if (nonNullHolder.get()->hasSelections()) {
        evalAll(*nonNullHolder.get(), context, result);
      }
      auto rawNonNulls = nonNullHolder.get()->asRange().bits();
      addNulls(rows, rawNonNulls, context, result);
      return;
    }
  }
}
```

As shown, Velox simply removes the null rows so that they are never computed.
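The following is a simplified, hypothetical model of this strategy (NullableColumn, nonNullRows, and evalWithNulls are illustrative names, and std::optional stands in for Velox's null bitmaps). It first selects rows with no null inputs, evaluates the function only on those rows, and leaves the remaining rows null.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <vector>

// Hypothetical nullable column: std::nullopt plays the role of a null.
using NullableColumn = std::vector<std::optional<int64_t>>;

// Like removeSureNulls: keep only rows where every input is non-null.
std::vector<bool> nonNullRows(const std::vector<NullableColumn>& inputs,
                              size_t numRows) {
  std::vector<bool> selected(numRows, true);
  for (const auto& column : inputs) {
    for (size_t row = 0; row < numRows; ++row) {
      if (!column[row].has_value()) selected[row] = false;
    }
  }
  return selected;
}

// Evaluates 'fn' only on the selected rows; the other rows stay null,
// which plays the role of addNulls. 'evaluated' counts calls to 'fn'.
template <typename Fn>
NullableColumn evalWithNulls(const std::vector<NullableColumn>& inputs,
                             size_t numRows, Fn fn, int& evaluated) {
  const std::vector<bool> selected = nonNullRows(inputs, numRows);
  NullableColumn result(numRows);  // every entry starts as std::nullopt
  for (size_t row = 0; row < numRows; ++row) {
    if (!selected[row]) continue;
    ++evaluated;
    result[row] = fn(inputs, row);
  }
  return result;
}
```

For x + y with x = {1, null, 3} and y = {10, 20, null}, only the first row is computed, and the other two rows come back null without any arithmetic.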

After all child nodes have been evaluated, applyFunction is executed if the current expression node is a function call. Let's look at its core implementation:

• It includes an ASCII optimization: if the inputs and the output are all ASCII, the callAscii path is used for more efficient processing.

• At its core (line 16), it calls vectorFunction_->apply to compute the result.

• The input is the inputValues_ array, whose length equals the number of child nodes of the function expression; its elements are the function's arguments (during the traversal above, each child's result was placed in inputValues_).

• The output is result, a VectorPtr.

• The arguments passed to vectorFunction_ are therefore column vectors rather than rows of data, which answers the earlier question: Velox evaluates expressions in column batches, not row by row.
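As an illustration of why knowing that a batch is pure ASCII helps, consider a string length function: for ASCII text the character count equals the byte count, while general UTF-8 requires scanning for code-point boundaries. This is a hypothetical sketch (isAscii, utf8Length, and lengthOfColumn are illustrative names), not Velox's callAscii mechanism itself.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

// True if every byte is 7-bit ASCII.
bool isAscii(const std::string& s) {
  for (unsigned char c : s) {
    if (c >= 0x80) return false;
  }
  return true;
}

// General path: count UTF-8 code points by skipping continuation bytes.
int64_t utf8Length(const std::string& s) {
  int64_t n = 0;
  for (unsigned char c : s) {
    if ((c & 0xC0) != 0x80) ++n;
  }
  return n;
}

// Hypothetical column-level length(): check the batch once; if it is all
// ASCII, each character is one byte and the byte size is the answer.
std::vector<int64_t> lengthOfColumn(const std::vector<std::string>& column,
                                    bool& usedAsciiPath) {
  usedAsciiPath = true;
  for (const auto& s : column) {
    if (!isAscii(s)) {
      usedAsciiPath = false;
      break;
    }
  }
  std::vector<int64_t> out;
  out.reserve(column.size());
  for (const auto& s : column) {
    out.push_back(usedAsciiPath ? static_cast<int64_t>(s.size())
                                : utf8Length(s));
  }
  return out;
}
```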

```cpp
void Expr::applyFunction(
    const SelectivityVector& rows,
    EvalCtx& context,
    VectorPtr& result) {
  stats_.numProcessedVectors += 1;
  stats_.numProcessedRows += rows.countSelected();
  auto timer = cpuWallTimer();
  std::optional<bool> isAscii = std::nullopt;
  if (FLAGS_enable_expr_ascii_optimization) {
    computeIsAsciiForInputs(vectorFunction_.get(), inputValues_, rows);
    isAscii = type()->isVarchar()
        ? computeIsAsciiForResult(vectorFunction_.get(), inputValues_, rows)
        : std::nullopt;
  }
  try {
    vectorFunction_->apply(rows, inputValues_, type(), context, result);
  } catch (const VeloxException& ve) {
    throw;
  } catch (const std::exception& e) {
    VELOX_USER_FAIL(e.what());
  }
  // ...
}
```

The definition of VectorFunction shows that apply takes a list of column vectors. To implement a vector function, you inherit from VectorFunction and implement apply.

```cpp
class VectorFunction {
  // ...
  virtual void apply(
      const SelectivityVector& rows,
      std::vector<VectorPtr>& args, // Not using const ref so we can reuse args
      const TypePtr& outputType,
      EvalCtx& context,
      VectorPtr& result) const = 0;
};
```

Are all Velox functions implemented by inheriting VectorFunction? The answer is no. Writing every function against column vectors would be complex, and most functions only need to define the logic for a single row while the framework iterates over the rows. Velox calls these SimpleFunctions. Where column-vector inputs offer clear advantages, such as aggregate evaluation or inputs encoded as constant or dictionary vectors, a function can be implemented as a VectorFunction instead.
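One reason to write a VectorFunction is that it sees how its input is encoded. The hypothetical sketch below (ToyColumn and sqrtColumn are illustrative, not Velox types) represents a constant column as a single value plus a row count, so the function computes one result for all rows instead of one per row.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical toy column: when 'isConstant' is true, 'values' holds the
// single value shared by all 'size' rows; otherwise one value per row.
struct ToyColumn {
  bool isConstant = false;
  std::vector<double> values;
  size_t size = 0;
};

// A column-level sqrt: it keeps the input's encoding, so a constant input
// costs one computation no matter how many rows the batch has.
ToyColumn sqrtColumn(const ToyColumn& in, int& calls) {
  ToyColumn out;
  out.isConstant = in.isConstant;
  out.size = in.size;
  out.values.reserve(in.values.size());
  for (double v : in.values) {
    ++calls;
    out.values.push_back(std::sqrt(v));
  }
  return out;
}
```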

Most Velox functions are SimpleFunctions, which implement single-row logic. In the simplest case, only a call method needs to be implemented, as in CeilFunction:

```cpp
template <typename T>
struct CeilFunction {
  template <typename TOutput, typename TInput = TOutput>
  FOLLY_ALWAYS_INLINE void call(TOutput& result, const TInput& a) {
    if constexpr (std::is_integral_v<TInput>) {
      result = a;
    } else {
      result = ceil(a);
    }
  }
};
```

This is the simplest form of a SimpleFunction. Although a SimpleFunction processes one row at a time, Velox still supports many optimizations in function implementations, for example:

Zero-copy strings: output strings can reuse the input strings by setting reuse_strings_from_arg.
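The idea behind zero-copy strings can be shown with std::string_view: a function like trim can return a view into its input instead of copying bytes. The hypothetical trimView below is only a sketch of the concept. As we understand it, reuse_strings_from_arg instead tells Velox which argument's string buffers the result may point into, so the framework can keep those buffers alive.

```cpp
#include <cassert>
#include <string>
#include <string_view>

// Hypothetical zero-copy trim: the result is a view into the caller's buffer,
// so no characters are copied. The view is only valid while that buffer lives,
// which is why a framework must keep reused input buffers alive.
std::string_view trimView(std::string_view s) {
  const auto first = s.find_first_not_of(' ');
  if (first == std::string_view::npos) {
    return s.substr(s.size());  // all spaces: empty view at the end
  }
  const auto last = s.find_last_not_of(' ');
  return s.substr(first, last - first + 1);
}
```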

One last question: how is a SimpleFunction converted into a VectorFunction, given that Expr works with VectorFunction? Velox does this through SimpleFunctionAdapter: when a SimpleFunction is registered, a SimpleFunctionAdapterFactoryImpl is registered for it.

```cpp
// This function should be called once and alone.
template <typename UDFHolder>
void registerSimpleFunction(const std::vector<std::string>& names) {
  mutableSimpleFunctions()
      .registerFunction<SimpleFunctionAdapterFactoryImpl<UDFHolder>>(names);
}
```

Let's look at the implementation of SimpleFunctionAdapterFactoryImpl:

```cpp
template <typename UDFHolder>
class SimpleFunctionAdapterFactoryImpl : public SimpleFunctionAdapterFactory {
 public:
  // Exposed for use in FunctionRegistry
  using Metadata = typename UDFHolder::Metadata;

  explicit SimpleFunctionAdapterFactoryImpl() {}

  std::unique_ptr<VectorFunction> createVectorFunction(
      const core::QueryConfig& config,
      const std::vector<VectorPtr>& constantInputs) const override {
    return std::make_unique<SimpleFunctionAdapter<UDFHolder>>(
        config, constantInputs);
  }
};
```

The conversion from SimpleFunction to VectorFunction is therefore implemented in createVectorFunction.
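Conceptually, the adapter turns a row-level call into a column-level apply by looping over the selected rows. The sketch below is a heavily simplified, hypothetical version (ToySimpleFunctionAdapter and ToyCeil are illustrative names, with std::vector<bool> standing in for SelectivityVector); the real SimpleFunctionAdapter also handles nulls, encodings, and typed readers and writers.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical adapter: turns a row-level UDF (with a call() method) into a
// column-level apply(), with std::vector<bool> marking the selected rows.
template <typename UDF>
class ToySimpleFunctionAdapter {
 public:
  void apply(const std::vector<bool>& selected,
             const std::vector<double>& input,
             std::vector<double>& result) const {
    result.assign(input.size(), 0.0);  // unselected rows are left as 0.0
    UDF udf;
    for (size_t row = 0; row < input.size(); ++row) {
      if (selected[row]) {
        udf.call(result[row], input[row]);  // one row at a time
      }
    }
  }
};

// A row-level function with the same shape as CeilFunction::call.
struct ToyCeil {
  void call(double& result, double a) { result = std::ceil(a); }
};
```

The UDF author writes only ToyCeil::call; the loop over rows lives once in the adapter and is reused by every simple function.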

Where does the conversion take place? During the construction of Expr: when compileExpression builds an ExprPtr, the SimpleFunction is converted into a VectorFunction and stored in the Expr.

```cpp
if (auto simpleFunctionEntry =
        simpleFunctions().resolveFunction(call->name(), inputTypes)) {
  VELOX_USER_CHECK(
      resultType->equivalent(*simpleFunctionEntry->type().get()),
      "Found incompatible return types for '{}' ({} vs. {}) "
      "for input types ({}).",
      call->name(),
      simpleFunctionEntry->type(),
      resultType,
      folly::join(", ", inputTypes));
  auto func = simpleFunctionEntry->createFunction()->createVectorFunction(
      config, getConstantInputs(compiledInputs));
  result = std::make_shared<Expr>(
      resultType,
      std::move(compiledInputs),
      std::move(func),
      call->name(),
      trackCpuUsage);
}
```

One more question: if the conversion fails for a value in column A, will expression computation crash? Is an exception thrown, or is the result null?

What happens when a string value of a in cast(a as bigint) cannot be converted? Let's look directly at the source code. The executable expression for a cast is CastExpr:

```cpp
class CastExpr : public SpecialForm {
  // ...
};
```

Next, look at its evalSpecialForm implementation. CastExpr calls context.applyToSelectedNoThrow in many places during conversion; judging by the name, no exception is thrown:

```cpp
context.applyToSelectedNoThrow(rows, [&](int row) {
  // ...
});
```

Is that really the case? Its implementation shows that it does catch exceptions:

```cpp
template <typename Callable>
void applyToSelectedNoThrow(const SelectivityVector& rows, Callable func) {
  rows.template applyToSelected([&](auto row) INLINE_LAMBDA {
    try {
      func(row);
    } catch (const std::exception& e) {
      setError(row, std::current_exception());
    }
  });
}
```

In setError, whether the exception is rethrown (line 5) depends on the throwOnError_ field of EvalCtx. If throwOnError_ is true, the exception is thrown; otherwise, the error is recorded through addError.

```cpp
void EvalCtx::setError(
    vector_size_t index,
    const std::exception_ptr& exceptionPtr) {
  if (throwOnError_) {
    throwError(exceptionPtr);
  }
  addError(index, toVeloxException(exceptionPtr), errors_);
}
```

In Expr.h, the field is declared as bool throwOnError_{true}, so the default is true and a failed cast throws an exception. To prevent the exception, throwOnError_ can be set to false temporarily with ScopedVarSetter. TryExpr.cpp contains a similar call, so expressions wrapped in try do not throw:

```cpp
ScopedVarSetter throwOnError(context.mutableThrowOnError(), false);
```

TryExpr then uses context.errors() to obtain the errors of the expression and, while handling them, sets the result to null. In short, cast(a as bigint) throws an exception on a value such as "a5", whereas try(cast(a as bigint)) returns null for that row.
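The whole flow can be modeled in a few lines. In this hypothetical sketch, ToyCtx mirrors the role of EvalCtx::throwOnError_ and the error list, ToyScopedSetter mirrors ScopedVarSetter, and castToBigint is a strict std::stoll-based conversion; none of these are Velox APIs. A plain cast rethrows the first failure, while the try variant disables throwing and turns failed rows into nulls.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <exception>
#include <optional>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical context: 'throwOnError' mirrors EvalCtx::throwOnError_,
// 'errors' records which rows failed.
struct ToyCtx {
  bool throwOnError = true;
  std::vector<bool> errors;

  void setError(size_t row, std::exception_ptr e) {
    if (throwOnError) {
      std::rethrow_exception(e);  // default behavior: surface the error
    }
    if (errors.size() <= row) errors.resize(row + 1, false);
    errors[row] = true;  // otherwise just remember the failed row
  }
};

// RAII helper in the spirit of ScopedVarSetter: sets a value and restores
// the previous one when the scope ends.
template <typename T>
class ToyScopedSetter {
 public:
  ToyScopedSetter(T* target, T value) : target_(target), old_(*target) {
    *target_ = value;
  }
  ~ToyScopedSetter() { *target_ = old_; }
  ToyScopedSetter(const ToyScopedSetter&) = delete;
  ToyScopedSetter& operator=(const ToyScopedSetter&) = delete;

 private:
  T* target_;
  T old_;
};

// Strict string -> int64 conversion; rejects input such as "a5" or "5a".
int64_t castToBigint(const std::string& s) {
  size_t pos = 0;
  const int64_t value = std::stoll(s, &pos);
  if (pos != s.size()) throw std::invalid_argument("cannot cast: " + s);
  return value;
}

// cast(a as bigint): per-row failures are routed through setError,
// like the callback passed to applyToSelectedNoThrow.
std::vector<std::optional<int64_t>> evalCast(const std::vector<std::string>& a,
                                             ToyCtx& ctx) {
  std::vector<std::optional<int64_t>> out(a.size());
  for (size_t row = 0; row < a.size(); ++row) {
    try {
      out[row] = castToBigint(a[row]);
    } catch (const std::exception&) {
      ctx.setError(row, std::current_exception());
    }
  }
  return out;
}

// try(cast(a as bigint)): disable throwing, then null out the failed rows.
std::vector<std::optional<int64_t>> evalTryCast(
    const std::vector<std::string>& a, ToyCtx& ctx) {
  ToyScopedSetter<bool> noThrow(&ctx.throwOnError, false);
  ctx.errors.clear();
  auto out = evalCast(a, ctx);
  for (size_t row = 0; row < ctx.errors.size(); ++row) {
    if (ctx.errors[row]) out[row] = std::nullopt;
  }
  return out;
}
```

After evalTryCast returns, the scoped setter has restored throwOnError to true, so later expressions keep the default strict behavior.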

In Velox, there is also a class called ExprSet, which stores a list of Exprs. Its eval method calls eval on each Expr in turn. The advantage of ExprSet is that when multiple Exprs are evaluated together, common subexpressions are computed only once, avoiding unnecessary repetition.

In practical applications of Operators, ExprSet is often used instead of directly using Exprs. For example, the FilterProject operator uses ExprSet to store one Expr for filtering and multiple Exprs for projection simultaneously.
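A rough sketch of the common-subexpression benefit: if a filter and a projection both contain cast(a as bigint), the shared part needs to be computed only once per batch. The hypothetical ToyExprSet below memoizes results by a string key for simplicity; as far as we know, Velox instead identifies shared subexpressions when compiling the expressions, not by string keys.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <map>
#include <string>
#include <vector>

// Hypothetical sketch of sharing a common subexpression across expressions
// evaluated together: results are memoized per batch by a string key.
class ToyExprSet {
 public:
  using Column = std::vector<int64_t>;

  // Computes 'key' via 'compute' only if it has not been computed this batch.
  const Column& evalShared(const std::string& key,
                           const std::function<Column()>& compute) {
    auto it = cache_.find(key);
    if (it == cache_.end()) {
      ++computations_;
      it = cache_.emplace(key, compute()).first;
    }
    return it->second;
  }

  void startBatch() { cache_.clear(); }  // results are valid for one batch only
  int computations() const { return computations_; }

 private:
  std::map<std::string, Column> cache_;
  int computations_ = 0;
};
```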

Throughout its implementation of expressions, Velox applies targeted optimizations for different scenarios, which are worth learning from: during expression execution, it chooses the most efficient execution path based on the input. The Velox source code contains many more details. However, due to limited space and the limits of my own knowledge, some related concepts are not covered, such as common subexpression detection, AND/OR expression flattening, constant folding, and SIMD.

https://github.com/facebookincubator/velox

https://facebookincubator.github.io/velox/develop/expression-evaluation.html

https://facebookincubator.github.io/velox/develop/scalar-functions.html

Disclaimer: The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
