It is a classic issue to find TopK in massive data, and the derived deep pagination query brings great challenges to analytical databases. This article introduces how the IMCI feature of the cloud-native relational database PolarDB addresses such challenges and ultimately outperforms ClickHouse whose performance is excellent in single table queries.

There is a common scenario in business systems where a batch of records is filtered based on given conditions. These records are sorted by user-specified conditions and displayed in paging mode. For example, filter out the products a merchant is selling, rank them by sales, and display them in pagination mode.

The preceding scenarios, reflected in databases, are often implemented with the TopK query like ORDER BY column LIMIT n, m. For example, assuming that 100 records are displayed on each page in the business system, page 1 can be displayed by ORDER BY column LIMIT 0, 100, and page 10001 can be displayed by ORDER BY column LIMIT 1000000, 100.

In the absence of indexes, such queries are often implemented in the database through the classic heap-based TopK algorithm. A heap of size K is maintained in the memory, and the top of the heap is the record currently ranked Kth. This heap is maintained in real-time during the execution of the algorithm to ensure that the records in the heap are always ranked in the top K. When the shallow pagination query is performed (as shown above on page 1), K is small. The heap-based TopK algorithm above is very efficient.

Deep pagination query also occurs in business scenarios (hereinafter referred to as deep pagination), such as page 10001 above. In this scenario, K is very large, and the heap with size K may not be cached in the memory, and the query results cannot be obtained by the preceding method. Even if the memory is sufficient, since the operation of maintaining the heap is out of order, when the heap is very large, the memory access efficiency of the traditional TopK algorithm is less efficient, and the final performance is unsatisfactory.

PolarDB IMCI uses the preceding method to implement such queries. When the memory is insufficient to cache the heap of size K, this query method degrades to a full-table sorting, and the system fetches the records in the corresponding position after sorting. Therefore, the performance in deep pagination queries is not very satisfactory. To this end, we analyzed the characteristics of deep pagination scenarios and the problems of traditional solutions, investigated related research and industrial implementations, and redesigned the Sort/TopK operator of PolarDB IMCI. In the test scenario, the redesigned Sort/TopK operator significantly improves the performance of PolarDB IMCI in deep pagination scenarios.

TopK is a very classic issue. There are many solutions to efficiently implement TopK queries. The core of these solutions is to reduce operations on non-result set data. Three main solutions have been applied in the industry:

It has been briefly introduced in the background part.

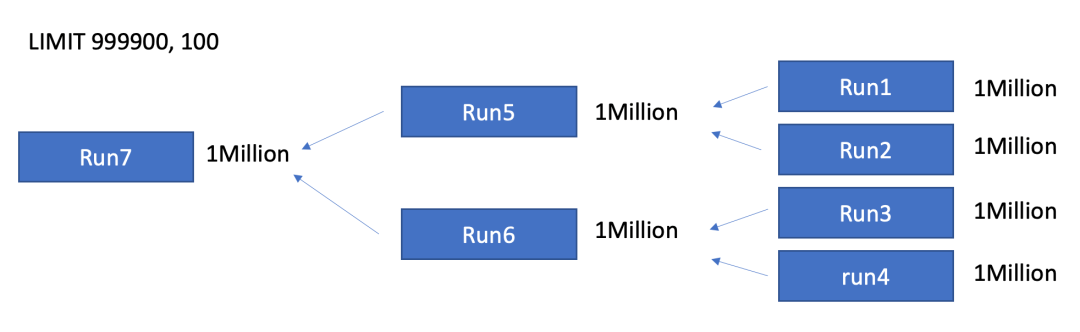

When the memory is insufficient to cache the Priority Queue of size K, some databases use merge sort to process TopK queries (such as PolarDB IMCI, ClickHouse, SQL Server, and DuckDB). Since the TopK query only needs to obtain the record ranked in the [offset, offset + limit) position, you do not need to sort all data during each merge sorted run. You only need to output the new sorted run with the length of offset + limit. The preceding truncation operation during merge can ensure the correctness of the result and reduce the number of operations on non-result set data.

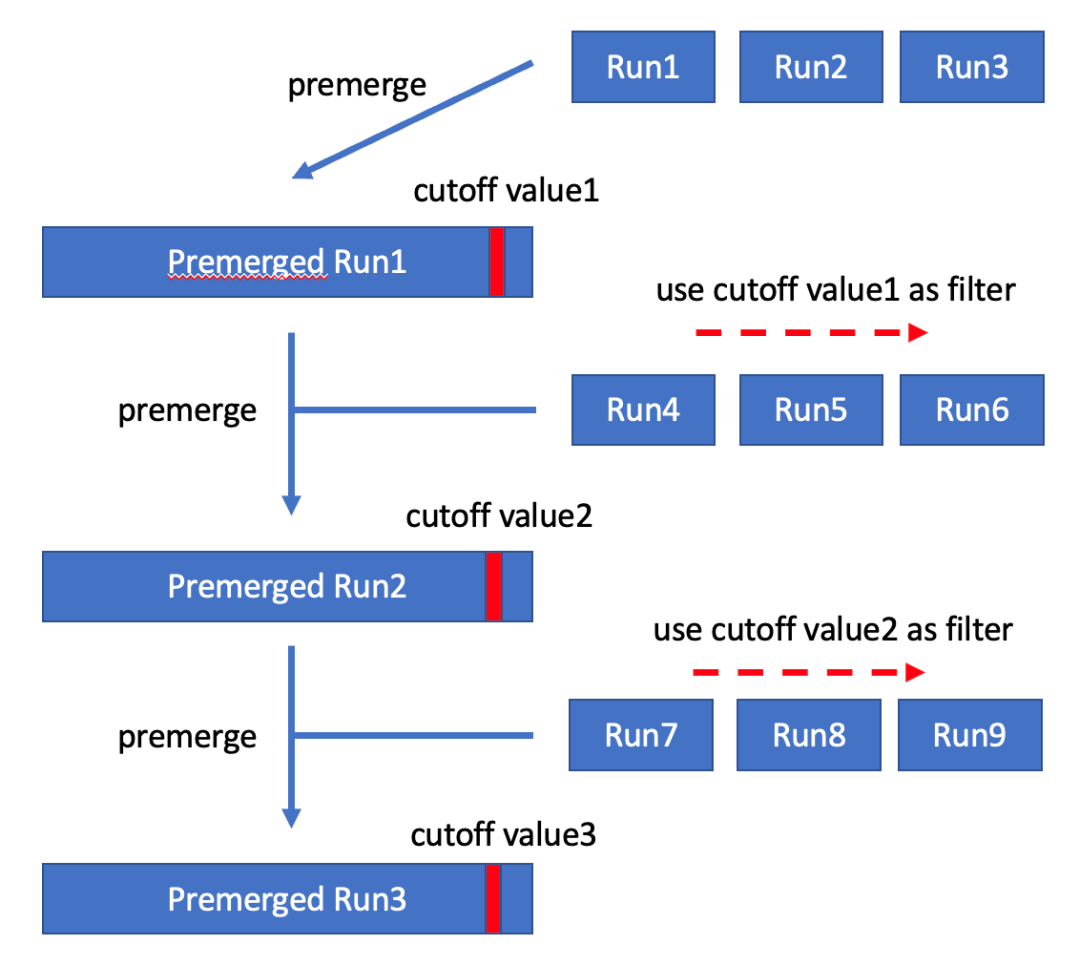

This solution was originally proposed in Goetz Graefe's paper, and ClickHouse adopts this solution. This solution maintains a cutoff value during execution and ensures that records larger than the cutoff value will not appear in the result set of TopK. When the new sorted run is generated, the solution will filter the data using the current cutoff value. After the new sorted run is generated, if K is less than the length of the new sorted run, the current cutoff value is replaced with the Kth record in the new sorted run. Since the data in the new sorted run is filtered by the old cutoff value, there must be new cutoff value <= old cutoff value, which means the cutoff value is a constantly sharpening value. Finally, you only need to merge these filtered sorted runs to obtain the result set.

A simple example is used to illustrate the preceding algorithm: assume that K=3 in the current TopK query, the sorted run generated after the first batch of data is read is (1, 2, 10, 15, 21), and the cutoff value is updated to 10. Next, use the cutoff value=10 to filter the second batch of data. If the generated second sorted run is (2, 3, 5, 6, 8), the cutoff value is updated to 5. Then, the third batch of data is read and filtered. If the generated third sorted run is (1, 2, 3, 3, 3), the cutoff value is updated to 3, etc. Keep sharpening the cutoff value to filter more data.

If K in the TopK query is greater than the length of a single sorted run, this solution accumulates enough sorted runs (the number of records contained is greater than K) and then pre-merges these sorted runs to obtain the cutoff value. Next, we can use the cutoff value to filter data and continue to accumulate enough sorted runs to obtain a smaller cutoff value, etc. The entire execution process is similar to the case where K is less than the length of a single sorted run. The difference is that you must merge enough sorted runs to obtain the cutoff value.

Deep pagination is a special scenario in the TopK query. The special feature is that the queried K is extremely large, but the result set is very small. For example, in the example shown on page 10001 above, for ORDER BY column LIMIT 1000000, 100, K=1,000,100, but the final result set contains only 100 records. This feature presents the following challenges to the solution described in the previous section:

Note: In this topic, sufficient memory means the data structure used to manage at least K records in the algorithm can be cached in the execution memory, not that the input data of TopK queries can be cached in the execution memory. In the scenarios discussed in this article, the input data for TopK queries is much larger than the execution memory.

In addition, from the perspective of system design, the following two points should be considered when designing a solution to deep pagination queries:

Based on the research and analysis above, we redesigned the Sort/TopK operator of PolarDB IMCI to address the challenges brought by deep pagination based on the existing mature solutions:

Instead of relying on a fixed threshold to select a memory or disk algorithm, we dynamically adjust the algorithm used during execution based on the amount of available execution memory.

Since the two solutions have been introduced in the previous section, only the reasons for choosing these two solutions will be introduced next. This article also introduces the use of SIMD to improve filtering efficiency, the use of ZoneMap to do pruning, and the dynamic selection of the memory algorithm and disk algorithm.

When the memory is sufficient, we directly adopt the Self-Sharpening Input Filter solution for two reasons:

The Self-Sharpening Input Filter is similar to the Priority Queue-Based algorithm (to some extent). The cutoff value is similar to the heap top, both of which are used to filter the subsequently read data. The difference between the two is that the Priority Queue-based algorithm updates the heap top in real-time, while the Self-Sharpening Input Filter accumulates data in sorted runs and updates the cutoff value in batch mode.

Filtering by the cutoff value is a very important process in the Self-Sharpening Input Filter, involving data comparison. The operation is simple but repeated frequently, so we use SIMD to speed up this process. Since filtering based on cutoff value is similar to filtering based on Predicate in TableScan, we directly reuse the Predicate expression in the specific implementation to improve filtering efficiency and reduce the time for calculating TopK.

When the memory is insufficient, we use merge sort and do truncation based on offset and limit. The main reasons are listed below:

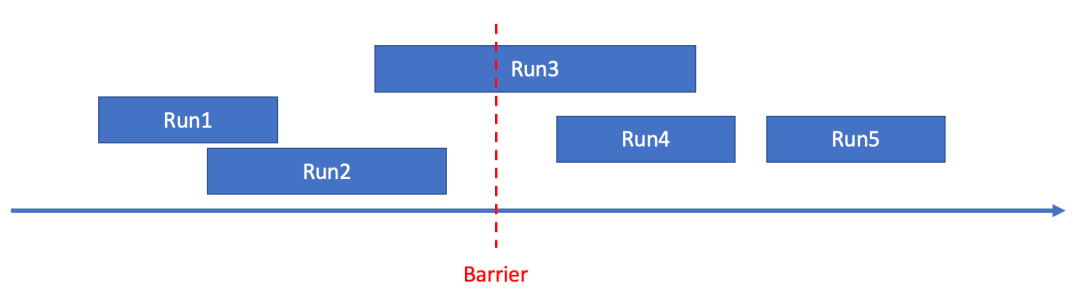

The following figure is used to illustrate the principle of using statistics for pruning. In the following figure, the arrow indicates the number axis, the corresponding positions of the left and right ends of the rectangle representing sorted runs on the number axis indicate the min/max values of sorted runs, and Barrier indicates the threshold on which pruning depends.

▷ Any Barrier can be used to classify all sorted runs into three categories:

▷ For any Barrier, if the amount of data in type A and type B is less than the offset in the TopK query, the data in type A must be ranked in the [0, offset) position, and the sorted run in type A does not participate in the subsequent merge operation.

▷ For any Barrier, if the amount of data in type A is greater than the offset + limit in the TopK query, the data in type C must be ranked in the [offset + limit, N) position, and the sorted run in type C does not participate in the subsequent merge operation.

Based on the preceding principles, the specific process of using statistics for pruning is listed below:

In our solution, the memory algorithm is different from the disk algorithm. If a fixed threshold is used as the basis for selecting the memory algorithm or the disk algorithm (for example, the memory algorithm is used when K is less than the threshold. Otherwise, the disk algorithm is used), different thresholds need to be set for different available execution memory, which brings the overhead of manual intervention.

Therefore, we have designed a simple fallback mechanism that can dynamically adjust the algorithm used during execution according to the size of the available execution memory:

Since the memory algorithm and the disk algorithm use the same data organization format, the fallback mechanism does not need to reorganize the data, and the overhead is small. In addition, the memory algorithm only filters data not in the result set. Therefore, if you directly use the sorted run accumulated by the memory algorithm to participate in the computing process of the disk algorithm, there is no problem with correctness.

Late materialization is an optimization in engineering implementation. It means that when a sorted run is generated, only expressions (columns) related to RowID and ORDER BY are materialized. After the result set of TopK is generated, the columns to be output for the query are retrieved from the storage based on the RowID in the result set. Late materialization has two advantages over materializing all the columns to be output for the query when sorted runs are generated:

The disadvantage of late materialization is that some random IO may be generated when the column to be output for the query is obtained from the storage based on the RowID in the result set. According to our analysis, although K is particularly large in the deep pagination scenario, the actual result set is small. Therefore, the overhead generated by random IO is small when late materialization is used.

When applying Self-Sharpening Input Filter, we push the constantly updated cutoff value down to the table scan operator as a new predicate in the SQL statement. When the table scan operator obtains data, the pruner is reused to filter the pack (or row group) based on the new predicate.

Computing pushdown can improve the performance of TopK queries in two ways:

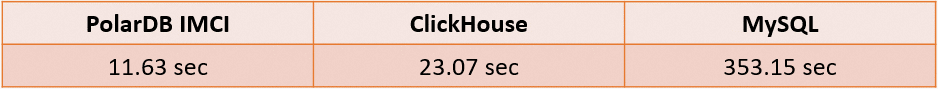

We performed a simple validation of our solution on the TPC-H 100G dataset:

select

l_orderkey,

sum(l_quantity)

from

lineitem

group by

l_orderkey

order by

sum(l_quantity) desc

limit

1000000, 100;

Nanlong Yu (Gushen), from Alibaba Cloud Database-PolarDB New Storage Engine Group, is engaged in the research and development of In Memory Column Index (IMCI) provided by PolarDB.

[Infographic] Highlights | Database New Feature in April 2023

Serverless Data Warehouse Exploration for Agile Data Analysis

ApsaraDB - August 8, 2023

ApsaraDB - January 4, 2024

ApsaraDB - June 26, 2025

ApsaraDB - June 7, 2022

ApsaraDB - August 7, 2023

ApsaraDB - August 4, 2023

PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More PolarDB for Xscale

PolarDB for Xscale

Alibaba Cloud PolarDB for Xscale (PolarDB-X) is a cloud-native high-performance distributed database service independently developed by Alibaba Cloud.

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn MoreMore Posts by ApsaraDB