By Huolang, from Alibaba Cloud Storage Team

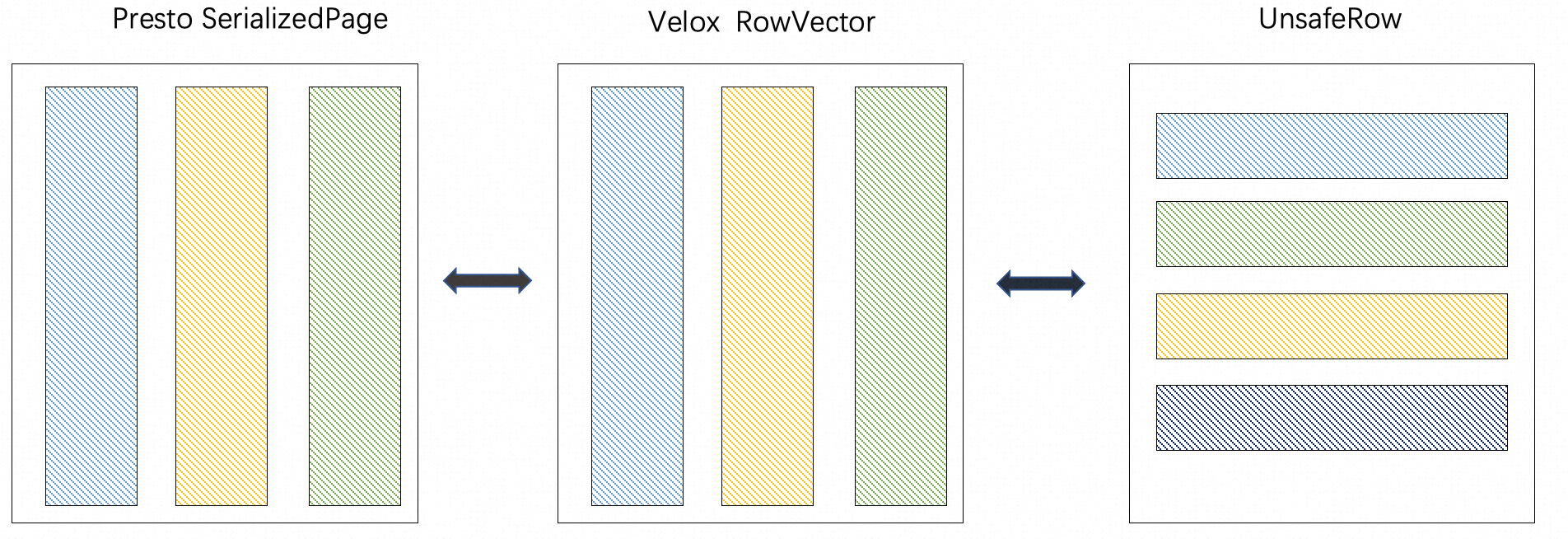

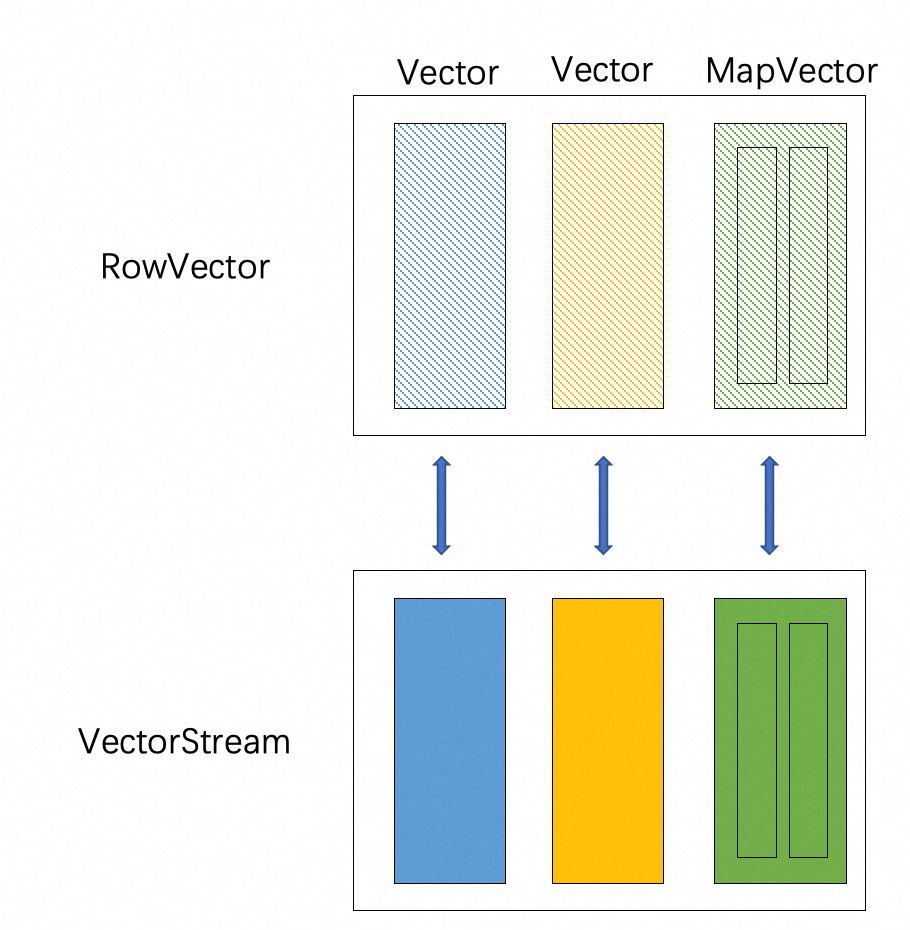

Velox is a compute engine that can be embedded in Presto and Spark. Velox operators exchange data as RowVector, a columnar structure. Presto and Spark use their own structures, SerializedPage and UnsafeRow respectively, to exchange data between compute nodes. When Velox runs inside Presto or Spark, data therefore has to be converted between RowVector and the host system's format.

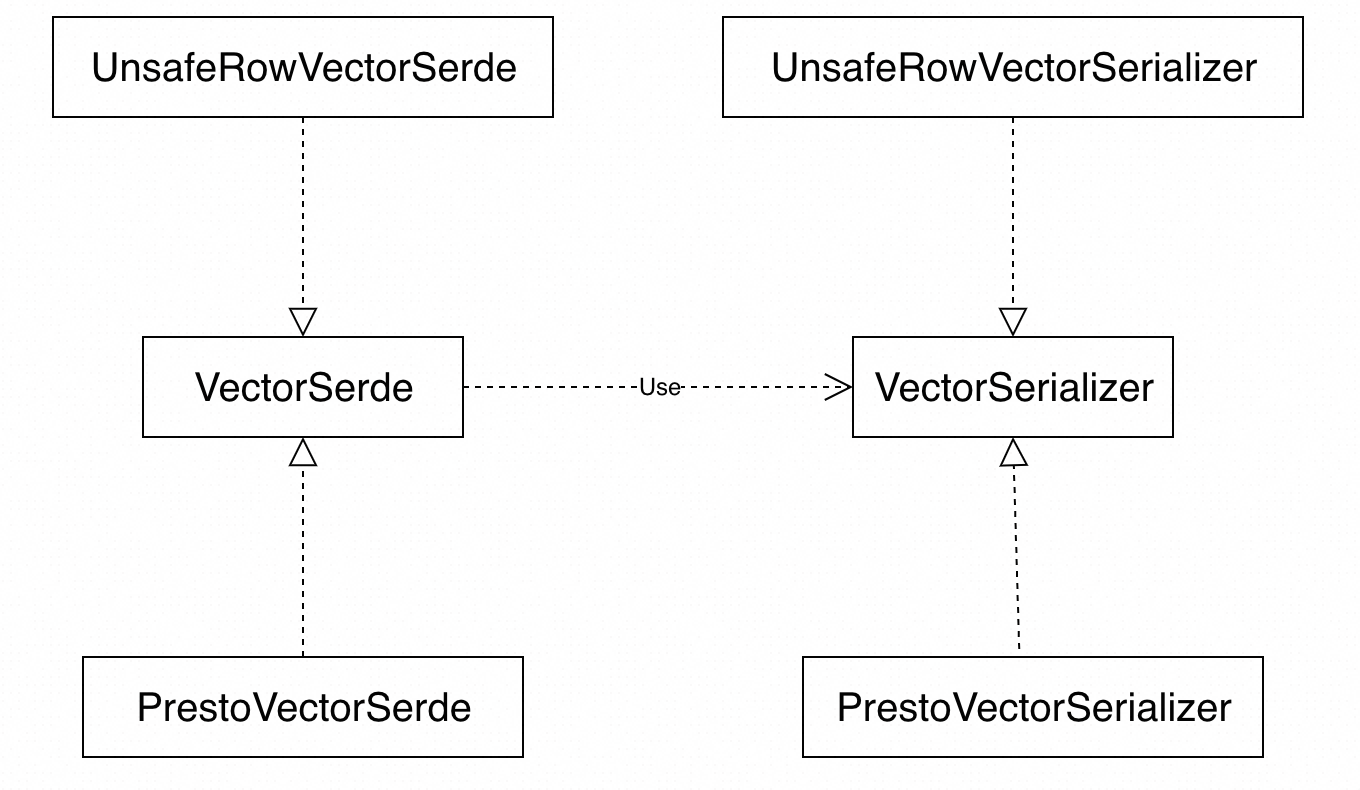

To address this, Velox provides the VectorSerde interface and a registration method, registerVectorSerde. By implementing VectorSerde and registering the implementation, an external system can convert its data to and from Velox's RowVector.

This article focuses on two representative implementations of the VectorSerde interface: PrestoVectorSerde, which converts between RowVector and Presto's SerializedPage, and UnsafeRowVectorSerde, which converts between RowVector and Spark's UnsafeRow. SerializedPage is a columnar format and UnsafeRow is a row-oriented format, so the two implementations illustrate the column model and the row model respectively.

We first introduce StreamArena, ByteStream, and the other classes that manage memory during serialization, and then walk through the implementations of PrestoVectorSerde and UnsafeRowVectorSerde.

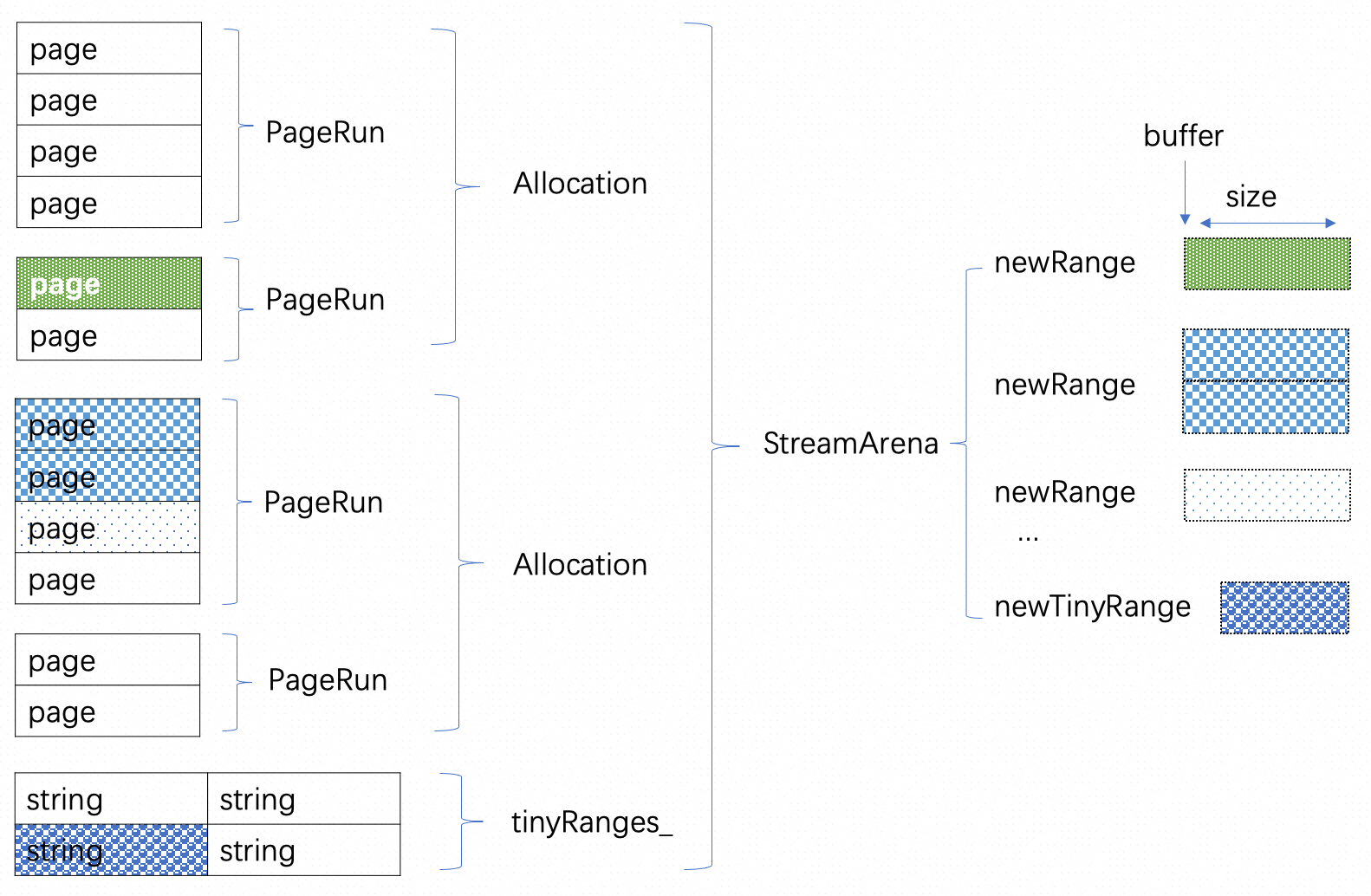

The diagram above shows how the memory-related classes relate to each other.

An Allocation holds an array of PageRuns. It is filled in by the MemoryPool::allocateNonContiguous method, whose caller specifies the number of pages to request.

StreamArena keeps a vector of Allocations and exposes two methods for requesting memory, newRange and newTinyRange, both shown below.

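The PageRun structure is simple: its constructor packs the start address of the run and its page count into a single 64-bit word, with the page count stored in the bits above kPointerSignificantBits, and data() masks the page count off to recover the address. An Allocation, in turn, contains a vector of PageRuns.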

```cpp
class PageRun {
 public:
  // ...
  PageRun(void* address, MachinePageCount numPages) {
    auto word = reinterpret_cast<uint64_t>(address); // NOLINT
    // Store the page count in the bits above the pointer's significant bits.
    data_ =
        word | (static_cast<uint64_t>(numPages) << kPointerSignificantBits);
  }

  template <typename T = uint8_t>
  T* data() const {
    // Mask off the page count to recover the start address.
    return reinterpret_cast<T*>(data_ & kPointerMask); // NOLINT
  }

  // ...

 private:
  uint64_t data_;
};
```
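To make the bit packing concrete, here is a small standalone sketch of the same idea. PackedRun is a hypothetical stand-in for PageRun, and the value 48 for kPointerSignificantBits is an assumption (it presumes user-space addresses fit in 48 bits), not a value taken from this article.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Assumption: 48 significant pointer bits, as is typical for x86-64 and
// AArch64 user-space addresses. The real constant may differ.
constexpr int kPointerSignificantBits = 48;
constexpr uint64_t kPointerMask =
    (uint64_t{1} << kPointerSignificantBits) - 1;

// Hypothetical stand-in for PageRun: start address and page count in one word.
class PackedRun {
 public:
  PackedRun(void* address, uint32_t numPages) {
    auto word = reinterpret_cast<uint64_t>(address);
    assert((word & ~kPointerMask) == 0 && "address must fit in 48 bits");
    assert(numPages < (1u << (64 - kPointerSignificantBits)) && "too many pages");
    data_ = word | (static_cast<uint64_t>(numPages) << kPointerSignificantBits);
  }

  // Low bits: the address.
  template <typename T = uint8_t>
  T* data() const {
    return reinterpret_cast<T*>(data_ & kPointerMask);
  }

  // High bits: the page count.
  uint32_t numPages() const {
    return static_cast<uint32_t>(data_ >> kPointerSignificantBits);
  }

 private:
  uint64_t data_;
};
```

With 48 address bits, only 16 bits remain for the page count, which is why a single run can describe at most 65,535 pages in this sketch.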

```cpp
class Allocation {
  MemoryPool* pool_{nullptr};
  std::vector<PageRun> runs_;
};
```

In StreamArena, allocations_ keeps the allocations that have already been used up, allocation_ is the allocation that pages are currently handed out from, and currentRun_ and currentPage_ are the indexes of the PageRun and of the page within allocation_ from which the next range will be taken.

tinyRanges_ is a vector of strings used for small requests:

```cpp
class StreamArena {
  // ...
 private:
  // All allocations.
  std::vector<std::unique_ptr<memory::Allocation>> allocations_;

  // The allocation from which pages are given out. Moved to 'allocations_'
  // when used up.
  memory::Allocation allocation_;

  int32_t currentRun_ = 0;
  int32_t currentPage_ = 0;
  memory::MachinePageCount allocationQuantum_ = 2;
  std::vector<std::string> tinyRanges_;
};
```

```cpp
void StreamArena::newRange(int32_t bytes, ByteRange* range) {
  VELOX_CHECK_GT(bytes, 0);
  // Round the request up to whole pages.
  memory::MachinePageCount numPages =
      bits::roundUp(bytes, memory::AllocationTraits::kPageSize) /
      memory::AllocationTraits::kPageSize;
  int32_t numRuns = allocation_.numRuns();
  if (currentRun_ >= numRuns) {
    // The current allocation is used up: retire it and request a new one of
    // at least 'allocationQuantum_' pages.
    if (numRuns) {
      allocations_.push_back(
          std::make_unique<memory::Allocation>(std::move(allocation_)));
    }
    pool_->allocateNonContiguous(
        std::max(allocationQuantum_, numPages), allocation_);
    currentRun_ = 0;
    currentPage_ = 0;
    size_ += allocation_.byteSize();
  }
  // Hand out as many pages as the current run can provide, which may be
  // fewer than requested.
  auto run = allocation_.runAt(currentRun_);
  int32_t available = run.numPages() - currentPage_;
  range->buffer =
      run.data() + memory::AllocationTraits::kPageSize * currentPage_;
  range->size = std::min<int32_t>(numPages, available) *
      memory::AllocationTraits::kPageSize;
  range->position = 0;
  currentPage_ += std::min<int32_t>(available, numPages);
  if (currentPage_ == run.numPages()) {
    ++currentRun_;
    currentPage_ = 0;
  }
}

void StreamArena::newTinyRange(int32_t bytes, ByteRange* range) {
  // Small requests are served from a std::string owned by the arena.
  tinyRanges_.emplace_back();
  tinyRanges_.back().resize(bytes);
  range->position = 0;
  range->buffer = reinterpret_cast<uint8_t*>(tinyRanges_.back().data());
  range->size = bytes;
}
```
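The page arithmetic in newRange can be simulated without a MemoryPool. The sketch below uses a hypothetical ToyArena with simplifications: each allocation is a single contiguous run (a Velox Allocation may hold several PageRuns), kPageSize is assumed to be 4096, and memory comes straight from the heap. It reproduces two behaviors visible in newRange: requests are rounded up to whole pages, and the returned range may be shorter than requested when the current run is nearly exhausted.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <memory>
#include <vector>

constexpr int32_t kPageSize = 4096;  // assumption: matches AllocationTraits::kPageSize

struct Range {
  uint8_t* buffer;
  int32_t size;
  int32_t position;
};

// Hypothetical, simplified arena: one contiguous run per "allocation".
class ToyArena {
 public:
  explicit ToyArena(int32_t quantumPages) : quantum_(quantumPages) {}

  void newRange(int32_t bytes, Range* range) {
    assert(bytes > 0);
    int32_t numPages = (bytes + kPageSize - 1) / kPageSize;  // round up
    if (runs_.empty() || currentPage_ == runPages_.back()) {
      // Current run used up: allocate at least 'quantum_' pages.
      int32_t pages = std::max(quantum_, numPages);
      runs_.push_back(std::make_unique<uint8_t[]>(size_t(pages) * kPageSize));
      runPages_.push_back(pages);
      currentPage_ = 0;
    }
    int32_t available = runPages_.back() - currentPage_;
    int32_t granted = std::min(numPages, available);  // may be < numPages
    range->buffer = runs_.back().get() + size_t(currentPage_) * kPageSize;
    range->size = granted * kPageSize;
    range->position = 0;
    currentPage_ += granted;
  }

  size_t numRuns() const { return runs_.size(); }

 private:
  int32_t quantum_;
  int32_t currentPage_ = 0;
  std::vector<std::unique_ptr<uint8_t[]>> runs_;
  std::vector<int32_t> runPages_;
};
```

A caller that receives a shorter range than it asked for simply requests another one when it fills up, which is the kind of bookkeeping ByteStream performs on top of StreamArena.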

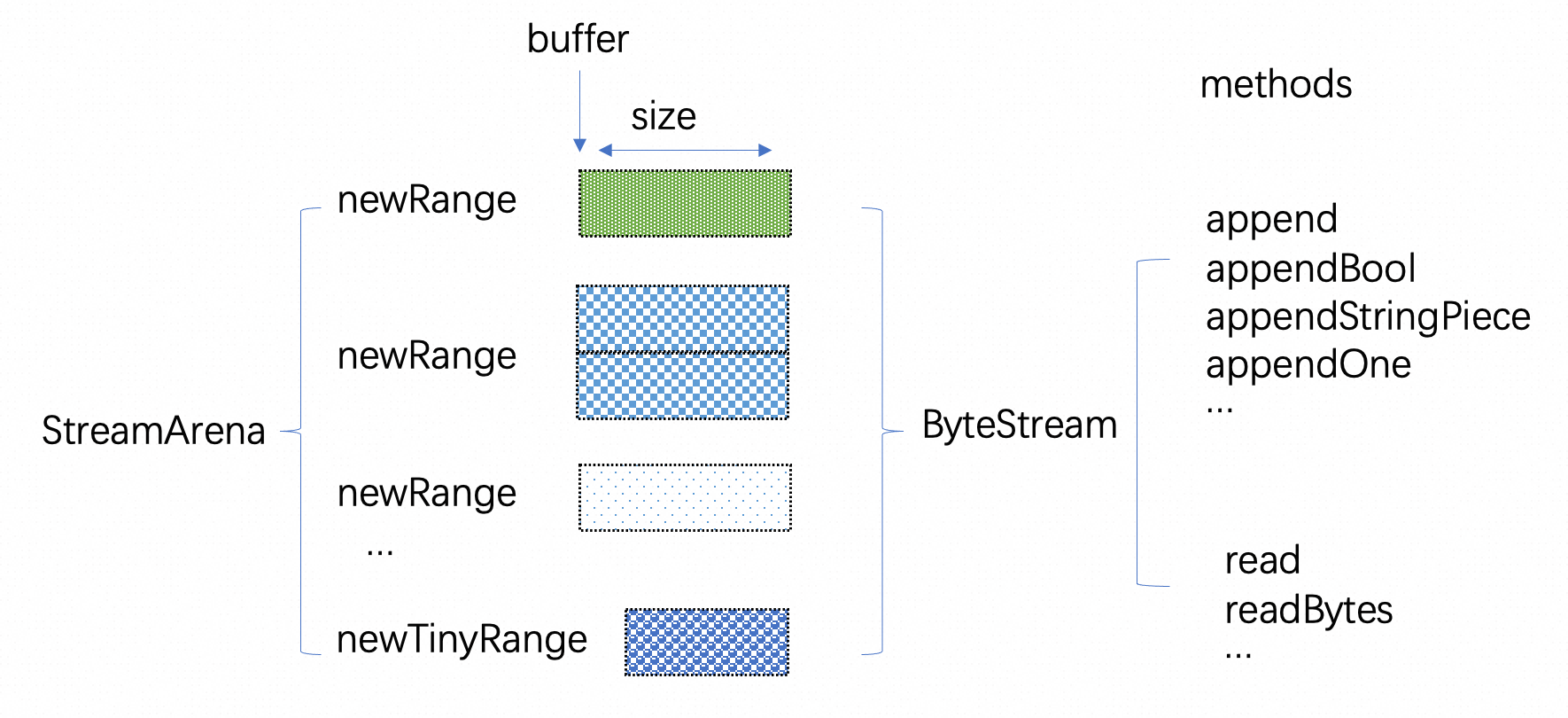

In short, StreamArena hands out memory in the form of ByteRanges. ByteStream builds on it to serialize and deserialize data: it reads and writes values of specific types and keeps the ByteRanges that hold them. Two of its methods appear later in this article: startWrite(), which requests the initial memory for writing, and read<T>(), which reads a value of type T.

VectorSerde is used to serialize and deserialize RowVectors. Its structure is shown below.

For serialization, VectorSerde does not provide a serialization method directly. Instead, createSerializer creates a VectorSerializer, which performs the serialization. For deserialization, VectorSerde provides the deserialize method directly.

```cpp
class VectorSerde {
 public:
  // ...
  virtual std::unique_ptr<VectorSerializer> createSerializer(
      RowTypePtr type,
      int32_t numRows,
      StreamArena* streamArena,
      const Options* options = nullptr) = 0;

  virtual void deserialize(
      ByteStream* source,
      velox::memory::MemoryPool* pool,
      RowTypePtr type,
      RowVectorPtr* result,
      const Options* options = nullptr) = 0;
};
```

VectorSerializer does not serialize in a single call either. Instead, append() adds rows (optionally only those in the given IndexRanges) to the serializer, and flush() then writes the accumulated result to an OutputStream:

```cpp
class VectorSerializer {
 public:
  virtual ~VectorSerializer() = default;

  /// Serialize a subset of rows in a vector.
  virtual void append(
      const RowVectorPtr& vector,
      const folly::Range<const IndexRange*>& ranges) = 0;

  /// Serialize all rows in a vector.
  void append(const RowVectorPtr& vector);

  /// Write serialized data to 'stream'.
  virtual void flush(OutputStream* stream) = 0;
};
```

To convert between an external system's data structure and Velox's RowVector, both the VectorSerde and the VectorSerializer interfaces must be implemented. For example, PrestoVectorSerde and UnsafeRowVectorSerde each come with their own VectorSerializer implementation.
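The append-then-flush contract can be illustrated with a toy serializer. Everything here is hypothetical and heavily simplified: a column is a std::vector<int64_t>, IndexRange is a plain struct, and the output is text on a std::ostream rather than binary data on an OutputStream. The point is only the shape of the API: append() may be called several times, with or without row ranges, and flush() writes everything accumulated so far.

```cpp
#include <cassert>
#include <cstdint>
#include <ostream>
#include <sstream>
#include <vector>

// Hypothetical simplified stand-ins for IndexRange and a column vector.
struct IndexRange {
  int32_t begin;
  int32_t size;
};
using Column = std::vector<int64_t>;

class ToySerializer {
 public:
  virtual ~ToySerializer() = default;
  // Serialize a subset of rows.
  virtual void append(const Column& column, const std::vector<IndexRange>& ranges) = 0;
  // Serialize all rows: a convenience overload over the ranged version.
  void append(const Column& column) {
    append(column, {{0, static_cast<int32_t>(column.size())}});
  }
  // Write everything appended so far.
  virtual void flush(std::ostream& out) = 0;
};

class TextSerializer : public ToySerializer {
 public:
  using ToySerializer::append;  // keep the one-argument overload visible

  void append(const Column& column, const std::vector<IndexRange>& ranges) override {
    for (const auto& range : ranges) {
      for (int32_t i = range.begin; i < range.begin + range.size; ++i) {
        buffered_.push_back(column[i]);  // buffer until flush()
      }
    }
  }

  void flush(std::ostream& out) override {
    out << buffered_.size();  // row count first, then the values
    for (auto value : buffered_) out << ' ' << value;
    buffered_.clear();
  }

 private:
  std::vector<int64_t> buffered_;
};
```

Separating append() from flush() lets a caller accumulate rows from several input vectors into one output page before writing it.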

Next, we examine the two implementations in turn.

While walking through them, we will pay particular attention to where memory is requested and where data is copied.

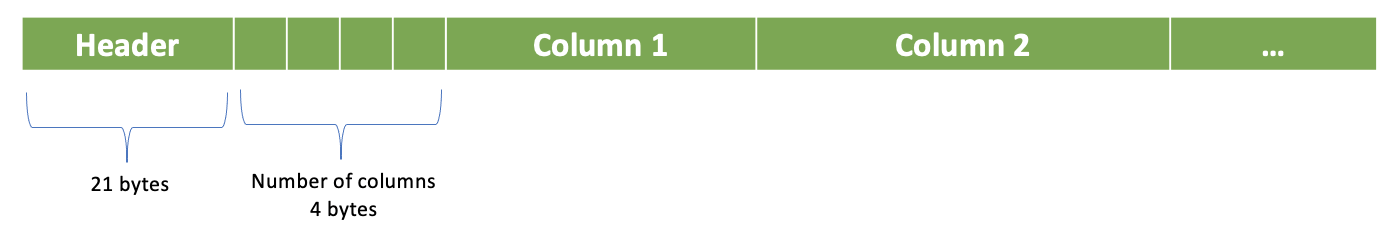

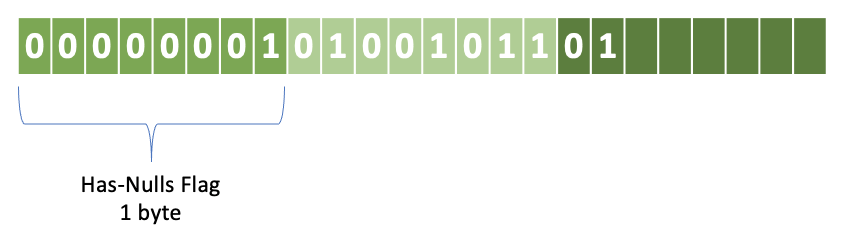

Before covering serialization and deserialization, let's briefly look at the structure of SerializedPage. For more information, see SerializedPage Wire Format.

A page consists of a header, the number of columns, and the columns themselves. The number of rows is stored in the header.

Each column in turn consists of a column header, null flags, and the actual values.

The layout of the column content depends on the column type.

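The implementation of PrestoVectorSerializer is fairly straightforward: its constructor creates one VectorStream per column, append() serializes each column into its stream, and flush() writes the result out. We look at each of these pieces in turn.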

```cpp
class PrestoVectorSerializer : public VectorSerializer {
 public:
  PrestoVectorSerializer(
      std::shared_ptr<const RowType> rowType,
      int32_t numRows,
      StreamArena* streamArena,
      bool useLosslessTimestamp) {
    auto types = rowType->children();
    auto numTypes = types.size();
    // One VectorStream per top-level column.
    streams_.resize(numTypes);
    for (int i = 0; i < numTypes; i++) {
      streams_[i] = std::make_unique<VectorStream>(
          types[i], streamArena, numRows, useLosslessTimestamp);
    }
  }

  void append(
      const RowVectorPtr& vector,
      const folly::Range<const IndexRange*>& ranges) override {
    auto newRows = rangesTotalSize(ranges);
    if (newRows > 0) {
      numRows_ += newRows;
      // Serialize each column into its own stream.
      for (int32_t i = 0; i < vector->childrenSize(); ++i) {
        serializeColumn(vector->childAt(i).get(), ranges, streams_[i].get());
      }
    }
  }

  void flush(OutputStream* out) override {
    flushInternal(numRows_, false /*rle*/, out);
  }

  // ...

 private:
  // ...
  int32_t numRows_{0};
  std::vector<std::unique_ptr<VectorStream>> streams_;
};
```

VectorStream is used to produce the SerializedPage format. Its members hold the column header, null flags, and lengths required by the SerializedPage structure. SerializedPage also supports encoding the ROW, ARRAY, and MAP types; correspondingly, VectorStream uses nested VectorStreams for these three types.

```cpp
class VectorStream {
  // ...
 private:
  const TypePtr type_;
  // ...
  ByteRange header_;
  ByteStream nulls_;
  ByteStream lengths_;
  ByteStream values_;
  std::vector<std::unique_ptr<VectorStream>> children_;
};
```

When a VectorStream is constructed, these members are initialized and ByteStream::startWrite is called to request memory. How much memory is requested up front depends on the type of the column.

The key initialization code is shown below. Note that lengths_ corresponds to the offsets in the SerializedPage format.

```cpp
class VectorStream {
 public:
  VectorStream(
      const TypePtr type,
      StreamArena* streamArena,
      int32_t initialNumRows,
      bool useLosslessTimestamp)
      : type_(type),
        useLosslessTimestamp_(useLosslessTimestamp),
        nulls_(streamArena, true, true),
        lengths_(streamArena),
        values_(streamArena) {
    // ...
    if (initialNumRows > 0) {
      switch (type_->kind()) {
        case TypeKind::ROW:
          if (isTimestampWithTimeZoneType(type_)) {
            values_.startWrite(initialNumRows * 4);
            break;
          }
          [[fallthrough]];
        case TypeKind::ARRAY:
        case TypeKind::MAP:
          // Nested types keep per-row lengths and one child stream per child type.
          hasLengths_ = true;
          lengths_.startWrite(initialNumRows * sizeof(vector_size_t));
          children_.resize(type_->size());
          for (int32_t i = 0; i < type_->size(); ++i) {
            children_[i] = std::make_unique<VectorStream>(
                type_->childAt(i),
                streamArena,
                initialNumRows,
                useLosslessTimestamp);
          }
          break;
        case TypeKind::VARCHAR:
        case TypeKind::VARBINARY:
          // Strings: per-row lengths plus an initial estimate of 10 bytes per value.
          hasLengths_ = true;
          lengths_.startWrite(initialNumRows * sizeof(vector_size_t));
          values_.startWrite(initialNumRows * 10);
          break;
        default:
          values_.startWrite(initialNumRows * 4);
          break;
      }
    }
  }

 private:
  const TypePtr type_;
  // ...
  ByteRange header_;
  ByteStream nulls_;
  ByteStream lengths_;
  ByteStream values_;
  std::vector<std::unique_ptr<VectorStream>> children_;
};
```

PrestoVectorSerializer::append calls serializeColumn for each column, and serializeColumn dispatches on the vector's encoding. For example, flat vectors are handled by serializeFlatVector.

Each of these functions extracts the data according to the vector's encoding and writes it to the lengths_ and values_ streams of the VectorStream. Composite types (ROW, ARRAY, and MAP) are handled recursively.

Note that writing into StreamArena memory always copies the data, even for strings. As a result, the StreamArena ends up holding a complete copy of the RowVector's data.

```cpp
void serializeColumn(
    const BaseVector* vector,
    const folly::Range<const IndexRange*>& ranges,
    VectorStream* stream) {
  switch (vector->encoding()) {
    case VectorEncoding::Simple::FLAT:
      VELOX_DYNAMIC_SCALAR_TYPE_DISPATCH_ALL(
          serializeFlatVector, vector->typeKind(), vector, ranges, stream);
      break;
    case VectorEncoding::Simple::CONSTANT:
      VELOX_DYNAMIC_TYPE_DISPATCH_ALL(
          serializeConstantVector, vector->typeKind(), vector, ranges, stream);
      break;
    case VectorEncoding::Simple::BIASED:
      switch (vector->typeKind()) {
        case TypeKind::SMALLINT:
          serializeBiasVector<int16_t>(vector, ranges, stream);
          break;
        case TypeKind::INTEGER:
          serializeBiasVector<int32_t>(vector, ranges, stream);
          break;
        case TypeKind::BIGINT:
          serializeBiasVector<int64_t>(vector, ranges, stream);
          break;
        default:
          throw std::invalid_argument("Invalid biased vector type");
      }
      break;
    case VectorEncoding::Simple::ROW:
      serializeRowVector(vector, ranges, stream);
      break;
    case VectorEncoding::Simple::ARRAY:
      serializeArrayVector(vector, ranges, stream);
      break;
    case VectorEncoding::Simple::MAP:
      serializeMapVector(vector, ranges, stream);
      break;
    case VectorEncoding::Simple::LAZY:
      // Load the lazy vector, then serialize the loaded result.
      serializeColumn(vector->loadedVector(), ranges, stream);
      break;
    default:
      // Remaining (wrapped) encodings.
      serializeWrapped(vector, ranges, stream);
  }
}
```

serializeColumn writes data into StreamArena memory, and VectorStream::flush then writes it to the OutputStream in exactly the SerializedPage format. For ROW, ARRAY, and MAP columns, flush recursively calls flush on the child streams.

```cpp
void flush(OutputStream* out) {
  out->write(reinterpret_cast<char*>(header_.buffer), header_.size);
  switch (type_->kind()) {
    case TypeKind::ROW:
      // ...
    case TypeKind::ARRAY:
      // ...
    case TypeKind::MAP:
      // ...
    case TypeKind::VARCHAR:
    case TypeKind::VARBINARY:
      // ...
    default:
      // ...
  }
}
```

PrestoVectorSerializer::flush calls flush on each column's VectorStream in turn.
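The split between lengths_ and values_ for a string column can be sketched as follows. This is a simplified illustration, not the exact SerializedPage wire format: lengths holds cumulative end offsets (consistent with the note that lengths_ corresponds to the offsets in SerializedPage), values holds the copied bytes, and the flushed layout and host byte order are assumptions. The test also shows why the serialized buffer is independent of its source: the bytes are copied, so changing the source afterwards has no effect.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

// Simplified sketch, NOT the exact SerializedPage wire format.
// A VARCHAR column is kept as two streams.
struct StringColumnStreams {
  std::vector<int32_t> lengths;  // cumulative end offset of each row in 'values'
  std::vector<char> values;      // all string bytes, back to back
};

// Appends rows by copying their bytes; no reference to 'rows' is kept.
void appendStrings(const std::vector<std::string>& rows,
                   StringColumnStreams& streams) {
  int32_t total = streams.lengths.empty() ? 0 : streams.lengths.back();
  for (const auto& row : rows) {
    streams.values.insert(streams.values.end(), row.begin(), row.end());
    total += static_cast<int32_t>(row.size());
    streams.lengths.push_back(total);
  }
}

// Assumed flush layout: row count, offsets, byte count, bytes (host byte order).
std::vector<char> flushStrings(const StringColumnStreams& streams) {
  std::vector<char> out;
  auto put = [&out](const void* data, size_t numBytes) {
    const char* bytes = static_cast<const char*>(data);
    out.insert(out.end(), bytes, bytes + numBytes);
  };
  int32_t numRows = static_cast<int32_t>(streams.lengths.size());
  put(&numRows, sizeof(numRows));
  put(streams.lengths.data(), streams.lengths.size() * sizeof(int32_t));
  int32_t numBytes = static_cast<int32_t>(streams.values.size());
  put(&numBytes, sizeof(numBytes));
  put(streams.values.data(), streams.values.size());
  return out;
}
```

Storing end offsets rather than per-row sizes lets a reader find row i's bytes as the range from offset i-1 (or 0) to offset i without a prefix sum.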

Deserialization converts data in the SerializedPage binary format back into a RowVector. Its signature is:

```cpp
void deserialize(
    ByteStream* source,
    velox::memory::MemoryPool* pool,
    std::shared_ptr<const RowType> type,
    std::shared_ptr<RowVector>* result) override;
```

The core of deserialization is readColumns, which reads each column's data into the corresponding child of the result:

```cpp
auto children = &(*result)->children();
auto childTypes = type->as<TypeKind::ROW>().children();
readColumns(source, pool, childTypes, children);
```

The core implementation of readColumns looks like this:

```cpp
void readColumns(
    ByteStream* source,
    velox::memory::MemoryPool* pool,
    const std::vector<TypePtr>& types,
    std::vector<VectorPtr>* result) {
  // One reader function per TypeKind.
  static std::unordered_map<
      TypeKind,
      std::function<void(
          ByteStream * source,
          std::shared_ptr<const Type> type,
          velox::memory::MemoryPool * pool,
          VectorPtr * result)>>
      readers = {
          {TypeKind::BOOLEAN, &read<bool>},
          {TypeKind::TINYINT, &read<int8_t>},
          {TypeKind::SMALLINT, &read<int16_t>},
          {TypeKind::INTEGER, &read<int32_t>},
          {TypeKind::BIGINT, &read<int64_t>},
          {TypeKind::REAL, &read<float>},
          {TypeKind::DOUBLE, &read<double>},
          {TypeKind::TIMESTAMP, &read<Timestamp>},
          {TypeKind::DATE, &read<Date>},
          {TypeKind::VARCHAR, &read<StringView>},
          {TypeKind::VARBINARY, &read<StringView>},
          {TypeKind::ARRAY, &readArrayVector},
          {TypeKind::MAP, &readMapVector},
          {TypeKind::ROW, &readRowVector},
          {TypeKind::UNKNOWN, &read<UnknownValue>}};

  for (int32_t i = 0; i < types.size(); ++i) {
    auto it = readers.find(types[i]->kind());
    // ...
    it->second(source, types[i], pool, &(*result)[i]);
  }
}
```

The following are the readers for a simple (flat) type and for a complex type (MAP):

```cpp
template <typename T>
void read(
    ByteStream* source,
    std::shared_ptr<const Type> type,
    velox::memory::MemoryPool* pool,
    VectorPtr* result) {
  int32_t size = source->read<int32_t>();
  // Reuse the result vector if nothing else references it.
  if (*result && result->unique()) {
    (*result)->resize(size);
  } else {
    *result = BaseVector::create(type, size, pool);
  }
  auto flatResult = (*result)->asFlatVector<T>();
  auto nullCount = readNulls(source, size, flatResult);
  BufferPtr values = flatResult->mutableValues(size);
  readValues<T>(source, size, flatResult->nulls(), nullCount, values);
}
```

```cpp
void readMapVector(
    ByteStream* source,
    std::shared_ptr<const Type> type,
    velox::memory::MemoryPool* pool,
    VectorPtr* result) {
  MapVector* mapVector =
      (*result && result->unique()) ? (*result)->as<MapVector>() : nullptr;
  std::vector<TypePtr> childTypes = {type->childAt(0), type->childAt(1)};
  std::vector<VectorPtr> children(2);
  if (mapVector) {
    children[0] = mapVector->mapKeys();
    children[1] = mapVector->mapValues();
  }
  // Keys and values are read recursively, like top-level columns.
  readColumns(source, pool, childTypes, &children);
  // ...
  mapVector->setKeysAndValues(children[0], children[1]);
  // ...
}
```

As with serialization, deserialization copies the data: it is copied from the ByteStream into the vector.
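The reading side can be sketched in the same spirit. ByteReader loosely mirrors ByteStream::read<T>(), and the layout used here (an int32 row count, one null byte per row, then values for the non-null rows only) is a simplified assumption rather than the real SerializedPage encoding. It shows the two steps visible in read<T>: read the null flags first, then copy values out of the stream into their row positions.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <optional>
#include <stdexcept>
#include <vector>

// Minimal cursor over a byte buffer, loosely mirroring ByteStream::read<T>().
class ByteReader {
 public:
  explicit ByteReader(const std::vector<uint8_t>& bytes) : bytes_(bytes) {}

  template <typename T>
  T read() {
    if (pos_ + sizeof(T) > bytes_.size()) throw std::out_of_range("short read");
    T value;
    std::memcpy(&value, bytes_.data() + pos_, sizeof(T));  // copy out of the stream
    pos_ += sizeof(T);
    return value;
  }

 private:
  const std::vector<uint8_t>& bytes_;
  size_t pos_ = 0;
};

// Appends the raw bytes of 'value' (host byte order) to 'out'.
template <typename T>
void writeValue(std::vector<uint8_t>& out, T value) {
  uint8_t raw[sizeof(T)];
  std::memcpy(raw, &value, sizeof(T));
  out.insert(out.end(), raw, raw + sizeof(T));
}

// Hypothetical layout: int32 row count, one null byte per row, then values
// for the non-null rows only. Dense values are scattered to their rows.
template <typename T>
std::vector<std::optional<T>> readColumn(ByteReader& in) {
  int32_t size = in.read<int32_t>();
  std::vector<bool> isNull(size);
  for (int32_t i = 0; i < size; ++i) isNull[i] = in.read<uint8_t>() != 0;
  std::vector<std::optional<T>> result(size);
  for (int32_t i = 0; i < size; ++i) {
    if (!isNull[i]) result[i] = in.read<T>();
  }
  return result;
}
```

Reading the null flags before the values is what makes the scatter possible: the reader must know which rows are null before it can map densely packed values back to row positions.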

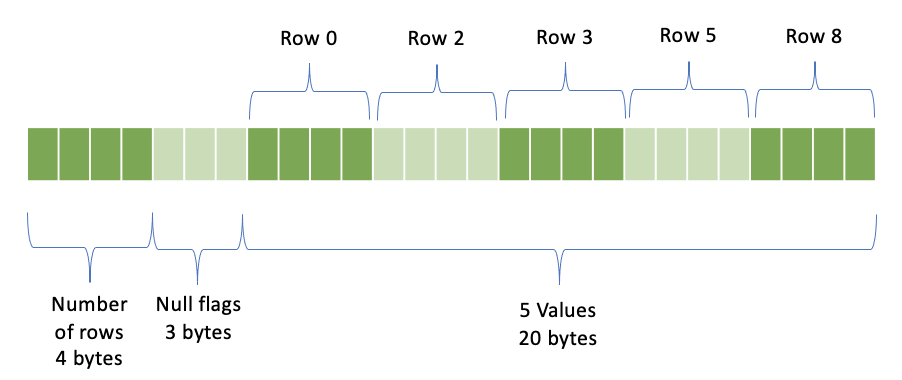

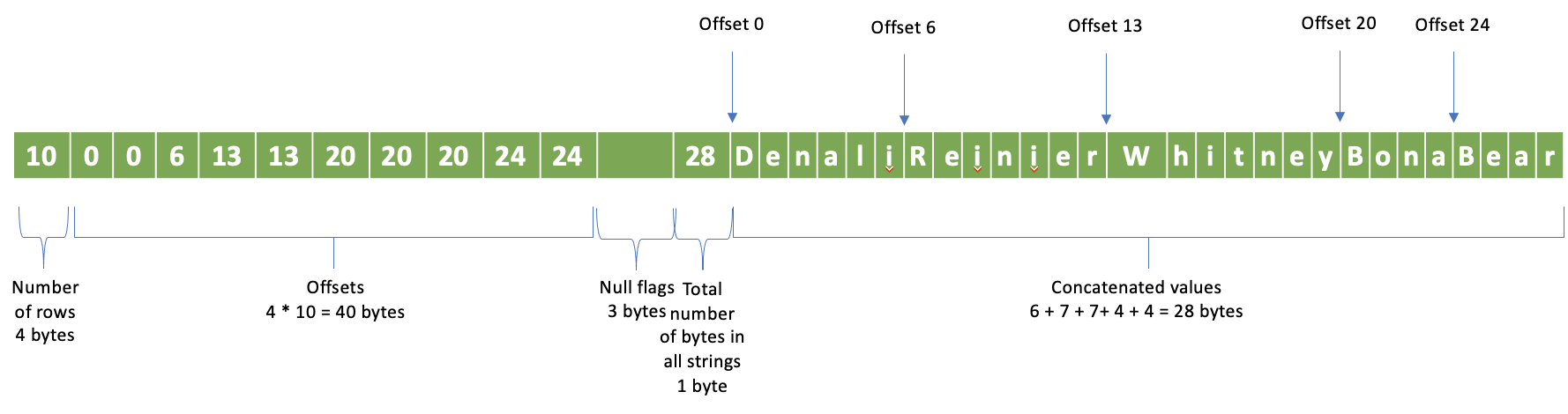

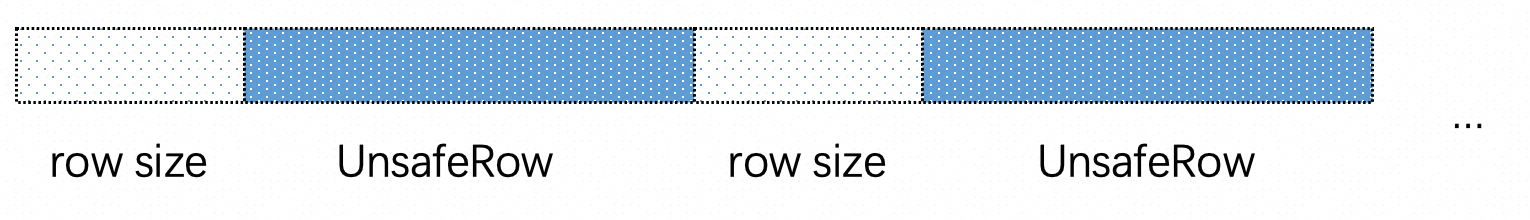

UnsafeRow is Spark's binary, row-oriented storage format, in which data is stored row by row. The binary layout of multiple rows is as follows:

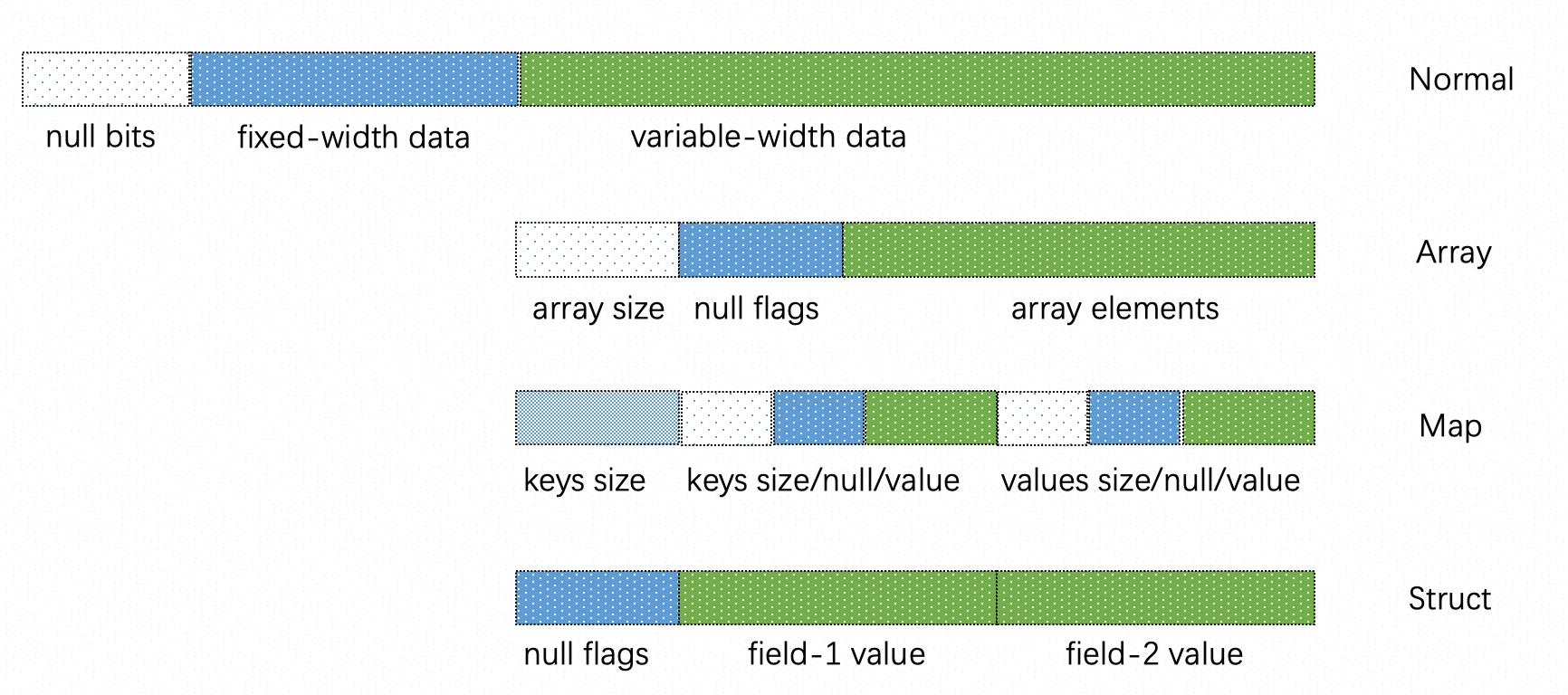

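Each UnsafeRow holds one row of data with multiple columns and consists of three parts: null flags, a fixed-length region, and a variable-length region. Fixed-width values are stored directly in the fixed-length region; for variable-width values such as strings, the fixed-length region holds an offset and a size, and the bytes themselves live in the variable-length region.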
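The layout just described can be sketched for a row with one BIGINT and one VARCHAR column. The sketch follows the conventions that UnsafeRowFast::serializeRow uses later in this article (8-byte field slots, an offset-and-size word for variable-width values). Two details are assumptions based on Spark's UnsafeRow rather than on code shown here: the null bitset takes one 8-byte word per 64 fields, and variable-width data is padded to 8 bytes. Values are written in host byte order.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <optional>
#include <string>
#include <vector>

constexpr int32_t kFieldWidth = 8;  // one 8-byte slot per field

// Assumption: one 8-byte word of null bits per 64 fields, as in Spark.
int32_t nullBitsetBytes(int32_t numFields) { return ((numFields + 63) / 64) * 8; }
// Assumption: variable-width data is padded to 8 bytes.
int32_t alignTo8(int32_t n) { return (n + 7) & ~7; }

// Serializes one (BIGINT, VARCHAR) row; either field may be null.
std::vector<uint8_t> serializeRow(std::optional<int64_t> id,
                                  std::optional<std::string> name) {
  const int32_t numFields = 2;
  const int32_t nullBytes = nullBitsetBytes(numFields);
  const int32_t fixedEnd = nullBytes + kFieldWidth * numFields;
  const int32_t varSize = name ? alignTo8(static_cast<int32_t>(name->size())) : 0;
  std::vector<uint8_t> row(fixedEnd + varSize, 0);  // zero-filled buffer

  if (!id) {
    row[0] |= 1u << 0;  // null bit for field 0
  } else {
    std::memcpy(row.data() + nullBytes, &*id, sizeof(int64_t));
  }

  if (!name) {
    row[0] |= 1u << 1;  // null bit for field 1
  } else {
    // Offset (relative to the row start) in the high 32 bits, size in the low 32.
    uint64_t offsetAndSize = (uint64_t(fixedEnd) << 32) | name->size();
    std::memcpy(row.data() + nullBytes + kFieldWidth, &offsetAndSize,
                sizeof(offsetAndSize));
    std::memcpy(row.data() + fixedEnd, name->data(), name->size());
  }
  return row;
}
```

For the row (42, "hello") this yields 8 bytes of null bits, two 8-byte slots, and "hello" padded to 8 bytes, 32 bytes in total.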

UnsafeRowVectorSerde likewise inherits from VectorSerde; the interesting part is UnsafeRowVectorSerializer. Its append method works as follows:

It calculates the total size of the rows to be serialized, requests a buffer of that size, and stores the buffer in buffers_. Each row is then written as a 4-byte big-endian size prefix followed by the UnsafeRow bytes. flush writes every buffer to the OutputStream and clears buffers_.

```cpp
class UnsafeRowVectorSerializer : public VectorSerializer {
 public:
  using TRowSize = uint32_t;

  explicit UnsafeRowVectorSerializer(StreamArena* streamArena)
      : pool_{streamArena->pool()} {}

  void append(
      const RowVectorPtr& vector,
      const folly::Range<const IndexRange*>& ranges) override {
    size_t totalSize = 0;
    row::UnsafeRowFast unsafeRow(vector);
    // ... calculate totalSize (elided in this excerpt).

    BufferPtr buffer = AlignedBuffer::allocate<char>(totalSize, pool_, 0);
    auto rawBuffer = buffer->asMutable<char>();
    buffers_.push_back(std::move(buffer));

    size_t offset = 0;
    for (auto& range : ranges) {
      for (auto i = range.begin; i < range.begin + range.size; ++i) {
        // Write row data.
        TRowSize size =
            unsafeRow.serialize(i, rawBuffer + offset + sizeof(TRowSize));
        // Write raw size. Needs to be in big endian order.
        *(TRowSize*)(rawBuffer + offset) = folly::Endian::big(size);
        offset += sizeof(TRowSize) + size;
      }
    }
  }

  void flush(OutputStream* stream) override {
    for (const auto& buffer : buffers_) {
      stream->write(buffer->as<char>(), buffer->size());
    }
    buffers_.clear();
  }

 private:
  memory::MemoryPool* const FOLLY_NONNULL pool_;
  std::vector<BufferPtr> buffers_;
};
```

UnsafeRowFast takes a RowVector and decodes it into a DecodedVector (decoded_); nested columns get child UnsafeRowFast objects:

```cpp
class UnsafeRowFast {
 public:
  explicit UnsafeRowFast(const RowVectorPtr& vector);
  // ...

  /// Serializes row at specified index into 'buffer'.
  /// 'buffer' must have sufficient capacity and set to all zeros.
  int32_t serialize(vector_size_t index, char* buffer);

 protected:
  explicit UnsafeRowFast(const VectorPtr& vector);
  void initialize(const TypePtr& type);

 private:
  const TypeKind typeKind_;
  DecodedVector decoded_;
  /// ARRAY, MAP and ROW types only.
  std::vector<UnsafeRowFast> children_;
  std::vector<bool> childIsFixedWidth_;
  // ...
};
```

children_ and the type-specific fields (valueBytes_, fixedWidthTypeKind_, and supportsBulkCopy_) are set up in initialize(), starting from the base vector of decoded_:

```cpp
void UnsafeRowFast::initialize(const TypePtr& type) {
  auto base = decoded_.base();
  switch (typeKind_) {
    case TypeKind::ARRAY: {
      auto arrayBase = base->as<ArrayVector>();
      children_.push_back(UnsafeRowFast(arrayBase->elements()));
      // ...
      break;
    }
    case TypeKind::MAP: {
      auto mapBase = base->as<MapVector>();
      children_.push_back(UnsafeRowFast(mapBase->mapKeys()));
      children_.push_back(UnsafeRowFast(mapBase->mapValues()));
      // ...
      break;
    }
    case TypeKind::ROW: {
      auto rowBase = base->as<RowVector>();
      for (const auto& child : rowBase->children()) {
        children_.push_back(UnsafeRowFast(child));
      }
      // ...
      break;
    }
    case TypeKind::BOOLEAN:
      valueBytes_ = 1;
      fixedWidthTypeKind_ = true;
      break;
    case TypeKind::TINYINT:
      FOLLY_FALLTHROUGH;
    case TypeKind::SMALLINT:
      FOLLY_FALLTHROUGH;
    case TypeKind::INTEGER:
      FOLLY_FALLTHROUGH;
    case TypeKind::BIGINT:
      FOLLY_FALLTHROUGH;
    case TypeKind::REAL:
      FOLLY_FALLTHROUGH;
    case TypeKind::DOUBLE:
      FOLLY_FALLTHROUGH;
    case TypeKind::DATE:
    case TypeKind::UNKNOWN:
      valueBytes_ = type->cppSizeInBytes();
      fixedWidthTypeKind_ = true;
      // Bulk copy only when rows map one-to-one onto the base vector.
      supportsBulkCopy_ = decoded_.isIdentityMapping();
      break;
    case TypeKind::TIMESTAMP:
      valueBytes_ = sizeof(int64_t);
      fixedWidthTypeKind_ = true;
      break;
    case TypeKind::VARCHAR:
      FOLLY_FALLTHROUGH;
    case TypeKind::VARBINARY:
      // Nothing to do.
      break;
    default:
      VELOX_UNSUPPORTED("Unsupported type: {}", type->toString());
  }
}
```

Serializing a row goes through serializeRow, which iterates over children_ and handles fixed-width and variable-width columns differently:

```cpp
int32_t UnsafeRowFast::serializeRow(vector_size_t index, char* buffer) {
  auto childIndex = decoded_.index(index);
  // Variable-width data starts after the null bits and the fixed-width slots.
  int64_t variableWidthOffset = rowNullBytes_ + kFieldWidth * children_.size();
  for (auto i = 0; i < children_.size(); ++i) {
    auto& child = children_[i];
    // Write null bit.
    if (child.isNullAt(childIndex)) {
      bits::setBit(buffer, i, true);
      continue;
    }
    // Write value.
    if (childIsFixedWidth_[i]) {
      child.serializeFixedWidth(
          childIndex, buffer + rowNullBytes_ + i * kFieldWidth);
    } else {
      auto size = child.serializeVariableWidth(
          childIndex, buffer + variableWidthOffset);
      // Write size and offset.
      uint64_t sizeAndOffset = variableWidthOffset << 32 | size;
      reinterpret_cast<uint64_t*>(buffer + rowNullBytes_)[i] = sizeAndOffset;
      variableWidthOffset += alignBytes(size);
    }
  }
  return variableWidthOffset;
}
```

Next, let's look at how fixed-width and variable-width values are written. The serializeFixedWidth overload shown here takes an offset and a count and, when bulk copy is supported, copies that many consecutive values with a single memcpy. serializeVariableWidth copies string bytes or recurses into ARRAY, MAP, and ROW values:

```cpp
void UnsafeRowFast::serializeFixedWidth(
    vector_size_t offset,
    vector_size_t size,
    char* buffer) {
  VELOX_DCHECK(supportsBulkCopy_);
  // decoded_.data<char>() can be null if all values are null.
  if (decoded_.data<char>()) {
    memcpy(
        buffer,
        decoded_.data<char>() + decoded_.index(offset) * valueBytes_,
        valueBytes_ * size);
  }
}

int32_t UnsafeRowFast::serializeVariableWidth(
    vector_size_t index,
    char* buffer) {
  switch (typeKind_) {
    case TypeKind::VARCHAR:
      FOLLY_FALLTHROUGH;
    case TypeKind::VARBINARY: {
      // String bytes are copied into the row buffer.
      auto value = decoded_.valueAt<StringView>(index);
      memcpy(buffer, value.data(), value.size());
      return value.size();
    }
    case TypeKind::ARRAY:
      return serializeArray(index, buffer);
    case TypeKind::MAP:
      return serializeMap(index, buffer);
    case TypeKind::ROW:
      return serializeRow(index, buffer);
    default:
      VELOX_UNREACHABLE(
          "Unexpected type kind: {}", mapTypeKindToName(typeKind_));
  }
}
```

Deserialization is done by UnsafeRowDeserializer::deserialize, which takes a vector of std::optional<std::string_view> in which each element holds one serialized row. The work is done mainly by convertToVectors:

```cpp
static VectorPtr deserialize(
    const std::vector<std::optional<std::string_view>>& data,
    const TypePtr& type,
    memory::MemoryPool* pool) {
  return convertToVectors(getBatchIteratorPtr(data, type), pool);
}
```

```cpp
static VectorPtr convertToVectors(
    const DataBatchIteratorPtr& dataIterator,
    memory::MemoryPool* pool) {
  const TypePtr& type = dataIterator->type();
  if (type->isPrimitiveType()) {
    return convertPrimitiveIteratorsToVectors(dataIterator, pool);
  } else if (type->isRow()) {
    return convertStructIteratorsToVectors(dataIterator, pool);
  } else if (type->isArray()) {
    return convertArrayIteratorsToVectors(dataIterator, pool);
  } else if (type->isMap()) {
    return convertMapIteratorsToVectors(dataIterator, pool);
  } else {
    VELOX_NYI("Unsupported data iterators type");
  }
}
```

getBatchIteratorPtr creates a different DataBatchIterator depending on the data type:

```cpp
inline DataBatchIteratorPtr getBatchIteratorPtr(
    const std::vector<std::optional<std::string_view>>& data,
    const TypePtr& type) {
  if (type->isPrimitiveType()) {
    return std::make_shared<PrimitiveBatchIterator>(data, type);
  } else if (type->isRow()) {
    return std::make_shared<StructBatchIterator>(data, type);
  } else if (type->isArray()) {
    return std::make_shared<ArrayBatchIterator>(data, type);
  } else if (type->isMap()) {
    return std::make_shared<MapBatchIterator>(data, type);
  }
  VELOX_NYI("Unknown data type " + type->toString());
  return nullptr;
}
```

Taking convertPrimitiveIteratorsToVectors in convertToVectors as an example, it eventually calls createFlatVector.

createFlatVector walks the rows one by one with iterator->next() and decodes each value according to its type: strings with deserializeStringView and fixed-width values with deserializeFixedWidth.

```cpp
template <TypeKind Kind>
static VectorPtr createFlatVector(
    const DataBatchIteratorPtr& dataIterator,
    const TypePtr& type,
    memory::MemoryPool* pool) {
  auto iterator =
      std::dynamic_pointer_cast<PrimitiveBatchIterator>(dataIterator);
  size_t size = iterator->numRows();
  auto vector = BaseVector::create(type, size, pool);
  using T = typename TypeTraits<Kind>::NativeType;
  using TypeTraits = ScalarTraits<Kind>;
  auto* flatResult = vector->asFlatVector<T>();
  for (int32_t i = 0; i < size; ++i) {
    if (iterator->isNull(i)) {
      vector->setNull(i, true);
      iterator->next();
    } else {
      vector->setNull(i, false);
      if constexpr (std::is_same_v<T, StringView>) {
        StringView val =
            UnsafeRowPrimitiveBatchDeserializer::deserializeStringView(
                iterator->next().value());
        TypeTraits::set(flatResult, i, val);
      } else {
        typename TypeTraits::SerializedType val =
            UnsafeRowPrimitiveBatchDeserializer::deserializeFixedWidth<
                typename TypeTraits::SerializedType>(
                iterator->next().value());
        TypeTraits::set(flatResult, i, val);
      }
    }
  }
  return vector;
}
```

The ROW, ARRAY, and MAP types are handled in a similar way by convertStructIteratorsToVectors, convertArrayIteratorsToVectors, and convertMapIteratorsToVectors respectively: they parse the data row by row and build the corresponding vectors.
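The row-by-row parsing can be illustrated with a small standalone sketch. It reads rows in UnsafeRow layout (null bits, 8-byte field slots, and an offset-and-size word for strings, as written by serializeRow above) and appends each field to its column. The helper is hypothetical and much narrower than the Velox code: it handles only a fixed (BIGINT, VARCHAR) schema, assumes a single 8-byte null word and well-formed input without bounds checks, and copies each string out of the row buffer, as noted for deserialization.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <optional>
#include <string>
#include <vector>

// Hypothetical two-column schema (BIGINT id, VARCHAR name). With fewer than
// 64 fields the null bits fit in one 8-byte word (assumption, as in Spark).
constexpr int32_t kNullBytes = 8;
constexpr int32_t kFieldWidth = 8;

struct Columns {
  std::vector<std::optional<int64_t>> ids;
  std::vector<std::optional<std::string>> names;
};

bool isNullAt(const uint8_t* row, int field) {
  return (row[field / 8] >> (field % 8)) & 1;
}

// Parses rows one at a time and appends each field to its column, in the
// spirit of the row-by-row loop in createFlatVector.
Columns rowsToColumns(const std::vector<std::vector<uint8_t>>& rows) {
  Columns out;
  for (const auto& bytes : rows) {
    const uint8_t* row = bytes.data();
    if (isNullAt(row, 0)) {
      out.ids.push_back(std::nullopt);
    } else {
      int64_t id;
      std::memcpy(&id, row + kNullBytes, sizeof(id));
      out.ids.push_back(id);
    }
    if (isNullAt(row, 1)) {
      out.names.push_back(std::nullopt);
    } else {
      uint64_t slot;
      std::memcpy(&slot, row + kNullBytes + kFieldWidth, sizeof(slot));
      auto offset = static_cast<uint32_t>(slot >> 32);        // high 32 bits
      auto size = static_cast<uint32_t>(slot & 0xffffffffu);  // low 32 bits
      // The string bytes are copied out of the row buffer.
      out.names.push_back(
          std::string(reinterpret_cast<const char*>(row + offset), size));
    }
  }
  return out;
}
```

Because each row is self-describing, a row-oriented reader like this has to touch every field of every row to fill the columns, which is the inherent cost of converting a row format into a columnar one.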

A class that implements VectorSerde can be registered with the registerVectorSerde method. The system can then use getNamedVectorSerde to find the implementation to use for serializing and deserializing an external data format. Here is a brief summary:

• VectorSerde is the interface through which external systems exchange data with the Velox engine. It keeps the system extensible: external implementations can read and write their own data formats without modifying the Velox engine itself.

• When working with Velox vectors, it is important to know the available encodings (flat, constant, biased, lazy, and so on), especially in columnar code paths. Different encodings have their own fast paths to improve efficiency; for example, UnsafeRowFast copies fixed-width values in bulk when the decoded vector is an identity mapping.

• Data is copied several times during serialization and deserialization. A likely reason is that the original data may be released while Serde is running, so referencing it instead of copying it may not be safe. This part of the logic needs further investigation.

• StreamArena handles small requests specially: memory smaller than a page (4 KB) is allocated by newTinyRange as a std::string stored in the tinyRanges_ vector, instead of being taken from the MemoryPool's pages.

• Both serialization formats discussed here assume a fixed schema: the number and types of the columns are known in advance, which is also how Velox, Presto, and Spark model their data. Schema-free scenarios, in which the columns can differ from row to row, are not covered in this article.
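The registration mechanism described at the start of this summary amounts to a name-to-implementation map. The sketch below is hypothetical: Serde, SerdeRegistry, registerSerde, and get are illustrative names, not Velox's registerVectorSerde or getNamedVectorSerde API, whose exact signatures are not shown in this article.

```cpp
#include <cassert>
#include <memory>
#include <stdexcept>
#include <string>
#include <unordered_map>

// Hypothetical minimal serde interface (not Velox's VectorSerde).
struct Serde {
  virtual ~Serde() = default;
  virtual std::string name() const = 0;
};

// Hypothetical process-wide registry keyed by name.
class SerdeRegistry {
 public:
  static SerdeRegistry& instance() {
    static SerdeRegistry registry;
    return registry;
  }

  void registerSerde(const std::string& key, std::unique_ptr<Serde> serde) {
    if (!serdes_.emplace(key, std::move(serde)).second) {
      throw std::logic_error("serde already registered: " + key);
    }
  }

  Serde& get(const std::string& key) const {
    auto it = serdes_.find(key);
    if (it == serdes_.end()) {
      throw std::out_of_range("no serde registered: " + key);
    }
    return *it->second;
  }

 private:
  std::unordered_map<std::string, std::unique_ptr<Serde>> serdes_;
};

// Illustrative implementations standing in for the two serdes discussed above.
struct PrestoLikeSerde : Serde {
  std::string name() const override { return "presto"; }
};
struct UnsafeRowLikeSerde : Serde {
  std::string name() const override { return "unsafe-row"; }
};
```

With this design, the engine only depends on the Serde interface and a lookup key; adding a new external format means registering one more implementation at startup.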

Disclaimer: The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
