Read/write splitting is a common way of using databases. Middleware, like a MySQL proxy, sends write and update traffic to a primary node and forwards query traffic to a read-only node. This frees up CPU and I/O resources on the primary node and improves overall database availability.

This article analyzes how to use read-only instances to process massive volumes of read requests sent to the database in order to increase the throughput of applications.

The Three-node Enterprise Edition implements read-only instances by adding a learner. The learner receives committed logs from the leader and stores the logs in the consensus log. The secondary thread reads and distributes the logs to worker threads. Finally, the logs are concurrently played back to the state machine. In this case, the learner node is read-only for external clients.

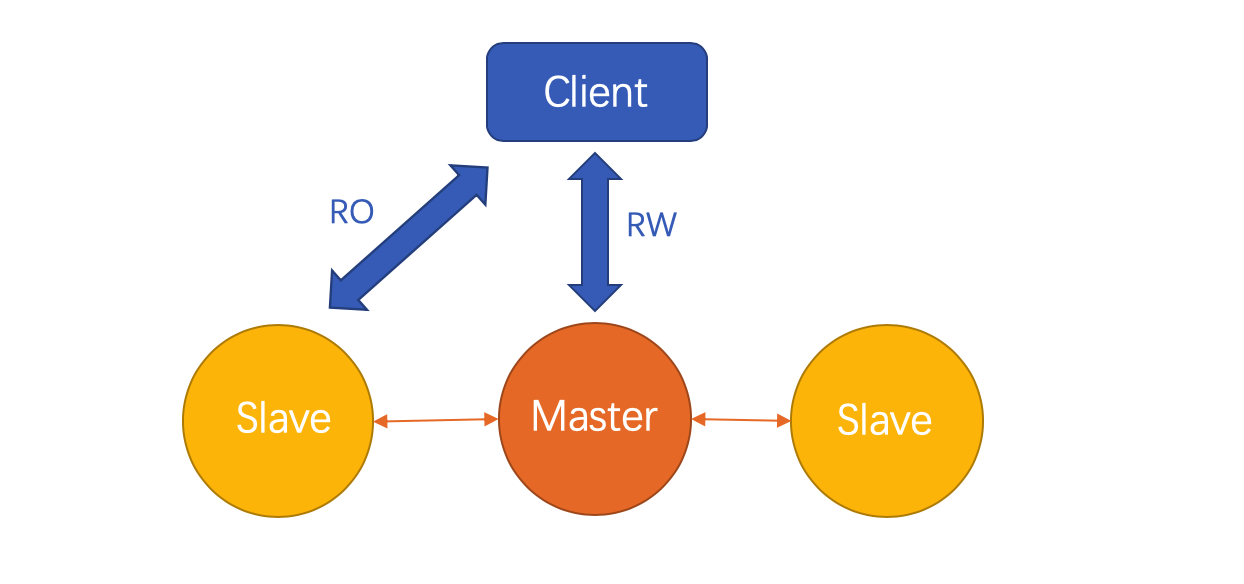

Users who have used MySQL cloud products may be familiar with the concept of read-only nodes. In the Dual-node High-availability Edition, two instances are initially generated. One is a primary node that performs read and write operations. The other is a secondary node in a read-only state. However, this node is not exposed to users and does not provide the read service externally. To add a read-only instance to support read/write splitting, the console creates a secondary node through backup and mounts it to the primary node. When this node synchronizes the latest data from the primary node, that is, when Second_Behind_Master is 0, it begins to provide the read service externally. The following figure shows the deployment mode of the nodes.

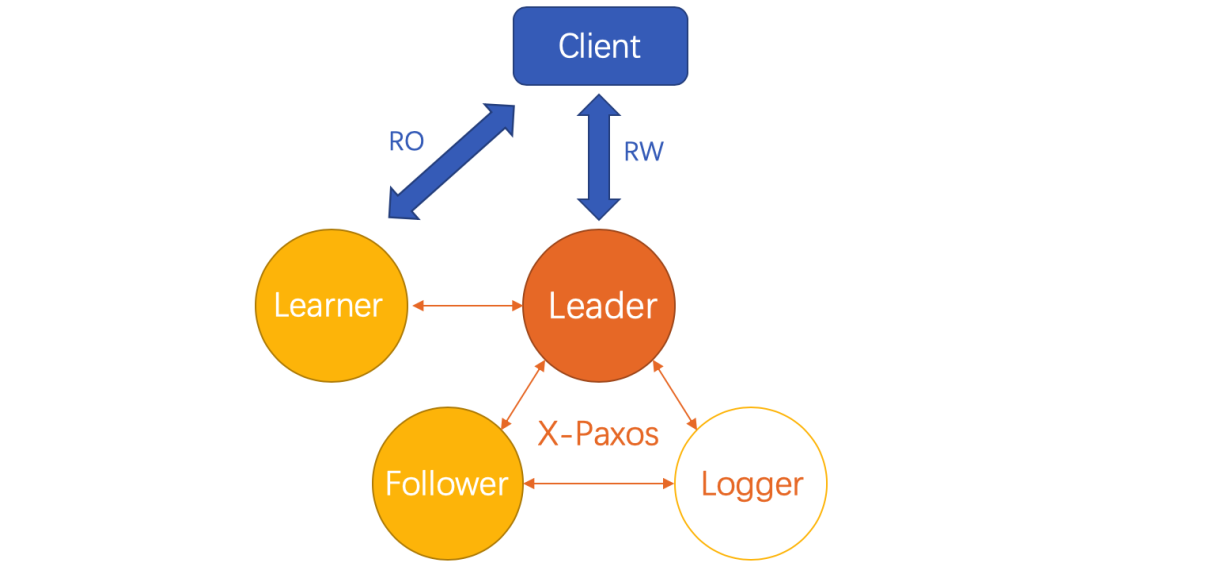

The read-only nodes of the Three-node Enterprise Edition are similar to those used in MySQL cloud products. First, a new learner node is created through backup and mounted to the leader. Then, the learner begins to receive and playback incremental consensus logs. When the logs played back to the learner node and catches up with the logs on the leader node, the learner node enables the external read service. The following figure shows the deployment mode of the nodes.

Compared to the read-only nodes of the High-availability Edition, the learner has the advantage of being connected to the X-Paxos system. This ensures that the data on the learner is consistent with that in the three-node cluster, regardless of failover between the primary node (the leader) and the disaster recovery nodes (the follower and the secondary node).

Assuming that in a dual-node high-availability scenario, the primary database updates the value of x from 1 to 2 and synchronizes the change only to the read-only node, but not to the secondary database. If the primary database fails, the secondary database becomes the new primary database, and the read-only node points to this secondary database. In this case, the data in the new primary database is inconsistent with that on the read-only node, because the value of x is 1 in the new primary database and 2 in the read-only node. If the service or database administrator (DBA) detects the database inconsistency and performs data backfilling to update the value of x in the new secondary database from 1 to 2 when the binary log of the transaction is synchronized to the read-only node, the SQL thread on the read-only node returns an error and exits. This exception is handled only through manual intervention. If a large amount of data needs to be backfilled, it is impossible to complete this operation through manual O&M. You must re-create a read-only node based on the backup of the new primary database. This makes the read-only node unavailable for a period of time. However, this problem does not occur in the Three-node Enterprise Edition.

The Three-node Enterprise Edition uses a special version of XtraBackup to back up and recover data for instances. We have improved XtraBackup based on the snapshot interface provided by X-Paxos. It now supports the creation of physical backup snapshots with consistent points in time and quickly incubates and adds a new learner node to the cluster to extend its read capability. In the forthcoming Three-node Edition of RDS 8.0, we will also integrate the new clone plug-in feature released in the Official Edition of MySQL 8.0, to provide snapshots with consistent points in time-based on the clone plug-in. This will make the incubation feature of the learner node faster and easier to maintain.

For more information about the clone plug-in, visit the following pages:

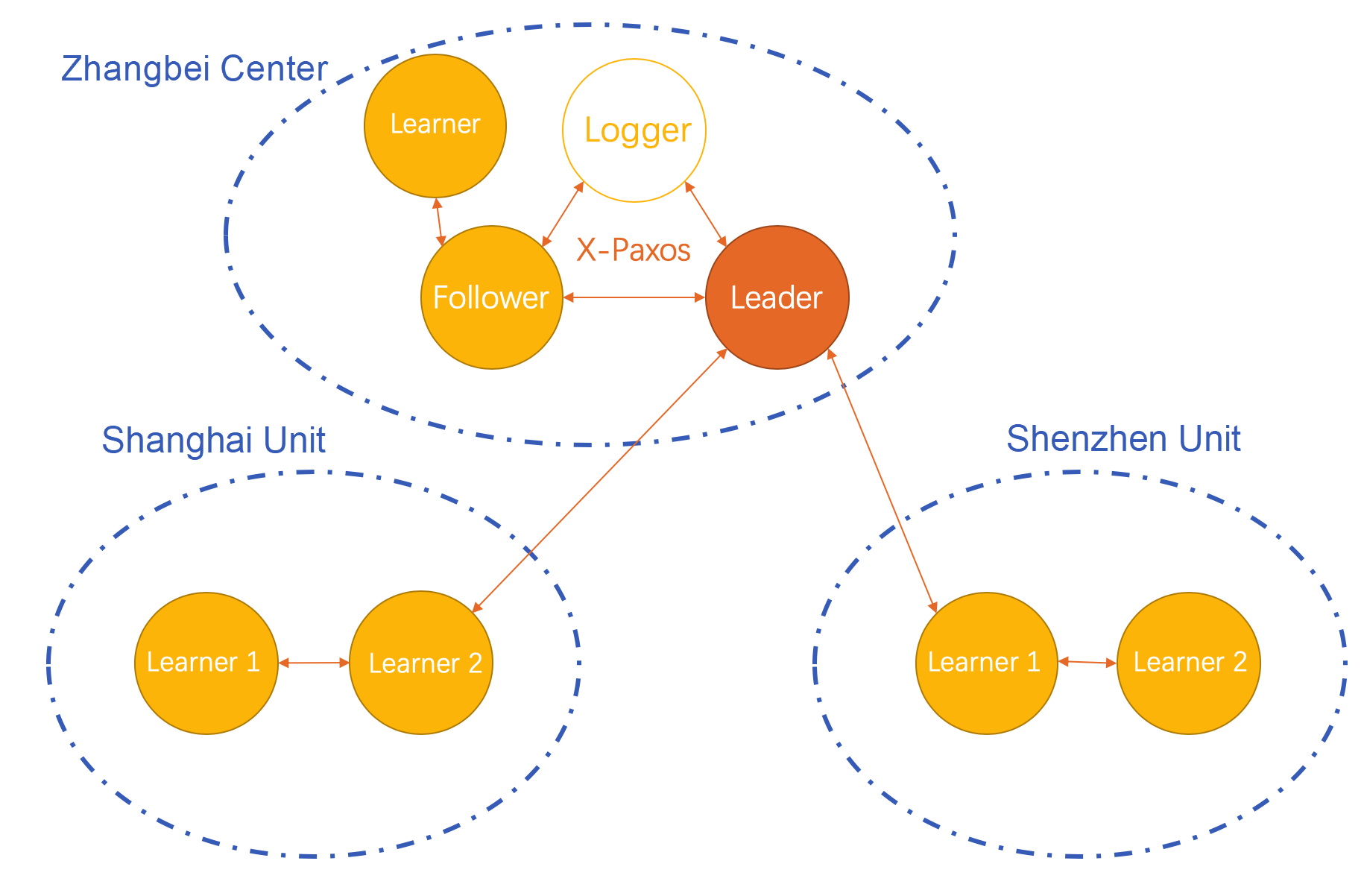

The read-only node of the Three-node Enterprise Edition easily implements a flexible replication topology by using the LearnerSource feature of X-Paxos and custom data sources. The three-node replication topology is configured through the control SQL statements related to membership change on the leader. Centralized configuration management ensures data consistency at the cluster level. The advantage of custom data sources is that, when a large number of read-only nodes are involved, the custom data sources divert loads of log transfers on the leader and scatter the volumes of data transmitted on the network, to reduce the latency of log synchronization.

The custom data source feature of the Three-node Enterprise Edition also supports region-based load balancing and LearnerSource-based disaster recovery. Specifically, load balancing allows automatically mounting the read-only node in each region to the follower or learner in the same region with the read-only node. In case, a fault occurs in a data source, the data source temporarily degrades to the leader node in the same region with the data source, until the faulty data source recovers. This topology ensures that the read-only nodes in each region synchronize data only from the nodes in the same region with the read-only nodes. This cascaded deployment greatly reduces the network bandwidth usage across regions, preventing bandwidth limits from causing latency in cross-region services.

The following figure shows an example of deployment mode within the Alibaba Group.

Certainly, in conventional MySQL, construct a series of primary-secondary-secondary topologies and configure replication relationships for the instances by running change master statements. However, this method has poor disaster recovery, which results in high management and O&M costs. The worse scenarios is as the number of read-only nodes increases, the risk of data inconsistency increases after disaster recovery.

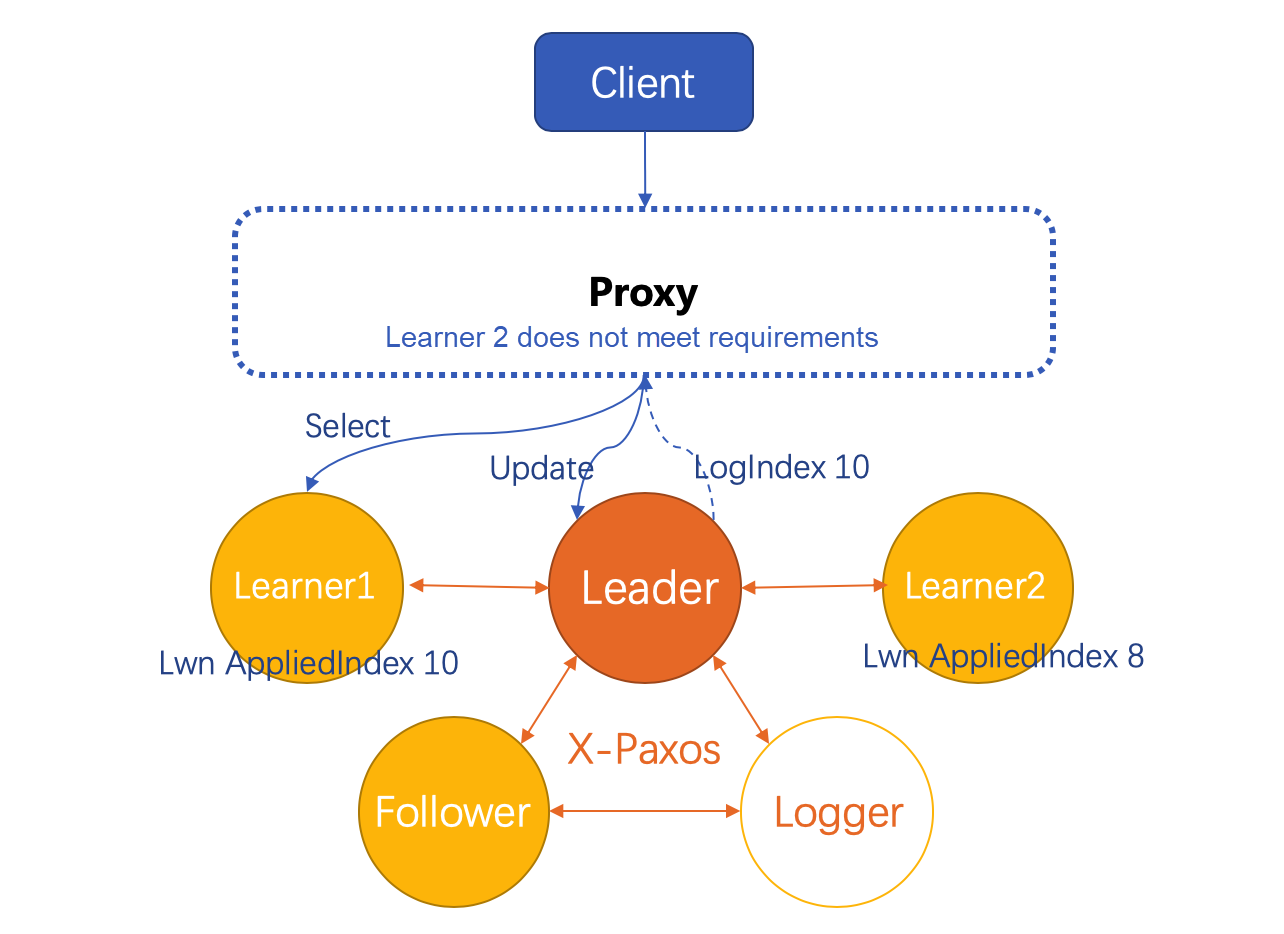

The read-only node receives and plays backlogs and receives external query requests. However, there is a challenge. Log synchronization and playback are asynchronous on the learner. Although the latency is less than 5 seconds in most scenarios, this does not guarantee that the data returned for each query is the latest. In particular, when a data definition language (DDL) statement for large tables or a large transaction is executed on the primary database, significant latency may occur on the read-only nodes. To solve this problem, the Three-node Enterprise Edition introduces MaxScale as a proxy for read/write splitting and implements session-wide read consistency in MaxScale. This ensures that subsequent read operations within a single session can read the data previously written in the same session, but does not ensure that the latest version of the data in other sessions can be read.

In X-Paxos, each log has a LogIndex, which corresponds to the instance number in Multi-Paxos. In addition, the read-only node maintains a concurrent log playback window when multiple threads play backlogs to the state machine in a disordered manner. The window calculates the low watermark for a played LogIndex (Lwm AppliedIndex).

All the logs before the Lwm AppliedIndex have been played back to the state machine, but the logs after the Lwm AppliedIndex still contain empty logs. In the Three-node Enterprise Edition, the proxy for the read/write splitting layer tracks the Lwm AppliedIndex of each read-only node in the cache and records the LogIndex of the current transaction in the updates of each leader. While receiving a new request, the proxy compares the latest LogIndex of the session with the Lwm AppliedIndex of each read-only node, and then only sends the request to a node whose Lwm AppliedIndex is greater than or equal to the LogIndex of the session. This ensures data consistency within the session. This mechanism achieves high read/write splitting performance in scenarios with more reads than writes.

By using the learner role from X-Paxos, the Three-node Enterprise Edition allows creating read-only instances to elastically scale the read capability and share the load of the primary database. Use read-only instances to process massive volumes of read requests sent to the database to increase the throughput of applications. Currently, the Alibaba Cloud official website allows users to create and use read-only instances in the Three-node Enterprise Edition of RDS 5.7. We welcome you to try it out.

Why AliSQL Could Become the Preferred Database Service of Large Enterprises

Alibaba Clouder - January 28, 2021

ApsaraDB - April 28, 2020

ApsaraDB - August 1, 2022

Alibaba Clouder - February 25, 2021

ApsaraDB - August 21, 2024

ApsaraDB - June 19, 2024

ApsaraDB for MyBase

ApsaraDB for MyBase

ApsaraDB Dedicated Cluster provided by Alibaba Cloud is a dedicated service for managing databases on the cloud.

Learn More PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More ApsaraDB RDS for MySQL

ApsaraDB RDS for MySQL

An on-demand database hosting service for MySQL with automated monitoring, backup and disaster recovery capabilities

Learn More ApsaraDB for OceanBase

ApsaraDB for OceanBase

A financial-grade distributed relational database that features high stability, high scalability, and high performance.

Learn MoreMore Posts by ApsaraDB