The RDS 5.7 Three-node Enterprise Edition is a highly available, strongly consistent database product incubated at Alibaba Group, with support for global deployment. It has been used in Alibaba Group's own businesses since 2017 and has reliably supported the Double 11 Shopping Festival for several years. After two years of internal polishing, this edition was officially made available on the public cloud in July 2019. Compared to the RDS 5.6 Three-node Edition, it adopts a new kernel design, most notably in the consensus protocol.

The core of the Three-node Enterprise Edition is the consensus protocol. In version 5.7, we integrated X-Paxos, a consensus protocol library developed by Alibaba, into AliSQL. While remaining 100% compatible with MySQL, the edition implements automatic primary election, log synchronization, strong data consistency, and online configuration changes. X-Paxos is a Multi-Paxos implementation with a single proposer, and it adds many innovative features and performance optimizations that make it a consensus protocol better suited to production environments.

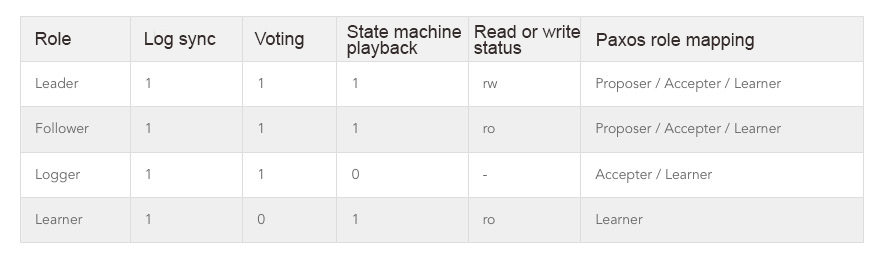

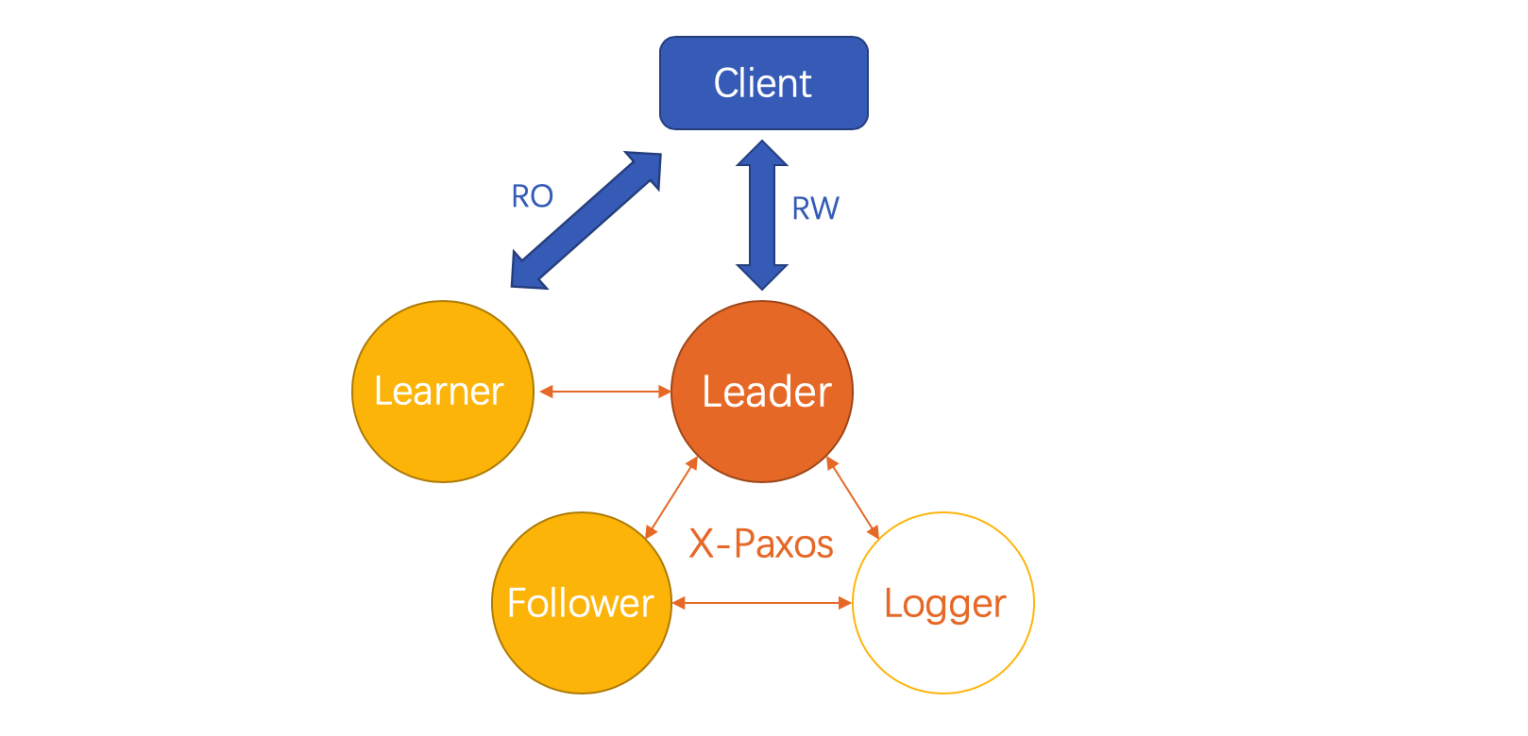

Anyone who has read about Paxos knows that the Paxos algorithm includes three roles: Proposer, Acceptor, and Learner. In X-Paxos, there are four node roles: Leader, Follower, Logger, and Learner.

The persistent storage of the entire consensus protocol is divided into two parts: logs and state machines. The logs record the updates of the state machines, which store the actual data that is read and written by external services.

The Leader is the only node in the cluster that supports both reads and writes. It sends newly written logs to all nodes in the cluster, allows a log to be committed once a quorum is reached, and plays the logs back to the local state machine. As you probably know, standard Paxos has a livelock problem. When two Proposers alternate in initiating Prepare requests, the Accept requests in each round fail and the proposal number increases in an endless loop, so consensus is never reached. Therefore, the best practice in the industry is to select a primary Proposer to ensure the liveness of the algorithm. In database scenarios, allowing only the primary Proposer to initiate proposals also simplifies the handling of transaction conflicts and ensures high performance. This primary Proposer is called the Leader.
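The quorum rule can be illustrated with a minimal sketch. It is not the X-Paxos implementation: the function names and the `match_index` bookkeeping are assumptions made for illustration, and details such as terms or epochs are left out. The idea is that a log index can be committed once it is stored on a majority of the voting nodes.

```python
from typing import Dict


def majority(voters: int) -> int:
    """Smallest number of voting nodes that forms a quorum."""
    return voters // 2 + 1


def commit_index(match_index: Dict[str, int]) -> int:
    """Highest log index stored on a majority of voting nodes.

    match_index (a hypothetical structure) maps each voting node, the
    Leader included, to the last log index persisted on that node.
    """
    ranked = sorted(match_index.values(), reverse=True)
    return ranked[majority(len(ranked)) - 1]


# Hypothetical three-node cluster: the Leader has written up to index 12,
# the Follower has acknowledged 10, and the Logger has acknowledged 7.
acked = {"leader": 12, "follower": 10, "logger": 7}
print(commit_index(acked))  # 10: indexes up to 10 are on two of three nodes
```

With three voters the quorum is two, so one slow or failed node does not block commits; with five voters the quorum is three.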

The Follower is a disaster recovery node. It receives the logs sent by the Leader and plays back the logs that have reached a quorum to the state machine. When the Leader fails, the remaining nodes in the cluster elect a Follower as the new Leader, which then accepts read and write requests.

The Logger is a special type of Follower that does not provide external services. The Logger does two things: it stores the latest logs so that the Leader can determine whether a quorum has been reached, and it votes when a new Leader is elected. The Logger does not play logs back to the state machine and regularly clears old logs, so it occupies very few computing and storage resources. Therefore, with the Leader, Follower, and Logger deployment method, the three-node high-availability edition costs only slightly more than the two-node edition.

The Learner does not have the right to vote and does not participate in the quorum calculation. Instead, it only synchronizes logs committed by the Leader and plays them back to the state machine. In practice, we use the Learner as a read-only replica for read-write splitting at the application layer. In addition, X-Paxos allows nodes to switch between the Learner and Follower roles. This function allows faulty nodes to be migrated and replaced.
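The four roles described above can be summarized as a small capability table. This is a sketch for illustration only; the `Capabilities` type, the `ROLES` table, and the node names are assumptions, not X-Paxos data structures.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Capabilities:
    votes: bool           # counts toward the quorum and Leader election
    applies_logs: bool    # plays logs back to the local state machine
    accepts_writes: bool  # serves write requests from applications


# Capabilities per role, as described in the text above.
ROLES: Dict[str, Capabilities] = {
    "leader": Capabilities(votes=True, applies_logs=True, accepts_writes=True),
    "follower": Capabilities(votes=True, applies_logs=True, accepts_writes=False),
    "logger": Capabilities(votes=True, applies_logs=False, accepts_writes=False),
    "learner": Capabilities(votes=False, applies_logs=True, accepts_writes=False),
}


def quorum_size(cluster: Dict[str, str]) -> int:
    """Majority of the voting nodes; Learners are not counted."""
    voters = [node for node, role in cluster.items() if ROLES[role].votes]
    return len(voters) // 2 + 1


# Hypothetical cluster: three voting nodes plus one read-only Learner.
cluster = {"node1": "leader", "node2": "follower", "node3": "logger", "node4": "learner"}
print(quorum_size(cluster))  # 2
```

Adding Learners increases read capacity without changing the quorum size, because only the Leader, Followers, and Loggers are counted.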

The Three-node Enterprise Edition supports various cluster modification and configuration management functions. For example:

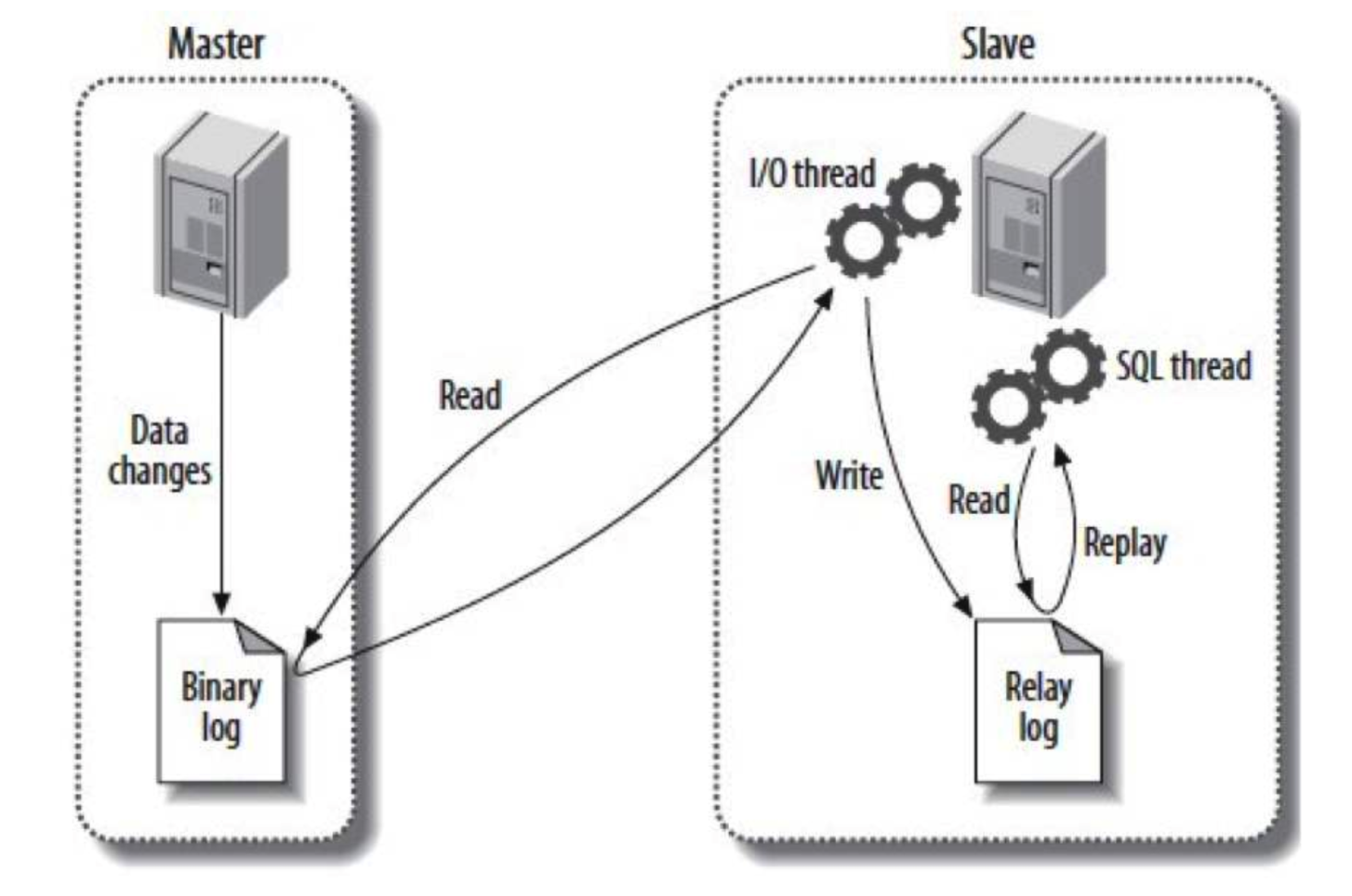

First, let's review the replication mode of the MySQL dual-node high-availability edition. The primary node writes binary logs and commits transactions. The secondary node uses an I/O thread to send dump requests to the primary node, pull the binary logs, and store them in the local relay log. Finally, the SQL thread of the secondary node plays back the relay log.

The following figure illustrates the dual-node replication mode.

Generally, `log-slave-updates` must be enabled on the secondary node so that it can also provide log synchronization for downstream nodes. As a result, in addition to the relay log, the secondary node keeps a redundant binary log.

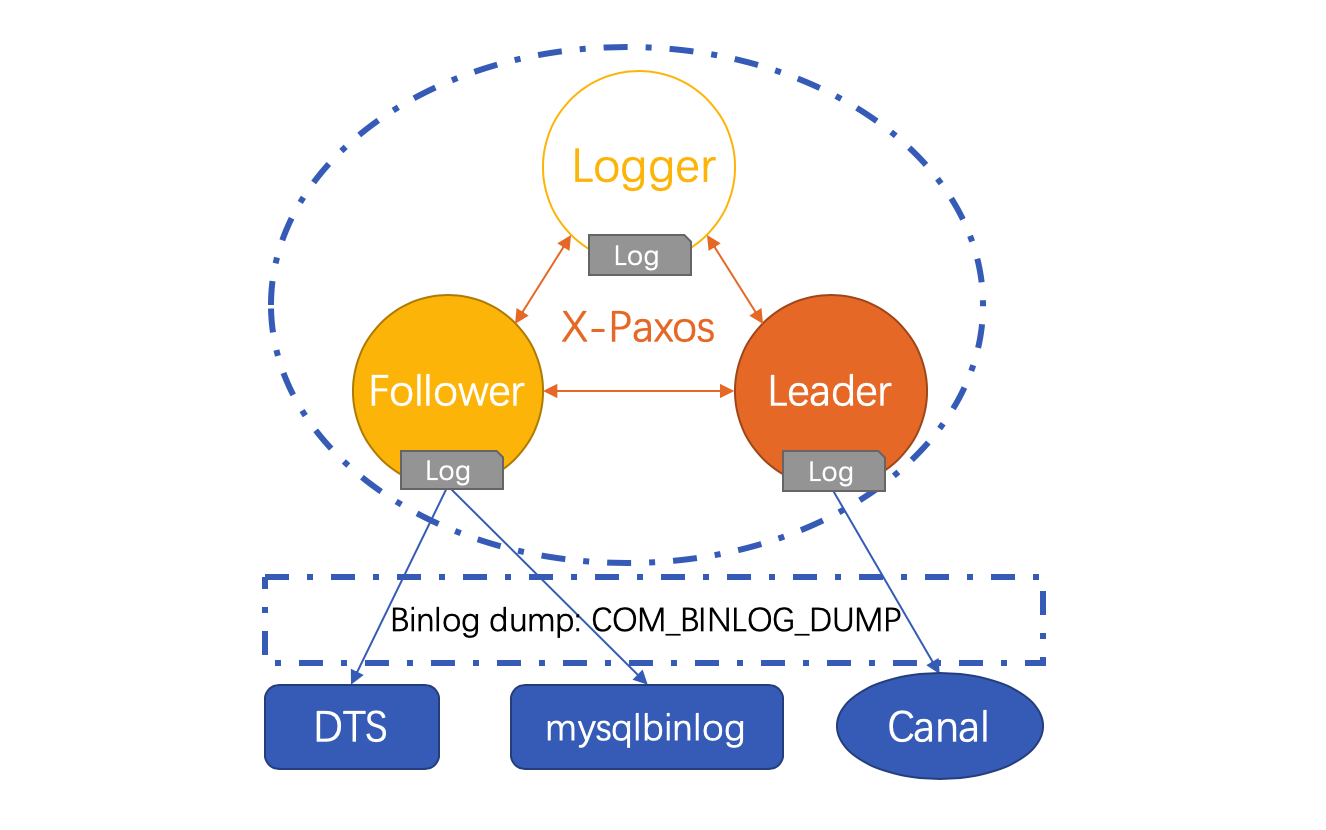

The Three-node Enterprise Edition integrates the binary log and the relay log into a unified consensus log, reducing the cost of log storage. When a node is the Leader, its consensus log assumes the role of the binary log. When a node switches to the Follower or Learner role, its consensus log assumes the role of the relay log.

The X-Paxos consensus protocol layer takes over the synchronization logic of the consensus log and provides external interfaces for log writing and state machine playback. Based on the consensus protocol and state machine replication theory, the new consensus log ensures data consistency among multiple nodes. In addition, the logs of the Three-node Enterprise Edition are implemented according to the MySQL binary log standard and are seamlessly compatible with binlog incremental subscription tools commonly used in the industry, such as Alibaba Cloud DTS and Canal.

The following figure illustrates the three-node replication mode.

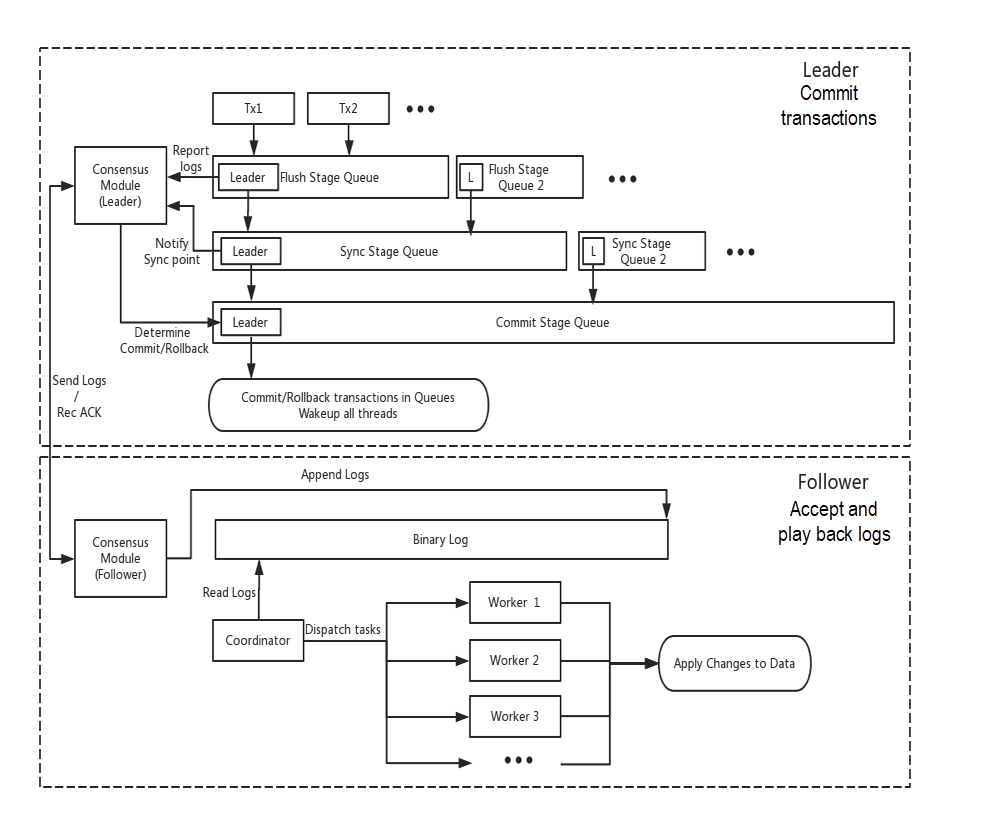

The state machine implementation of the Three-node Enterprise Edition improves upon MySQL's original transaction commit process. MySQL Group Commit itself is covered by several technical articles online.

The original Group Commit process has three stages: the flush stage, the sync stage, and the commit stage. For the Leader node, the Three-node Enterprise Edition modifies the implementation of the commit stage. All transactions that enter the commit stage are pushed to an asynchronous queue, enter the quorum resolution phase, and wait for their logs to be synchronized to a majority of nodes. Only transactions that meet the quorum condition are committed. In addition, the Leader writes consensus logs locally and synchronizes them to other nodes in parallel, which ensures high performance.
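The modified commit stage on the Leader can be pictured with a minimal, single-threaded sketch. It assumes a hypothetical `CommitQueue` class and that the consensus layer reports a commit index; the real implementation inside AliSQL is asynchronous and is not shown in this article.

```python
from collections import deque
from typing import Deque, List, Tuple


class CommitQueue:
    """Holds transactions that passed flush/sync and now await a quorum."""

    def __init__(self) -> None:
        self._pending: Deque[Tuple[int, str]] = deque()  # (log index, txn id)
        self.committed: List[str] = []

    def enqueue(self, log_index: int, txn_id: str) -> None:
        """Called when a transaction enters the commit stage."""
        self._pending.append((log_index, txn_id))

    def on_commit_index(self, commit_index: int) -> List[str]:
        """Commit, in log order, every pending transaction covered by the quorum."""
        released: List[str] = []
        while self._pending and self._pending[0][0] <= commit_index:
            released.append(self._pending.popleft()[1])
        self.committed.extend(released)
        return released


queue = CommitQueue()
for index, txn in [(1, "t1"), (2, "t2"), (3, "t3")]:
    queue.enqueue(index, txn)

print(queue.on_commit_index(2))  # ['t1', 't2']
print(queue.on_commit_index(2))  # []
print(queue.on_commit_index(3))  # ['t3']
```

Because transactions wait in the queue rather than blocking the flush and sync stages, later groups can keep writing logs while earlier ones wait for acknowledgments.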

On the Follower node, the SQL thread reads the consensus log and waits for a notification from the Leader. The Leader periodically synchronizes the commit status of each log to the Follower. Logs that have reached a quorum are distributed to worker threads for parallel execution.
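The Follower's playback rule can be sketched as follows. The `FollowerApplier` class and its method names are hypothetical, and the sketch returns the batch of entries instead of dispatching it to worker threads.

```python
from typing import List


class FollowerApplier:
    """Buffers received log entries and releases only those that reached a quorum."""

    def __init__(self) -> None:
        self.log: List[str] = []  # received entries; log index 1 is self.log[0]
        self.applied_index = 0    # last log index handed over for playback

    def append(self, entry: str) -> None:
        """Store an entry received from the Leader; it may still be uncommitted."""
        self.log.append(entry)

    def on_commit_notification(self, commit_index: int) -> List[str]:
        """Return the entries that became safe to play back, in log order."""
        upper = min(commit_index, len(self.log))
        batch = self.log[self.applied_index:upper]
        self.applied_index = max(self.applied_index, upper)
        return batch


follower = FollowerApplier()
for entry in ["e1", "e2", "e3"]:
    follower.append(entry)

print(follower.on_commit_notification(2))  # ['e1', 'e2']
print(follower.on_commit_notification(1))  # [] (stale notification is ignored)
print(follower.on_commit_notification(5))  # ['e3']
```

Entries beyond the commit index stay in the log but are not played back, so a Follower never exposes data that could still be rolled back after a Leader change.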

The logic of the Learner node is simpler than that of the Follower. The consensus protocol ensures that the Learner does not receive uncommitted logs, so the SQL thread only needs to distribute the latest logs to worker threads without waiting for any condition.

In addition, the Three-node Enterprise Edition uses a special version of Xtrabackup to back up and restore instances. We improved Xtrabackup based on the snapshot interface provided by X-Paxos. It now supports creating physical backup snapshots at a consistent point, which makes it possible to quickly build a new Learner node and add it to the cluster to expand read capacity.

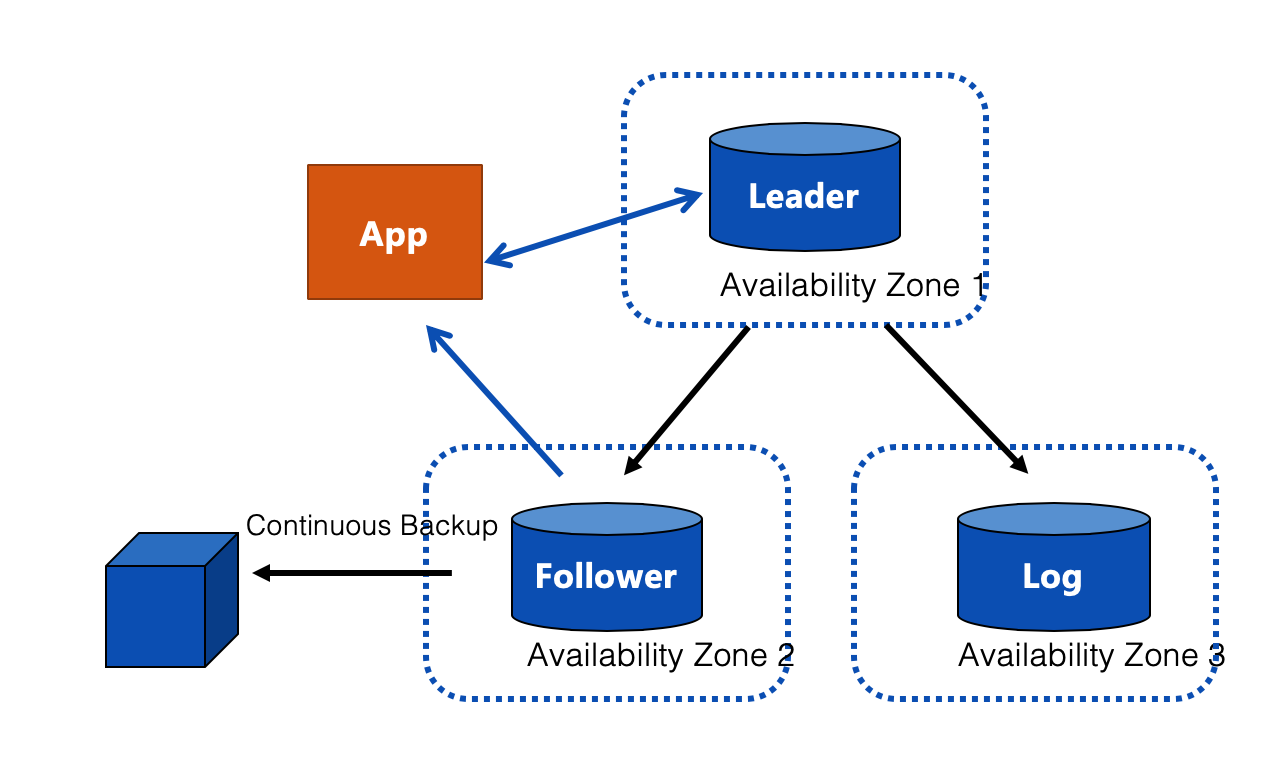

Three local replicas is the default deployment mode in the public cloud. Compared with the traditional dual-IDC active-standby high-availability edition, the three-node edition does not increase storage costs while providing high availability and strong consistency.

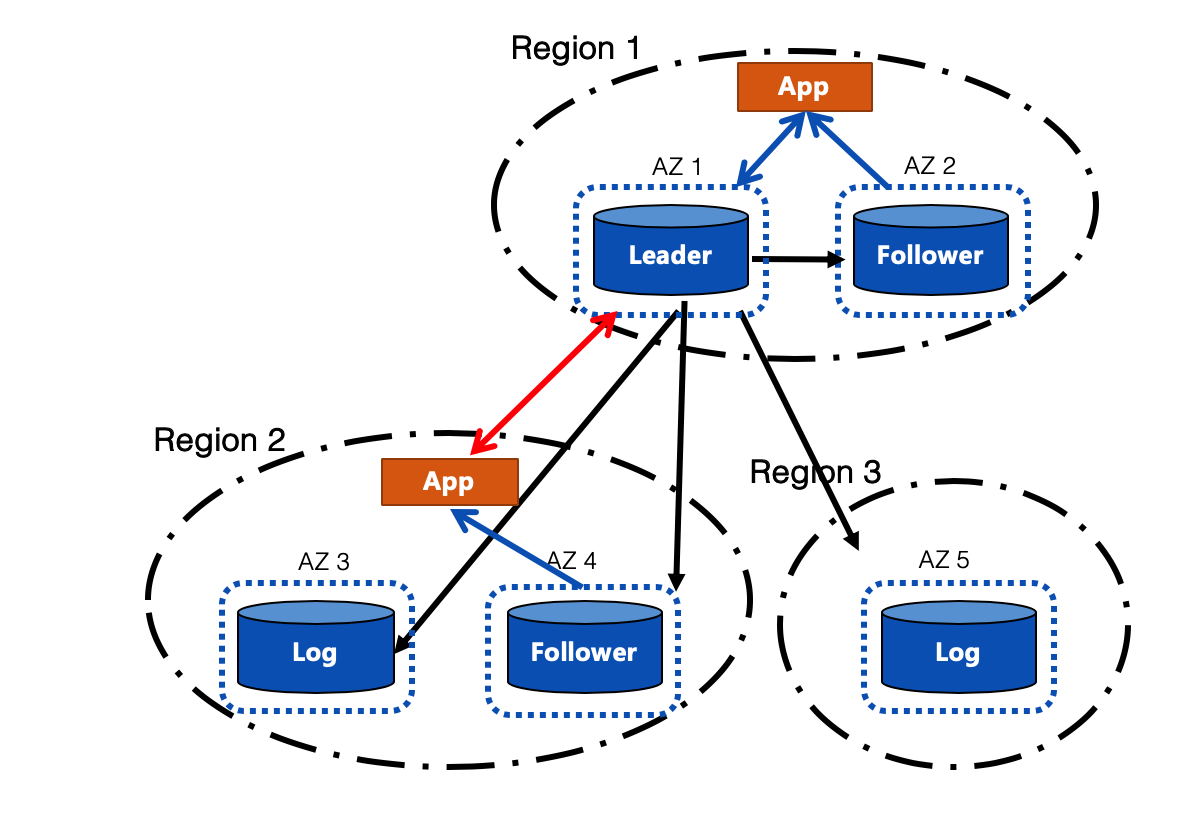

We recommend using the five cross-region replica architecture for cross-region disaster recovery. Compared to building a simple three cross-region replica architecture, the five-replica solution provides the following advantages:

With the development of the Internet, cloud customers are placing more and more emphasis on data security, and many industries require data to be stored across data centers and regions. The RDS 5.7 Three-node Enterprise Edition is a cloud-based database solution built on proprietary Alibaba technologies. It is designed for users with high data quality requirements. In addition, current users of the RDS 5.7 High-availability Edition can upgrade to the Three-node Edition with one click.
