By Bairui

JOIN is a common operation in relational database queries. By joining several tables, users can obtain information more efficiently on the basis of complying with the database design paradigm. In analysis queries, the Join operation between large tables or between large and small tables is usually implemented through a Hash Join algorithm, which is usually one of the bottlenecks of query performance. Therefore, the most important task of the computing engine is to find a way to optimize the query performance of the Join operation.

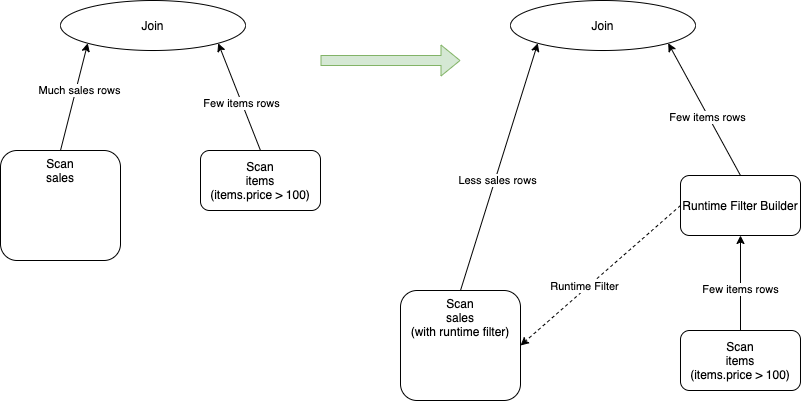

Runtime Filter is a widely used optimization technology in databases mentioned in [4]. Its basic principle is to significantly reduce data transmission and computing in the join operation by filtering out input data that does not match the join in advance for the probe side, thus reducing the overall execution time. The following example shows the original execution plan, where sales is a fact table and items is a latitude table:

SELECT * FROM sales JOIN items ON sales.item_id = items.id WHERE items.price > 100

As shown in the left half of the above figure, when performing the join, it is not only necessary to transfer the full sales data to operators of the join but also each row of sales data requires join operations, including hash values and comparison operations. If here items.price > 100 has a high selectivity (for example, 50%), so most of the data in the sales table will definitely not be joined. If the data is filtered out in advance, the data transmission and computing overhead can be reduced.

The right half of the preceding figure is the added execution plan after the runtime filter. As shown in the figure, a new RuntimeFilterBuilder operator is added to the process of pulling data from the build side of the join. This operator is used to collect build-side information, form a runtime filter, and send the collected information to the scan nodes of the probe side. By doing so, the probe nodes can reduce the amount of input data in scan operation, achieving performance improvement.

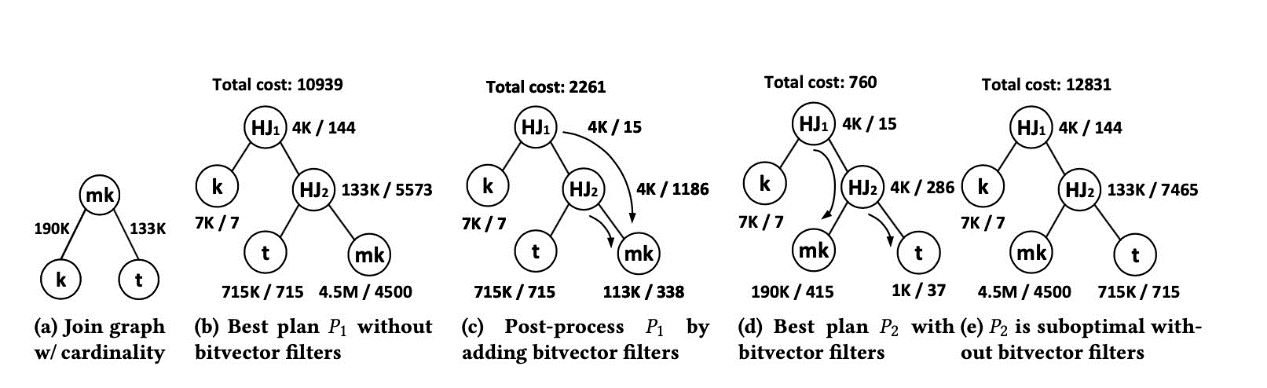

In most current systems, the operators required by a runtime filter are inserted into a physical execution plan after CBO phase of the optimizer by using a rule-based optimization method. However, as pointed out in [3], if the effect of runtime filter on the execution plan is taken into account in the CBO phase, the execution plan can be further optimized. The following shows an example:

In this example, figure (a) shows an original query and the join operation is performed on the three tables (k, mk, and t). Figure (b) shows the physical execution plan obtained from the CBO phase regardless of the runtime filter. The preceding figure (c) shows how to add the runtime filter to the physical execution plan based on the rules in (b). Figure (d) shows the physical execution plan obtained by placing the runtime filter in the CBO phase. It can be seen that the optimal physical execution plan obtained in figure (d) has a smaller final cost than the plan obtained in figure (c).

However, if the runtime filter is directly added to CBO, an exponential increase in the search space of the optimizer will be caused, since the CBO phase of existing optimizers is mostly based on dynamic programming algorithms. If a runtime filter is put into CBO, the optimal solution for the child plan depends on the combination of the filter pushed by the parent nodes in the query plan and ways that the runtime filter is applied to the table. Such a combination will cause an explosion of the search space. For star queries and snowflake queries, which are queries that join by associating latitude and fact tables by primary and foreign keys, certain join operations are equivalent after adding runtime filters. Therefore, the linear growth of the search space of the optimizer in the CBO phase is ensured.

As an HTAP database, PolarDB-X not only meets the requirements of high-performance online transaction processing (OLTP) scenarios, but also supports high-performance analysis of massive data. To meet customers' requirements for big data analysis, Runtime Filters in our exclusive MPP engine have been implemented. Its basic principles are the same as above. However, some special optimizations for distributed database scenarios have been made.

In PolarDB-X, the bloom filter[1] is selected to filter data, which has several advantages as follow:

Certainly, other systems also contain some other kinds of filters. For example, in Spark SQL, if the data is filtered by partitioned columns and the data volume on the build side is small, the full amount of input data will be used for dynamic partition pruning. If the queried data is indexed, such as Parquet or ORC, it generates a simple filter such as min/max to filter data. However, most of these filters are scenario-specific and not general enough.

The generation, transmission, and checking of a Runtime Filter introduce additional overhead. If the filter id abused without restraint, performance degradation will occur. Due to the complexity of cost estimation and implementation, most open-source systems only support implementing Runtime Filter in broadcast join, for example, Trino (formerly Presto). The advantage of this approach is that it is easy to implement with relatively small changes to the existing system, but many optimization opportunities will be lost.

In PolarDB-X, Runtime Filter generation rules are effectively combined with the optimizer statistics to determine whether a Runtime Filter needs to be generated based on data from multiple dimensions:

1 – buildNdv * (1+fpp)/probeNdv. A runtime filter is generated only when the filter ratio is greater than a certain threshold.The MPP engine of PolarDB-X is a distributed computing engine that is created for interactive analysis. Unlike Spark and Flink, it uses the push-based execution model. The advantage of this model is that the intermediate data is not written to disk, greatly reducing the latency of waiting during computing, yet also increasing the complexity of developing this feature of the Runtime Filter. Different from most open-source computing engines, the Runtime Filter in PolarDB-X supports broadcast join as well as other distributed join algorithms. Here one of the above SQL statements will be used as an example:

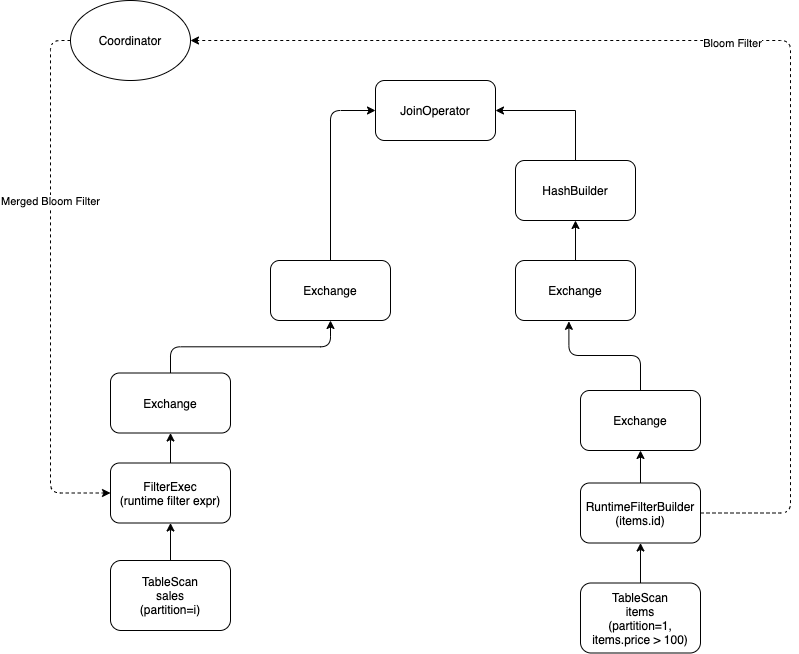

SELECT * FROM sales JOIN items ON sales.item_id = items.id WHERE items.price > 100The physical execution logic after the runtime filter is enabled is as follows:

As shown in the figure, the build side sends the generated bloom filters to the coordinator. The coordinator waits for each partition's bloom filter to be sent before performing a merge operation and sends the merged bloom filter to the FilterExec operator to achieve the filtering effect. The bloom filter merged by the coordinator is as large as the bloom filter of a single partition, but is transmitted only once for each probe side, greatly reducing data transmission. Meanwhile, FilterExec does not block the process of waiting for bloom filters but receives bloom filters asynchronously, thus minimizing the impact of bloom filter generation on latency.

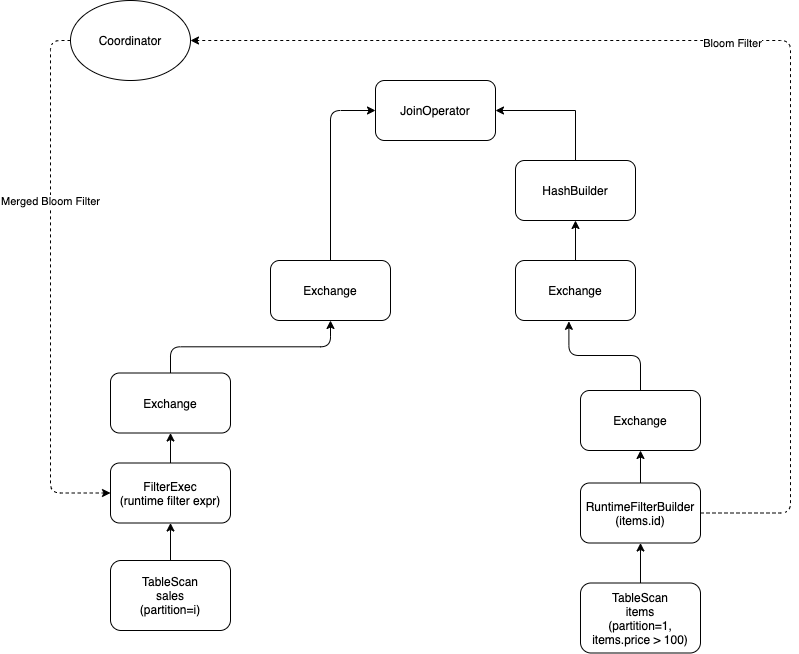

To further reduce data transmission, the bloom filter will be pushed down to the DN layer by implementing UDFs and data will be filtered on the DN side, greatly reducing the network overhead. As shown in the following figure, PolarDB-X pushes the bloom filter down to the DN side, reducing the amount of data pulled from DN and the overhead of network transmission and data parsing.

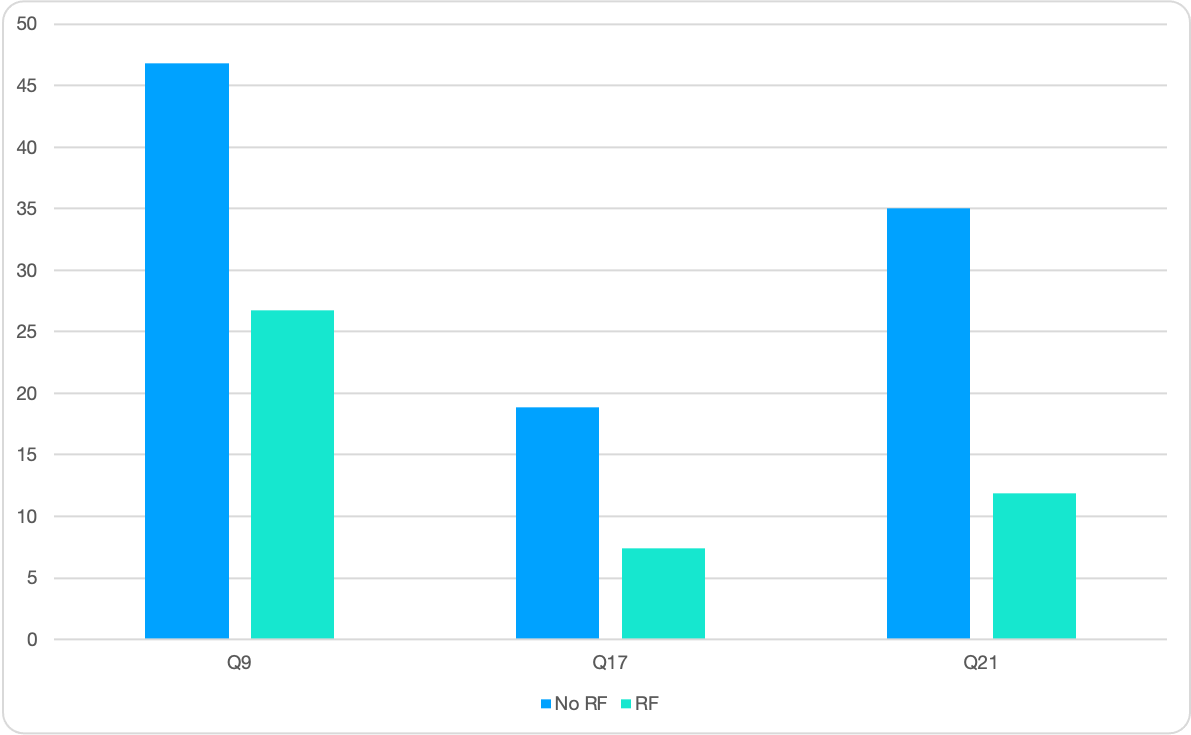

The comparison results of the Runtime Filter on the TPC-H 100G dataset are as shown below:

It can be seen that the performance on time-consuming large queries such as Q9 and Q21 has been improved by two to three times. For other medium-sized queries, the performance is also doubled, with the overall performance improved by about 20%.

ApsaraDB - August 8, 2023

ApsaraDB - October 16, 2024

Alibaba EMR - July 9, 2020

Alibaba EMR - March 16, 2021

ApsaraDB - November 8, 2024

Alibaba EMR - April 25, 2024

PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More PolarDB for Xscale

PolarDB for Xscale

Alibaba Cloud PolarDB for Xscale (PolarDB-X) is a cloud-native high-performance distributed database service independently developed by Alibaba Cloud.

Learn More PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More ApsaraDB for HBase

ApsaraDB for HBase

ApsaraDB for HBase is a NoSQL database engine that is highly optimized and 100% compatible with the community edition of HBase.

Learn MoreMore Posts by ApsaraDB