By Jianwei Liu

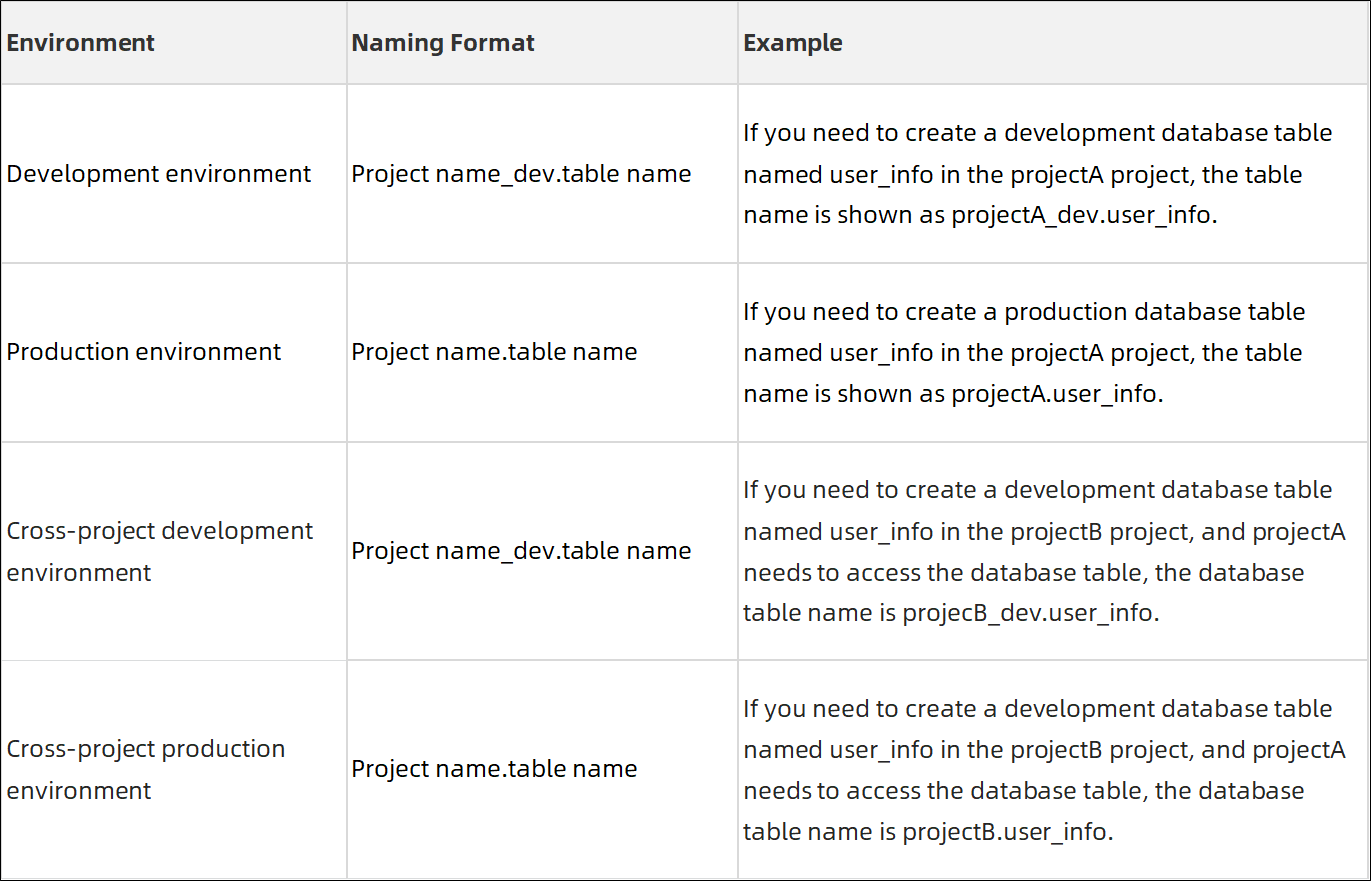

The development environment is isolated from the production environment in the standard mode of DataWorks. Thus, the naming formats of database tables differ in the two environments. If you need to access the database tables of the production environment from the development environment or access tables of project space B from project space A, you are required to distinguish the names of database tables to prevent inappropriate operations. The following table describes the naming formats of database tables for the two environments.

For SQL tasks in the development environment, [Development environment.table name] projectA_dev.user_info is required to use a table. You need to manually change the development environment name to the production environment name to publish the projectA.user_info to the production environment.

Use the Python SDK get_project method to get the project name. Then, use assignment nodes to provide the task results of the upstream nodes to the downstream nodes for use.

Assignment nodes only support three assignment languages: ODPS SQL, SHELL, and Python2. You need to download the ODPS package to use Python2. In addition, it is required to specify AK and ProjectName to connect to ODPS in the code. Although this solution can obtain upstream results and assign the values to downstream nodes, it cannot see the space name by flexible switching to satisfy user scenarios.

Then, if we consider using a general node PyODPS of DataWorks to manually add upstream output parameters, is it feasible to use assignment nodes or parameter nodes for downstream?

The solution also has problems in the time test.

The output part of the Context parameter for an upstream node must be specified in the input part of the Context parameter for a downstream node, so you cannot directly pass the query results of the upstream nodes to the downstream nodes. If you want to pass the query results of the upstream nodes to the downstream nodes, you can use the assignment nodes.

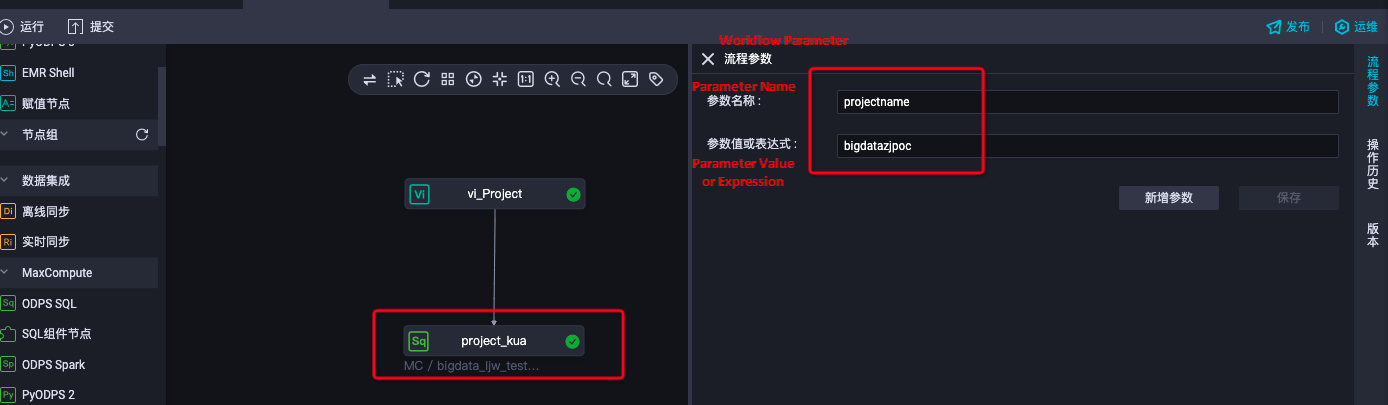

If you need to assign a value to a variable or replace the value of a parameter for all nodes in a workflow, you can adopt workflow parameters. If the value assigned to a workflow parameter is inconsistent with the value assigned to a single node parameter, the value of the workflow parameter overwrites the value of the node parameter.

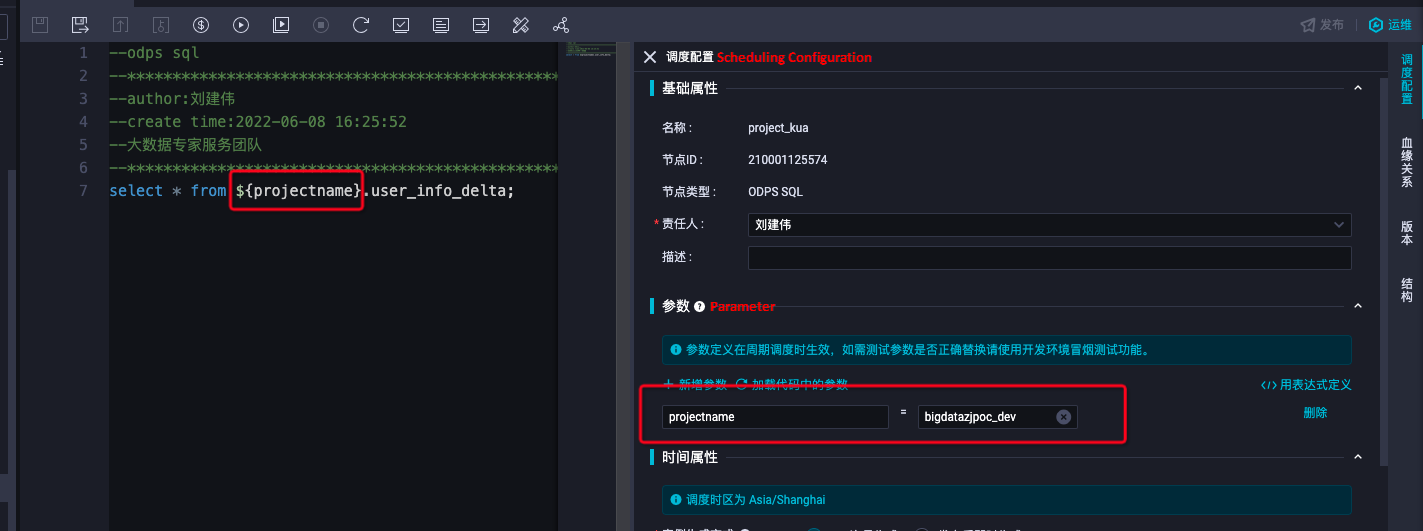

This article takes the cross-project space as an example. Access the table data of the project space bigdatazjpoc in the project space bigdtata_ljw_test.

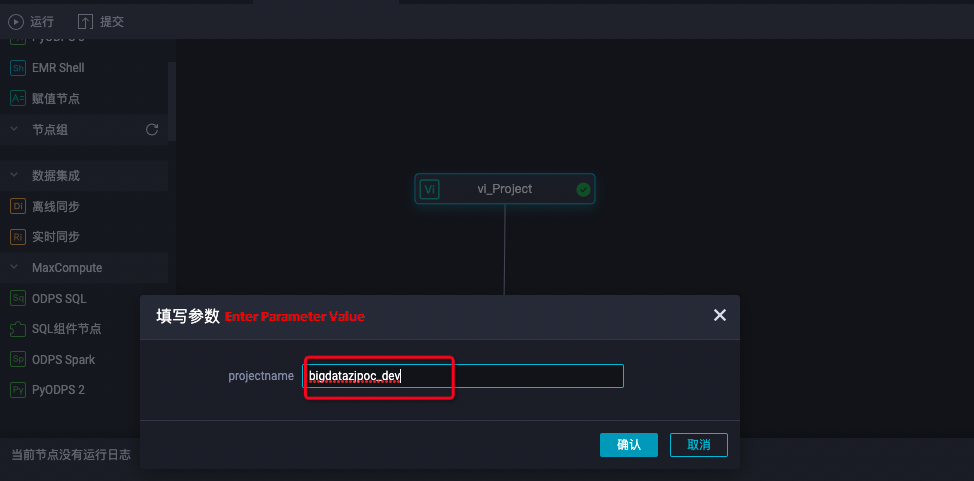

projectname=bigdatazjpoc_dev

-- Reference in code: The current project name is bigdtata_ljw_test

select * from ${projectname}.user_info_delta;The following figure shows the configuration:

Parameter name: projectname

Parameter value or expression: bigdatazjpoc

⚠️ Note: The workflow parameter name must be the same as the node name.

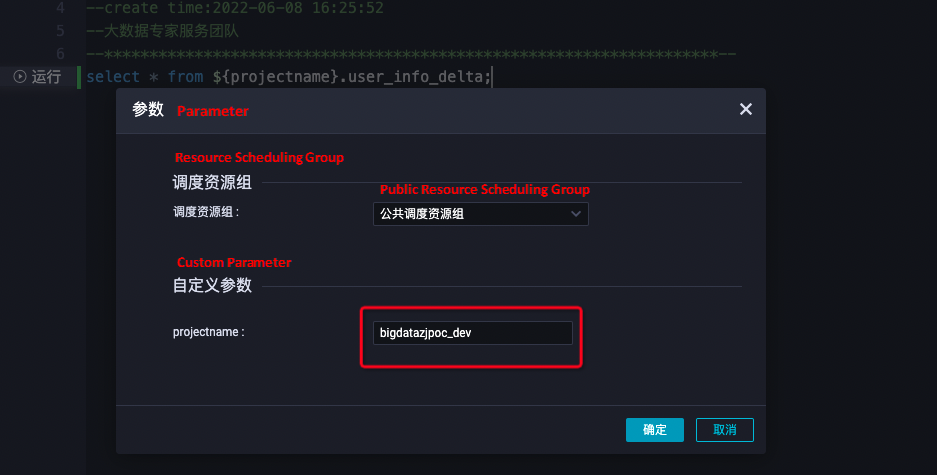

Parameters are set in nodes. In the development environment, single-node testing requires smoke testing or advanced running. During the advanced running, the parameter is bigdatazjpoc_dev and the cross-project development environment. It meets the needs of the development environment to access the development environment.

In the development environment, the entire workflow test is manually entering the parameter bigdatazjpoc_dev. The test running also accesses table data in the development environment.

Submitting to the production environment for running retroactive data, you can see the project in the production environment where the execution parameter is configured for the workflow parameter. (Ignore the run failure in the screenshot caused by permission issues.)

If the value assigned to a workflow parameter is inconsistent with the value assigned to a node parameter, the value of the workflow parameter overwrites the value of the node parameter. The workflow parameter is configured as the project space in the production environment corresponding to the cross-project space. This scenario is satisfied.

If you need to access table data across projects or with isolated connections between the development and production environments, you can use the DataWorks process parameters feature without frequently modifying the project name.

137 posts | 20 followers

FollowAlibaba Cloud MaxCompute - December 18, 2018

Alibaba Cloud MaxCompute - April 25, 2019

Alibaba Clouder - February 20, 2020

Alibaba Clouder - September 3, 2019

Alibaba Cloud MaxCompute - June 2, 2021

Alibaba Clouder - September 3, 2019

137 posts | 20 followers

Follow Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More MaxCompute

MaxCompute

Conduct large-scale data warehousing with MaxCompute

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More DataWorks

DataWorks

A secure environment for offline data development, with powerful Open APIs, to create an ecosystem for redevelopment.

Learn MoreMore Posts by Alibaba Cloud MaxCompute

Dikky Ryan Pratama June 23, 2023 at 8:47 am

awesome!