By Yang Che

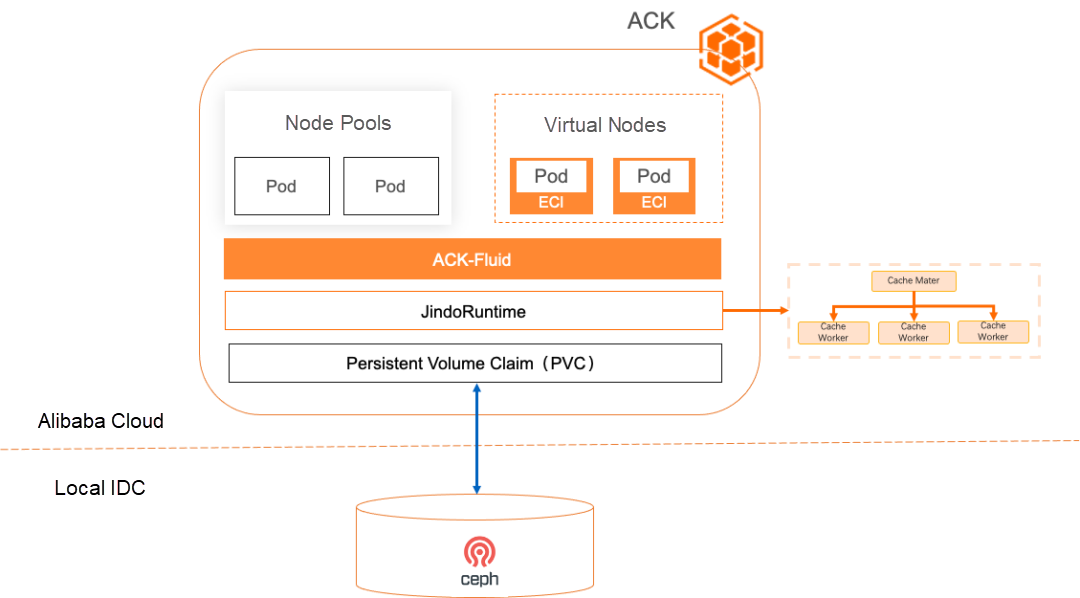

In the previous article, Build Bridges Between Elastic Computing Instances and Third-party Storage, I described how to use ACK Fluid to connect to third-party distributed storage so that Elastic Container Instance (ECI) and Elastic Compute Service (ECS) instances can access and transfer data to and from the on-premises storage system. This solves the connectivity problem in the first phase of cloud migration.

In a production environment, once accessing the on-premises storage system from the cloud becomes a regular practice, performance, cost, and stability need to be considered. For example, what is the annual cost of accessing on-premises data from the cloud? Do computing tasks in the cloud take significantly longer than the same tasks in the original IDC (Internet Data Center) environment? And how can the impact on cloud computing tasks be minimized if the leased line fails?

This article focuses on accelerating access to third-party storage in order to improve performance, lower costs, and reduce dependence on the stability of the leased line.

Even though workloads in the cloud can access the enterprise's on-premises storage through the standardized PV (Persistent Volume) protocol of Kubernetes, they still face challenges and requirements in terms of performance and cost:

• Limited data access bandwidth and high latency: Accessing on-premises storage from cloud computing results in high latency and limited bandwidth, leading to time-consuming high-performance computing and low utilization of computing resources.

• Reading redundant data leads to high network costs: During hyperparameter tuning of deep learning models and automatic parameter tuning of deep learning tasks, the same data is repeatedly accessed. However, the native scheduler of Kubernetes cannot determine the data cache status, resulting in poor application scheduling and the inability to reuse the cache. As a result, repeated data retrieval increases Internet and leased line fees.

• On-premises distributed storage becomes a bottleneck for concurrent data access and faces performance and stability challenges: When large-scale computing power concurrently accesses on-premises storage and the I/O pressure of deep learning training increases, the on-premises distributed storage easily becomes a performance bottleneck. This affects computing tasks and may even cause the entire computing cluster to fail.

• Strongly affected by network instability: If the network between the public cloud and the data center is unstable, it can result in data synchronization errors and unavailability of applications.

• Data security requirements: Metadata and data need to be protected and cannot be persistently stored on disks.

ACK Fluid provides a general acceleration capability for PV volumes based on JindoRuntime. It supports third-party storage that meets the requirements of PVCs (Persistent Volume Claims) and achieves data access acceleration through a distributed cache in a simple, fast, and secure manner. The following benefits are provided:

1. Zero adaptation cost: The third-party storage only needs to be exposed as a PVC through the CSI (Container Storage Interface) protocol. It can then be used immediately without additional development.

2. Significantly improved data access performance to enhance engineering efficiency:

3. Avoiding repeated reading of hot data and saving network costs: The distributed cache is used to persistently store hot data in the cloud, reducing data reading and network traffic.

4. Data-centric automated operation and maintenance (O&M) enables efficient data access and improves O&M efficiency: It includes automatic and scheduled data cache preheating to avoid repeated data retrieval. It also supports data cache scale-out, scale-in, and cleaning to implement automatic data cache management.

5. Using a distributed memory cache to avoid metadata and data persistence on disks for enhanced security: For users with data security concerns, ACK Fluid provides a distributed memory cache. It not only offers good performance but also alleviates worries about data persistence on disks.

Summary: ACK Fluid provides benefits such as out-of-the-box functionality, high performance, low cost, automation, and no data persistence for cloud computing to access third-party storage PVCs.

• An ACK Pro cluster is created. The cluster version is 1.18 or later. For more information, see Create an ACK Pro cluster [1].

• The cloud-native AI suite is installed, and the ack-fluid component is deployed. Important: If you have open source Fluid installed, uninstall it and then deploy the ack-fluid component.

  • If the cloud-native AI suite is not installed, enable Fluid Data Acceleration during installation. For more information, see Install the cloud-native AI suite [2].

  • If the cloud-native AI suite is installed, deploy ack-fluid on the Cloud-native AI Suite page in the Container Service console.

• A kubectl client is connected to the cluster. For more information, see Use kubectl to connect to a cluster [3].

• You have created the PVs and PVCs that are used to access the storage system. In Kubernetes, different storage systems create storage volumes in different ways. To ensure stable connections between the storage system and the Kubernetes cluster, make preparations according to the official documentation of the corresponding storage system.

Note: For hybrid cloud scenarios, we recommend configuring the data access mode as read-only for better data security and performance.

Run the following command to query the PVs and PVCs in Kubernetes:

    $ kubectl get pvc,pv

Expected output:

    NAME                             STATUS   VOLUME    CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    persistentvolumeclaim/demo-pvc   Bound    demo-pv   5Gi        ROX                           19h

    NAME                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM              STORAGECLASS   REASON   AGE
    persistentvolume/demo-pv   30Gi       ROX            Retain           Bound    default/demo-pvc                           19h

The PV demo-pv has a capacity of 30 GiB and supports the ROX (ReadOnlyMany) access mode. It is bound to the PVC named demo-pvc. Both can be used normally.

1) Create a dataset.yaml file. The following YAML file defines two Fluid resource objects to be created: Dataset and JindoRuntime.

• Dataset: describes the claim information of the PVC volume to be mounted.

• JindoRuntime: the configurations of the JindoFS distributed cache system to be started, including the number of worker component replicas and the maximum available cache capacity for each worker component.

    apiVersion: data.fluid.io/v1alpha1
    kind: Dataset
    metadata:
      name: pv-demo-dataset
    spec:
      mounts:
        - mountPoint: pvc://demo-pvc
          name: data
          path: /
      accessModes:
        - ReadOnlyMany
    ---
    apiVersion: data.fluid.io/v1alpha1
    kind: JindoRuntime
    metadata:
      name: pv-demo-dataset
    spec:
      replicas: 2
      tieredstore:
        levels:
          - mediumtype: MEM
            path: /dev/shm
            quota: 10Gi
            high: "0.9"
            low: "0.8"

The following table describes the object parameters in the configuration file.

| Parameter | Description |
| --- | --- |
| mountPoint | The information about the data source to be mounted. To mount a PVC as a data source, use the pvc://<pvc_name>/<path> format. pvc_name: the name of the PVC to be mounted. The PVC must be in the same namespace as the Dataset resource to be created. path: the subdirectory of the volume to be mounted. Make sure that the subdirectory exists. Otherwise, the volume fails to be mounted. |
| replicas | The number of workers for the JindoFS cache system. You can modify the number based on your requirements. |
| mediumtype | The type of cache. Only HDD (Mechanical Hard Disk Drive), SSD (Solid State Drive), and MEM (Memory) are supported. In AI training scenarios, we recommend that you use MEM. When MEM is used, the cache directory specified by path must be on a memory file system. For example, create a temporary mount directory and mount it as a tmpfs file system. |
| path | The directory used by JindoFS workers to cache data. For optimal data access performance, we recommend /dev/shm or another path where a memory file system is mounted. |
| quota | The maximum cache size that each worker can use. You can modify the number based on your requirements. |
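To make the mountPoint format concrete, here is a minimal Python sketch that splits a pvc://<pvc_name>/<path> string into the two fields described in the table. The helper name `parse_pvc_mount_point` and the `PvcMount` type are hypothetical and exist only for illustration; this is not how Fluid itself parses the field.

```python
from typing import NamedTuple


class PvcMount(NamedTuple):
    pvc_name: str  # PVC to mount; must be in the same namespace as the Dataset
    sub_path: str  # subdirectory inside the volume, "/" for the volume root


def parse_pvc_mount_point(mount_point: str) -> PvcMount:
    """Split a pvc://<pvc_name>/<path> string into its two fields (illustrative only)."""
    prefix = "pvc://"
    if not mount_point.startswith(prefix):
        raise ValueError(f"not a PVC mount point: {mount_point!r}")
    pvc_name, _, path = mount_point[len(prefix):].partition("/")
    if not pvc_name:
        raise ValueError(f"missing PVC name in {mount_point!r}")
    # Normalise to an absolute path without a trailing slash.
    sub_path = "/" + path.strip("/")
    return PvcMount(pvc_name, sub_path)


print(parse_pvc_mount_point("pvc://demo-pvc"))       # whole volume
print(parse_pvc_mount_point("pvc://my-pvc/mydata"))  # subdirectory /mydata
```

With the Dataset above, pvc://demo-pvc carries no path component, so the whole volume is treated as the data source.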

2) Run the following command to create the Dataset and JindoRuntime resource objects:

    $ kubectl create -f dataset.yaml

3) Run the following command to check the deployment of the Dataset:

    $ kubectl get dataset pv-demo-dataset

JindoFS pulls its images when it is started for the first time, which may take 2 to 3 minutes depending on factors such as the network environment. When the Dataset is in the Bound state, the JindoFS cache system has started in the cluster, and application pods can access the data defined in the Dataset.
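Because the first start can take a few minutes, a script may want to wait for the Bound state instead of checking by hand. The following Python sketch polls until a wanted phase is reached. It rests on assumptions: the function names are hypothetical, the 300-second timeout and 5-second interval are arbitrary, and the kubectl helper assumes the phase is exposed at `.status.phase` of the Dataset object.

```python
import subprocess
import time
from typing import Callable


def kubectl_dataset_phase(name: str, namespace: str = "default") -> str:
    """Read the Dataset phase with kubectl (assumes the field is .status.phase)."""
    result = subprocess.run(
        ["kubectl", "get", "dataset", name, "-n", namespace,
         "-o", "jsonpath={.status.phase}"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()


def wait_for_phase(get_phase: Callable[[], str], wanted: str = "Bound",
                   timeout_s: float = 300.0, interval_s: float = 5.0,
                   sleep: Callable[[float], None] = time.sleep) -> bool:
    """Poll get_phase() until it returns `wanted`; return False on timeout."""
    waited = 0.0
    while True:
        if get_phase() == wanted:
            return True
        if waited >= timeout_s:
            return False
        sleep(interval_s)
        waited += interval_s


# Example call against a real cluster (not executed here):
# wait_for_phase(lambda: kubectl_dataset_phase("pv-demo-dataset"))
```

Passing the phase reader as a callable keeps the polling loop independent of kubectl, so it can be exercised without a cluster.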

The data access efficiency of application pods may be low because the first access cannot hit the data cache. Fluid provides the DataLoad cache preheating operation to improve the efficiency of the first data access.

1) Create a dataload.yaml file. Sample code:

    apiVersion: data.fluid.io/v1alpha1
    kind: DataLoad
    metadata:
      name: dataset-warmup
    spec:
      dataset:
        name: pv-demo-dataset
        namespace: default
      loadMetadata: true
      target:
        - path: /
          replicas: 1

The following table describes the object parameters.

| Parameter | Description |
| --- | --- |
| spec.dataset.name | The name of the Dataset object to be preheated. |
| spec.dataset.namespace | The namespace to which the Dataset object belongs. The namespace must be the same as the namespace of the DataLoad object. |
| spec.loadMetadata | Specifies whether to synchronize metadata before preheating. This parameter must be set to true for JindoRuntime. |
| spec.target[*].path | The path or file to be preheated. The path must be a relative path of the mount point specified in the Dataset object. For example, if the data source mounted to the Dataset is pvc://my-pvc/mydata, setting the path to /test will preheat the /mydata/test directory under my-pvc's corresponding storage system. |
| spec.target[*].replicas | The number of worker pods created to cache the preheated path or file. |
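The relationship between the mount point and spec.target[*].path can be sketched in a few lines of Python. The function name `resolve_preheat_path` is hypothetical, and the code only mirrors the rule stated in the table (the target path is interpreted relative to the mounted subdirectory). It is not taken from Fluid's implementation.

```python
import posixpath


def resolve_preheat_path(mount_sub_path: str, target_path: str) -> str:
    """Map a DataLoad target path onto the directory inside the mounted volume.

    mount_sub_path is the <path> part of pvc://<pvc_name>/<path> ("/" if omitted).
    target_path is spec.target[*].path, interpreted relative to that mount point.
    """
    joined = posixpath.join("/", mount_sub_path.strip("/"), target_path.lstrip("/"))
    return posixpath.normpath(joined)


# Example from the table: pvc://my-pvc/mydata with path /test
print(resolve_preheat_path("/mydata", "/test"))  # prints /mydata/test
# The DataLoad above: pvc://demo-pvc with path /
print(resolve_preheat_path("/", "/"))            # prints /
```

In the sample dataload.yaml, the target path / therefore covers the entire volume behind demo-pvc.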

2) Run the following command to create a DataLoad object:

    $ kubectl create -f dataload.yaml

3) Run the following command to check the DataLoad status:

    $ kubectl get dataload dataset-warmup

Expected output:

    NAME             DATASET           PHASE      AGE   DURATION
    dataset-warmup   pv-demo-dataset   Complete   62s   12s

4) Run the following command to check the data cache status:

    $ kubectl get dataset

Expected output:

    NAME              UFS TOTAL SIZE   CACHED     CACHE CAPACITY   CACHED PERCENTAGE   PHASE   AGE
    pv-demo-dataset   10.96GiB         10.96GiB   20.00GiB         100.0%              Bound   3m13s

After the DataLoad cache preheating is performed, the cached data volume equals the size of the entire dataset. The entire dataset has been cached, and the cached percentage is 100.0%.
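The numbers in this output can be reproduced with simple arithmetic. The Python sketch below assumes that the total cache capacity is the number of workers multiplied by the per-worker quota, which is consistent with 2 replicas × 10Gi = 20.00GiB above, and that the cache can hold at most that much data. The function names are hypothetical, and the single-worker case is an invented what-if rather than a measured result.

```python
UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def quantity_to_bytes(quantity: str) -> int:
    """Convert a binary-suffixed Kubernetes quantity such as "10Gi" to bytes."""
    for suffix, factor in UNITS.items():
        if quantity.endswith(suffix):
            return int(float(quantity[:-len(suffix)]) * factor)
    return int(quantity)  # plain byte count


def cache_summary(replicas: int, quota: str, dataset_gib: float):
    """Return (cache capacity in GiB, best-case cached percentage)."""
    capacity_gib = replicas * quantity_to_bytes(quota) / 2**30
    cached_gib = min(dataset_gib, capacity_gib)  # cannot cache more than capacity
    return capacity_gib, 100.0 * (cached_gib / dataset_gib)


# Values from the article: 2 workers, 10Gi quota each, 10.96 GiB dataset.
print(cache_summary(2, "10Gi", 10.96))
# Hypothetical what-if: one worker could not hold the whole dataset.
print(cache_summary(1, "10Gi", 10.96))
```

A calculation like this is a quick way to check that replicas and quota are large enough for the dataset before running a DataLoad.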

1) Create a pod.yaml file with the following content, and set claimName to the name of the Dataset created earlier (pv-demo-dataset).

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          command:
            - "bash"
            - "-c"
            - "sleep inf"
          volumeMounts:
            - mountPath: /data
              name: data-vol
      volumes:
        - name: data-vol
          persistentVolumeClaim:
            claimName: pv-demo-dataset # The name must be the same as the Dataset.

2) Run the following command to create an application pod:

    $ kubectl create -f pod.yaml

3) Run the following command to log on to the pod to access data:

    $ kubectl exec -it nginx -- bash

Expected output:

    # In the nginx pod, there is a file named demofile in the /data directory, which is 11 GB in size.
    $ ls -lh /data
    total 11G
    -rw-r----- 1 root root 11G Jul 28 2023 demofile

    # Read demofile and discard the output by writing it to /dev/null. The read takes 11.004 seconds.
    $ time cat /data/demofile > /dev/null
    real    0m11.004s
    user    0m0.065s
    sys     0m3.089s

Because all data in the dataset has been cached in the distributed cache system, the data is read from the cache instead of from the remote storage system, which reduces network transmission and improves data access efficiency.
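To turn the timing above into a throughput figure, the wall-clock time can be parsed from the `real` line and divided into the dataset size. This is a minimal Python sketch with hypothetical function names. It uses the 10.96 GiB reported by `kubectl get dataset` as the file size and says nothing about what an uncached read would achieve, since the article does not report that measurement.

```python
import re


def real_seconds(time_output: str) -> float:
    """Extract the wall-clock time from the `real XmY.YYYs` line printed by `time`."""
    match = re.search(r"real\s+(\d+)m([\d.]+)s", time_output)
    if match is None:
        raise ValueError("no 'real' line found")
    return int(match.group(1)) * 60 + float(match.group(2))


def throughput_gib_per_s(size_gib: float, time_output: str) -> float:
    """Average read throughput in GiB/s for a file of size_gib."""
    return size_gib / real_seconds(time_output)


sample = "real 0m11.004s\nuser 0m0.065s\nsys 0m3.089s"
print(f"{throughput_gib_per_s(10.96, sample):.2f} GiB/s")
```

For the run shown above, this works out to roughly 1 GiB/s read from the cache.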

[1] Create an ACK Pro cluster: https://www.alibabacloud.com/help/en/ack/ack-managed-and-ack-dedicated/user-guide/create-an-ack-managed-cluster-2#task-skz-qwk-qfb

[2] Install the cloud-native AI suite: https://www.alibabacloud.com/help/en/ack/cloud-native-ai-suite/user-guide/deploy-the-cloud-native-ai-suite#task-2038811

[3] Use kubectl to connect to a cluster: https://www.alibabacloud.com/help/en/ack/ack-managed-and-ack-dedicated/user-guide/obtain-the-kubeconfig-file-of-a-cluster-and-use-kubectl-to-connect-to-the-cluster#task-ubf-lhg-vdb
